M.Sc. Engg. (ICT) Admission Guide: Database Management System 4

- 2. M.Sc. Engg. in ICT (BUET) Admission Test, October 2015. Database Management Systems. Textbook: Database System Concepts, fifth edition, by Abraham Silberschatz, Henry F. Korth and S. Sudarshan. Content: Database Design: entity-relationship model, relational database design. Database Management Systems: relational model, file organization, indexing, query processing and optimization, transaction management, concurrency control, recovery, database administration. Advanced Database Management Systems: object database, distributed database,

- 3. Multimedia database. Introduction: Concept of database systems. Topics: Purpose of Database Systems; View of Data; Database Languages; Relational Databases; Database Design; Object-based and semistructured databases; Data Storage and Querying; Transaction Management; Database Architecture; Database Users and Administrators; Overall Structure; History of Database Systems. Database Management System (DBMS): A DBMS contains information about a particular enterprise: a collection of interrelated data, a set of programs to access the data, and an environment that is both convenient and efficient to use. Database Applications: Banking: all transactions. Airlines: reservations, schedules. Universities: registration, grades. Sales: customers, products, purchases. Online retailers: order tracking, customized recommendations. Manufacturing: production, inventory, orders, supply chain. Human resources: employee records, salaries, tax deductions. Databases touch all aspects of our lives.

- 5. A Modern Data Architecture. Purpose of Database Systems: In the early days, database applications were built directly on top of file systems. Drawbacks of using file systems to store data: Data redundancy and inconsistency: multiple file formats, duplication of information in different files. Difficulty in accessing data: need to write a new program to carry out each new task. Data isolation: multiple files and formats. Integrity problems: integrity constraints (e.g. account balance > 0) become “buried” in

- 6. program code rather than being stated explicitly; hard to add new constraints or change existing ones. Atomicity of updates: failures may leave the database in an inconsistent state with partial updates carried out. Example: a transfer of funds from one account to another should either complete or not happen at all. Concurrent access by multiple users: concurrent access is needed for performance, but uncontrolled concurrent accesses can lead to inconsistencies. Example: two people reading a balance and updating it at the same time. Security problems: hard to provide user access to some, but not all, data. Database systems offer solutions to all the above problems. Levels of Abstraction: Physical level: describes how a record (e.g., customer) is stored. Logical level: describes data stored in the database, and the relationships among the data. type customer = record customer_id : string; customer_name : string; customer_street : string; customer_city : string; end; View level: application programs hide details of data types. Views can also hide information (such as an employee’s salary) for security purposes. View of Data

- 7. Instances and Schemas: similar to types and variables in programming languages. Schema: the logical structure of the database. Example: the database consists of information about a set of customers and accounts and the relationship between them. Analogous to the type information of a variable in a program. Physical schema: database design at the physical level. Logical schema: database design at the logical level. Instance: the actual content of the database at a particular point in time. Analogous to the value of a variable. Physical Data Independence: the ability to modify the physical schema without changing the logical schema. Applications depend on the logical schema. In general, the interfaces between the various levels and components should be well defined so that changes in some parts do not seriously influence others.

- 8. Data Models: a collection of tools for describing data, data relationships, data semantics, and data constraints. Relational model. Entity-Relationship data model (mainly for database design). Object-based data models (object-oriented and object-relational). Semistructured data model (XML). Other older models: network model, hierarchical model. Data Manipulation Language (DML): language for accessing and manipulating the data organized by the appropriate data model; a DML is also known as a query language. It covers retrieval of information, insertion of new information, deletion of information, and modification of information. Two classes of languages: Procedural: user specifies what data is required and how to get those data. Declarative (nonprocedural): user specifies what data is required without specifying how to get those data. SQL is the most widely used query language. Data Definition Language (DDL): specification notation for defining the database schema.

- 9. Example: create table account (account_number char(10), balance integer). The DDL compiler generates a set of tables stored in a data dictionary. The data dictionary contains metadata (i.e., data about data): Database schema. Data storage and definition language: specifies the storage structure and access methods used. Integrity constraints: domain constraints, referential integrity (references constraint in SQL), assertions. Authorization. Relational Model: Example of tabular data in the relational model. A Sample Relational Database. Attributes

- 10. DML for Relational Database: SQL. SQL: widely used non-procedural language. Example: Find the name of the customer with customer-id 192-83-7465: select customer.customer_name from customer where customer.customer_id = '192-83-7465' Example: Find the balances of all accounts held by the customer with customer-id 192-83-7465: select account.balance from depositor, account

- 11. where depositor.customer_id = '192-83-7465' and depositor.account_number = account.account_number Application programs generally access databases through one of: language extensions that allow embedded SQL, or an application program interface (e.g., ODBC/JDBC) that allows SQL queries to be sent to a database. Database Design: the process of designing the general structure of the database. Logical Design: deciding on the database schema. Database design requires that we find a “good” collection of relation schemas. Business decision: what attributes should we record in the database? Computer Science decision: what relation schemas should we have and how should the attributes be distributed among the various relation schemas? Physical Design: deciding on the physical layout of the database. The Entity-Relationship Model: models an enterprise as a collection of entities and relationships. Entity: a “thing” or “object” in the enterprise that is distinguishable from other objects; described by a set of attributes. Relationship: an association among several entities. Represented diagrammatically by an entity-relationship diagram. Object-Relational Data Models: Extend the relational data model by including object orientation and constructs to

- 12. deal with added data types. Allow attributes of tuples to have complex types, including non-atomic values such as nested relations. Preserve relational foundations, in particular the declarative access to data, while extending modeling power. Provide upward compatibility with existing relational languages. XML: Extensible Markup Language. Defined by the WWW Consortium (W3C). Originally intended as a document markup language, not a database language. The ability to specify new tags, and to create nested tag structures, made XML a great way to exchange data, not just documents. XML has become the basis for all new generation data interchange formats. A wide variety of tools is available for parsing, browsing and querying XML documents/data. Storage Management: The storage manager is a program module that provides the interface between the low-level data stored in the database and the application programs and queries submitted to the system. The storage manager is responsible for the following tasks: interaction with the file manager; efficient storing, retrieving and updating of data. Components: authorization and integrity manager, transaction manager, file manager, buffer manager. Issues: storage access, file organization, indexing and hashing. Query Processing: 1. Parsing and translation 2. Optimization

- 13. 3. Evaluation. Alternative ways of evaluating a given query: equivalent expressions; different algorithms for each operation. The cost difference between a good and a bad way of evaluating a query can be enormous. Need to estimate the cost of operations: this depends critically on statistical information about relations, which the database must maintain. Need to estimate statistics for intermediate results to compute the cost of complex expressions. Transaction Management: A transaction is a collection of operations that performs a single logical function in a database application. The transaction-management component ensures that the database remains in a consistent (correct) state despite system failures (e.g., power failures and operating system crashes) and transaction failures. The concurrency-control manager controls the interaction among the concurrent transactions, to ensure the consistency of the database.

- 14. Database Architecture: The architecture of a database system is greatly influenced by the underlying computer system on which the database is running: centralized, client-server, parallel (multi-processor), distributed. Database Users: Users are differentiated by the way they expect to interact with the system. Application programmers: interact with the system through DML calls. Sophisticated users: form requests in a database query language. Specialized users: write specialized database applications that do not fit into the traditional data processing framework. Naïve users: invoke one of the permanent application programs that have been written previously. Examples: people accessing a database over the web, bank tellers, clerical staff. Database Administrator: Coordinates all the activities of the database system; the database administrator has a good understanding of the enterprise’s information resources and needs. The database administrator's duties include: schema definition; storage structure and access method definition; schema and physical organization modification; granting user authority to access the database; specifying integrity constraints; acting as liaison with users; monitoring performance and responding to changes in requirements.

- 15. History of Database Systems. 1950s and early 1960s: Data processing using magnetic tapes for storage; tapes provide only sequential access. Punched cards for input. Late 1960s and 1970s: Hard disks allow direct access to data. Network and hierarchical data models in widespread use. Ted Codd defines the relational data model (he would win the ACM Turing Award for this work). IBM Research begins System R prototype. UC Berkeley begins Ingres prototype. High-performance (for the era) transaction processing. 1980s: Research relational prototypes evolve into commercial systems. SQL becomes industrial standard. Parallel and distributed database systems. Object-oriented database systems. 1990s: Large decision support and data-mining applications. Large multi-terabyte data warehouses. Emergence of Web commerce.

- 16. 2000s: XML and XQuery standards. Automated database administration.

- 17. 2014 11/16/2014

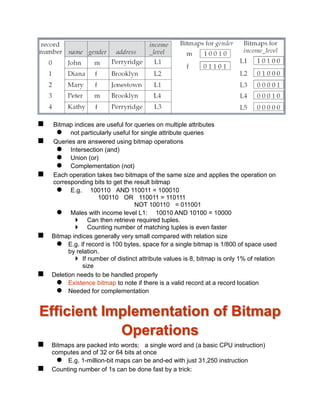

- 18. Relational Model: Structure of Relational Databases; Fundamental Relational-Algebra Operations; Additional Relational-Algebra Operations; Extended Relational-Algebra Operations; Null Values; Modification of the Database. Example of a Relation. Basic Structure: Formally, given sets D1, D2, …, Dn, a relation r is a subset of D1 × D2 × … × Dn. Thus, a relation is a set of n-tuples (a1, a2, …, an) where each ai ∈ Di. Example: If customer_name = {Jones, Smith, Curry, Lindsay, …} /* set of all customer names */ customer_street = {Main, North, Park, …} /* set of all street names */ customer_city = {Harrison, Rye, Pittsfield, …} /* set of all city names */

- 19. Then r = { (Jones, Main, Harrison), (Smith, North, Rye), (Curry, North, Rye), (Lindsay, Park, Pittsfield) } is a relation over customer_name × customer_street × customer_city. Attribute Types: Each attribute of a relation has a name. The set of allowed values for each attribute is called the domain of the attribute. Attribute values are (normally) required to be atomic; that is, indivisible. E.g. the value of an attribute can be an account number, but cannot be a set of account numbers. A domain is said to be atomic if all its members are atomic. The special value null is a member of every domain. The null value causes complications in the definition of many operations. We shall ignore the effect of null values in our main presentation and consider their effect later. Relation Schema: A1, A2, …, An are attributes. R = (A1, A2, …, An) is a relation schema. Example: Customer_schema = (customer_name, customer_street, customer_city). r(R) denotes a relation r on the relation schema R. Example: customer (Customer_schema). Relation Instance: The current values (relation instance) of a relation are specified by a table. An element t of r is a tuple, represented by a row in a table.

- 20. Relations are Unordered: Order of tuples is irrelevant (tuples may be stored in an arbitrary order). Example: account relation with unordered tuples. Database: A database consists of multiple relations.

- 21. Information about an enterprise is broken up into parts, with each relation storing one part of the information. account: stores information about accounts. depositor: stores information about which customer owns which account. customer: stores information about customers. Storing all information as a single relation such as bank(account_number, balance, customer_name, ..) results in: repetition of information, e.g., if two customers own an account (What gets repeated?); the need for null values, e.g., to represent a customer without an account. Normalization theory deals with how to design relational schemas. The customer Relation

- 23. Keys: Let K ⊆ R. K is a superkey of R if values for K are sufficient to identify a unique tuple of each possible relation r(R); by “possible r” we mean a relation r that could exist in the enterprise we are modeling. Example: {customer_name, customer_street} and {customer_name} are both superkeys of Customer, if no two customers can possibly have the same name. In real life, an attribute such as customer_id would be used instead of customer_name to uniquely identify customers, but we omit it to keep our examples small, and instead assume customer names are unique. K is a candidate key if K is minimal. Example: {customer_name} is a candidate key for Customer, since it is a superkey and no subset of it is a superkey. Primary key: a candidate key chosen as the principal means of identifying tuples within a relation. Should choose an attribute whose value never, or very rarely, changes. E.g. an email address is unique, but may change.
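The superkey and candidate-key definitions can be checked mechanically against a sample instance. The sketch below is only an illustration: the function names `is_superkey` and `is_candidate_key` are hypothetical, and a single instance can only refute a key, never prove it, because the definition quantifies over every possible relation r(R). The customer tuples are the ones used earlier in these slides.

```python
from itertools import combinations

ATTRS = ("customer_name", "customer_street", "customer_city")
customer = {
    ("Jones", "Main", "Harrison"),
    ("Smith", "North", "Rye"),
    ("Curry", "North", "Rye"),
    ("Lindsay", "Park", "Pittsfield"),
}

def is_superkey(rows, attrs, key):
    """True if no two tuples in this instance agree on every attribute in key."""
    positions = [attrs.index(a) for a in key]
    seen = set()
    for row in rows:
        values = tuple(row[i] for i in positions)
        if values in seen:
            return False
        seen.add(values)
    return True

def is_candidate_key(rows, attrs, key):
    """True if key is a superkey and no proper subset of it is one (minimality)."""
    if not is_superkey(rows, attrs, key):
        return False
    return not any(
        is_superkey(rows, attrs, subset)
        for size in range(len(key))
        for subset in combinations(key, size)
    )
```

On this instance `{customer_name}` passes both checks, while `{customer_name, customer_street}` is a superkey but not minimal, and `{customer_street}` is not a superkey because two customers live on North.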

- 24. Foreign Keys: A relation schema may have an attribute that corresponds to the primary key of another relation. The attribute is called a foreign key. E.g. customer_name and account_number attributes of depositor are foreign keys to customer and account respectively. Only values occurring in the primary key attribute of the referenced relation may occur in the foreign key attribute of the referencing relation. Schema diagram. Query Languages: Language in which a user requests information from the database. Categories of languages: procedural; non-procedural, or declarative. “Pure” languages: relational algebra, tuple relational calculus, domain relational calculus. Pure languages form the underlying basis of query languages that people use. Relational Algebra: Procedural language. Six basic operators: select: σ

- 25. project: π; union: ∪; set difference: –; Cartesian product: ×; rename: ρ. The operators take one or two relations as inputs and produce a new relation as a result. Select Operation – Example: Relation r(A, B, C, D) = {(α, α, 1, 7), (α, β, 5, 7), (β, β, 12, 3), (β, β, 23, 10)}. σ_{A=B ∧ D>5}(r) = {(α, α, 1, 7), (β, β, 23, 10)}.

- 26. Select Operation: Notation: σ_p(r), where p is called the selection predicate. Defined as: σ_p(r) = {t | t ∈ r and p(t)}, where p is a formula in propositional calculus consisting of terms connected by ∧ (and), ∨ (or), ¬ (not). Each term is one of: <attribute> op <attribute> or <attribute> op <constant>, where op is one of =, ≠, >, ≥, <, ≤. Example of selection: σ_{branch_name = “Perryridge”}(account). Project Operation – Example
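As a rough illustration of the selection definition σ_p(r) = {t | t ∈ r and p(t)}, a relation can be modelled as a Python set of tuples and the predicate as a function. The helper name `select` and the sample account rows are assumptions made for this sketch, not part of the slides.

```python
ACCOUNT_SCHEMA = ("account_number", "branch_name", "balance")

# Illustrative sample rows (assumed, not taken from the slides).
account = {
    ("A-101", "Downtown", 500),
    ("A-102", "Perryridge", 400),
    ("A-201", "Brighton", 900),
    ("A-215", "Mianus", 700),
}

def select(relation, schema, predicate):
    """sigma_p(r): keep the tuples for which predicate(t) holds.

    The predicate receives each tuple as a dict keyed by attribute name.
    """
    return {t for t in relation if predicate(dict(zip(schema, t)))}

# sigma_{branch_name = "Perryridge"}(account)
perryridge = select(account, ACCOUNT_SCHEMA,
                    lambda t: t["branch_name"] == "Perryridge")

# A compound predicate: branch_name = "Brighton" OR balance < 450
mixed = select(account, ACCOUNT_SCHEMA,
               lambda t: t["branch_name"] == "Brighton" or t["balance"] < 450)
```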

- 27. Project Operation: Notation: π_{A1, A2, …, Ak}(r), where A1, A2, …, Ak are attribute names and r is a relation name. The result is defined as the relation of k columns obtained by erasing the columns that are not listed. Duplicate rows are removed from the result, since relations are sets. Example: To eliminate the branch_name attribute of account: π_{account_number, balance}(account). Union Operation – Example
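The projection definition, including the removal of duplicate rows, can be sketched with a set comprehension. The helper name `project` and the sample rows are assumptions for illustration only.

```python
ACCOUNT_SCHEMA = ("account_number", "branch_name", "balance")

# Illustrative sample rows (assumed); two accounts share the Brighton branch.
account = {
    ("A-101", "Downtown", 500),
    ("A-201", "Brighton", 900),
    ("A-217", "Brighton", 750),
}

def project(relation, schema, attrs):
    """pi_{attrs}(r): keep only the listed columns.

    Building a set removes duplicate rows, as the definition requires.
    """
    positions = [schema.index(a) for a in attrs]
    return {tuple(t[i] for i in positions) for t in relation}

# pi_{account_number, balance}(account): drops the branch_name column
no_branch = project(account, ACCOUNT_SCHEMA, ("account_number", "balance"))

# pi_{branch_name}(account): the two Brighton rows collapse into one
branches = project(account, ACCOUNT_SCHEMA, ("branch_name",))
```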

- 28. Union Operation: Notation: r ∪ s. Defined as: r ∪ s = {t | t ∈ r or t ∈ s}. For r ∪ s to be valid: 1. r, s must have the same arity (same number of attributes). 2. The attribute domains must be compatible (example: the 2nd column of r deals with the same type of values as does the 2nd column of s). Example: to find all customers with either an account or a loan: π_{customer_name}(depositor) ∪ π_{customer_name}(borrower). Set Difference Operation – Example

- 29. Set Difference Operation: Notation: r – s. Defined as: r – s = {t | t ∈ r and t ∉ s}. Set differences must be taken between compatible relations: r and s must have the same arity, and the attribute domains of r and s must be compatible. Cartesian-Product Operation – Example

- 30. Cartesian-Product Operation: Notation: r × s. Defined as: r × s = {t q | t ∈ r and q ∈ s}. Assume that attributes of r(R) and s(S) are disjoint (that is, R ∩ S = ∅). If attributes of r(R) and s(S) are not disjoint, then renaming must be used. Composition of Operations: Can build expressions using multiple operations. Example: σ_{A=C}(r × s)
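Union, set difference, Cartesian product and the composed expression σ_{A=C}(r × s) map directly onto Python set operations. The sketch below assumes relations are sets of tuples; all sample values and the helper name `cartesian_product` are made up for illustration.

```python
# r(A, B) and s(C, D, E): illustrative values (assumed).
r = {("alpha", 1), ("beta", 2)}
s = {("alpha", 10, "a"), ("beta", 10, "a"), ("beta", 20, "b"), ("gamma", 10, "b")}

def cartesian_product(r, s):
    """r x s: concatenate every tuple of r with every tuple of s."""
    return {t + q for t in r for q in s}

product = cartesian_product(r, s)

# sigma_{A=C}(r x s): in the product, A is position 0 and C is position 2.
composed = {t for t in product if t[0] == t[2]}

# Union and difference need compatible relations (same arity, same domains).
depositor_names = {("Hayes",), ("Johnson",), ("Jones",)}
borrower_names = {("Jones",), ("Smith",)}
either = depositor_names | borrower_names          # r ∪ s
only_depositors = depositor_names - borrower_names  # r – s
```

The product has |r| · |s| = 8 tuples, of which the selection keeps the three whose A and C values agree.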

- 31. σ_{A=C}(r × s). Rename Operation: Allows us to name, and therefore to refer to, the results of relational-algebra expressions. Allows us to refer to a relation by more than one name. Example: ρ_x(E) returns the expression E under the name x. If a relational-algebra expression E has arity n, then ρ_{x(A1, A2, …, An)}(E) returns the result of expression E under the name x, and with the attributes renamed to A1, A2, …, An. Banking Example: branch (branch_name, branch_city, assets); customer (customer_name, customer_street, customer_city); account (account_number, branch_name, balance); loan (loan_number, branch_name, amount); depositor (customer_name, account_number); borrower (customer_name, loan_number). Example Queries: Find all loans of over $1200: σ_{amount > 1200}(loan)

- 32. Find the loan number for each loan of an amount greater than $1200: π_{loan_number}(σ_{amount > 1200}(loan)). Find the names of all customers who have a loan, an account, or both, from the bank: π_{customer_name}(borrower) ∪ π_{customer_name}(depositor)

- 33. Find the largest account balance. Strategy: Find those balances that are not the largest.

- 34. Rename the account relation as d so that we can compare each account balance with all others. Use set difference to find those account balances that were not found in the earlier step. The query is: π_{balance}(account) – π_{account.balance}(σ_{account.balance < d.balance}(account × ρ_d(account))). Formal Definition: A basic expression in the relational algebra consists of either one of the following: a relation in the database, or a constant relation. Let E1 and E2 be relational-algebra expressions; the following are all relational-algebra expressions: E1 ∪ E2; E1 – E2; E1 × E2; σ_p(E1), where p is a predicate on attributes in E1; π_s(E1), where s is a list consisting of some of the attributes in E1; ρ_x(E1), where x is the new name for the result of E1. Additional Operations: We define additional operations that do not add any power to the relational algebra, but that simplify common queries. Set intersection, Natural join, Division,
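The "largest balance" strategy (find every balance that is smaller than some other balance, then subtract those from the set of all balances) can be traced step by step. This is a minimal sketch with assumed sample data; the inner loop over a second copy of `account` plays the role of the renamed relation d.

```python
# account(account_number, balance): illustrative rows (assumed).
account = {("A-101", 500), ("A-102", 400), ("A-201", 900)}

# pi_{balance}(account)
all_balances = {(balance,) for _, balance in account}

# pi_{account.balance}(sigma_{account.balance < d.balance}(account x rho_d(account)))
not_largest = {
    (a_balance,)
    for _, a_balance in account   # tuple from account
    for _, d_balance in account   # tuple from the renamed copy d
    if a_balance < d_balance
}

# Set difference leaves only the balance that is smaller than no other.
largest = all_balances - not_largest
```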

- 35. Assignment. Set-Intersection Operation: Notation: r ∩ s. Defined as: r ∩ s = { t | t ∈ r and t ∈ s }. Assume: r, s have the same arity; attributes of r and s are compatible. Note: r ∩ s = r – (r – s). Set-Intersection Operation – Example

- 36. Natural-Join Operation: Let r and s be relations on schemas R and S respectively. Then r ⋈ s is a relation on schema R ∪ S obtained as follows: Consider each pair of tuples tr from r and ts from s. If tr and ts have the same value on each of the attributes in R ∩ S, add a tuple t to the result, where t has the same value as tr on r and t has the same value as ts on s. Example: R = (A, B, C, D), S = (E, B, D). Result schema = (A, B, C, D, E). r ⋈ s is defined as: π_{r.A, r.B, r.C, r.D, s.E}(σ_{r.B = s.B ∧ r.D = s.D}(r × s)). Natural Join Operation – Example
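The natural-join definition can be sketched as a nested loop that keeps pairs agreeing on the shared attributes R ∩ S and appends the remaining attributes of s. The function name `natural_join` and the sample tuples are assumptions; the schemas match the slide's example R = (A, B, C, D), S = (E, B, D).

```python
def natural_join(r, r_schema, s, s_schema):
    """r ⋈ s: returns (result_schema, result_tuples)."""
    common = [a for a in r_schema if a in s_schema]     # R ∩ S
    extra = [a for a in s_schema if a not in r_schema]  # S – R
    result = set()
    for tr in r:
        r_row = dict(zip(r_schema, tr))
        for ts in s:
            s_row = dict(zip(s_schema, ts))
            # Keep the pair only if it agrees on every shared attribute.
            if all(r_row[a] == s_row[a] for a in common):
                result.add(tr + tuple(s_row[a] for a in extra))
    return tuple(r_schema) + tuple(extra), result

R = ("A", "B", "C", "D")
S = ("E", "B", "D")
r = {(1, "x", 10, "p"), (2, "y", 20, "q")}                 # illustrative values
s = {(100, "x", "p"), (200, "x", "q"), (300, "y", "q")}    # illustrative values

schema, joined = natural_join(r, R, s, S)
```

The tuple (200, "x", "q") of s matches nothing in r, because no tuple of r has B = "x" and D = "q" at the same time, so it does not appear in the result.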

- 37. Division Operation: Notation: r ÷ s. Suited to queries that include the phrase “for all”. Let r and s be relations on schemas R and S respectively, where R = (A1, …, Am, B1, …, Bn) and S = (B1, …, Bn). The result of r ÷ s is a relation on schema R – S = (A1, …, Am). r ÷ s = { t | t ∈ π_{R-S}(r) ∧ ∀u ∈ s (tu ∈ r) }, where tu means the concatenation of tuples t and u to produce a single tuple. Division Operation – Example

- 39. Division Operation (Cont.) Property: Let q = r ÷ s. Then q is the largest relation satisfying q × s ⊆ r. Definition in terms of the basic algebra operations: Let r(R) and s(S) be relations, and let S ⊆ R. r ÷ s = π_{R-S}(r) – π_{R-S}((π_{R-S}(r) × s) – π_{R-S,S}(r)). To see why: π_{R-S,S}(r) simply reorders attributes of r; π_{R-S}((π_{R-S}(r) × s) – π_{R-S,S}(r)) gives those tuples t in

- 40. π_{R-S}(r) such that for some tuple u ∈ s, tu ∉ r. Assignment Operation: The assignment operation (←) provides a convenient way to express complex queries. Write the query as a sequential program consisting of a series of assignments followed by an expression whose value is displayed as the result of the query. Assignment must always be made to a temporary relation variable. Example: Write r ÷ s as temp1 ← π_{R-S}(r); temp2 ← π_{R-S}((temp1 × s) – π_{R-S,S}(r)); result = temp1 – temp2. May use the variable in subsequent expressions. Bank Example Queries

- 41. Find all customers who have an account at all branches located in Brooklyn city: π_{customer_name, branch_name}(depositor ⋈ account) ÷ π_{branch_name}(σ_{branch_city = “Brooklyn”}(branch))
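The division query can be traced with the temp1/temp2 assignment program given for r ÷ s. In the sketch below, r stands for the already-computed dividend (customer_name, branch_name) and s for the set of Brooklyn branch names; the function name `divide` and all sample rows are assumptions for illustration.

```python
# Assumed result of pi_{customer_name, branch_name}(depositor ⋈ account).
r = {
    ("Hayes", "Brighton"),
    ("Hayes", "Downtown"),
    ("Johnson", "Brighton"),
    ("Johnson", "Downtown"),
    ("Jones", "Brighton"),
}

# Assumed result of pi_{branch_name}(sigma_{branch_city = "Brooklyn"}(branch)).
s = {("Brighton",), ("Downtown",)}

def divide(r, s):
    """r ÷ s for r(A, B) and s(B), following the temp1/temp2 program."""
    temp1 = {(a,) for a, _ in r}                 # pi_{R-S}(r)
    temp2 = {                                    # pi_{R-S}((temp1 x s) – r)
        (a,) for (a,) in temp1 for (b,) in s if (a, b) not in r
    }
    return temp1 - temp2

all_brooklyn_customers = divide(r, s)
```

Jones is dropped because the pair (Jones, Downtown) is in temp1 × s but not in r, so Jones lands in temp2.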

- 42. Extended Relational-Algebra Operations: Generalized Projection, Aggregate Functions, Outer Join. Generalized Projection: Extends the projection operation by allowing arithmetic functions to be used in the projection list: π_{F1, F2, …, Fn}(E). E is any relational-algebra expression. Each of F1, F2, …, Fn is an arithmetic expression involving constants and attributes in the schema of E. Given relation credit_info(customer_name, limit, credit_balance), find how much more each person can spend: π_{customer_name, limit – credit_balance}(credit_info). Aggregate Functions and Operations: An aggregation function takes a collection of values and returns a single value as a result. avg: average value; min: minimum value; max: maximum value; sum: sum of values; count: number of values. Aggregate operation in relational algebra: G1, G2, …, Gn g F1(A1), F2(A2), …, Fn(An) (E). E is any relational-algebra expression. G1, G2, …, Gn is a list of attributes on which to group (can be empty). Each Fi is an aggregate function.

- 43. Each Ai is an attribute name. Aggregate Operation – Example
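A grouped aggregate such as branch_name g sum(balance) (account) can be sketched by accumulating one running total per group. The helper name `group_sum` and the account rows are assumptions; a list is used instead of a set because aggregation works on multisets, so two accounts with the same balance must both be counted.

```python
from collections import defaultdict

# account(account_number, branch_name, balance): illustrative rows (assumed).
account = [
    ("A-102", "Perryridge", 400),
    ("A-201", "Perryridge", 900),
    ("A-217", "Brighton", 750),
    ("A-215", "Brighton", 750),
    ("A-222", "Redwood", 700),
]

def group_sum(rows, group_index, value_index):
    """Group rows on one attribute and sum another attribute per group."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[group_index]] += row[value_index]
    return dict(totals)

# branch_name g sum(balance) (account)
sum_balance = group_sum(account, group_index=1, value_index=2)
```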

- 44. Aggregate Functions (Cont.): The result of aggregation does not have a name. Can use the rename operation to give it a name. For convenience, we permit renaming as part of the aggregate operation: branch_name g sum(balance) as sum_balance (account). Outer Join: An extension of the join operation that avoids loss of information. Computes the join and then adds tuples from one relation that do not match tuples in the other relation to the result of the join.

- 45. Uses null values: null signifies that the value is unknown or does not exist. All comparisons involving null are (roughly speaking) false by definition. We shall study the precise meaning of comparisons with nulls later. Outer Join – Example

- 47. Null Values: It is possible for tuples to have a null value, denoted by null, for some of their attributes. null signifies an unknown value or that a value does not exist. The result of any arithmetic expression involving null is null. Aggregate functions simply ignore null values (as in SQL). For duplicate elimination and grouping, null is treated like any other value, and two nulls are assumed to be the same (as in SQL). Comparisons with null values return the special truth value: unknown. If false was used instead of unknown, then not (A < 5) would not be equivalent to A >= 5. Three-valued logic using the truth value unknown: OR: (unknown or true) = true, (unknown or false) = unknown, (unknown or unknown) = unknown. AND: (true and unknown) = unknown, (false and unknown) = false, (unknown and unknown) = unknown. NOT: (not unknown) = unknown. In SQL “P is unknown” evaluates to true if predicate P evaluates to unknown. The result of a select predicate is treated as false if it evaluates to unknown. Modification of the Database: The content of the database may be modified using the following operations: Deletion, Insertion, Updating. All these operations are expressed using the assignment operator. Deletion: A delete request is expressed similarly to a query, except instead of displaying tuples to the user, the selected tuples are removed from the database. Can delete only whole tuples; cannot delete values on only particular attributes. A deletion is expressed in relational algebra by: r ← r – E, where r is a relation and E is a relational algebra query.
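The three-valued truth tables listed above can be written out as small functions. This is only a sketch: Python's `None` stands in for both null and the truth value unknown, and the names `and3`, `or3`, `not3`, `less_than` and `select3` are hypothetical.

```python
def and3(a, b):
    """Three-valued AND: false dominates, then unknown."""
    if a is False or b is False:
        return False
    if a is None or b is None:
        return None
    return True

def or3(a, b):
    """Three-valued OR: true dominates, then unknown."""
    if a is True or b is True:
        return True
    if a is None or b is None:
        return None
    return False

def not3(a):
    """Three-valued NOT: unknown stays unknown."""
    return None if a is None else (not a)

def less_than(x, y):
    """A comparison involving null yields unknown."""
    return None if x is None or y is None else x < y

def select3(values, predicate):
    """Selection keeps a value only when the predicate is exactly true."""
    return [v for v in values if predicate(v) is True]

A = [3, None, 7]
below_5 = select3(A, lambda v: less_than(v, 5))
not_below_5 = select3(A, lambda v: not3(less_than(v, 5)))
```

The null value appears in neither `below_5` nor `not_below_5`, which is the behaviour the slide describes: an unknown predicate result is treated as false by the selection.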

- 48. Deletion Examples. Insertion: To insert data into a relation, we either specify a tuple to be inserted, or write a query whose result is a set of tuples to be inserted. In relational algebra, an insertion is expressed by: r ← r ∪ E, where r is a relation and E is a relational algebra expression. The insertion of a single tuple is expressed by letting E be a constant relation containing one tuple. Insertion Examples

- 49. Updating: A mechanism to change a value in a tuple without changing all values in the tuple. Use the generalized projection operator to do this task: r ← π_{F1, F2, …, Fl}(r). Each Fi is either the i-th attribute of r, if the i-th attribute is not updated, or, if the attribute is to be updated, Fi is an expression, involving only constants and the attributes of r, which gives the new value for the attribute. Update Examples

- 52. SQL: Data Definition, Basic Query Structure, Set Operations, Aggregate Functions, Null Values, Nested Subqueries, Complex Queries, Views, Modification of the Database, Joined Relations. History: IBM Sequel language developed as part of the System R project at the IBM San Jose Research Laboratory. Renamed Structured Query Language (SQL). ANSI and ISO standard SQL: SQL-86, SQL-89, SQL-92, SQL:1999 (language name became Y2K compliant!), SQL:2003. Commercial systems offer most, if not all, SQL-92 features, plus varying feature sets from later standards and special proprietary features. Not all examples here may work on your particular system. Data Definition Language: allows the specification of the schema for each relation; the domain of values associated with each attribute; integrity constraints; the set of indices to be maintained for each relation; security and authorization information for each relation; the physical storage structure of each relation on disk. Basic Domain Types in SQL: char(n). Fixed length character string, with user-specified length n.

- 53. varchar(n). Variable length character strings, with user-specified maximum length n. int. Integer (a finite subset of the integers that is machine-dependent). smallint. Small integer (a machine-dependent subset of the integer domain type). numeric(p,d). Fixed point number, with user-specified precision of p digits, with d digits to the right of the decimal point. real, double precision. Floating point and double-precision floating point numbers, with machine-dependent precision. float(n). Floating point number, with user-specified precision of at least n digits. Create Table Construct: An SQL relation is defined using the create table command: create table r (A1 D1, A2 D2, ..., An Dn, (integrity-constraint1), ..., (integrity-constraintk)). r is the name of the relation; each Ai is an attribute name in the schema of relation r; Di is the data type of values in the domain of attribute Ai. Example: create table branch (branch_name char(15) not null, branch_city char(30), assets integer). Integrity Constraints in Create Table: not null; primary key (A1, ..., An)

- 54. A primary key declaration on an attribute automatically ensures not null in SQL-92 onwards; it needs to be explicitly stated in SQL-89. Drop and Alter Table Constructs: The drop table command deletes all information about the dropped relation from the database. The alter table command is used to add attributes to an existing relation: alter table r add A D, where A is the name of the attribute to be added to relation r and D is the domain of A. All tuples in the relation are assigned null as the value for the new attribute. The alter table command can also be used to drop attributes of a relation: alter table r drop A, where A is the name of an attribute of relation r. Dropping of attributes is not supported by many databases. Basic Query Structure: SQL is based on set and relational operations with certain modifications and enhancements. A typical SQL query has the form: select A1, A2, ..., An from r1, r2, ..., rm where P. Ai represents an attribute

- 55. ri represents a relation. P is a predicate. This query is equivalent to the relational algebra expression π_{A1, A2, …, An}(σ_P(r1 × r2 × … × rm)). The result of an SQL query is a relation. The select Clause: The select clause lists the attributes desired in the result of a query; it corresponds to the projection operation of the relational algebra. Example: find the names of all branches in the loan relation: select branch_name from loan. In the relational algebra, the query would be: π_{branch_name}(loan). NOTE: SQL names are case insensitive (i.e., you may use upper- or lower-case letters). E.g. Branch_Name ≡ BRANCH_NAME ≡ branch_name. Some people use upper case wherever we use bold font. SQL allows duplicates in relations as well as in query results. To force the elimination of duplicates, insert the keyword distinct after select. Find the names of all branches in the loan relation, and remove duplicates: select distinct branch_name from loan. The keyword all specifies that duplicates not be removed: select all branch_name from loan. An asterisk in the select clause denotes “all attributes”: select * from loan. The select clause can contain arithmetic expressions involving the operations +, –, *, and /, and operating on constants or attributes of tuples. The query: select loan_number, branch_name, amount * 100 from loan would return a relation that is the same as the loan relation, except that the value of the attribute amount is multiplied by 100.
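The select-clause variants above (all, distinct, and arithmetic in the select list) can be tried against an in-memory SQLite database using Python's standard `sqlite3` module. The sample loan rows are assumptions, and an `order by` clause is added to each query only to make the output order deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table loan (loan_number char(10), branch_name char(15), amount integer)"
)
# Illustrative rows (assumed, not taken from the slides).
conn.executemany(
    "insert into loan values (?, ?, ?)",
    [("L-11", "Round Hill", 900),
     ("L-15", "Perryridge", 1500),
     ("L-16", "Perryridge", 1300)],
)

# "all" keeps duplicates; it is also the default behaviour.
all_branches = [row[0] for row in conn.execute(
    "select all branch_name from loan order by branch_name")]

# "distinct" removes duplicates.
distinct_branches = [row[0] for row in conn.execute(
    "select distinct branch_name from loan order by branch_name")]

# Arithmetic expression in the select list.
scaled = conn.execute(
    "select loan_number, amount * 100 from loan order by loan_number").fetchall()

conn.close()
```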

- 56. The where Clause: The where clause specifies conditions that the result must satisfy. Corresponds to the selection predicate of the relational algebra. To find all loan numbers for loans made at the Perryridge branch with loan amounts greater than $1200: select loan_number from loan where branch_name = 'Perryridge' and amount > 1200. Comparison results can be combined using the logical connectives and, or, and not. Comparisons can be applied to results of arithmetic expressions. SQL includes a between comparison operator. Example: Find the loan number of those loans with loan amounts between $90,000 and $100,000 (that is, ≥ $90,000 and ≤ $100,000): select loan_number from loan where amount between 90000 and 100000. The from Clause: The from clause lists the relations involved in the query. Corresponds to the Cartesian product operation of the relational algebra. Find the Cartesian product borrower × loan: select * from borrower, loan. Find the name, loan number and loan amount of all customers having a loan at the Perryridge branch: select customer_name, borrower.loan_number, amount from borrower, loan where borrower.loan_number = loan.loan_number and branch_name = 'Perryridge'. The Rename Operation

- 57. SQL allows renaming relations and attributes using the as clause: old-name as new-name. Find the name, loan number and loan amount of all customers; rename the column name loan_number as loan_id: select customer_name, borrower.loan_number as loan_id, amount from borrower, loan where borrower.loan_number = loan.loan_number. Tuple Variables: Tuple variables are defined in the from clause via the use of the as clause. Find the customer names and their loan numbers for all customers having a loan at some branch: select customer_name, T.loan_number, S.amount from borrower as T, loan as S where T.loan_number = S.loan_number. Find the names of all branches that have greater assets than some branch located in Brooklyn: select distinct T.branch_name from branch as T, branch as S where T.assets > S.assets and S.branch_city = 'Brooklyn'. The keyword as is optional and may be omitted: borrower as T ≡ borrower T. String Operations: SQL includes a string-matching operator for comparisons on character strings. The operator “like” uses patterns that are described using two special characters: percent (%). The % character matches any substring. underscore (_). The _ character matches any single character. Find the names of all customers whose street includes the substring “Main”: select customer_name

- 58. from customer where customer_street like '%Main%' Match the name “Main%” like 'Main\%' escape '\' SQL supports a variety of string operations such as concatenation (using “||”) converting from upper to lower case (and vice versa) finding string length, extracting substrings, etc. Ordering the Display of Tuples List in alphabetic order the names of all customers having a loan in Perryridge branch select distinct customer_name from borrower, loan where borrower.loan_number = loan.loan_number and branch_name = 'Perryridge' order by customer_name We may specify desc for descending order or asc for ascending order, for each attribute; ascending order is the default. Example: order by customer_name desc Duplicates In relations with duplicates, SQL can define how many copies of tuples appear in the result. Multiset versions of some of the relational algebra operators – given multiset relations r1 and r2: 1. σ_θ(r1): If there are c1 copies of tuple t1 in r1, and t1 satisfies selection σ_θ, then there are c1 copies of t1 in σ_θ(r1). 2. Π_A(r1): For each copy of tuple t1 in r1, there is a copy of tuple Π_A(t1) in Π_A(r1), where Π_A(t1) denotes the projection of the single tuple t1. 3. r1 × r2: If there are c1 copies of tuple t1 in r1 and c2 copies of tuple t2 in r2, there are c1 × c2 copies of the tuple t1.t2 in r1 × r2 Example: Suppose multiset relations r1 (A, B) and r2 (C) are as follows: r1 = {(1, a), (2, a)} r2 = {(2), (3), (3)} Then Π_B(r1) would be {(a), (a)}, while Π_B(r1) × r2 would be {(a,2), (a,2), (a,3), (a,3), (a,3), (a,3)}
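
The multiset rules for selection, projection and Cartesian product can be checked with a small sketch. It is not part of the original slides: it models multiset relations as Python lists, and the helper names `select`, `project` and `product` are made up for illustration. It reproduces the r1, r2 example.

```python
from collections import Counter
from itertools import product as cartesian

# Multiset relations from the example: lists keep duplicate tuples.
r1 = [(1, "a"), (2, "a")]
r2 = [(2,), (3,), (3,)]

def select(pred, r):
    """Multiset selection: every copy that satisfies pred is kept."""
    return [t for t in r if pred(t)]

def project(idx, r):
    """Multiset projection: one output tuple per input copy, no deduplication."""
    return [tuple(t[i] for i in idx) for t in r]

def product(r, s):
    """Multiset Cartesian product: c1 * c2 copies of each concatenation t1.t2."""
    return [t1 + t2 for t1, t2 in cartesian(r, s)]

proj_b = project([1], r1)          # projection on B keeps both copies of (a)
result = product(proj_b, r2)       # 2 * 3 = 6 tuples
counts = Counter(result)           # how many copies of each tuple

print(proj_b)
print(sorted(result))
```

Counting the copies in `result` gives two copies of (a, 2) and four copies of (a, 3), which matches the example.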

- 59. SQL duplicate semantics: select A1, A2, ..., An from r1, r2, ..., rm where P is equivalent to the multiset version of the expression: Π_{A1, A2, ..., An}(σ_P(r1 × r2 × ... × rm)) Set Operations The set operations union, intersect, and except operate on relations and correspond to the relational algebra operations ∪, ∩ and −. Each of the above operations automatically eliminates duplicates; to retain all duplicates use the corresponding multiset versions union all, intersect all and except all. Suppose a tuple occurs m times in r and n times in s, then, it occurs: m + n times in r union all s min(m,n) times in r intersect all s max(0, m – n) times in r except all s
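
The three multiset counts can be illustrated with Python's `collections.Counter`, whose `+`, `&` and `-` operators happen to follow the same m + n, min(m, n) and max(0, m - n) rules. This is only a sketch to make the arithmetic concrete; the relations `r` and `s` below are hypothetical.

```python
from collections import Counter

# Hypothetical multisets: tuple "x" occurs m = 3 times in r and n = 1 time in s.
r = Counter({"x": 3, "y": 1})
s = Counter({"x": 1, "z": 2})

union_all = r + s        # m + n copies of each tuple
intersect_all = r & s    # min(m, n) copies
except_all = r - s       # max(0, m - n) copies

# Plain union / intersect / except eliminate duplicates,
# so ordinary sets are enough to model them.
union = set(r) | set(s)
intersect = set(r) & set(s)
except_ = set(r) - set(s)

print(union_all, intersect_all, except_all)
print(union, intersect, except_)
```

Note the difference for "x": `except` drops it entirely because it appears in s, while `except all` keeps 3 - 1 = 2 copies.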

- 60. Aggregate Functions These functions operate on the multiset of values of a column of a relation, and return a value avg: average value min: minimum value max: maximum value sum: sum of values count: number of values
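
A minimal sketch of the five aggregate functions, run against an in-memory SQLite database through Python's `sqlite3` module. The account rows are made up for illustration and are not taken from the slides.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table account (account_number text, branch_name text, balance integer)"
)
# Hypothetical sample data.
conn.executemany(
    "insert into account values (?, ?, ?)",
    [
        ("A-101", "Downtown", 500),
        ("A-215", "Mianus", 700),
        ("A-102", "Perryridge", 400),
        ("A-201", "Perryridge", 900),
    ],
)

# Each aggregate collapses the multiset of balance values into one value.
row = conn.execute(
    "select avg(balance), min(balance), max(balance), sum(balance), count(balance) "
    "from account"
).fetchone()
print(row)
```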

- 61. Aggregate Functions – Group By Find the number of depositors for each branch. select branch_name, count (distinct customer_name) from depositor, account where depositor.account_number = account.account_number group by branch_name Note: Attributes in select clause outside of aggregate functions must appear in group by list Aggregate Functions – Having Clause Find the names of all branches where the average account balance is more than

- 62. $1,200. select branch_name, avg (balance) from account group by branch_name having avg (balance) > 1200 Note: predicates in the having clause are applied after the formation of groups whereas predicates in the where clause are applied before forming groups Null Values It is possible for tuples to have a null value, denoted by null, for some of their attributes null signifies an unknown value or that a value does not exist. The predicate is null can be used to check for null values. Example: Find all loan numbers that appear in the loan relation with null values for amount. select loan_number from loan where amount is null The result of any arithmetic expression involving null is null Example: 5 + null returns null However, aggregate functions simply ignore nulls Null Values and Three Valued Logic Any comparison with null returns unknown Example: 5 < null or null <> null or null = null Three-valued logic using the truth value unknown: OR: (unknown or true) = true, (unknown or false) = unknown (unknown or unknown) = unknown AND: (true and unknown) = unknown, (false and unknown) = false, (unknown and unknown) = unknown

- 63. NOT: (not unknown) = unknown “P is unknown” evaluates to true if predicate P evaluates to unknown Result of where clause predicate is treated as false if it evaluates to unknown Null Values and Aggregates Total all loan amounts select sum (amount ) from loan Above statement ignores null amounts Result is null if there is no non-null amount All aggregate operations except count(*) ignore tuples with null values on the aggregated attributes. Nested Subqueries SQL provides a mechanism for the nesting of subqueries. A subquery is a select-from-where expression that is nested within another query. A common use of subqueries is to perform tests for set membership, set comparisons, and set cardinality. Example Query Find all customers who have both an account and a loan at the bank. select distinct customer_name from borrower where customer_name in (select customer_name from depositor ) Find all customers who have a loan at the bank but do not have an account at the bank select distinct customer_name from borrower where customer_name not in (select customer_name from depositor ) Find all customers who have both an account and a loan at the Perryridge branch select distinct customer_name from borrower, loan where borrower.loan_number = loan.loan_number and branch_name = 'Perryridge' and (branch_name, customer_name ) in

- 64. (select branch_name, customer_name from depositor, account where depositor.account_number = account.account_number ) Note: Above query can be written in a much simpler manner. The formulation above is simply to illustrate SQL features. Set Comparison Find all branches that have greater assets than some branch located in Brooklyn. select distinct T.branch_name from branch as T, branch as S where T.assets > S.assets and S.branch_city = 'Brooklyn' Same query using > some clause select branch_name from branch where assets > some (select assets from branch where branch_city = 'Brooklyn') Definition of Some Clause F <comp> some r ⇔ ∃ t ∈ r such that (F <comp> t), where <comp> can be <, ≤, >, ≥, = or ≠

- 65. Example Query Find the names of all branches that have greater assets than all branches located in Brooklyn. select branch_name from branch where assets > all (select assets from branch where branch_city = 'Brooklyn') Definition of all Clause F <comp> all r ⇔ ∀ t ∈ r (F <comp> t)
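
The > some and > all comparisons can be read as "there exists" and "for every" tests over the rows returned by the subquery. The sketch below mimics that reading with Python's built-in `any` and `all`. The asset figures and the helper names `gt_some` and `gt_all` are hypothetical, and null values are ignored for simplicity.

```python
# Hypothetical data: the list stands in for the result of
# (select assets from branch where branch_city = 'Brooklyn').
brooklyn_assets = [7_100_000, 9_000_000]
branches = {
    "Downtown": 9_000_000,
    "Round Hill": 8_000_000,
    "Perryridge": 1_700_000,
    "Pownal": 9_500_000,
}

def gt_some(value, subquery_rows):
    # value > some r  <=>  there exists t in r with value > t
    return any(value > t for t in subquery_rows)

def gt_all(value, subquery_rows):
    # value > all r  <=>  value > t holds for every t in r
    return all(value > t for t in subquery_rows)

some_result = sorted(n for n, a in branches.items() if gt_some(a, brooklyn_assets))
all_result = sorted(n for n, a in branches.items() if gt_all(a, brooklyn_assets))
print(some_result)
print(all_result)
```

On an empty subquery result, `gt_some` is false and `gt_all` is true, which agrees with the usual SQL reading of some and all.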

- 66. Test for Empty Relations The exists construct returns the value true if the argument subquery is nonempty. exists r ⇔ r ≠ Ø not exists r ⇔ r = Ø Example Query Find all customers who have an account at all branches located in Brooklyn. select distinct S.customer_name from depositor as S where not exists ( (select branch_name from branch

- 67. where branch_city = 'Brooklyn') except (select R.branch_name from depositor as T, account as R where T.account_number = R.account_number and S.customer_name = T.customer_name )) Note that X – Y = Ø ⇔ X ⊆ Y Note: Cannot write this query using = all and its variants Test for Absence of Duplicate Tuples The unique construct tests whether a subquery has any duplicate tuples in its result. Find all customers who have at most one account at the Perryridge branch. select T.customer_name from depositor as T where unique ( select R.customer_name from account, depositor as R where T.customer_name = R.customer_name and R.account_number = account.account_number and account.branch_name = 'Perryridge') Example Query Find all customers who have at least two accounts at the Perryridge branch. select distinct T.customer_name from depositor as T where not unique ( select R.customer_name from account, depositor as R where T.customer_name = R.customer_name and R.account_number = account.account_number and account.branch_name = 'Perryridge') Variable from outer level is known as a correlation variable
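
The "account at all branches located in Brooklyn" query can be tried on a tiny in-memory SQLite database. This is a sketch under assumptions: the rows are invented for illustration, and the inner parentheses around the two arms of except are dropped because SQLite does not accept them there. The unique construct is not shown, since SQLite does not implement it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
create table branch (branch_name text, branch_city text);
create table account (account_number text, branch_name text);
create table depositor (customer_name text, account_number text);
-- Hypothetical data: two Brooklyn branches, one branch elsewhere.
insert into branch values ('Brighton', 'Brooklyn'), ('Downtown', 'Brooklyn'),
                          ('Perryridge', 'Horseneck');
insert into account values ('A-101', 'Downtown'), ('A-201', 'Brighton'),
                           ('A-217', 'Brighton'), ('A-102', 'Perryridge');
insert into depositor values ('Johnson', 'A-101'), ('Johnson', 'A-201'),
                             ('Jones', 'A-217'), ('Hayes', 'A-102');
""")

# X = Brooklyn branches, Y = branches where customer S has an account.
# "not exists (X except Y)" holds exactly when X is a subset of Y.
rows = conn.execute("""
    select distinct S.customer_name
    from depositor as S
    where not exists (
        select branch_name from branch where branch_city = 'Brooklyn'
        except
        select R.branch_name
        from depositor as T, account as R
        where T.account_number = R.account_number
          and S.customer_name = T.customer_name
    )
""").fetchall()
print(rows)
```

Only Johnson has accounts at both Brooklyn branches in this sample, so only Johnson is returned. `S.customer_name` inside the subquery is the correlation variable.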

- 68. Derived Relations SQL allows a subquery expression to be used in the from clause Find the average account balance of those branches where the average account balance is greater than $1200. select branch_name, avg_balance from (select branch_name, avg (balance) from account group by branch_name ) as branch_avg ( branch_name, avg_balance ) where avg_balance > 1200 Note that we do not need to use the having clause, since we compute the temporary (view) relation branch_avg in the from clause, and the attributes of branch_avg can be used directly in the where clause. With Clause The with clause provides a way of defining a temporary view whose definition is available only to the query in which the with clause occurs. Find all accounts with the maximum balance with max_balance (value) as (select max (balance) from account) select account_number from account, max_balance where account.balance = max_balance.value Complex Queries using With Clause Find all branches where the total account deposit is greater than the average of the total account deposits at all branches. with branch_total (branch_name, value) as (select branch_name, sum (balance) from account group by branch_name), branch_total_avg (value) as (select avg (value) from branch_total) select branch_name

- 69. from branch_total, branch_total_avg where branch_total.value >= branch_total_avg.value Views In some cases, it is not desirable for all users to see the entire logical model (that is, all the actual relations stored in the database.) Consider a person who needs to know a customer’s name, loan number and branch name, but has no need to see the loan amount. This person should see a relation described, in SQL, by (select customer_name, borrower.loan_number, branch_name from borrower, loan where borrower.loan_number = loan.loan_number ) A view provides a mechanism to hide certain data from the view of certain users. Any relation that is not of the conceptual model but is made visible to a user as a “virtual relation” is called a view. View Definition A view is defined using the create view statement which has the form create view v as < query expression > where <query expression> is any legal SQL expression. The view name is represented by v. Once a view is defined, the view name can be used to refer to the virtual relation that the view generates. When a view is created, the query expression is stored in the database; the expression is substituted into queries using the view. Example Queries A view consisting of branches and their customers create view all_customer as (select branch_name, customer_name from depositor, account where depositor.account_number = account.account_number ) union (select branch_name, customer_name

- 70. from borrower, loan where borrower.loan_number = loan.loan_number ) Find all customers of the Perryridge branch select customer_name from all_customer where branch_name = 'Perryridge' Views Defined Using Other Views One view may be used in the expression defining another view A view relation v1 is said to depend directly on a view relation v2 if v2 is used in the expression defining v1 A view relation v1 is said to depend on view relation v2 if either v1 depends directly on v2 or there is a path of dependencies from v1 to v2 A view relation v is said to be recursive if it depends on itself. View Expansion A way to define the meaning of views defined in terms of other views. Let view v1 be defined by an expression e1 that may itself contain uses of view relations. View expansion of an expression repeats the following replacement step: repeat Find any view relation vi in e1 Replace the view relation vi by the expression defining vi until no more view relations are present in e1 As long as the view definitions are not recursive, this loop will terminate Modification of the Database – Deletion Delete all account tuples at the Perryridge branch

- 71. delete from account where branch_name = 'Perryridge' Delete all accounts at every branch located in the city ‘Needham’. delete from account where branch_name in (select branch_name from branch where branch_city = 'Needham') Example Query Delete the record of all accounts with balances below the average at the bank. delete from account where balance < (select avg (balance ) from account ) Problem: as we delete tuples from account, the average balance changes Solution used in SQL: 1. First, compute avg balance and find all tuples to delete 2. Next, delete all tuples found above (without recomputing avg or retesting the tuples) Modification of the Database – Insertion Add a new tuple to account insert into account values ('A-9732', 'Perryridge', 1200) or equivalently insert into account (branch_name, balance, account_number) values ('Perryridge', 1200, 'A-9732') Add a new tuple to account with balance set to null insert into account values ('A-777','Perryridge', null )
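
The "compute the average first, then delete" rule for the below-average deletion can be observed with a small sketch. It runs the delete statement in an in-memory SQLite database and compares the outcome with a deliberately wrong strategy that recomputes the average after every deletion. The balances and the helper `naive_delete` are hypothetical.

```python
import sqlite3

balances = [100, 500, 600]  # hypothetical; the average is 400

conn = sqlite3.connect(":memory:")
conn.execute(
    "create table account (account_number text, branch_name text, balance integer)"
)
conn.executemany(
    "insert into account values (?, ?, ?)",
    [(f"A-{i}", "Perryridge", b) for i, b in enumerate(balances, start=1)],
)

# The average is evaluated once, against the original contents of account.
conn.execute("delete from account where balance < (select avg(balance) from account)")
remaining = [r[0] for r in conn.execute("select balance from account order by balance")]

def naive_delete(values):
    """Incorrect strategy: recompute the average after each deletion."""
    rows = list(values)
    i = 0
    while i < len(rows):
        if rows[i] < sum(rows) / len(rows):
            rows.pop(i)      # the average shifts, so later tuples are retested
        else:
            i += 1
    return rows

print(remaining)
print(naive_delete(balances))
```

With these balances only the 100 account is below the original average of 400, so 500 and 600 survive. The naive strategy would also remove 500, because the average rises to 550 after the first deletion.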

- 72. Provide as a gift for all loan customers of the Perryridge branch, a $200 savings account. Let the loan number serve as the account number for the new savings account insert into account select loan_number, branch_name, 200 from loan where branch_name = 'Perryridge' insert into depositor select customer_name, loan_number from loan, borrower where branch_name = 'Perryridge' and loan.loan_number = borrower.loan_number The select from where statement is evaluated fully before any of its results are inserted into the relation (otherwise queries like insert into table1 select * from table1 would cause problems) Modification of the Database – Updates Increase all accounts with balances over $10,000 by 6%, all other accounts receive 5%. Write two update statements: update account set balance = balance * 1.06 where balance > 10000 update account set balance = balance * 1.05 where balance <= 10000 The order is important Can be done better using the case statement (next slide) Case Statement for Conditional Updates Same query as before: Increase all accounts with balances over $10,000 by 6%, all other accounts receive 5%. update account set balance = case

- 73. when balance <= 10000 then balance * 1.05 else balance * 1.06 end Update of a View Create a view of all loan data in the loan relation, hiding the amount attribute create view branch_loan as select loan_number, branch_name from loan Add a new tuple to branch_loan insert into branch_loan values ('L-37', 'Perryridge') This insertion must be represented by the insertion of the tuple ('L-37', 'Perryridge', null ) into the loan relation Some updates through views are impossible to translate into updates on the database relations create view v as select loan_number, branch_name, amount from loan where branch_name = 'Perryridge' insert into v values ( 'L-99','Downtown', '23') Others cannot be translated uniquely insert into all_customer values ('Perryridge', 'John') Have to choose loan or account, and create a new loan/account number! Most SQL implementations allow updates only on simple views (without aggregates) defined on a single relation Joined Relations** Join operations take two relations and return as a result another relation. These additional operations are typically used as subquery expressions in the from clause Join condition – defines which tuples in the two relations match, and what attributes are present in the result of the join. Join type – defines how tuples in each relation that do not match any tuple in the other relation (based on the join condition) are treated.

- 74. Joined Relations – Datasets for Examples Relation loan Relation borrower Note: borrower information missing for L-260 and loan information missing for L-155 Joined Relations – Examples loan inner join borrower on loan.loan_number = borrower.loan_number loan left outer join borrower on loan.loan_number = borrower.loan_number

- 75. loan natural inner join borrower loan natural right outer join borrower loan full outer join borrower using (loan_number) Find all customers who have either an account or a loan (but not both) at the bank. select customer_name from (depositor natural full outer join borrower ) where account_number is null or loan_number is null
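
A minimal sketch of how the join type changes the treatment of unmatched tuples, run in an in-memory SQLite database. The rows are an assumption chosen to agree with the note that borrower information is missing for L-260 and loan information is missing for L-155; the original dataset figures are not reproduced in this transcript. Only inner join and left outer join are used, since right and full outer joins are available only in newer SQLite releases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
create table loan (loan_number text, branch_name text, amount integer);
create table borrower (customer_name text, loan_number text);
-- Assumed sample rows: L-260 has no borrower, L-155 has no loan tuple.
insert into loan values ('L-170', 'Downtown', 3000), ('L-230', 'Redwood', 4000),
                        ('L-260', 'Perryridge', 1700);
insert into borrower values ('Jones', 'L-170'), ('Smith', 'L-230'), ('Hayes', 'L-155');
""")

# Inner join: unmatched tuples on either side are dropped.
inner = conn.execute("""
    select loan.loan_number, customer_name
    from loan inner join borrower on loan.loan_number = borrower.loan_number
    order by loan.loan_number
""").fetchall()

# Left outer join: every loan tuple is kept, padded with null where unmatched.
left = conn.execute("""
    select loan.loan_number, customer_name
    from loan left outer join borrower on loan.loan_number = borrower.loan_number
    order by loan.loan_number
""").fetchall()

# Swapping the operands keeps every borrower tuple instead,
# which gives the same rows as "loan right outer join borrower".
swapped = conn.execute("""
    select borrower.loan_number, customer_name, amount
    from borrower left outer join loan on loan.loan_number = borrower.loan_number
    order by borrower.loan_number
""").fetchall()

print(inner, left, swapped, sep="\n")
```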

- 77. Entity-Relationship Model Entity Sets Relationship Sets Design Issues Mapping Constraints Keys E-R Diagram Extended E-R Features Design of an E-R Database Schema Reduction of an E-R Schema to Tables Entity Sets A database can be modeled as: a collection of entities, relationship among entities. An entity is an object that exists and is distinguishable from other objects. Example: specific person, company, event, plant Entities have attributes Example: people have names and addresses An entity set is a set of entities of the same type that share the same properties. Example: set of all persons, companies, trees, holidays Entity Sets customer and loan

- 78. Attributes An entity is represented by a set of attributes, that is descriptive properties possessed by all members of an entity set. Example: customer = (customer-id, customer-name, customer-street, customer-city) loan = (loan-number, amount) Domain – the set of permitted values for each attribute Attribute types: Simple and composite attributes.

- 79. Single-valued and multi-valued attributes E.g. multivalued attribute: phone-numbers Derived attributes Can be computed from other attributes E.g. age, given date of birth Composite Attributes Relationship Sets A relationship is an association among several entities Example: Hayes (customer entity), depositor (relationship set), A-102 (account entity) A relationship set is a mathematical relation among n ≥ 2 entities, each taken from entity sets {(e1, e2, …, en) | e1 ∈ E1, e2 ∈ E2, …, en ∈ En} where (e1, e2, …, en) is a relationship Example: (Hayes, A-102) ∈ depositor

- 80. Relationship Set borrower Relationship Sets (Cont.) An attribute can also be property of a relationship set. For instance, the depositor relationship set between entity sets customer and account may have the attribute access-date

- 81. Degree of a Relationship Set Refers to number of entity sets that participate in a relationship set. Relationship sets that involve two entity sets are binary (or degree two). Generally, most relationship sets in a database system are binary. Relationship sets may involve more than two entity sets. E.g. Suppose employees of a bank may have jobs (responsibilities) at multiple branches, with different jobs at different branches. Then there is a ternary

- 82. relationship set between entity sets employee, job and branch Relationships between more than two entity sets are rare. Most relationships are binary. (More on this later.) Mapping Cardinalities Express the number of entities to which another entity can be associated via a relationship set. Most useful in describing binary relationship sets. For a binary relationship set the mapping cardinality must be one of the following types: One to one One to many Many to one Many to many
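
The four mapping cardinalities can be made concrete by inspecting an instance of a binary relationship set. The function below is an illustrative sketch, not something from the slides: it reports the most restrictive type that a given set of (a, b) pairs is consistent with. A mapping cardinality is really a constraint on all legal instances, so one instance can only rule types out.

```python
from collections import defaultdict

def mapping_cardinality(pairs):
    """Classify a binary relationship set from A to B, given as (a, b) pairs."""
    a_to_b, b_to_a = defaultdict(set), defaultdict(set)
    for a, b in pairs:
        a_to_b[a].add(b)
        b_to_a[b].add(a)
    a_multi = any(len(bs) > 1 for bs in a_to_b.values())   # some a has several b
    b_multi = any(len(as_) > 1 for as_ in b_to_a.values())  # some b has several a
    if a_multi and b_multi:
        return "many to many"
    if a_multi:
        return "one to many"
    if b_multi:
        return "many to one"
    return "one to one"

# Hypothetical borrower instances (customer, loan).
print(mapping_cardinality([("Hayes", "L-15"), ("Hayes", "L-16")]))
print(mapping_cardinality([("Jones", "L-17"), ("Smith", "L-17")]))
```

The first call reports one to many (one customer, several loans) and the second reports many to one (several customers share one loan).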

- 84. Mapping Cardinalities affect ER Design Can make access-date an attribute of account, instead of a relationship attribute, if each account can have only one customer I.e., the relationship from account to customer is many to one, or equivalently, customer to account is one to many

- 85. E-R Diagrams Rectangles represent entity sets. Diamonds represent relationship sets. Lines link attributes to entity sets and entity sets to relationship sets. Ellipses represent attributes Double ellipses represent multivalued attributes. Dashed ellipses denote derived attributes.

- 86. Underline indicates primary key attributes (will study later) E-R Diagram With Composite, Multivalued, and Derived Attributes Relationship Sets with Attributes

- 87. Roles Entity sets of a relationship need not be distinct The labels “manager” and “worker” are called roles; they specify how employee entities interact via the works-for relationship set. Roles are indicated in E-R diagrams by labeling the lines that connect diamonds to rectangles. Role labels are optional, and are used to clarify semantics of the relationship Cardinality Constraints We express cardinality constraints by drawing either a directed line (→), signifying “one,” or an undirected line (—), signifying “many,” between the relationship set and the entity set. E.g.: One-to-one relationship: A customer is associated with at most one loan via the relationship borrower A loan is associated with at most one customer via borrower

- 88. One-To-Many Relationship In the one-to-many relationship a loan is associated with at most one customer via borrower, a customer is associated with several (including 0) loans via borrower Many-To-One Relationships In a many-to-one relationship a loan is associated with several (including 0) customers via borrower, a customer is associated with at most one loan via borrower

- 89. Many-To-Many Relationship A customer is associated with several (possibly 0) loans via borrower A loan is associated with several (possibly 0) customers via borrower Participation of an Entity Set in a Relationship Set Total participation (indicated by double line): every entity in the entity set participates in at least one relationship in the relationship set E.g. participation of loan in borrower is total

- 90. every loan must have a customer associated to it via borrower Partial participation: some entities may not participate in any relationship in the relationship set E.g. participation of customer in borrower is partial Alternative Notation for Cardinality Limits Cardinality limits can also express participation constraints Keys A super key of an entity set is a set of one or

- 91. more attributes whose values uniquely determine each entity. A candidate key of an entity set is a minimal super key Customer-id is candidate key of customer account-number is candidate key of account Although several candidate keys may exist, one of the candidate keys is selected to be the primary key. Keys for Relationship Sets The combination of primary keys of the participating entity sets forms a super key of a relationship set. (customer-id, account-number) is the super key of depositor NOTE: this means a pair of entity sets can have at most one relationship in a particular relationship set. E.g. if we wish to track all access-dates to each account by each customer, we cannot assume a relationship for each access. We can use a multivalued attribute though Must consider the mapping cardinality of the relationship set when deciding what the candidate keys are Need to consider semantics of relationship set in selecting the primary key in case of more than one candidate key E-R Diagram with a Ternary Relationship

- 92. Cardinality Constraints on Ternary Relationship We allow at most one arrow out of a ternary (or greater degree) relationship to indicate a cardinality constraint E.g. an arrow from works-on to job indicates each employee works on at most one job at any branch. If there is more than one arrow, there are two ways of defining the meaning. E.g. a ternary relationship R between A, B and C with arrows to B and C could mean 1. each A entity is associated with a unique entity from B and C or 2. each pair of entities from (A, B) is associated with a unique C entity, and each pair (A, C) is associated with a unique B Each alternative has been used in different formalisms To avoid confusion we outlaw more than one arrow Binary Vs. Non-Binary

- 93. Relationships Some relationships that appear to be non-binary may be better represented using binary relationships E.g. A ternary relationship parents, relating a child to his/her father and mother, is best replaced by two binary relationships, father and mother Using two binary relationships allows partial information (e.g. only mother being known) But there are some relationships that are naturally non-binary E.g. works-on Converting Non-Binary Relationships to Binary Form In general, any non-binary relationship can be represented using binary relationships by creating an artificial entity set. Replace R between entity sets A, B and C by an entity set E, and three relationship sets: 1. RA, relating E and A 2. RB, relating E and B 3. RC, relating E and C Create a special identifying attribute for E Add any attributes of R to E For each relationship (ai , bi , ci) in R, create 1. a new entity ei in the entity set E 2. add (ei , ai ) to RA 3. add (ei , bi ) to RB 4. add (ei , ci ) to RC Also need to translate constraints

- 94. Translating all constraints may not be possible There may be instances in the translated schema that cannot correspond to any instance of R Exercise: add constraints to the relationships RA, RB and RC to ensure that a newly created entity corresponds to exactly one entity in each of entity sets A, B and C We can avoid creating an identifying attribute by making E a weak entity set (described shortly) identified by the three relationship sets Design Issues Use of entity sets vs. attributes Choice mainly depends on the structure of the enterprise being modeled, and on the semantics associated with the attribute in question. Use of entity sets vs. relationship sets Possible guideline is to designate a relationship set to describe an action that occurs between entities Binary versus n-ary relationship sets Although it is possible to replace any nonbinary (n-ary, for n > 2) relationship set by a number of distinct binary relationship sets, a n-ary relationship set shows more clearly that several entities participate in a single relationship. Placement of relationship attributes How about doing an ER design interactively on the board? Suggest an application to be modeled.
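
The conversion of a ternary relationship set R into an artificial entity set E plus the binary relationship sets RA, RB and RC can be sketched in a few lines. The function name `to_binary`, the generated identifiers e1, e2, ... and the sample works-on triples are assumptions made for illustration. Constraint translation is not attempted.

```python
from itertools import count

def to_binary(R):
    """Replace ternary relationship set R (triples a, b, c) by E, RA, RB, RC."""
    ids = count(1)
    E, RA, RB, RC = [], [], [], []
    for a, b, c in R:
        e = f"e{next(ids)}"   # special identifying attribute for the new entity
        E.append(e)
        RA.append((e, a))     # relate the new entity to its A entity
        RB.append((e, b))     # ... to its B entity
        RC.append((e, c))     # ... to its C entity
    return E, RA, RB, RC

# Hypothetical works-on instance: (employee, job, branch).
works_on = [("Jones", "teller", "Downtown"), ("Jones", "auditor", "Perryridge")]
E, RA, RB, RC = to_binary(works_on)

# Joining RA, RB and RC on the artificial entity recovers the original triples.
a_of, b_of, c_of = dict(RA), dict(RB), dict(RC)
rebuilt = [(a_of[e], b_of[e], c_of[e]) for e in E]
print(E, RA, RB, RC, sep="\n")
```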

- 95. Specialization Top-down design process; we designate subgroupings within an entity set that are distinctive from other entities in the set. These subgroupings become lower-level entity sets that have attributes or participate in relationships that do not apply to the higher-level entity set. Depicted by a triangle component labeled ISA (E.g. customer “is a” person). Attribute inheritance – a lower-level entity set inherits all the attributes and relationship participation of the higher-level entity set to which it is linked. Specialization Example

- 96. Generalization A bottom-up design process – combine a number of entity sets that share the same features into a higher-level entity set. Specialization and generalization are simple inversions of each other; they are represented in an E-R diagram in the same way.

- 97. The terms specialization and generalization are used interchangeably. Specialization and Generalization (Contd.) Can have multiple specializations of an entity set based on different features. E.g. permanent-employee vs. temporary-employee, in addition to officer vs. secretary vs. teller Each particular employee would be a member of one of permanent-employee or temporary-employee, and also a member of one of officer, secretary, or teller The ISA relationship is also referred to as superclass - subclass relationship Design Constraints on a Specialization/Generalization Constraint on which entities can be members of a given lower-level entity set. condition-defined E.g. all customers over 65 years are members of senior-citizen entity set; senior-citizen ISA person. user-defined Constraint on whether or not entities may belong to more than one lower-level entity set within a single generalization. Disjoint

- 98. an entity can belong to only one lower-level entity set Noted in E-R diagram by writing disjoint next to the ISA triangle Overlapping an entity can belong to more than one lower-level entity set Design Constraints on a Specialization/Generalization (Contd.) Completeness constraint -- specifies whether or not an entity in the higher-level entity set must belong to at least one of the lower-level entity sets within a generalization. total : an entity must belong to one of the lower-level entity sets partial: an entity need not belong to one of the lower-level entity sets E-R Design Decisions The use of an attribute or entity set to represent an object. Whether a real-world concept is best expressed by an entity set or a relationship set. The use of a ternary relationship versus a pair of binary relationships. The use of specialization/generalization – contributes to modularity in the design. E-R Diagram for a Banking Enterprise

- 99. How about doing another ER design interactively on the board?

- 100. Summary of Symbols Used in E-R Notation

- 102. UML UML: Unified Modeling Language UML has many components to graphically model different aspects of an entire software system UML Class Diagrams correspond to E-R Diagrams, but with several differences. Summary of UML Class Diagram Notation

- 103. Entity sets are shown as boxes, and attributes are shown within the box, rather than as separate ellipses in E-R diagrams. Binary relationship sets are represented in UML by just drawing a line connecting the entity sets. The relationship set name is written adjacent to the line. The role played by an entity set in a relationship set may also be specified by writing the role name on the line, adjacent to the entity set. The relationship set name may alternatively be written in a box, along with attributes of the relationship set, and the box is connected, using a dotted line, to the line depicting the relationship set.

- 104. Non-binary relationships drawn using diamonds, just as in ER diagrams *Note reversal of position in cardinality constraint depiction *Generalization can use merged or separate arrows independent of disjoint/overlapping UML Class Diagrams (Contd.) Cardinality constraints are specified in the form l..h, where l denotes the minimum and h the maximum number of relationships an entity can participate in. Beware: the positioning of the constraints is exactly the reverse of the positioning of constraints in E-R diagrams. The constraint 0..* on the E2 side and 0..1 on the

- 105. E1 side means that each E2 entity can participate in at most one relationship, whereas each E1 entity can participate in many relationships; in other words, the relationship is many to one from E2 to E1. Single values, such as 1 or * may be written on edges; The single value 1 on an edge is treated as equivalent to 1..1, while * is equivalent to 0..*. Reduction of an E-R Schema to Tables Primary keys allow entity sets and relationship sets to be expressed uniformly as tables which represent the contents of the database. A database which conforms to an E-R diagram can be represented by a collection of tables. For each entity set and relationship set there is a unique table which is assigned the name of the corresponding entity set or relationship set. Each table has a number of columns (generally corresponding to attributes), which have unique names. Converting an E-R diagram to a table format is the basis for deriving a relational database design from an E-R diagram. Representing Entity Sets as

- 106. Tables A strong entity set reduces to a table with the same attributes. Composite and Multivalued Attributes Composite attributes are flattened out by creating a separate attribute for each component attribute E.g. given entity set customer with composite attribute name with component attributes first-name and last-name the table corresponding to the entity set has two attributes name.first-name and name.last-name A multivalued attribute M of an entity E is represented by a separate table EM Table EM has attributes corresponding to the primary key of E and an attribute corresponding to multivalued attribute M E.g. Multivalued attribute dependent-names of employee is represented by a table employee-dependent-names( employee-id, dname) Each value of the multivalued attribute maps to a separate row of the table

- 107. EM E.g., an employee entity with primary key John and dependents Johnson and Johndotir maps to two rows: (John, Johnson) and (John, Johndotir) Representing Weak Entity Sets Representing Relationship Sets as Tables A many-to-many relationship set is represented as a table with columns for the primary keys of the two participating entity sets, and any descriptive attributes of the relationship set. E.g.: table for relationship set borrower

- 108. Redundancy of Tables Many-to-one and one-to-many relationship sets that are total on the many side can be represented by adding an extra attribute to the many side, containing the primary key of the one side E.g.: Instead of creating a table for relationship account-branch, add an attribute branch to the entity set account
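
A minimal sketch of this reduction in an in-memory SQLite database: no separate account_branch table is created, and the many side (account) carries the primary key of the one side (branch). Names are written with underscores and the extra attribute is called branch_name here, both assumptions made for the example; the rows are hypothetical. The `not null` constraint reflects total participation on the many side.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
create table branch (branch_name text primary key, branch_city text);
-- The relationship is folded into account as an extra attribute.
create table account (
    account_number text primary key,
    balance        integer,
    branch_name    text not null references branch (branch_name)
);
insert into branch values ('Perryridge', 'Horseneck'), ('Downtown', 'Brooklyn');
insert into account values ('A-101', 500, 'Downtown'), ('A-102', 400, 'Perryridge');
""")

# The relationship is still recoverable with an ordinary join.
rows = conn.execute("""
    select account_number, branch_city
    from account, branch
    where account.branch_name = branch.branch_name
    order by account_number
""").fetchall()

# An account without a branch violates total participation.
try:
    conn.execute("insert into account values ('A-103', 100, null)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

print(rows, rejected)
```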

- 109. For one-to-one relationship sets, either side can be chosen to act as the “many” side. That is, an extra attribute can be added to either of the tables corresponding to the two entity sets. If participation is partial on the many side, replacing a table by an extra attribute in the relation corresponding to the “many” side could result in null values. The table corresponding to a relationship set linking a weak entity set to its identifying strong entity set is redundant. E.g., the payment table already contains the information that would appear in the loan-payment table (i.e., the columns loan-number and payment-number). Representing Specialization as Tables Method 1: Form a table for the higher-level entity set. Form a table for each lower-level entity set, including the primary key of the higher-level entity set and the local attributes. Tables and their attributes: person (name, street, city); customer (name, credit-rating); employee (name, salary).

- 110. Drawback of Method 1: getting information about, e.g., employee requires accessing two tables. Method 2: Form a table for each entity set with all local and inherited attributes. Tables and their attributes: person (name, street, city); customer (name, street, city, credit-rating); employee (name, street, city, salary). If specialization is total, the table for the generalized entity (person) is not required to store information. It can be defined as a “view” relation containing the union of the specialization tables, but an explicit table may still be needed for foreign key constraints. Drawback: street and city may be stored redundantly for persons who are both customers and employees. Relations Corresponding to Aggregation
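Method 2 with a total specialization can be sketched as follows, using Python's sqlite3 module. The person relation is not stored; it is defined as a view over the union of customer and employee. Everything concrete here is an assumption for illustration: the underscore in credit_rating, the column types, and the sample rows.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Method 2: every table carries the inherited attributes (name, street, city).
    create table customer (name text primary key, street text, city text,
                           credit_rating integer);
    create table employee (name text primary key, street text, city text,
                           salary integer);
    -- person is derived, not stored: the union of the specialization tables.
    create view person as
        select name, street, city from customer
        union
        select name, street, city from employee;
""")

# Hypothetical sample data; Hayes is both a customer and an employee.
conn.execute("insert into customer values ('Hayes', 'Main', 'Harrison', 7)")
conn.execute("insert into employee values ('Hayes', 'Main', 'Harrison', 50000)")
conn.execute("insert into employee values ('Jones', 'North', 'Rye', 42000)")

persons = conn.execute(
    "select name, street, city from person order by name"
).fetchall()
print(persons)
conn.close()
```

Because union removes duplicates, Hayes appears once in person even though street and city are stored twice, which is the redundancy the slide warns about.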

- 111. 2014 11/16/2014

- 112. Advanced SQL SQL Data Types and Schemas Integrity Constraints Authorization Embedded SQL Dynamic SQL Functions and Procedural Constructs** Recursive Queries** Advanced SQL Features** Built-in Data Types in SQL date: Dates, containing a (4 digit) year, month and day. Example: date '2005-7-27' time: Time of day, in hours, minutes and seconds. Example: time '09:00:30' time '09:00:30.75' timestamp: date plus time of day. Example: timestamp '2005-7-27 09:00:30.75' interval: period of time. Example: interval '1' day Subtracting a date/time/timestamp value from another gives an interval value. Interval values can be added to date/time/timestamp values. Can extract values of individual fields from date/time/timestamp. Example: extract (year from r.starttime) Can cast string types to date/time/timestamp. Example: cast <string-valued-expression> as date Example: cast <string-valued-expression> as time User-Defined Types The create type construct in SQL creates a user-defined type: create type Dollars as numeric (12,2) final The create domain construct in SQL-92 creates user-defined domain types:

- 113. create domain person_name char(20) not null Types and domains are similar. Domains can have constraints, such as not null, specified on them. Domain Constraints Domain constraints are the most elementary form of integrity constraint. They test values inserted in the database, and test queries to ensure that the comparisons make sense. New domains can be created from existing data types. Example: create domain Dollars numeric(12,2) create domain Pounds numeric(12,2) We cannot assign or compare a value of type Dollars to a value of type Pounds. However, we can convert the type as below: (cast r.A as Pounds) (the value should also be multiplied by the dollar-to-pound conversion rate). Large-Object Types Large objects (photos, videos, CAD files, etc.) are stored as a large object: blob: binary large object; the object is a large collection of uninterpreted binary data (whose interpretation is left to an application outside of the database system). clob: character large object; the object is a large collection of character data. When a query returns a large object, a pointer is returned rather than the large object itself. Bfile Nclob Integrity Constraints Integrity constraints guard against accidental damage to the database, by ensuring that authorized changes to the database do not result in a loss of data consistency. A checking account must have a balance greater than $10,000.00. A salary of a bank employee must be at least $4.00 an hour. A customer must have a (non-null) phone number. Constraints on a Single

- 114. Relation not null primary key unique check (P), where P is a predicate Not Null Constraint Declare branch_name for branch to be not null: branch_name char(15) not null Declare the domain Dollars to be not null: create domain Dollars numeric(12,2) not null The Unique Constraint unique (A1, A2, …, Am) The unique specification states that the attributes A1, A2, …, Am form a candidate key. Candidate keys are permitted to be null (in contrast to primary keys). The check clause check (P), where P is a predicate Example: Declare branch_name as the primary key for branch and ensure that the values of assets are non-negative. create table branch (branch_name char(15), branch_city char(30), assets integer, primary key (branch_name), check (assets >= 0)) The check clause in SQL-92 permits domains to be restricted: Use check clause to ensure that an hourly_wage domain allows only values

- 115. greater than a specified value. create domain hourly_wage numeric(5,2) constraint value_test check(value >= 4.00) The domain has a constraint that ensures that the hourly_wage is at least 4.00. The clause constraint value_test is optional; it is useful to indicate which constraint an update violated. Referential Integrity Ensures that a value that appears in one relation for a given set of attributes also appears for a certain set of attributes in another relation. Example: If “Perryridge” is a branch name appearing in one of the tuples in the account relation, then there exists a tuple in the branch relation for branch “Perryridge”. Primary and candidate keys and foreign keys can be specified as part of the SQL create table statement: The primary key clause lists attributes that comprise the primary key. The unique clause lists attributes that comprise a candidate key. The foreign key clause lists the attributes that comprise the foreign key and the name of the relation referenced by the foreign key. By default, a foreign key references the primary key attributes of the referenced table. Referential Integrity in SQL – Example create table customer (customer_name char(20), customer_street char(30), customer_city char(30), primary key (customer_name)) create table branch (branch_name char(15), branch_city char(30), assets numeric(12,2), primary key (branch_name)) create table account (account_number char(10), branch_name char(15), balance integer, primary key (account_number), foreign key (branch_name) references branch)
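How a check clause and a foreign key reject bad data can be tried out with Python's sqlite3 module. This sketch reuses the branch and account definitions from the slides; the try_insert helper, the sample rows, and the pragma line are assumptions of the example. The pragma is a SQLite detail: SQLite enforces foreign keys only when it is switched on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("pragma foreign_keys = on")  # SQLite-specific: enable FK enforcement
conn.executescript("""
    create table branch (
        branch_name char(15),
        branch_city char(30),
        assets      integer,
        primary key (branch_name),
        check (assets >= 0)
    );
    create table account (
        account_number char(10),
        branch_name    char(15),
        balance        integer,
        primary key (account_number),
        foreign key (branch_name) references branch
    );
""")

def try_insert(sql):
    """Hypothetical helper: run one insert and report whether it was accepted."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"

results = [
    try_insert("insert into branch values ('Perryridge', 'Horseneck', 1700000)"),
    try_insert("insert into branch values ('Downtown', 'Brooklyn', -1)"),    # violates check
    try_insert("insert into account values ('A-102', 'Perryridge', 400)"),
    try_insert("insert into account values ('A-999', 'Nowhere', 100)"),      # no such branch
]
for line in results:
    print(line)
conn.close()
```

The second and fourth inserts are rejected: the first by the check on assets, the second because no branch tuple exists for the referenced branch_name.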

- 116. create table depositor (customer_name char(20), account_number char(10), primary key (customer_name, account_number), foreign key (account_number) references account, foreign key (customer_name) references customer) When a referential integrity constraint is violated, the normal procedure is to reject the action that caused the violation. Exception: for a delete or update on the referenced relation, the foreign key clause can specify an action, such as cascade, to be taken instead of rejecting the operation: create table depositor (customer_name char(20), account_number char(10), primary key (customer_name, account_number), foreign key (account_number) references account on delete cascade, foreign key (customer_name) references customer on delete cascade) Null values complicate referential integrity constraints. Attributes of foreign keys are allowed to be null unless otherwise declared. An integrity constraint can be added to an existing relation by using alter table table-name add constraint constraint-name Assertions An assertion is a predicate expressing a condition that we wish the database always to satisfy. An assertion in SQL takes the form create assertion <assertion-name> check <predicate> When an assertion is made, the system tests it for validity, and tests it again on every update that may violate the assertion. This testing may introduce a significant amount of overhead; hence assertions should be used with great care. Asserting for all X, P(X) is achieved in a round-about fashion using not exists X such that not P(X). Assertion Example Every loan has at least one borrower who maintains an account with a minimum

- 117. balance of $1000.00 create assertion balance_constraint check (not exists (select * from loan where not exists (select * from borrower, depositor, account where loan.loan_number = borrower.loan_number and borrower.customer_name = depositor.customer_name and depositor.account_number = account.account_number and account.balance >= 1000))) The sum of all loan amounts for each branch must be less than the sum of all account balances at the branch. create assertion sum_constraint check (not exists (select * from branch where (select sum(amount) from loan where loan.branch_name = branch.branch_name) >= (select sum(balance) from account where account.branch_name = branch.branch_name))) Authorization Forms of authorization on parts of the database: Read - allows reading, but not modification of data. Insert - allows insertion of new data, but not modification of existing data. Update - allows modification, but not deletion of data. Delete - allows deletion of data. Forms of authorization to modify the database schema: Index - allows creation and deletion of indices. Resources - allows creation of new relations. Alteration - allows addition or deletion of attributes in a relation. Drop - allows deletion of relations.
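Many systems do not implement create assertion (SQLite, used here through Python's sqlite3 module, is one of them), so the balance_constraint predicate can be explored as an ordinary query instead: the inner part of the "not exists" returns exactly the loans that violate the condition. The schema below is trimmed to the columns the predicate needs, and the sample rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table loan      (loan_number text primary key, amount integer);
    create table account   (account_number text primary key, balance integer);
    create table borrower  (customer_name text, loan_number text);
    create table depositor (customer_name text, account_number text);

    -- Hypothetical data: Smith holds 2500, Jones holds only 300.
    insert into loan      values ('L-1', 5000), ('L-2', 900);
    insert into account   values ('A-1', 2500), ('A-2', 300);
    insert into borrower  values ('Smith', 'L-1'), ('Jones', 'L-2');
    insert into depositor values ('Smith', 'A-1'), ('Jones', 'A-2');
""")

# Loans for which NO borrower has an account with balance >= 1000.
VIOLATIONS = """
    select loan_number from loan
    where not exists (
        select * from borrower, depositor, account
        where loan.loan_number = borrower.loan_number
          and borrower.customer_name = depositor.customer_name
          and depositor.account_number = account.account_number
          and account.balance >= 1000)
"""
violating_loans = [row[0] for row in conn.execute(VIOLATIONS)]
assertion_holds = not violating_loans  # "for all X, P(X)" == "not exists X: not P(X)"
print(violating_loans, assertion_holds)
conn.close()
```

An empty result means the assertion holds; here loan L-2 is reported because its only borrower has a balance below 1000.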

- 118. Authorization Specification in SQL The grant statement is used to confer authorization: grant <privilege list> on <relation name or view name> to <user list> <user list> is: a user-id; public, which allows all valid users the privilege granted; or a role. Granting a privilege on a view does not imply granting any privileges on the underlying relations. The grantor of the privilege must already hold the privilege on the specified item (or be the database administrator). Privileges in SQL select: allows read access to a relation, or the ability to query using the view. Example: grant users U1, U2, and U3 select authorization on the branch relation: grant select on branch to U1, U2, U3 insert: the ability to insert tuples. update: the ability to update using the SQL update statement. delete: the ability to delete tuples. all privileges: used as a short form for all the allowable privileges. more in Chapter 8 Revoking Authorization in SQL The revoke statement is used to revoke authorization: revoke <privilege list> on <relation name or view name> from <user list> Example: revoke select on branch from U1, U2, U3 <privilege list> may be all to revoke all privileges the revokee may hold. If <user list> includes public, all users lose the privilege except those granted it

- 119. explicitly. If the same privilege was granted twice to the same user by different grantors, the user may retain the privilege after the revocation. All privileges that depend on the privilege being revoked are also revoked. Embedded SQL The SQL standard defines embeddings of SQL in a variety of programming languages such as C, Java, and Cobol. A language in which SQL queries are embedded is referred to as a host language, and the SQL structures permitted in the host language comprise embedded SQL. The basic form of these languages follows that of the System R embedding of SQL into PL/I. The EXEC SQL statement is used to identify an embedded SQL request to the preprocessor: EXEC SQL <embedded SQL statement> END_EXEC Note: this varies by language (for example, the Java embedding uses #sql { ... }; ) Example Query Specify the query in SQL and declare a cursor for it: EXEC SQL declare c cursor for select depositor.customer_name, customer_city from depositor, customer, account where depositor.customer_name = customer.customer_name and depositor.account_number = account.account_number and account.balance > :amount END_EXEC The open statement causes the query to be evaluated: EXEC SQL open c END_EXEC The fetch statement causes the values of one tuple in the query result to be placed in host language variables: EXEC SQL fetch c into :cn, :cc END_EXEC Repeated calls to fetch get successive tuples in the query result. A variable called SQLSTATE in the SQL communication area (SQLCA) gets set to '02000' to indicate that no more data is available. The close statement causes the database system to delete the temporary relation that holds the result of the query: EXEC SQL close c END_EXEC
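The declare / open / fetch / close cycle has a close analogue in Python's sqlite3 module, which is used here purely as an illustration and is not embedded SQL in the standard's sense. The host variable amount is bound to the named parameter :amount, fetchone() plays the role of fetch, and a None result stands in for SQLSTATE '02000'. The table contents are invented sample data, and the schema is cut down to the columns the query touches.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table customer  (customer_name text primary key, customer_city text);
    create table account   (account_number text primary key, balance integer);
    create table depositor (customer_name text, account_number text);
    insert into customer  values ('Hayes', 'Harrison'), ('Jones', 'Rye');
    insert into account   values ('A-101', 500), ('A-217', 750);
    insert into depositor values ('Hayes', 'A-101'), ('Jones', 'A-217');
""")

amount = 600  # host-language variable, referenced as :amount in the query

c = conn.cursor()
# Roughly "declare c cursor for ..." plus "open c": the query is evaluated here.
c.execute(
    """select depositor.customer_name, customer_city
       from depositor, customer, account
       where depositor.customer_name = customer.customer_name
         and depositor.account_number = account.account_number
         and account.balance > :amount""",
    {"amount": amount},
)

fetched = []
while True:
    row = c.fetchone()      # analogue of: fetch c into :cn, :cc
    if row is None:         # analogue of SQLSTATE '02000' (no more data)
        break
    cn, cc = row
    fetched.append((cn, cc))

c.close()                   # analogue of: close c
conn.close()
print(fetched)
```

Only Jones qualifies, since the balance of account A-217 exceeds 600 while that of A-101 does not.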

- 120. Note: the above details vary with language. For example, the Java embedding defines Java iterators to step through result tuples. Updates Through Cursors Can update tuples fetched by a cursor by declaring that the cursor is for update: declare c cursor for select * from account where branch_name = 'Perryridge' for update To update the tuple at the current location of cursor c: update account set balance = balance + 100 where current of c Dynamic SQL Allows programs to construct and submit SQL queries at run time. Example of the use of dynamic SQL from within a C program: char * sqlprog = "update account set balance = balance * 1.05 where account_number = ?"; EXEC SQL prepare dynprog from :sqlprog; char account [10] = "A-101"; EXEC SQL execute dynprog using :account; The dynamic SQL program contains a ?, which is a placeholder for a value that is provided when the SQL program is executed. JDBC JDBC is a Java API for communicating with database systems supporting SQL. JDBC supports a variety of features for querying and updating data, and for retrieving query results. JDBC also supports metadata retrieval, such as querying about relations present in the database and the names and types of relation attributes. Model for communicating with the database: Open a connection. Create a “statement” object. Execute queries using the Statement object to send queries and fetch results.
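The "?" placeholder and the connect / statement / execute model can be tried without a C preprocessor or a JDBC driver. The sketch below uses Python's sqlite3 module as a stand-in, so it is neither embedded SQL nor JDBC. It keeps the slide's sqlprog string and account value; the table definition and starting balances are assumptions.

```python
import sqlite3

# 1. Open a connection.
conn = sqlite3.connect(":memory:")
conn.execute(
    "create table account (account_number text primary key, balance real)"
)
conn.execute("insert into account values ('A-101', 1000.0), ('A-102', 400.0)")

# SQL text built at run time; "?" is filled in when the statement executes.
sqlprog = "update account set balance = balance * 1.05 where account_number = ?"
account = "A-101"

# 2. Create a statement-like object (a cursor in this API).
cur = conn.cursor()

# 3. Execute, supplying the value for the placeholder (cf. "execute ... using :account").
cur.execute(sqlprog, (account,))
conn.commit()

balances = dict(
    conn.execute("select account_number, balance from account").fetchall()
)
print(balances)
conn.close()
```

Passing the value separately from the SQL text, rather than concatenating it into the string, also avoids quoting mistakes in the generated statement.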