MSC-Thesis-Wireless-Video-over-HIPERLAN2-JustinJohnstone

- 1. DEPARTMENT OF ELECTRICAL AND ELECTRONIC ENGINEERING, UNIVERSITY OF BRISTOL. “WIRELESS VIDEO OVER HIPERLAN/2”. A THESIS SUBMITTED TO THE UNIVERSITY OF BRISTOL IN ACCORDANCE WITH THE REQUIREMENTS OF THE DEGREE OF M.SC. IN COMMUNICATIONS SYSTEMS AND SIGNAL PROCESSING IN THE FACULTY OF ENGINEERING. JUSTIN JOHNSTONE, OCTOBER 2001

- 3. i ABSTRACT In this report, techniques are investigated to optimise the performance of scalable modes of video coding over a HiperLAN/2 wireless LAN. This report commences with a review of video coding theory and overviews of the H.263+ video coding standard and HiperLAN/2. A review of the nature of errors in wireless environments and how these errors impact the performance of video coding is then presented. Existing packet video techniques are reviewed, and these lead to the proposal of an approach to convey scaled video over HiperLAN/2. The approach is based on the Unequal Packet-Loss Protection (UPP) technique: it prioritises layered video data into four priority streams, and associates each of these priority streams with an independent HiperLAN/2 connection. Connections are configured to provide increased amounts of protection for higher priority video data. A simulation system is integrated to allow the benefits of this UPP approach to be assessed and compared against an Equal Packet-Loss Protection (EPP) scheme, where all video data shares the same HiperLAN/2 connection. Tests show that the UPP approach does improve recovered video performance. It is also argued that the UPP approach increases the potential capacity offered by HiperLAN/2 to provide multiple simultaneous video services. This combination of improved performance and increased capacity allows the UPP approach to be endorsed. Testing highlighted the video decoder software’s intolerance to data loss or corruption. A number of options to treat errored packets and video frames, prior to passing them to the decoder, are implemented and assessed. Two options which improve recovered video performance are recommended within the context of this project. It is also recommended that the decoder program is modified to improve its robustness to data loss and corruption. Error models within the simulation system permitted configuration of bursty errors, and tests illustrate improved video performance under increasingly bursty error conditions. Based on these performance differences, and the fact that bursty errors are more representative of the nature of wireless channels, it is recommended that any future simulations include bursty error models.

- 4. ii ACKNOWLEDGMENTS I would like to thank the following for their support with this project: David Redmill, my project supervisor at Bristol University, for his patient guidance. James Chung-How and David Clewer, research staff within the Centre for Communications Research (CCR) at Bristol University, for their insights and assistance into use of the H.263+ video codec software, and specifically to James for providing the software utility to derive PSNR measurements. Tony Manuel and Richard Davies, technical managers at Lucent Technologies, for allowing me to undertake this MSc course at Bristol University. AUTHOR’S DECLARATION I declare that all the work presented in this report was produced by myself, unless otherwise stated. Recognition is made to my project supervisor, David Redmill, for his guidance. Any use of ideas of other authors has been fully acknowledged by use of references. Signed, Justin Johnstone DISCLAIMER The views and opinions expressed in this report are entirely those of the author and not the University of Bristol.

- 5. iii TABLE OF CONTENTS
  - CHAPTER 1: INTRODUCTION (p. 1)
  - 1.1. Motivation (p. 1)
  - 1.2. Scope of Investigations (p. 2)
  - 1.3. Organisation of this Report (p. 2)
  - CHAPTER 2: REVIEW OF THEORY AND STANDARDS (p. 3)
  - 2.1. Video Coding Concepts (p. 3)
  - 2.1.1. Digital Capture and Processing (p. 3)
  - 2.1.2. Source Picture Format (p. 4)
  - 2.1.3. Redundancy and Data Compression (p. 5)
  - 2.1.4. Coding Categories (p. 8)
  - 2.1.5. General Coding Techniques (p. 8)
  - 2.1.5.1. Block Structuring (p. 8)
  - 2.1.5.2. Intraframe and interframe coding (p. 10)
  - 2.1.5.2.1. Motion Estimation (p. 10)
  - 2.1.5.2.2. Intraframe Predictive Coding (p. 11)
  - 2.1.5.2.3. Intra Coding (p. 12)
  - 2.1.6. Quality Assessment (p. 14)
  - 2.1.6.1. Subjective Assessment (p. 15)
  - 2.1.6.2. Objective/Quantitative Assessment (p. 15)
  - 2.2. Nature of Errors in Wireless Channels (p. 17)
  - 2.3. Nature of Packet Errors (p. 18)
  - 2.4. Impact of Errors on Video Coding (p. 19)
  - 2.5. Standards overview (p. 20)
  - 2.5.1. Selection of H.263+ (p. 20)
  - 2.5.2. H.263+: “Video Coding for Low Bit Rate Communications” (p. 21)
  - 2.5.2.1. Overview (p. 21)
  - 2.5.2.2. SNR scalability (p. 21)
  - 2.5.2.3. Temporal scalability (p. 22)
  - 2.5.2.4. Spatial scalability (p. 22)
  - 2.5.2.5. Test Model Rate Control Methods (p. 23)
  - 2.5.3. HiperLAN/2 (p. 23)
  - 2.5.3.1. Overview (p. 23)
  - 2.5.3.2. Physical Layer (p. 24)
  - 2.5.3.2.1. Multiple data rates (p. 25)
  - 2.5.3.2.2. Physical Layer Transmit Sequence (p. 26)
  - 2.5.3.3. DLC layer (p. 27)
  - 2.5.3.3.1. Data transport functions (p. 27)
  - 2.5.3.3.2. Radio Link Control functions (p. 28)
  - 2.5.3.4. Convergence Layer (CL) (p. 28)
  - CHAPTER 3: REVIEW OF EXISTING PACKET VIDEO TECHNIQUES (p. 29)
  - 3.1. Overview (p. 29)
  - 3.2. Techniques Considered (p. 30)
  - 3.2.1. Layered Video and Rate Control (p. 30)
  - 3.2.2. Packet sequence numbering and Corrupt/Lost Packet Treatment (p. 32)
  - 3.2.3. Link Adaptation (p. 33)
  - 3.2.4. Intra Replenishment (p. 35)
  - 3.2.5. Adaptive Encoding with feedback (p. 35)
  - 3.2.6. Prioritised Packet Dropping (p. 36)
  - 3.2.7. Automatic Repeat Request and Forward Error Correction (p. 36)
  - 3.2.7.1. Overview (p. 36)
  - 3.2.7.2. Examples (p. 37)
  - 3.2.7.2.1. H.263+ FEC (p. 37)
  - 3.2.7.2.2. HiperLAN/2 Error Control (p. 37)
  - 3.2.7.2.3. Reed-Solomon Erasure (RSE) codes (p. 39)
  - 3.2.7.2.4. Hybrid Delay-Constrained ARQ (p. 39)
  - 3.2.8. Unequal Packet Loss Protection (UPP) (p. 39)

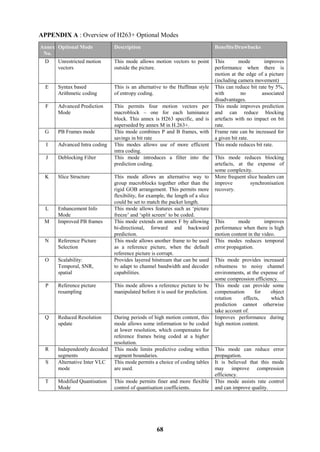

- 6. iv
  - 3.2.9. Improved Synchronisation Codewords (SCW) (p. 40)
  - 3.2.10. Error Resilient Entropy Coding (EREC) (p. 41)
  - 3.2.11. Two-way Decoding (p. 41)
  - 3.2.12. Error Concealment (p. 42)
  - 3.2.12.1. Repetition of Content from Previous Frame (p. 42)
  - 3.2.12.2. Content Prediction and Replacement (p. 42)
  - CHAPTER 4: EVALUATION OF TECHNIQUES AND PROPOSED APPROACH (p. 43)
  - 4.1. General Packet Video Techniques to apply (p. 43)
  - 4.2. Proposal: Layered video over HiperLAN/2 (p. 44)
  - 4.2.1. Proposal Details (p. 45)
  - 4.2.2. Support for this approach in the HiperLAN/2 Simulation (p. 48)
  - CHAPTER 5: TEST SYSTEM IMPLEMENTATION (p. 49)
  - 5.1. System Overview (p. 49)
  - 5.2. Video Sequences (p. 49)
  - 5.3. Video Codec (p. 50)
  - 5.4. Scaling Function (p. 50)
  - 5.5. HiperLAN/2 Simulation System (p. 51)
  - 5.5.1. Existing Simulations (p. 51)
  - 5.5.1.1. Hardware Simulation (p. 51)
  - 5.5.1.2. Software Simulations (p. 51)
  - 5.5.2. Development of Bursty Error Model (p. 51)
  - 5.5.3. HiperLAN/2 Simulation Software (p. 53)
  - 5.5.3.1. HiperLAN/2 Error Model (p. 53)
  - 5.5.3.2. HiperLAN/2 Packet Error Treatment and Reassembly (p. 53)
  - 5.6. Measurement system (p. 54)
  - 5.7. Proposed Tests (p. 54)
  - 5.7.1. Test 1: Layered Video with UPP over HiperLAN/2 (p. 54)
  - 5.7.2. Test 2: Errored Packet and Frame Treatment (p. 55)
  - 5.7.3. Test 3: Performance under burst and random errors (p. 56)
  - CHAPTER 6: TEST RESULTS AND DISCUSSION (p. 57)
  - 6.1. Test 1: Layered Video with UPP over HiperLAN/2 (p. 57)
  - 6.1.1. Results (p. 57)
  - 6.1.2. Observations and Discussion (p. 57)
  - 6.2. Test 2: Errored packet and frame Treatment (p. 59)
  - 6.2.1. Results (p. 59)
  - 6.2.2. Discussion (p. 59)
  - 6.3. Test 3: Comparison of performance under burst and random errors (p. 60)
  - 6.3.1. Results (p. 60)
  - 6.3.2. Discussion (p. 60)
  - 6.4. Limitations in Testing (p. 61)
  - CHAPTER 7: CONCLUSIONS AND RECOMMENDATIONS (p. 62)
  - 7.1. Conclusions (p. 62)
  - 7.2. Recommendations for Further Work (p. 63)
  - REFERENCES (p. 65)
  - APPENDICES (p. 67)
  - APPENDIX A: Overview of H.263+ Optional Modes (p. 68)
  - APPENDIX B: Command line syntax for all simulation programs (p. 69)
  - APPENDIX C: Packetisation and Prioritisation Software – Source code listings (p. 71)
  - APPENDIX D: HiperLAN/2 – Analysis of Error Patterns (p. 77)
  - APPENDIX E: HiperLAN/2 Error Models and Packet Reassembly/Treatment software (p. 78)
  - APPENDIX F: Summary Reports from HiperLAN/2 Simulation modules (p. 107)
  - APPENDIX G: PSNR calculation program (p. 110)
  - APPENDIX H: Overview and Sample of Test Execution (p. 111)

- 7. v
  - APPENDIX I: UPP2 and UPP3 – EP derivation, Performance comparison (p. 112)
  - APPENDIX J: Capacities of proposed UPP approach versus non-UPP approach (p. 113)
  - APPENDIX K: Recovered video under errored conditions (p. 114)
  - APPENDIX L: Electronic copy of project files on CD (p. 115)

- 8. vi LIST OF TABLES
  - Table 2-1: Source picture format (p. 5)
  - Table 2-2: Redundancy in digital image and video (p. 5)
  - Table 2-3: Compression Considerations (p. 7)
  - Table 2-4: Video Layer Hierarchy (p. 9)
  - Table 2-5: Distinctions between Intraframe and Interframe coding (p. 10)
  - Table 2-6: Intraframe Predictive Coding (p. 11)
  - Table 2-7: Wireless Propagation Issues (p. 17)
  - Table 2-8: Impact of Errors in Coded Video (p. 19)
  - Table 2-9: HiperLAN/2 Protocol Layers (p. 24)
  - Table 2-10: OSI Protocol Layers above HiperLAN/2 (p. 24)
  - Table 2-11: HiperLAN/2 PHY modes (p. 25)
  - Table 2-12: HiperLAN/2 Channel Modes (p. 27)
  - Table 2-13: Radio Link Control Functions (p. 28)
  - Table 3-1: General Strategies for Video Coding (p. 29)
  - Table 3-2: Error Protection Techniques Considered (p. 30)
  - Table 3-3: Lost/Corrupt Packet and Frame Treatments Considered (p. 32)
  - Table 3-4: Link Adaptation Algorithm – Control Issues (p. 33)
  - Table 3-5: HiperLAN/2 Error Control Modes for user data (p. 38)
  - Table 3-6: Two-way Decoding (p. 42)
  - Table 4-1: Packet Video Techniques – Extent Considered in Testing (p. 44)
  - Table 4-2: Proposed Prioritisation (p. 45)
  - Table 4-3: Default UPP setting for DUC of a point-to-point connection (p. 45)
  - Table 4-4: Default UPP setting for DUC of a broadcast downlink connection (p. 46)
  - Table 4-5: Default UPP setting for DUC of a point-to-point connection (p. 48)
  - Table 5-1: Error Models for Priority Streams (p. 53)
  - Table 5-2: Packet and frame treatment Options (p. 54)
  - Table 5-3: Test Configurations For UPP Tests (p. 55)
  - Table 5-4: Tests for Packet and Frame Treatment (p. 55)
  - Table 5-5: Common Test Configurations For Packet and Frame Treatment (p. 56)
  - Table 5-6: Test for Random and Burst Error Conditions (p. 56)
  - Table 5-7: Common Test Configurations For Random and Burst Errors (p. 56)
  - Table 6-1: Allocation of PHY Modes (p. 58)

  LIST OF ILLUSTRATIONS
  - Figure 2-1: Image Digitisation (p. 4)
  - Figure 2-2: Generic Video System (p. 5)
  - Figure 2-3: Categories of Image and Video Coding (p. 8)
  - Figure 2-4: Video Layer Decomposition (p. 9)
  - Figure 2-5: Predictive encoding relationship between I-, P- and B-frames (p. 10)
  - Figure 2-6: Motion Estimation (p. 11)
  - Figure 2-7: Block Encode/Decode Actions (p. 12)
  - Figure 2-8: Transform Coefficient Distribution and Quantisation Matrix (p. 13)
  - Figure 2-9: SNR scalability (p. 22)
  - Figure 2-10: Temporal scalability (p. 22)
  - Figure 2-11: Spatial scalability (p. 23)
  - Figure 2-12: HiperLAN/2 PHY mode link requirements (p. 25)
  - Figure 2-13: HiperLAN/2 Physical Layer Transmit Chain (p. 26)
  - Figure 2-14: HiperLAN/2 “Packet” (long PDU) format (p. 28)
  - Figure 3-1: HiperLAN/2 Link Throughput (channel Model A) (p. 34)
  - Figure 4-1: Proposed Admission Control/QoS (p. 47)
  - Figure 5-1: Test System Overview (p. 49)
  - Figure 5-2: Burst Error Model (p. 52)
  - Figure 5-3: HiperLAN/2 Simulation (p. 53)
  - Figure 6-1: Comparison of EPP with proposed UPP approach over HiperLAN/2 (p. 57)
  - Figure 6-2: Comparison of Errored Packet and Frame Treatment (p. 59)
  - Figure 6-3: Performance under Random and Burst Error Conditions (p. 60)

- 9. 1 Chapter 1 Introduction

  1.1. Motivation

  There is significant growth today in the area of mobile wireless data connectivity, ranging from home and office networks to public networks, such as the emerging third generation mobile network. In all these areas, there is an expectation of increased support for multimedia-rich services, especially since increased data rates make this more feasible. While there is strong incentive to develop and provide such services, the network owner/operator is faced with many commercial and technical considerations to balance, including the following:

  - Consideration 1. Given a finite radio spectrum resource, there is a need to balance the increasing bandwidth demands of any one single service against the requirement to support as many concurrent services/subscribers as possible without causing network congestion or overload.
  - Consideration 2. The need to cater for user access devices with varying complexities and capabilities (for example, from laptop PCs to PDAs and mobile phones).
  - Consideration 3. The need to support end-to-end services, which may be conveyed across numerous heterogeneous networks and technology standards. For example, data originating in a corporate Ethernet network may be sent simultaneously to both: a) a mobile phone across a public mobile network; b) a PC on a home wireless LAN via a broadband internet connection.
  - Consideration 4. The need to provide services which can adapt and compensate for the error characteristics of a wireless mobile environment.

  Digital video applications are expected to form a part of the increase in multimedia services. Video conferencing is just one example of a real-time video application that places great bandwidth and delay demands on a network. In fact, [21] asserts that most real-time video applications exceed the bandwidth available with existing mobile systems, and that innovations in video source and channel coding are therefore required alongside the advances in radio and air interface technology.

  Within video compression and coding research, there has been much interest in scalable video coding techniques. Chapter 23 of [21] defines video scalability as “the property of the encoded bitstream that allows it to support various bandwidth constraints or display resolutions”. This facility allows video performance to be optimised depending on both bandwidth availability and decoder capability, albeit at some penalty to compression efficiency. In the case of broadcast transmission over a network, a rate control mechanism can be employed between video encoder and decoder that dynamically adapts to the bandwidth demands on multiple links. The interest in scalable video is clearly justified by the scenario where it is desirable to avoid compromising the quality of decoded video for one user with high bandwidth and high specification user equipment (e.g. a PC via a broadband internet connection), while still providing a reduced quality video to a second user with low bandwidth and low specification equipment (e.g. a mobile phone over a public mobile network).

  Another illustration of this problem is given in [19], which highlights the similar predicament currently facing the web design community. With the more recent introduction of web-connected platforms, such as PDAs and WAP phones, web designers are now challenged to produce web content which can dynamically cater for varying levels or classes of access device capability (e.g. screen size and processing power). One potential strategy

- 10. 2 identified in [19] echoes the video scalability principle, in that ideally, the means to derive “reduced complexity” content should be embedded within each web page. This would allow web designers to avoid the undesired task of publishing and maintaining multiple sites, each dedicated to the capabilities of one class of access device. With the potential for significant growth in video applications via broadband internet and third generation mobile access, it is therefore inevitable that, for the same reason, scalable video will become increasingly important too.

  1.2. Scope of Investigations

  The brief of this project is to focus on optimising aspects of scalable video coding over a wireless LAN, and specifically the HiperLAN/2 standard [2]. Within the HiperLAN/2 standard, a key feature of the physical layer is that it supports a number of different physical modes, which have differing data rates and link quality requirements. Specifically, the modes with higher nominal data rates require higher carrier-to-interference (C/I) levels to be able to achieve near their nominal data rates. Performance studies ([7],[16],[20]) have shown that as the C/I decreases, higher overall throughput can be maintained if the physical mode is reduced at specific threshold points. The responsibility to monitor and dynamically alter physical modes is assigned to a function referred to as “link adaptation”. However, as this function is not defined as part of the HiperLAN/2 standard, there is interest in proposing algorithms in this area.

  This project was tasked to recommend an optimal scheme to convey layered video data over HiperLAN/2, including assessment of link adaptation algorithms. However, when making proposals in any one specific area of mobile multimedia applications, it is vital to consider these in the wider context of the entire system in which they will operate. Therefore this report also considers many of the more general techniques in the area of “wireless packet video”.

  1.3. Organisation of this Report

  The remainder of this report is organised as follows:

  - Chapter 2 commences with an introduction to video coding theory. It then provides an overview of the nature of errors in a wireless environment, and what effect these have on the performance of video coding. Chapter 2 concludes with an overview of the standards used in the project, namely H.263+ and HiperLAN/2.
  - Chapter 3 presents a review of some existing wireless packet video techniques, which are considered for investigation in this project.
  - Chapter 4 proposes the approach intended to convey a layered video bitstream over HiperLAN/2. The chapter also considers which of the more general techniques to apply during testing.
  - Chapter 5 describes the implementation of components in the simulation system used for investigations, and then introduces the specific tests to be conducted.
  - Chapter 6 discusses test results, and chapter 7 presents conclusions, including a list of areas identified for further investigation.
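The threshold-based switching of physical modes described in section 1.2 can be illustrated with a minimal sketch. It is not an algorithm from the HiperLAN/2 standard or from the cited studies: the function name `select_phy_mode` is hypothetical, and the C/I thresholds are placeholder values chosen only to show the mechanism. The nominal data rates are those commonly quoted for the seven HiperLAN/2 physical modes.

```python
# Nominal data rates (Mbit/s) commonly quoted for HiperLAN/2 PHY modes 1 to 7.
NOMINAL_RATE_MBPS = {1: 6, 2: 9, 3: 12, 4: 18, 5: 27, 6: 36, 7: 54}

# HYPOTHETICAL minimum C/I (dB) at which each mode is assumed to be usable.
# These are illustrative placeholders, NOT values from [7], [16] or [20].
MIN_CI_DB = {1: 5.0, 2: 8.0, 3: 10.0, 4: 13.0, 5: 17.0, 6: 21.0, 7: 26.0}


def select_phy_mode(ci_db):
    """Return the highest PHY mode whose C/I threshold is met.

    Falls back to mode 1 (the most robust mode) when the measured C/I
    is below every threshold.
    """
    chosen = 1
    for mode in sorted(MIN_CI_DB):
        if ci_db >= MIN_CI_DB[mode]:
            chosen = mode
    return chosen


if __name__ == "__main__":
    # As the measured C/I falls, the selected mode steps down at each threshold.
    for ci in (30.0, 20.0, 12.0, 3.0):
        mode = select_phy_mode(ci)
        print(f"C/I = {ci:5.1f} dB -> mode {mode} ({NOMINAL_RATE_MBPS[mode]} Mbit/s nominal)")
```

A practical link adaptation function would also need to decide how C/I is measured and how often the mode may change; those control issues are outside this sketch.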

- 11. 3 Chapter 2 Review of Theory and Standards This chapter introduces some useful concepts regarding video coding, wireless channel errors and their effect on coded video. The chapter concludes with an overview of the standards used in the project: H.263+ and HiperLAN/2. 2.1. Video Coding Concepts The following basic concepts are discussed in this section: digital image capture and processing considerations; picture formats; redundancy and compression; video coding techniques; quality assessment. The video coding standards considered for use on this project include H.263+, MPEG-2 and MPEG-4, since all these standards incorporate scalability. While the basic concepts discussed in this section are common to all these standards, many of the illustrations are tailored to highlight specific details of H.263+, since this is the standard selected for use in this project. Further details of this standard, including its scalability modes, are discussed later in section 2.5.1. 2.1.1. Digital Capture and Processing Digital images provide a two dimensional representation of the visual perception of the three-dimensional world. As indicated in [22], the process to produce digital images involves the following two stages, shown also in Figure 2-1 below: Sampling: Here an image is partitioned into a grid of discrete regions called picture elements (abbreviated “pixels” or “pels”). An image capture device obtains measurements for each pixel. Typically this is performed by a scan, which commences in one corner of the grid, and proceeds sequentially row by row, and pixel by pixel within each row, until measurements for the last pixel, in the diagonally opposite corner to the start point, are recorded. Commonly, image capture devices record the colour components for each pixel in RGB colour format. Quantisation: Measured values for each pixel are mapped to the nearest integer within a given range (typically this range is 0 to 255). 
In this way, digitisation results in an image being represented by an array of integers. Note: The RGB representation is typically converted into YUV format (where Y represents luminance and U,V represent chrominance values). The significant benefit of this conversion is that it subsequently allows more efficient coding of the data.
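The two digitisation stages and the colour conversion can be illustrated with a minimal sketch. The function names are hypothetical, and the conversion weights are the commonly quoted BT.601-style values, which are an assumption here since the text does not state which conversion matrix is used.

```python
def quantise(value, levels=256):
    """Map a normalised measurement in [0.0, 1.0] to the nearest integer
    in the range 0..levels-1 (0 to 255 by default)."""
    clipped = min(max(value, 0.0), 1.0)
    return int(round(clipped * (levels - 1)))


def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to YUV.

    Assumption: BT.601-style weights; the source text does not specify
    the exact conversion used.
    """
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luminance
    u = 0.492 * (b - y)                    # blue colour-difference (chrominance)
    v = 0.877 * (r - y)                    # red colour-difference (chrominance)
    return y, u, v


# One sampled pixel: three normalised sensor measurements (R, G, B).
pixel_rgb = [quantise(sample) for sample in (1.0, 0.5, 0.0)]
print(pixel_rgb)
print(rgb_to_yuv(*pixel_rgb))
```

For a grey pixel (equal R, G and B) both chrominance values come out as zero, which hints at why the YUV form is easier to code: most of the information is concentrated in the single luminance component.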

- 12. 4 Figure 2-1 : Image Digitisation Digital video is a sequence of still images (or frames) displayed sequentially at a given rate, measured in frames per second (fps). When designing a video system, trade-off decisions need to be made between the perceived visual quality of the video and the complexity of the system, which may typically be characterised by a combination of the following: storage space/cost; bandwidth requirements (i.e. data rate required for transmission); processing power/cost. These trade-off decisions can be partly made by defining the following parameters of the system. Note that increasing these parameters enhances visual quality at the expense of increased complexity. Resolution: This includes both a) the number of chrominance/luminance samples in horizontal and vertical axes, and b) the number of quantised steps in the range of chrominance and luminance values (for example, whether 8, 16 or more bits are stored for each value). Frame rate: The number of frames displayed per second. Sensitivity limitations of the Human Visual System (HVS) effectively establish practical upper thresholds for the above parameters, beyond which there are diminishing returns in perceived quality. An example of video coding adapting to the HVS limits is the technique known as “chrominance sub-sampling”. Here, the number of chrominance samples is reduced by a given factor (typically 2) relative to the number of luminance samples in one or both of the horizontal and vertical axes. The justification for this is that the HVS is spatially less sensitive to differences in colour than brightness. 2.1.2. Source Picture Format Common settings for resolution parameters have been defined within video standards. One example is the “Common Intermediate Format” (CIF) picture format. The parameters for CIF are shown in Table 2-1 below, along with some common variants.

- 13. 5 Table 2-1 : Source picture format (resolution given as number of samples, horizontal x vertical)
Source Picture Format | Luminance | Chrominance
16CIF | 1408 x 1152 | 704 x 576
4CIF | 704 x 576 | 352 x 288
CIF | 352 x 288 | 176 x 144
QCIF (Quarter-CIF) | 176 x 144 | 88 x 72
sub-QCIF | 128 x 96 | 64 x 48
2.1.3. Redundancy and Data Compression Even with fixed settings for resolution and frame rate parameters, it is both desirable and possible to further reduce the complexity of the video system. Significant reductions in storage or bandwidth requirements may be achieved by encoding and decoding of the video data to achieve data compression. A generic video coding scheme depicting this compression is shown in Figure 2-2 below.
[Figure 2-2 : Generic Video System. Reference input video -> Video Encoder -> compressed data -> transmission channel or data storage -> compressed data -> Video Decoder -> recovered output video]
Data compression is possible, since video data contains redundant information. Table 2-2 lists the types of redundancy and introduces two compression techniques which take advantage of this redundancy. These techniques are discussed later in section 2.1.5.
Table 2-2 : Redundancy in digital image and video
Domain where Redundancy Exists | Description | Applicable Compression Technique
Spatial | Within a typical still image, spatial redundancy exists in a uniform region of the image, where luminance and chrominance values are highly correlated between neighbouring samples. | Intraframe Predictive coding
Temporal | Video contains temporal redundancy in that data in one frame is often highly correlated with data in the same region of the subsequent frame (e.g. where the same object appears in the subsequent frames, albeit with some motion effects). This correlation diminishes as the frame rate is reduced and/or the motion content within the video sequence increases. | Interframe Predictive coding
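To put the case for compression in numbers, the raw (uncompressed) data rate implied by the formats of Table 2-1 can be worked out directly. The sketch below is illustrative only: the 8 bits per sample and the 10 fps frame rate are assumed values, and the function names are hypothetical.

```python
# Luminance resolutions from Table 2-1 as (width, height) in samples.
FORMATS = {
    "sub-QCIF": (128, 96),
    "QCIF": (176, 144),
    "CIF": (352, 288),
    "4CIF": (704, 576),
    "16CIF": (1408, 1152),
}


def raw_bits_per_frame(width, height, bits_per_sample=8):
    """Bits in one uncompressed frame: one luminance plane plus two
    chrominance planes, each sub-sampled by 2 in both axes (Table 2-1)."""
    luma_samples = width * height
    chroma_samples = 2 * (width // 2) * (height // 2)
    return (luma_samples + chroma_samples) * bits_per_sample


def raw_bit_rate(width, height, fps, bits_per_sample=8):
    """Uncompressed bit rate in bits per second."""
    return raw_bits_per_frame(width, height, bits_per_sample) * fps


# Assumed frame rate of 10 fps, chosen only for illustration.
for name, (w, h) in FORMATS.items():
    rate = raw_bit_rate(w, h, fps=10)
    print(f"{name:9s} {rate / 1e6:8.2f} Mbit/s uncompressed at 10 fps")
```

Under these assumptions a single QCIF frame occupies 304128 bits, so even this small format needs roughly 3 Mbit/s before any compression is applied.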

- 14. 6 While the encoding and decoding procedures do increase processing demands on the system, this penalty is often accepted when compared to the potential savings in storage and bandwidth costs. Apart from increased processing power, there are other options to consider during compression. These generally trade-off compression efficiency against perceived visual quality, as shown in Table 2-3 below.

- 15. 7 Table 2-3 : Compression Considerations
Consideration Factor | Effects on Compression Efficiency | Effects on Perceived Quality
a) Resolution, Frame Rate | Skipping frames or reducing the frame rate will increase efficiency. Digitising the same picture with an increased number of pixels allows more spatial correlation and redundancy to be exploited during compression. Increased resolution (e.g. going from 8 to 16 bit representation) will decrease efficiency. | Increases in resolution and frame rate typically improve quality at the expense of increased processing complexity/cost.
b) Latency: end-to-end delays introduced into the system due to finite processing delays. | Improved compression may improve or degrade latency, dependent on other system features. At one extreme, it may increase latency due to increased complexity and therefore increased processing delays. At the other extreme, in a network, it may result in a reduced bandwidth demand. This may serve to reduce network load and actually reduce buffering delays and latency in the network. | If frames are not played back at a regular frame rate, the resultant pausing or skipping will drastically reduce perceived quality.
c) Lossless compression: where the original can be reconstructed exactly after decompression. | This category of compression typically achieves lower compression efficiency than lossy compression. | By definition, the recovered image is not degraded. This may be more applicable to medical imaging or legal applications where storage costs or latency are of less concern.
d) Lossy compression: where the original cannot be reconstructed exactly. | This typically achieves higher compression efficiency than lossless compression. | By definition, the quality will be degraded; however, techniques here may capitalise on HVS limitations, so that the losses are less perceivable. This is more applicable to consumer video applications.

- 16. 8 2.1.4. Coding Categories There is a wide selection of strategies for video coding. The general categories into which these strategies are allocated are shown in Figure 2-3 below. As indicated by the shading in the diagram, this project makes use of DCT transform coding, which is categorised as a lossy, waveform based method.
[Figure 2-3 : Categories of Image and Video Coding. Labels: model based, waveform based; loss-less, lossy; statistical, universal, spatial, frequency. Examples shown: wire frame modelling, Huffman, arithmetic, ZIP, fractal, predictive (DPCM), sub-band, DCT transform. Note: shaded selections identify the categories and method considered in this project.]
2.1.5. General Coding Techniques To achieve compression, a video encoder typically performs a combination of the following techniques, which are discussed briefly in the sub-sections below this list: block structure and video layer decomposition; intra and inter coding (intraframe prediction, motion estimation); block coding (transform, quantisation and entropy coding). 2.1.5.1. Block Structuring The raw array of data samples produced by digitisation is typically restructured into a format that the video codec can take advantage of during processing. This new structure typically consists of a four layer hierarchy of sub-elements. A graphical view of this decomposition of layers, with specific values for QCIF and H.263+, is given in Figure 2-4 below. When coding schemes apply this style of layering, with the lowest layer consisting of blocks, the coding scheme is said to be “block-based” or “block-structured”. Alternatives to block-based coding are object- and model-based coding. While these alternatives promise the potential for even lower bit rates, they are less mature than current block-based techniques. These will not be covered in this report; however, more information can be found in chapter 19 of [21].

- 17. 9 Figure 2-4 : Video Layer Decomposition A brief description of the contents of these four layers is given in Table 2-4 below. Some of the terms introduced in this table are covered later.
Table 2-4 : Video Layer Hierarchy
Layer | Description/Contents
a) Picture | This layer commences each frame in the sequence, and includes: Picture Start Code; Frame number; Picture type, which is very important as it indicates how to interpret subsequent information in the sub-layers.
b) Group of blocks (GOB) | GOB start code; Group number; Quantisation information.
c) MacroBlock (MB) | MB address; Type (indicates intra/inter coding, use of motion compensation); Quantisation information; Motion vector information; Coded Block Pattern.
d) Block | Transform Coefficients; Quantiser Reconstruction Information.
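As a worked illustration of the four layer hierarchy, the number of elements in each layer can be counted for a QCIF picture. This is a sketch under stated assumptions rather than a reproduction of Figure 2-4: it assumes 16x16 luminance samples per macroblock, 8x8 blocks, chrominance sub-sampled by 2 in both axes, and one GOB per row of macroblocks (the arrangement H.263 uses for the smaller picture formats). The function name is hypothetical.

```python
MB_SIZE = 16     # assumed: a macroblock covers 16x16 luminance samples
BLOCK_SIZE = 8   # assumed: transform blocks are 8x8 samples


def layer_counts(width, height):
    """Count elements per layer for one picture of the given luminance size.

    Assumption: one GOB per row of macroblocks.
    """
    mbs_per_row = width // MB_SIZE
    mb_rows = height // MB_SIZE
    luma_blocks = (MB_SIZE // BLOCK_SIZE) ** 2  # four 8x8 Y blocks per MB
    chroma_blocks = 2                           # one U and one V block per MB
    macroblocks = mbs_per_row * mb_rows
    return {
        "gobs": mb_rows,
        "macroblocks_per_gob": mbs_per_row,
        "macroblocks": macroblocks,
        "blocks_per_macroblock": luma_blocks + chroma_blocks,
        "blocks": macroblocks * (luma_blocks + chroma_blocks),
    }


print(layer_counts(176, 144))  # QCIF luminance resolution from Table 2-1
```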

- 18. 10 2.1.5.2. Intraframe and interframe coding The features and main distinctions between these two types of coding are summarised briefly in Table 2-5 below.
Table 2-5 : Distinctions between Intraframe and Interframe coding
Coding Style | Features and Implications
INTER coding | Frames coded this way rely on information in previous frames, and code the relative changes from this reference frame. This coding improves compression efficiency (compared to intra coding). The decoder is only able to reconstruct an inter coded frame when it has received both: a) the reference frame, and b) the inter frame. The common type of inter coding, relevant to this project, is ‘Motion Estimation and Compensation’, which is discussed below. The reference frame(s) can be forward or backwards in time (or a combination of both) relative to the predictive encoded frame. When the reference frame is a previous frame, the encoded frame is called a “P-frame”. When reference frames are previous and subsequent frames, the encoded frame is termed a “B-frame” (Bi-directional). This relationship is made clear in Figure 2-5 below. Since decoding depends on data in reference frames, any errors that occur in the reference will persist and propagate into subsequent frames; this is known as “temporal error propagation”.
INTRA coding | Does not rely on previous (or subsequent) frames in the video sequence. Compression efficiency is reduced (compared to inter coding). Recovered video quality is improved. Specifically, if any temporal error propagation existed, it will be removed at the point of receiving the intra coded frame. Frames coded this way are termed “I-frames”.
[Figure 2-5 : Predictive encoding relationship between I-, P- and B- frames. Labels: I-frame, P-frame, B-frame; forward prediction from reference; bi-directional prediction from references; time axis]
2.1.5.2.1. 
Motion Estimation Motion estimation and compensation techniques provide data compression by exploiting temporal redundancy in a sequence of frames. “Block Matching Motion Estimation” is one such technique, which results in high levels of data compression.

- 19. 11 The essence of this technique is that for each macroblock in the current frame, an attempt is made to locate a matching macroblock within a limited search window in the previous frame. If a matching block is found, the relative spatial displacement of the macroblock between frames is determined, and this is coded and sent as a motion vector. This is shown in Figure 2-6 below. Transmitting the motion vector instead of the entire block represents a vastly reduced amount of data. When no matching block is found, then the block will need to be intra coded. Limitations of this technique include the following: a) It does not cater for three dimensional aspects such as zooming, object rotation and object hiding. b) It suffers from temporal error propagation.
[Figure 2-6 : Motion Estimation. Labels: macroblock n position in current frame (x, y); matched MB n position in previous frame (x+Dx, y+Dy); motion vector for MB n (Dx, Dy); search window with horizontal and vertical search ranges]
2.1.5.2.2. Intraframe Predictive Coding This technique provides compression by taking advantage of the spatial redundancy within a frame. This technique operates by performing the sequence of actions at the encoder and decoder as listed in Table 2-6 below.
Table 2-6 : Intraframe Predictive Coding
Actions performed by | Actions for each sample
1. Encoder | a) An estimate for the value of the current sample is produced by a prediction algorithm that uses values from “neighbouring” samples. b) The difference (or error term) between the actual and the predicted values is calculated. c) The error term is sent to the decoder (see notes 1 and 2 below).
2. Decoder | a) The same prediction algorithm is performed to produce an estimate for the current sample. b) This estimate is adjusted by the error term received from the encoder to produce an improved approximation of the original sample.
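The encoder and decoder actions of Table 2-6 can be sketched for a single row of samples. This is a minimal, lossless illustration only: the predictor is assumed to be simply the previous sample, the starting value of 128 is an arbitrary assumption, and the function names are hypothetical. A practical coder would also quantise the error terms.

```python
def predict(previous_sample):
    """Assumed predictor: the estimate is simply the previous sample."""
    return previous_sample


def dpcm_encode(samples, initial=128):
    """Encoder actions: predict, form the error term, send the error term."""
    errors = []
    previous = initial
    for sample in samples:
        errors.append(sample - predict(previous))
        previous = sample  # no quantisation, so reconstruction is exact
    return errors


def dpcm_decode(errors, initial=128):
    """Decoder actions: same prediction, adjusted by the received error."""
    samples = []
    previous = initial
    for error in errors:
        sample = predict(previous) + error
        samples.append(sample)
        previous = sample
    return samples


row = [100, 101, 101, 102, 180, 181, 181]  # uniform region, edge, uniform region
errors = dpcm_encode(row)
print(errors)               # [-28, 1, 0, 1, 78, 1, 0]
print(dpcm_decode(errors))  # recovers the original row

# Corrupting a single error term shifts every later sample in the row.
corrupted = list(errors)
corrupted[1] += 5
print(dpcm_decode(corrupted))
```

Within the uniform regions the error terms are close to zero, while the edge between them produces one large term. The corrupted case shows that, because each sample is predicted from the previous reconstruction, a single damaged error term affects the rest of the row.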

- 20. 12 Notes: 1) For highly correlated samples in a uniform region of the frame, the prediction will be very precise, resulting in a small or zero error term, which can be coded efficiently. 2) When edges occur between non-uniform regions within an image, neighbouring samples will be uncorrelated and the prediction will produce a large error term, which diminishes coding efficiency. However, chapter 2 of [23] highlights the following two factors which determine that this technique does provide overall compression benefits: a) Within a wide variety of naturally occurring image data, the vast majority of error terms are very close to zero; hence on average, it is possible to code error terms efficiently. b) While the HVS is very sensitive to the location of edges, it is not as sensitive to the actual magnitude change. This allows the large magnitude error terms to be quantised coarsely, and hence coded more efficiently. 3) Since this technique only operates on elements within the same frame, the “intraframe” aspect of its name is consistent with the definitions in Table 2-5. However, since coded data depends on previous samples, it is subject to error propagation in a similar way to interframe coding. This error propagation may manifest itself as horizontal and/or vertical streaks to the end of a row or column. 2.1.5.2.3. Intra Coding When a block is intra coded (for example, when a motion vector cannot be used), the encoder translates the data in the block into a more compact representation before transmitting it to the decoder. The objective of such translation (from chapter 3 of [23]) is: “to alter the distribution of the values representing the luminance levels so that many of them can either be deleted entirely, or at worst be quantised with very few bits”. The decoder must perform the inverse of the translation actions to restore the block back to the original raw format. 
If the coding method is categorised as being loss-less, then the decoder will be able to reconstruct the original data exactly. However, this project makes use of a lossy coding method, where a proportion of the data is permanently lost during coding. This restricts the decoder to reconstructing an approximation of the original data. The generic sequence of actions involved in block encoding and decoding is shown in Figure 2-7 below. Each of these actions is discussed in the paragraphs below, which also introduce some specific examples relevant to this project.
[Figure 2-7 : Block Encode/Decode Actions. ENCODER: block (e.g. 8x8) -> Transform -> Quantise -> Entropy Code -> transmission channel or storage. DECODER: Entropy Decode -> Requantise -> Inverse Transform -> block (e.g. 8x8)]

- 21. 13 Transformation This involves translation of the sampled data values in a block into another domain. In the original format, samples may be viewed as being in the spatial domain. However, chapter 3 of [23] indicates these may equally be considered to be in the temporal domain, since the scanning process during image capture provides a deterministic mapping between the two domains. The coding category highlighted in Figure 2-3 for use in this project is “Waveform/Lossy/Frequency”. The frequency aspect refers to the target domain for the transform. The transform can therefore be viewed as a time to frequency domain transform, allowing selection of a suitable Fourier Transform based method. Possibilities for this include: Walsh-Hadamard Transform (WHT); Discrete Cosine Transform (DCT); Karhunen-Loeve Transform (KLT). The DCT is a popular choice for many standards, including JPEG and H.263. The output of the transform on an 8x8 (for example) block results in the same number (64) of transform coefficients, each representing the magnitude of a frequency component. The coefficients are arranged in the block with the single DC coefficient in the upper left corner, then zig-zag along diagonals with the highest frequency coefficient in the lower right corner of the block (see Figure 2-8). Since the transform produces the same number of coefficients as there were samples to start with, it does not directly achieve any compression. However, compression is achieved in the subsequent step, namely quantisation. Further details of the DCT transform are not presented here, as these are not directly relevant to this project; however, [23] may be referred to for more details. Quantisation This operation is performed on the transform coefficients. These coefficients usually have a widely varying range of magnitudes, with a typical distribution as shown in the left side of Figure 2-8 below. 
This distribution makes it possible to ignore some of the coefficients with sufficiently small magnitudes. This is achieved by applying a variable quantisation step size to positions in the block, as shown in the right side of Figure 2-8 below. This approach provides highest resolution to the DC and high magnitude coefficients in the top left of the block, while reducing resolution toward the lower right corner of the block. The output of the quantisation typically results in a block with some non-zero values towards the upper left of the block, but many zeros towards the lower right corner of the block. Compression is achieved when these small or zero magnitude values are coded more efficiently as seen in the subsequent step, namely Entropy Coding.
[Figure 2-8 : Transform Coefficient Distribution and Quantisation Matrix. Left: 8x8 block of transform coefficients, loaded diagonally, with DC and large magnitude low frequency coefficients at the upper left and small magnitude high frequency coefficients at the lower right. Right: corresponding 8x8 matrix of quantisation coefficients, with the smallest quantisation values (better resolution) at the upper left, increasing to the largest quantisation values (lowest resolution) at the lower right]
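The transformation and quantisation steps described above can be sketched as follows. This is a simplified illustration, not the H.263 procedure: the DCT is a naive orthonormal 2-D DCT-II, and the step-size rule in `step_size` is a hypothetical one chosen only so that the step grows toward the lower right corner, as in Figure 2-8.

```python
import math

N = 8  # block size (8x8)


def dct_2d(block):
    """Naive 2-D DCT-II of an NxN block, with orthonormal scaling."""
    def scale(k):
        return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            total = 0.0
            for x in range(N):
                for y in range(N):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            coeffs[u][v] = scale(u) * scale(v) * total
    return coeffs


def step_size(u, v, base=8, slope=4):
    """Hypothetical quantisation step: smallest at DC, growing with u + v."""
    return base + slope * (u + v)


def quantise(coeffs):
    """Divide each coefficient by its position-dependent step and round."""
    return [[int(round(coeffs[u][v] / step_size(u, v))) for v in range(N)]
            for u in range(N)]


def zigzag_order():
    """(row, column) positions visited along alternating diagonals,
    from the DC position to the highest frequency position."""
    order = []
    for s in range(2 * N - 1):
        diagonal = [(u, s - u) for u in range(N) if 0 <= s - u < N]
        order.extend(diagonal if s % 2 else reversed(diagonal))
    return order


# A smooth gradient block: energy concentrates in the low frequencies.
block = [[100 + 2 * x + 3 * y for y in range(N)] for x in range(N)]
quantised = quantise(dct_2d(block))
print([quantised[u][v] for u, v in zigzag_order()])
```

Reading the quantised block in zig-zag order places the few non-zero values first and leaves a long run of zeros at the end of the sequence, which is the property the subsequent entropy coding step exploits.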

- 22. 14 Entropy Coding This type of source coding provides data compression without loss of information. In general, source coding schemes map each source symbol to a unique (typically binary) codeword prior to transmission. Compression is achieved by coding as efficiently as possible (i.e. using the fewest number of bits). Entropy coding achieves efficient coding by using variable length codewords. Shorter codewords are assigned to those symbols that occur more often, and longer codewords are assigned to symbols that occur less often. This means that on average, shorter codewords will be used more often, resulting in fewer bits being required to represent an average sequence of source symbols. An optional, but desirable property for variable length codes is the “prefix-free” property. This property is satisfied when no codeword is the prefix of any other codeword. This has the benefit that codewords are “instantaneously decodable”, which implies that the end of a codeword can be determined as the codeword is read (and without the need to receive the start of the next codeword). Unfortunately, there are also some drawbacks in using variable length codes. These include: 1) The instantaneous rate at which codewords are received will vary due to the variable codeword length. A decoder therefore requires buffering to smooth the rate at which codewords are received. 2) Upon receipt of a corrupt codeword, the decoder may lose track of the boundaries between codewords. This can result in the following two undesirable outcomes, which are relevant as their occurrence is evident later in this report: a) The decoder may only recognise the existence of an invalid codeword sometime after it has read beyond the start of subsequent codewords. In this way, a number of valid codewords may be incorrectly ignored until the decoder is able to resynchronise itself to the boundaries of valid codewords. b) When attempting to resynchronise, a “false synchronisation” may occur. 
This will result in further loss of valid codewords until the decoder detects this condition and is able to establish valid resynchronisation. An example of a prefix-free variable length code is the Huffman Code. This has the advantage that it “can achieve the shortest average code length” (chapter 11 of [24]). Further details of Huffman coding are not covered here, as they are not of specific interest to this project. Within a coded block of data, it is common to observe a sequence of repeated data values. For example, the quantised transform coefficients near the lower right corner of the block are often reduced to zero. In this case, it is possible to modify and further improve the compression efficiency of Huffman (or other source) coding, by applying “Run-Length coding”. Instead of encoding each symbol in a lengthy run of repeated values, this technique describes the run with an efficient substitution code. This substitution code typically consists of a control code and a repetition count to allow the decoder to recreate the sequence exactly. 2.1.6. Quality Assessment When developing video coding systems, it is necessary to provide an assessment of the quality of the recovered decoded video. Such assessments provide performance indicators which are necessary to optimise system performance during development or even to confirm the viability of the system prior to deployment. It is usually desirable to perform subjective and quantitative assessments of video performance. It is quite common to perform quantitative assessments during earlier development and optimisation phases, and then to

- 23. 15 conduct subjective testing prior to deployment. Features of these two types of assessment are discussed below. 2.1.6.1. Subjective Assessment Testing is performed by conducting formal human observation trials with a statistically significant population of the target audience for the video application. During the trials, observers independently view video sequences and then rate their opinion of the quality according to a standardised five point scoring system. Scores from all observers are then averaged to provide a “Mean Opinion Score” (MOS) for each video sequence. A significant advantage of subjective assessment trials is that they allow observers to consider the entire range of psycho-visual impressions when rating a test sequence. Trial results therefore reflect a full consideration of all aspects of video quality. MOS scores are therefore a well accepted means to compare the relative performance of different systems. The following implications of subjective assessments are noted: Formal trials are relatively costly and time consuming. This can result in trials being skipped or deferred to later stages of system development. Performance of the same system can vary widely depending on the specific video content used in trials. In the same way, observers’ perceptions are equally dependent on the video content. Therefore, comparison of MOS scores between different systems is only credible if identical video sequences are used. Observers’ perceptions may vary dependent on the context of the environment in which the sequences are viewed. An illustration of this is given in mobile telephony. Here, lower MOS scores for the audio quality of mobile telephony (when compared to higher MOS scores for the quality of PSTN) are tolerated when taking into account the benefits of mobility. This tolerant attitude is likely to persist toward video services offered over mobile networks. 
Therefore, observation trials should account for this attitude when conducting trials and reporting MOS scores. Alongside the anticipated rapid growth in mobile video services, it is likely that audience expectations will rapidly become more sophisticated. It is therefore suggested that comparing results between two trials is less meaningful if the trial dates are separated by a significant time period (say 2 years, for example). 2.1.6.2. Objective/Quantitative Assessment In strong contrast to subjective testing, where consideration of all aspects of the quality is incorporated within a MOS score, quantitative assessments are able to focus on specific aspects of video quality. It is therefore often more useful if these assessments are quoted in the context of some other relevant parameters (such as frame rate or channel error rate). The de facto measurement used in video research is Peak-Signal-to-Noise Ratio (PSNR), described below. Peak-Signal-to-Noise Ratio (PSNR) This assessment provides a measure in decibels of the difference between original and reconstructed pixels over a frame. It is common to quote PSNR for luminance values only, even though PSNR can be derived for chrominance values as well. This project adopts the convention of using luminance PSNR only. The luminance PSNR for a single frame is calculated according to equation (2) below.

- 24. 16
$$\mathrm{PSNR}_{frame} = 10 \log_{10}\left(\frac{\mathrm{MAX\_LEVEL}^{2}}{\mathrm{MSE}}\right) \qquad (2)$$
Where, MAX_LEVEL = The maximum luminance value (when using 8 bit representation for luminance, this value is 255). MSE = “Mean Squared Error” of decoded pixel luminance amplitude compared with the original reference pixel, given by equation (3):
$$\mathrm{MSE} = \frac{1}{N_{pixels}} \sum_{N_{pixels}} \left(\mathrm{RecoveredPixelValue} - \mathrm{OriginalPixelValue}\right)^{2} \qquad (3)$$
Where, N_pixels = Total number of pixels in the frame. PSNR may also be calculated for an entire video sequence. This is simply an average of PSNR values for each frame, as shown in equation (4) below.
$$\mathrm{PSNR}_{sequence} = \frac{1}{N_{frames}} \sum_{\text{All frames}} \mathrm{PSNR}_{frame} \qquad (4)$$
Where, N_frames = Total number of decoded frames in the sequence. For example, if only frame numbers 1, 6 and 10 are decoded, then N_frames = 3. The following implications of using PSNR as a quality assessment are noted: 1. PSNR for a sequence can only be based on the subset of original frames that are decoded. When compression results in frames being dropped, this will not be reflected in the PSNR result. This is a case where quoting the frame rate alongside PSNR results is appropriate. 2. The PSNR calculation requires that a reference copy of the original input video is available at the receiver. This may only be possible in a controlled test environment. 3. PSNR is measured between the original (uncompressed) frames and the final decoded output frames. This therefore reflects a measure of effects across the entire system, including the transmission channel, which may introduce errors. Hence quoting the error rate of the channel alongside the PSNR result may be appropriate in this case. 4. Since both: a) PSNR_frame is averaged over the pixels in a frame, and b) PSNR_sequence is averaged over the frames in a sequence, these averages are unable to highlight the occurrence of localised quality degradations which may be intolerable to a viewer. Based on this, it is highly recommended that when quoting PSNR measurements, observations from informal (as a minimum) viewing trials are noted as well.
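Equations (2) to (4) translate directly into code. The sketch below is illustrative only: frames are represented as flat lists of luminance values, the function names are hypothetical, and returning infinity for identical frames (where the MSE is zero) is a choice made here, not something specified in the text.

```python
import math

MAX_LEVEL = 255  # maximum luminance value for 8 bit representation


def mse(original, recovered):
    """Equation (3): mean squared error over the pixels of one frame."""
    n_pixels = len(original)
    return sum((r - o) ** 2 for o, r in zip(original, recovered)) / n_pixels


def psnr_frame(original, recovered):
    """Equation (2): luminance PSNR of one frame, in dB."""
    error = mse(original, recovered)
    if error == 0:
        return math.inf  # assumption: identical frames reported as infinite PSNR
    return 10 * math.log10(MAX_LEVEL ** 2 / error)


def psnr_sequence(originals, recovereds):
    """Equation (4): average of PSNR_frame over the decoded frames only."""
    values = [psnr_frame(o, r) for o, r in zip(originals, recovereds)]
    return sum(values) / len(values)


original_frame = [100, 100, 100, 100]
recovered_frame = [101, 99, 100, 100]
print(mse(original_frame, recovered_frame))         # 0.5
print(psnr_frame(original_frame, recovered_frame))  # about 51.1 dB
```

Because `psnr_sequence` averages only over the frames that are passed in, dropped frames do not lower the result, which is the first implication noted above.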

- 25. 17 2.2. Nature of Errors in Wireless Channels Errors in wireless channels result from propagation issues described briefly in Table 2-7 below. Table 2-7 : Wireless Propagation Issues Propagation Issue Description, Implications 1) Attenuation For a line of sight (LOS) radio transmission, the signal power at the receiver will be attenuated due to “free space attenuation loss”, such that the received power is inversely proportional to the square of the range between transmitter and receiver. For non-LOS transmissions, the signal power will also suffer “penetration losses” when entering or passing through objects. a) Multipath & Shadowing In a mobile wireless environment, transmitted signals will undergo effects such as reflection, absorption, diffraction and scattering off large objects in the environment (such as buildings or hills). Apart from further attenuating the signal, these effects result in multiple versions of the signal being observed at the receiver. This is termed multipath propagation. When the LOS signal component is lost, the effect on received signal power is determined by the combination of these multipath signals. Severe power fluctuations at the mobile receiver result as it moves in relation to objects in the environment. The rate of these power fluctuations is limited by mobile speeds in relation to these objects, and is typically less than two per second. For this reason, this power variation is termed “slow fading”. Another type of signal fluctuation exists and this is termed “fast fading”. Due to the differing path lengths of the multipath signals, these signals possess different amplitudes and phases. These will result in constructive or destructive interference when combined instantaneously at the receiver. Minor changes in the environment or very small movements of the mobile receiver can dynamically modify these multipath properties, and this results in rapid fluctuations (above 50Hz, for example) of received signal power, hence the name “fast fading”. 
As slow and fast fading occur at the same time, their effects on received power level are superimposed. During fades, received power levels can drop by tens of dB, which can drastically impair system performance. b) Delay Spread Another multipath effect is “delay dispersion”. Due to the varying path lengths of the versions of the signal, these will arrive at slightly differing times at the receiver. If the delay spread is larger than a symbol period of the system, energy from one symbol will be received during the next symbol period, and this will interfere with the interpretation of that symbol’s true value. This undesirable phenomenon is termed Inter-Symbol Interference (ISI). c) Doppler effects When a mobile receiver moves within the reception area, the phase at the receiver will constantly change due to changing path length. This phase change represents a frequency, known as the Doppler Frequency. At the receiver, unless the offset of the Doppler frequency is tracked, this reduces the energy received over a symbol period, which degrades system performance.

- 26. 18 The combination of these effects, but specifically multipath, results in received signal strength undergoing continual fades of varying depth and duration. When the received signal falls below a given threshold during a fade, unrecoverable errors occur at the receiver until the received signal level exceeds the threshold again. Errors therefore occur in bursts over the duration of such fades. This bursty nature of the errors in a wireless channel is the key point raised in this section. Therefore, when simulating errors in a wireless transmission channel, it is realistic to represent them by a bursty error model (as opposed to a random error model).

2.3. Nature of Packet Errors

Degradation of coded video in a packet network can be attributed to the following factors:

a) Packet Delay: Whether overall packet delay is a problem depends on the application. One-way, non-interactive services such as video streaming or broadcast can typically withstand greater overall delay. However, two-way, interactive services such as video conferencing are adversely affected by overall delay. Excessive delay can result in effective packet loss: for example, a packet that arrives beyond the cut-off time at which the decoder can make use of it is discarded.
b) Packet Jitter: This relates to the amount of variation of delay. Jitter may be caused by varying queuing delays at each node across a network. Different prioritisation strategies for packet queues can have significant effects on delay, especially as network congestion increases. The effects of jitter depend on the application, as before. One-way, non-interactive applications can cope as long as sufficient buffering exists to smooth the variable arrival rate of packets, so that the application can consume packets at a regular rate. Problems still exist for two-way, interactive services when excessive delays result in effective packet loss.
c) Packet Loss: Corrupt packets will be abandoned as soon as they are detected in the network. Some queuing strategies can also discard valid packets if they are noted as being “older” than a specified temporal threshold. Since these packets are too late to be of use, there is no benefit in forwarding them. This technique can actually alleviate congestion in networks and reduce overall delays. The fact that an entire packet is lost (even if only a single bit in it was corrupt), further contributes to the bursty nature of errors already mentioned for wireless channels.
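The difference between bursty and random errors, and the effect of discarding a whole packet for a single corrupt bit, can be sketched as follows. The two-state (good/bad) Markov model used here is the commonly used Gilbert-Elliott model, which is an assumption of this example and is not necessarily the error model used in this project. All transition probabilities, error rates and the packet size are hypothetical values.

```python
import random


def gilbert_elliott_bits(n_bits, p_good_to_bad, p_bad_to_good, ber_bad, rng,
                         ber_good=0.0):
    """Return a list of booleans (True = bit error) from a two-state
    Markov channel that is error-prone only while in the 'bad' state."""
    errors = []
    bad = False
    for _ in range(n_bits):
        # Update the channel state before drawing this bit's error.
        if bad:
            if rng.random() < p_bad_to_good:
                bad = False
        elif rng.random() < p_good_to_bad:
            bad = True
        ber = ber_bad if bad else ber_good
        errors.append(rng.random() < ber)
    return errors


def random_bit_errors(n_bits, ber, rng):
    """Return independent (non-bursty) bit errors at the given error rate."""
    return [rng.random() < ber for _ in range(n_bits)]


def packet_loss_rate(bit_errors, packet_bits):
    """A packet is lost if any one of its bits is in error."""
    n_packets = len(bit_errors) // packet_bits
    lost = sum(
        any(bit_errors[i * packet_bits:(i + 1) * packet_bits])
        for i in range(n_packets)
    )
    return lost / n_packets


if __name__ == "__main__":
    rng = random.Random(1)
    n_bits, packet_bits = 200_000, 400  # hypothetical sizes
    bursty = gilbert_elliott_bits(n_bits, 0.001, 0.02, 0.2, rng)
    average_ber = sum(bursty) / n_bits
    uniform = random_bit_errors(n_bits, average_ber, rng)
    print(f"average bit error rate: {average_ber:.5f}")
    print(f"packet loss, bursty errors: {packet_loss_rate(bursty, packet_bits):.3f}")
    print(f"packet loss, random errors: {packet_loss_rate(uniform, packet_bits):.3f}")
```

Running both channels at the same average bit error rate shows how the distribution of errors, and not only their number, determines how many packets are lost.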

- 27. 19 2.4. Impact of Errors on Video Coding

Chapter 27 of [21] provides a good illustration of the potentially drastic impact of errors in a video bitstream. Table 2-8 provides a summary of these impacts, showing the type of error and its severity. When error propagation results, the table categorises the type of propagation and lists some typical means to reduce the extent of the propagation. The examples in this table are ordered from lowest to highest severity of impact.

Table 2-8 : Impact of Errors in Coded Video

Example 1:
Location of error: Within a macroblock of an I-frame, which is not used as a reference in motion estimation.
Extent of impact: The error is limited to a (macro)block within one frame only; there is no propagation to subsequent frames. This therefore results in a single block error.
Severity: Low
Error Propagation Category: Not applicable (no propagation)

Example 2:
Location of error: In motion vectors of a P-frame, where the error does not cause loss of synchronisation.
Extent of impact: Temporal error propagation results, with multiple block errors in subsequent frames until the next intra coded frame is received.
Severity: Medium
Error Propagation Category: Predictive Propagation
Propagation Reduction strategy: The accepted recovery method is to send an intra coded frame to the decoder.

Example 3:
Location of error: In a variable length codeword of a block which is used for motion estimation, where the error causes loss of synchronisation.
Extent of impact: When the decoder loses synchronisation, it ignores valid data until it is able to recognise the next synchronisation pattern. If only one synchronisation pattern is used per frame, the rest of the frame may be lost. If the error occurred at the start of an I-frame, not only is this frame lost, but subsequent P-frames are also severely errored, due to temporal propagation. This potentially results in multiple frame errors.
Severity: High
Error Propagation Category: Loss of Synchronisation
Propagation Reduction strategy: One way to reduce the extent of this propagation is to insert more synchronisation codewords into the bitstream, so that less data is lost before resynchronisation is gained. This has the drawback of increasing redundancy overheads.

- 28. 20 Example 4:
Location of error: In the header of a frame.
Extent of impact: The header of a frame contains important information about how data is interpreted in the remainder of the frame. If this information is corrupt, data in the rest of the frame is meaningless, hence the entire frame can be lost.
Severity: High
Error Propagation Category: Catastrophic
Propagation Reduction strategy: Protect the header by a powerful channel code to ensure it is received intact. Fortunately, header information represents a small percentage of the bitstream, so the overhead associated with protecting it is less onerous than might be expected.

Based on the potentially dramatic impact of these errors, it is evident that additional techniques are required to protect against these errors and their effects in order to enhance the robustness of coded video. Examples of such techniques are discussed in chapter 3.

2.5. Standards overview

2.5.1. Selection of H.263+

H.263+ was selected as the video coding standard for use on this project. Justifications for choosing H.263+ in preference to MPEG include the following:

H.263+ simulation software is more readily available and mature than MPEG software. Specifically, an H.263+ software codec [3] is available within the department, and there are research staff familiar with this software.

The H.263+ specification is much more concise than the MPEG specifications, and given the timeframe of this project, it was considered more feasible to work with this smaller standard.

H.263+ is focussed on lower bit rates, which are of more interest in this project. This focus is based on the anticipation of significant growth in video applications being provided over the internet and public mobile networks. To make maximum use of their bandwidth investments, mobile network operators will be challenged to provide services at lower bit rates.
Based on this choice, some unique aspects of MPEG-2 and MPEG-4, such as those listed below, are not covered in this project:

Fine-Grained-Scalability (FGS).
Higher bit rates (MPEG-2 operates from 2 to 30 Mbps).
Content based scalability (MPEG-4).
Coding of natural and synthetic scenes (MPEG-4).

- 29. 21 2.5.2. H.263+ : “Video Coding for Low Bit Rate Communications”

2.5.2.1. Overview

H.263+ refers to the version 2 issue of the original H.263 standard. This specification defines the coded representation and syntax for a compressed video bitstream. The coding method is block-based and uses motion compensation, inter picture prediction, DCT transform coding, quantisation and entropy coding, as discussed earlier in Chapter 2. The standard specifies operation with all five picture formats listed in Table 2-1, namely sub-QCIF, QCIF, CIF, 4CIF and 16CIF. The specification defines the syntax in the four layer hierarchy (Picture, GOB, MacroBlock and Block) as discussed earlier.

The specification defines sixteen negotiable coding modes in a number of annexes. A brief description of all these modes is given in Appendix A. However, the most significant mode for this project is given in annex O, which defines the three scalability modes supported by the standard. With scalability enabled, the coded bitstream is arranged into a number of layers, starting with a mandatory base layer and one or more enhancement layers, as follows:

Base Layer: A single base layer is coded which contains the most important information in the video bitstream. It is essential for this layer to be sent to the decoder, as it contains what is considered the ‘bare minimum’ amount of information required for the decoder to produce recovered video of acceptable quality. This layer consists of I-frames and P-frames.

Enhancement Layer(s): One or more enhancement layers are coded above the base layer. These contain additional information which, if received by the decoder, serves to improve the perceived quality of the recovered video produced by the decoder. Each layer can only be used by the decoder if all layers below it have been received. The lower layer thus forms a reference layer for the layer above it.
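The layer dependency rule stated above can be expressed as a short sketch. The function name and the numbering of layers (0 for the base layer, 1 upwards for enhancement layers) are assumptions made for illustration and are not taken from the H.263+ specification or the codec software.

```python
def usable_layers(received):
    """Given the set of layer indices received for a frame (0 = base layer),
    return the layers the decoder can actually use: a layer is usable only
    if every layer below it has also been received."""
    usable = []
    layer = 0
    while layer in received:
        usable.append(layer)
        layer += 1
    return usable


if __name__ == "__main__":
    # Enhancement layer 3 arrives but layer 2 is missing, so 3 is unusable.
    print(usable_layers({0, 1, 3}))
    # Without the base layer, no enhancement layer can be used.
    print(usable_layers({1, 2}))
```

This dependency is the reason lower layers merit stronger protection: the loss of a lower layer renders every layer above it useless.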
The modes of scalability relate directly to the way in which they improve the perceived quality of the recovered video, namely: resolution, spatial quality or temporal quality. These modes are described in the following sections. Note that it is possible to combine the scalability modes into a hybrid scheme, although this is not considered in this report.

2.5.2.2. SNR scalability

The perceived quality improvement derived from the enhancement layers in this mode is pixel resolution (luminance and chrominance). This mode provides “coding error” information in the enhancement layer(s). The decoder uses this information in combination with that received in the base layer. After decoding information in the base layer to produce estimated luminance and chrominance values for each pixel, the decoder uses the coding error to adjust these values and create a more accurate approximation of the original values. Frames in the enhancement layer are called Enhancement I-frames (EI) and Enhancement P-frames (EP). The “prediction direction” relationship between I and P frames is carried over to the EI and EP frames in the enhancement layer. This relationship is shown in Figure 2-9 below.
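The principle of refining base layer values with coding error information can be sketched as follows. This is a deliberate simplification: it quantises raw sample values directly, whereas H.263+ operates on DCT coefficients with motion compensated prediction. The function names, sample values and quantiser step sizes are hypothetical.

```python
def quantise(value, step):
    """Uniform quantiser: return the multiple of step nearest to value."""
    return step * round(value / step)


def encode_snr_layers(samples, base_step, enh_step):
    """Code samples coarsely in the base layer, then code the remaining
    coding error with a finer step in the enhancement layer."""
    base = [quantise(s, base_step) for s in samples]
    enhancement = [quantise(s - b, enh_step) for s, b in zip(samples, base)]
    return base, enhancement


def decode(base, enhancement=None):
    """Recover samples from the base layer alone, or refine them with the
    enhancement layer when it has been received."""
    if enhancement is None:
        return list(base)
    return [b + e for b, e in zip(base, enhancement)]


def mean_abs_error(original, recovered):
    """Average absolute difference between original and recovered samples."""
    return sum(abs(o - r) for o, r in zip(original, recovered)) / len(original)


if __name__ == "__main__":
    samples = [12, 47, 131, 201, 255]  # hypothetical luminance values
    base, enhancement = encode_snr_layers(samples, base_step=32, enh_step=4)
    print("base only:    ", decode(base),
          "error", mean_abs_error(samples, decode(base)))
    print("base + enh.:  ", decode(base, enhancement),
          "error", mean_abs_error(samples, decode(base, enhancement)))
```

If the enhancement layer is lost, the decoder still recovers the coarser base layer values, which is what makes the layered structure useful over an error-prone channel.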

- 30. 22 [Figure 2-9 : SNR scalability. An SNR scaled sequence in which the base layer carries frames I, P, P, P and Enhancement Layer 1 carries frames EI, EP, EP, EP; arrows indicate the direction of prediction.]

2.5.2.3. Temporal scalability

The perceived quality improvement derived from the enhancement layers in this mode is frame rate. This mode provides the addition of B frames, which are bi-directionally predicted and inserted between I and P frames. These additional frames increase the decoded frame rate, as shown in Figure 2-10 below.

[Figure 2-10 : Temporal scalability. The base layer carries frames I, P, P, P and the enhancement layer carries B frames inserted between them; arrows indicate the direction of prediction.]

2.5.2.4. Spatial scalability

The perceived quality improvement factor derived from the enhancement layers in this mode is picture size. In this mode, the base layer codes the frame in a reduced size picture format (or equivalently, a reduced resolution at the same picture format size). The enhancement layer contains information to increase the picture to its original size (or resolution). Similar to SNR scalability, the enhancement layer contains EI and EP frames with the same prediction relationship between them, as shown in Figure 2-11 below.

- 31. 23 [Figure 2-11 : Spatial scalability. The base layer (e.g. QCIF) carries frames I, P, P and the enhancement layer (e.g. CIF) carries frames EI, EP, EP; arrows indicate the direction of prediction.]

2.5.2.5. Test Model Rate Control Methods

[6] highlights the existence of a rate control algorithm called TMN-8, which is described in [27]. Although not an integral part of the H.263+ standard, this algorithm is mentioned here as it is incorporated within the video encoder software used on this project. TMN-8 controls the dynamic output bit rate of the encoder according to a specified target bit rate. It achieves this by adjusting the macroblock quantisation coefficients. Larger coefficients reduce the bit rate and smaller coefficients increase the bit rate.

2.5.3. HiperLAN/2

This section presents a brief overview of the HiperLAN/2 standard, and is largely based on [15].

2.5.3.1. Overview

HiperLAN/2 is an ETSI standard (see [2], [15] and [26]) defining a high speed radio communication system with typical data rates between 6 and 54 Mbit/s, operating in the 5 GHz frequency range. It connects portable devices, referred to as Mobile Terminals (MTs), via a base station, referred to as an Access Point (AP), with broadband networks based on IP, ATM or other technologies. HiperLAN/2 supports restricted user mobility, and is capable of supporting multimedia applications. A HiperLAN/2 network may operate in one of the two modes listed below. The first mode is more typical for business environments, whereas the second mode is more appropriate for home networks:

a) “CENTRALISED mode”: where one or more fixed location APs are configured to provide a cellular access network to a number of MTs operating within the coverage area. All traffic passes through an AP, either to an external core network or to another MT within the coverage area.
b) “DIRECT mode”: where MTs communicate directly with one another in an ad-hoc manner, without routing data via an AP.
One of the MTs may act as a Central Controller (CC), which can provide connection to an external core network. ETSI only standardised the radio access network and some of the convergence layer functions, which permit connection to a variety of core networks. The defined protocol layers are shown in Table 2-9 below, and these are described in more detail in each of the following sections.