Mule Tcat Server - Deploying Applications


A Deployment is the mechanism that enables you to deploy one or more applications to multiple Tomcat instances or groups, and to undeploy them just as easily. This page describes the various tasks related to deployment.


Parallel Deployments [v 7.0.1]

◦ Starting with Tcat 7.0.1, you can perform truly parallel deployments: select multiple deployments, perform a group action on them (deploy, undeploy, redeploy), and have the actions run in parallel. Earlier versions also allowed group actions, but the actions were queued, and each action had to finish before the next one could execute. Let's explain this in greater detail.

◦ Say we have two deployments, D1 and D2. You select both and then click the "Deploy" button. In earlier versions of Tcat, D1 would start executing, deploying its webapps to the servers one at a time, while D2 waited in the queue for D1 to finish. In Tcat 7.0.1, D1 and D2 can run simultaneously. This feature is not enabled by default, however; you need to enable it before you start the Tcat console.
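The difference between queued and parallel group actions can be illustrated with a small simulation. This is only a sketch of the scheduling idea, not Tcat's implementation or API: the function names, the server names, and the use of a thread pool are all assumptions made for illustration. A pool with one worker stands in for the older queued behaviour, and a pool with two workers stands in for the 7.0.1 parallel behaviour.

```python
from concurrent.futures import ThreadPoolExecutor
import threading
import time


def run_deployment(name, servers, log, lock, step=0.05):
    """Simulate one deployment pushing its webapps to each server in turn."""
    for server in servers:
        with lock:
            log.append((name, server, "start"))
        time.sleep(step)  # stand-in for the real deploy work (hypothetical)
        with lock:
            log.append((name, server, "done"))


def run_group_action(deployments, workers):
    """Run a group action; workers=1 queues deployments, workers>1 overlaps them."""
    log, lock = [], threading.Lock()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_deployment, name, servers, log, lock)
                   for name, servers in deployments]
        for future in futures:
            future.result()  # re-raise any error from a worker
    return log


def overlapped(log, first="D1", second="D2"):
    """True if `second` started before `first` had finished."""
    first_done = max(i for i, e in enumerate(log)
                     if e[0] == first and e[2] == "done")
    second_start = min(i for i, e in enumerate(log)
                       if e[0] == second and e[2] == "start")
    return second_start < first_done


# Hypothetical deployments and server names, for illustration only.
DEPLOYMENTS = [("D1", ["server-a", "server-b"]),
               ("D2", ["server-a", "server-b"])]

if __name__ == "__main__":
    queued = run_group_action(DEPLOYMENTS, workers=1)
    parallel = run_group_action(DEPLOYMENTS, workers=2)
    print("queued overlap:  ", overlapped(queued))
    print("parallel overlap:", overlapped(parallel))
```

In the single-worker run, every D1 entry in the log precedes every D2 entry, which mirrors D2 waiting in the queue. With two workers, D2 begins while D1 is still deploying to its servers.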


Using mule with web services

Using mule with web servicesShanky Gupta This document discusses using Mule ESB to integrate with web services in various ways. It covers consuming existing web services, building and exposing web services, and creating a proxy for existing web services. The main technology used is Apache CXF, which is bundled with Mule ESB and allows for web service integration. It provides details on how to consume services by generating clients from WSDLs or service interfaces, and how to expose services by creating JAX-WS, WSDL first, or simple frontend services. Proxying services is described as a way to add functionality like security or transformations.

Mule esb mule message

Mule esb mule messagesathyaraj Anand This document provides an overview and agenda for a Mule Integration Workshop. It discusses Mule ESB 3.x, the Mule messaging framework, differences between Mule and traditional ESBs, messaging styles in Mule including one-way, request-response, synchronous, and asynchronous request-response, and message scopes in Mule including inbound, outbound, invocation, session, and application scopes. Code samples are also provided to demonstrate inbound, outbound, and invocation scopes.

Mule tcat server - Monitoring applications

Mule tcat server - Monitoring applicationsShanky Gupta The document describes the tabs in the administration console that provide monitoring information for applications deployed on a Mule TCAT server. The Summary tab shows runtime statistics and request charts. The Sessions tab lists current sessions with options to view details and destroy sessions. The Attributes tab displays servlet context attributes that can be removed.

Cloud hub architecture

Cloud hub architectureShanky Gupta CloudHub is MuleSoft's integration platform that provides a multi-tenant, secure, and elastic environment for running integrations. It has two major components - platform services which coordinate deployment and monitoring, and worker clouds which run integration applications in isolated containers across regions. Applications are deployed via the Runtime Manager console and run on workers that can be scaled based on processing needs. Workers and platform services work together to provide high availability and security in a multi-tenant environment.

Mule tcat server - deploying applications

- 1. MULE TCAT SERVER DEPLOYING APPLICATIONS Shanky Gupta

- 2. Introduction ◦ A Deployment is the mechanism that enables you to deploy one or more applications to multiple Tomcat instances or groups, and to undeploy them just as easily. This page describes the various tasks related to deployment. ◦ A Deployment is a grouping of applications you can deploy to servers or groups.

- 3. Creating a Deployment ◦ To create the deployment: ◦ On the Deployments tab, click New Deployment and enter a unique name for this entity. ◦ Just beneath the Web Applications list, click Add from Repository or Upload New Version. ◦ Browse to the .war file and click Open. ◦ For a new web application, you can edit the URL context path by clicking Advanced Options and entering it in the Name field. ◦ Edit version information (optional). ◦ Click Add.

- 4. ◦ To the right of the Web Applications list, select the target server or group for this deployment. ◦ To the bottom right, click Save. ◦ The package now appears on the Deployments tab. If you saved the package without deploying it, you can deploy it later by selecting its check box and clicking Deploy.

- 5. Uploading Applications ◦ There are three ways to upload applications (WAR files) into the repository: ◦ Add an application manually when you are creating a deployment (described below). ◦ Add applications directly into the repository using the Repository tab (administrator permissions are required). ◦ When developing an application, upload it to the repository as part of the build process using the Maven Publishing Plug-in.

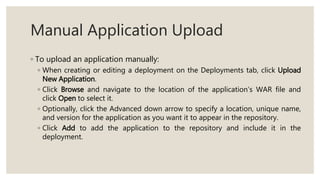

- 6. Manual Application Upload ◦ To upload an application manually: ◦ When creating or editing a deployment on the Deployments tab, click Upload New Application. ◦ Click Browse and navigate to the location of the application’s WAR file and click Open to select it. ◦ Optionally, click the Advanced down arrow to specify a location, unique name, and version for the application as you want it to appear in the repository. ◦ Click Add to add the application to the repository and include it in the deployment.

- 7. Modifying a Deployment ◦ After you have created a deployment, you might want to modify some of its details, including its applications, configuration files, or target servers. ◦ To modify a deployment: ◦ On the Deployments tab, click the name of the deployment you want to modify. ◦ Make the changes you want to the deployment, and then click Save to save the changes without deploying the updated version yet (you can click Deploy later), or click Deploy to save and deploy in one step.

- 8. Uploading a new web app ◦ To upload a new web application: ◦ On the Deployments tab, click the name of the deployment you want to modify. ◦ Click the green up arrow to the right of the web application you want to replace. ◦ Browse to the new .war file and click Open. ◦ Click Add to upload the new .war file. ◦ Click Save.

- 9. ◦ The deployment is now updated, but appears with a yellow icon (for unreconciled) until it is deployed.

- 10. Viewing History on a Deployment ◦ Each time you modify, deploy, or undeploy, a new version of the deployment is created. To see what has changed over time, select the deployment and click on the History tab. ◦ Note: Deployments can be restored from history using the Restore button.

- 11. Important ◦ Two or more deployments can contain the same web application, as long as each copy of the web application uses a different context path. If two copies share the same context path, the deploy action fails.
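The context path rule above can be checked before clicking Deploy. The following is a minimal sketch, not part of Tcat: the dictionary layout, the deployment names, and the server name `tomcat-a` are hypothetical, and it assumes a clash only matters when two deployments target the same server.

```python
from collections import defaultdict


def find_context_path_conflicts(deployments):
    """Return {(server, context_path): [deployment names]} for paths claimed more than once."""
    claims = defaultdict(list)
    for dep in deployments:
        for server in dep["servers"]:
            for path in dep["context_paths"]:
                claims[(server, path)].append(dep["name"])
    # Keep only the (server, path) pairs that more than one deployment wants.
    return {key: names for key, names in claims.items() if len(names) > 1}


# Hypothetical deployments: D1 and D2 both use /shop on the same server.
deployments = [
    {"name": "D1", "servers": ["tomcat-a"], "context_paths": ["/shop"]},
    {"name": "D2", "servers": ["tomcat-a"], "context_paths": ["/shop"]},
    {"name": "D3", "servers": ["tomcat-a"], "context_paths": ["/shop-v2"]},
]
conflicts = find_context_path_conflicts(deployments)
```

Here `conflicts` reports D1 and D2 for `/shop`, while D3 passes because it deploys the same kind of application under a different context path.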

- 12. Parallel Deployments [v 7.0.1] ◦ Starting with Tcat 7.0.1, you can perform truly parallel deployments: select multiple deployments, perform a group action on them (deploy, undeploy, redeploy), and have the actions run in parallel. Earlier versions allowed group actions, but the actions were queued, and each had to finish before the next could start. ◦ For example, say we have two deployments, D1 and D2, and you select both and click the "Deploy" button. In earlier versions of Tcat, D1 would start deploying its webapps to the servers one at a time while D2 waited in the queue for D1 to finish. In Tcat 7.0.1, D1 and D2 can run in parallel. This feature is not enabled by default, however; you need to enable it before you start the Tcat console.
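The difference between queued and parallel group actions can be illustrated with a small simulation. This sketch does not reflect Tcat's internals: `run_group_action` and the status strings are hypothetical, and a thread pool of size 1 merely stands in for the old queued behavior. Each simulated deploy waits briefly at a barrier, which only succeeds if all deployments are running at the same time.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def run_group_action(deployment_names, pool_size):
    """Simulate a group deploy and report whether the deployments overlapped in time."""
    # The barrier releases only if every simulated deploy is running concurrently.
    overlap = threading.Barrier(len(deployment_names))

    def deploy(name):
        try:
            overlap.wait(timeout=1.0)
            return name, "overlapped"
        except threading.BrokenBarrierError:
            # Another deploy never showed up in time: this one ran on its own.
            return name, "queued"

    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        return dict(pool.map(deploy, deployment_names))


parallel = run_group_action(["D1", "D2"], pool_size=2)  # both run together
queued = run_group_action(["D1", "D2"], pool_size=1)    # D2 waits for D1
```

With a pool of two threads, D1 and D2 meet at the barrier and both report `overlapped`; with a single thread, D2 cannot start until D1 has finished, so both report `queued`.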

- 13. Enabling parallel deployment
  ◦ To enable parallel deployment:
  ◦ Open the webapps/console/WEB-INF/classes/galaxy.properties file.
  ◦ Determine the number of deployments you want to run in parallel, then set the following properties to that value. For example, in the scenario above we want two deployments to run in parallel, so we would set the properties in galaxy.properties as follows:
    deployments.corePoolSize=2
    deployments.maxPoolSize=2
  ◦ These properties set the size of the thread pool; each thread in the pool is responsible for one deployment.
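If you prefer to script the edit to galaxy.properties, a minimal sketch could look like this. The helper `set_properties` and the sample file contents are hypothetical; only the two `deployments.*` property names come from the slide. The sketch handles simple `key=value` lines only, not every form the Java properties format allows.

```python
def set_properties(text, updates):
    """Return properties text with each key in `updates` set, appending missing keys."""
    remaining = dict(updates)
    out = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        is_setting = "=" in line and not line.lstrip().startswith("#")
        if is_setting and key in remaining:
            # Replace the existing value in place so the file order is preserved.
            out.append(f"{key}={remaining.pop(key)}")
        else:
            out.append(line)
    # Keys that were not already present are appended at the end.
    out.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(out) + "\n"


# Hypothetical existing file contents, used only for illustration.
sample = "# console settings\ndeployments.corePoolSize=1\nother.setting=x\n"
updated = set_properties(
    sample,
    {"deployments.corePoolSize": 2, "deployments.maxPoolSize": 2},
)
```

To apply it, read webapps/console/WEB-INF/classes/galaxy.properties, pass its text through `set_properties`, and write the result back before starting the Tcat console.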

- 14. ◦ Similarly, a single Deployment can contain multiple webapps that need to be deployed to multiple servers. This already happens in parallel (enabled by default); to tune it, change the following properties in galaxy.properties:
    deploymentExecutor.corePoolSize=5
    deploymentExecutor.maxPoolSize=20
  ◦ If you change these properties while the console is running, you need to restart the console for the new values to be picked up.
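Before restarting the console, it can help to sanity-check the pool settings. The sketch below is an illustration only: `check_pool_sizes` is a hypothetical helper, and the rule that the maximum must not be smaller than the core size is an assumption borrowed from how Java thread pools generally behave, not something the slides state about Tcat.

```python
def check_pool_sizes(text, prefixes=("deployments", "deploymentExecutor")):
    """Return a list of problems found in the pool-size properties (empty if none)."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key.strip()] = value.strip()

    problems = []
    for prefix in prefixes:
        core = props.get(f"{prefix}.corePoolSize")
        maximum = props.get(f"{prefix}.maxPoolSize")
        if core is None or maximum is None:
            continue  # not set in this file, so there is nothing to compare
        # Assumption: a maximum below the core size is a misconfiguration.
        if int(maximum) < int(core):
            problems.append(f"{prefix}: corePoolSize={core}, maxPoolSize={maximum}")
    return problems


slide_values = (
    "deployments.corePoolSize=2\n"
    "deployments.maxPoolSize=2\n"
    "deploymentExecutor.corePoolSize=5\n"
    "deploymentExecutor.maxPoolSize=20\n"
)
ok = check_pool_sizes(slide_values)
bad = check_pool_sizes("deployments.corePoolSize=4\ndeployments.maxPoolSize=2\n")
```

The values shown on the slides pass the check, while a file whose core size exceeds its maximum is flagged.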