Multicollinearity

- 1. PRESENTATION ON MULTICOLLINEARITY GROUP MEMBERS KRISHNA CHALISE ANKUR SHRESTHA PAWAN KAWAN SARITA MAHARJAN RITU JOSHI SONA SHRESTHA DIPIKA SHRESTHA

- 2. Multicollinearity • Multicollinearity occurs when two or more independent variables in a regression model are highly correlated with each other. • The standard error of an OLS parameter estimate is larger the more highly the corresponding independent variable is correlated with the other independent variables in the model.

- 3. Cont… Multicollinearity is a statistical phenomenon in which two or more predictor variables in a multiple regression model are highly correlated, meaning that one can be linearly predicted from the others with a non-trivial degree of accuracy.

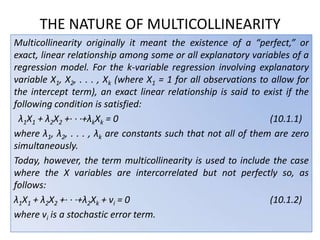

- 4. THE NATURE OF MULTICOLLINEARITY The term multicollinearity originally meant the existence of a “perfect,” or exact, linear relationship among some or all explanatory variables of a regression model. For the k-variable regression involving explanatory variables $X_1, X_2, \ldots, X_k$ (where $X_1 = 1$ for all observations to allow for the intercept term), an exact linear relationship is said to exist if the following condition is satisfied: $\lambda_1 X_1 + \lambda_2 X_2 + \cdots + \lambda_k X_k = 0$ (10.1.1) where $\lambda_1, \lambda_2, \ldots, \lambda_k$ are constants such that not all of them are zero simultaneously. Today, however, the term multicollinearity is used to include the case where the X variables are intercorrelated but not perfectly so, as follows: $\lambda_1 X_1 + \lambda_2 X_2 + \cdots + \lambda_k X_k + v_i = 0$ (10.1.2) where $v_i$ is a stochastic error term.

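- 5. To see the difference between perfect and less than perfect multicollinearity, assume, for example, that $\lambda_2 \neq 0$. Then (10.1.1) can be written as: $X_2 = -\frac{\lambda_1}{\lambda_2} X_1 - \frac{\lambda_3}{\lambda_2} X_3 - \cdots - \frac{\lambda_k}{\lambda_2} X_k$ (10.1.3) which shows how $X_2$ is exactly linearly related to the other variables. In this situation, the coefficient of correlation between the variable $X_2$ and the linear combination on the right side of (10.1.3) is bound to be unity. Similarly, if $\lambda_2 \neq 0$, Eq. (10.1.2) can be written as: $X_2 = -\frac{\lambda_1}{\lambda_2} X_1 - \frac{\lambda_3}{\lambda_2} X_3 - \cdots - \frac{\lambda_k}{\lambda_2} X_k - \frac{1}{\lambda_2} v_i$ which shows that $X_2$ is not an exact linear combination of the other X’s because it is also determined by the stochastic error term $v_i$.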
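The contrast between an exact and an inexact linear relation can be checked numerically. The sketch below is only an illustration with made-up numbers: the series `x3`, the multiplier 2 and the disturbance values `v` are assumptions, not data from the presentation. It computes the correlation between X2 and X3 when X2 is an exact multiple of X3 and when a stochastic term is added.

```python
from math import sqrt

def pearson(a, b):
    """Sample correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    dev_a = [x - mean_a for x in a]
    dev_b = [y - mean_b for y in b]
    cross = sum(p * q for p, q in zip(dev_a, dev_b))
    return cross / sqrt(sum(p * p for p in dev_a) * sum(q * q for q in dev_b))

# Hypothetical data, chosen only for illustration
x3 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
v = [0.5, -0.4, 0.3, -0.6, 0.2, 0.0]      # stand-in for the stochastic term v_i

x2_exact = [2.0 * x for x in x3]                  # exact linear relation
x2_noisy = [2.0 * x + e for x, e in zip(x3, v)]   # relation disturbed by v_i

r_exact = pearson(x2_exact, x3)
r_noisy = pearson(x2_noisy, x3)

print("correlation, exact relation  :", r_exact)
print("correlation, inexact relation:", r_noisy)
```

With the exact relation the correlation is unity (up to floating-point rounding); once the disturbance enters, it is high but strictly below one, which is the "less than perfect" case.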

- 6. Perfect Multicollinearity • Perfect multicollinearity occurs when there is a perfect linear correlation between two or more independent variables. • It also occurs when an independent variable takes a constant value in all observations, because that variable is then an exact multiple of the intercept term.

- 7. Symptoms of Multicollinearity • The symptoms of a multicollinearity problem: 1. Independent variable(s) considered critical in explaining the model’s dependent variable are not statistically significant according to the tests.

- 8. Symptoms of Multicollinearity 2. High R², highly significant F-test, but few or no statistically significant t tests. 3. Parameter estimates change drastically in value and become statistically significant when some independent variables are excluded from the regression.

- 10. Causes of Multicollinearity • Data collection method employed • Constraints on the model or in the population being sampled • Statistical model specification • An overdetermined model

- 12. CONSEQUENCES OF MULTICOLLINEARITY In cases of near or high multicollinearity, one is likely to encounter the following consequences: 1. Although BLUE, the OLS estimators have large variances and covariances, making precise estimation difficult. 2. The confidence intervals tend to be much wider, leading to the acceptance of the “zero null hypothesis” (i.e., the true population coefficient is zero) more readily.

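- 13. 1. Large Variances and Covariances of OLS Estimators The variances and covariances of $\hat\beta_2$ and $\hat\beta_3$ are given by $\operatorname{var}(\hat\beta_2) = \frac{\sigma^2}{\sum x_{2i}^2 \,(1 - r_{23}^2)}$ (7.4.12), $\operatorname{var}(\hat\beta_3) = \frac{\sigma^2}{\sum x_{3i}^2 \,(1 - r_{23}^2)}$ (7.4.15) and $\operatorname{cov}(\hat\beta_2, \hat\beta_3) = \frac{-r_{23}\,\sigma^2}{(1 - r_{23}^2)\sqrt{\sum x_{2i}^2}\sqrt{\sum x_{3i}^2}}$ (7.4.17), where $r_{23}$ is the coefficient of correlation between $X_2$ and $X_3$ and lowercase $x$ denotes deviations from the sample mean. It is apparent from (7.4.12) and (7.4.15) that as $r_{23}$ tends toward 1, that is, as collinearity increases, the variances of the two estimators increase, and in the limit when $r_{23} = 1$ they are infinite. It is equally clear from (7.4.17) that as $r_{23}$ increases toward 1, the covariance of the two estimators also increases in absolute value.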
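How fast the variance grows as r23 approaches 1 is easier to see with numbers. The sketch below assumes the standard two-regressor result var(β̂2) = σ² / (Σx2i² (1 − r23²)), and tabulates the factor 1 / (1 − r23²), usually called the variance-inflation factor. The values of `sigma2` and `sum_x2_sq` are hypothetical.

```python
def var_beta2(sigma2, sum_x2_sq, r23):
    """Variance of the OLS slope on X2 in a model with two regressors."""
    return sigma2 / (sum_x2_sq * (1.0 - r23 ** 2))

def inflation_factor(r23):
    """Variance-inflation factor 1 / (1 - r23^2)."""
    return 1.0 / (1.0 - r23 ** 2)

# Hypothetical error variance and sum of squared deviations of X2
sigma2, sum_x2_sq = 1.0, 100.0

for r23 in (0.0, 0.5, 0.9, 0.99, 0.999):
    print(f"r23 = {r23:<6} "
          f"VIF = {inflation_factor(r23):8.2f}  "
          f"var(beta2_hat) = {var_beta2(sigma2, sum_x2_sq, r23):.4f}")
```

The factor is 1 when the regressors are uncorrelated and grows without bound as r23 tends to 1, which is the limiting case of infinite variance described above.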

- 14. 2. Wider Confidence Intervals Because of the large standard errors, the confidence intervals for the relevant population parameters tend to be larger. Therefore, in cases of high multicollinearity, the sample data may be compatible with a diverse set of hypotheses. Hence, the probability of accepting a false hypothesis (i.e., type II error) increases.

- 16. 3) The t ratio of one or more coefficients tends to be statistically insignificant. • In cases of high collinearity, the estimated standard errors increase, so the t values decrease, since $t = \hat\beta_2 / \operatorname{se}(\hat\beta_2)$. Therefore, in such cases, one will increasingly accept the null hypothesis that the relevant true population value is zero.

- 17. 4) Even though the t ratios are insignificant, R² can be very high. Since R² is very high, the F test rejects the joint null hypothesis $H_0: \beta_2 = \beta_3 = \cdots = \beta_k = 0$, indicating a significant relationship between the variables. But since the individual t ratios are small, we fail to reject the hypothesis that each coefficient is individually zero. The two tests therefore lead to seemingly contradictory conclusions in the presence of multicollinearity.
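The combination of a high R² with large standard errors can be reproduced on simulated data. The sketch below is an illustration under stated assumptions, not a result from the presentation: the sample size, the seed, the true coefficients and the way `x3` is built as a near copy of `x2` are all hypothetical choices. It fits OLS with NumPy and reports R², the overall F statistic, the standard errors and the t ratios.

```python
import numpy as np

rng = np.random.default_rng(0)            # fixed seed, for reproducibility only
n = 50
x2 = rng.normal(size=n)
x3 = x2 + 0.01 * rng.normal(size=n)       # x3 is almost an exact copy of x2
y = 1.0 + x2 + x3 + rng.normal(size=n)    # hypothetical true model

X = np.column_stack([np.ones(n), x2, x3])  # first column carries the intercept
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)

resid = y - X @ beta_hat
k = X.shape[1]                                    # number of estimated parameters
sigma2_hat = resid @ resid / (n - k)              # estimated error variance
cov_beta = sigma2_hat * np.linalg.inv(X.T @ X)    # estimated var-cov matrix
std_err = np.sqrt(np.diag(cov_beta))
t_ratios = beta_hat / std_err

tss = np.sum((y - y.mean()) ** 2)
r_squared = 1.0 - resid @ resid / tss

# Overall F statistic for H0: all slope coefficients are zero
f_stat = (r_squared / (k - 1)) / ((1.0 - r_squared) / (n - k))

print("R^2       :", round(float(r_squared), 3))
print("F         :", round(float(f_stat), 1))
print("std. err. :", np.round(std_err, 2))
print("t ratios  :", np.round(t_ratios, 2))
```

In runs of this kind the standard errors of the two slope coefficients are far larger than that of the intercept, so their t ratios are typically small even though the F statistic is large. Repeating the fit after dropping or altering a single observation also tends to move the two slope estimates noticeably.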

- 18. 5) The OLS estimators and their standard errors can be sensitive to small changes in the data. As long as multicollinearity is not perfect, estimation of the regression coefficients is possible, but the OLS estimates and their standard errors are very sensitive to even the slightest change in the data. Changing even a single observation can change the values dramatically.

- 20. Detection of Multicollinearity • Multicollinearity cannot be tested; only the degree of multicollinearity can be detected. • Multicollinearity is a question of degree and not of kind. The meaningful distinction is not between the presence and the absence of multicollinearity, but between its various degrees. • Multicollinearity is a feature of the sample and not of the population. Therefore, we do not “test for multicollinearity” but we measure its degree in any particular sample.

- 21. A. The Farrar-Glauber Test • Computation of the F-ratio to test the location of multicollinearity. • Computation of the t-ratio to test the pattern of multicollinearity. • Computation of chi-square to test the presence of multicollinearity in a function with several explanatory variables.
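The chi-square step can be sketched as follows. This assumes the form of the statistic usually quoted for the Farrar-Glauber test, χ² = −[n − 1 − (2k + 5)/6] · ln|R| with k(k − 1)/2 degrees of freedom, where R is the correlation matrix of the k explanatory variables. The correlation matrix and sample size below are hypothetical, and the determinant helper handles only the 3×3 case to keep the example short.

```python
from math import log

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def farrar_glauber_chi2(corr, n):
    """Chi-square statistic and degrees of freedom for k = 3 regressors."""
    k = len(corr)
    stat = -(n - 1 - (2 * k + 5) / 6.0) * log(det3(corr))
    dof = k * (k - 1) // 2
    return stat, dof

# Hypothetical correlation matrix of three explanatory variables, n = 30
corr = [[1.00, 0.90, 0.80],
        [0.90, 1.00, 0.85],
        [0.80, 0.85, 1.00]]

stat, dof = farrar_glauber_chi2(corr, n=30)
print(f"chi-square = {stat:.2f} with {dof} degrees of freedom")
```

If the explanatory variables were orthogonal, R would be the identity matrix, its determinant would be 1 and the statistic would be zero. A value well above the tabulated critical value (about 7.81 at the 5% level with 3 degrees of freedom) would be read as evidence that multicollinearity is present.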

- 22. B. Bunch Analysis a. In the presence of multicollinearity, the coefficient of determination, R², is high. b. In the presence of multicollinearity in the data, the partial correlation coefficients, such as r12, are also high. c. High standard errors of the parameters indicate the existence of multicollinearity.

- 23. Unsatisfactory Indicators of Multicollinearity • A large standard error may arise for various reasons, not only because of the presence of linear relationships among the explanatory variables. • A very high correlation between two explanatory variables, $r_{x_i x_j}$, is a sufficient but not a necessary condition for the existence of multicollinearity. • R² may be high relative to $r_{x_i x_j}$ and yet the results may be highly imprecise and insignificant.

- 25. Rule-of-Thumb Procedures Other methods of remedying multicollinearity: • Additional or new data • Transformation of variables • Dropping a variable(s) and specification bias • Combining cross-sectional and time series data • A priori information

- 26. REMEDIAL MEASURES • Do nothing – If you are only interested in prediction, multicollinearity is not an issue. – t-stats may be deflated but still significant, in which case multicollinearity is not a serious problem. – The cure is often worse than the disease. • Drop one or more of the multicollinear variables. – This solution can introduce specification bias. WHY? Because a variable that belongs in the model is omitted, and the remaining coefficients pick up part of its effect. – Conversely, in an effort to avoid specification bias a researcher can introduce multicollinearity, in which case it may be appropriate to drop a variable.

- 27. • Transform the multicollinear variables. – Form a linear combination of the multicollinear variables. – Transform the equation into first differences or logs. • Increase the sample size. – The issue of micronumerosity. – Micronumerosity is the problem of the sample size (n) not exceeding, or only barely exceeding, the number of parameters (k). Its symptoms are similar to those of multicollinearity (lack of variation in the independent variables). • A solution to each problem is to increase the sample size. This will solve the problem of micronumerosity but not necessarily the problem of multicollinearity.
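One reason the first-difference transformation can help is that two series sharing a common trend are highly correlated in levels, while their period-to-period changes need not be. The sketch below illustrates this with made-up numbers: the trend `t` and the deviations `a` and `b` are hypothetical, and the outcome depends on the data, so it should not be read as a general guarantee.

```python
from math import sqrt

def pearson(a, b):
    """Sample correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    dev_a = [x - mean_a for x in a]
    dev_b = [y - mean_b for y in b]
    cross = sum(p * q for p, q in zip(dev_a, dev_b))
    return cross / sqrt(sum(p * p for p in dev_a) * sum(q * q for q in dev_b))

def first_diff(series):
    """First differences: each value minus the one before it."""
    return [later - earlier for earlier, later in zip(series, series[1:])]

# Two hypothetical series that share the same upward trend
t = range(1, 9)
a = [0.3, -0.2, 0.1, -0.3, 0.2, -0.1, 0.3, -0.2]
b = [-0.1, 0.3, -0.3, 0.1, 0.2, -0.2, -0.1, 0.3]
x2 = [ti + ai for ti, ai in zip(t, a)]
x3 = [ti + bi for ti, bi in zip(t, b)]

r_levels = pearson(x2, x3)
r_diffs = pearson(first_diff(x2), first_diff(x3))

print("correlation in levels           :", round(r_levels, 3))
print("correlation in first differences:", round(r_diffs, 3))
```

Differencing removes the shared trend, so the correlation between the transformed regressors is much weaker here. The transformation costs one observation, which matters when the sample is already small.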

- 28. • Replace the linear model with a double-log model. – Such a model may be theoretically appealing since one is estimating elasticities. – Logging the variables compresses the scales in which the variables are measured, hence reducing the problem of heteroskedasticity. • In the end, you may be unable to resolve the problem of heteroskedasticity. In this case, report White’s heteroskedasticity-consistent variances and standard errors and note the substantial efforts you made to reach this conclusion.

- 29. CONCLUSION

- 30. Conclusion • Multicollinearity is a statistical phenomenon in which there exists a perfect or near-perfect linear relationship between the predictor variables. • When such a relationship exists between the predictor variables, it is difficult to come up with reliable estimates of their individual coefficients. • The presence of multicollinearity can cause serious problems with the estimation of β and its interpretation.

- 31. • When multicollinearity is present in the data, the ordinary least squares estimates are imprecise. • If the goal is to understand how the various X variables impact Y, then multicollinearity is a big problem. Thus, it is essential to detect and address multicollinearity before interpreting the parameters of the fitted regression model.

- 32. • Detection of multicollinearity can be done by examining the correlation matrix or by using VIF. • Remedial measures help to solve the problem of multicollinearity.
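Both detection tools mentioned here can be computed in a few lines. The sketch below assumes the usual definition VIF_j = 1 / (1 − R_j²), where R_j² comes from regressing the j-th explanatory variable on the others; this equals the j-th diagonal element of the inverse of the regressors' correlation matrix, which is what the code uses. The data and the helper name `vif` are hypothetical.

```python
import numpy as np

def vif(X):
    """Variance inflation factors for the columns of X (no intercept column)."""
    corr = np.corrcoef(X, rowvar=False)       # correlation matrix of the regressors
    return np.diag(np.linalg.inv(corr))       # diagonal of its inverse

# Hypothetical regressors: x3 closely tracks x2, x4 is unrelated
x2 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x3 = [1.1, 1.9, 3.2, 3.8, 5.1, 6.2, 6.8, 8.1]
x4 = [3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0]
X = np.column_stack([x2, x3, x4])

print("correlation matrix:")
print(np.round(np.corrcoef(X, rowvar=False), 3))
print("VIF:", np.round(vif(X), 2))
```

A VIF of 1 means the variable is uncorrelated with the other regressors. A common rule of thumb treats values above about 10 as a sign of serious collinearity, although, as the deck stresses, this is a matter of degree rather than a formal test.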