MySQL in the Hosted Cloud

Want to run MySQL on Amazon RDS, Rackspace Cloud, Google Cloud SQL or HP Helion Public Cloud? Check out this talk from Percona Live London 2014. (Note that Google Cloud SQL changed its pricing later on the same day the presentation was given.)


Ad

More Related Content

What's hot (20)

Introducing Apache Kudu (Incubating) - Montreal HUG May 2016

Introducing Apache Kudu (Incubating) - Montreal HUG May 2016Mladen Kovacevic The document introduces Apache Kudu (incubating), a new updatable columnar storage system for Apache Hadoop designed for fast analytics on fast and changing data. It was designed to simplify architectures that use HDFS and HBase together. Kudu aims to provide high throughput for scans, low latency for individual rows, and database-like ACID transactions. It uses a columnar format and is optimized for SSD and new storage technologies.

Oracle Databases on AWS - Getting the Best Out of RDS and EC2

Oracle Databases on AWS - Getting the Best Out of RDS and EC2Maris Elsins More and more companies consider moving all IT infrastructure to cloud to reduce the running costs and simplify management of IT assets. I've been involved in such migration project to Amazon AWS. Multiple databases were successfully moved to Amazon RDS and a few to Amazon EC2. This presentation will help you understand the capabilities of Amazon RDS and EC2 when it comes to running Oracle Databases, it will help you make the right choice between these two services, and will help you size the target instances and storage volumes according to your needs.

Hive on spark berlin buzzwords

Hive on spark berlin buzzwordsSzehon Ho June 2015 Berlin Buzzwords Presentation

http://berlinbuzzwords.de/file/bbuzz-2015-szehon-ho-hive-spark

https://berlinbuzzwords.de/session/hive-spark

Speaker Interview:

https://berlinbuzzwords.de/news/speaker-interview-szehon-ho

Big Data Day LA 2016/ NoSQL track - Apache Kudu: Fast Analytics on Fast Data,...

Big Data Day LA 2016/ NoSQL track - Apache Kudu: Fast Analytics on Fast Data,...Data Con LA 1) Apache Kudu is a new updatable columnar storage engine for Apache Hadoop that facilitates fast analytics on fast data.

2) Kudu is designed to address gaps in the current Hadoop storage landscape by providing both high throughput for big scans and low latency for short accesses simultaneously.

3) Kudu integrates with various Hadoop components like Spark, Impala, MapReduce to enable SQL queries and other analytics workloads on fast updating data.

Multi-tenant, Multi-cluster and Multi-container Apache HBase Deployments

Multi-tenant, Multi-cluster and Multi-container Apache HBase DeploymentsDataWorks Summit Multi-tenant, Multi-cluster and Multi-container Apache HBase Deployments

Jonathan Hsieh

Dima Spivak

Cloudera

Hadoop @ eBay: Past, Present, and Future

Hadoop @ eBay: Past, Present, and FutureRyan Hennig An overview of eBay's experience with Hadoop in the Past and Present, as well as directions for the Future. Given by Ryan Hennig at the Big Data Meetup at eBay in Netanya, Israel on Dec 2, 2013

Intro to Apache Kudu (short) - Big Data Application Meetup

Intro to Apache Kudu (short) - Big Data Application MeetupMike Percy Slide deck from my 20 minute talk at the Big Data Application Meetup #BDAM. See http://getkudu.io for more info.

Using Kafka and Kudu for fast, low-latency SQL analytics on streaming data

Using Kafka and Kudu for fast, low-latency SQL analytics on streaming dataMike Percy The document discusses using Kafka and Kudu for low-latency SQL analytics on streaming data. It describes the challenges of supporting both streaming and batch workloads simultaneously using traditional solutions. The authors propose using Kafka to ingest data and Kudu for structured storage and querying. They demonstrate how this allows for stream processing, batch processing, and querying of up-to-second data with low complexity. Case studies from Xiaomi and TPC-H benchmarks show the advantages of this approach over alternatives.

Scaling MySQL using Fabric

Scaling MySQL using FabricKarthik .P.R This is my presentation on MySQL Fabric in LSPE meet up ( Large Scale Production Engineering ) held at Yahoo! India on 13-09-2014.

Kudu: Resolving Transactional and Analytic Trade-offs in Hadoop

Kudu: Resolving Transactional and Analytic Trade-offs in Hadoopjdcryans Kudu is a new column-oriented storage system for Apache Hadoop that is designed to address the gaps in transactional processing and analytics in Hadoop. It aims to provide high throughput for large scans, low latency for individual rows, and database semantics like ACID transactions. Kudu is motivated by the changing hardware landscape with faster SSDs and more memory, and aims to take advantage of these advances. It uses a distributed table design partitioned into tablets replicated across servers, with a centralized metadata service for coordination.

A brave new world in mutable big data relational storage (Strata NYC 2017)

A brave new world in mutable big data relational storage (Strata NYC 2017)Todd Lipcon The ever-increasing interest in running fast analytic scans on constantly updating data is stretching the capabilities of HDFS and NoSQL storage. Users want the fast online updates and serving of real-time data that NoSQL offers, as well as the fast scans, analytics, and processing of HDFS. Additionally, users are demanding that big data storage systems integrate natively with their existing BI and analytic technology investments, which typically use SQL as the standard query language of choice. This demand has led big data back to a familiar friend: relationally structured data storage systems.

Todd Lipcon explores the advantages of relational storage and reviews new developments, including Google Cloud Spanner and Apache Kudu, which provide a scalable relational solution for users who have too much data for a legacy high-performance analytic system. Todd explains how to address use cases that fall between HDFS and NoSQL with technologies like Apache Kudu or Google Cloud Spanner and how the combination of relational data models, SQL query support, and native API-based access enables the next generation of big data applications. Along the way, he also covers suggested architectures, the performance characteristics of Kudu and Spanner, and the deployment flexibility each option provides.

January 2015 HUG: Using HBase Co-Processors to Build a Distributed, Transacti...

January 2015 HUG: Using HBase Co-Processors to Build a Distributed, Transacti...Yahoo Developer Network Monte Zweben Co-Founder and CEO of Splice Machine, will discuss how to use HBase co-processors to build an ANSI-99 SQL database with 1) parallelization of SQL execution plans, 2) ACID transactions with snapshot isolation and 3) consistent secondary indexing.

Transactions are critical in traditional RDBMSs because they ensure reliable updates across multiple rows and tables. Most operational applications require transactions, but even analytics systems use transactions to reliably update secondary indexes after a record insert or update.

In the Hadoop ecosystem, HBase is a key-value store with real-time updates, but it does not have multi-row, multi-table transactions, secondary indexes or a robust query language like SQL. Combining SQL with a full transactional model over HBase opens a whole new set of OLTP and OLAP use cases for Hadoop that was traditionally reserved for RDBMSs like MySQL or Oracle. However, a transactional HBase system has the advantage of scaling out with commodity servers, leading to a 5x-10x cost savings over traditional databases like MySQL or Oracle.

HBase co-processors, introduced in release 0.92, provide a flexible and high-performance framework to extend HBase. In this talk, we show how we used HBase co-processors to support a full ANSI SQL RDBMS without modifying the core HBase source. We will discuss how endpoint transactions are used to serialize SQL execution plans over to regions so that computation is local to where the data is stored. Additionally, we will show how observer co-processors simultaneously support both transactions and secondary indexing.

The talk will also discuss how Splice Machine extended the work of Google Percolator, Yahoo Labs’ OMID, and the University of Waterloo on distributed snapshot isolation for transactions. Lastly, performance benchmarks will be provided, including full TPC-C and TPC-H results that show how Hadoop/HBase can be a replacement of traditional RDBMS solutions.

Low latency high throughput streaming using Apache Apex and Apache Kudu

Low latency high throughput streaming using Apache Apex and Apache KuduDataWorks Summit True streaming is fast becoming a necessity for many business use cases. On the other hand the data set sizes and volumes are also growing exponentially compounding the complexity of data processing pipelines.There exists a need for true low latency streaming coupled with very high throughput data processing. Apache Apex as a low latency and high throughput data processing framework and Apache Kudu as a high throughput store form a nice combination which solves this pattern very efficiently.

This session will walk through a use case which involves writing a high throughput stream using Apache Kafka,Apache Apex and Apache Kudu. The session will start with a general overview of Apache Apex and capabilities of Apex that form the foundation for a low latency and high throughput engine with Apache kafka being an example input source of streams. Subsequently we walk through Kudu integration with Apex by walking through various patterns like end to end exactly once, selective column writes and timestamp propagations for out of band data. The session will also cover additional patterns that this integration will cover for enterprise level data processing pipelines.

The session will conclude with some metrics for latency and throughput numbers for the use case that is presented.

Speaker

Ananth Gundabattula, Senior Architect, Commonwealth Bank of Australia

Introducing Kudu

Introducing KuduJeremy Beard Jeremy Beard, a senior solutions architect at Cloudera, introduces Kudu, a new column-oriented storage system for Apache Hadoop designed for fast analytics on fast changing data. Kudu is meant to fill gaps in HDFS and HBase by providing efficient scanning, finding and writing capabilities simultaneously. It uses a relational data model with ACID transactions and integrates with common Hadoop tools like Impala, Spark and MapReduce. Kudu aims to simplify real-time analytics use cases by allowing data to be directly updated without complex ETL processes.

Apache Kudu (Incubating): New Hadoop Storage for Fast Analytics on Fast Data ...

Apache Kudu (Incubating): New Hadoop Storage for Fast Analytics on Fast Data ...Cloudera, Inc. This document provides an overview of Apache Kudu, an open source storage layer for Apache Hadoop that enables fast analytics on fast data. Some key points:

- Kudu is a columnar storage engine that allows for both fast analytics queries as well as low-latency updates to the stored data.

- It addresses gaps in the existing Hadoop storage landscape by providing efficient scans, individual row lookups, and mutable data all within the same system.

- Kudu uses a master-tablet server architecture with tablets that are horizontally partitioned and replicated for fault tolerance. It supports SQL and NoSQL interfaces.

- Integrations with Spark, Impala and MapReduce allow it to be used for both

C* Summit 2013: Time for a New Relationship - Intuit's Journey from RDBMS to ...

DataStax Academy: This session describes the journey of Intuit's Consumer Financial Platform, which is built to scale to petabytes of data. The original system used a major RDBMS; from there, we redesigned it to use the distributed nature of Cassandra. This talk goes through our transition, including the data model used for the final product. As with any large system transition, we learned many hard lessons, and we will discuss those and share our experiences.

Introduction to Kudu: Hadoop Storage for Fast Analytics on Fast Data - Rüdige...

Dataconomy Media: Kudu is a new column-oriented storage system for Apache Hadoop that is designed to enable fast analytics on fast, changing data. It aims to provide high throughput for large scans and low latency for random access simultaneously. Kudu is optimized for workloads that involve high volumes of incoming data and require real-time analytics through inserts, updates, scans and lookups. It simplifies architectures for real-time analytics compared to hybrid approaches using HBase and HDFS. Currently, Kudu is in beta and provides APIs for Java, C++, Impala, MapReduce and Spark.

Building Effective Near-Real-Time Analytics with Spark Streaming and Kudu

Jeremy Beard: This document discusses building near-real-time analytics pipelines using Apache Spark Streaming and Apache Kudu on the Cloudera platform. It defines near-real-time analytics, describes the relevant components of the Cloudera stack (Kafka, Spark, Kudu, Impala), and how they can work together. The document then outlines the typical stages involved in implementing a Spark Streaming to Kudu pipeline, including sourcing from a queue, translating data, deriving storage records, planning mutations, and storing the data. It provides performance considerations and introduces Envelope, a Spark Streaming application on Cloudera Labs that implements these stages through configurable pipelines.
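The "planning mutations" stage is where each incoming record is compared against what is already stored, so that only real changes are written. A minimal sketch, assuming an in-memory dict keyed by primary key stands in for the existing Kudu rows; `plan_mutations` and its INSERT/UPDATE labels are hypothetical and are not Envelope's or the Kudu client's API.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]


def plan_mutations(incoming: List[Row], existing: Dict[object, Row], key: str) -> List[Tuple[str, Row]]:
    """Return (operation, record) pairs: INSERT for new keys, UPDATE for changed rows.

    Records identical to what is already stored produce no mutation at all.
    """
    state = dict(existing)  # work on a copy so the caller's snapshot is untouched
    mutations: List[Tuple[str, Row]] = []
    for record in incoming:
        current = state.get(record[key])
        if current is None:
            mutations.append(("INSERT", record))
        elif current != record:
            mutations.append(("UPDATE", record))
        else:
            continue  # unchanged record: nothing to write
        state[record[key]] = record  # later records in the same batch see this one
    return mutations


existing = {1: {"id": 1, "balance": 10}}
incoming = [
    {"id": 1, "balance": 10},  # identical to the stored row
    {"id": 1, "balance": 15},  # changed value
    {"id": 2, "balance": 5},   # new key
]
mutations = plan_mutations(incoming, existing, key="id")
print(mutations)
# [('UPDATE', {'id': 1, 'balance': 15}), ('INSERT', {'id': 2, 'balance': 5})]
```

Copying `existing` keeps the function free of side effects, so the same snapshot can be replanned if a micro-batch is retried.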

Cloudera Impala + PostgreSQL

liuknag: Hacking Cloudera Impala to run on a PostgreSQL cluster in MPP style. Performance under typical SQL statements and concurrent workloads is verified.

MySQL Query Optimization (Basics)

Karthik .P.R: This document discusses various techniques for optimizing queries in MySQL databases. It covers storage engines like InnoDB and MyISAM, indexing strategies including different index types and usage examples, using explain plans to analyze query performance, and rewriting queries to improve efficiency by leveraging indexes and removing unnecessary functions. The goal of these optimization techniques is to reduce load on database servers and improve query response times as data volumes increase.
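To illustrate why removing functions from indexed columns matters, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for MySQL, so it runs without a server; the `orders` table and `idx_orders_created` index are hypothetical. In MySQL you would compare the output of `EXPLAIN` in the same way: wrapping the indexed column in a function forces a full scan, while the equivalent half-open range predicate lets the optimizer use the index.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str) -> list:
    """Return the human-readable 'detail' column of each EXPLAIN QUERY PLAN row."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, amount REAL)")
conn.execute("CREATE INDEX idx_orders_created ON orders (created_at)")

# Wrapping the indexed column in a function hides it from the index.
with_function = plan(
    conn, "SELECT amount FROM orders WHERE strftime('%Y', created_at) = '2014'"
)

# The equivalent half-open date range leaves the column bare, so the index applies.
as_range = plan(
    conn,
    "SELECT amount FROM orders "
    "WHERE created_at >= '2014-01-01' AND created_at < '2015-01-01'",
)

print(with_function)
print(as_range)
```

The range form relies on dates being stored as ISO-8601 text (or a native date type in MySQL), which sorts in chronological order.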

January 2015 HUG: Using HBase Co-Processors to Build a Distributed, Transacti...

Yahoo Developer Network

Viewers also liked (12)

MariaDB for developers

Colin Charles: Learn about the MariaDB 10 features that exist for developers: microseconds, virtual columns, PCRE regular expressions, DELETE ... RETURNING, geospatial extensions (GIS) and dynamic columns, plus use cases and a hint of storage engines.

MariaDB for Developers and Operators (DevOps)

Colin Charles: MariaDB features that both developers and operators will benefit from, with a focus on the 10.0 release. This deck was tailored to a Red Hat Summit/DevNation audience.

Open11 maria db the new m in lamp

Colin Charles:

- MariaDB is a community-developed fork of MySQL that is fully compatible and intended as a drop-in replacement. It aims to be stable, high-performance, and feature-enhanced compared to MySQL.

- Major new features in MariaDB 5.1 include storage engines like XtraDB and PBXT, extended slow query log statistics, and bug fixes. MariaDB 5.2 includes virtual columns, pluggable authentication, and optimizations.

- The MariaDB community is open source and welcomes contributions beyond just coding, such as writing documentation, testing, and evangelism.

MariaDB 10.0 - SkySQL Paris Meetup

MariaDB Corporation: This document summarizes a talk given by Michael "Monty" Widenius about reasons to switch to MariaDB 10.0 from MySQL 5.5 or MariaDB 5.5. The talk addresses why MariaDB was created, features of MariaDB releases, benchmarks, the role of the MariaDB foundation, and reasons to switch. It provides information on the MariaDB foundation goals of developing and distributing MariaDB openly. It outlines many new features in MariaDB 10.0 including new storage engines, replication features, functionality, and improvements in areas like speed, optimization, and usability.

MariaDB: The 2012 Edition

Colin Charles: MariaDB is a community developed branch of MySQL that is feature enhanced and backward compatible. It aims to be a 100% drop-in replacement for MySQL that is stable, bug-free, and released under the GPLv2 license. Major releases of MariaDB include new storage engines like XtraDB and Aria, as well as new features for performance, scalability, and compatibility. MariaDB is developed as an open source project and supported by Monty Program and other community contributors and service providers.

State of MariaDB

Monty Program: The MariaDB update for 2011 from Michael Widenius of Monty Program. This was a keynote given on Wednesday 13 April 2011 at the O'Reilly MySQL Conference & Expo 2011.

Lessons from database failures

Colin Charles: Presented at the MySQL Chicago Meetup in August 2016. The focus of the talk is on backups and verification, replication and failover, as well as security and encryption.

MariaDB 10: A MySQL Replacement - HKOSC

Colin Charles: MariaDB 10: A MySQL Replacement, current up to 10.0.9. Presented at the Hong Kong Open Source Conference in Hong Kong, the weekend before the 10.0.10 GA release.

MySQL in the Cloud

Colin Charles: Today you can use MySQL in several clouds as a service, which is known as database as a service (DBaaS). Learn the differences, the access methods, and the level of control you have for the various cloud offerings, including:

- Amazon RDS

- Google Cloud SQL

- HPCloud DBaaS

- Rackspace OpenStack DBaaS

The administration tools and ideologies behind it are completely different, and you are in a "locked-down" environment. Some considerations include:

* Different backup strategies

* Planning for multiple data centres for availability

* Where do you host your application?

* How do you get the most performance out of the solution?

* What does this all cost?

Questions like these will be answered in the talk.

Lessons from {distributed,remote,virtual} communities and companies

Colin Charles: A last-minute talk for DevOps Amsterdam, held around the same time as O'Reilly Velocity Amsterdam 2016. It covers lessons one can learn from distributed/remote/virtual communities and companies, from someone who has spent a long time working remotely and distributed.

MariaDB Server Compatibility with MySQL

Colin Charles: Presented at the MariaDB Server Developer's meeting in Amsterdam on Oct 8, 2016. This deck covers what MariaDB Server 10.1/10.2 might be missing from MySQL versions up to 5.7, focusing on the compatibility of MariaDB Server with MySQL.

Forking Successfully - or is a branch better?

Colin Charles: Forking successfully, or do you think a branch will work better? Learn from history and see what's current. Presented at OSCON London 2016, this talk covers forking beyond the GitHub generation and, if you're going to do it, some tips on how you could be successful.


Similar to MySQL in the Hosted Cloud (20)

MySQL in the Hosted Cloud - Percona Live 2015

Colin Charles: This presentation covers running MySQL in the hosted cloud. It discusses various database as a service (DBaaS) options like Amazon RDS, Rackspace, and Google Cloud SQL. Key considerations for DBaaS include location, service level agreements, support options, available MySQL/MariaDB versions, access methods, configuration options, costs, and features like high availability and backups. Running MySQL on EC2 is also an option but requires more management of hardware, software, networking, storage and backups. Benchmarking and monitoring tools are recommended to evaluate performance and usage.

Databases in the hosted cloud

Colin Charles: Today you can use hosted MySQL/MariaDB/Percona Server from several "cloud providers" as a service, which is known as database as a service (DBaaS). You can also use hosted PostgreSQL and MongoDB through various service providers. Learn the differences, the access methods, and the level of control you have for the various public cloud offerings:

- Amazon RDS for MySQL and PostgreSQL

- Google Cloud SQL

- Rackspace OpenStack DBaaS

- The likes of compose.io, MongoLab and Rackspace's offerings around MongoDB

The administration tools and ideologies behind it are completely different, and you are in a "locked-down" environment. Some considerations include:

* Different backup strategies

* Planning for multiple data centres for availability

* Where do you host your application?

* How do you get the most performance out of the solution?

* What does this all cost?

Growth topics include:

* How do you move from one DBaaS to another?

* How do you move all this from DBaaS to your own hosted platform?

Questions like these will be answered in the talk. This talk will benefit experienced database administrators (DBAs) who now also have to deal with cloud deployments, as well as application developers in startups that have to rely on "managed services" without a DBA.

Databases in the hosted cloud

Colin Charles: Presented at OSCON 2018. A review of what is available for MySQL, MariaDB Server, MongoDB, PostgreSQL, and more, covering your choices, considerations, versions, access methods and cost, with a deeper look at RDS and whether you should run your own instances.

Databases in the Hosted Cloud

Colin Charles: With a focus on Amazon AWS RDS for MySQL and PostgreSQL, Rackspace Cloud, Google Cloud SQL, and Microsoft Azure for MySQL and PostgreSQL, as well as a hint of the other clouds.

MariaDB 10.1 what's new and what's coming in 10.2 - Tokyo MariaDB Meetup

Colin Charles: Presented at the Tokyo MariaDB Server meetup in July 2016, this is an overview of what you can see and use in MariaDB Server 10.1, but more importantly what is planned to arrive in 10.2.

Meet MariaDB 10.1 at the Bulgaria Web Summit

Colin Charles: Meet MariaDB 10.1 at the Bulgaria Web Summit, held in Sofia in February 2016. Learn all about MariaDB Server and new features like encryption, audit plugins, and more.

Cloudera Impala - Las Vegas Big Data Meetup Nov 5th 2014

cdmaxime: Maxime Dumas gives a presentation on Cloudera Impala, which provides fast SQL query capability for Apache Hadoop. Impala allows interactive queries on Hadoop data in seconds rather than minutes by using a native MPP query engine instead of MapReduce. It offers benefits like SQL support, performance from 3-4x up to 90x faster than MapReduce, and the flexibility to query existing Hadoop data without needing to migrate or duplicate it. The latest release, Impala 2.0, includes new features like window functions, subqueries, and spilling joins and aggregations to disk when memory is exhausted.

Google Cloud Platform, Compute Engine, and App Engine

Csaba Toth: An introduction to Google Cloud Platform's compute section: Google Compute Engine and Google App Engine. The talk places these technologies in the cloud service stack and then shows how Google blurs the boundaries of IaaS and PaaS.

Grails in the Cloud (2013)

Meni Lubetkin: This document discusses different cloud platforms for hosting Grails applications. It provides an overview of infrastructure as a service (IaaS) models like Amazon EC2 and shared/dedicated virtual private servers, as well as platform as a service (PaaS) options including Amazon Beanstalk, Google App Engine, Heroku, Cloud Foundry, and Jelastic. A comparison chart evaluates these platforms based on factors such as pricing, control, reliability, and scalability. The document emphasizes that competition and changes in the cloud space are rapid and recommends keeping applications loosely coupled and testing platforms using free trials.

MariaDB - a MySQL Replacement #SELF2014

Colin Charles: MariaDB - a MySQL replacement at South East Linux Fest 2014 (SELF2014). Learn about features that are not in MySQL 5.6, some that are only just coming in MySQL 5.7, and some that just don't exist.

Maria db 10 and the mariadb foundation(colin)

kayokogoto: This document provides an overview of MariaDB 10 and the MariaDB Foundation. It discusses the history and development of MariaDB, including key features added in versions 5.1 through 10.0 such as new storage engines, performance improvements, and features backported from MySQL. It outlines the goals of MariaDB to be compatible with MySQL while adding new features, and describes the community-led development model. The roadmap aims to have MariaDB be a drop-in replacement for MySQL 5.6 by releasing version 10.1.

MySQL Ecosystem in 2023 - FOSSASIA'23 - Alkin.pptx.pdf

Alkin Tezuysal: MySQL is still hot, with Percona XtraDB Cluster (PXC) and MariaDB Server. Welcome back post-pandemic to see what is on offer in the current ecosystem.

Did you know that Amazon RDS now uses semi-sync replication rather than DRBD for multi-AZ deployments? Did you know that Galera Cluster for MySQL 8 is much more efficient with CLONE SST than with the xtrabackup method for SST? Did you know that Percona Server continues to extend MyRocks? Did you know that MariaDB Server has more Oracle syntax compatibility? This and more will be covered in the session, which, while short and quick, should leave you eager to discover new features for production.

MySQL Ecosystem in 2020

Alkin Tezuysal:

* Use cases of MySQL, as well as edge cases of MySQL topologies, using real-life examples and "war" stories

* How scalability and proxy wars make MySQL topologies more robust to serve webscale shops

* Open-source tools, utilities, and the surrounding MySQL ecosystem.

The MySQL Server ecosystem in 2016

sys army: The document summarizes the history and current state of the MySQL database server ecosystem. It discusses the origins and development of MySQL, MariaDB, Percona Server, and other related projects. It also describes some of the key features and innovations in recent versions of these database servers. The ecosystem is very active with contributions from many organizations and the future remains promising with ongoing work.

VMworld 2013: Virtualizing Databases: Doing IT Right

VMworld

VMworld 2013

Michael Corey, Ntirety, Inc

Jeff Szastak, VMware

Learn more about VMworld and register at http://www.vmworld.com/index.jspa?src=socmed-vmworld-slideshare

The Complete MariaDB Server tutorial

Colin Charles: Presented at Percona Live Amsterdam 2016, this is an in-depth look at MariaDB Server up to MariaDB Server 10.1. Learn the differences, see what's already in MySQL, and more.

MariaDB - the "new" MySQL is 5 years old and everywhere (LinuxCon Europe 2015)

Colin Charles: MariaDB is like the "new" MySQL, and it's available everywhere. This talk was given at LinuxCon Europe in Dublin in October 2015. Learn about all the new features, with the release just around the corner. Changes in replication are also very interesting.

Webinar Slides: MySQL HA/DR/Geo-Scale - High Noon #4: MS Azure Database MySQL

Continuent: MS Azure Database for MySQL vs. Continuent Tungsten Clusters

Building a Geo-Scale, Multi-Region and Highly Available MySQL Cloud Back-End

This is the third of our High Noon series covering MySQL clustering solutions for high availability (HA), disaster recovery (DR), and geographic distribution.

Azure Database for MySQL is a managed database cluster within Microsoft Azure Cloud that runs MySQL community edition. There are two deployment options: “Single Server” and “Flexible Server (Preview).” We will look at the Flexible Server version, even though it is still in preview, because most enterprise applications require failover, which makes it the relevant comparison for Tungsten Clustering.

You may use Tungsten Clustering with native MySQL, MariaDB or Percona Server for MySQL in GCP, AWS, Azure, and/or on-premises data centers for better technological capabilities, control, and flexibility. But learn about the pros and cons!

Enjoy the webinar!

AGENDA

- Goals for the High Noon Webinar Series

- High Noon Series: Tungsten Clustering vs Others

- Microsoft Azure Database for MySQL

- Key Characteristics

- Certification-based Replication

- Azure MySQL Multi-Site Requirements

- Limitations Using Azure MySQL

- How to do better MySQL HA / DR / Geo-Scale?

- Azure MySQL vs Tungsten Clustering

- About Continuent & Its Solutions

PRESENTER

Matthew Lang - Customer Success Director – Americas, Continuent - has over 25 years of experience in database administration, database programming, and system architecture, including the creation of a database replication product that is still in use today. He has designed highly available, scalable systems that have allowed startups to quickly become enterprise organizations, utilizing a variety of technologies including open source projects, virtualization and cloud.

The MySQL Server ecosystem in 2016

Colin Charles: MySQL is a unique adult (now 21 years old) in many ways. It supports plugins. It supports storage engines. It is also owned by Oracle, thus birthing two branches of the popular open source database: Percona Server and MariaDB Server. It also once spawned a fork: Drizzle. Lately, a consortium of web-scale users (think a chunk of the top 10 sites out there) has spawned WebScaleSQL.

You're a busy DBA having to maintain a mix of this. Or you're a CIO planning to choose one branch. How do you go about picking? Supporting multiple databases? Find out more in this talk. Also covered is a deep-dive into what feature differences exist between MySQL/Percona Server/MariaDB/WebScaleSQL, how distributions package the various databases differently. Within the hour, you'll be informed about the past, the present, and hopefully be knowledgeable enough to know what to pick in the future.

Note, there will also be coverage of the various trees around WebScaleSQL, like the Facebook tree, the Alibaba tree as well as the Twitter tree.

Introduction to Kudu - StampedeCon 2016

StampedeCon: Over the past several years, the Hadoop ecosystem has made great strides in its real-time access capabilities, narrowing the gap compared to traditional database technologies. With systems such as Impala and Spark, analysts can now run complex queries or jobs over large datasets within a matter of seconds. With systems such as Apache HBase and Apache Phoenix, applications can achieve millisecond-scale random access to arbitrarily-sized datasets.

Despite these advances, some important gaps remain that prevent many applications from transitioning to Hadoop-based architectures. Users are often caught between a rock and a hard place: columnar formats such as Apache Parquet offer extremely fast scan rates for analytics, but little to no ability for real-time modification or row-by-row indexed access. Online systems such as HBase offer very fast random access, but scan rates that are too slow for large scale data warehousing workloads.

This talk will investigate the trade-offs between real-time transactional access and fast analytic performance from the perspective of storage engine internals. It will also describe Kudu, the new addition to the open source Hadoop ecosystem that fills the gap described above, complementing HDFS and HBase to provide a new option to achieve fast scans and fast random access from a single API.


More from Colin Charles (19)

Differences between MariaDB 10.3 & MySQL 8.0

Colin Charles: MySQL and MariaDB are becoming more divergent. Learn what is different at a high level. It is also a good idea to ensure that you use the correct database for the job.

What is MariaDB Server 10.3?

Colin Charles: MariaDB Server 10.3 is a culmination of features from MariaDB Server 10.2+10.1+10.0+5.5+5.3+5.2+5.1 as well as a base branch from MySQL 5.5 and backports from MySQL 5.6/5.7. It has many new features, like a GA-ready sharding engine (SPIDER), MyRocks, as well as some Oracle compatibility, system versioned tables and a whole lot more.

MySQL features missing in MariaDB Server

Colin Charles: MySQL features missing in MariaDB Server. Here's an overview from the New York developer's Unconference in February 2018. This is primarily aimed at the developers, to decide what goes into MariaDB 10.4, as opposed to users.

High-level comparisons are made with MySQL 5.6/5.7 and, of course, MySQL 8.0. Here's to ensuring MariaDB Server 10.3/10.4 has more "drop-in" compatibility.

The MySQL ecosystem - understanding it, not running away from it!

Colin Charles: You're a busy DBA thinking about having to maintain a mix of these. Or you're a CIO planning to choose one branch over another. How do you go about picking? Supporting multiple databases? Find out more in this talk. Also covered is a deep-dive into what feature differences exist between MySQL/Percona Server/MariaDB Server. Within 20 minutes, you'll leave informed and knowledgeable about what to pick.

A base blog post to get started: https://www.percona.com/blog/2017/11/02/mysql-vs-mariadb-reality-check/

Best practices for MySQL High Availability Tutorial

Colin Charles: Given at O'Reilly Velocity London in October 2017, this tutorial focuses on the current best practices for MySQL High Availability.

The Engineering That Goes into Making Percona Server for MySQL 5.6 and 5.7 (and a Hint of MongoDB)

Colin Charles: The engineering that goes into making Percona Server for MySQL 5.6 & 5.7 different (and a hint of MongoDB), for dbtechshowcase 2017. The slides include some Japanese to help a Japanese audience read them. If there are questions due to poor translation, do not hesitate to drop me an email or tweet: @bytebot

Capacity planning for your data stores

Colin Charles: Databases require capacity planning (for those coming from traditional RDBMS solutions, this can be thought of as a sizing guide). Capacity planning prevents resource exhaustion, but it can be hard. This talk leans heavily on MySQL, but the concepts and addendum will help with any other data store.

The Proxy Wars - MySQL Router, ProxySQL, MariaDB MaxScale

Colin Charles: This document discusses MySQL proxy technologies including MySQL Router, ProxySQL, and MariaDB MaxScale. It provides an overview of each technology, including when they were released, key features, and comparisons between them. ProxySQL is highlighted as a popular option currently with integration with Percona tools, while MySQL Router may become more widely used due to its support for MySQL InnoDB Cluster. MariaDB MaxScale is noted for its binlog routing capabilities. Overall the document aims to help people understand and choose between the different MySQL proxy options.

Securing your MySQL / MariaDB Server data

Colin Charles: Co-presented with Ronald Bradford, this covers MySQL, Percona Server, and MariaDB Server (since the latter can occasionally be different enough). It goes through insecure practices and focuses on communication security, connection security, data security, user accounts and server access security.

The MySQL Server Ecosystem in 2016

Colin Charles: This was a short 25-minute talk, but we go into a bit of the history of MySQL, how the branches and forks appeared, and what's sticking around today (branch? Percona Server. Fork? MariaDB Server). What should you use? Think about what you need today and what the roadmap holds.

Best practices for MySQL/MariaDB Server/Percona Server High Availability

Colin Charles: Best practices for MySQL/MariaDB Server/Percona Server High Availability - presented at Percona Live Amsterdam 2016. The focus is on picking the right High Availability solution, discussing replication, handling failure (yes, you can achieve a quick automatic failover), proxies (there are plenty), HA in the cloud/geographical redundancy, sharding solutions, how newer versions of MySQL help you, and what to watch for next.

Lessons from database failures

Colin Charles: Failure happens, and we can learn from it. We need to think about backups, but also verification of them. We should definitely make use of replication and think about automatic failover. And security is key, but don't forget that encryption is now available in MySQL, Percona Server and MariaDB Server.

Lessons from database failures

Colin Charles: This is my third iteration of the talk, presented in Tokyo, Japan for dbtechshowcase; the first was a keynote at rootconf.in in April 2016, followed by the MySQL meetup in New York. The focus is on database failures of the past, and how modern MySQL / MariaDB Server technologies could have helped avoid such failures. The talk covers backups and verification, replication and failover, and security and encryption.

My first moments with MongoDB

Colin Charles: An introduction to MongoDB from an experienced MySQL user and developer. There are differences, and we go through the What/Why/Who/Where of MongoDB, the "similarities" to the MySQL world like storage engines, how replication is a little more interesting with built-in sharding and automatic failover, backups, monitoring, DBaaS, going to production and finding more resources.

MariaDB Server & MySQL Security Essentials 2016

Colin Charles: This document summarizes a presentation on MariaDB/MySQL security essentials. The presentation covered historically insecure default configurations, privilege escalation vulnerabilities, access control best practices like limiting privileges to only what users need and removing unnecessary accounts. It also discussed authentication methods like SSL, PAM, Kerberos and audit plugins. Encryption at the table, tablespace and binary log level was explained as well. Preventing SQL injections and available security assessment tools were also mentioned.

Tuning Linux for your database FLOSSUK 2016

Colin Charles: Some best practices for tuning Linux for your database workloads. The focus is not just on MySQL or MariaDB Server but also on understanding the OS from hardware/cloud, I/O, filesystems, memory, CPU, network, and resources.

Distributions from the view a package

Colin Charles: Having spent more than the last decade as the main point of contact for distributions shipping MySQL, then MariaDB Server, I have found that working with distributions brings many challenges. Licensing changes (when MySQL moved the client libraries from LGPL to GPL with a FOSS Exception), ABI changes, the speed (or lack thereof) of distribution releases/freezes, supporting the software throughout the lifespan of the distribution, platform-specific bugs, and a lot more will be discussed in this talk. Let's not forget the politics. How do we decide "tiers" of importance for distributions? As a bonus, there will be a focus on how much effort it took to "replace" MySQL with MariaDB.

Benefits: if you're making a distribution, this is the point of view of the upstream package makers. Why are distribution statistics important to us? Do we monitor your bug system, or do you have a better way to escalate to us? How do we test to make sure things are going well before release? This and more will be discussed.

As an upstream project (package), we love nothing more than being available everywhere. But time and energy go into making this so, as there are quirks in every distribution.

Meet MariaDB Server 10.1 London MySQL meetup December 2015

Colin Charles: Meet MariaDB Server 10.1, the server that was released recently. Presented at the London MySQL Meetup in December 2015. Learn about the new features in MariaDB Server, especially what we did to improve security.

Cool MariaDB Plugins

Colin Charles: This document discusses MariaDB plugins and provides examples of several useful plugins, including authentication plugins, password validation plugins, SQL error logging, audit logging, query analysis, and more. It encourages contributing plugins to help extend MariaDB's functionality.


#AdminHour presents: Hour of Code2018 slide deck from 12/6/2018

#AdminHour presents: Hour of Code2018 slide deck from 12/6/2018Lynda Kane Slide Deck from the #AdminHour's Hour of Code session on 12/6/2018 that features learning to code using the Python Turtle library to create snowflakes

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Automation Dreamin': Capture User Feedback From Anywhere

Automation Dreamin': Capture User Feedback From AnywhereLynda Kane Slide Deck from Automation Dreamin' 2022 presentation Capture User Feedback from Anywhere

Automation Dreamin' 2022: Sharing Some Gratitude with Your Users

Automation Dreamin' 2022: Sharing Some Gratitude with Your UsersLynda Kane Slide Deck from Automation Dreamin'2022 presentation Sharing Some Gratitude with Your Users on creating a Flow to present a random statement of Gratitude to a User in Salesforce.

"Client Partnership — the Path to Exponential Growth for Companies Sized 50-5...

"Client Partnership — the Path to Exponential Growth for Companies Sized 50-5...Fwdays Why the "more leads, more sales" approach is not a silver bullet for a company.

Common symptoms of an ineffective Client Partnership (CP).

Key reasons why CP fails.

Step-by-step roadmap for building this function (processes, roles, metrics).

Business outcomes of CP implementation based on examples of companies sized 50-500.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value- driven proprietary service offering here.

tecnologias de las primeras civilizaciones.pdf

tecnologias de las primeras civilizaciones.pdffjgm517 descaripcion detallada del avance de las tecnologias en mesopotamia, egipto, roma y grecia.

"PHP and MySQL CRUD Operations for Student Management System"

"PHP and MySQL CRUD Operations for Student Management System"Jainul Musani Php with MySQL database Connectivity - CRUD Operations

Cyber Awareness overview for 2025 month of security

Cyber Awareness overview for 2025 month of securityriccardosl1 Cyber awareness training educates employees on risk associated with internet and malicious emails

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat The MCP (Model Context Protocol) is a framework designed to manage context and interaction within complex systems. This SlideShare presentation will provide a detailed overview of the MCP Model, its applications, and how it plays a crucial role in improving communication and decision-making in distributed systems. We will explore the key concepts behind the protocol, including the importance of context, data management, and how this model enhances system adaptability and responsiveness. Ideal for software developers, system architects, and IT professionals, this presentation will offer valuable insights into how the MCP Model can streamline workflows, improve efficiency, and create more intuitive systems for a wide range of use cases.

Automation Hour 1/28/2022: Capture User Feedback from Anywhere

Automation Hour 1/28/2022: Capture User Feedback from AnywhereLynda Kane Slide Deck from Automation Hour 1/28/2022 presentation Capture User Feedback from Anywhere presenting setting up a Custom Object and Flow to collection User Feedback in Dynamic Pages and schedule a report to act on that feedback regularly.

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat

MySQL in the Hosted Cloud

- 1. MySQL in the Hosted Cloud
  - Colin Charles, MariaDB Corporation Ab
  - http://mariadb.com/ | http://mariadb.org/
  - http://bytebot.net/blog/ | @bytebot on Twitter
  - Percona Live London, United Kingdom, 4 November 2014

- 2. whoami
  - Work on MariaDB at MariaDB Corporation (formerly SkySQL Ab)
  - Merged with Monty Program Ab, makers of MariaDB
  - Formerly at MySQL AB (exit: Sun Microsystems)
  - Past lives include the Fedora Project (FESCo) and OpenOffice.org

- 3. Agenda
  - MySQL as a service offering (DBaaS)
  - Choices
  - Considerations
  - MySQL versions & access
  - Costs
  - Deeper into RDS
  - Should you run this on EC2 or an equivalent?
  - Conclusion

- 4. MySQL as a service
  - Database as a Service (DBaaS)
  - MySQL available on demand, without installing or configuring any hardware or software
  - Pay-per-use pricing
  - The provider maintains MySQL; you don’t maintain, upgrade, or administer the database yourself

- 5. New way of deployment
  - Enter a credit card number
  - Call the API (or use the GUI):
    - `ec2-run-instances ami-xxx -k ${EC2_KEYPAIR} -t m1.large`
    - `nova boot --image centos6-x86_64 --flavor m1.large db1`
  - Image credit: http://www.flickr.com/photos/68751915@N05/6280507539/
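The commands above launch raw compute. With a DBaaS, the equivalent API call creates the database itself. Below is a minimal Python sketch of creating a MySQL instance on Amazon RDS. It assumes boto3 is installed and AWS credentials are configured. The identifier, instance class, storage size, region, and credentials are all hypothetical placeholders. Only the request builder runs without network access.

```python
from typing import Any, Dict


def rds_mysql_request(identifier: str,
                      instance_class: str = "db.m3.large",
                      storage_gb: int = 100,
                      multi_az: bool = True) -> Dict[str, Any]:
    """Build keyword arguments for boto3's RDS create_db_instance call.

    All default values here are hypothetical placeholders.
    """
    return {
        "DBInstanceIdentifier": identifier,
        "DBInstanceClass": instance_class,
        "Engine": "mysql",
        "AllocatedStorage": storage_gb,
        "MasterUsername": "admin",          # hypothetical master user
        "MasterUserPassword": "change-me",  # placeholder: load real secrets from a vault
        "MultiAZ": multi_az,                # keep a standby in a second Availability Zone
    }


def launch(identifier: str, region: str = "us-east-1") -> None:
    """Send the request to RDS (needs boto3 plus AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    rds = boto3.client("rds", region_name=region)
    rds.create_db_instance(**rds_mysql_request(identifier))


if __name__ == "__main__":
    # Print the request rather than calling launch(), which would bill your account.
    print(rds_mysql_request("db1"))
```

The request is built separately from the API call. That way the parameters can be reviewed or tested before anything is provisioned.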

- 6. Why DBaaS?
  - “Couldn’t we just have a few more servers to handle the traffic spike during the elections?”
  - Don’t have many DBAs? Optimise for operational ease
  - Rapid deployment & scale-out

- 7. Your choices today
  - Amazon Web Services Relational Database Service (RDS)
  - Rackspace Cloud Databases
  - Google Cloud SQL
  - HP Helion Public Cloud Relational DB

- 8. There are more
  - Jelastic: PaaS offering MySQL and MariaDB
  - ClearDB: MySQL, partnered with the Heroku, AppFog, and Azure clouds
  - Joyent: image offers Percona Server
  - Xeround: shut down on only two weeks’ notice

- 9. Whom we won’t be covering
  - GenieDB: globally distributed MySQL as a service with master-master replication; works on EC2, Rackspace, Google Compute Engine, and HP Cloud
  - ScaleDB: promises write scaling, HA clustering, etc., replacing InnoDB/MyISAM

- 10. Regions & Availability Zones
  - Region: a data centre location containing multiple Availability Zones
  - Availability Zone (AZ): isolated from failures in other AZs, with low-latency network connectivity to the other AZs in the same region
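The region/AZ split matters most when deciding where a standby copy of the database lives. The sketch below uses a hypothetical, hard-coded topology. The zone names follow AWS's `us-east-1a` style but are illustrative only. The rule it encodes is simple. Keep the primary and the standby in the same region, so the link between them is low-latency. Put them in different AZs, so one zone's failure does not take out both.

```python
from typing import Dict, List, Tuple

# Hypothetical topology: region -> its Availability Zones.
REGIONS: Dict[str, List[str]] = {
    "us-east-1": ["us-east-1a", "us-east-1b", "us-east-1c"],
    "eu-west-1": ["eu-west-1a", "eu-west-1b", "eu-west-1c"],
}


def place_primary_and_standby(region: str) -> Tuple[str, str]:
    """Return two distinct AZs in one region for a primary and its standby.

    Same region: low-latency links between the two copies.
    Different AZs: isolated from each other's failures.
    """
    zones = REGIONS[region]
    if len(zones) < 2:
        raise ValueError(f"{region} has fewer than two AZs; no isolated standby possible")
    return zones[0], zones[1]


if __name__ == "__main__":
    primary, standby = place_primary_and_standby("us-east-1")
    print(f"primary in {primary}, standby in {standby}")
```

Spreading the two copies across regions instead would also protect against a region-wide outage. The cost is higher replication latency.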

- 11. Location, location, location
  - AWS RDS: US East (N. Virginia), US West (Oregon), US West (N. California), EU (Ireland, Frankfurt), APAC (Singapore, Tokyo, Sydney), South America (São Paulo), GovCloud
  - Rackspace: USA (Dallas DFW, Chicago ORD, N. Virginia IAD), APAC (Sydney, Hong Kong), EU (London)*
  - Google Cloud SQL: US, EU, Asia
  - HP Cloud: US-East (Virginia), US-West

- 12. Service Level Agreements (SLA)
  - AWS: 99.95% in a calendar month
  - Rackspace: 99.9% in a calendar month
  - Google: 99.95% in a calendar month
  - HP Cloud: no DB-specific SLA; 99.95% in a calendar month
  - SLAs exclude “scheduled maintenance”, which may suspend storage I/O and elevate latency
  - AWS allows 30 minutes/week of maintenance, so the effective figure is really about 99.65%
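The 99.65% figure comes from counting the weekly maintenance window as downtime on top of what the SLA already permits. Here is a worked version of that arithmetic. It assumes a 30-day month (43,200 minutes) and that the full 30-minute window is used every week.

```python
def effective_availability(sla_pct: float, maintenance_min_per_week: float,
                           days: int = 30) -> float:
    """Availability once excluded weekly maintenance is counted as downtime."""
    minutes = days * 24 * 60                             # 43,200 for a 30-day month
    sla_downtime = minutes * (1 - sla_pct / 100)         # downtime the SLA allows
    maintenance = maintenance_min_per_week * days / 7    # weekly window, prorated
    return 100 * (1 - (sla_downtime + maintenance) / minutes)


print(f"{effective_availability(99.95, 30):.2f}%")  # prints 99.65%
```

The two components break down as follows:
- About 21.6 minutes of downtime permitted by the SLA.
- Roughly 128.6 minutes of maintenance (30 minutes × 30/7 weeks).

Together that is about 150 minutes a month, or 0.35% of the month.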

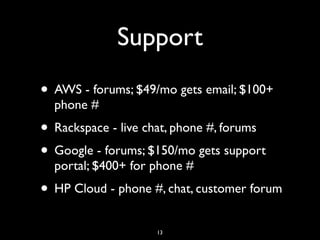

- 13. Support
  - AWS: forums; $49/mo gets email support; $100+/mo for phone support
  - Rackspace: live chat, phone, forums
  - Google: forums; $150/mo gets the support portal; $400+/mo for phone support
  - HP Cloud: phone, chat, customer forum

- 14. Who manages this?
  - AWS: self-management, or Enterprise support ($15k+)
  - Rackspace: $100 + 0.04 cents/hr over regular pricing
  - Google: self-management
  - HP Cloud: self-management

- 15. MySQL versions
  - AWS: MySQL Community 5.1, 5.5, 5.6
  - Rackspace: MariaDB 10, MySQL 5.6/5.1, Percona Server 5.6
  - Google: MySQL Community 5.5, 5.6 (preview)
  - HP Cloud: Percona Server 5.5.28

- 16. Access methods
  - AWS: within Amazon, externally via the mysql client, API access
  - Rackspace: private hostname within the Rackspace network, API access
  - Google: within App Engine, a command-line Java tool (gcutil), the standard mysql client
  - HP Cloud: within HP Cloud, externally via clients (trove-cli, reddwarf), API access, the mysql client

- 17. Can you configure MySQL?
  - You don’t get direct access to my.cnf
  - In AWS, parameter groups let you configure MySQL
  - Source: http://www.mysqlperformanceblog.com/2013/08/21/amazon-rds-with-mysql-5-6-configuration-variables/
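Since there is no `my.cnf` to edit, configuration changes go through the parameter group API instead. The sketch below converts my.cnf-style name/value pairs into the `Parameters` list that boto3's `modify_db_parameter_group` accepts. It assumes the following:
- boto3 is installed.
- A custom parameter group already exists; the default groups cannot be modified.
- The setting values shown are hypothetical.

```python
from typing import Dict, List


def to_parameter_list(settings: Dict[str, str],
                      apply_method: str = "pending-reboot") -> List[Dict[str, str]]:
    """Convert my.cnf-style name/value pairs into RDS parameter entries.

    Static parameters only take effect after a reboot ("pending-reboot");
    dynamic ones may also use "immediate".
    """
    if apply_method not in ("immediate", "pending-reboot"):
        raise ValueError("apply_method must be 'immediate' or 'pending-reboot'")
    return [
        {"ParameterName": name, "ParameterValue": value, "ApplyMethod": apply_method}
        for name, value in sorted(settings.items())
    ]


def apply_settings(group_name: str, settings: Dict[str, str]) -> None:
    """Push the settings to a custom parameter group (needs boto3 + credentials)."""
    import boto3

    rds = boto3.client("rds")
    rds.modify_db_parameter_group(DBParameterGroupName=group_name,
                                  Parameters=to_parameter_list(settings))


if __name__ == "__main__":
    # Hypothetical values, equivalent to two lines in a [mysqld] section.
    print(to_parameter_list({"max_connections": "500", "long_query_time": "1"}))
```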

- 18. Cost
  - Subscribe to the relevant newsletters for your services
  - Costs change rapidly, and new instance types and features (e.g. provisioned IOPS) keep appearing
  - Don’t forget network access costs
  - Monitor your costs daily, or hourly if possible (EC2 instances can have spot pricing)
  - https://github.com/ronaldbradford/aws
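One way to act on “monitor your costs daily” is to flag any day whose spend jumps well above normal. The sketch below assumes the response shape of AWS Cost Explorer's `get_cost_and_usage`, requested with `Granularity='DAILY'` and `Metrics=['UnblendedCost']`. The sample figures and the 1.5× threshold are made up for illustration.

```python
from statistics import median
from typing import List, Tuple


def daily_costs(response: dict) -> List[Tuple[str, float]]:
    """Extract (date, cost) pairs from a daily get_cost_and_usage response."""
    return [
        (period["TimePeriod"]["Start"],
         float(period["Total"]["UnblendedCost"]["Amount"]))
        for period in response["ResultsByTime"]
    ]


def spikes(costs: List[Tuple[str, float]], factor: float = 1.5) -> List[str]:
    """Return the days whose spend exceeds `factor` times the median daily spend."""
    baseline = median([cost for _, cost in costs])
    return [day for day, cost in costs if cost > factor * baseline]


if __name__ == "__main__":
    # Made-up sample in the Cost Explorer response shape.
    sample = {"ResultsByTime": [
        {"TimePeriod": {"Start": d}, "Total": {"UnblendedCost": {"Amount": a}}}
        for d, a in [("2014-11-01", "10.0"), ("2014-11-02", "11.0"),
                     ("2014-11-03", "10.0"), ("2014-11-04", "30.0")]
    ]}
    print(spikes(daily_costs(sample)))  # ['2014-11-04']
```

Comparing against the median rather than the mean keeps a single expensive day from raising the baseline it is measured against.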

- 19. Costs: AWS
  - AWS prices vary between regions
  - http://aws.amazon.com/rds/pricing/

- 20. Costs: AWS II
  - Medium instance (3.75GB): useful for testing ($1,577/yr vs $2,411/yr in 2013)
  - Large instance (7.5GB): production-ready ($3,241/yr vs $4,777/yr in 2013)
  - m3.2XL (30GB, 8 vCPUs): $12,964/yr
  - XL instance (15GB, 8 ECUs): $9,555/yr

- 21. Costs: Rackspace
  - Option of a regular Cloud Database or Managed Instances
  - 4GB instance (testing): $2,102/yr (vs $3,504/yr in 2013)
  - 8GB instance (production): $4,205/yr (vs $6,658/yr in 2013)
  - Consider the I/O priority and the actual TPS you get
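The year-on-year comparisons on the AWS and Rackspace cost slides are easier to read as percentages. The calculation below runs over the quoted annual prices. The figures are the slides' own; the percentage is simply (old - new) / old.

```python
# (instance, annual price in 2013, annual price at the time of the talk), from the slides
QUOTES = [
    ("AWS Medium (3.75GB)", 2411, 1577),
    ("AWS Large (7.5GB)", 4777, 3241),
    ("Rackspace 4GB", 3504, 2102),
    ("Rackspace 8GB", 6658, 4205),
]


def pct_drop(old: float, new: float) -> float:
    """Percentage reduction from old to new."""
    return 100 * (old - new) / old


for name, old, new in QUOTES:
    print(f"{name}: {pct_drop(old, new):.1f}% cheaper than in 2013")
```

The results are:
- AWS: roughly 34.6% (Medium) and 32.2% (Large).
- Rackspace: 40.0% (4GB) and 36.8% (8GB).

That is a concrete measure of how quickly these prices moved within a single year.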

- 22. Costs: Google
  - You must enable billing before you create Cloud SQL instances
  - https://developers.google.com/cloud-sql/docs/billing
  - Testing (D8, 4GB RAM): $4,274.15/yr
  - XL equivalent for production (D16, 8GB RAM): $8,548.30/yr
  - Package billing plans are cheaper than per-use billing plans

- 23. Costs: HP Cloud
  - 50% off pricing while in public beta
  - 4GB RAM, 60GB storage: $1,752/yr (usual: $3,504/yr)
  - 8GB RAM, 120GB storage: $3,504/yr (usual: $7,008/yr)
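The HP Cloud figures are consistent with a flat hourly rate billed around the clock. The sketch below annualises an hourly price over 24 × 365 = 8,760 hours and applies the 50% beta discount. The $0.40 and $0.80 hourly rates are back-calculated from the slide's annual figures; they are not quoted prices.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; assumes the instance runs all year


def annual_cost(hourly_rate: float, discount: float = 0.0) -> float:
    """Annual cost of an always-on instance, after a fractional discount."""
    return round(hourly_rate * HOURS_PER_YEAR * (1 - discount), 2)


# Hourly rates inferred from the slide's "usual" annual prices (assumption).
for ram_gb, hourly in [(4, 0.40), (8, 0.80)]:
    usual = annual_cost(hourly)
    beta = annual_cost(hourly, discount=0.5)
    print(f"{ram_gb}GB: usual ${usual:,.0f}/yr, beta ${beta:,.0f}/yr")
```

Normalising everything to an annual, always-on figure makes it possible to compare providers that quote hourly, daily, or monthly prices. It does not account for instances that are stopped part of the time.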

- 24. Where do you host your application?
  - Typically within the compute clusters of the provider whose DBaaS you use
  - This also limits your language choices to what the platform offers (e.g. App Engine only offers Java, Python, PHP, Go)