Native Support of Prometheus Monitoring in Apache Spark 3.0

All production environments require monitoring and alerting. Apache Spark has a configurable metrics system that lets users report Spark metrics to a variety of sinks. Prometheus is a popular open-source monitoring and alerting toolkit that is often used together with Apache Spark.

![Select a single Spark app: rate(metrics_executor_totalTasks_total{...}[1m])](https://image.slidesharecdn.com/406dongjoonhyundbtsai-200627154223/85/Native-Support-of-Prometheus-Monitoring-in-Apache-Spark-3-0-33-320.jpg)
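The query on this slide applies Prometheus's `rate()` to a task counter over a one-minute window. As a rough illustration of what that computes, the sketch below averages the per-second increase of a counter across a list of samples and treats any drop as a counter reset. It is a simplification, not Prometheus's exact algorithm (which also extrapolates to the window boundaries), and the function name `simple_rate` and the sample values are hypothetical.

```python
from typing import List, Tuple


def simple_rate(samples: List[Tuple[float, float]]) -> float:
    """Average per-second increase of a counter.

    samples: (timestamp_seconds, counter_value) pairs, ordered by time.
    Simplified sketch: no extrapolation to window boundaries.
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # A drop in value is treated as a counter reset, so the new
        # value is counted as growth from zero.
        increase += (cur - prev) if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0


# Hypothetical scrapes of a total-tasks counter, 15 seconds apart.
window = [(0.0, 100.0), (15.0, 130.0), (30.0, 160.0), (45.0, 190.0), (60.0, 220.0)]
print(simple_rate(window))
```

With these made-up samples the counter grows by 120 tasks in 60 seconds, so the result is in tasks per second for that window.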

![Monitoring streaming job behavior (1/2)](https://image.slidesharecdn.com/406dongjoonhyundbtsai-200627154223/85/Native-Support-of-Prometheus-Monitoring-in-Apache-Spark-3-0-36-320.jpg)

Set spark.sql.streaming.metricsEnabled=true (default: false)

Metrics

• latency

• inputRate-total

• processingRate-total

• states-rowsTotal

• states-usedBytes

• eventTime-watermark

Prefix of streaming query metric names

• metrics_[namespace]_spark_streaming_[queryName]
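To make the naming pattern on this slide concrete, here is a minimal sketch that builds the `metrics_[namespace]_spark_streaming_[queryName]` prefix and appends each listed metric. It assumes that characters which are not letters or digits (such as the hyphen in `inputRate-total`) are replaced with underscores on export; that replacement rule, the helper names, and the example namespace and query name are assumptions for illustration, and the names actually exposed by Spark may carry additional suffixes.

```python
import re
from typing import List

# Streaming metrics listed on the slide.
STREAMING_METRICS = [
    "latency",
    "inputRate-total",
    "processingRate-total",
    "states-rowsTotal",
    "states-usedBytes",
    "eventTime-watermark",
]


def sanitize(part: str) -> str:
    # Assumption: non-alphanumeric characters become underscores.
    return re.sub(r"[^a-zA-Z0-9]", "_", part)


def streaming_metric_prefix(namespace: str, query_name: str) -> str:
    # Follows the pattern metrics_[namespace]_spark_streaming_[queryName].
    return f"metrics_{sanitize(namespace)}_spark_streaming_{sanitize(query_name)}"


def streaming_metric_names(namespace: str, query_name: str) -> List[str]:
    prefix = streaming_metric_prefix(namespace, query_name)
    return [f"{prefix}_{sanitize(metric)}" for metric in STREAMING_METRICS]


# Hypothetical namespace and query name.
for name in streaming_metric_names("myapp", "events"):
    print(name)
```

These metrics are only reported when `spark.sql.streaming.metricsEnabled` is set to `true`, as the slide notes, and the query needs a name for `[queryName]` to be meaningful.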

Ad

Recommended

Physical Plans in Spark SQL

Physical Plans in Spark SQLDatabricks In Spark SQL the physical plan provides the fundamental information about the execution of the query. The objective of this talk is to convey understanding and familiarity of query plans in Spark SQL, and use that knowledge to achieve better performance of Apache Spark queries. We will walk you through the most common operators you might find in the query plan and explain some relevant information that can be useful in order to understand some details about the execution. If you understand the query plan, you can look for the weak spot and try to rewrite the query to achieve a more optimal plan that leads to more efficient execution.

The main content of this talk is based on Spark source code but it will reflect some real-life queries that we run while processing data. We will show some examples of query plans and explain how to interpret them and what information can be taken from them. We will also describe what is happening under the hood when the plan is generated focusing mainly on the phase of physical planning. In general, in this talk we want to share what we have learned from both Spark source code and real-life queries that we run in our daily data processing.

Deep Dive: Memory Management in Apache Spark

Deep Dive: Memory Management in Apache SparkDatabricks Memory management is at the heart of any data-intensive system. Spark, in particular, must arbitrate memory allocation between two main use cases: buffering intermediate data for processing (execution) and caching user data (storage). This talk will take a deep dive through the memory management designs adopted in Spark since its inception and discuss their performance and usability implications for the end user.

Memory Management in Apache Spark

Memory Management in Apache SparkDatabricks Memory management is at the heart of any data-intensive system. Spark, in particular, must arbitrate memory allocation between two main use cases: buffering intermediate data for processing (execution) and caching user data (storage). This talk will take a deep dive through the memory management designs adopted in Spark since its inception and discuss their performance and usability implications for the end user.

Designing ETL Pipelines with Structured Streaming and Delta Lake—How to Archi...

Designing ETL Pipelines with Structured Streaming and Delta Lake—How to Archi...Databricks Structured Streaming has proven to be the best platform for building distributed stream processing applications. Its unified SQL/Dataset/DataFrame APIs and Spark’s built-in functions make it easy for developers to express complex computations. Delta Lake, on the other hand, is the best way to store structured data because it is a open-source storage layer that brings ACID transactions to Apache Spark and big data workloads Together, these can make it very easy to build pipelines in many common scenarios. However, expressing the business logic is only part of the larger problem of building end-to-end streaming pipelines that interact with a complex ecosystem of storage systems and workloads. It is important for the developer to truly understand the business problem that needs to be solved. Apache Spark, being a unified analytics engine doing both batch and stream processing, often provides multiples ways to solve the same problem. So understanding the requirements carefully helps you to architect your pipeline that solves your business needs in the most resource efficient manner.

In this talk, I am going examine a number common streaming design patterns in the context of the following questions.

WHAT are you trying to consume? What are you trying to produce? What is the final output that the business wants? What are your throughput and latency requirements?

WHY do you really have those requirements? Would solving the requirements of the individual pipeline actually solve your end-to-end business requirements?

HOW are going to architect the solution? And how much are you willing to pay for it?

Clarity in understanding the ‘what and why’ of any problem can automatically much clarity on the ‘how’ to architect it using Structured Streaming and, in many cases, Delta Lake.

Patroni - HA PostgreSQL made easy

Patroni - HA PostgreSQL made easyAlexander Kukushkin This document discusses Patroni, an open-source tool for managing high availability PostgreSQL clusters. It describes how Patroni uses a distributed configuration system like Etcd or Zookeeper to provide automated failover for PostgreSQL databases. Key features of Patroni include manual and scheduled failover, synchronous replication, dynamic configuration updates, and integration with backup tools like WAL-E. The document also covers some of the challenges of building automatic failover systems and how Patroni addresses issues like choosing a new master node and reattaching failed nodes.

Dynamic Partition Pruning in Apache Spark

Dynamic Partition Pruning in Apache SparkDatabricks In data analytics frameworks such as Spark it is important to detect and avoid scanning data that is irrelevant to the executed query, an optimization which is known as partition pruning. Dynamic partition pruning occurs when the optimizer is unable to identify at parse time the partitions it has to eliminate. In particular, we consider a star schema which consists of one or multiple fact tables referencing any number of dimension tables. In such join operations, we can prune the partitions the join reads from a fact table by identifying those partitions that result from filtering the dimension tables. In this talk we present a mechanism for performing dynamic partition pruning at runtime by reusing the dimension table broadcast results in hash joins and we show significant improvements for most TPCDS queries.

A Deep Dive into Spark SQL's Catalyst Optimizer with Yin Huai

A Deep Dive into Spark SQL's Catalyst Optimizer with Yin HuaiDatabricks Catalyst is becoming one of the most important components of Apache Spark, as it underpins all the major new APIs in Spark 2.0 and later versions, from DataFrames and Datasets to Streaming. At its core, Catalyst is a general library for manipulating trees.

In this talk, Yin explores a modular compiler frontend for Spark based on this library that includes a query analyzer, optimizer, and an execution planner. Yin offers a deep dive into Spark SQL’s Catalyst optimizer, introducing the core concepts of Catalyst and demonstrating how developers can extend it. You’ll leave with a deeper understanding of how Spark analyzes, optimizes, and plans a user’s query.

Scaling your Data Pipelines with Apache Spark on Kubernetes

Scaling your Data Pipelines with Apache Spark on KubernetesDatabricks There is no doubt Kubernetes has emerged as the next generation of cloud native infrastructure to support a wide variety of distributed workloads. Apache Spark has evolved to run both Machine Learning and large scale analytics workloads. There is growing interest in running Apache Spark natively on Kubernetes. By combining the flexibility of Kubernetes and scalable data processing with Apache Spark, you can run any data and machine pipelines on this infrastructure while effectively utilizing resources at disposal.

In this talk, Rajesh Thallam and Sougata Biswas will share how to effectively run your Apache Spark applications on Google Kubernetes Engine (GKE) and Google Cloud Dataproc, orchestrate the data and machine learning pipelines with managed Apache Airflow on GKE (Google Cloud Composer). Following topics will be covered: – Understanding key traits of Apache Spark on Kubernetes- Things to know when running Apache Spark on Kubernetes such as autoscaling- Demonstrate running analytics pipelines on Apache Spark orchestrated with Apache Airflow on Kubernetes cluster.

Parquet performance tuning: the missing guide

Parquet performance tuning: the missing guideRyan Blue Parquet performance tuning focuses on optimizing Parquet reads by leveraging columnar organization, encoding, and filtering techniques. Statistics and dictionary filtering can eliminate unnecessary data reads by filtering at the row group and page levels. However, these optimizations require columns to be sorted and fully dictionary encoded within files. Increasing dictionary size thresholds and decreasing row group sizes can help avoid dictionary encoding fallback and improve filtering effectiveness. Future work may include new encodings, compression algorithms like Brotli, and page-level filtering in the Parquet format.

Tuning Apache Spark for Large-Scale Workloads Gaoxiang Liu and Sital Kedia

Tuning Apache Spark for Large-Scale Workloads Gaoxiang Liu and Sital KediaDatabricks Apache Spark is a fast and flexible compute engine for a variety of diverse workloads. Optimizing performance for different applications often requires an understanding of Spark internals and can be challenging for Spark application developers. In this session, learn how Facebook tunes Spark to run large-scale workloads reliably and efficiently. The speakers will begin by explaining the various tools and techniques they use to discover performance bottlenecks in Spark jobs. Next, you’ll hear about important configuration parameters and their experiments tuning these parameters on large-scale production workload. You’ll also learn about Facebook’s new efforts towards automatically tuning several important configurations based on nature of the workload. The speakers will conclude by sharing their results with automatic tuning and future directions for the project.ing several important configurations based on nature of the workload. We will conclude by sharing our result with automatic tuning and future directions for the project.

Improving SparkSQL Performance by 30%: How We Optimize Parquet Pushdown and P...

Improving SparkSQL Performance by 30%: How We Optimize Parquet Pushdown and P...Databricks The document discusses optimizations made to Spark SQL performance when working with Parquet files at ByteDance. It describes how Spark originally reads Parquet files and identifies two main areas for optimization: Parquet filter pushdown and the Parquet reader. For filter pushdown, sorting columns improved statistics and reduced data reads by 30%. For the reader, splitting it to first filter then read other columns prevented loading unnecessary data. These changes improved Spark SQL performance at ByteDance without changing jobs.

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the CloudNoritaka Sekiyama This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

The Parquet Format and Performance Optimization Opportunities

The Parquet Format and Performance Optimization OpportunitiesDatabricks The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves both as an approachable refresher on columnar storage as well as a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.

Deep Dive into Spark SQL with Advanced Performance Tuning with Xiao Li & Wenc...

Deep Dive into Spark SQL with Advanced Performance Tuning with Xiao Li & Wenc...Databricks Spark SQL is a highly scalable and efficient relational processing engine with ease-to-use APIs and mid-query fault tolerance. It is a core module of Apache Spark. Spark SQL can process, integrate and analyze the data from diverse data sources (e.g., Hive, Cassandra, Kafka and Oracle) and file formats (e.g., Parquet, ORC, CSV, and JSON). This talk will dive into the technical details of SparkSQL spanning the entire lifecycle of a query execution. The audience will get a deeper understanding of Spark SQL and understand how to tune Spark SQL performance.

How to build a streaming Lakehouse with Flink, Kafka, and Hudi

How to build a streaming Lakehouse with Flink, Kafka, and HudiFlink Forward Flink Forward San Francisco 2022.

With a real-time processing engine like Flink and a transactional storage layer like Hudi, it has never been easier to build end-to-end low-latency data platforms connecting sources like Kafka to data lake storage. Come learn how to blend Lakehouse architectural patterns with real-time processing pipelines with Flink and Hudi. We will dive deep on how Flink can leverage the newest features of Hudi like multi-modal indexing that dramatically improves query and write performance, data skipping that reduces the query latency by 10x for large datasets, and many more innovations unique to Flink and Hudi.

by

Ethan Guo & Kyle Weller

Processing Large Data with Apache Spark -- HasGeek

Processing Large Data with Apache Spark -- HasGeekVenkata Naga Ravi Apache Spark presentation at HasGeek FifthElelephant

https://fifthelephant.talkfunnel.com/2015/15-processing-large-data-with-apache-spark

Covering Big Data Overview, Spark Overview, Spark Internals and its supported libraries

Flink Forward San Francisco 2019: Moving from Lambda and Kappa Architectures ...

Flink Forward San Francisco 2019: Moving from Lambda and Kappa Architectures ...Flink Forward Moving from Lambda and Kappa Architectures to Kappa+ at Uber

Kappa+ is a new approach developed at Uber to overcome the limitations of the Lambda and Kappa architectures. Whether your realtime infrastructure processes data at Uber scale (well over a trillion messages daily) or only a fraction of that, chances are you will need to reprocess old data at some point.

There can be many reasons for this. Perhaps a bug fix in the realtime code needs to be retroactively applied (aka backfill), or there is a need to train realtime machine learning models on last few months of data before bringing the models online. Kafka's data retention is limited in practice and generally insufficient for such needs. So data must be processed from archives. Aside from addressing such situations, enabling efficient stream processing on archived as well as realtime data also broadens the applicability of stream processing.

This talk introduces the Kappa+ architecture which enables the reuse of streaming realtime logic (stateful and stateless) to efficiently process any amounts of historic data without requiring it to be in Kafka. We shall discuss the complexities involved in such kind of processing and the specific techniques employed in Kappa+ to tackle them.

Spark shuffle introduction

Spark shuffle introductioncolorant This document discusses Spark shuffle, which is an expensive operation that involves data partitioning, serialization/deserialization, compression, and disk I/O. It provides an overview of how shuffle works in Spark and the history of optimizations like sort-based shuffle and an external shuffle service. Key concepts discussed include shuffle writers, readers, and the pluggable block transfer service that handles data transfer. The document also covers shuffle-related configuration options and potential future work.

Parallelizing with Apache Spark in Unexpected Ways

Parallelizing with Apache Spark in Unexpected WaysDatabricks "Out of the box, Spark provides rich and extensive APIs for performing in memory, large-scale computation across data. Once a system has been built and tuned with Spark Datasets/Dataframes/RDDs, have you ever been left wondering if you could push the limits of Spark even further? In this session, we will cover some of the tips learned while building retail-scale systems at Target to maximize the parallelization that you can achieve from Spark in ways that may not be obvious from current documentation. Specifically, we will cover multithreading the Spark driver with Scala Futures to enable parallel job submission. We will talk about developing custom partitioners to leverage the ability to apply operations across understood chunks of data and what tradeoffs that entails. We will also dive into strategies for parallelizing scripts with Spark that might have nothing to with Spark to support environments where peers work in multiple languages or perhaps a different language/library is just the best thing to get the job done. Come learn how to squeeze every last drop out of your Spark job with strategies for parallelization that go off the beaten path.

"

Pyspark Tutorial | Introduction to Apache Spark with Python | PySpark Trainin...

Pyspark Tutorial | Introduction to Apache Spark with Python | PySpark Trainin...Edureka! ** PySpark Certification Training: https://www.edureka.co/pyspark-certification-training**

This Edureka tutorial on PySpark Tutorial will provide you with a detailed and comprehensive knowledge of Pyspark, how it works, the reason why python works best with Apache Spark. You will also learn about RDDs, data frames and mllib.

The Rise of ZStandard: Apache Spark/Parquet/ORC/Avro

The Rise of ZStandard: Apache Spark/Parquet/ORC/AvroDatabricks Zstandard is a fast compression algorithm which you can use in Apache Spark in various way. In this talk, I briefly summarized the evolution history of Apache Spark in this area and four main use cases and the benefits and the next steps:

1) ZStandard can optimize Spark local disk IO by compressing shuffle files significantly. This is very useful in K8s environments. It’s beneficial not only when you use `emptyDir` with `memory` medium, but also it maximizes OS cache benefit when you use shared SSDs or container local storage. In Spark 3.2, SPARK-34390 takes advantage of ZStandard buffer pool feature and its performance gain is impressive, too.

2) Event log compression is another area to save your storage cost on the cloud storage like S3 and to improve the usability. SPARK-34503 officially switched the default event log compression codec from LZ4 to Zstandard.

3) Zstandard data file compression can give you more benefits when you use ORC/Parquet files as your input and output. Apache ORC 1.6 supports Zstandardalready and Apache Spark enables it via SPARK-33978. The upcoming Parquet 1.12 will support Zstandard compression.

4) Last, but not least, since Apache Spark 3.0, Zstandard is used to serialize/deserialize MapStatus data instead of Gzip.

There are more community works to utilize Zstandard to improve Spark. For example, Apache Avro community also supports Zstandard and SPARK-34479 aims to support Zstandard in Spark’s avro file format in Spark 3.2.0.

Apache Spark Data Source V2 with Wenchen Fan and Gengliang Wang

Apache Spark Data Source V2 with Wenchen Fan and Gengliang WangDatabricks As a general computing engine, Spark can process data from various data management/storage systems, including HDFS, Hive, Cassandra and Kafka. For flexibility and high throughput, Spark defines the Data Source API, which is an abstraction of the storage layer. The Data Source API has two requirements.

1) Generality: support reading/writing most data management/storage systems.

2) Flexibility: customize and optimize the read and write paths for different systems based on their capabilities.

Data Source API V2 is one of the most important features coming with Spark 2.3. This talk will dive into the design and implementation of Data Source API V2, with comparison to the Data Source API V1. We also demonstrate how to implement a file-based data source using the Data Source API V2 for showing its generality and flexibility.

Productizing Structured Streaming Jobs

Productizing Structured Streaming JobsDatabricks "Structured Streaming was a new streaming API introduced to Spark over 2 years ago in Spark 2.0, and was announced GA as of Spark 2.2. Databricks customers have processed over a hundred trillion rows in production using Structured Streaming. We received dozens of questions on how to best develop, monitor, test, deploy and upgrade these jobs. In this talk, we aim to share best practices around what has worked and what hasn't across our customer base.

We will tackle questions around how to plan ahead, what kind of code changes are safe for structured streaming jobs, how to architect streaming pipelines which can give you the most flexibility without sacrificing performance by using tools like Databricks Delta, how to best monitor your streaming jobs and alert if your streams are falling behind or are actually failing, as well as how to best test your code."

Spark Shuffle Deep Dive (Explained In Depth) - How Shuffle Works in Spark

Spark Shuffle Deep Dive (Explained In Depth) - How Shuffle Works in SparkBo Yang The slides explain how shuffle works in Spark and help people understand more details about Spark internal. It shows how the major classes are implemented, including: ShuffleManager (SortShuffleManager), ShuffleWriter (SortShuffleWriter, BypassMergeSortShuffleWriter, UnsafeShuffleWriter), ShuffleReader (BlockStoreShuffleReader).

Best Practices for Enabling Speculative Execution on Large Scale Platforms

Best Practices for Enabling Speculative Execution on Large Scale PlatformsDatabricks Apache Spark has the ‘speculative execution’ feature to handle the slow tasks in a stage due to environment issues like slow network, disk etc. If one task is running slowly in a stage, Spark driver can launch a speculation task for it on a different host. Between the regular task and its speculation task, Spark system will later take the result from the first successfully completed task and kill the slower one.

When we first enabled the speculation feature for all Spark applications by default on a large cluster of 10K+ nodes at LinkedIn, we observed that the default values set for Spark’s speculation configuration parameters did not work well for LinkedIn’s batch jobs. For example, the system launched too many fruitless speculation tasks (i.e. tasks that were killed later). Besides, the speculation tasks did not help shorten the shuffle stages. In order to reduce the number of fruitless speculation tasks, we tried to find out the root cause, enhanced Spark engine, and tuned the speculation parameters carefully. We analyzed the number of speculation tasks launched, number of fruitful versus fruitless speculation tasks, and their corresponding cpu-memory resource consumption in terms of gigabytes-hours. We were able to reduce the average job response times by 13%, decrease the standard deviation of job elapsed times by 40%, and lower total resource consumption by 24% in a heavily utilized multi-tenant environment on a large cluster. In this talk, we will share our experience on enabling the speculative execution to achieve good job elapsed time reduction at the same time keeping a minimal overhead.

Performance Troubleshooting Using Apache Spark Metrics

Performance Troubleshooting Using Apache Spark MetricsDatabricks Luca Canali, a data engineer at CERN, presented on performance troubleshooting using Apache Spark metrics at the UnifiedDataAnalytics #SparkAISummit. CERN runs large Hadoop and Spark clusters to process over 300 PB of data from the Large Hadron Collider experiments. Luca discussed how to gather, analyze, and visualize Spark metrics to identify bottlenecks and improve performance.

Why your Spark job is failing

Why your Spark job is failingSandy Ryza The document discusses Spark exceptions and errors related to shuffling data between nodes. It notes that tasks can fail due to out of memory errors or files being closed prematurely. It also provides explanations of Spark's shuffle operations and how data is written and merged across nodes during shuffles.

Making Apache Spark Better with Delta Lake

Making Apache Spark Better with Delta LakeDatabricks Delta Lake is an open-source storage layer that brings reliability to data lakes. Delta Lake offers ACID transactions, scalable metadata handling, and unifies the streaming and batch data processing. It runs on top of your existing data lake and is fully compatible with Apache Spark APIs.

In this talk, we will cover:

* What data quality problems Delta helps address

* How to convert your existing application to Delta Lake

* How the Delta Lake transaction protocol works internally

* The Delta Lake roadmap for the next few releases

* How to get involved!

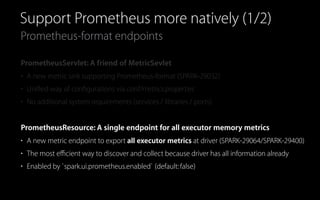

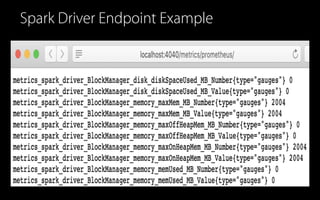

Monitor Apache Spark 3 on Kubernetes using Metrics and Plugins

Monitor Apache Spark 3 on Kubernetes using Metrics and PluginsDatabricks This talk will cover some practical aspects of Apache Spark monitoring, focusing on measuring Apache Spark running on cloud environments, and aiming to empower Apache Spark users with data-driven performance troubleshooting. Apache Spark metrics allow extracting important information on Apache Spark’s internal execution. In addition, Apache Spark 3 has introduced an improved plugin interface extending the metrics collection to third-party APIs. This is particularly useful when running Apache Spark on cloud environments as it allows measuring OS and container metrics like CPU usage, I/O, memory usage, network throughput, and also measuring metrics related to cloud filesystems access. Participants will learn how to make use of this type of instrumentation to build and run an Apache Spark performance dashboard, which complements the existing Spark WebUI for advanced monitoring and performance troubleshooting.

Ad

More Related Content

What's hot (20)

Parquet performance tuning: the missing guide

Parquet performance tuning: the missing guideRyan Blue Parquet performance tuning focuses on optimizing Parquet reads by leveraging columnar organization, encoding, and filtering techniques. Statistics and dictionary filtering can eliminate unnecessary data reads by filtering at the row group and page levels. However, these optimizations require columns to be sorted and fully dictionary encoded within files. Increasing dictionary size thresholds and decreasing row group sizes can help avoid dictionary encoding fallback and improve filtering effectiveness. Future work may include new encodings, compression algorithms like Brotli, and page-level filtering in the Parquet format.

Tuning Apache Spark for Large-Scale Workloads Gaoxiang Liu and Sital Kedia

Tuning Apache Spark for Large-Scale Workloads Gaoxiang Liu and Sital KediaDatabricks Apache Spark is a fast and flexible compute engine for a variety of diverse workloads. Optimizing performance for different applications often requires an understanding of Spark internals and can be challenging for Spark application developers. In this session, learn how Facebook tunes Spark to run large-scale workloads reliably and efficiently. The speakers will begin by explaining the various tools and techniques they use to discover performance bottlenecks in Spark jobs. Next, you’ll hear about important configuration parameters and their experiments tuning these parameters on large-scale production workload. You’ll also learn about Facebook’s new efforts towards automatically tuning several important configurations based on nature of the workload. The speakers will conclude by sharing their results with automatic tuning and future directions for the project.ing several important configurations based on nature of the workload. We will conclude by sharing our result with automatic tuning and future directions for the project.

Improving SparkSQL Performance by 30%: How We Optimize Parquet Pushdown and P...

Improving SparkSQL Performance by 30%: How We Optimize Parquet Pushdown and P...Databricks The document discusses optimizations made to Spark SQL performance when working with Parquet files at ByteDance. It describes how Spark originally reads Parquet files and identifies two main areas for optimization: Parquet filter pushdown and the Parquet reader. For filter pushdown, sorting columns improved statistics and reduced data reads by 30%. For the reader, splitting it to first filter then read other columns prevented loading unnecessary data. These changes improved Spark SQL performance at ByteDance without changing jobs.

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the CloudNoritaka Sekiyama This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

The Parquet Format and Performance Optimization Opportunities

by Databricks

The Parquet format is one of the most widely used columnar storage formats in the Spark ecosystem. Given that I/O is expensive and that the storage layer is the entry point for any query execution, understanding the intricacies of your storage format is important for optimizing your workloads.

As an introduction, we will provide context around the format, covering the basics of structured data formats and the underlying physical data storage model alternatives (row-wise, columnar and hybrid). Given this context, we will dive deeper into specifics of the Parquet format: representation on disk, physical data organization (row-groups, column-chunks and pages) and encoding schemes. Now equipped with sufficient background knowledge, we will discuss several performance optimization opportunities with respect to the format: dictionary encoding, page compression, predicate pushdown (min/max skipping), dictionary filtering and partitioning schemes. We will learn how to combat the evil that is ‘many small files’, and will discuss the open-source Delta Lake format in relation to this and Parquet in general.

This talk serves both as an approachable refresher on columnar storage and as a guide on how to leverage the Parquet format for speeding up analytical workloads in Spark using tangible tips and tricks.

Deep Dive into Spark SQL with Advanced Performance Tuning with Xiao Li & Wenc...

by Databricks

Spark SQL is a highly scalable and efficient relational processing engine with easy-to-use APIs and mid-query fault tolerance. It is a core module of Apache Spark. Spark SQL can process, integrate and analyze the data from diverse data sources (e.g., Hive, Cassandra, Kafka and Oracle) and file formats (e.g., Parquet, ORC, CSV, and JSON). This talk will dive into the technical details of Spark SQL spanning the entire lifecycle of a query execution. The audience will get a deeper understanding of Spark SQL and understand how to tune Spark SQL performance.

How to build a streaming Lakehouse with Flink, Kafka, and Hudi

by Flink Forward

Flink Forward San Francisco 2022.

With a real-time processing engine like Flink and a transactional storage layer like Hudi, it has never been easier to build end-to-end low-latency data platforms connecting sources like Kafka to data lake storage. Come learn how to blend Lakehouse architectural patterns with real-time processing pipelines with Flink and Hudi. We will dive deep on how Flink can leverage the newest features of Hudi like multi-modal indexing that dramatically improves query and write performance, data skipping that reduces the query latency by 10x for large datasets, and many more innovations unique to Flink and Hudi.

by Ethan Guo & Kyle Weller

Processing Large Data with Apache Spark -- HasGeek

by Venkata Naga Ravi

Apache Spark presentation at HasGeek Fifth Elephant

https://fifthelephant.talkfunnel.com/2015/15-processing-large-data-with-apache-spark

Covering Big Data Overview, Spark Overview, Spark Internals and its supported libraries

Flink Forward San Francisco 2019: Moving from Lambda and Kappa Architectures ...

by Flink Forward

Moving from Lambda and Kappa Architectures to Kappa+ at Uber

Kappa+ is a new approach developed at Uber to overcome the limitations of the Lambda and Kappa architectures. Whether your realtime infrastructure processes data at Uber scale (well over a trillion messages daily) or only a fraction of that, chances are you will need to reprocess old data at some point.

There can be many reasons for this. Perhaps a bug fix in the realtime code needs to be retroactively applied (aka backfill), or there is a need to train realtime machine learning models on the last few months of data before bringing the models online. Kafka's data retention is limited in practice and generally insufficient for such needs. So data must be processed from archives. Aside from addressing such situations, enabling efficient stream processing on archived as well as realtime data also broadens the applicability of stream processing.

This talk introduces the Kappa+ architecture, which enables the reuse of streaming realtime logic (stateful and stateless) to efficiently process any amount of historic data without requiring it to be in Kafka. We shall discuss the complexities involved in this kind of processing and the specific techniques employed in Kappa+ to tackle them.

Spark shuffle introduction

by colorant

This document discusses Spark shuffle, which is an expensive operation that involves data partitioning, serialization/deserialization, compression, and disk I/O. It provides an overview of how shuffle works in Spark and the history of optimizations like sort-based shuffle and an external shuffle service. Key concepts discussed include shuffle writers, readers, and the pluggable block transfer service that handles data transfer. The document also covers shuffle-related configuration options and potential future work.

Parallelizing with Apache Spark in Unexpected Ways

by Databricks

Out of the box, Spark provides rich and extensive APIs for performing in-memory, large-scale computation across data. Once a system has been built and tuned with Spark Datasets/Dataframes/RDDs, have you ever been left wondering if you could push the limits of Spark even further? In this session, we will cover some of the tips learned while building retail-scale systems at Target to maximize the parallelization that you can achieve from Spark in ways that may not be obvious from current documentation. Specifically, we will cover multithreading the Spark driver with Scala Futures to enable parallel job submission. We will talk about developing custom partitioners to leverage the ability to apply operations across understood chunks of data and what tradeoffs that entails. We will also dive into strategies for parallelizing scripts with Spark that might have nothing to do with Spark to support environments where peers work in multiple languages or perhaps a different language/library is just the best thing to get the job done. Come learn how to squeeze every last drop out of your Spark job with strategies for parallelization that go off the beaten path.

Pyspark Tutorial | Introduction to Apache Spark with Python | PySpark Trainin...

by Edureka!

PySpark Certification Training: https://www.edureka.co/pyspark-certification-training

This Edureka PySpark tutorial will provide you with detailed and comprehensive knowledge of PySpark, how it works, and the reason why Python works best with Apache Spark. You will also learn about RDDs, DataFrames and MLlib.

The Rise of ZStandard: Apache Spark/Parquet/ORC/Avro

by Databricks

Zstandard is a fast compression algorithm which you can use in Apache Spark in various ways. In this talk, I briefly summarize the evolution history of Apache Spark in this area, four main use cases, their benefits, and the next steps:

1) ZStandard can optimize Spark local disk IO by compressing shuffle files significantly. This is very useful in K8s environments. It’s beneficial not only when you use `emptyDir` with `memory` medium, but also it maximizes OS cache benefit when you use shared SSDs or container local storage. In Spark 3.2, SPARK-34390 takes advantage of ZStandard buffer pool feature and its performance gain is impressive, too.

2) Event log compression is another area to save your storage cost on the cloud storage like S3 and to improve the usability. SPARK-34503 officially switched the default event log compression codec from LZ4 to Zstandard.

3) Zstandard data file compression can give you more benefits when you use ORC/Parquet files as your input and output. Apache ORC 1.6 supports Zstandard already and Apache Spark enables it via SPARK-33978. The upcoming Parquet 1.12 will support Zstandard compression.

4) Last, but not least, since Apache Spark 3.0, Zstandard is used to serialize/deserialize MapStatus data instead of Gzip.

There is more community work to utilize Zstandard to improve Spark. For example, the Apache Avro community also supports Zstandard and SPARK-34479 aims to support Zstandard in Spark’s Avro file format in Spark 3.2.0.

Apache Spark Data Source V2 with Wenchen Fan and Gengliang Wang

by Databricks

As a general computing engine, Spark can process data from various data management/storage systems, including HDFS, Hive, Cassandra and Kafka. For flexibility and high throughput, Spark defines the Data Source API, which is an abstraction of the storage layer. The Data Source API has two requirements.

1) Generality: support reading/writing most data management/storage systems.

2) Flexibility: customize and optimize the read and write paths for different systems based on their capabilities.

Data Source API V2 is one of the most important features coming with Spark 2.3. This talk will dive into the design and implementation of Data Source API V2, with comparison to the Data Source API V1. We also demonstrate how to implement a file-based data source using the Data Source API V2 for showing its generality and flexibility.

Productizing Structured Streaming Jobs

by Databricks

Structured Streaming was a new streaming API introduced to Spark over 2 years ago in Spark 2.0, and was announced GA as of Spark 2.2. Databricks customers have processed over a hundred trillion rows in production using Structured Streaming. We received dozens of questions on how to best develop, monitor, test, deploy and upgrade these jobs. In this talk, we aim to share best practices around what has worked and what hasn't across our customer base.

We will tackle questions around how to plan ahead, what kind of code changes are safe for structured streaming jobs, how to architect streaming pipelines which can give you the most flexibility without sacrificing performance by using tools like Databricks Delta, how to best monitor your streaming jobs and alert if your streams are falling behind or are actually failing, as well as how to best test your code.

Spark Shuffle Deep Dive (Explained In Depth) - How Shuffle Works in Spark

by Bo Yang

The slides explain how shuffle works in Spark and help people understand more details about Spark internals. They show how the major classes are implemented, including: ShuffleManager (SortShuffleManager), ShuffleWriter (SortShuffleWriter, BypassMergeSortShuffleWriter, UnsafeShuffleWriter), ShuffleReader (BlockStoreShuffleReader).

Best Practices for Enabling Speculative Execution on Large Scale Platforms

by Databricks

Apache Spark has the ‘speculative execution’ feature to handle slow tasks in a stage caused by environment issues such as a slow network or disk. If one task is running slowly in a stage, the Spark driver can launch a speculation task for it on a different host. Between the regular task and its speculation task, Spark will later take the result from the first successfully completed task and kill the slower one.

When we first enabled the speculation feature for all Spark applications by default on a large cluster of 10K+ nodes at LinkedIn, we observed that the default values set for Spark’s speculation configuration parameters did not work well for LinkedIn’s batch jobs. For example, the system launched too many fruitless speculation tasks (i.e. tasks that were killed later). Besides, the speculation tasks did not help shorten the shuffle stages. In order to reduce the number of fruitless speculation tasks, we tried to find out the root cause, enhanced the Spark engine, and tuned the speculation parameters carefully. We analyzed the number of speculation tasks launched, the number of fruitful versus fruitless speculation tasks, and their corresponding cpu-memory resource consumption in terms of gigabytes-hours. We were able to reduce the average job response times by 13%, decrease the standard deviation of job elapsed times by 40%, and lower total resource consumption by 24% in a heavily utilized multi-tenant environment on a large cluster. In this talk, we will share our experience of enabling speculative execution to achieve a good reduction in job elapsed time while keeping overhead minimal.

Performance Troubleshooting Using Apache Spark Metrics

by Databricks

Luca Canali, a data engineer at CERN, presented on performance troubleshooting using Apache Spark metrics at the UnifiedDataAnalytics #SparkAISummit. CERN runs large Hadoop and Spark clusters to process over 300 PB of data from the Large Hadron Collider experiments. Luca discussed how to gather, analyze, and visualize Spark metrics to identify bottlenecks and improve performance.

Why your Spark job is failing

by Sandy Ryza

The document discusses Spark exceptions and errors related to shuffling data between nodes. It notes that tasks can fail due to out of memory errors or files being closed prematurely. It also provides explanations of Spark's shuffle operations and how data is written and merged across nodes during shuffles.

Making Apache Spark Better with Delta Lake

by Databricks

Delta Lake is an open-source storage layer that brings reliability to data lakes. Delta Lake offers ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. It runs on top of your existing data lake and is fully compatible with Apache Spark APIs.

In this talk, we will cover:

* What data quality problems Delta helps address

* How to convert your existing application to Delta Lake

* How the Delta Lake transaction protocol works internally

* The Delta Lake roadmap for the next few releases

* How to get involved!

Similar to Native Support of Prometheus Monitoring in Apache Spark 3.0 (20)

Native support of Prometheus monitoring in Apache Spark 3

by Dongjoon Hyun

This document discusses native support for Prometheus monitoring in Apache Spark 3. It introduces new Prometheus-formatted endpoints that expose Spark metrics without additional dependencies. This includes a PrometheusServlet that outputs metrics from masters, workers and drivers in Prometheus format, and a PrometheusResource endpoint that exports all executor memory metrics from the driver. The document discusses how these new endpoints make monitoring Spark on Kubernetes clusters easier by taking advantage of Prometheus service discovery. While the new endpoints are still experimental, they provide better integration with Prometheus compared to prior Spark versions.

Monitor Apache Spark 3 on Kubernetes using Metrics and Plugins

by Databricks

This talk will cover some practical aspects of Apache Spark monitoring, focusing on measuring Apache Spark running on cloud environments, and aiming to empower Apache Spark users with data-driven performance troubleshooting. Apache Spark metrics allow extracting important information on Apache Spark’s internal execution. In addition, Apache Spark 3 has introduced an improved plugin interface extending the metrics collection to third-party APIs. This is particularly useful when running Apache Spark on cloud environments as it allows measuring OS and container metrics like CPU usage, I/O, memory usage, network throughput, and also measuring metrics related to cloud filesystems access. Participants will learn how to make use of this type of instrumentation to build and run an Apache Spark performance dashboard, which complements the existing Spark WebUI for advanced monitoring and performance troubleshooting.

03 2014 Apache Spark Serving: Unifying Batch, Streaming, and RESTful Serving

by Databricks

We present Spark Serving, a new Spark computing mode that enables users to deploy any Spark computation as a sub-millisecond latency web service backed by any Spark cluster. Attendees will explore the architecture of Spark Serving and discover how to deploy services on a variety of cluster types like Azure Databricks, Kubernetes, and Spark Standalone. We will also demonstrate its simple yet powerful API for RESTful SparkSQL, SparkML, and Deep Network deployment with the same API as batch and streaming workloads. In addition, we will explore the "dual architecture": HTTP on Spark. This architecture converts any Spark cluster into a distributed web client with the familiar and pipelinable SparkML API. These two contributions provide the fundamental Spark communication primitives to integrate and deploy any computation framework into the Spark ecosystem. We will explore how Microsoft has used this work to leverage Spark as a fault-tolerant microservice orchestration engine in addition to an ETL and ML platform. We will also walk through two examples drawn from Microsoft's ongoing work on Cognitive Service composition and unsupervised object detection for Snow Leopard recognition.

Getting Started with Apache Spark on Kubernetes

by Databricks

Community adoption of Kubernetes (instead of YARN) as a scheduler for Apache Spark has been accelerating since the major improvements in the Spark 3.0 release. Companies choose to run Spark on Kubernetes to use a single cloud-agnostic technology across their entire stack, and to benefit from improved isolation and resource sharing for concurrent workloads. In this talk, the founders of Data Mechanics, a serverless Spark platform powered by Kubernetes, will show how to easily get started with Spark on Kubernetes.

Running Apache Spark on Kubernetes: Best Practices and Pitfalls

by Databricks

Since initial support was added in Apache Spark 2.3, running Spark on Kubernetes has been growing in popularity.

Real-Time Log Analysis with Apache Mesos, Kafka and Cassandra

by Joe Stein

Slides for the solution we developed using Mesos, Docker, Kafka, Spark, Cassandra and Solr (DataStax Enterprise Edition), all developed in Go, for doing realtime log analysis at scale. Many organizations either need or want log analysis in real time where you can see within a second what is happening within your entire infrastructure. Today, with the hardware available and software systems we have in place, you can develop, build and use these solutions as a service.

Service Mesh - Observability

by Araf Karsh Hamid

Building Cloud-Native App Series - Part 11 of 11

Microservices Architecture Series

Service Mesh - Observability

- Zipkin

- Prometheus

- Grafana

- Kiali

Monitoring in Big Data Platform - Albert Lewandowski, GetInData

by GetInData

Did you like it? Check out our blog to stay up to date: https://getindata.com/blog

The webinar was organized by GetInData in 2020. During the webinar we explained the concepts of monitoring and observability with a focus on data analytics platforms.

Watch more here: https://www.youtube.com/watch?v=qSOlEN5XBQc

Whitepaper - Monitoring and Observability for Data Platform: https://getindata.com/blog/white-paper-big-data-monitoring-observability-data-platform/

Speaker: Albert Lewandowski

Linkedin: https://www.linkedin.com/in/albert-lewandowski/

___

Getindata is a company founded in 2014 by ex-Spotify data engineers. From day one our focus has been on Big Data projects. We bring together a group of best and most experienced experts in Poland, working with cloud and open-source Big Data technologies to help companies build scalable data architectures and implement advanced analytics over large data sets.

Our experts have vast production experience in implementing Big Data projects for Polish as well as foreign companies including i.a. Spotify, Play, Truecaller, Kcell, Acast, Allegro, ING, Agora, Synerise, StepStone, iZettle and many others from the pharmaceutical, media, finance and FMCG industries.

https://getindata.com

What is New with Apache Spark Performance Monitoring in Spark 3.0

by Databricks

Apache Spark and its ecosystem provide many instrumentation points, metrics, and monitoring tools that you can use to improve the performance of your jobs and understand how your Spark workloads are utilizing the available system resources. Spark 3.0 comes with several important additions and improvements to the monitoring system. This talk will cover the new features, review some readily available solutions to use them, and will provide examples and feedback from production usage at the CERN Spark service. Topics covered will include Spark executor metrics for fine-grained memory monitoring and extensions to the Spark monitoring system using Spark 3.0 Plugins. Plugins allow us to deploy custom metrics extending the Spark monitoring system to measure, among other things, I/O metrics for cloud file systems like S3, OS metrics, and custom metrics provided by external libraries.

Apache spark 2.4 and beyond

by Xiao Li

Apache Spark 2.4 comes packed with a lot of new functionalities and improvements, including the new barrier execution mode, flexible streaming sink, the native AVRO data source, PySpark’s eager evaluation mode, Kubernetes support, higher-order functions, Scala 2.12 support, and more.

Databricks Meetup @ Los Angeles Apache Spark User Group

by Paco Nathan

This document summarizes a presentation on Apache Spark and Spark Streaming. It provides an overview of Spark, describing it as an in-memory cluster computing framework. It then discusses Spark Streaming, explaining that it runs streaming computations as small batch jobs to provide low latency processing. Several use cases for Spark Streaming are presented, including from companies like Stratio, Pearson, Ooyala, and Sharethrough. The presentation concludes with a demonstration of Python Spark Streaming code.

Monitoring the Dynamic Resource Usage of Scala and Python Spark Jobs in Yarn:...

by Spark Summit

We all dread “Lost task” and “Container killed by YARN for exceeding memory limits” messages in our scaled-up Spark YARN applications. Even answering the question “How much memory did my application use?” is surprisingly tricky in the distributed YARN environment. Sqrrl has developed a testing framework for observing vital statistics of Spark jobs including executor-by-executor memory and CPU usage over time for both the JDK and Python portions of PySpark YARN containers. This talk will detail the methods we use to collect, store, and report Spark YARN resource usage. This information has proved to be invaluable for performance and regression testing of the Spark jobs in Sqrrl Enterprise.

Regain Control Thanks To Prometheus

by Etienne Coutaud

At the French FedEx company, we used Prometheus to monitor the infrastructure, which hosts a CQRS architecture composed of Kafka, Spark, Cassandra, ElasticSearch, and microservice APIs in Scala.

This presentation is about using Prometheus in production. You will see why we chose Prometheus, how we integrated and configured it, and what kind of insights we extracted from the whole infrastructure.

In addition, you will see how Prometheus changed our way of working, how we implemented self-healing based on Prometheus, how we configured systemd to trigger the AlertManager API, the integration with Slack, and other cool stuff.

The power of linux advanced tracer [POUG18]

by Mahmoud Hatem

The document discusses Linux tracing techniques. It begins with an overview of the Linux tracing landscape and the main tracing systems. It then covers static tracing using tracepoints, dynamic tracing using kprobes and uprobes, and monkey patching techniques. It also looks deeper at CPU utilization analysis using hardware events, performance monitor counters, and the Top-Down Microarchitecture Analysis Method. The goal is to provide a better understanding of Linux tracing capabilities and how to identify performance bottlenecks.

Typesafe spark- Zalando meetup

by Stavros Kontopoulos

This document discusses Typesafe's Reactive Platform and Apache Spark. It describes Typesafe's Fast Data strategy of using a microservices architecture with Spark, Kafka, HDFS and databases. It outlines contributions Typesafe has made to Spark, including backpressure support, dynamic resource allocation in Mesos, and integration tests. The document also discusses Typesafe's customer support and roadmap, including plans to introduce Kerberos security and evaluate Tachyon.

Data Summer Conf 2018, “Building unified Batch and Stream processing pipeline...

by Provectus

Apache Beam is an open source, unified model and set of language-specific SDKs for defining and executing data processing pipelines, as well as data ingestion and integration flows, supporting both batch and streaming use cases. In this presentation I will provide a general overview of Apache Beam and a programming model comparison of Apache Beam vs Apache Spark.

Web Scale Reasoning and the LarKC Project

by Saltlux Inc.

The LarKC project aims to build an integrated pluggable platform for large-scale reasoning. It supports parallelization, distribution, and remote execution. The LarKC platform provides a lightweight core that gives standardized interfaces for combining plug-in components, while the real work is done in the plug-ins. There are three types of LarKC users: those building plug-ins, configuring workflows, and using workflows.

Big Data Open Source Security LLC: Realtime log analysis with Mesos, Docker, ...

by DataStax Academy

This document discusses real-time log analysis using Mesos, Docker, Kafka, Spark, Cassandra and Solr at scale. It provides an overview of the architecture, describing how data from various sources like syslog can be ingested into Kafka via Docker producers. It then discusses consuming from Kafka to write to Cassandra in real-time and running Spark jobs on Cassandra data. The document uses these open source tools together in a reference architecture to enable real-time analytics and search capabilities on streaming data.

Running Apache Spark Jobs Using Kubernetes

by Databricks

Apache Spark has introduced a powerful engine for distributed data processing, providing unmatched capabilities to handle petabytes of data across multiple servers. Its capabilities and performance unseated other technologies in the Hadoop world, but while Spark provides a lot of power, it also comes with a high maintenance cost, which is why we now see innovations to simplify the Spark infrastructure.

Functioning incessantly of Data Science Platform with Kubeflow - Albert Lewan...

by GetInData

Did you like it? Check out our blog to stay up to date: https://getindata.com/blog

The talk focuses on administering, developing and monitoring a platform with Apache Spark, Apache Flink and Kubeflow, in which the monitoring stack is based on the Prometheus stack.

Author: Albert Lewandowski

Linkedin: https://www.linkedin.com/in/albert-lewandowski/


More from Databricks (20)

DW Migration Webinar-March 2022.pptx

by Databricks

The document discusses migrating a data warehouse to the Databricks Lakehouse Platform. It outlines why legacy data warehouses are struggling, how the Databricks Platform addresses these issues, and key considerations for modern analytics and data warehousing. The document then provides an overview of the migration methodology, approach, strategies, and key takeaways for moving to a lakehouse on Databricks.

Data Lakehouse Symposium | Day 1 | Part 1

by Databricks

The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 1 | Part 2

by Databricks

The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 2

Databricks

The world of data architecture began with applications. Next came data warehouses. Then text was organized into a data warehouse.

Then one day the world discovered a whole new kind of data that was being generated by organizations. The world found that machines generated data that could be transformed into valuable insights. This was the origin of what is today called the data lakehouse. The evolution of data architecture continues today.

Come listen to industry experts describe this transformation of ordinary data into a data architecture that is invaluable to business. Simply put, organizations that take data architecture seriously are going to be at the forefront of business tomorrow.

This is an educational event.

Several of the authors of the book Building the Data Lakehouse will be presenting at this symposium.

Data Lakehouse Symposium | Day 4

Databricks

The document discusses the challenges of modern data, analytics, and AI workloads. Most enterprises struggle with siloed data systems that make integration and productivity difficult. The future of data lies with a data lakehouse platform that can unify data engineering, analytics, data warehousing, and machine learning workloads on a single open platform. The Databricks Lakehouse platform aims to address these challenges with its open data lake approach and capabilities for data engineering, SQL analytics, governance, and machine learning.

5 Critical Steps to Clean Your Data Swamp When Migrating Off of Hadoop

Databricks

In this session, learn how to quickly supplement your on-premises Hadoop environment with a simple, open, and collaborative cloud architecture that enables you to generate greater value with scaled application of analytics and AI on all your data. You will also learn five critical steps for a successful migration to the Databricks Lakehouse Platform along with the resources available to help you begin to re-skill your data teams.

Democratizing Data Quality Through a Centralized Platform

Databricks

Bad data leads to bad decisions and broken customer experiences. Organizations depend on complete and accurate data to power their business, maintain efficiency, and uphold customer trust. With thousands of datasets and pipelines running, how do we ensure that all data meets quality standards, and that expectations are clear between producers and consumers? Investing in shared, flexible components and practices for monitoring data health is crucial for a complex data organization to rapidly and effectively scale.

At Zillow, we built a centralized platform to meet our data quality needs across stakeholders. The platform is accessible to engineers, scientists, and analysts, and seamlessly integrates with existing data pipelines and data discovery tools. In this presentation, we will provide an overview of our platform’s capabilities, including:

Giving producers and consumers the ability to define and view data quality expectations using a self-service onboarding portal

Performing data quality validations using libraries built to work with Spark

Dynamically generating pipelines that can be abstracted away from users

Flagging data that doesn’t meet quality standards at the earliest stage and giving producers the opportunity to resolve issues before use by downstream consumers

Exposing data quality metrics alongside each dataset to provide producers and consumers with a comprehensive picture of health over time

Learn to Use Databricks for Data Science

Databricks

Data scientists face numerous challenges throughout the data science workflow that hinder productivity. As organizations continue to become more data-driven, a collaborative environment is more critical than ever: one that provides easier access and visibility into the data, reports and dashboards built against the data, reproducibility, and insights uncovered within the data. Join us to hear how Databricks’ open and collaborative platform simplifies data science by enabling you to run all types of analytics workloads, from data preparation to exploratory analysis and predictive analytics, at scale, all on one unified platform.

Why APM Is Not the Same As ML Monitoring

Databricks

Application performance monitoring (APM) has become the cornerstone of software engineering allowing engineering teams to quickly identify and remedy production issues. However, as the world moves to intelligent software applications that are built using machine learning, traditional APM quickly becomes insufficient to identify and remedy production issues encountered in these modern software applications.

As a lead software engineer at NewRelic, my team built high-performance monitoring systems including Insights, Mobile, and SixthSense. As I transitioned to building ML Monitoring software, I found the architectural principles and design choices underlying APM to not be a good fit for this brand new world. In fact, blindly following APM designs led us down paths that would have been better left unexplored.

In this talk, I draw upon my (and my team’s) experience building an ML Monitoring system from the ground up and deploying it on customer workloads running large-scale ML training with Spark as well as real-time inference systems. I will highlight how the key principles and architectural choices of APM don’t apply to ML monitoring. You’ll learn why, understand what ML Monitoring can successfully borrow from APM, and hear what is required to build a scalable, robust ML Monitoring architecture.

The Function, the Context, and the Data—Enabling ML Ops at Stitch Fix

Databricks

Autonomy and ownership are core to working at Stitch Fix, particularly on the Algorithms team. We enable data scientists to deploy and operate their models independently, with minimal need for handoffs or gatekeeping. By writing a simple function and calling out to an intuitive API, data scientists can harness a suite of platform-provided tooling meant to make ML operations easy. In this talk, we will dive into the abstractions the Data Platform team has built to enable this. We will go over the interface data scientists use to specify a model and what that hooks into, including online deployment, batch execution on Spark, and metrics tracking and visualization.

Stage Level Scheduling Improving Big Data and AI Integration

Databricks

In this talk, I will dive into the stage level scheduling feature added to Apache Spark 3.1. Stage level scheduling extends upon Project Hydrogen by improving big data ETL and AI integration and also enables multiple other use cases. It is beneficial any time the user wants to change container resources between stages in a single Apache Spark application, whether those resources are CPU, memory or GPUs. One of the most popular use cases is enabling end-to-end scalable Deep Learning and AI to efficiently use GPU resources. In this type of use case, users read from a distributed file system, do data manipulation and filtering to get the data into a format that the Deep Learning algorithm needs for training or inference, and then send the data into a Deep Learning algorithm. Using stage level scheduling combined with accelerator aware scheduling enables users to seamlessly go from ETL to Deep Learning running on the GPU by adjusting the container requirements for different stages in Spark within the same application. This makes writing these applications easier and can help with hardware utilization and costs.

There are other ETL use cases where users want to change CPU and memory resources between stages, for instance when there is data skew or when the data size is much larger in certain stages of the application. In this talk, I will go over the feature details, cluster requirements, the API and use cases. I will demo how the stage level scheduling API can be used by Horovod to seamlessly go from data preparation to training with the TensorFlow Keras API on GPUs.

The talk will also touch on other new Apache Spark 3.1 functionality, such as pluggable caching, which can be used to enable faster dataframe access when operating from GPUs.

Simplify Data Conversion from Spark to TensorFlow and PyTorch

Databricks

In this talk, I would like to introduce an open-source tool built by our team that simplifies the data conversion from Apache Spark to deep learning frameworks.

Imagine you have a large dataset, say 20 GB, and you want to use it to train a TensorFlow model. Before feeding the data to the model, you need to clean and preprocess your data using Spark. Now you have your dataset in a Spark DataFrame. When it comes to the training part, you may face a problem: how can I convert my Spark DataFrame to some format recognized by my TensorFlow model?

The existing data conversion process can be tedious. For example, to convert an Apache Spark DataFrame to a TensorFlow Dataset file format, you need to either save the Apache Spark DataFrame on a distributed filesystem in Parquet format and load the converted data with third-party tools such as Petastorm, or save it directly in TFRecord files with spark-tensorflow-connector and load it back using TFRecordDataset. Both approaches take more than 20 lines of code to manage the intermediate data files, rely on different parsing syntax, and require extra attention for handling vector columns in the Spark DataFrames. In short, all these engineering frictions greatly reduce data scientists’ productivity.

The Databricks Machine Learning team contributed a new Spark Dataset Converter API to Petastorm to simplify these tedious data conversion steps. With the new API, it takes a few lines of code to convert a Spark DataFrame to a TensorFlow Dataset or a PyTorch DataLoader with default parameters.

In the talk, I will use an example to show how to use the Spark Dataset Converter to train a TensorFlow model and how simple it is to go from single-node training to distributed training on Databricks.

Scaling and Unifying SciKit Learn and Apache Spark Pipelines

Databricks

Pipelines have become ubiquitous, as the need for stringing multiple functions to compose applications has gained adoption and popularity. Common pipeline abstractions such as “fit” and “transform” are even shared across divergent platforms such as Python Scikit-Learn and Apache Spark.

Scaling pipelines at the level of simple functions is desirable for many AI applications, but it is not directly supported by Ray’s parallelism primitives. In this talk, Raghu will describe a pipeline abstraction that takes advantage of Ray’s compute model to efficiently scale arbitrarily complex pipeline workflows. He will demonstrate how this abstraction cleanly unifies pipeline workflows across multiple platforms such as Scikit-Learn and Spark, and achieves nearly optimal scale-out parallelism on pipelined computations.

Attendees will learn how pipelined workflows can be mapped to Ray’s compute model and how they can both unify and accelerate their pipelines with Ray.

Sawtooth Windows for Feature Aggregations

Databricks

In this talk about Zipline, we will introduce a new type of windowing construct called a sawtooth window. We will describe various properties of sawtooth windows that we utilize to achieve online-offline consistency, while still maintaining high throughput, low read latency and tunable write latency for serving machine learning features. We will also talk about a simple deployment strategy for correcting feature drift due to operations that are not “abelian groups”, that operate over change data.

Redis + Apache Spark = Swiss Army Knife Meets Kitchen Sink

Databricks

We want to present multiple anti-patterns utilizing Redis in unconventional ways to get the maximum out of Apache Spark. All examples presented are tried and tested in production at scale at Adobe. The most common integration is spark-redis, which interfaces with Redis as a DataFrame backing store or as an upstream for Structured Streaming. We deviate from the common use cases to explore where Redis can plug gaps while scaling out high throughput applications in Spark.

Niche 1: Long-Running Spark Batch Job – Dispatch New Jobs by Polling a Redis Queue

• Why?

◦ Custom queries on top of a table; we load the data once and query N times

• Why not Structured Streaming

• Working solution using Redis

Niche 2: Distributed Counters

• Problems with Spark Accumulators

• Utilize Redis Hashes as distributed counters

• Precautions for retries and speculative execution

• Pipelining to improve performance
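The abstract does not say which precautions the talk recommends for retries and speculative execution. As a rough illustration only, the sketch below shows one possible precaution: each task writes its partition's total under its own hash field, so a repeated attempt overwrites the earlier value instead of adding to it. `FakeRedisHash`, `run_task_idempotent`, and `run_task_naive` are hypothetical names, and the in-memory class merely stands in for a Redis hash (it mimics the behaviour of the HSET, HINCRBY, and HVALS commands without talking to a Redis server or to Spark).

```python
class FakeRedisHash:
    """Hypothetical in-memory stand-in for one Redis hash."""

    def __init__(self):
        self._fields = {}

    def hset(self, field, value):
        # Like HSET: overwrite the field with the new value.
        self._fields[field] = value

    def hincrby(self, field, amount):
        # Like HINCRBY: add to the field, starting from 0 if it is missing.
        self._fields[field] = self._fields.get(field, 0) + amount

    def hvals(self):
        # Like HVALS: return all values stored in the hash.
        return list(self._fields.values())


def count_even(rows):
    """Example per-partition metric: how many rows are even."""
    return sum(1 for row in rows if row % 2 == 0)


def run_task_naive(counter, rows):
    # Every attempt increments a shared field, so duplicates are double-counted.
    counter.hincrby("even_rows", count_even(rows))


def run_task_idempotent(counter, partition_id, rows):
    # Every attempt for the same partition overwrites the same field.
    counter.hset(f"partition:{partition_id}", count_even(rows))


partitions = {0: [1, 2, 3, 4], 1: [6, 8, 9]}
# Partition 1 runs twice, as it would after a retry or a speculative attempt.
attempts = [0, 1, 1]

naive = FakeRedisHash()
idempotent = FakeRedisHash()
for partition_id in attempts:
    run_task_naive(naive, partitions[partition_id])
    run_task_idempotent(idempotent, partition_id, partitions[partition_id])

naive_total = sum(naive.hvals())
idempotent_total = sum(idempotent.hvals())
print(naive_total, idempotent_total)  # prints "6 4"
```

The true number of even rows here is 4; the naive counter reports 6 because the duplicated attempt for partition 1 is counted twice. The trade-off of the per-partition layout is that reading the total requires summing all fields of the hash.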

Re-imagine Data Monitoring with whylogs and Spark