Negative Selection Algorithm for Anomaly Detection

6 likes, 4,849 views

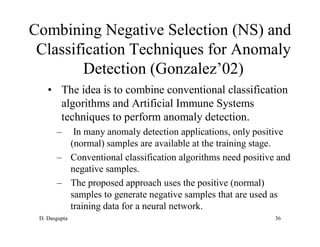

Dipankar Dasgupta reviews the negative selection algorithm and its connections to learning classifier systems.
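
For readers unfamiliar with the technique named above, here is a minimal sketch of negative selection for anomaly detection. It is not taken from the slides: the binary-string representation, the r-contiguous-bits matching rule, and all function names and parameter values are assumptions chosen for illustration. Random candidate detectors are censored against a set of "self" (normal) strings, and the survivors are then used to flag samples as anomalous.

```python
import random


def matches(detector, sample, r):
    """Assumed r-contiguous-bits rule: True if the two strings agree
    on at least r consecutive positions."""
    run = 0
    for a, b in zip(detector, sample):
        run = run + 1 if a == b else 0
        if run >= r:
            return True
    return False


def generate_detectors(self_set, n_detectors, length, r, rng, max_tries=100_000):
    """Censoring phase: keep random candidates that match no self string."""
    detectors = []
    for _ in range(max_tries):
        if len(detectors) == n_detectors:
            break
        candidate = "".join(rng.choice("01") for _ in range(length))
        if not any(matches(candidate, s, r) for s in self_set):
            detectors.append(candidate)
    return detectors


def is_anomalous(sample, detectors, r):
    """Monitoring phase: a sample is flagged if any detector matches it."""
    return any(matches(d, sample, r) for d in detectors)


# Hypothetical toy data: three 8-bit "self" patterns.
rng = random.Random(0)
self_set = ["00000000", "00001111", "11110000"]
detectors = generate_detectors(self_set, n_detectors=10, length=8, r=4, rng=rng)

# By construction, no self string is ever flagged.
print([is_anomalous(s, detectors, 4) for s in self_set])
```

Because detectors are only kept when they match no self string, self samples are never flagged. How much of the non-self space is covered depends on the number of detectors and the matching threshold `r`, both arbitrary here.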

Downloaded 387 times

Recommended

Face recognition Face Identification

Kalyan Acharjya: This document provides an overview of Kalyan Acharjya's proposed work on face recognition for his M.Tech dissertation. It discusses conducting literature research on existing face recognition techniques, identifying challenges in real-time applications, and exploring standard face image databases. The presentation covers topics such as how face recognition works, applications, and concludes with plans to modify existing algorithms and compare results to related work to enhance recognition rates.

Webinar 2 - IMU & GPS

Civil Maps: We discuss how GPS and IMU work together in the context of capturing vehicle motion and a simple technique for creating a trajectory from a sample set of IMU data. After part 1 & 2 you will be able to generate a point cloud by fusing the IMU trajectory and the LiDAR data.

Facial recognition powerpoint

12206695: Facial recognition systems use computer algorithms to identify or verify people from digital images or video by analyzing patterns in their faces. The document traces the development of these systems from early work in the 1970s to modern applications. It describes different types of facial recognition techniques and provides examples of software using the technology. The document also summarizes the results of an online survey about public awareness and interest in using facial recognition. It concludes by noting improvements in accuracy over time but also ongoing challenges regarding error rates, privacy, and changes to facial features.

Concealed Weapon Detection

SrijanKumar18: Project Report on Concealed Weapon Detection using Digital Image Processing. It is very useful for final year ECE students.

Presentation of Visual Tracking

Yu-Sheng (Yosen) Chen: This presentation discusses computer vision techniques for human tracking and interaction. It begins with an outline of the topics to be covered, including basic visual tracking, multi-cue particle filtering for tracking, multi-human tracking, multi-camera tracking, and handling re-entering people. It then describes implementations of basic color-based tracking, particle filtering with multiple cues, and using particle filtering for human head tracking. Challenges with overlapping people are addressed through joint candidate evaluation and sorting by depth. The multi-camera system correlates tracks across cameras to identify corresponding people. Overall, the presentation explains a complete visual tracking and surveillance system using computer vision algorithms.

Fingerprint Recognition System

christywong1234: This document summarizes a student project on fingerprint recognition systems. It includes an introduction to fingerprint biometrics, how fingerprint scanners work, applications like security and devices, and an online survey on student familiarity. The survey found most were familiar with the technology and use it for immigration. While some experienced failures, most trust the accuracy and see potential for faster logins and more uses in the future. In conclusion, fingerprint recognition is familiar to most users and could continue adapting to new applications.

Iris Recognition

Piyush Mittal: The document discusses iris biometrics and an iris recognition system. It provides details on iris anatomy, image acquisition, preprocessing, iris localization including pupil and iris detection, iris normalization, feature extraction using Haar wavelets, and matching. It evaluates the system on three databases achieving over 94% accuracy with low false acceptance and rejection rates. Further work is proposed on fusion, dual extraction approaches, indexing large databases, and using local descriptors.

Compressive Sensing Basics - Medical Imaging - MRI

Thomas Stefani: Compressive sensing is a technique that allows for sampling and reconstruction of sparse signals from far fewer samples or measurements than traditional methods. It addresses the exponential growth of digital data by enabling better sensing of less data. A sparse signal can be accurately reconstructed from incomplete measurements if it has a sparse representation in some domain, the measurements have a noise-like pattern, and non-linear reconstruction is used. Compressive sensing has been applied to MRI to reduce scan time by sampling the k-space sparsely and reconstructing the image using compressed sensing techniques.

edge Linking dip.pptx

MruthyunjayaS: This document summarizes a seminar topic on edge linking presented under the guidance of Dr. Suresha M. It was submitted by student Shashikala V. Edge linking is used to group edge points detected by edge detection algorithms into meaningful boundaries. There are two types of edge linking: local processing which analyzes pixel neighborhoods, and global processing using the Hough transform which is tolerant of gaps and noise. The Hough transform maps edge points in image space to curves in parameter space to identify linear features like lines.

MISSING PERSON TRACKING

MadhumithaAdepu: This project tracks a missing person. The person's photo is uploaded to the portal along with the path to CCTV footage from the location where they went missing. If the photo matches the video, the matching frame is captured and saved, and the captured frame can then be viewed.

Ajay ppt region segmentation new copy

Ajay Kumar Singh: Region-based image segmentation partitions an image into regions based on pixel properties like homogeneity and spatial proximity. The key region-based methods are thresholding, clustering, region growing, and split-and-merge. Region growing works by aggregating neighboring pixels with similar attributes into regions starting from seed pixels. Split-and-merge first over-segments an image and then refines the segmentation by splitting regions with high variance and merging similar adjacent regions. Region-based segmentation is used for tasks like object recognition, image compression, and medical imaging.

Phase 1 presentation1.pptx

GirishKA4: This document summarizes a student project presentation on developing a blood group detection system using image processing. The project is being conducted by 4 students in their 7th semester of Computer Science engineering under the guidance of a professor. The presentation includes an abstract, objectives, introduction on blood groups and their importance, scope of the project, literature survey of existing methods, problem definition, proposed work including the system requirements and references. The proposed work is to use a non-invasive approach involving image sensors and spectroscopic data to determine blood groups quickly and accurately in emergencies.

Image based authentication

أحلام انصارى: Image-based authentication (IBA) uses a set of user-selected images rather than a password for authentication. The IBA system displays an image set including key images mixed with other images. The user is authenticated by correctly identifying their key images. The document discusses IBA in detail, including potential vulnerabilities and methods to counter threats like observation attacks, brute force attacks, and frequency analysis attacks. It also covers the use of CAPTCHAs to distinguish humans and machines.

Automatic Attendance System using Deep Learning

Sunil Aryal: This document presents an automatic attendance system using deep learning for facial recognition. It begins with an introduction that explains how the system uses real-time face recognition algorithms integrated with a university management system to automate attendance tracking without manual input. The methodology section then outlines the 5 main steps: 1) taking pictures with a high definition camera, 2) detecting faces, 3) recognizing faces, 4) processing the database, and 5) marking attendance. It describes using CNN and MTCNN models for face detection and a ResNet-34 architecture trained on a large dataset for face recognition, achieving 97% accuracy. The conclusion states this system provides an accurate, transparent, and time-efficient way to take attendance without human bias or manual work.

Blue Eye Technology

rahuldikonda: The PPT covers what Blue Eye technology is, the technologies used in Blue Eye, and the applications of Blue Eye technology.

FACE RECOGNITION SYSTEM PPT

Saghir Hussain: This document discusses different biometric identification methods such as finger-scan, facial recognition, iris-scan, and retina-scan. It notes there are approximately 80 nodal points on a human face that can be used for facial recognition, including the distance between the eyes, width of the nose, depth of the eye socket, cheekbones, jaw line, and chin. The document outlines several existing facial recognition algorithms and notes they are not yet 100% efficient. It concludes by describing potential government and commercial uses of facial recognition technology, such as for law enforcement, security, immigration, voting verification, residential security, and banking ATMs.

Moving object detection in video surveillance

Ashfaqul Haque John: This is a thesis presentation on moving object detection in video surveillance. The thesis work was done using MATLAB.

Predicting Emotions through Facial Expressions

twinkle singh: This document describes a facial expression recognition system with two parts: face recognition and facial expression recognition. It discusses using principal component analysis (PCA) and linear discriminant analysis (LDA) for face recognition, and PCA to extract eigenfaces for facial expression recognition. The system first performs face detection, then extracts facial expression data and classifies the expression. MATLAB is used as the tool for its faster programming capabilities.

swarm robotics

Deepika Kothamasu: A swarm is a collective group of self-propelled entities that move together. Swarm robotics uses large numbers of simple robots that coordinate together without a centralized control through local interactions. Swarm intelligence is an artificial intelligence technique inspired by swarms in nature, using algorithms like ant colony optimization and flocking to achieve collective behaviors from decentralized and self-organized systems. These algorithms were developed to help solve optimization problems. While swarms exhibit benefits like adaptability and novelty, they also have disadvantages like being non-optimal, non-controllable and non-predictable. Swarm robotics has applications in industries, medicine, military and space research.

iris recognition system as means of unique identification

Being Topper: Project done and submitted by final-year students of CBP Government Engineering College.

Student names: vipin kumar khutail, Krishnanad Mishra, Jaswant kumar, Rahul Vashisht

Project description:

Iris recognition is an automated method of biometric identification that uses mathematical pattern-recognition techniques on video images of one or both of the irises of an individual's eyes, whose complex random patterns are unique, stable, and can be seen from some distance.

Fourier descriptors & moments

rajisri2: This document discusses Fourier descriptors and moments which are used in object recognition and image processing. Fourier descriptors represent the boundary shape of an object using the coefficients of its Fourier transform. They are useful because they are invariant to scaling, translation, and rotation. Central moments are another type of descriptor that are translation and rotation invariant. Velocity moments describe both shape and motion over time. Moment invariants are derived from moments to be invariant to specific transformations and are commonly used in image analysis applications such as object detection.

Object detection.pptx

shradhaketkale2: The document summarizes a final year project presentation titled "Object Detection for Visually Impaired People" presented to the Department of Computer Science & Engineering at Dr. J.J. Magdum College of Engineering. The project aims to develop a mobile application using machine learning that can detect objects in the surroundings of a visually impaired user and provide audio output to help them navigate safely. The application would incorporate modules for object detection, recognition, text-to-speech, and speech-to-text to help identify obstacles in the user's path and alert them. The project was carried out by 4 students and implemented using Android Studio, TensorFlow, OpenCV and Google Cloud Vision API on Android smartphones.

Low power vlsi implementation adaptive noise cancellor based on least means s...

shaik chand basha: We are trying to implement an adaptive filter with input weights. The adaptive parameters are obtained by simulating a noise canceller in MATLAB. A Simulink model of the adaptive noise canceller was developed and processed by an FPGA.

Virtual mouse

Nikhil Mane: The document describes a proposed virtual mouse system that uses hand gesture recognition instead of a physical mouse. It discusses the limitations of existing input devices like trackballs and optical mice. The proposed system uses a webcam to capture images of hand gestures, applies object recognition techniques to identify gestures, and translates the gestures to mouse events on the screen. It outlines the hardware and software requirements and modules needed to implement the virtual mouse, including image acquisition, object recognition, coordinate calculation, and event generation. Work done so far includes literature research and initial implementation efforts.

Image authentication techniques based on Image watermarking

Nawin Kumar Sharma: This document discusses image authentication techniques using digital watermarking. It defines digital watermarking as a technique for inserting information like a watermark into an image that can later be extracted or detected to protect copyright and ensure tamper resistance. The process involves embedding a watermark during insertion, potential attacks on the watermarked image, and detection of the watermark. Various domains for embedding watermarks are discussed like the spatial domain and transform domains like DCT and DWT. Properties of good watermarks and classifications of watermarks like robust and fragile are also summarized.

Real time image processing ppt

ashwini.jagdhane: This document provides an overview of real-time image processing. It begins with introducing real-time image processing and how it differs from ordinary image processing by having deadlines and predictable response times. The document then discusses the requirements for a real-time image processing system including high resolution video input, low latency, and high processing performance. It also covers applications such as mobile robots and human-computer interaction. In the end, it provides definitions of real-time image processing in both the perceptual and signal processing senses.

SMART DUST

Khyravdhy Tannaya: Smart dust is a network of tiny sensor-enabled devices called motes that can monitor environmental conditions. Each mote contains sensors, computing power, wireless communication, and an autonomous power supply within a volume of a few millimeters. They communicate with each other and a base station using radio frequency or optical transmission. Major challenges in developing smart dust include fitting all components into a small size while minimizing energy usage. Potential applications include environmental monitoring, healthcare, security, and traffic monitoring.

Block Matching Project

dswazalwar: This document summarizes the block matching based motion estimation algorithm. The algorithm estimates motion between two frames by comparing blocks in the reference frame to blocks in the target frame. It discusses key parameters like block size, search window, and matching criteria. Experimental results on example images demonstrate how varying these parameters affects the motion fields and computation time. The best results were found with a block size of 16x16, search window of 16, and threshold of 1.7.

Inspiration to Application: A Tutorial on Artificial Immune Systems

Julie Greensmith: A tutorial of the history and application of artificial immune systems, given as a research tutorial for the Intelligent Modelling and Analysis Research Group, School of Computer Science, University of Nottingham UK.

2001: An Introduction to Artificial Immune Systems

Leandro de Castro: ICANNGA 2001 (International Conference on Artificial Neural Networks and Genetic Algorithms), Prague, Czech Republic.

Viewers also liked (20)

Design and Implementation of Artificial Immune System for Detecting Flooding ...

Kent State University: Academic Paper: N. B. I. Al-Dabagh and I. A. Ali, "Design and implementation of artificial immune system for detecting flooding attacks," in High Performance Computing and Simulation (HPCS), 2011 International Conference on, 2011, pp. 381-390.

Artificial immune system against viral attack

UltraUploader: This document discusses an artificial immune system approach for detecting computer viruses. It begins by providing background on artificial immune systems and how they can be applied to computer security similar to how the human immune system distinguishes self from non-self. It then describes the proposed artificial immune system-based virus detection system, which includes a signature extractor that generates signatures for non-self programs that do not match self programs, and a signature selector that analyzes the signatures to determine if they belong to viruses or self programs. The system aims to detect unknown viruses through an adaptive process of learning virus signatures.

2005: A Matlab Tour on Artificial Immune Systems

2005: A Matlab Tour on Artificial Immune SystemsLeandro de Castro The document summarizes several bio-inspired algorithms including CLONALG, aiNet, ABNET, and Opt-aiNet. CLONALG is a clonal selection algorithm inspired by immune system principles of clonal selection, hypermutation, and affinity maturation. aiNet is an artificial immune network model that uses principles of clonal selection, affinity maturation, and network suppression to perform unsupervised learning and clustering. ABNET is an antibody network based on a feedforward neural network trained with immune system concepts. Opt-aiNet adapts the aiNet model for optimization problems by introducing dynamic population sizing, mutation proportional to fitness, and automatic stopping criteria.

Artificial immune system

Artificial immune systemTejaswini Jitta This document discusses artificial immune systems and their applications in mobile ad hoc networks (MANETs). It describes various artificial immune system algorithms inspired by theoretical immunology, including negative selection, artificial immune networks, clonal selection, danger theory, and dendritic cell algorithms. These algorithms can be used for intrusion detection in MANETs to provide self-healing, self-defensive, and self-organizing capabilities to address security challenges in infrastructure-less mobile networks. Several studies have investigated applying artificial immune system approaches like negative selection and clonal selection to detect node misbehavior and classify nodes as self or non-self in MANETs.

2005: An Introduction to Artificial Immune Systems

2005: An Introduction to Artificial Immune SystemsLeandro de Castro The document introduces artificial immune systems (AIS), which are computational systems inspired by the human immune system. It provides an overview of the immune system and its properties such as diversity, learning, memory, pattern recognition, and self/non-self discrimination. These properties provide a biological paradigm for developing AIS algorithms. The document then discusses representation schemes, affinity measures, and generic algorithms that have been developed for AIS, including negative selection, clonal selection, and immune network models. Finally, it reviews applications of AIS and discusses current trends in the field.

2006: Artificial Immune Systems - The Past, The Present, And The Future?

2006: Artificial Immune Systems - The Past, The Present, And The Future?Leandro de Castro The document provides an overview of artificial immune systems (AIS), including:

1) It discusses the history and development of AIS from the 1980s onward, including early works that drew inspiration from immunology and key conferences/publications.

2) It outlines the current state of AIS research, including new application areas, algorithmic improvements, and theoretical investigations into convergence and modeling.

3) It speculates on potential future directions for AIS, such as strengthening theoretical foundations, exploring innate immunity and danger theory models, and applying AIS to dynamic environments.

Real time detection system of driver distraction.pdf

Real time detection system of driver distraction.pdfReena_Jadhav There is accumulating evidence that driver distraction is a leading cause of vehicle crashes and incidents. In particular, increased use of so-called in-vehicle information systems (IVIS) has raised important and growing safety concerns. Thus, detecting the driver’s state is of paramount importance, to adapt IVIS, therefore avoiding or mitigating their possible negative effects. The purpose of this presentation is to show a method for the nonintrusive and real-time detection of visual distraction, using vehicle dynamics data and without using the eye-tracker data as inputs to classifiers. Specifically, we present and compare different models that are based on well-known machine learning (ML) methods. Data for training the models were collected using a static driving simulator, with real human subjects performing a specific secondary task [i.e., a surrogate visual research task (SURT)] while driving. Different training methods, model characteristics, and feature selection criteria have been compared. Based on our results, using a BSN has outperformed all the other ML methods, providing the highest classification rate for most of the subjects.

Index Terms—Accident prevention, artificial intelligence and machine learning (ML), driver distraction and inattention, intelligent supporting systems.

Lecture8 multi class_svm

Lecture8 multi class_svmStéphane Canu This document discusses multi-class support vector machines (SVMs). It outlines three main strategies for multi-class SVMs: decomposition approaches like one-vs-all and one-vs-one, a global approach, and an approach using pairwise coupling of convex hulls. It also discusses using SVMs to estimate class probabilities and describes two variants of multi-class SVMs that incorporate slack variables to allow misclassified examples.

Modified artificial immune system for single row facility layout problem

Modified artificial immune system for single row facility layout problemIAEME Publication One of the main optimization algorithms currently available in the research field is the Artificial Immune System, which many applications use for clustering and pattern recognition. These algorithms provide more effective results on multi-modal optimization problems than the Genetic Algorithm.

Developing an Artificial Immune Model for Cash Fraud Detection

Developing an Artificial Immune Model for Cash Fraud Detection khawla Osama A document from a graduation research thesis by BSc students that develops a model to detect cash card fraud based on the cardholder's usage pattern; the detection technique is inspired by the immune system.

AIS

AISSweta Leena Panda The document discusses using artificial immune systems for computer security. It introduces the human immune system and how artificial immune systems (AIS) are inspired by it. Several AIS models are described for detecting viruses, including negative selection, partial matching rules, anomaly detection, and self/non-self models. The BAM (B-cell Algorithmic Model) is presented as a way to detect viral code by comparing patterns to known legal and viral codes using a correlation matrix. The document concludes the BAM model provides an effective way to detect viruses and errors using artificial immune system concepts.

TabuSearch FINAL

TabuSearch FINALMustafa RASHID Tabu search is a metaheuristic algorithm that guides a local search procedure to explore the solution space beyond local optima. It uses flexible memory structures and strategic restrictions to avoid getting stuck in cycles. The key components are a tabu list that forbids recently used moves, a neighborhood structure to identify adjacent solutions, and aspiration criteria to override forbidden moves under certain conditions. The algorithm starts with an initial solution and iteratively moves to better solutions in the neighborhood until a stopping criterion is met, updating the tabu list at each step to explore new regions. It has been successfully applied to problems like minimum spanning trees, scheduling, and vehicle routing.
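The components named in the summary above (a tabu list, a neighborhood structure, and an aspiration criterion) can be sketched in a few lines. This is a minimal illustration, not taken from the slides: the bit-string encoding, the single-bit-flip neighborhood, the `tenure` parameter, and the Hamming-distance cost are all assumptions chosen to keep the example small.

```python
from collections import deque

def tabu_search(cost, start, n_iters=50, tenure=3):
    """Minimize cost over bit lists using single-bit-flip moves (illustrative)."""
    current = list(start)
    best, best_cost = list(current), cost(current)
    tabu = deque(maxlen=tenure)  # positions flipped most recently are forbidden
    for _ in range(n_iters):
        candidates = []
        for i in range(len(current)):
            neighbor = current.copy()
            neighbor[i] ^= 1  # neighborhood: flip exactly one bit
            c = cost(neighbor)
            # Aspiration criterion: a tabu move is allowed if it beats the best so far.
            if i not in tabu or c < best_cost:
                candidates.append((c, i, neighbor))
        if not candidates:
            break
        # Take the best admissible neighbor, even if it is worse than the current solution.
        c, i, current = min(candidates, key=lambda t: (t[0], t[1]))
        tabu.append(i)
        if c < best_cost:
            best, best_cost = list(current), c
    return best, best_cost

# Hypothetical toy problem: recover a hidden bit pattern by minimizing Hamming distance.
target = [1, 0, 1, 1, 0, 0, 1, 0]

def hamming_cost(bits):
    return sum(b != t for b, t in zip(bits, target))

best, best_cost = tabu_search(hamming_cost, [0] * len(target))
```

The toy cost has no local optima, so it only demonstrates the bookkeeping; the tabu list matters on rugged cost functions, where accepting a worse neighbor while forbidding the reverse move lets the search leave a local optimum.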

Bee algorithm

Bee algorithmkousick This document discusses the bee algorithm, which is an optimization technique inspired by the foraging behavior of honey bees. It begins with an introduction and overview of concepts like nature of bees, hill climbing, swarm intelligence, and bee colony optimization. It then describes the key steps of the proposed bee algorithm, including initializing a population of solutions, evaluating their fitness, selecting sites for neighborhood search, recruiting bees to search those sites, and iterating until an optimal solution is found. An example application to a traveling salesperson problem is provided. The document concludes that bee algorithm can help provide an optimal solution for problems with many possible solutions, such as in artificial intelligence applications.

Designing Hybrid Cryptosystem for Secure Transmission of Image Data using Bio...

Designing Hybrid Cryptosystem for Secure Transmission of Image Data using Bio...ranjit banshpal The document outlines a proposed hybrid cryptosystem for secure transmission of image data using biometric fingerprints. It discusses problems with existing password and cryptographic techniques, and proposes a system that uses fingerprint biometrics to generate an encryption key, JPEG compression, and a secret fragment visible mosaic image method for embedding encrypted image data. The methodology section describes the tools and algorithms used, including SHA-256, AES, and JPEG. The implementation details section provides flow diagrams of the encryption and decryption processes.

AUTOMATIC ACCIDENT DETECTION AND ALERT SYSTEM

AUTOMATIC ACCIDENT DETECTION AND ALERT SYSTEMAnamika Vinod The document describes an automatic accident alert system called "SAVE ME" for automobiles. It consists of three main modules: an Android application, a sensing module, and a server module. The sensing module contains a collision sensor and microcontroller that detects accidents. The Android app detects active sensors nearby and sends alerts to the server. The server then sends messages to emergency contacts and nearby control stations, including GPS location to help with rescue efforts. The system aims to quickly alert authorities in the event of an unattended accident.

Image Steganography using LSB

Image Steganography using LSBSreelekshmi Sree This document discusses steganography and image steganography techniques. It defines steganography as hiding information within other information to avoid detection. Image steganography is described as hiding data in digital images using techniques like least significant bit encoding. The document outlines the LSB algorithm, which replaces the least significant bits of image pixel values with bits of the hidden message. Examples are given to illustrate how short messages can be concealed in an image using this method.
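The least-significant-bit replacement described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the deck's own code: the cover image is modeled as a flat `bytes` object of 8-bit channel values, one message bit is stored per byte, and the function names `embed_lsb` and `extract_lsb` are hypothetical.

```python
def embed_lsb(pixels: bytes, message: bytes) -> bytes:
    """Write each message bit into the least significant bit of one pixel byte."""
    # Unpack the message into bits, most significant bit first.
    bits = [(byte >> shift) & 1 for byte in message for shift in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("cover image too small for message")
    stego = bytearray(pixels)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & 0xFE) | bit  # clear the LSB, then set it to the message bit
    return bytes(stego)

def extract_lsb(pixels: bytes, n_bytes: int) -> bytes:
    """Rebuild n_bytes of message from the LSBs of the first 8 * n_bytes pixel bytes."""
    out = bytearray()
    for i in range(n_bytes):
        value = 0
        for p in pixels[8 * i: 8 * i + 8]:
            value = (value << 1) | (p & 1)
        out.append(value)
    return bytes(out)

# Assumed toy cover: 80 channel values standing in for real image data.
cover = bytes(range(100, 180))
stego = embed_lsb(cover, b"hi")
recovered = extract_lsb(stego, 2)
```

Because only the lowest bit changes, each channel value moves by at most 1, which is why the modification is hard to see in the image.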

ACCIDENT DETECTION AND VEHICLE TRACKING USING GPS,GSM AND MEMS

ACCIDENT DETECTION AND VEHICLE TRACKING USING GPS,GSM AND MEMSKrishna Moparthi This document describes a vehicle accident detection and tracking system using GPS, GSM, and MEMS sensors. The system detects accidents using a MEMS sensor and then uses GPS to determine the vehicle's location. The location is sent via GSM to emergency services and authorized contacts to provide rapid response. The system aims to quickly locate accident sites and notify help in remote areas with limited communication infrastructure.

Algorithms and Flowcharts

Algorithms and FlowchartsDeva Singh This presentation covers all the basic fundamentals of Algorithms & Flowcharts.

(Examples included)

Similar to Negative Selection for Algorithm for Anomaly Detection (20)

Ijetr042309

Ijetr042309Engineering Research Publication IJETR, ER Publication, Research Papers, Free Journals, High Impact Journals, M.Tech Research Articles, Free Publication, UGC Journals,

Microarray Analysis

Microarray AnalysisJames McInerney Microarrays allow researchers to analyze gene expression across thousands of genes simultaneously. DNA probes are arrayed on a small glass or nylon slide, and labeled mRNA from samples is hybridized to the probes. Fluorescent scanning detects which genes are expressed. Data analysis includes normalization, distance metrics, clustering, and visualization to group genes with similar expression profiles and identify patterns of co-regulated genes. Microarrays enable functional genomics studies of development, disease, response to drugs or environmental factors, and more.

Probablistic Inference With Limited Information

Probablistic Inference With Limited InformationChuka Okoye The document presents a probabilistic approach to answering queries in sensor networks using limited and stochastic information. It uses a Bayesian network to model the relationships between sensor measurements, enemy agent locations, and whether a friendly agent is surrounded. Approximate inference is performed using Markov Chain Monte Carlo sampling to estimate the posterior probability of being surrounded given the sensor data. Simulation results show the algorithm can effectively handle noisy sensor measurements and provide useful estimates even when direct information is limited or unavailable.

General pipeline of transcriptomics analysis

General pipeline of transcriptomics analysisSanty Marques-Ladeira The document outlines the general pipeline for transcriptomics analysis based on microarray experiments. It discusses the main steps which include quality control, normalization, annotation, differential expression analysis, clustering, and supplemental analyses such as functional enrichment and transcription factor binding site analysis. Key points within each step are highlighted, such as common normalization and differential expression methods, different clustering algorithms, and tools used for enrichment and transcription factor analysis.

Anomaly detection

Anomaly detection철 김 This document discusses anomaly detection techniques. It defines anomaly detection as the identification of items, events or observations that do not conform to expected patterns in data mining. It then covers various anomaly detection methods including unsupervised, supervised and semi-supervised techniques. Specific algorithms discussed include LOF, RNN, and Twitter's Seasonal Hybrid ESD approach. Real-world applications of anomaly detection are also mentioned such as intrusion detection, fraud detection and system health monitoring.

Lecture 7: Recurrent Neural Networks

Lecture 7: Recurrent Neural NetworksSang Jun Lee - POSTECH EECE695J, "Deep Learning Fundamentals and Applications to Steelmaking Processes", 2017-11-10

- Contents: introduction to recurrent neural networks, LSTM, variants of RNN, implementation of RNN, case studies

- Video: https://youtu.be/pgqiEPb4pV8

Gene Expression Data Analysis

Gene Expression Data AnalysisJhoirene Clemente This document discusses analyzing and visualizing gene expression data. It defines key terms like genes and gene expression data. It also describes clustering gene expression data using k-means clustering to group genes based on similarity in a dataset of yeast cell cycle genes. Finally, it discusses visualizing gene expression data using techniques like vector fusion, nMDS, and PCA to project high-dimensional gene expression datasets into 2D or 3D spaces.

Cost Optimized Design Technique for Pseudo-Random Numbers in Cellular Automata

Cost Optimized Design Technique for Pseudo-Random Numbers in Cellular Automataijait In this research work, we have put an emphasis on the cost-effective design approach for high-quality pseudo-random numbers using one-dimensional Cellular Automata (CA) over Maximum Length CA. This work focuses on different complexities, e.g., space complexity, time complexity, design complexity and searching complexity, for the generation of pseudo-random numbers in CA. The optimization procedure for these associated complexities is commonly referred to as the cost-effective generation approach for pseudo-random numbers. The mathematical approach for the proposed methodology over the existing maximum length CA emphasizes better flexibility to fault coverage. The randomness quality of the generated patterns for the proposed methodology has been verified using Diehard Tests, which show that the randomness quality achieved by the proposed methodology is equal to that of the patterns generated by the maximum length cellular automata. The cost effectiveness results in a cheap hardware implementation for the pseudo-random pattern generator concerned. A short version of this paper has been published in [1].

Distributed Architecture of Subspace Clustering and Related

Distributed Architecture of Subspace Clustering and RelatedPei-Che Chang Distributed Architecture of Subspace Clustering and Related

Sparse Subspace Clustering

Low-Rank Representation

Least Squares Regression

Multiview Subspace Clustering

Deep learning from a novice perspective

Deep learning from a novice perspectiveAnirban Santara This is a set of slides that introduces the layman to Deep Learning and also presents a road-map for further studies.

Hierarchical Temporal Memory for Real-time Anomaly Detection

Hierarchical Temporal Memory for Real-time Anomaly DetectionIhor Bobak The presentation was given at the Belarus Big Data User Group. Link to the video (in Russian): https://www.youtube.com/watch?v=opa8UO_ldF8

Compressed Sensing In Spectral Imaging

Compressed Sensing In Spectral Imaging Distinguished Lecturer Series - Leon The Mathematician Professor Gonzalo R. Arce gave a lecture on "Compressed sensing in spectral imaging" in the Distinguished Lecturer Series - Leon The Mathematician.

More Information available at:

http://goo.gl/satkf

Gene Extrapolation Models for Toxicogenomic Data

Gene Extrapolation Models for Toxicogenomic DataNacho Caballero 1) The document describes using gene expression data from landmark genes to build predictive models for extrapolating the expression of regular genes not in the landmark set.

2) Three different model types are evaluated: linear regression, elastic net, and neural networks. Elastic net is shown to outperform linear regression in terms of signal-to-noise ratio for the extrapolated expressions.

3) The performance of the different extrapolation models is assessed on their ability to predict carcinogenicity classifiers, and the correlation between expressions from different microarray platforms is examined.

Oriented Tensor Reconstruction. Tracing Neural Pathways from DT-MRI

Oriented Tensor Reconstruction. Tracing Neural Pathways from DT-MRILeonid Zhukov This document presents a method for tracing neural pathways from diffusion tensor MRI (DT-MRI) data through oriented tensor reconstruction. It introduces DT-MRI and discusses previous work in tensor visualization and fiber tracing. The presented algorithm uses moving least squares filtering and fiber tracing to extract anatomical structures from DT-MRI data such as the corona radiata, corpus callosum, and cingulum bundle. Results demonstrate the algorithm can smoothly reconstruct recognizable brain structures. Future work includes additional method developments and validation.

Poster Presentation on "Artifact Characterization and Removal for In-Vivo Neu...

Poster Presentation on "Artifact Characterization and Removal for In-Vivo Neu...Md Kafiul Islam Background: In vivo neural recordings are often corrupted by different artifacts, especially in a less-constrained recording environment. Due to limited understanding of the artifacts that appear in in vivo neural data, it is more challenging to identify artifacts from neural signal components compared with other applications. The objective of this work is to analyze artifact characteristics and to develop an algorithm for automatic artifact detection and removal without distorting the signals of interest.

Proposed method: The proposed algorithm for artifact detection and removal is based on the stationary wavelet transform (SWT) with selected frequency bands of neural signals. The selection of frequency bands is based on the spectrum characteristics of in vivo neural data. Further, to make the proposed algorithm robust under different recording conditions, a modified universal-threshold value is proposed.

Results: Extensive simulations have been performed to evaluate the performance of the proposed algorithm in terms of both amount of artifact removal and amount of distortion to neural signals. The quantitative results reveal that the algorithm is quite robust for different artifact types and artifact-to-signal ratio.

Comparison with existing methods: Both real and synthesized data have been used for testing the proposed algorithm in comparison with other artifact removal algorithms (e.g. ICA, wICA, wCCA, EMD-ICA, and EMD-CCA) found in the literature. Comparative testing results suggest that the proposed algorithm performs better than the available algorithms.

Conclusion: Our work is expected to be useful for future research on in vivo neural signal processing and eventually to develop a real-time neural interface for advanced neuroscience and behavioral experiments.

Diagnosis of Faulty Sensors in Antenna Array using Hybrid Differential Evolut...

Diagnosis of Faulty Sensors in Antenna Array using Hybrid Differential Evolut...IJECEIAES In this work, a differential evolution based compressive sensing technique for detection of faulty sensors in linear arrays has been presented. The algorithm starts by taking linear measurements of the power pattern generated by the array under test. The difference between the collected compressive measurements and the measured healthy array field pattern is minimized using a hybrid differential evolution (DE). In the proposed method, the slow convergence of the DE based compressed sensing technique is accelerated with the help of the parallel coordinate descent algorithm (PCD). The combination of DE with PCD makes the minimization faster and more precise. Simulation results validate the ability to detect faulty sensors from a small number of measurements.

Uncertainties in large scale power systems

Uncertainties in large scale power systemsOlivier Teytaud Weather, opponents, geopolitics: so many uncertainties in such a case ? How to manage power systems in spite of these uncertainties, and how to decide investments.

Talk at Saint-Etienne in 2015; thanks to R. Leriche and to the "games and optimizations" days in Saint-Etienne.

Bias correction, and other uncertainty management techniques

Bias correction, and other uncertainty management techniquesOlivier Teytaud The document discusses various sources of uncertainty in power systems, including stochastic uncertainties like weather and non-stochastic uncertainties involving scenarios without probabilities. It proposes using portfolio methods that run multiple algorithms concurrently to address uncertainties, as no single algorithm consistently performs best across different problem instances. Portfolio methods can improve robustness over running a single algorithm by selecting the best response from the set of algorithm outputs.

Adaptive equalization

Adaptive equalizationKamal Bhatt The document discusses adaptive channel equalization using neural networks. It provides an overview of neural networks and their application to channel equalization. Specifically, it summarizes various neural network architectures that have been used for equalization, including multilayer perceptrons, functional link artificial neural networks, Chebyshev neural networks, and radial basis function networks. It compares the bit error rate performance of these different neural network equalizers with traditional linear equalizers such as LMS and RLS. Overall, the document finds that neural network equalizers can better handle nonlinear channel distortions compared to linear equalizers and that radial basis function networks provide particularly good performance for channel equalization applications.

20141003.journal club

20141003.journal clubHayaru SHOUNO - Researchers used a hierarchical convolutional neural network (CNN) optimized for object categorization performance to predict neural responses in higher visual cortex.

- The top layer of the CNN accurately predicted responses in inferior temporal (IT) cortex, and intermediate layers predicted responses in V4 cortex.

- This suggests that biological performance optimization directly shaped neural mechanisms in visual processing areas, as the CNN was not explicitly trained on neural data but emerged as predictive of responses in IT and V4.

More from Xavier Llorà (20)

Meandre 2.0 Alpha Preview

Meandre 2.0 Alpha PreviewXavier Llorà A quick overview of the seed for Meandre 2.0 series. It covers the main motivations moving forward and the disruptive changes introduced via the use of Scala and MongoDB

Soaring the Clouds with Meandre

Soaring the Clouds with MeandreXavier Llorà This document discusses cloud computing and the Meandre framework. It provides an overview of cloud concepts like public/private clouds and IaaS, PaaS, SaaS models. It describes NCSA's use of virtual machines and Eucalyptus cloud. Meandre is presented as a component-based framework that can orchestrate data-intensive applications across cloud resources through its dataflow model and scripting language. It aims to facilitate scaling applications to leverage elastic cloud infrastructure and integrate computation and data.

From Galapagos to Twitter: Darwin, Natural Selection, and Web 2.0

From Galapagos to Twitter: Darwin, Natural Selection, and Web 2.0Xavier Llorà One hundred and fifty years have passed since the publication of Darwin's world-changing manuscript "On the Origin of Species by Means of Natural Selection". Darwin's ideas have proven their power to reach beyond the biology realm, and their ability to define a conceptual framework which allows us to model and understand complex systems. In the mid 1950s and 60s the efforts of a scattered group of engineers proved the benefits of adopting an evolutionary paradigm to solve complex real-world problems. In the 70s, the emerging presence of computers brought us a new collection of artificial evolution paradigms, among which genetic algorithms rapidly gained widespread adoption. Currently, the Internet has propitiated an exponential growth of information and computational resources that are clearly disrupting our perception and forcing us to reevaluate the boundaries between technology and social interaction. Darwin's ideas can, once again, help us understand such disruptive change. In this talk, I will review the origin of artificial evolution ideas and techniques. I will also show how these techniques are, nowadays, helping to solve a wide range of applications, from life science problems to Twitter puzzles, and how high performance computing can make Darwin's ideas a routine tool to help us model and understand complex systems.

Large Scale Data Mining using Genetics-Based Machine Learning

Large Scale Data Mining using Genetics-Based Machine LearningXavier Llorà We are living in the petabyte era. We have larger and larger data to analyze, process and transform into useful answers for the domain experts. Robust data mining tools, able to cope with petascale volumes and/or high dimensionality while producing human-understandable solutions, are key in several domain areas. Genetics-based machine learning (GBML) techniques are perfect candidates for this task, among others, due to the recent advances in representations, learning paradigms, and theoretical modeling. If evolutionary learning techniques aspire to be a relevant player in this context, they need to have the capacity to process these vast amounts of data, and they need to process this data within a reasonable time. Moreover, massive computation cycles are getting cheaper and cheaper every day, allowing researchers to have access to unprecedented degrees of parallelization. Several topics are interlaced in these two requirements: (1) having the proper learning paradigms and knowledge representations, (2) understanding them and knowing when they are suitable for the problem at hand, (3) using efficiency enhancement techniques, and (4) transforming and visualizing the produced solutions to give back as much insight as possible to the domain experts are a few of them.

This tutorial will try to answer this question, following a roadmap that starts with the questions of what large means, and why large is a challenge for GBML methods. Afterwards, we will discuss different facets in which we can overcome this challenge: Efficiency enhancement techniques, representations able to cope with large dimensionality spaces, scalability of learning paradigms. We will also review a topic interlaced with all of them: how can we model the scalability of the components of our GBML systems to better engineer them to get the best performance out of them for large datasets. The roadmap continues with examples of real applications of GBML systems and finishes with an analysis of further directions.

Data-Intensive Computing for Competent Genetic Algorithms: A Pilot Study us...

Data-Intensive Computing for Competent Genetic Algorithms: A Pilot Study us...Xavier Llorà Data-intensive computing has positioned itself as a valuable programming paradigm to efficiently approach problems requiring processing very large volumes of data. This paper presents a pilot study about how to apply the data-intensive computing paradigm to evolutionary computation algorithms. Two representative cases (selectorecombinative genetic algorithms and estimation of distribution algorithms) are presented, analyzed, and discussed. This study shows that equivalent data-intensive computing evolutionary computation algorithms can be easily developed, providing robust and scalable algorithms for the multicore-computing era. Experimental results show how such algorithms scale with the number of available cores without further modification.

Scalabiltity in GBML, Accuracy-based Michigan Fuzzy LCS, and new Trends

Scalabiltity in GBML, Accuracy-based Michigan Fuzzy LCS, and new TrendsXavier Llorà The document summarizes a presentation given by Jorge Casillas on research related to scaling up genetic learning algorithms and fuzzy classifier systems. Specifically, it discusses:

1. An approach using evolutionary instance selection and stratification to extract rule sets from large datasets that balance prediction accuracy and interpretability.

2. Fuzzy-XCS, an accuracy-based genetic fuzzy system the author is developing that uses competitive fuzzy inference and represents rules as disjunctive normal forms to address challenges in credit assignment.

3. Open problems and opportunities in applying genetic learning at large scales, such as addressing chromosome size and efficient evaluation over large datasets.

Pittsburgh Learning Classifier Systems for Protein Structure Prediction: Sca...

Pittsburgh Learning Classifier Systems for Protein Structure Prediction: Sca...Xavier Llorà This document summarizes research using a Pittsburgh Learning Classifier System (LCS) called GAssist to predict protein structure by determining coordination numbers (CN). The researchers tested GAssist on a dataset of over 250,000 protein residues, comparing it to support vector machines, Naive Bayes, and C4.5 decision trees. While support vector machines achieved the best accuracy, GAssist produced more interpretable and compact rule sets at the cost of lower performance. The researchers analyzed the interpretability and scalability of GAssist for this challenging bioinformatics problem, identifying avenues for improving its accuracy while maintaining explanatory power.

Towards a Theoretical Towards a Theoretical Framework for LCS Framework fo...

Towards a Theoretical Towards a Theoretical Framework for LCS Framework fo...Xavier Llorà Alwyn Barry introduces the theoretical framework for LCS that Jan Drugowitsch is currently working on.

Learning Classifier Systems for Class Imbalance Problems

Learning Classifier Systems for Class Imbalance ProblemsXavier Llorà The document discusses learning classifier systems (LCS) for addressing class imbalance problems in datasets. It aims to enhance the applicability of LCS to knowledge discovery from real-world datasets that often exhibit class imbalance, where one class is represented by significantly fewer examples than other classes. The author proposes adapting parameters of the XCS learning classifier system, such as learning rate and genetic algorithm threshold, based on estimated class imbalance ratios within classifiers' niches in order to minimize bias towards majority classes and better handle small disjuncts representing minority classes.

A Retrospective Look at Classifier System Research

Xavier Llorà: Lashon Booker looks back at the history of LCS and how it connects to current and future efforts.

XCS: Current capabilities and future challenges

Xavier Llorà: The document discusses the XCS classifier system, which uses a combination of gradient-based techniques and evolutionary algorithms to learn predictive models from complex problems. It summarizes XCS's current capabilities in classification, function approximation, and reinforcement learning tasks. However, it notes there are still challenges to improve XCS's representations and operators, niching abilities, handling of dynamic problems, solution compactness, and development of hierarchical classifier systems.

Searle, Intentionality, and the Future of Classifier Systems

Xavier Llorà: David E. Goldberg reflects on the reality of social constructs and the future of learning classifier systems.

Computed Prediction: So far, so good. What now?

Xavier Llorà: This document discusses computed prediction in learning classifier systems (LCS). It addresses representing the payoff function Q(s,a) that maps state-action pairs to expected future payoffs. Specifically:

1) In computed prediction, each classifier has parameters w and the classifier prediction is computed as a parametrized function p(x,w) like a linear approximation.

2) Classifier weights are updated using the Widrow-Hoff rule online as the payoff function is learned.

3) Using a powerful approximator like tile coding to compute predictions allows the problem to potentially be solved by a single classifier, but evolution of different approximators per problem subspace may still
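Points 1) and 2) above can be illustrated with a minimal sketch, assuming a linear prediction p(x, w) = w0 + w·x and the plain Widrow-Hoff (delta) rule. The class name `LinearPredictionClassifier`, the helper `train_on_line`, the learning rate `eta`, and the toy payoff 2x + 1 are all hypothetical choices made for illustration; they are not taken from the slides.

```python
from typing import List, Sequence


class LinearPredictionClassifier:
    """Hypothetical classifier with computed prediction p(x, w) = w[0] + w[1:] . x."""

    def __init__(self, n_inputs: int, eta: float = 0.1) -> None:
        self.eta = eta                                  # learning rate (assumed value)
        self.w: List[float] = [0.0] * (n_inputs + 1)    # w[0] is the bias weight

    def predict(self, x: Sequence[float]) -> float:
        # Linear approximation of the payoff for input x.
        return self.w[0] + sum(wi * xi for wi, xi in zip(self.w[1:], x))

    def update(self, x: Sequence[float], payoff: float) -> None:
        # Plain Widrow-Hoff rule: w <- w + eta * (target - prediction) * input
        error = payoff - self.predict(x)
        self.w[0] += self.eta * error                   # bias input is the constant 1
        for i, xi in enumerate(x, start=1):
            self.w[i] += self.eta * error * xi


def train_on_line(epochs: int = 2000) -> LinearPredictionClassifier:
    """Fit the toy payoff 2*x + 1 online, one sample at a time."""
    clf = LinearPredictionClassifier(n_inputs=1)
    samples = [0.0, 0.25, 0.5, 0.75, 1.0]
    for _ in range(epochs):
        for x in samples:
            clf.update([x], 2.0 * x + 1.0)
    return clf
```

Calling `train_on_line()` returns a classifier whose weights approach the bias and slope of the toy payoff. In an actual LCS, each classifier in the population would keep its own weight vector and be updated only on the inputs it matches.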

NIGEL 2006 welcome

Xavier Llorà: This document provides information about the NCSA/IlliGAL Gathering on Evolutionary Learning (NIGEL 2006) conference. It discusses how the conference originated from a previous 2003 gathering. It thanks the organizers and participants and provides details about the agenda, which includes presentations on topics like classifier systems and discussions around applications and techniques of evolutionary learning.

Linkage Learning for Pittsburgh LCS: Making Problems Tractable

Xavier Llorà: Presentation by Xavier Llorà, Kumara Sastry, and David E. Goldberg showing how linkage learning is possible on Pittsburgh-style learning classifier systems.

Meandre: Semantic-Driven Data-Intensive Flows in the Clouds

Xavier Llorà:

- Meandre is a semantic-driven data-intensive workflow infrastructure for distributed computing. It allows users to assemble modular components into complex workflows (flows) in a visual programming tool or using a scripting language called ZigZag.

- Workflows are composed of components, which can be executable or control components. Executable components perform computational tasks when data is available, while control components pause workflows for user interactions. Components are described semantically using ontologies to separate functionality from implementation.

- Data availability drives workflow execution in Meandre. When required inputs are available, components will fire and produce outputs to make data available for downstream components. This dataflow approach aims to make workflows transparent, intuitive, and reusable across

ZigZag: The Meandring Language

Xavier Llorà: ZigZag is a new language for describing data-intensive workflows. It aims to make the Meandre infrastructure easier to use by allowing users to assemble complex data flows. The language has a new syntax and compiles workflows that can then be run on Meandre to process large datasets.

HUMIES 2007 Bronze Winner: Towards Better than Human Capability in Diagnosing...

Xavier Llorà: These slides were presented at GECCO 2007 as part of the final stage of the HUMIES awards. The work was awarded the Bronze medal.

Do not Match, Inherit: Fitness Surrogates for Genetics-Based Machine Learning...

Xavier Llorà: A byproduct benefit of using probabilistic model-building genetic algorithms is the creation of cheap and accurate surrogate models. Learning classifier systems, and genetics-based machine learning in general, can greatly benefit from such surrogates, which may replace the costly procedure of matching a rule against large data sets. In this paper we investigate the accuracy of such surrogate fitness functions when coupled with the probabilistic models evolved by the x-ary extended compact classifier system (xeCCS). To that end, we show that the probabilistic models must be able to represent all the accurate basis functions required for creating an accurate surrogate. We also introduce a procedure to transform populations of rules into dependency structure matrices (DSMs), which allows building accurate models of overlapping building blocks, a necessary condition for accurately estimating the fitness of the evolved rules.

Towards Better than Human Capability in Diagnosing Prostate Cancer Using Infr...

Xavier Llorà: Cancer diagnosis is essentially a human task. Almost universally, the process requires the extraction of tissue (biopsy) and examination of its microstructure by a human. To improve diagnoses based on limited and inconsistent morphologic knowledge, a new approach has recently been proposed that uses molecular spectroscopic imaging to utilize microscopic chemical composition for diagnoses. In contrast to visible imaging, the approach results in very large data sets as each pixel contains the entire molecular vibrational spectroscopy data from all chemical species. Here, we propose data handling and analysis strategies to allow computer-based diagnosis of human prostate cancer by applying a novel genetics-based machine learning technique (NAX). We apply this technique to demonstrate both fast learning and accurate classification that, additionally, scales well with parallelization. Preliminary results demonstrate that this approach can improve current clinical practice in diagnosing prostate cancer.
