[NeurIPS2020 (spotlight)] Generalization bound of globally optimal non convex neural network training: Transportation map estimation by infinite dimensional Langevin dynamics

- 1. Taiji Suzuki The University of Tokyo / AIP-RIKEN NeurIPS2020 Generalization bound of globally optimal non-convex neural network training: Transportation map estimation by infinite dimensional Langevin dynamics 1

- 2. Summary Neural network optimization • We formulate NN training as an infinite dimensional gradient Langevin dynamics in RKHS. ➢“Lift” of noisy gradient descent trajectory. • Global optimality is ensured. ➢Geometric ergodicity + time discretization error • Generalization error bound + Excess risk bound. ➢(i) 1/ 𝑛 gen error. (ii) Fast learning rate of excess risk. 2 • Finite/infinite width can be treated in a unifying manner. • Good generalization error guarantee → Different from NTK and mean field analysis.

- 3. Difficulty of NN optimization Optimization of neural network is “difficult” because of 3 Nonconvexity High-dimensionality + •Neural tangent kernel: ➢ Take infinite width asymptotics as 𝑛 → ∞. ➢ Benefit of NN is lost compared with kernel methods. •Mean field analysis: ➢ Take infinite width asymptotics to guarantee convergence. ➢ Its generalization error is not well understood. •(Usual) gradient Langevin dynamics: ➢ Suffer from curse of dimensionality. Our formulation: Infinite dimensional gradient Langevin dynamics.

- 4. Infinite dim neural network • 2-layer NN: direct expression 4 (training loss) • 2-layer NN: transportation map expression (infinite width) (integral representation) Also includes • DNN • ResNet etc. 𝑎𝑚 = 0 (𝑚 > 𝑀) → finite width network

- 5. Mean field model 5 Expectation w.r.t. prob. density 𝜌 of (𝑎, 𝑤): Optimization of 𝑓 ⇔ Optimization of 𝜌 Continuity equation 𝑣𝑡: gradient Convergence is guaranteed for 𝜌𝑡 with density. (Infinite width) (movement of each particle) (distribution) [Nitanda&Suzuki, 2017][Chizat&Bach, 2018][Mei, Montanari&Nguyen, 2018] Each neuron corresponds to one particle. One partilce

- 6. “Lift” of neural network training 6 Transportation map formulation: (finite width) 𝜌0 has a finite discrete support → finite width network Finite/Infinite width can be treated in a unifying manner. (unlike existing frame-work such as NTK and mean field)

- 7. Infinite-dim non-convex optimization 7 Ex. • ℋ: 𝐿2(𝜌) • ℋ𝐾: RKHS (e.g., Sobolev sp.) Optimal solution nonconvex We utilize gradient Langevin dynamics in a Hilbert space to optimize the objective.

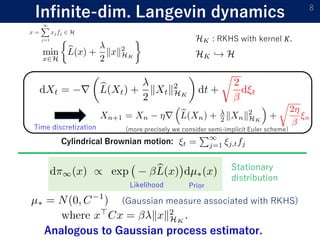

- 8. Infinite-dim. Langevin dynamics 8 : RKHS with kernel 𝐾. Cylindrical Brownian motion: Time discretization Analogous to Gaussian process estimator. (Gaussian measure associated with RKHS) Stationary distribution Likelihood Prior (more precisely we consider semi-implicit Euler scheme)

- 9. Infinite dimensional setting Hilbert space 9 RKHS structure Assumption (eigenvalue decay) (not essential, can be relaxed to 𝜇𝑘 ∼ 𝑘−𝑝 for 𝑝 > 1)

- 10. Risk bounds of NN training 10 Gen. error: Excess risk: Time discretization Optimization method (Infinite dimensional GLD):

- 11. Error bound 11 Thm (Generalization error bound) with probability 1 − 𝛿. Opt. error: [Muzellec, Sato, Massias, Suzuki, arXiv:2003.00306 (2020)] Ο(1/ 𝑛) PAC-Bayesian stability bound [Rivasplata, Kuzborskij, Szepesvári, and Shawe-Taylor, 2019] • Loss function ℓ is “sufficiently smooth.” • Loss and its gradients are bounded: Assumption (geometric ergodicity + time discretization) Λ𝜂 ∗ : spectral gap

- 12. Fast rate: general result 12 Thm (Excess risk bound: fast rate) Let and . Can be faster than Ο(1/ 𝑛)

- 13. Example: classification & regression 13 Strong low noise condition: For sufficiently large 𝑛 and any 𝛽 ≤ 𝑛, Classification Regression Model: Excess classification error

- 14. Summary Neural network optimization • We formulate NN training as an infinite dimensional gradient Langevin dynamics in RKHS. ➢“Lift” of noisy gradient descent trajectory. • Global optimality is ensured. ➢Geometric ergodicity + time discretization error • Generalization error bound + Excess risk bound. ➢(i) 1/ 𝑛 gen error. (ii) Fast learning rate of excess risk. 14 • Finite/infinite width can be treated in a unifying manner. • Good generalization error guarantee → Different from NTK and mean field analysis.

![Mean field model 5

Expectation w.r.t. prob. density 𝜌 of (𝑎, 𝑤):

Optimization of 𝑓 ⇔ Optimization of 𝜌

Continuity equation

𝑣𝑡: gradient

Convergence is guaranteed for 𝜌𝑡 with density.

(Infinite width)

(movement of

each particle)

(distribution)

[Nitanda&Suzuki, 2017][Chizat&Bach, 2018][Mei, Montanari&Nguyen, 2018]

Each neuron corresponds

to one particle.

One partilce](https://image.slidesharecdn.com/neurips2020spotlight-210331133014/85/NeurIPS2020-spotlight-Generalization-bound-of-globally-optimal-non-convex-neural-network-training-Transportation-map-estimation-by-infinite-dimensional-Langevin-dynamics-5-320.jpg)

![Error bound 11

Thm (Generalization error bound)

with probability 1 − 𝛿.

Opt. error:

[Muzellec, Sato, Massias, Suzuki, arXiv:2003.00306 (2020)]

Ο(1/ 𝑛)

PAC-Bayesian stability bound [Rivasplata, Kuzborskij, Szepesvári, and Shawe-Taylor, 2019]

• Loss function ℓ is “sufficiently smooth.”

• Loss and its gradients are bounded:

Assumption

(geometric ergodicity + time discretization)

Λ𝜂

∗ : spectral gap](https://image.slidesharecdn.com/neurips2020spotlight-210331133014/85/NeurIPS2020-spotlight-Generalization-bound-of-globally-optimal-non-convex-neural-network-training-Transportation-map-estimation-by-infinite-dimensional-Langevin-dynamics-11-320.jpg)