Ad

New Features in Neo4j 3.4 / 3.3 - Graph Algorithms, Spatial, Date-Time & Visualization

- 1. Release Demo: Neo4j 3.4 & Bloom Michael Hunger (@mesirii) Developer Relations Neo4j CPH- June 2018 3

- 2. Graph Transactions Graph Analytics Data Integration Development & Admin Analytics Tooling Drivers & APIs Discovery & Visualization Developers Admins Applications Business Users Data Analysts Data Scientists Enterprise Data Hub Native Graph Platform: Tools for Many Users

- 3. Neo4j: Enabling the Connected Enterprise Consumers of Connected Data AI & Graph Analytics • Sentiment analysis • Customer segmentation • Machine learning • Cognitive computing • Community detection Transactional Graphs • Fraud detection • Real-time recommendations • Network and IT operations management • Knowledge Graphs • Master Data Management Discovery & Visualization • Fraud detection • Network and IT operations • Product information management • Risk and portfolio analysisData Scientists Business Users Applications

- 4. Neo4j 3.3 and 3.4 Latest Innovations

- 5. 3.3 Oct 2017

- 6. Development & Administration Analytics Tooling Graph Analytics Graph Transactions Data Integration Discovery & VisualizationDrivers & APIs AI Neo4j Database 3.3 • 50% faster writes • Real-time transactions and traversal applications Review: The Neo4j Graph Platform, Fall 2017 Neo4j Desktop, the developers’ mission control console • Free, registered local license of Enterprise Edition • APOC library installer • Algorithm library installer Data Integration • Neo4j ETL reveals RDBMS hidden relationships upon importing to graph • Data Importer for fast data ingestion Graph Analytics • Graph Algorithms support Community Detection, Centrality and Path Finding • Cypher for Apache Spark from openCypher.org supports graph composition (sub-graphs) and algorithm chaining Discovery & Visualization • Integration with popular visualization vendors • Neo4j Browser and custom visualizations allow graph exploration Bolt with GraphQL and more • Secure, Causal Clustering • High-speed analytic processing • On-prem, Docker & cloud delivery

- 7. “Least Connected” load balancing Faster & more memory efficient runtime Batch generation of IDs Schema operations now take local locks Page cache metadata moved off heap Native GB+ Tree numeric indexes Bulk importer paging & memory improvements Dynamically reload config settings without restarting Neo4j Admin & Config Storage & Indexing Memory Management Kernel & Transactions Cypher Engine Drivers & Bolt Protocol Neo4j 3.3 Performance Improvements

- 8. Concurrent/Transactional Write Performance 25000 20000 15000 10000 5000 0 Neo4j 2.2 Neo4j 2.3 Neo4j 3.0 Neo4j 3.1 Neo4j 3.2 Neo4j 3.3 69% 31% 59% 38% 55% (Simulates Real-World Workloads)

- 9. ` RR RR RR RRRRRR READ REPLICAS London ` C C RR RR RR RRRRRR READ REPLICAS New York Multi-Data Center Clustering with Secure Transit

- 10. Neo4j Desktop 1.0 • Mission control for developers • Connect to both local and remote Neo4j servers • Free with registration • Includes development license for Neo4j Enterprise Edition • Graph Apps • Keeps you up to date with latest versions, plugins, etc. • https://neo4j.com/download

- 11. Finds the optimal path or evaluates route availability and quality • Single Source Short Path • All-Nodes SSP • Parallel paths Evaluates how a group is clustered or partitioned • Label Propagation • Union Find • Strongly Connected Components • Louvain • Triangle-Count Determines the importance of distinct nodes in the network • PageRank • Betweeness • Closeness • Degree Data Science Algorithms

- 12. Project Goals • high performance graph algorithms • user friendly (procedures) • support graph projections • augment OLTP with OLAP • integrate efficiently with live Neo4j database (read, write, Cypher) • common Graph API to write your own

- 13. Usage 1. Call as Cypher procedure

- 14. Usage 1. Call as Cypher procedure 2. Pass in specification (Label, Prop, Query) and configuration

- 15. Usage 1. Call as Cypher procedure 2. Pass in specification (Label, Prop, Query) and configuration 3. ~.stream variant returns (a lot) of results CALL algo.<name>.stream('Label','TYPE',{conf}) YIELD nodeId, score

- 16. Usage 1. Call as Cypher procedure 2. Pass in specification (Label, Prop, Query) and configuration 3. ~.stream variant returns (a lot) of results CALL algo.<name>.stream('Label','TYPE',{conf}) YIELD nodeId, score 1. non-stream variant writes results to graph and returns statistics CALL algo.<name>('Label','TYPE',{conf});

- 17. Usage 1. Call as Cypher procedure 2. Pass in specification (Label, Prop, Query) and configuration 3. ~.stream variant returns (a lot) of results CALL algo.<name>.stream('Label','TYPE',{conf}) YIELD nodeId, score 1. non-stream variant writes results to graph and returns statistics CALL algo.<name>('Label','TYPE',{conf}); 1. Cypher projection: pass in Cypher for node and relationship lists CALL algo.<name>( 'MATCH ... RETURN id(n)', 'MATCH (n)-->(m) RETURN id(n), id(m)', { graph:'cypher' } )

- 18. Architecture 1. Load Data in parallel from Neo4j 2. Store in efficient data structure 3. Run graph algorithm in parallel using Graph API 4. Write data back in parallel Neo4j 1, 2 Algorithm Datastructures 4 3 Graph API

- 19. TheAlgorithms Centralities • PageRank (baseline) • Betweeness • Closeness • Degree

- 20. Example: PageRank CALL algo.pageRank('Page', 'LINKS', { iterations:20, dampingFactor:0.85, write: true, writeProperty:"pagerank"} ) YIELD nodes, iterations, loadMillis, computeMillis, writeMillis, dampingFactor, write, writeProperty

- 21. Clustering • Label Propagation • Louvain (Phase2) • Union Find / WCC • Strongly Connected Components • Triangle- Count/Clustering CoeffTheAlgorithms

- 22. CALL algo.unionFind('User', 'FRIEND', { write:true, partitionProperty:'partition'} ) YIELD nodes, setCount, loadMillis, computeMillis, writeMillis Example: UnionFind (CC)

- 23. Path-Expansion / Traversal • Single Short Path • All-Nodes SSP • Parallel BFS / DFS TheAlgorithms

- 24. MATCH(start:Location {name:'A'}) CALL algo.deltaStepping.stream(start, 'cost', 3.0) YIELD nodeId, distance RETURN nodeId, distance ORDER LIMIT 20 Example: Delta Stepping

- 25. Reading Material • Thanks to Amy Hodler • 13 Top Resources on Graph Theory • neo4j.com/blog/ top-13-resources-graph-theory-algorithms/

- 26. GraphAware NLP Comprehensive Suite of NLP Tools for Neo4j

- 28. Trump Twitter Analysis (M. David Allen)

- 29. ● Import Trump Twitter Archive ● Extract Hashtags and Mentions ● Some Analytics Queries ● Use NLP (Caution) ● for Entities and Sentiment ● Some more queries Trump is Dooming the World one Tweet(storm) at a Time https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f

- 30. WITH [ archive.com/data/realdonaldtrump/2018.json',..] as urls UNWIND urls AS url CALL apoc.load.json(url) YIELD value as t CREATE (tweet:Tweet { id_str: t.id_str, text: t.text, created_at: t.created_at, retweets: t.retweet_count, favorites: t.favorite_count, retweet: t.is_retweet, reply: t.in_reply_to_user_id_str, source: t.source }) RETURN count(t); Importing Data https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f

- 31. MATCH (t:Tweet) WHERE t.text contains '#' WITH t, apoc.text.regexGroups(t.text, "#([w_]+)") as matches UNWIND matches as match WITH t, match[1] as hashtag MERGE (h:Tag { name: toUpper(hashtag) }) ON CREATE SET h.text = hashtag MERGE (h)<-[:TAGGED]-(t) RETURN count(h); Importing Data - Hashtags https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f

- 32. MATCH (t:Tweet) WHERE t.text CONTAINS '@' WITH t, apoc.text.regexGroups(t.text, "@([w_]+)") as matches UNWIND matches as match WITH t, match[1] as mention MERGE (u:User { name: toLower(mention) }) ON CREATE SET u.text = mention MERGE (u)<-[:MENTIONED]-(t) RETURN count(u); Importing Data - Mentions https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f

- 33. MATCH (tag:Tag)<-[:TAGGED]-(tw) RETURN tag.name, count(*) as freq ORDER BY freq desc LIMIT 10; MATCH (u:User)<-[:MENTIONED]-(tw) RETURN u.name, count(*) as freq ORDER BY freq desc LIMIT 10; Query Data – Tags / Mentions https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f

- 35. NLP Knowledge Studio by GraphAware

- 38. NLP Knowledge Studio by GraphAware

- 39. NLP Knowledge Studio by GraphAware

- 40. New in Town 3.4 May 2018

- 43. Support for Time and Date +

- 44. • property storage: local date and time, date & time with timezones • durations • indexed • range scans: $before < event.time < $after • lots of possible datetime formats including weeks, quarters • ordering • Events, Time Tracking, History, Auditing, ... DateTime https://neo4j.com/docs/developer-manual/current/cypher/syntax/temporal/

- 45. DateTime Types & Functions Support date Support time Support timezone Date x Time x x LocalTime x DateTime x x x LocalDateTime x x

- 46. DateTime Types & Functions date() time.transaction() localtime.statement() datetime.realtime() datetime.realtime('Europe/Berlin') "db.temporal.timezone" Current date, using statement clock Current time, using transaction clock Current local time, using statement clock Current date and time, using realtime clock This datetime instance will have the default timezone of the database Current date and time, using realtime clock, in Europe/Berlin timezone Neo4j setting to configure default timezone*, taking a String, e.g. "Europe/Berlin".

- 47. DateTime Types & Functions date("2018-01-01") localtime("12:00:01.003") time("12:00:01.003+01:00") datetime("2018-01-01T12:00:01.003[Europe/Berlin]")

- 48. DateTime Types & Functions date({year: 2018, month: 1, day: 1}) localtime({hour: 12, minute: 0, second 1, millisecond: 3}) time({hour: 12, minute: 0, second 1, millisecond: 3, timezone: '+01:00'}) datetime({year: 2018, month: 1, day: 1, hour: 12, minute: 0, second 1, millisecond: 3, timezone: 'Europe/Berlin'})

- 49. DateTime Types & Functions - Duration a = localdatetime("2018-01-01T00:00") b = localdatetime("2018-02-02T01:00") duration.between(a, b) -> (1M, 1D, 3600s, 0ns) duration.inMonths(a, b) -> (1M, 0D, 0s, 0ns) duration.inDays(a, b) -> (0M, 32D, 0s, 0ns) duration.inSeconds(a, b) -> (0M, 0D, 2768400s, 0ns)

- 50. DateTime Types & Functions - Duration a = localdatetime("2018-01-01T00:00") b = localdatetime("2018-02-02T01:00") duration.between(a, b) -> (1M, 1D, 3600s, 0ns) duration.inMonths(a, b) -> (1M, 0D, 0s, 0ns) duration.inDays(a, b) -> (0M, 32D, 0s, 0ns) duration.inSeconds(a, b) -> (0M, 0D, 2768400s, 0ns) // Maths instant + duration instant – duration duration * number duration / number

- 51. DateTime Types & Functions - toString toString Date 2018-05-07 Time 15:37:20.05+02:00 LocalTime 15:37:20.05 DateTime 2018-05-07T15:37:20.05+02:00[Europe/Stockholm] LocalDateTime 2018-05-07T15:37:20.05 Duration P12Y5M14DT16H13M10.01S Prints all temporal types in a format that can be parsed back

- 52. DateTime Types & Functions - Pitfalls • Don’t compare Durations. Add them to the same instant and compare the results instead. date + dur < date + dur2 • Don’t subtract instants. Use duration.between. duration.between(date1, date2) • Keep predicates simple to leverage indexing. MATCH (n), (m) WHERE datetime({date: n.date, time: m.time}) = dt MATCH (n), (m) WHERE n.date = date(dt) AND m.time = time(dt)

- 53. Support for Geospatial Search + medium.com/neo4j/whats-new-in-neo4j-spatial-features-586d69cda8d0

- 54. Geospatial Graph Queries – Coord Systems Explicit CRS: • point({x:1, y:2, z:3, crs:'wgs-84-3d'}) • point({x:1, y:2, z:3, srid:4979}) Inferred CRS: • point({x:1, y:2}) => 'cartesian' • point({x:1, y:2, z:3}) => 'cartesian-3d' • point({latitude:2, longitude:1}) => 'wgs-84' • point({latitude:2, longitude:1, height:1000}) => 'wgs-84-3d'

- 55. Geospatial Graph Queries – Index Neo4j 3.2 introduced the GBPTree: • Lock-free writes (high insert performance) • Good read performance (comparable) Neo4j 3.3 introduced the NativeSchemaNumberIndex: • And the FusionSchemaIndex • Allows multiple types to exist in one logical index Neo4j 3.4 Spatial Index / Date Time Index • Different indexes for coordinate system • Hilbert Space Filling Curves

- 57. Geospatial Graph Queries 9 index 5 hits

- 60. Performance

- 61. Neo4j 3.3 Neo4j 3.4 70% Faster 250 500 0 750 1000 Neo4j 3.2 Reads – Neo4j Enterprise Cypher Runtime 10% Faster Mixed Workload Read Benchmark

- 62. Supercharging Graph Writes One Component: 80% of transactional write overhead (!!!) ?QM ,. Index Insertion

- 63. ACID Optimized for graph Fast Reads ~10x Faster Writes Neo4j GB+ Tree Index Label Groupings (Neo4j 3.2) Numerics (Neo4j 3.3) Strings (Neo4j 3.4) QM ,. External Index Provider Native Neo4j Index Supercharging Graph Writes

- 64. 68 Writes with Native String Indexes 500% improvement Neo4j 3.4 RC1 Performance

- 65. Scalability

- 66. Multi-Clustering Support for Global Internet Apps Horizontally partition graph by domain (country, product, customer, data center) 70 Multi-tenancy Geo Partitioning Write Scaling Driver Support sa cluster uk cluster us_east cluster hk cluster

- 67. Ops & Admin

- 68. LDAP & Active Directory User & Roles Procedure Access Controls Security: Property Blacklisting added Kerberos SSO Certs Intra-Cluster Encryption Security Event Logging TLS Wire Encryption SSN: “043-56-8834” 0000“043-56-8834” Property Blacklisting

- 70. Rolling Upgrades 74 3.4 3.4 3.44.0 4.0 4.0 Auto Cache Reheating Upgrade to new versions with zero downtime Store upgrades may require downtime but can be done subsequently For Restarts, Restores, and Cluster Expansion

- 71. 75 3.4 Features By Edition Community Edition Enterprise Edition Date / Time data types ■ ■ 3D Geospatial data types ■ ■ Performance Improvements Native String Indexes – up to 5x faster writes ■ ■ 2x faster backups ■ Improved Cypher runtime Fast Faster 100B+ object bulk importer ■ Resumable Enterprise Scaling & Administration Multi-Clustering (partitioning of clusters) ■ Automatic cache warming ■ Rolling upgrades ■ Resumable copy/restore cluster member ■ New diagnostic metrics and support tools ■ Security: Property blacklisting by user/role ■

- 72. Development & Administration Analytics Tooling Graph Analytics Graph Transactions Data Integration Discovery & VisualizationDrivers & APIs AI Neo4j Database 3.4 • 70% faster Cypher • Native String Indexes (up to 5x faster writes) • 100B+ bulk importer The Neo4j Graph Platform, Summer 2018 Improved Admin Experience • Rolling upgrades • 2X faster backups • Cache Warming on startup • Improved diagnostics Morpheus for Apache Spark • Graph analytics in the data lake • In-memory Spark graphs from Apache Hadoop, Hive, Gremlin and Spark • Save graphs into Neo4j • High-speed data exchange between Neo4j & data lake • Progressive analysis using named graphs Graph Data Science • High speed graph algorithms Neo4j Bloom • New graph illustration and communication tool for non- technical users • Explore and edit graph • Search-based • Create storyboards • Foundation for graph data discovery • Integrated with graph platform Multi-Cluster routing built into Bolt drivers • Date/Time data type • 3-D Geospatial search • Secure, Horizontal Multi-Clustering • Property Blacklisting Security

- 73. Introducing A Brand New Graph Exploration Product

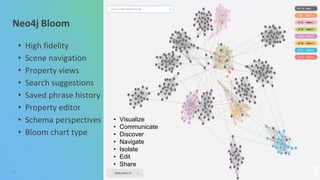

- 74. Neo4j Bloom

- 75. Neo4j Bloom 79 • High fidelity • Scene navigation • Property views • Search suggestions • Saved phrase history • Property editor • Schema perspectives • Bloom chart type • Visualize • Communicate • Discover • Navigate • Isolate • Edit • Share

- 76. 80 Neo4j Bloom Features • Prompted Search • Property Browser & editor • Category icons and color scheme • Pan, Zoom & Select

- 77. Advancing the Platform 81 Native graph architecture extends scale, use cases and performance • Neo4j Database 3.4 Shipping May, 2018 New products for new users • Neo4j Bloom visualization & storyboard tool for business Shipping in June, 2018

![WITH [ archive.com/data/realdonaldtrump/2018.json',..]

as urls

UNWIND urls AS url

CALL apoc.load.json(url) YIELD value as t

CREATE (tweet:Tweet {

id_str: t.id_str, text: t.text,

created_at: t.created_at, retweets: t.retweet_count,

favorites: t.favorite_count, retweet: t.is_retweet,

reply: t.in_reply_to_user_id_str, source: t.source

}) RETURN count(t);

Importing Data

https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-30-320.jpg)

![MATCH (t:Tweet) WHERE t.text contains '#'

WITH t,

apoc.text.regexGroups(t.text, "#([w_]+)") as matches

UNWIND matches as match

WITH t, match[1] as hashtag

MERGE (h:Tag { name: toUpper(hashtag) })

ON CREATE SET h.text = hashtag

MERGE (h)<-[:TAGGED]-(t)

RETURN count(h);

Importing Data - Hashtags

https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-31-320.jpg)

![MATCH (t:Tweet) WHERE t.text CONTAINS '@'

WITH t,

apoc.text.regexGroups(t.text, "@([w_]+)") as

matches

UNWIND matches as match

WITH t, match[1] as mention

MERGE (u:User { name: toLower(mention) })

ON CREATE SET u.text = mention

MERGE (u)<-[:MENTIONED]-(t)

RETURN count(u);

Importing Data - Mentions

https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-32-320.jpg)

![MATCH (tag:Tag)<-[:TAGGED]-(tw)

RETURN tag.name, count(*) as freq

ORDER BY freq desc LIMIT 10;

MATCH (u:User)<-[:MENTIONED]-(tw)

RETURN u.name, count(*) as freq

ORDER BY freq desc LIMIT 10;

Query Data – Tags / Mentions

https://medium.com/@david.allen_3172/using-nlp-in-neo4j-ac40bc92196f](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-33-320.jpg)

![DateTime Types & Functions

date("2018-01-01")

localtime("12:00:01.003")

time("12:00:01.003+01:00")

datetime("2018-01-01T12:00:01.003[Europe/Berlin]")](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-47-320.jpg)

![DateTime Types & Functions - toString

toString

Date 2018-05-07

Time 15:37:20.05+02:00

LocalTime 15:37:20.05

DateTime 2018-05-07T15:37:20.05+02:00[Europe/Stockholm]

LocalDateTime 2018-05-07T15:37:20.05

Duration P12Y5M14DT16H13M10.01S

Prints all temporal types in a format that can be parsed back](https://image.slidesharecdn.com/neo4j-34-meetup-180623073429/85/New-Features-in-Neo4j-3-4-3-3-Graph-Algorithms-Spatial-Date-Time-Visualization-51-320.jpg)

![Get & Download Wondershare Filmora Crack Latest [2025]](https://cdn.slidesharecdn.com/ss_thumbnails/revolutionizingresidentialwi-fi-250422112639-60fb726f-250429170801-59e1b240-thumbnail.jpg?width=560&fit=bounds)