NoSQL Riak MongoDB Elasticsearch - All The Same?

Gives a general introduction to NoSQL and to modeling data with JSON. Goes on to compare MongoDB, Riak and Elasticsearch, which seem to be the same at first sight but are in fact quite different. Presented at JavaLand.
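To make the comparison concrete, here is a minimal Python sketch that is not taken from the slides. It models a hypothetical order as a single JSON aggregate and then accesses it in three simplified ways. First, as an opaque value looked up by key, roughly how a key-value store like Riak treats data. Second, by filtering on document fields, roughly what a document store like MongoDB offers. Third, through an inverted index of terms, the structure behind full-text search in Elasticsearch. The schema, data and function names are all hypothetical, and the plain dicts and lists are in-memory stand-ins, not the client APIs of any of the three products.

```python
import json

# Toy in-memory stand-ins; these are NOT the Riak, MongoDB or Elasticsearch APIs.
# Hypothetical order modeled as one JSON aggregate: the line items are embedded
# in the order instead of living in a separate table, so no join is needed.
order = {
    "id": "order-42",
    "customer": {"name": "Alice", "city": "Hamburg"},
    "items": [
        {"product": "NoSQL Distilled", "quantity": 1, "price": 30.0},
        {"product": "Elasticsearch Guide", "quantity": 2, "price": 25.0},
    ],
    "note": "please deliver fast",
}

# 1. Key-value style (Riak-like): the value is an opaque blob, found only by key.
kv_store = {order["id"]: json.dumps(order).encode("utf-8")}

def kv_get(key):
    """Fetch by key and decode; the store itself knows nothing about the fields."""
    return json.loads(kv_store[key].decode("utf-8"))

# 2. Document style (MongoDB-like): the store understands fields and can filter on them.
doc_store = [order]

def find_by_field(path, value):
    """Return documents whose nested field (dotted path, e.g. 'customer.city') equals value."""
    results = []
    for doc in doc_store:
        current = doc
        for part in path.split("."):
            current = current.get(part) if isinstance(current, dict) else None
        if current == value:
            results.append(doc)
    return results

# 3. Search style (Elasticsearch-like): an inverted index maps each term
#    to the ids of the documents that contain it.
def build_inverted_index(docs, field):
    index = {}
    for doc in docs:
        for term in doc[field].lower().split():
            index.setdefault(term, set()).add(doc["id"])
    return index

def order_total(doc):
    """Everything needed is inside the aggregate, so the total needs no join."""
    return sum(item["quantity"] * item["price"] for item in doc["items"])

if __name__ == "__main__":
    print(order_total(kv_get("order-42")))            # 80.0
    print(len(find_by_field("customer.city", "Hamburg")))  # 1
    print(build_inverted_index(doc_store, "note")["deliver"])  # {'order-42'}
```

The same JSON document supports very different kinds of queries depending on how the store indexes it; the real products add features such as replication, indexing options and query languages on top of these basic access patterns.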

Recommended

NoSQL and Architectures

By Eberhard Wolff. Eberhard Wolff discusses NoSQL databases and architectures. He explains that NoSQL databases give up joins in exchange for scalability by storing related data together rather than spreading it across tables. According to the CAP theorem, this allows for high availability at the cost of accepting only eventual consistency. Architects taking a polyglot approach must choose the right database for each use case, weighing scalability, flexibility and operational trade-offs.

Heroku

By Eberhard Wolff. Heroku is a platform as a service (PaaS) that originally started as a Ruby PaaS but now also supports Node.js, Clojure, Grails, Scala, and Python. It uses the Git version control system for deployment and a dyno process model for scaling applications. While it is flexible, allowing custom buildpacks and configuration via environment variables, there are also restrictions such as a maximum source code size and memory limits for dyno processes.

High Availability and Scalability: Too Expensive! Architectures for Future E...

By Eberhard Wolff. The document discusses high availability and scalability approaches for enterprise systems. Traditionally, enterprises rely on highly reliable hardware, small clusters, and standby data centers. However, this approach is expensive and systems can still fail. The document proposes using virtualization, stateless servers, and NoSQL databases to achieve high availability and scalability at lower cost. Servers are provisioned as "Phoenix servers" that can be easily recreated if they fail, without relying on expensive reliable hardware. Data is distributed across servers for redundancy and fine-grained scaling. This software-based approach provides better performance and availability compared to traditional hardware-centric methods.

Data Science at Scale with Apache Spark and Zeppelin Notebook

By Carolyn Duby. How to get started with data science at scale, using Apache Spark to clean, analyze, discover, and build models on large data sets, and using Zeppelin to record analyses to encourage peer review and reuse of analysis techniques.

Meetup Crash Course: Cassandra Data Modelling

By Erick Ramirez. A crash course in Cassandra Data Modelling presented at the Melbourne Cassandra Meetup (bit.ly/1U9mNb7). The essentials pack to get you started on your journey to becoming The Next Top Model-ler!

Micro Services - Smaller is Better?

By Eberhard Wolff. This presentation offers advice on how to succeed with microservices: Which size is right? How should a project start? What about refactoring?

Boston Future of Data Meetup: May 2017: Spark Introduction with Credit Card F...

By Carolyn Duby. Presented at the Future of Data Meetup in Boston in May 2017. An introduction to Apache Spark followed by a sample credit card fraud demo using Spark, NiFi, Storm and HBase.

Speed Up Your APEX Apps with JSON and Handlebars

By Marko Gorički. The document discusses various methods for generating JSON from SQL queries in Oracle APEX applications. It compares manually concatenating strings, using the apex_util.json_from_* procedures, the PL/JSON library, the APEX_JSON API package, and Oracle REST Data Services (ORDS). The APEX_JSON package is recommended for most cases as it supports generation and parsing, can be used standalone, has a light footprint, and makes conversion from XML easy. Using a templating engine like Handlebars.js with JSON is also presented as a way to dynamically render HTML from database queries.

External Master Data in Alfresco: Integrating and Keeping Metadata Consistent...

By ITD Systems. Real-life content is always tightly integrated with master data. Reference data used for the content is usually stored in a third-party enterprise system (or even several different systems) and should be consumed by Alfresco.

Flink Community Update 2015 June

By Márton Balassi. This document summarizes Apache Flink community updates from June 2015. It discusses the 0.9.0 release of Apache Flink, an open source platform for scalable batch and stream data processing. Key points include the addition of two new committers, blog posts and workshops promoting Flink, and various conference and meetup talks about Flink occurring that month. It encourages registration for the Flink Forward conference in October 2015.

Software Developer and Architecture @ LinkedIn (QCon SF 2014)

By Sid Anand. The document provides details about Sid Anand's career and then discusses LinkedIn's software development process and architecture when he was there. It notes that when Sid started at LinkedIn in 2011, compiling the code took a long time due to the large codebase and many dependencies. It then describes how LinkedIn scaled to support hundreds of millions of members and thousands of employees by splitting the monolithic codebase into individual Git repos, using intermediate JARs to reduce dependencies, and connecting development machines to test environments instead of deploying everything locally. It also discusses LinkedIn's use of Kafka, search federation, and not making web service calls between data centers to scale across multiple data centers.

Get involved with the Apache Software Foundation

By Shalin Shekhar Mangar. Presented at the Indian Institute of Information Technology (IIIT) Allahabad on 21 Oct 2009 to students, about the Apache Software Foundation, Lucene, Solr and Hadoop, and about the benefits of contributing to open source projects. The target audience was sophomore, junior and senior B.Tech students.

Avoid boring work_v2

By Marcin Przepiórowski. How to avoid boring work

Automation for DBAs.

How to use OEM 12c and Ansible in day-to-day DBA work.

Galene - LinkedIn's Search Architecture: Presented by Diego Buthay & Sriram S...

By Lucidworks. LinkedIn's search architecture, called Galene, uses Lucene to index hundreds of millions of profiles. Galene improves search quality and scalability through techniques like offline indexing for complex features, live updates at fine granularity, static ranking to prioritize more popular profiles, and early termination to quickly return top results. The architecture includes base, live, and snapshot indexes to support these techniques.

PHP, the GraphQL ecosystem and GraphQLite

By JEAN-GUILLAUME DUJARDIN. Creating a GraphQL API is increasingly common for PHP developers. The task can seem complex, but there are a lot of tools to help.

In this talk, given at the AFUP Paris PHP Meetup, I present GraphQL and why it is important. Then I look at the existing libraries in PHP. Finally, I dive into the details of GraphQLite, a library that creates a GraphQL schema by analyzing your PHP code.

Java Application Servers Are Dead!

By Eberhard Wolff. This document discusses the declining relevance of traditional Java application servers. It argues that application servers are no longer needed as containers for multiple applications due to limitations in isolation. Modern applications are better suited as individual JAR files with their own custom infrastructure and standard monitoring and deployment tools rather than relying on application server-specific approaches. Frameworks like Spring Boot and Dropwizard provide an alternative to application servers by allowing applications to be self-contained deployable units without dependency on external application server infrastructure.

State-of-the-Art Drupal Search with Apache Solr

By guest432cd6. These are the slides from the presentation I gave on Feb. 2, 2010, in Brussels, at the FOSDEM conference.

Oracle APEX Nitro

By Marko Gorički. This document discusses using APEX Nitro to improve the APEX development process. APEX Nitro allows developers to write CSS and JavaScript locally and have changes automatically synced to their APEX application. It provides features like error handling, minification, concatenation, and preprocessing to boost performance and maintainability. The document reviews how to install, configure, and use APEX Nitro to enhance the front-end development experience.

SolrCloud and Shard Splitting

By Shalin Shekhar Mangar. This document summarizes a presentation about SolrCloud shard splitting. It introduces the presenter and his background with Apache Lucene and Solr. The presentation covers an overview of SolrCloud, how documents are routed to shards in SolrCloud, the SolrCloud collections API, and the new functionality for splitting shards in Solr 4.3 to allow dynamic resharding of collections without downtime. It provides details on the shard splitting mechanism and tips for using the new functionality.

Real Time search using Spark and Elasticsearch

By Sigmoid. This document discusses using Spark Streaming and Elasticsearch to enable real-time search and analysis of streaming data. Spark Streaming processes and enriches streaming data and stores it in Elasticsearch for low-latency search and alerts. The elasticsearch-hadoop connector allows Spark jobs to read from and write to Elasticsearch, integrating the batch processing of Spark with the real-time search of Elasticsearch.

Software Architecture for DevOps and Continuous Delivery

By Eberhard Wolff. Talk from Continuous Lifecycle 2013 in Germany about the influence of DevOps and Continuous Delivery on software architecture.

Spark Summit EU talk by Dean Wampler

By Spark Summit. Dean Wampler gave a talk at the Spark Summit Europe 2016 titled "Just Enough Scala for Spark". He demonstrated coding a demo in Scala for Spark, based on his free online tutorial. The tutorial and live coding showed attendees the essential Scala concepts for working with Spark. Wampler also provided resources for additional Scala and Spark help.

SparkR + Zeppelin

By felixcss. This document summarizes a presentation on using SparkR and Zeppelin. SparkR allows using R language APIs for Spark, exposing Spark functionality through an R-friendly DataFrame API. SparkR DataFrames can be used in Zeppelin, providing interfaces between native R and Spark operations. The presentation demonstrates running SparkR code and DataFrame transformations in Zeppelin notebooks.

Build a modern data platform.pptx

By Ike Ellis. The document discusses building a data platform for analytics in Azure. It outlines common issues with traditional data warehouse architectures and recommends building a data lake approach using Azure Synapse Analytics. The key elements include ingesting raw data from various sources into landing zones, creating a raw layer using file formats like Parquet, building star schemas in dedicated SQL pools or Spark tables, implementing alerting using Log Analytics, and loading data into Power BI. Building the platform with Python pipelines, notebooks, and GitHub integration is emphasized for flexibility, testability and collaboration.

Loading 350M documents into a large Solr cluster: Presented by Dion Olsthoorn...

By Lucidworks. This document summarizes a presentation about loading 350 million documents into a Solr cluster in 8 hours or less. It describes using an external cloud platform for preprocessing content before indexing into Solr. It also details using a queueing system to post content to Solr in batches to avoid overloading the cluster. The presentation recommends indexing large content sets on an isolated Solr environment before restoring indexes to the production cluster.

Running Apache Zeppelin production

By Vinay Shukla. A discussion of concerns related to deploying Apache Zeppelin in production, including deployment choices, security, performance and integration.

HBaseCon2015-final

By Maryann Xue. Apache Phoenix is a SQL query layer for Apache HBase that allows users to interact with HBase through JDBC. It transforms SQL queries into native HBase API calls and executes them in parallel across the HBase cluster. The presentation covered Phoenix's current features like join support, new features like functional indexes and user-defined functions, and the future integration with Apache Calcite to bring more SQL capabilities and a cost-based query optimizer to Phoenix. Overall, Phoenix provides a relational view of data stored in HBase to enable complex SQL queries to run efficiently on large datasets.

Parallel Computing with SolrCloud: Presented by Joel Bernstein, Alfresco

By Lucidworks. This document summarizes Joel Bernstein's presentation on parallel SQL in Solr 6.0. The key points are:

1. SQL provides an optimizer to choose the best query plan for complex queries in Solr, avoiding the need for users to determine optimal faceting APIs or parameters.

2. SQL queries in Solr 6.0 can perform distributed joins, aggregations, sorting, and filtering using Solr search predicates. Aggregations can be performed using either map-reduce or facets.

3. Under the hood, SQL queries are compiled to TupleStreams which are serialized to Streaming Expressions and executed in parallel across worker collections using Solr's streaming API framework.

Building a Tag Cloud with an N-gram Tree

By Minh Lê. A description of the n-gram tree used to generate a tag cloud for a collection of documents.

Many of the animations are not supported by SlideShare :-(

Stateless authentication for microservices applications - JavaLand 2015

By Alvaro Sanchez-Mariscal. This talk is about how to secure your front-end and back-end applications using a RESTful approach. Unlike traditional, monolithic server-side applications (where the HTTP session is used), when your front-end application runs in a browser rather than securely on the server, there are a few things you need to consider.

In this session, Alvaro explores standards like OAuth and JWT to achieve stateless, token-based authentication, using frameworks like AngularJS on the front end and Spring Security on the back end.

Viewers also liked

JavaLand - Integration Testing How-to

By Nicolas Fränkel. This document provides an overview of integration testing. It discusses what integration testing is, the challenges of integration testing, and some solutions and best practices for integration testing. Specifically, it covers:

- The differences between unit testing and integration testing

- Examples of testing components together (like testing each part of a car separately vs doing a test drive)

- Techniques for reducing brittle and slow integration tests, like separating tests from infrastructure dependencies

- Tools for faking dependencies like databases, web services, and containers in integration tests

- Best practices for integration testing with build tools like Maven and for continuous integration

Javaland keynote final

By Marcus Lagergren. This document outlines the keynote presentation "20 years in Java and JVM land from an engineer's perspective" given by Marcus Lagergren. The presentation looks back over Lagergren's 20 years working with Java and runtimes, from his early experiences in university in the 1990s through projects at Ericsson and involvement with early Java releases. It provides a first-hand account of the evolution of Java and runtime technologies from the perspective of an engineer who has worked with Java throughout its history.

Cassandra Troubleshooting (for 2.0 and earlier)

By J.B. Langston. I’ll give a general lay of the land for troubleshooting Cassandra. Then I’ll take you on a deep dive through nodetool and system.log and give you a guided tour of the useful information they provide for troubleshooting. I’ll devote special attention to monitoring the various processes that Cassandra uses to do its work and how to effectively search for information about specific error messages online.

This is the old version of this presentation for Cassandra 2.0 and earlier. Check out the updated slide deck for Cassandra 2.1.

Legacy lambda code

By Peter Lawrey. After migrating a three-year-old C# project to Java, we ended up with a significant portion of legacy code using lambdas in Java. What were some of the good use cases, which code could have been written better, and what problems did we have migrating from C#? At the end, we look at the performance implications of using lambdas.

Cassandra 3.0 advanced preview

By Patrick McFadin. This summer, coming to a server near you: Cassandra 3.0! Contributors and committers have been working hard on what is the most ambitious release to date. It’s almost too much to talk about, but we will dig into some of the most important, groundbreaking features that you’ll want to use: indexing changes that will make your applications faster and Spark jobs more efficient; storage engine changes to get even more density and efficiency from your nodes; developer-focused features like full JSON support and user-defined functions. And finally, one of the most requested features, Windows support, has made its arrival. There is more, but you’ll just have to see for yourself. Get your front-row seat and don’t miss it!

The Art Of Effective Persuasion (The Star Wars Way!)

By Fred Then. Spend 5 minutes.

Learn 10 rules.

Experience 250% improvement as an effective presenter & persuader

ElasticSearch: Architecture and Development

By Mohamed hedi Abidi. A presentation of Elasticsearch given at an ebizbar at ebiznext.

Use Cases for Elasticsearch, JavaLand 2015

By Florian Hopf. The document discusses Elasticsearch and provides examples of how to use it for document storage, full-text search, analytics, and log file analysis. It demonstrates how to install Elasticsearch, index and retrieve documents, perform queries using the query DSL, add geospatial data and filtering, aggregate data, and visualize analytics using Kibana. Real-world use cases are also presented, such as those of GitHub, Stack Overflow, and log analysis platforms.

Similar to NoSQL Riak MongoDB Elasticsearch - All The Same?

Micro Services - Small is Beautiful

By Eberhard Wolff. The document discusses microservices and their advantages over monolithic architectures. Microservices break applications into small, independent components that can be developed, deployed and scaled independently. This allows for faster development and easier continuous delivery. The document recommends using Spring Boot to implement microservices and Docker to deploy and manage the microservices as independent components. It provides an example of implementing an ELK stack as Dockerized microservices.

Java Architectures - a New Hope

By Eberhard Wolff. The document discusses Java application architectures and microservices. It describes how microservices can help break monolithic Java applications into independent, scalable services. Each service is built around a specific business capability and can be developed, deployed and scaled independently. The document recommends using Spring Boot to easily create microservices and Docker to deploy and manage the microservices as lightweight components. It provides an example of creating an ELK logging stack from microservices using Docker.

Spring Boot

Spring Bootgedoplan This document discusses Spring Boot, a framework for building Java applications. It makes building Java web applications easier by providing sensible defaults and automatic configuration. Spring Boot allows building applications that are easy to test, debug and deploy. It supports adding additional libraries and frameworks like Spring Data JPA with minimal configuration. The document demonstrates how to create a basic application with Spring Boot and Spring Data JPA with auto-configured infrastructure and shows how Spring Boot helps with development, operations and deployment of Java applications.

Spring Boot

Spring BootEberhard Wolff Spring Boot makes it easier to create Java web applications. It provides sensible defaults and infrastructure so developers don't need to spend time wiring applications together. Spring Boot applications are also easier to develop, test, and deploy. The document demonstrates how to create a basic web application with Spring Boot, add Spring Data JPA for database access, and use features for development and operations.

Micro Service – The New Architecture Paradigm

Micro Service – The New Architecture ParadigmEberhard Wolff The document discusses microservices as a new software architecture paradigm. It defines microservices as small, independent processes that work together to form an application. The key benefits of microservices are that they allow for easier, faster deployment of features since each service is its own deployment unit and teams can deploy independently without integration. However, the document also notes challenges of microservices such as increased communication overhead, difficulty of code reuse across services, and managing dependencies between many different services. It concludes that microservices are best for projects where time to market is important and continuous delivery is a priority.

Microservice - All is Small, All is Well?

Microservice - All is Small, All is Well?Eberhard Wolff This document discusses microservices and their advantages and disadvantages. Microservices are small, independent units that work together to form an application. They allow for independent deployment and use of different technologies. While this improves scalability and agility, it also introduces challenges around distributed systems, refactoring code between services, and operating at scale. The key is to start with a functional architecture and limit communication between services.

Microservice With Spring Boot and Spring Cloud

Microservice With Spring Boot and Spring CloudEberhard Wolff Spring Boot and Spring Cloud are an ideal foundation for creating Microservices based on Java. This presentation explains basic concepts of these libraries.

Legacy Sins

Legacy SinsEberhard Wolff Eberhard Wolff discusses several factors that contribute to creating changeable software beyond just architecture. He emphasizes that automated testing, following a test pyramid approach, continuous delivery practices like automated deployment, and understanding the customer's priorities are all important. While architecture is a factor, there are no universal rules and the architect's job is to understand each project's unique needs.

Amazon Elastic Beanstalk

Amazon Elastic BeanstalkEberhard Wolff Amazon Elastic Beanstalk is a PaaS offering from Amazon that allows users to deploy and manage applications in the cloud. It automatically handles tasks like capacity provisioning, load balancing, scaling and application health monitoring. The document discusses the history and services behind Elastic Beanstalk like EC2 and S3. It also provides an overview of how Elastic Beanstalk works, the programming models supported, tools available and a demo of deploying a sample news application using Elastic Beanstalk.

ELK Stack

ELK StackEberhard Wolff Short presentation about the ELK stack (Elasticsearch, Logstash, Kibana) running on top of Docker / Vagrant.

Micro Services - Neither Micro Nor Service

Micro Services - Neither Micro Nor ServiceEberhard Wolff Micro Services are a new approach to software architecture. This presentation discusses how small they should be - and wether they are really service - in the SOA sense.

Java Application Servers Are Dead! - Short Version

Java Application Servers Are Dead! - Short VersionEberhard Wolff The document discusses issues with traditional Java application servers and argues that they are outdated for modern applications. It notes that application servers do not fully isolate applications, that dependencies on specific application server versions and libraries hinder portability, and that their deployment and monitoring tools do not integrate well with standard operating system tools. It suggests that modern applications would be better served by packaging all dependencies into executable JAR files and providing their own lightweight infrastructure instead of relying on application servers.

Continuous Delivery & DevOps in the Enterprise

Continuous Delivery & DevOps in the EnterpriseEberhard Wolff Continuous Delivery and DevOps have a different value proposition in the Enterprise and therefore must be implemented differently. This presentation ta

Continuous Delivery and Micro Services - A Symbiosis

Continuous Delivery and Micro Services - A SymbiosisEberhard Wolff Continuous Delivery profits from Micro Services - and the other way round. This presentation shows how the two technologies work together - and how Micro Services can be used to simplify the transition to Continuous Delivery.

New Theme Directory

New Theme DirectoryKonstantin Obenland Millions of websites depend on the WordPress.org infrastructure every day. Parts of it is almost a decade old, ancient in software terms, and most of it is not even based on WordPress itself. At the time of WordCamp San Antonio, we will hopefully have finished a project that drastically modernizes the theme repository and moves to over to a WordPress installation, complete with a redesigned front-end and a more robust backend. This session will go over the changes involved, and will look into how WordPress.org works and what the underlying structure of it looks like.

Backing Data Silo Atack: Alfresco sharding, SOLR for non-flat objects

Backing Data Silo Atack: Alfresco sharding, SOLR for non-flat objectsITD Systems The document discusses a scheme for sharding Alfresco repositories to address scalability and storage limitations. Key points of the scheme include:

- Repositories are sharded across multiple independent servers, each storing a part of the content.

- A level 7 switch balances requests across repositories and provides a single API entry point.

- An external SOLR cloud indexes all repositories in a single index to allow federated queries.

- The scheme is benchmarked to scale to 15,000 concurrent users on commodity hardware. Additional considerations for production include auto-discovery, configuration management, and safety checks.

Oracle REST Data Services: POUG Edition

Oracle REST Data Services: POUG EditionJeff Smith An overview of ORDS for building RESTful Web Services and your Oracle Database with BEER examples!

Thanks and credit to the POUG organization for making this possible.

Apache Accumulo and the Data Lake

Apache Accumulo and the Data LakeAaron Cordova Aaron Cordova outlines how Accumulo helps provide the essential features of a "Data Lake": a system in which all types of data from all sources can be imported, secured, analyzed, and delivered to decision makers.

Woo: Writing a fast web server @ ELS2015

Woo: Writing a fast web server @ ELS2015fukamachi Eitaro Fukamachi presented on writing the fast web server Woo in Common Lisp. He discussed three tactics for achieving speed: using a multithreaded event-driven architecture instead of prefork, developing a fast HTTP parser instead of regular expressions, and using the libev event library. Benchmarks showed Woo was 1.6 times faster than Node.js. The goal was to create the fastest HTTP server in Common Lisp.

Practical Machine Learning for Smarter Search with Spark+Solr

Practical Machine Learning for Smarter Search with Spark+SolrJake Mannix This document discusses using Apache Spark and Apache Solr together for practical machine learning and data engineering tasks. It provides an overview of Spark and Solr, why they are useful together, and then gives an example of using them together to explore a dataset of Apache mailing list archives through data visualization, clustering, classification, and recommender systems.

Ad

More from Eberhard Wolff (20)

Architectures and Alternatives

Architectures and AlternativesEberhard Wolff Limiting software architecture to the traditional ideas is not enough for today's challenges. This presentation shows additional tools and how problems like maintainability, reliability and usability can be solved.

Beyond Microservices

Beyond MicroservicesEberhard Wolff This presentation shows the properties, advantages and challenges of microservices. It then discusses alternative architectures beyond microservices.

The Frontiers of Continuous Delivery

The Frontiers of Continuous DeliveryEberhard Wolff Continuous Delivery solves many current challenges - but still adoption is limited. This talks shows reasons for this and how to overcome these problems.

Four Times Microservices - REST, Kubernetes, UI Integration, Async

Four Times Microservices - REST, Kubernetes, UI Integration, AsyncEberhard Wolff How you can build microservices:

- REST with the Netflix stack (Eureka for Service Discovery, Ribbon for Load Balancing, Hystrix for Resilience, Zuul for Routing)

- REST with Consul for Services Discovery

- REST with Kubernetes

- UI integration with ESI (Edge Side Includes)

- UI integration on the client with JavaScript

- Async with Apache Kafka

- Async with HTTP + Atom

Microservices - not just with Java

Microservices - not just with JavaEberhard Wolff This presentation show several options how to implement microservices: the Netflix stack, Consul, and Kubernetes. Also integration options like REST and UI integration are covered.

Deployment - Done Right!

Deployment - Done Right!Eberhard Wolff There are many different deployment options - package managers, tools like Chef or Puppet, PaaS and orchestration tools. This presentation give an overview of these tools and approaches like idempotent installation or immutable server.

Held at Continuous Lifecycle 2016

Data Architecture not Just for Microservices

Data Architecture not Just for MicroservicesEberhard Wolff 1) Microservices aim to decouple systems by separating data models into bounded contexts, with each microservice owning its own data schema.

2) However, some data like basic order information needs to be shared across microservices. Domain-driven design patterns like shared kernel and event-driven replication can be used to share this data while maintaining independence.

3) With shared kernel, a subset of data is defined that multiple microservices can access, but this impacts resilience. With events, data changes in one service generate events to update data in other services asynchronously.

4) The CAP theorem presents challenges for data consistency across microservices. Network partitions may lead to availability conflicts that require eventual consistency over strong consistency

How to Split Your System into Microservices

How to Split Your System into MicroservicesEberhard Wolff Splitting a system into microservices is a challenging task. This talk shows how ideas like Bounded Context, migration scenarios and technical constraints can be used to build a microservice architecture. Held at WJAX 2016.

Microservices and Self-contained System to Scale Agile

Microservices and Self-contained System to Scale AgileEberhard Wolff Architectures like Microservices and Self-contained Systems provide a way to support agile processes and scale them. Held at JUG Saxony Day 2016 in Dresden.

How Small Can Java Microservices Be?

How Small Can Java Microservices Be?Eberhard Wolff The document discusses the concept of nanoservices and how they compare to microservices. Nanoservices are defined as being smaller than microservices, with independent deployment units that use more lightweight technologies. Examples discussed include Docker containers, AWS Lambda functions, OSGi bundles, and Java EE applications. While nanoservices aim to allow for smaller services and local communication, technologies like OSGi and Java EE have challenges with independent deployment and scaling. Serverless technologies like AWS Lambda provide stronger support for nanoservices through features like independent scaling, isolation, and technology freedom.

Data Architecturen Not Just for Microservices

Data Architecturen Not Just for MicroservicesEberhard Wolff Microservices change the way data is handled and stored. This presentation shows how Bounded Context, Events, Event Sourcing and CQRS provide new approaches to handle data.

Microservices: Redundancy=Maintainability

Microservices: Redundancy=MaintainabilityEberhard Wolff We assume software should contain no redundancies and that a clean architecture is the way to a maintainable system. Microservices challenge these assumptions. Keynote from Entwicklertage 2016 in Karlsruhe.

Self-contained Systems: A Different Approach to Microservices

Self-contained Systems: A Different Approach to MicroservicesEberhard Wolff This presentation discusses approaches to Microservices - including self-contained systems and their benefits and trade-offs.

Microservices Technology Stack

Microservices Technology StackEberhard Wolff Overview of all technologies that you can use to support Microservice applications. Covers integration, operations and testing.

Software Architecture for Innovation

Software Architecture for InnovationEberhard Wolff How can software architecture help to enable innovation? Also discusses Continuous Delivery and Microservices.

Five (easy?) Steps Towards Continuous Delivery

Five (easy?) Steps Towards Continuous DeliveryEberhard Wolff This document outlines five steps towards achieving continuous delivery:

1. Realize deployment automation is a prerequisite, not the goal of continuous delivery.

2. Understand goals like reliability and time-to-market, then take pragmatic steps like value stream mapping.

3. Eliminate manual sign-offs and create trust in automated tests using techniques like behavior-driven development.

4. Address the gaps between development and operations teams through collaboration on deployments.

5. Consider architectural adjustments like migrating to microservices for independent, faster delivery pipelines.

Nanoservices and Microservices with Java

Nanoservices and Microservices with JavaEberhard Wolff Nanoservices are smaller than Microservices. This presentation shows how technologies like Amazon Lambda, OSGi and Java EE can be used to enable such small services.

Microservices: Architecture to Support Agile

Microservices: Architecture to Support AgileEberhard Wolff 1. Microservices architecture divides applications into small, independent components called microservices that can be developed, deployed and scaled independently.

2. This architecture aligns well with agile principles by allowing individual teams to focus on and deploy their microservice without coordination, enabling faster feedback and continuous delivery of working software.

3. By structuring the organization around business domains rather than technical components, microservices help drive organizational communication patterns that mirror the architecture, avoiding misalignment over time.

Microservices: Architecture to scale Agile

Microservices: Architecture to scale AgileEberhard Wolff Microservices allow for scaling agile processes. This presentation shows what Microservices are, what agility is and introduces Self-contained Systems (SCS). Finally, it shows how SCS can help to scale agile processes.

Microservices, DevOps, Continuous Delivery – More Than Three Buzzwords

Microservices, DevOps, Continuous Delivery – More Than Three BuzzwordsEberhard Wolff This document discusses how microservices, continuous delivery, and DevOps relate and solve problems together. Microservices break applications into independently deployable components, continuous delivery incorporates automated testing and deployment pipelines, and DevOps emphasizes collaboration between development and operations teams. Together, these approaches enable faster and more reliable software releases by allowing components to be deployed independently while maintaining overall system integrity through practices like monitoring and common infrastructure standards. They also allow alternative approaches to challenges like maintainability and scalability by facilitating fast feedback loops and the ability to quickly identify and address bottlenecks.

Recently uploaded (20)

AI and Data Privacy in 2025: Global Trends

AI and Data Privacy in 2025: Global TrendsInData Labs In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in the today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Drupalcamp Finland – Measuring Front-end Energy Consumption

Drupalcamp Finland – Measuring Front-end Energy ConsumptionExove How to measure web front-end energy consumption using Firefox Profiler. Presented in DrupalCamp Finland on April 25th, 2025.

Big Data Analytics Quick Research Guide by Arthur Morgan

Big Data Analytics Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

Dev Dives: Automate and orchestrate your processes with UiPath Maestro

Dev Dives: Automate and orchestrate your processes with UiPath MaestroUiPathCommunity This session is designed to equip developers with the skills needed to build mission-critical, end-to-end processes that seamlessly orchestrate agents, people, and robots.

📕 Here's what you can expect:

- Modeling: Build end-to-end processes using BPMN.

- Implementing: Integrate agentic tasks, RPA, APIs, and advanced decisioning into processes.

- Operating: Control process instances with rewind, replay, pause, and stop functions.

- Monitoring: Use dashboards and embedded analytics for real-time insights into process instances.

This webinar is a must-attend for developers looking to enhance their agentic automation skills and orchestrate robust, mission-critical processes.

👨🏫 Speaker:

Andrei Vintila, Principal Product Manager @UiPath

This session streamed live on April 29, 2025, 16:00 CET.

Check out all our upcoming Dev Dives sessions at https://community.uipath.com/dev-dives-automation-developer-2025/.

Cybersecurity Identity and Access Solutions using Azure AD

Cybersecurity Identity and Access Solutions using Azure ADVICTOR MAESTRE RAMIREZ Cybersecurity Identity and Access Solutions using Azure AD

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep Dive

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep DiveScyllaDB Want to learn practical tips for designing systems that can scale efficiently without compromising speed?

Join us for a workshop where we’ll address these challenges head-on and explore how to architect low-latency systems using Rust. During this free interactive workshop oriented for developers, engineers, and architects, we’ll cover how Rust’s unique language features and the Tokio async runtime enable high-performance application development.

As you explore key principles of designing low-latency systems with Rust, you will learn how to:

- Create and compile a real-world app with Rust

- Connect the application to ScyllaDB (NoSQL data store)

- Negotiate tradeoffs related to data modeling and querying

- Manage and monitor the database for consistently low latencies

Role of Data Annotation Services in AI-Powered Manufacturing

Role of Data Annotation Services in AI-Powered ManufacturingAndrew Leo From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

Complete Guide to Advanced Logistics Management Software in Riyadh.pdf

Complete Guide to Advanced Logistics Management Software in Riyadh.pdfSoftware Company Explore the benefits and features of advanced logistics management software for businesses in Riyadh. This guide delves into the latest technologies, from real-time tracking and route optimization to warehouse management and inventory control, helping businesses streamline their logistics operations and reduce costs. Learn how implementing the right software solution can enhance efficiency, improve customer satisfaction, and provide a competitive edge in the growing logistics sector of Riyadh.

Heap, Types of Heap, Insertion and Deletion

Heap, Types of Heap, Insertion and DeletionJaydeep Kale This pdf will explain what is heap, its type, insertion and deletion in heap and Heap sort

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat The MCP (Model Context Protocol) is a framework designed to manage context and interaction within complex systems. This SlideShare presentation will provide a detailed overview of the MCP Model, its applications, and how it plays a crucial role in improving communication and decision-making in distributed systems. We will explore the key concepts behind the protocol, including the importance of context, data management, and how this model enhances system adaptability and responsiveness. Ideal for software developers, system architects, and IT professionals, this presentation will offer valuable insights into how the MCP Model can streamline workflows, improve efficiency, and create more intuitive systems for a wide range of use cases.

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat

NoSQL Riak MongoDB Elasticsearch - All The Same?

- 1. MongoDB, Elasticsearch, Riak – all the same? • Eberhard Wolff • Freelancer • Head Technology Advisory Board, adesso AG • http://ewolff.com

- 2. Eberhard Wolff - @ewolff • Reading sample: http://bit.ly/CD-Buch

- 3. Modeling: Relational Databases vs. JSON

- 4. Financial System • Different financial products • Mapping objects / database • Inheritance

- 5. E/R Model • Asset, Stock, Zero Bond, Option, Country, Currency • > 20 database tables • Up to 25 attributes

- 6. JOINs ☹

- 7. Get all assets with interest rate x

- 8.

- 9. JSON

- 10. Asset: Type, ID • Zero Bond: Interest Rate • Fixed Rate Bond: Interest Rate • Stock • Option • … • Preferred • Underlying asset • Country • Price • Country • Currency

- 11. { "ID" : "42", "type" : "Fixed Rate Bond", "Country" : "DE", "Currency" : "EUR", "ISIN" : "DE0001141562", "Interest Rate" : "2.5" }
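The slides contrast an E/R model with more than 20 tables, where "get all assets with interest rate x" needs JOINs, with one self-contained JSON document per asset. The following is a minimal sketch of that query over in-memory documents, assuming Python's standard `json` module. Only the first document comes from the slide; the stock document and the function name `assets_with_interest_rate` are hypothetical illustrations, not part of the talk or of any store's API.

```python
import json

# One self-contained JSON document per asset. The first one is the slide's
# example; the stock is a made-up document showing that attributes differ
# per asset type without needing a separate table.
raw_documents = [
    '{ "ID" : "42", "type" : "Fixed Rate Bond", "Country" : "DE", '
    '"Currency" : "EUR", "ISIN" : "DE0001141562", "Interest Rate" : "2.5" }',
    '{ "ID" : "43", "type" : "Stock", "Country" : "US", '
    '"Currency" : "USD", "Preferred" : true }',
]

assets = [json.loads(doc) for doc in raw_documents]


def assets_with_interest_rate(documents, rate):
    """Return all assets whose "Interest Rate" equals rate.

    No JOIN is needed: every document already carries all attributes of its
    asset, and documents without the attribute (e.g. stocks) are skipped.
    """
    return [d for d in documents if d.get("Interest Rate") == rate]


print([a["ID"] for a in assets_with_interest_rate(assets, "2.5")])  # ['42']
```

The slide stores the rate as the string `"2.5"`, so the sketch compares strings; a real document store would typically index such a field instead of scanning every document.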

- 12. All stores in this presentation support JSON

- 13. Scaling Relational Databases

- 14. Larger Server • Replace the DB server with a larger one • Expensive server • Limited

- 15. Common Storage • Several DB servers share one storage system, e.g. Oracle RAC • Expensive storage • Limited

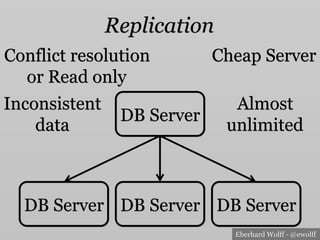

- 16. Replication • Data is replicated between several DB servers • Cheap servers • Almost unlimited • Inconsistent data • Conflict resolution or read only

- 17. Replication • e.g. MySQL Master-Slave • Oracle Advanced Replication
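Master-slave replication scales with cheap servers, but a replica can return outdated data until changes arrive, and it has to be read only to avoid conflicts. A minimal in-process sketch under the assumption of asynchronous replication; the classes `Master` and `ReadOnlyReplica` and the explicit `sync()` step are hypothetical and do not model how MySQL or Oracle actually ship changes.

```python
from collections import deque


class Master:
    """Accepts all writes and ships them asynchronously to its replicas."""

    def __init__(self):
        self.data = {}
        self.replicas = []

    def write(self, key, value):
        self.data[key] = value
        for replica in self.replicas:
            replica.pending.append((key, value))  # queued, applied later


class ReadOnlyReplica:
    """Serves reads only and applies the master's changes when sync() runs."""

    def __init__(self, master):
        self.data = {}
        self.pending = deque()
        master.replicas.append(self)

    def read(self, key):
        return self.data.get(key)

    def write(self, key, value):
        # Rejecting writes avoids conflicts between master and replica.
        raise PermissionError("replica is read only; send writes to the master")

    def sync(self):
        while self.pending:
            key, value = self.pending.popleft()
            self.data[key] = value


master = Master()
replica = ReadOnlyReplica(master)
master.write("42", {"Interest Rate": "2.5"})
print(replica.read("42"))  # None: the change has not been replicated yet
replica.sync()
print(replica.read("42"))  # {'Interest Rate': '2.5'}
```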

- 18. Eberhard Wolff - @ewolff Network Failure • Either Answer & provide outdated data • or Don’t answer i.e. always provide up to date data

- 19. Eberhard Wolff - @ewolff CAP • Consistency • Availability • Network Partition Tolerance • If network fails provide a potentially incorrect answer or no at all?

- 20. BASE • Basically Available • Soft State • Eventually consistent (= consistent in the end) • i.e. may give a potentially incorrect answer

- 21. BASE and Relational DBs • Very limited • Standby • Read-only replica • No truly distributed DB

- 22. Relational & BASE • Most relational operations cover multiple tables • These need locks across multiple servers • Not realistically possible

- 23. NoSQL & BASE • A typical operation covers one data structure • …that contains more information • No complex locking • More sophisticated BASE

- 24. Naïve View on NoSQL

- 25. Key / Value Stores • Map key to value • For simple data structures • Retrieval only by key • Easy scalability • Only for simple applications • Example: key 42 → value "Some data"
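Why key/value stores scale easily can be shown in a few lines. This is a minimal Python sketch under the assumption that each "node" is just an in-memory dict: the hash of the key alone decides which node stores the value, so no operation ever has to touch more than one node. `PartitionedKVStore` is a hypothetical name, not a real product API.

```python
import hashlib


class PartitionedKVStore:
    """Minimal sketch of a key/value store spread over several nodes.

    Each "node" is a plain dict; the key's hash decides which node stores
    it. Lookups are only possible by key, as on the slide.
    """

    def __init__(self, num_nodes):
        self.nodes = [dict() for _ in range(num_nodes)]

    def _node_for(self, key):
        # Stable hash (Python's built-in hash() of str is salted per process).
        digest = hashlib.md5(str(key).encode("utf-8")).hexdigest()
        return self.nodes[int(digest, 16) % len(self.nodes)]

    def put(self, key, value):
        self._node_for(key)[key] = value

    def get(self, key):
        return self._node_for(key).get(key)


store = PartitionedKVStore(num_nodes=4)
store.put(42, "Some data")
print(store.get(42))  # Some data
```

Choosing the node with `hash % number_of_nodes` keeps the sketch short, but adding a node would remap most keys; Dynamo-style stores such as Riak use consistent hashing to avoid that.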

- 26. Document Oriented • Documents, e.g. JSON • Complex structures & queries • Still great scalability • For more complex applications • Example: { "author": { "name": "Eberhard Wolff", "email": "[email protected]" }, "title": "Continuous Delivery" }
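"Complex structures & queries" means a query can reach into nested fields, which a pure key/value store cannot do. The following Python sketch assumes documents are nested dicts and uses a dotted path such as `author.name`, similar in spirit to the dot notation document stores like MongoDB use for embedded documents. The helpers `get_path` and `find` and the second book are hypothetical.

```python
def get_path(doc, path):
    """Follow a dotted path such as "author.name" into nested objects.

    Returns None if any part of the path is missing.
    """
    current = doc
    for part in path.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current


def find(docs, path, value):
    """Return all documents whose (possibly nested) field equals value."""
    return [doc for doc in docs if get_path(doc, path) == value]


books = [
    {"author": {"name": "Eberhard Wolff"}, "title": "Continuous Delivery"},
    {"author": {"name": "Jane Doe"}, "title": "Another Book"},  # invented
]
print([b["title"] for b in find(books, "author.name", "Eberhard Wolff")])
# ['Continuous Delivery']
```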

- 27. Graph, Column Oriented, …

- 28. Educated View on NoSQL

- 29. Key / value • Document-based • Search engine • All the same?

- 30. MongoDB • elasticsearch • Riak

- 32. What is Riak? • Key / value • Truly distributed database

- 33. Riak: Technologies • Erlang • Open Source (Apache 2.0) • Company: Basho

- 34. More Indices • Allows secondary indices • Riak Search 2.0: Solr integration • Solr: Lucene-based search engine • API compatible with Solr • Key / value or document-based?

- 35. More Features • Map/reduce • Scans all datasets • Can store large binary objects
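Riak and MongoDB both offer map/reduce. The generic Python sketch below is not either product's API; it only shows the idea and why it is expensive: every document is passed through the map function (a full scan), the emitted pairs are grouped by key, and each group is reduced to one value. The sample documents are invented.

```python
from collections import defaultdict


def map_reduce(docs, map_fn, reduce_fn):
    """Tiny in-memory map/reduce.

    Map every document to (key, value) pairs, group them by key, then
    reduce each group to a single value. Every document is scanned.
    """
    groups = defaultdict(list)
    for doc in docs:
        for key, value in map_fn(doc):
            groups[key].append(value)
    return {key: reduce_fn(key, values) for key, values in groups.items()}


assets = [  # invented sample documents
    {"type": "Fixed Rate Bond", "Currency": "EUR"},
    {"type": "Stock", "Currency": "USD"},
    {"type": "Zero Bond", "Currency": "EUR"},
]
counts = map_reduce(
    assets,
    lambda doc: [(doc["Currency"], 1)],    # map: emit (currency, 1)
    lambda key, values: sum(values),       # reduce: count per currency
)
print(counts)  # {'EUR': 2, 'USD': 1}
```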

- 36. Scaling Riak • Based on the Dynamo paper • Well understood • …and battle-proven at Amazon

- 37. Scaling Riak • Server A: Shard1, Shard3, Shard4 • Server B: Shard2, Shard1, Shard4 • Server D: Shard4, Shard2, Shard3 • Server C: Shard3, Shard2, Shard1 • Each shard is stored on three servers

- 39. Scaling Riak • Server A: Shard1, Shard3, Shard4 • Server B: Shard2, Shard1, Shard4 • Server D: Shard4, Shard2, Shard3 • Server C: Shard3, Shard2, Shard1 • A new server joins
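The Dynamo paper behind Riak places data with consistent hashing, so a new server only takes over part of the data instead of forcing a global reshuffle. The Python sketch below is a simplified illustration under stated assumptions: each server gets `vnodes` points on a hash ring, and a key lives on the first `n_replicas` distinct servers clockwise from it. Riak's actual scheme divides the ring into a fixed number of partitions that nodes claim; the class `Ring` and its parameters are hypothetical.

```python
import bisect
import hashlib


def ring_hash(value):
    """Stable position on the ring (MD5 used only for even spreading)."""
    return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)


class Ring:
    """Sketch of Dynamo-style consistent hashing with N replicas.

    Each server is placed on the ring at `vnodes` points; a key is stored
    on the first `n_replicas` distinct servers found clockwise from the
    key's own position.
    """

    def __init__(self, servers, n_replicas=3, vnodes=16):
        self.n_replicas = n_replicas
        self.vnodes = vnodes
        self.points = []  # sorted list of (position, server)
        for server in servers:
            self.add_server(server)

    def add_server(self, server):
        for i in range(self.vnodes):
            bisect.insort(self.points, (ring_hash(f"{server}#{i}"), server))

    def servers_for(self, key):
        start = bisect.bisect(self.points, (ring_hash(key), ""))
        result = []
        for i in range(len(self.points)):
            _, server = self.points[(start + i) % len(self.points)]
            if server not in result:
                result.append(server)
                if len(result) == self.n_replicas:
                    break
        return result


keys = [f"key{i}" for i in range(1000)]
ring = Ring(["A", "B", "C", "D"])
before = {k: ring.servers_for(k) for k in keys}
ring.add_server("E")  # the "new server"
after = {k: ring.servers_for(k) for k in keys}
moved = sum(before[k][0] != after[k][0] for k in keys)
print(f"{moved} of {len(keys)} keys got a new primary server")
```

A key changes its primary server only if one of the new server's points lands just before it on the ring, so every moved key moves to the new server and all other keys stay put.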

- 40. Tuning BASE • N: number of nodes holding a replica • R: number of nodes read from • W: number of nodes written to • Trade-off between consistency, availability and latency
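The N/R/W trade-off can be made concrete with the classic overlap rule from the Dynamo paper: if R + W > N, every set of replicas read from overlaps every set written to. The Python helper below is a hypothetical illustration, not a Riak API; it ignores effects such as sloppy quorums and concurrent writes that can still produce conflicting versions.

```python
def quorum_properties(n, r, w):
    """Classify a Dynamo-style N/R/W setting.

    n: replicas per key; r: replicas that must answer a read;
    w: replicas that must acknowledge a write.
    """
    if not (1 <= r <= n and 1 <= w <= n):
        raise ValueError("r and w must lie between 1 and n")
    return {
        # If r + w > n, every read set overlaps every write set, so a read
        # contacts at least one replica that acknowledged the last write.
        "read_overlaps_write": r + w > n,
        # How many replicas may be unreachable while reads/writes still succeed.
        "reads_survive_failures": n - r,
        "writes_survive_failures": n - w,
    }


for n, r, w in [(3, 1, 1), (3, 2, 2), (3, 3, 1)]:
    print((n, r, w), quorum_properties(n, r, w))
```

Small R and W favour availability and latency; R + W > N favours consistency at the cost of tolerating fewer failed nodes.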

- 41. Is it bulletproof?

- 42. Jepsen • Test suite for network failures etc. • https://aphyr.com/tags/jepsen • Riak succeeds • …if tuned correctly • …might still need to merge versions • https://aphyr.com/posts/285-call-me-maybe-riak
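"Might still need to merge versions" means that Riak can keep conflicting versions of a value (siblings) that the application has to resolve. A common illustration, taken from the Dynamo paper's shopping cart, is to merge by set union. The function `merge_siblings` below is a hypothetical Python sketch of such an application-level merge, not part of any Riak client.

```python
def merge_siblings(siblings):
    """Hypothetical application-level merge of conflicting cart versions.

    Takes the union of all items, so an item added on either side of a
    partition survives. A plain union cannot express deletions: an item
    removed in one version comes back if another version still has it.
    """
    merged = set()
    for cart in siblings:
        merged |= set(cart)
    return sorted(merged)


# Two versions of the same cart, written on different sides of a partition:
print(merge_siblings([["book", "pen"], ["book", "lamp"]]))  # ['book', 'lamp', 'pen']
```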

- 43. MongoDB • elasticsearch • Riak

- 46. What is MongoDB? • Name derived from "humongous" • Document-oriented • MMAPv1: memory-mapped files + journal • New in 3.0: WiredTiger for complex loads

- 47. MongoDB: Technologies • C++ • Open Source (AGPL) • Company: MongoDB, Inc.

- 48. More Features • Can store large binary objects • Its own full-text search

- 49. More Features • Map/reduce • JavaScript • Aggregation framework

- 50. Scaling MongoDB • Shard 1: Replica 1, Replica 2, Replica 3 • Shard 2: Replica 1, Replica 2, Replica 3

- 51. Availability • Shard 1: Replica 1, Replica 2, Replica 3 • Shard 2: Replica 1, Replica 2, Replica 3

- 52. Scaling MongoDB • Shard 1: Replica 1, Replica 2, Replica 3 • Shard 2: Replica 1, Replica 2, Replica 3 • Shard 3: Replica 1, Replica 2, Replica 3

- 53. Scaling MongoDB • Shard 1: Replica 1, Replica 2, Replica 3 • Shard 2: Replica 1, Replica 2, Replica 3 • ?

- 54. Tuning BASE • Write concerns • How many nodes should acknowledge the write? • Read from primary • …or also secondaries

- 55. Jepsen • MongoDB loses writes • A bug, which might still be there • Also: unacknowledged writes might still survive • …and overwrite other data • https://aphyr.com/posts/284-call-me-maybe-mongodb

- 56. MongoDB • elasticsearch • Riak

- 59. Database = Storage + Search

- 60. elasticsearch = Storage + Search

- 61. What is elasticsearch? • Search engine • Also stores the original documents • Based on the Lucene search library • Easy scaling

- 62. elasticsearch: Technologies • Java • REST • Open Source (Apache) • Backed by the company elasticsearch

- 63. elasticsearch Internals • Append-only files • Many benefits • But not great for updates
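Why append-only storage is "not too great for updates" can be seen in a generic sketch. This Python example is a simplified, hypothetical model, not Lucene's actual segment format: an update never changes data in place but appends a new record, so old versions pile up as dead records until a compaction (merge) rewrites the file.

```python
class AppendOnlyStore:
    """In-memory sketch of an append-only storage file.

    Every write, including an update, appends a new record; a read returns
    the newest record for the key. Old versions stay in the log as dead
    records until `compact` rewrites it.
    """

    def __init__(self):
        self.log = []  # (key, document) records, oldest first

    def put(self, key, document):
        self.log.append((key, document))  # never modify in place

    def get(self, key):
        # Linear scan from the end: the newest record wins.
        for record_key, document in reversed(self.log):
            if record_key == key:
                return document
        return None

    def compact(self):
        # Keep only the latest record per key (dict keeps the last assignment).
        latest = {}
        for record_key, document in self.log:
            latest[record_key] = document
        self.log = list(latest.items())


log_store = AppendOnlyStore()
log_store.put("42", {"Interest Rate": "2.5"})
log_store.put("42", {"Interest Rate": "3.0"})  # an update is just another append
print(len(log_store.log), log_store.get("42"))  # 2 {'Interest Rate': '3.0'}
log_store.compact()
print(len(log_store.log))  # 1
```

Appends are cheap and never corrupt existing data, which is one of the benefits; frequent updates, however, create garbage that costs space and merge work.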

- 64. Scaling elasticsearch • Three servers • Shard 1, Shard 2, Shard 3 and Replica 1, Replica 2, Replica 3 spread across the servers

- 65. Tuning BASE • Write acknowledgment: 1, majority, all • Including indexing • Read from primary • …or also secondaries
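The acknowledgment levels "1, majority, all" decide whether a write still succeeds when some copies of a shard are unreachable. The Python sketch below is a hypothetical model of that rule, not the elasticsearch or MongoDB API; it counts the primary and its replicas together as `replicas`.

```python
def required_acks(level, replicas):
    """Number of copies (primary + replicas) that must acknowledge a write.

    level: 1, "majority" or "all", the three options named on the slide.
    replicas: total copies of the shard, primary included.
    """
    if level == 1:
        return 1
    if level == "majority":
        return replicas // 2 + 1
    if level == "all":
        return replicas
    raise ValueError(f"unknown level: {level!r}")


def write_succeeds(level, replicas, reachable):
    """A write is acknowledged only if enough copies are reachable."""
    return reachable >= required_acks(level, replicas)


# 3 copies, one node partitioned away (2 reachable):
for level in (1, "majority", "all"):
    print(level, write_succeeds(level, replicas=3, reachable=2))
```

Level 1 is the most available but risks losing a write acknowledged by a single node; "all" is the safest but fails as soon as one copy is unreachable.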

- 66. Jepsen • Loses data even if just one node is partitioned (June 2014) • Actively worked on • It’s a search engine… • https://aphyr.com/posts/317-call-me-maybe-elasticsearch • http://www.elasticsearch.org/guide/en/elasticsearch/resiliency/current/

- 67. Scenarios for elasticsearch

- 68. Search • Powerful query language • Configurable index • Text analysis • Stop words • Stemming
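Text analysis is what separates a search engine from a plain document store. The Python sketch below illustrates the idea under simplifying assumptions: text is lowercased and tokenized, stop words are dropped, a deliberately crude suffix-stripping stemmer stands in for real algorithms such as Porter stemming, and the resulting terms go into an inverted index. The stop-word list, the stemmer and all function names are hypothetical; elasticsearch configures analyzers instead.

```python
import re
from collections import defaultdict

STOP_WORDS = {"a", "an", "and", "the", "of", "in", "is"}  # tiny illustrative list


def stem(word):
    """Very crude suffix-stripping stemmer (illustration only)."""
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def analyze(text):
    """Text analysis: lowercase, tokenize, drop stop words, stem."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [stem(t) for t in tokens if t not in STOP_WORDS]


def build_index(docs):
    """Inverted index: term -> set of document ids."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in analyze(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing all analyzed query terms."""
    terms = analyze(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {1: "Scaling the databases", 2: "Searching documents", 3: "A database of documents"}
index = build_index(docs)
print(search(index, "database"))  # {1, 3}
```

Because query and documents go through the same analysis, "database" also finds "databases", and a query consisting only of stop words finds nothing.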

- 69. Facets • Number of hits by category • Useful for statistics • …and Big Data • Statistical facets (+ computation) • Range facets etc.
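Facets summarize a result set instead of listing it. The Python sketch below computes the three kinds named on the slide over a list of hits: counts per category, counts per value range, and simple statistics. The hit data and function names are hypothetical, and later elasticsearch versions replaced facets with aggregations, so this shows the concept rather than any query syntax.

```python
from collections import Counter


def terms_facet(hits, field):
    """Number of hits per category, i.e. per value of `field`."""
    return Counter(hit[field] for hit in hits if field in hit)


def range_facet(hits, field, ranges):
    """Number of hits whose numeric `field` lies in each [low, high) range."""
    return {
        (low, high): sum(1 for hit in hits if field in hit and low <= hit[field] < high)
        for low, high in ranges
    }


def statistical_facet(hits, field):
    """Simple statistics computed over a numeric field of all hits."""
    values = [hit[field] for hit in hits if field in hit]
    if not values:
        return {"count": 0}
    return {"count": len(values), "min": min(values), "max": max(values),
            "mean": sum(values) / len(values)}


hits = [  # hypothetical search hits
    {"type": "Stock", "price": 10.0},
    {"type": "Stock", "price": 55.0},
    {"type": "Zero Bond", "price": 98.5},
]
print(terms_facet(hits, "type"))                         # Counter({'Stock': 2, 'Zero Bond': 1})
print(range_facet(hits, "price", [(0, 50), (50, 100)]))  # {(0, 50): 1, (50, 100): 2}
print(statistical_facet(hits, "price"))
```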

- 70. MongoDB • elasticsearch • Riak

- 72. Conclusion • Relational databases might be BASE • NoSQL embraces BASE better • Key / value stores, document stores and search engines: very similar features • Care about scaling • Care about resilience

- 74. Thank You!