OpenPOWER Acceleration of HPCC Systems

JT Kellington (IBM) and Allan Cantle (Nallatech) present at the 2015 HPCC Systems Engineering Summit Community Day on porting HPCC Systems to the POWER8-based ppc64el architecture.

Ad

More Related Content

What's hot (20)

High-Performance and Scalable Designs of Programming Models for Exascale Systems

High-Performance and Scalable Designs of Programming Models for Exascale Systemsinside-BigData.com - The document discusses programming models and challenges for exascale systems. It focuses on MPI and PGAS models like OpenSHMEM.

- Key challenges include supporting hybrid MPI+PGAS programming, efficient communication for multi-core and accelerator nodes, fault tolerance, and extreme low memory usage.

- The MVAPICH2 project aims to address these challenges through its high performance MPI and PGAS implementation and optimization of communication for technologies like InfiniBand.

OpenHPC: A Comprehensive System Software Stack

OpenHPC: A Comprehensive System Software Stackinside-BigData.com "OpenHPC is a collaborative, community effort that initiated from a desire to aggregate a number of common ingredients required to deploy and manage High Performance Computing (HPC) Linux clusters including provisioning tools, resource management, I/O clients, development tools, and a variety of scientific libraries. Packages provided by OpenHPC have been pre-built with HPC integration in mind with a goal to provide re-usable building blocks for the HPC community. Over time, the community also plans to identify and develop abstraction interfaces between key components to further enhance modularity and interchangeability. The community includes representation from a variety of sources including software vendors, equipment manufacturers, research institutions, supercomputing sites, and others."

Watch the video: http://wp.me/p3RLHQ-gKz

Learn more: http://openhpc.community/

Sign up for our insideHPC Newsletter: http://insidehpc.com/newsletter

Omp tutorial cpugpu_programming_cdac

Omp tutorial cpugpu_programming_cdacGanesan Narayanasamy This document provides an introduction to GPU programming using OpenMP. It discusses what OpenMP is, how the OpenMP programming model works, common OpenMP directives and constructs for parallelization including parallel, simd, work-sharing, tasks, and synchronization. It also covers how to write, compile and run an OpenMP program. Finally, it describes how OpenMP can be used for GPU programming using target directives to offload work to the device and data mapping clauses to manage data transfer between host and device.

00 opencapi acceleration framework yonglu_ver2

00 opencapi acceleration framework yonglu_ver2Yutaka Kawai This document discusses OpenCAPI acceleration using the OpenCAPI Acceleration Framework (oc-accel). It provides an overview of the oc-accel components and workflow, benchmarks the OC-Accel bandwidth and latency, and provides examples of how to fully utilize OC-Accel capabilities to accelerate functions on an FPGA. The document also outlines the OC-Accel development process and previews upcoming features like support for ODMA to port existing PCIe accelerators to OpenCAPI.

OpenPOWER Latest Updates

OpenPOWER Latest UpdatesGanesan Narayanasamy This presentation covers various partners and collaborators who are currently working with OpenPOWER foundation ,Use cases of OpenPOWER systems in multiple Industries , OpenPOWER Workgroups and OpenCAPI features .

DOME 64-bit μDataCenter

DOME 64-bit μDataCenterinside-BigData.com In this deck, Ronald P. Luijten from IBM Research in Zurich presents: DOME 64-bit μDataCenter.

I like to call it a datacenter in a shoebox. With the combination of power and energy efficiency, we believe the microserver will be of interest beyond the DOME project, particularly for cloud data centers and Big Data analytics applications."

The microserver’s team has designed and demonstrated a prototype 64-bit microserver using a PowerPC based chip from Freescale Semiconductor running Linux Fedora and IBM DB2. At 133 × 55 mm2 the microserver contains all of the essential functions of today’s servers, which are 4 to 10 times larger in size. Not only is the microserver compact, it is also very energy-efficient.

Watch the video: http://wp.me/p3RLHQ-gJM

Learn more: https://www.zurich.ibm.com/microserver/

Sign up for our insideHPC Newsletter: http://insideHPC/newsletter

Lenovo HPC: Energy Efficiency and Water-Cool-Technology Innovations

Lenovo HPC: Energy Efficiency and Water-Cool-Technology Innovationsinside-BigData.com In this deck from the 2018 Swiss HPC Conference, Karsten Kutzer from Lenovo presents: Energy Efficiency and Water-Cool-Technology Innovations.

"This session will discuss why water cooling is becoming more and more important for HPC data centers, Lenovo’s series of innovations in the area of direct water-cooled systems, and ways to re-use “waste heat” created by HPC systems."

Watch the video: https://wp.me/p3RLHQ-iDl

Learn more:

http://lenovo.com

and

http://www.hpcadvisorycouncil.com/events/2018/swiss-workshop/agenda.php

Sign up for our insideHPC Newsletter: http://insidehpc.com/newsletter

BXI: Bull eXascale Interconnect

BXI: Bull eXascale Interconnectinside-BigData.com In this deck, Jean-Pierre Panziera from Atos presents: BXI - Bull eXascale Interconnect.

"Exascale entails an explosion of performance, of the number of nodes/cores, of data volume and data movement. At such a scale, optimizing the network that is the backbone of the system becomes a major contributor to global performance. The interconnect is going to be a key enabling technology for exascale systems. This is why one of the cornerstones of Bull’s exascale program is the development of our own new-generation interconnect. The Bull eXascale Interconnect or BXI introduces a paradigm shift in terms of performance, scalability, efficiency, reliability and quality of service for extreme workloads."

Watch the video: http://wp.me/p3RLHQ-gJa

Learn more: https://bull.com/bull-exascale-interconnect/

Sign up for our insideHPC Newsletter: http://insidehpc.com/newsletter

ARM HPC Ecosystem

ARM HPC Ecosysteminside-BigData.com "Algorithmic processing performed in High Performance Computing environments impacts the lives of billions of people, and planning for exascale computing presents significant power challenges to the industry. ARM delivers the enabling technology behind HPC. The 64-bit design of the ARMv8-A architecture combined with Advanced SIMD vectorization are ideal to enable large scientific computing calculations to be executed efficiently on ARM HPC machines. In addition ARM and its partners are working to ensure that all the software tools and libraries, needed by both users and systems administrators, are provided in readily available, optimized packages."

Learn more: https://developer.arm.com/hpc

and

http://hpcuserforum.com

Sign up for our insideHPC Newsletter: http://insidehpc.com/newsletter

OpenPOWER Webinar

OpenPOWER Webinar Ganesan Narayanasamy The document discusses strategies for improving application performance on POWER9 processors using IBM XL and open source compilers. It reviews key POWER9 features and outlines common bottlenecks like branches, register spills, and memory issues. It provides guidelines on using compiler options and coding practices to address these bottlenecks, such as unrolling loops, inlining functions, and prefetching data. Tools like perf are also described for analyzing performance bottlenecks.

Infrastructure et serveurs HP

Infrastructure et serveurs HPProcontact Informatique The document discusses Hewlett-Packard's latest server hardware and management solutions. It introduces the HP ProLiant Gen8 servers which feature improved performance, capacity, and energy efficiency compared to previous generations. New capabilities of the HP iLO 4 management engine and HP SUM software allow for easier remote administration and firmware updates. The document also highlights enhanced storage features such as predictive failure detection and automated data mirroring in HP's Smart Array controllers.

AMD It's Time to ROC

AMD It's Time to ROCinside-BigData.com In this video from the HPC User Forum in Tucson, Gregory Stoner from AMD presents: It's Time to ROC.

"With the announcement of the Boltzmann Initiative and the recent releases of ROCK and ROCR, AMD has ushered in a new era of Heterogeneous Computing. The Boltzmann initiative exposes cutting edge compute capabilities and features on targeted AMD/ATI Radeon discrete GPUs through an open source software stack. The Boltzmann stack is comprised of several components based on open standards, but extended so important hardware capabilities are not hidden by the implementation."

Learn more: http://gpuopen.com/getting-started-with-boltzmann-components-platforms-installation/

and

http://hpcuserforum.com

Watch the video presentation: http://wp.me/p3RLHQ-fcJ

MIT's experience on OpenPOWER/POWER 9 platform

Ganesan Narayanasamy: The document discusses using temporal shift modules (TSM) for efficient video recognition. TSM enables temporal modeling in 2D CNNs at no additional computation cost. TSM models achieve better performance than 3D CNNs and previous methods while using less computation, and can be used for applications like online video understanding, low-latency deployment on edge devices, and large-scale distributed training on supercomputers.

High Performance Interconnects: Landscape, Assessments & Rankings

inside-BigData.com: “Dan Olds will present recent research into the history of High Performance Interconnects (HPI), the current state of the HPI market, where HPIs are going in the future, and how customers should evaluate HPI options today. This will be a highly informative and interactive session.”

Watch the video: http://insidehpc.com/2017/04/high-performance-interconnects-assessments-rankings-landscape/

Learn more: http://orionx.net

Open CAPI, A New Standard for High Performance Attachment of Memory, Accelera...

inside-BigData.com: In this deck from the Switzerland HPC Conference, Jeffrey Stuecheli from IBM presents: Open CAPI, A New Standard for High Performance Attachment of Memory, Acceleration, and Networks.

"OpenCAPI sets a new standard for the industry, providing a high bandwidth, low latency open interface design specification. This session will introduce the new standard and it's goals. This includes details on how the interface protocol provides unprecedented latency and bandwidth to attached devices."

Watch the video: http://wp.me/p3RLHQ-gDZ

Learn more: http://opencapi.org/

and

http://hpcadvisorycouncil.com/events/2017/swiss-workshop/agenda.php

GEN-Z: An Overview and Use Cases

inside-BigData.com: This document provides an overview of Gen-Z, a new interconnect architecture proposed to address challenges with increasing data growth, flat memory capacity, and the need for real-time data insights. Gen-Z is designed to provide high bandwidth and low latency memory semantic communications across systems. It breaks the traditional processor-memory interlock by introducing a split controller model. This allows for more flexible and composable solutions that can leverage different memory technologies. The Gen-Z Consortium is developing open standards for the architecture with the goal of enabling innovation through an open and non-proprietary approach.

It's Time to ROCm!

inside-BigData.com: AMD has been away from the HPC space for a while, but now they are coming back in a big way with an open software approach to GPU computing. The Radeon Open Compute Platform (ROCm) was born from the Boltzmann Initiative announced last year at SC15. Now available on GitHub, the ROCm Platform brings a rich foundation to advanced computing by better integrating the CPU and GPU to solve real-world problems.

"We are excited to present ROCm, the first open-source HPC/ultrascale-class platform for GPU computing that’s also programming-language independent. We are bringing the UNIX philosophy of choice, minimalism and modular software development to GPU computing. The new ROCm foundation lets you choose or even develop tools and a language run time for your application."

Watch the video presentation: http://wp.me/p3RLHQ-fJT

Learn more: https://radeonopencompute.github.io/

NWU and HPC

Wilhelm van Belkum: The document discusses plans to establish an institutional high performance computing (HPC) facility at North-West University. It outlines the technical goals of building a Beowulf cluster to link existing departmental clusters and integrate with national and international computational grids. It also discusses management principles for the new HPC facility to ensure sustainability, efficiency, reliability, availability and high performance.

Best Practices and Performance Studies for High-Performance Computing Clusters

Intel® Software: The document discusses best practices and a performance study of HPC clusters. It covers system configuration and tuning, building applications, Intel Xeon processors, efficient execution methods, tools for boosting performance, and application performance highlights using HPL and HPCG benchmarks. The document contains agenda items, market share data, typical BIOS settings, compiler flags, MPI usage, and performance results from single node and cluster runs of the benchmarks.

Meet HBase 2.0 and Phoenix 5.0

DataWorks Summit: This talk will give an overview of two exciting releases, Apache HBase 2.0 and Phoenix 5.0. HBase provides a NoSQL column store on Hadoop for random, real-time read/write workloads. Phoenix provides SQL on top of HBase. HBase 2.0 contains a large number of features that were a long time in development, including rewritten region assignment, performance improvements (RPC, rewritten write pipeline, etc.), async clients and WAL, a C++ client, offheaping memstore and other buffers, shading of dependencies, as well as a lot of other fixes and stability improvements. We will go into detail on some of the most important improvements in the release, as well as the implications for users in terms of API and upgrade paths. Phoenix 5.0 is the next big Phoenix release because of its integration with HBase 2.0 and a lot of performance improvements in support of secondary indexes. It has many important new features such as encoded columns, Kafka and Hive integration, and many other performance improvements. This session will also describe the use cases that HBase and Phoenix are a good architectural fit for.

Speaker: Alan Gates, Co-Founder, Hortonworks

Similar to OpenPOWER Acceleration of HPCC Systems (20)

Demystify OpenPOWER

Anand Haridass: The document discusses OpenPOWER, an open ecosystem using the POWER architecture to share expertise, investment, and intellectual property. It outlines the goals of the OpenPOWER Foundation to serve evolving customer needs through collaborative innovation and solutions. Examples are provided of innovations developed through partnerships, such as accelerated databases, optimized flash storage, and high performance computing systems. The benefits of the OpenPOWER approach for customers are affirmed through adoption of Linux distributions and cloud deployments.

6 open capi_meetup_in_japan_final

Yutaka Kawai: OpenCAPI is an open standard interface that provides high bandwidth and low latency connections between processors, accelerators, memory and storage. It addresses the growing need for increased performance driven by workloads like AI and the limitations of Moore's Law. OpenCAPI supports a heterogeneous system architecture with technologies like FPGAs and different memory types. It uses a thin protocol stack and virtual addressing to minimize latency. The SNAP framework also makes programming accelerators using OpenCAPI easier by abstracting the hardware details.

Assisting User’s Transition to Titan’s Accelerated Architecture

inside-BigData.com: Oak Ridge National Lab is home to Titan, the largest GPU accelerated supercomputer in the world. This fact alone can be intimidating for users new to leadership computing facilities. Our facility has collected over four years of experience helping users port applications to Titan. This talk will explain common paths and tools to successfully port applications, and expose common difficulties experienced by new users. Lastly, learn how our free and open training program can assist your organization in this transition.

Scaling Redis Cluster Deployments for Genome Analysis (featuring LSU) - Terry...

Redis Labs: Timely genome analysis requires a fresh approach to platform design for big data problems. Louisiana State University has tested enterprise cluster deployments of Redis with a unique solution that allows flash memory to act as extended RAM. Learn about how this solution allows large amounts of data to be handled with a fraction of the memory needed for a typical deployment.

New Generation of IBM Power Systems Delivering value with Red Hat Enterprise ...

Filipe Miranda: New Generation of IBM Power Systems Delivering value with Red Hat Enterprise Linux. Learn about the new IBM POWER8 architecture, Red Hat Enterprise Linux 7 for Power Systems, and EnterpriseDB guidance on migrating from Oracle to PostgreSQL.

UPDATED!

OpenCAPI Technology Ecosystem

Ganesan Narayanasamy: The Open Coherent Accelerator Processor Interface (OpenCAPI) is an industry-standard architecture targeted at emerging accelerator solutions and workloads. This session will address the following areas: 1) the latest technology advancements surrounding OpenCAPI; 2) the OpenCAPI strategy as it relates to the other industry acceleration standards, i.e. Intel's CXL, Gen-Z, and CCIX; 3) the open initiatives surrounding OMI and OpenCAPI 3.0 and GitHub; 4) industry open source initiatives around OpenCAPI; 5) OC-Accel, our new FPGA programming framework, supporting OpenCAPI 3.0 and targeting higher-level programming languages such as C and C++; 6) interesting use cases.

Sparc t4 systems customer presentation

solarisyougood: The document describes Oracle's new SPARC T4 servers, which provide up to 5x better single-threaded performance than previous SPARC servers. The SPARC T4 servers are optimized for Oracle software like the Oracle Database and WebLogic Suite. They include integrated security features like encryption without performance penalties. The document provides an overview of the SPARC T4 processor architecture and performance advantages, and describes how the new servers are optimized solutions for running Oracle applications.

Ceph Community Talk on High-Performance Solid Sate Ceph

Ceph Community: The document summarizes a presentation given by representatives from various companies on optimizing Ceph for high-performance solid state drives. It discusses testing a real workload on a Ceph cluster with 50 SSD nodes that achieved over 280,000 read and write IOPS. Areas for further optimization were identified, such as reducing latency spikes and improving single-threaded performance. Various companies then described their contributions to Ceph performance, such as Intel providing hardware for testing and Samsung discussing SSD interface improvements.

GTC15-Manoj-Roge-OpenPOWER

Achronix: This document discusses trends in data centers and the challenges of scaling compute power efficiently. It notes the need for heterogeneous computing and workload acceleration to address issues like "dark silicon" where only a fraction of CPU transistors can be active due to power and thermal constraints. FPGAs are presented as a solution for acceleration by providing increased throughput and lower latency compared to CPUs for various workloads. The document outlines Xilinx's software-defined development environments and how their solutions have demonstrated significant performance and efficiency gains when used for acceleration over CAPI (Coherent Accelerator Processor Interface) in IBM Power8 systems. Overall it promotes rethinking data center architecture and harnessing the potential of FPGAs through an open ecosystem like OpenPOWER.

Heterogeneous Computing on POWER - IBM and OpenPOWER technologies to accelera...

Cesar Maciel: Heterogeneous computing refers to systems that use more than one kind of processor and direct each application to the processor that is most efficient for that specific task. Power Systems servers based on the POWER8 processor support several accelerators that are integrated into the system to improve the efficiency of an application.

Introduction to HPC & Supercomputing in AI

Tyrone Systems: Catch up with our live webinar on Natural Language Processing! Learn about how it works and how it applies to you. We have provided all the information in our video recording, so you will not miss out.

Watch the Natural Language Processing webinar here!

Maxwell siuc hpc_description_tutorial

madhuinturi: This document provides an introduction to high-performance computing (HPC) including definitions, applications, hardware, and software. It defines HPC as utilizing parallel processing through computer clusters and supercomputers to solve complex modeling problems. The document then describes typical HPC cluster hardware such as computing nodes, a head node, switches, storage, and a KVM. It also outlines cluster management software, job scheduling, and parallel programming tools like MPI that allow programs to run simultaneously on multiple processors. An example HPC cluster at SIU called Maxwell is presented with its technical specifications and a tutorial on logging into and running simple MPI programs on the system.

Japan's post K Computer

inside-BigData.com: In this deck from the HPC User Forum in Austin, Yutaka Ishikawa from Riken AICS presents: Japan's post K Computer.

Watch the video presentation: http://wp.me/p3RLHQ-fJ6

Learn more: http://hpcuserforum.com

OCP Telco Engineering Workshop at BCE2017

Radisys Corporation: Radisys' CTO, Andrew Alleman, was one of the featured speakers at the OCP Telco Engineering Workshop during the 2017 Big Communications Event. Andrew discussed carrier-grade open rack architecture (CG-OpenRack-19), the future of open hardware standards and commercial products in the OCP pipeline during his presentation.

ODP Presentation LinuxCon NA 2014

Michael Christofferson: The document discusses the Open Data Plane (ODP) project, which aims to create an open source framework for data plane applications. ODP provides a standardized API to enable networking applications across different architectures like ARM, Intel and PowerPC. It is based on the Event Machine model of work-driven processing. ODP implementations optimize the API for different hardware platforms while providing application portability. The project aims to support functions like dynamic load balancing, power management, and virtual switch integration.

Heterogeneous Computing : The Future of Systems

Anand Haridass: Charts from NITK-IBM Computer Systems Research Group (NCSRG)

Topics: Dennard scaling, Moore's Law, OpenPOWER, storage class memory, FPGA, GPU, CAPI, OpenCAPI, NVIDIA NVLink, and heterogeneous system usage at Google and Microsoft.

Challenges in Embedded Computing

Pradeep Kumar TS: This document discusses embedded computing and microcontrollers. It provides information on characteristics of embedded systems like meeting deadlines and real-time constraints. It explains why microprocessors are useful for implementing digital systems efficiently. Microprocessors can be customized for different price points and markets. The document also discusses challenges in embedded computing like power consumption and testing. It provides specifications of computer components like the processor, memory, and ports. Finally, it describes several families of microcontrollers like the Intel 4004, 8051, and ARM profiles.

Hyper threading

Anmol Purohit: This document discusses hyper-threading technology, which allows processors to work more efficiently by executing two threads simultaneously. It begins with an introduction to hyper-threading and then covers key concepts like how it works, the replicated and shared resources, applications that benefit from it, and advantages like improved performance and increased number of supported users. It also notes disadvantages like increased overhead and potential shared resource conflicts.

@IBM Power roadmap 8

Diego Alberto Tamayo: Power servers

Power your cloud. Not all clouds are built the same. Learn about the unique requirements of cloud for big data and analytics.

Mauricio breteernitiz hpc-exascale-iscte

mbreternitz: The document discusses high performance computing and the path towards exascale systems. It covers key application requirements in areas like cancer research, climate modeling, and materials science. Technological challenges for exascale include power and resilience issues. The US Department of Energy is funding several exascale development programs through 2020, including the CANDLE project applying deep learning to precision cancer medicine. Reaching exascale will enable new capabilities in big data analytics, machine learning, and commercial applications.

More from HPCC Systems (20)

Natural Language to SQL Query conversion using Machine Learning Techniques on...

HPCC Systems: Presented at the RV College of Engineering Multidisciplinary Trends in Information Technology, July 21 - 25, 2020.

Improving Efficiency of Machine Learning Algorithms using HPCC Systems

HPCC Systems:

1) The document discusses improving the efficiency of machine learning algorithms using the HPCC Systems platform through parallelization.

2) It describes the HPCC Systems architecture and its advantages for distributed machine learning.

3) A parallel DBSCAN algorithm is implemented on the HPCC platform which shows improved performance over the serial algorithm, with execution times decreasing as more nodes are used.

Towards Trustable AI for Complex Systems

HPCC Systems: This document provides a summary of a presentation on achieving trustable artificial intelligence (AI) for complex systems. The presentation discusses making data, systems understanding, and AI algorithms more trustable. It suggests deeper data extraction, wider integration of multi-modal data, and augmenting limited data to make data more trustable. A holistic view of systems and balancing simplification and complication can aid understanding. Moving beyond correlation to causation and explaining rather than treating AI as a black box can improve trust in algorithms. The overall goal is to develop explainable and reliable AI that humans will feel confident using to understand and manage complex life science and information technology systems.

Welcome

HPCC Systems: The document thanks sponsors of different levels for an HPCC summit. It also provides a link to a YouTube video from the summit that is 20 minutes and 19 seconds long and is part of a playlist.

Closing / Adjourn

HPCC Systems: The document thanks sponsors of different levels for the #HPCCSummit. It also provides a link to a YouTube video, 1 hour and 41 minutes long, that is the tenth video in a playlist related to the summit.

Community Website: Virtual Ribbon Cutting

HPCC Systems: The reveal of how HPCC Systems is improving and expanding around the world to build a global community.

Path to 8.0

HPCC Systems: Come hear a brief overview on the direction the HPCC Systems platform is heading, and get a glimpse into some of the likely highlights included in the next minor and major versions.

Release Cycle Changes

HPCC Systems: This talk will explain the reasoning behind the release cycle changes, and how overcoming the challenges faced in the previous practice of automated testing has introduced new benefits and broader acceptance from the wider community.

Geohashing with Uber’s H3 Geospatial Index

HPCC Systems: An introductory look at the ECL H3 Plugin (available since v7.2.0): a journey from lat/long to ROXIE service-driven visualizations.

Advancements in HPCC Systems Machine Learning

HPCC Systems: This presentation will provide an overview of the latest advancements in Machine Learning modules over the past year, including Clustering, Natural Language Processing, Deep Learning, and the Expanded Model Evaluation Metrics.

Clustering Methods of the HPCC Systems Machine Learning Library

Clustering is an important part of unsupervised learning. To provide this capability, two widely applied clustering methods, KMeans and DBSCAN, have been added to the current Machine Learning library. This presentation will introduce the newly developed clustering algorithms and the evaluation methods.

Docker Support

HPCC Systems: Learn how to package the HPCC Systems Platform in a Docker container and deploy it locally, and build an HPCC Systems Platform AMI followed by an AWS deployment.

Expanding HPCC Systems Deep Neural Network Capabilities

HPCC Systems: The training process for modern deep neural networks requires big data and large computational power. Though HPCC Systems excels at both of these, HPCC Systems is limited to utilizing the CPU only. It has been shown that GPU acceleration vastly improves Deep Learning training time. In this talk, Robert will explain how HPCC Systems became the first GPU accelerated library while also greatly expanding its deep neural network capabilities.

Leveraging Intra-Node Parallelization in HPCC Systems

Leveraging Intra-Node Parallelization in HPCC SystemsHPCC Systems This document discusses leveraging intra-node parallelization in HPCC Systems to improve the performance of set similarity joins (SSJ). It describes a naïve approach to computing SSJ that suffers from memory exhaustion and straggling executors. The presented approach replicates and groups independent data using hashing to address these issues while enabling efficient use of multiple CPU cores through multithreading. Experiments show the approach scales to larger datasets and achieves better performance by increasing the number of threads per executor. Lessons learned include that less complex optimizations are more robust in distributed environments.

DataPatterns - Profiling in ECL Watch

DataPatterns - Profiling in ECL Watch HPCC Systems DataPatterns is an ECL bundle that provides data profiling and research tools. It has been integrated into the HPCC Systems ECL Standard Library and ECL Watch. The presentation discusses improvements to DataPatterns, how it can be used from ECL code or within ECL Watch to generate profiling reports, and differences between installations from the ECL bundle, Standard Library, or ECL Watch. It also demonstrates using DataDetectors models to examine sample data and previews the new Cloud IDE for browser-based ECL programming.

Leveraging the Spark-HPCC Ecosystem

Leveraging the Spark-HPCC Ecosystem HPCC Systems Join us for an introductory walk-through of using the Spark-HPCC Systems ecosystem to analyze your HPCC Systems data using a collaborative Apache Zeppelin notebook environment.

Work Unit Analysis Tool

Work Unit Analysis ToolHPCC Systems The Workunit Analyser examines the entire workunit to produce advice that both novices and experienced ECL developers should find useful. The Workunit Analyser is a post-execution analyser that identifies potential issues and assists users in writing better ECL.

Community Award Ceremony

Community Award Ceremony HPCC Systems Let's congratulate the winners of the 2019 HPCC Systems Poster Competition and other award recipients.

Dapper Tool - A Bundle to Make your ECL Neater

Dapper Tool - A Bundle to Make your ECL NeaterHPCC Systems Have you ever written a long project for a simple column rename and thought, this should be easier? What about nicely named output statements? Yeah they bother me too. Oh, and DEDUP(SORT(DISTINCT()))? There is a better way! Learn how Dapper can help!

A Success Story of Challenging the Status Quo: Gadget Girls and the Inclusion...

A Success Story of Challenging the Status Quo: Gadget Girls and the Inclusion...HPCC Systems Join NSU University School student and program leader for girls in robotics, Ronnie Shashoua, as she talks about Gadget Girls - a project in collaboration with the NSU Alvin Sherman Library, NSU University School, the South Florida Girl Scouts, and sponsorship from the HPCC Systems Academic Program. Gadget Girls is a program aimed at encouraging girls in fourth and fifth grade to explore their interests in and love for STEM, especially robotics and engineering. Shashoua will discuss the underrepresentation of girls in the Florida Vex Robotics circuit, such as how it demonstrates a larger trend of low numbers of women undertaking STEM educational and career paths and the role it played in inspiring the creation of Gadget Girls.

Beyond the Spectrum – Creating an Environment of Diversity and Empowerment wi...

Beyond the Spectrum – Creating an Environment of Diversity and Empowerment wi...HPCC Systems Hear how the Florida Atlantic University Center for Autism and Related Disabilities has partnered with the HPCC Systems community to provide young people with autism both the technology and professional skills needed to compete in today’s workplace. Mentoring and hands-on coding through ECL workshops have positively impacted students, opening doors to new opportunities for both students and employers.

OpenPOWER Acceleration of HPCC Systems

- 1. OpenPOWER Acceleration of HPCC Systems
  - 2015 LexisNexis® Risk Solutions HPCC Engineering Summit – Community Day
  - Presenters: JT Kellington & Allan Cantle
  - September 29, 2015, Delray Beach, FL
  - Accelerating the Pace of Innovation

- 2. Overview/Agenda
  - The OpenPOWER Story
  - Porting HPCC Systems to ppc64el
  - POWER8 at a Glance
  - POWER8 Data Centric Architectures with FPGA Acceleration
  - Summary

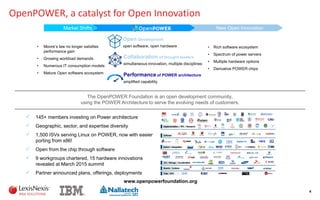

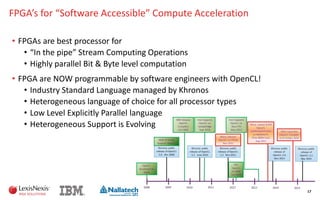

- 4. OpenPOWER, a catalyst for Open Innovation
  - Market Shifts
    - Moore’s law no longer satisfies performance gain
    - Growing workload demands
    - Numerous IT consumption models
    - Mature Open software ecosystem
  - New Open Innovation
    - Rich software ecosystem
    - Spectrum of power servers
    - Multiple hardware options
    - Derivative POWER chips
  - Performance of POWER architecture: amplified capability
  - Open Development: open software, open hardware
  - Collaboration of thought leaders: simultaneous innovation, multiple disciplines
  - The OpenPOWER Foundation is an open development community, using the POWER Architecture to serve the evolving needs of customers.
  - 145+ members investing on Power architecture
  - Geographic, sector, and expertise diversity
  - 1,500 ISVs serving Linux on POWER, now with easier porting from x86!
  - Open from the chip through software
  - 9 workgroups chartered, 15 hardware innovations revealed at March 2015 summit
  - Partner announced plans, offerings, deployments
  - www.openpowerfoundation.org

- 5. Porting HPCC Systems to ppc64el

- 6. HPCC Systems and POWER8 Designed For Easy Porting
  - HPCC Systems Framework
    - ECL is Platform Agnostic
    - Hardware and architecture changes transparent to end user
    - CMake, gcc, binutils, Node.js, etc.
    - Open Source!
    - Quick and easy collaboration
  - POWER8 Designed for Open Innovation
    - Little Endian OS
    - More compatible with industry
    - IBM Software Development Kit for Linux on Power
    - Advance Toolchain
    - Post-Link Optimization
    - Linux performance analysis tools
    - Full support in Ubuntu, RHEL, and SLES

- 7. Porting Details
  - Initial port took less than a week!
    - Added ARCH defines for PPC64EL (system/include/platform.h)
    - Added stack description for POWER8 (ecl/hql/hqlstack.hpp)
    - Added binutils support for powerpc (ecl/hqlcpp/hqlres.cpp)
  - Deployment and testing took a bit longer
    - Couple of bugs found:
    - Stricter alignment for atomic operations
    - New compiler did not allow compare of “this” to NULL
    - Updates to Init system with latest Ubuntu
    - Monkey on the keyboard!
    - Basic setup and running was easy, but harder to go to the “next level”
    - Storage configuration, memory usage, thread support, etc.
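Two of the porting items above, the ARCH defines and the stricter alignment for atomic operations, can be illustrated with a minimal C++ sketch. This is not the code from the HPCC Systems tree: the macro `SKETCH_ARCH_NAME` and the helpers `sketchArchName`, `isAlignedFor`, and `makeCounterIn` are hypothetical names chosen for illustration. Only the compiler-predefined macros (`__powerpc64__`, `__BYTE_ORDER__`, `__ORDER_LITTLE_ENDIAN__`, `__x86_64__`) are real GCC/Clang symbols.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <new>

// Hypothetical macro name, for illustration only; the actual defines in
// system/include/platform.h are not shown in the slides.
#if defined(__powerpc64__) && defined(__BYTE_ORDER__) && \
    (__BYTE_ORDER__ == __ORDER_LITTLE_ENDIAN__)
#define SKETCH_ARCH_NAME "ppc64el"
#elif defined(__x86_64__)
#define SKETCH_ARCH_NAME "x86_64"
#else
#define SKETCH_ARCH_NAME "other"
#endif

// Returns the architecture label selected at compile time.
inline const char* sketchArchName() { return SKETCH_ARCH_NAME; }

// True when p satisfies the alignment requirement of T.
template <typename T>
bool isAlignedFor(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) % alignof(T) == 0;
}

using Counter = std::atomic<std::int64_t>;

// Constructs an atomic counter in caller-supplied storage and insists on
// correct alignment first. Placing an atomic in misaligned storage is
// undefined behaviour, which a stricter target may expose as a fault.
inline Counter* makeCounterIn(void* storage) {
    assert(isAlignedFor<Counter>(storage));
    return new (storage) Counter(0);
}
```

A caller would reserve storage with `alignas(Counter)` before calling `makeCounterIn`. The point of the sketch is that alignment assumptions are checked explicitly instead of relying on what a particular architecture happens to tolerate.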

- 8. POWER8 at a Glance

- 9. POWER8 Is Designed For Big Data!
  - Technology
    - 22 nm SOI, eDRAM, 15 ML 650 mm²
  - Caches
    - 512 KB SRAM L2 / core
    - 96 MB eDRAM shared L3
  - Memory
    - Up to 230 GB/s sustained bandwidth
  - Bus Interfaces
    - Durable open memory attach interface
    - Up to 48x Integrated PCI gen3/socket
    - SMP interconnect
    - CAPI
  - Cores
    - 12 cores (SMT8)
    - 8 dispatch, 10 issue, 16 execution pipes
    - 2x internal data flows/queues
    - Enhanced prefetching
    - 64 KB data cache, 32 KB instruction cache
  - Accelerators
    - Crypto and memory expansion
    - Transactional memory
    - VMM assist
    - Data move/VM mobility
  - [Diagram: POWER8 Scale-Out Dual Chip Module, showing cores, L2 caches, L3 banks, chip interconnect, memory buses, and SMP, CAPI, and PCIe links]

- 10. POWER8 Introduces CAPI Technology
  - [Diagram: POWER8 Processor with CAPP, connected over PCIe to an FPGA containing the IBM Supplied POWER Service Layer and the Accelerated Functional Unit (AFU)]
  - Typical I/O Model Flow: DD Call → Copy or Pin Source Data → MMIO Notify Accelerator → Acceleration → Poll / Int Completion → Copy or Unpin Result Data → Ret. From DD Completion
  - Flow with a Coherent Model: Shared Mem. Notify Accelerator → Acceleration → Shared Memory Completion
  - Advantages of Coherent Attachment Over I/O Attachment
    - Virtual Addressing & Data Caching (significant latency reduction)
    - Easier, Natural Programming Model (avoid application restructuring)
    - Enables Apps Not Possible on I/O (Pointer chasing, shared mem semaphores, …)
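The contrast between the two flows can be sketched in plain C++, with no accelerator involved. This is only a host-side analogy, not the CAPI programming interface: `Node`, `FlowStats`, `sumViaCopyFlow`, `sumViaCoherentFlow`, and `demoFlows` are hypothetical names, and ordinary functions stand in for the accelerator. The copy flow has to flatten a pointer-linked structure into a staging buffer and copy the result back, while the coherent flow follows the application's own pointers (the "pointer chasing" case from the slide).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// A pointer-linked structure, as an application might naturally hold it.
struct Node {
    std::int64_t value;
    const Node* next;
};

struct FlowStats {
    std::int64_t sum;         // result of the "accelerated" computation
    std::size_t bytesCopied;  // data staged to and from the device
};

// I/O-attached style: the device cannot follow host pointers, so the host
// flattens the list into a staging buffer, then copies the result back.
FlowStats sumViaCopyFlow(const Node* head) {
    std::vector<std::int64_t> staging;
    for (const Node* n = head; n != nullptr; n = n->next)
        staging.push_back(n->value);           // copy source data
    std::int64_t sum = 0;
    for (std::int64_t v : staging) sum += v;   // work on the staged copy
    return {sum, staging.size() * sizeof(std::int64_t) + sizeof(sum)};
}

// Coherent style: the stand-in accelerator receives the application's own
// pointer and walks the list in place, so nothing is staged.
FlowStats sumViaCoherentFlow(const Node* head) {
    std::int64_t sum = 0;
    for (const Node* n = head; n != nullptr; n = n->next) sum += n->value;
    return {sum, 0};
}

// Small usage example: both flows agree on the result; only the copy flow
// moves data.
inline void demoFlows() {
    Node c{3, nullptr};
    Node b{2, &c};
    Node a{1, &b};
    assert(sumViaCopyFlow(&a).sum == sumViaCoherentFlow(&a).sum);
    assert(sumViaCoherentFlow(&a).bytesCopied == 0);
}
```

The sketch leaves out notification and completion signalling entirely. It only shows why a coherent attachment can avoid restructuring the application's data before handing it to an accelerator.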

- 11. CAPI Attached Flash Optimization
  - Attach IBM FlashSystem to POWER8 via CAPI
  - Read/write commands issued via APIs from applications to eliminate 97% of code path length
  - Saves 20-30 cores per 1M IOPS
  - 20K instructions reduced to <2000
  - [Diagram, conventional path: Application, Read/Write Syscall, FileSystem, LVM, Disk and Adapter DD, with strategy() and iodone() calls; Pin buffers, Translate, Map DMA, Start I/O; Interrupt, unmap, unpin, iodone scheduling]
  - [Diagram, CAPI path: Application, User Library, Posix Async I/O Style API (aio_read(), aio_write()), Shared Memory Work Queue]

- 12. CAPI Unlocks the Next Level of Performance for Flash
  - Identical hardware (IBM POWER S822L, FlashSystem) with 2 different paths to data: Conventional I/O (FC) and CAPI
  - [Chart: IOPS per Hardware Thread, Conventional vs. CAPI] >5x better IOPS per HW thread
  - [Chart: Latency (microseconds), Conventional vs. CAPI] >2x lower latency

- 13. POWER8 Data Centric Architectures with FPGA Acceleration
  - Allan Cantle – President & Founder, Nallatech

- 14. Nallatech at a glance
  - Server qualified accelerator cards featuring FPGAs, network I/O and an open architecture software/firmware framework
    - Energy-efficient High Performance Heterogeneous Computing
    - Real-time, low latency network and I/O processing
    - Design Services for Application Porting
  - IBM Open Power partner
  - Altera OpenCL partner
  - Xilinx Alliance partner
  - 20 Year History of FPGA Acceleration including IBM System Z qualified & 1000+ Node deployments

- 15. Heterogeneous Computing – Motivation
  - Efficient Evolutional Compute Performance
  - 1998 – DARPA Polymorphic Computing
  - 2003 – Power Wall
  - Polymorphic Processor: a processor that is equally efficient at processing all different data types
  - [Diagram: SWEPT Efficiency (Size, Weight, Energy, Performance, Time) of GPGPU Processors, FPGA Processors, and Multicore Microprocessors across Polymorphic Application Data Types: Symbolic, Streaming – SIMD – Vector (DSP), Bit Level]