Optimizely NYC Developer Meetup - Experimentation at Blue Apron

- 1. Experimentation @ Blue Apron: John Cline, Engineering Lead, Growth (November 7, 2017)

- 2. Who am I?
  - Engineering Lead for the Growth team
  - Growth owns:
    - Marketing/landing pages
    - Registration/reactivation
    - Referral program
    - Experimentation, tracking, and email integrations
  - Started at Blue Apron in August 2016

  I’m online at @clinejj.

- 3. Overview
  - The Early Days
  - Unique Challenges
  - Let’s Talk Solutions
  - Getting Testy
  - Next Steps

- 5. The Early Days

  Until around August 2016, we ran experiments only with Optimizely Web.
  - Pros
    - Easy for non-technical users to create and launch tests
    - Worked well with our single-page app (SPA)
  - Cons
    - Only worked for client-side changes
    - Could not populate the Optimizely results view with backend events

  This worked pretty well, but...

- 6. Challenges

- 7. Unique Challenges

  Blue Apron is a unique business. We’re the first meal-kit company to launch in the US, and a few things make our form of e-commerce more challenging than that of other companies selling products online:
  - We currently offer recurring meal plans (customers get 2-4 recipes every week unless they skip or cancel).
  - We have seasonal recipes and a freshness guarantee.
  - We (currently) have a six-day cutoff for changing your order.
  - We have multiple fulfillment and delivery methods.
  - We support customers across web, iOS, and Android.

  A customer can sign up for a plan and then never need to log in to our digital product or contact support again. That makes it hard to rely on a client-side-only testing setup.

- 8. Unique Challenges

  Scheduled backend jobs power many critical parts of our business. A client-side solution wouldn’t give us the flexibility to test this business logic.

- 9. Unique Challenges

  Because of our unique business model, the KPIs for most tests require long-term evaluation. Besides conversion and engagement, we also look at:
  - Cohorted LTV by registration week (including accounting for acquisition costs)
  - Order rate: in what percentage of weeks has a customer ordered?
  - Performance relative to various user segments
    - Referral vs. non-referral
    - Two-person plan vs. four-person plan
    - Zip code

  Tracking these KPIs required someone on our analytics team to run the analysis (which took anywhere from 2-4 weeks), creating a bottleneck for seeing test results.
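The order-rate KPI and the registration-week cohorting can be sketched in plain Ruby. This is a minimal illustration, not Blue Apron's analysis code: the `Customer` struct and its `weeks_active`/`weeks_ordered` fields are hypothetical, cohorts are keyed by the Monday of the registration week, and a cohort's order rate is assumed to be the mean of its customers' rates.

```ruby
require 'date'

# Hypothetical customer record: weeks_active counts the weeks a customer could
# have ordered since registering; weeks_ordered counts the weeks they did order.
Customer = Struct.new(:id, :registered_on, :weeks_active, :weeks_ordered)

# Order rate: fraction of active weeks in which the customer placed an order.
def order_rate(customer)
  return 0.0 if customer.weeks_active.zero?

  customer.weeks_ordered.fdiv(customer.weeks_active)
end

# Monday of the ISO week containing the given date (Date#cwday: Monday = 1).
def registration_week(date)
  date - (date.cwday - 1)
end

# Mean order rate per registration-week cohort, keyed by the cohort's Monday.
def order_rate_by_cohort(customers)
  customers
    .group_by { |c| registration_week(c.registered_on) }
    .transform_values { |group| group.sum { |c| order_rate(c) } / group.size }
end
```

For example, a cohort of two customers with order rates of 0.75 and 0.5 has a mean order rate of 0.625. Averaging per-customer rates weights every customer equally; summing ordered weeks over active weeks for the whole cohort is an equally reasonable alternative.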

- 10. The Solution

- 11. Enter Optimizely Full Stack

  Around this time, Optimizely released Full Stack, which targeted exactly our use case.
  - We looked into open source frameworks (e.g. Sixpack, Wasabi) but needed something with a lower maintenance cost. Our team also already knew how to use the product.
  - We looked at feature flag frameworks (like Flipper), but needed something built for experimentation rather than feature flagging.

  Our main application is a Ruby on Rails app, so we wrote a thin singleton wrapper around the Full Stack Ruby gem, which helped us support different environments and handle errors.
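A thin singleton wrapper of this kind might look like the following minimal sketch. The class name `FullStackClient`, the list of disabled environments, and the error-handling policy are assumptions for illustration. The wrapped object is expected to respond to `activate(experiment_key, user_id, attributes)` and `track(event_key, user_id, attributes, event_tags)`, the method shapes exposed by `Optimizely::Project` in the optimizely-sdk gem; it is injected here so the sketch runs without the gem or network access.

```ruby
require 'singleton'

# Hypothetical thin singleton wrapper around a Full Stack client.
class FullStackClient
  include Singleton

  # Assumption: environments in which experiments are switched off entirely.
  DISABLED_ENVIRONMENTS = %w[test].freeze

  def initialize
    @client = nil
  end

  # In production, client would be built from the datafile with the SDK.
  def configure(environment:, client:)
    @client = DISABLED_ENVIRONMENTS.include?(environment) ? nil : client
    self
  end

  # Returns the variation key, or nil if the user is not bucketed,
  # experiments are disabled, or the underlying client raises.
  def activate(experiment_key, user_id, attributes = {})
    return nil unless @client

    @client.activate(experiment_key, user_id.to_s, attributes)
  rescue StandardError => e
    warn "[FullStackClient] activate(#{experiment_key}) failed: #{e.message}"
    nil
  end

  # Records a conversion event; returns false instead of raising on failure.
  def track(event_key, user_id, attributes = {}, event_tags = {})
    return false unless @client

    @client.track(event_key, user_id.to_s, attributes, event_tags)
    true
  rescue StandardError => e
    warn "[FullStackClient] track(#{event_key}) failed: #{e.message}"
    false
  end
end
```

Returning `nil` (no variation) on failure means callers fall back to the default experience instead of raising in the middle of a request.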

- 12. Integrating with Full Stack

  We already had some pieces in place that made integration easier:
  - An internal concept of an experiment in our data model
    - A site test has many variations, each of which has many users
  - An API for clients to log variation status to our internal system
  - Test variation information included in our eventing frameworks (GA and Amplitude)

  These helped ensure we had a good data pipeline to tag users and events for further analysis when required.
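The data model and event tagging can be illustrated with an in-memory sketch. The structs below are hypothetical stand-ins for the ActiveRecord models (a site test has many variations, each of which has many users), and the `site_test_variations` property name is an assumption; the idea is that each analytics event carries the user's current variation IDs so results can be sliced later.

```ruby
# Hypothetical in-memory stand-ins for the site test models.
InMemorySiteTest = Struct.new(:experiment_id, :variations)
InMemoryVariation = Struct.new(:variation_id, :user_ids)

# Variation IDs the user currently belongs to, across all site tests.
def active_variation_ids(site_tests, user_id)
  site_tests
    .flat_map(&:variations)
    .select { |variation| variation.user_ids.include?(user_id) }
    .map(&:variation_id)
end

# Builds an analytics event whose properties include the variation IDs,
# ready to hand to a tool such as Amplitude (property name is assumed).
def tag_event(event_name, properties, variation_ids)
  {
    event: event_name,
    properties: properties.merge('site_test_variations' => variation_ids)
  }
end
```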

- 13. Integrating with Full Stack

  The Optimizely results dashboard made it easy to reach early directional decisions on whether to stop or ramp a test, while our wrapper gave us the information needed for deeper analysis.
  - We wrote a wrapper service around the Optimizely client that integrates with our existing site test data model, logging bucketing results for analytics.
  - We added an asynchronous event reporter that sends events to Optimizely (it runs in our background job processor).
  - Currently, the Optimizely datafile is only downloaded on application startup.
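An asynchronous event reporter can be sketched as an event dispatcher that enqueues events instead of sending them inline. The Optimizely Ruby SDK accepts a custom event dispatcher object that responds to `dispatch_event(event)`; in this sketch a `Thread` and a `Queue` stand in for the background job processor, and the `sender` block (which would make the HTTP request) is a hypothetical hook. It is a sketch under those assumptions, not the production reporter.

```ruby
require 'thread'

# Hypothetical async dispatcher: the SDK calls dispatch_event, which only
# enqueues; a worker thread (stand-in for a background job) does the sending.
class AsyncEventDispatcher
  STOP = Object.new.freeze

  def initialize(&sender)
    @sender = sender
    @queue = Queue.new
    @worker = Thread.new { run }
  end

  # Returns immediately so the calling request is never blocked.
  def dispatch_event(event)
    @queue << event
    nil
  end

  # Sends everything already queued, then stops the worker.
  def shutdown
    @queue << STOP
    @worker.join
  end

  private

  def run
    loop do
      event = @queue.pop
      break if event.equal?(STOP)

      begin
        @sender.call(event)
      rescue StandardError => e
        warn "[AsyncEventDispatcher] dispatch failed: #{e.message}"
      end
    end
  end
end
```

With a real job processor, `dispatch_event` would enqueue a job instead of pushing onto an in-process queue, so a slow or failing Optimizely endpoint never delays a web request.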

- 14. Integrating with Full Stack

- 15. Getting Testy

- 16. Testing with Full Stack

  Creating a test in our application is fairly straightforward:

  1. Run a migration to create the test and its variations in our data model:

  ```ruby
  # Creates the site test (inactive to start) and its control/variation rows.
  # On Rails 5+, inherit from a versioned class such as ActiveRecord::Migration[5.1].
  class SiteTestVariationsForMyTest < ActiveRecord::Migration
    def self.up
      site_test = SiteTest.create!(
        name: 'My Test',
        experiment_id: 'my-test',
        is_active: false
      )
      site_test.site_test_variations.create!(
        variation_name: 'Control',
        variation_id: 'my-test-control'
      )
      site_test.site_test_variations.create!(
        variation_name: 'Variation',
        variation_id: 'my-test-variation'
      )
    end

    # Rolling back is not supported for this data migration.
    def self.down
      raise ActiveRecord::IrreversibleMigration
    end
  end
  ```

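- 17. Testing with Full Stack

  2. Create a testing service to wrap the bucketing logic:

  ```ruby
  module SiteTests
    class MyTestingService
      # Internal mixin (not shown on the slide); provides `testing_service`.
      include Experimentable

      def initialize(user)
        @user = user
      end

      def run_experiment
        return unless user_valid?
        return if bucket.blank?

        # Take actions on the user based on their variation
      end

      private

      def user_valid?
        # Does the user meet the criteria for this test?
        # (This could also be handled with Optimizely audiences.)
        true # placeholder: replace with the test's eligibility criteria
      end

      # Caches a non-nil variation ID for the lifetime of this object.
      def bucket
        @variation_id ||= testing_service.bucket_user(@user)
      end
    end
  end
  ```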
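The slide doesn't show `Experimentable`. One hypothetical shape for it, consistent with the call `testing_service.bucket_user(@user)`, is a mixin that builds a bucketing service from an `experiment_key` defined by the including class. Everything below (`VariationLog`, `BucketingService`, `experiment_key`, and the injected client) is an assumption: the in-memory log stands in for the site test variation records, and the client is anything that responds to `activate(experiment_key, user_id)`.

```ruby
# Hypothetical in-memory stand-in for the persisted site test variation records.
class VariationLog
  def initialize
    @assignments = {}
  end

  def record(user_id, experiment_key, variation_id)
    @assignments[[user_id, experiment_key]] = variation_id
  end

  def lookup(user_id, experiment_key)
    @assignments[[user_id, experiment_key]]
  end
end

# Buckets a user through the Full Stack client and logs the result once.
# Assumes Optimizely variation keys equal the variation_id values stored locally.
class BucketingService
  def initialize(experiment_key, client:, log:)
    @experiment_key = experiment_key
    @client = client
    @log = log
  end

  # Returns the variation ID, or nil if the user is not in the experiment.
  def bucket_user(user)
    existing = @log.lookup(user.id, @experiment_key)
    return existing if existing

    variation_id = @client.activate(@experiment_key, user.id.to_s)
    @log.record(user.id, @experiment_key, variation_id) if variation_id
    variation_id
  end
end

# Hypothetical mixin: the including class defines #experiment_key; the client
# and log are configured once (for example at application boot).
module Experimentable
  class << self
    attr_accessor :client, :log
  end

  def testing_service
    @testing_service ||= BucketingService.new(
      experiment_key,
      client: Experimentable.client,
      log: Experimentable.log
    )
  end
end
```

Checking the log before calling `activate` means an already-bucketed user is not sent a second impression, and the stored variation remains the source of truth for analytics.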

- 18. Testing with Full Stack

  3. Bucket users:

  ```ruby
  SiteTests::MyTestingService.new(user).run_experiment
  ```

  4. Read variation status:

  ```ruby
  # &. returns nil for a missing user; to_a turns that into an empty array.
  @user&.active_site_test_variation_ids.to_a
  ```

  We generally bucket users at account creation or through a call to our configurations API (which returns feature status and configurations for a user).

- 19. Testing with Full Stack

  Some tests we’ve run since integrating:
  - New post-registration onboarding flow
  - Second box reminder email
  - More recipes/plan options
  - New delivery schedule
  - New reactivation experience

- 20. Testing with Full Stack: more recipes/plan options (screenshots: Control, Test)

- 21. Testing with Full Stack: more recipes/plan options (screenshots: Control, Test)

- 22. Testing with Full Stack: results from the more recipes/plan options test

- 23. Testing with Full Stack: new reactivation flow

- 24. Testing with Full Stack: results from the new reactivation flow test

- 25. Next Steps

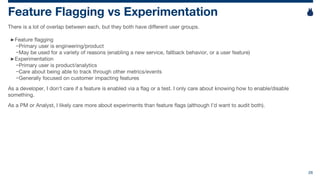

- 26. Feature Flagging vs. Experimentation

  There is a lot of overlap between the two, but they have different user groups.
  - Feature flagging
    - Primary users are engineering and product
    - May be used for a variety of reasons (enabling a new service, fallback behavior, or a user-facing feature)
  - Experimentation
    - Primary users are product and analytics
    - Care about being able to track results through other metrics and events
    - Generally focused on customer-impacting features

  As a developer, I don’t care whether a feature is enabled via a flag or a test; I only care about knowing how to enable or disable it. As a PM or analyst, I likely care more about experiments than feature flags (although I’d want to audit both).

- 27. Feature Flagging vs. Experimentation

  We use two separate tools for feature flagging:
  - Open source: Flipper (GitHub Platform Team)
  - Optimizely Full Stack

  GOAL: Create a single source of truth with an easier-to-use dashboard for setting up features.

- 28. Feature Flagging vs. Experimentation

  Rough plan:
  - Expose “feature configuration” to clients through an API (both our internal code structure and our REST API)
    - List of enabled features and any configuration parameters
  - Consolidate features so they are enabled if `flipper || optimizely`
  - Add an administration panel to create features/configurations and to test or roll them out
  - Support better cross-platform testing
    - App version targeting
    - User segmentation
    - “Global holdback”
    - Mutually exclusive tests

  Why do we still use Flipper? It’s local, and changes take effect instantly. It’s also better for arbitrary percentage rollouts (vs. the more heavyweight enablement through Optimizely).
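The "enabled if `flipper || optimizely`" rule and the feature-configuration payload can be sketched with duck-typed sources. `FeatureConfiguration` and its `enabled?(feature, user)` interface are hypothetical; in a real app one source might delegate to Flipper's `Flipper.enabled?(feature, actor)` and the other to Optimizely Full Stack. The payload shape (feature name plus parameters) is an assumption about what a configurations API could return.

```ruby
# Hypothetical single entry point for feature checks. Each source is any
# object responding to enabled?(feature, user), e.g. a Flipper adapter and
# an Optimizely Full Stack adapter.
class FeatureConfiguration
  def initialize(sources:, parameters: {})
    @sources = sources
    @parameters = parameters
  end

  # A feature is on if any source enables it (flipper || optimizely).
  def enabled?(feature, user)
    @sources.any? { |source| source.enabled?(feature, user) }
  end

  # Payload a configurations API could return: enabled features plus params.
  def for_user(user, features)
    features
      .select { |feature| enabled?(feature, user) }
      .map { |feature| { name: feature, parameters: @parameters.fetch(feature, {}) } }
  end
end
```

Because callers only ask `enabled?`, they don't need to know whether a flag or an experiment turned the feature on.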

- 29. Feature Management

  Optimizely Full Stack just launched a new feature management system. It supports:
  - Defining a feature configuration
    - A feature is a set of boolean/double/integer/string parameters
    - Parameters can be modified by variation (or rollout)
  - Enabling a feature in an experiment variation, or rolling it out to a percentage of users or an audience

  We’re still testing it, but it looks promising (being able to update variables without deploying code is incredibly helpful).
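Feature variables carry a declared type (boolean, double, integer, or string). As a minimal sketch of how per-variation values might be resolved and cast, assume each variable has a string default and a variation may override it with another string. The function names and hash layout are hypothetical, and the Full Stack SDKs expose their own typed variable getters, so this only illustrates the idea.

```ruby
# Hypothetical: cast a variable's stored string value to its declared type.
def cast_feature_variable(type, raw_value)
  case type
  when 'boolean' then raw_value == 'true'
  when 'double'  then Float(raw_value)
  when 'integer' then Integer(raw_value, 10)
  when 'string'  then raw_value.to_s
  else raise ArgumentError, "unknown variable type: #{type}"
  end
end

# Resolve a feature's variables for one variation: an override wins,
# otherwise the variable's default is used.
# definitions: { 'key' => { type: 'integer', default: '3' }, ... }
# overrides:   { 'key' => '4', ... }
def resolve_feature_variables(definitions, overrides)
  definitions.each_with_object({}) do |(key, spec), result|
    raw = overrides.fetch(key, spec[:default])
    result[key] = cast_feature_variable(spec[:type], raw)
  end
end
```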

- 30. Tech Debt

  We are still developing general guidelines for engineers on how to set up tests, particularly around which platform to implement on and how (we use Optimizely Web, Full Stack, iOS, and Android, and have Flipper for server-side gating).

  As we do more testing, we enable more features, which makes our code more complex. Roughly once a quarter, we go through and clean up unused tests (or do so when a feature launches). You should definitely have a philosophy on feature flags and tech debt cleanup.
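A periodic cleanup pass can start from a simple audit. The sketch below flags inactive tests whose last change is older than a cutoff; `SiteTestSummary`, its fields, and the 90-day default (roughly a quarter) are assumptions, since the slides don't describe how stale tests are found.

```ruby
require 'date'

# Hypothetical summary of a site test row.
SiteTestSummary = Struct.new(:experiment_id, :is_active, :last_changed_on)

# Experiment IDs of inactive tests untouched for more than max_age_days;
# these are candidates for removing both the test and its code paths.
def stale_site_tests(tests, today:, max_age_days: 90)
  tests
    .select { |t| !t.is_active && (today - t.last_changed_on).to_i > max_age_days }
    .map(&:experiment_id)
end
```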

- 31. Things to Think About

  Optimizely specifically:
  - Environments
    - We currently have each environment (dev/staging/production) as a separate Optimizely project, which makes it difficult to copy tests between environments.
  - Cross-platform testing
    - If you serve multiple platforms, you need a server-managed solution (even if it only drives client-side changes) to ensure a consistent experience.
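With one Optimizely project per environment, one way to spot tests that haven't been copied over is to compare experiment keys across the environments' datafiles. This sketch assumes the datafile JSON has a top-level `experiments` array plus a `groups` array whose entries hold the experiments in mutually exclusive groups, each experiment carrying a `key`; the helper names are hypothetical.

```ruby
require 'json'

# All experiment keys in a datafile, including those nested in exclusion groups.
def experiment_keys(datafile_json)
  data = JSON.parse(datafile_json)
  top_level = data.fetch('experiments', [])
  grouped = data.fetch('groups', []).flat_map { |group| group.fetch('experiments', []) }
  (top_level + grouped).map { |experiment| experiment['key'] }
end

# Keys present in the source environment but missing from the target.
def missing_experiments(source_json, target_json)
  experiment_keys(source_json) - experiment_keys(target_json)
end
```

Running `missing_experiments(staging_datafile, production_datafile)` before a launch would list tests that still need to be recreated in the production project.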

- 32. Things to Think About

  At the end of the day, who are the users of your feature/experimentation platform?
  - Testing gives you insights into user behavior. What are you going to do with that?
  - How do you measure your KPIs?
  - How do you make decisions?
  - What’s the developer experience like?