Oracle engineered systems executive presentation

- 1. Copyright © 2015 Oracle and/or its affiliates. All rights reserved. | Oracle Engineered Systems Michael Palmeter Senior Director of Engineering Oracle Engineered Systems

- 2. Larry Ellison: “...the cardinal sin of the computing industry is the creation of complexity.” 7/21/2016 Oracle Confidential

- 3. Operational Inefficiencies: Resources Spent Supporting IT Services Already Deployed

- 4. Typical IT Expenditure Distribution: Focus on ongoing expenses, not purchase price.

- 5. Freedom from Low-level Tasks Drives Innovation. Timeline (1995, 2005, 2015) of increasing Freedom to Innovate: Monolithic servers with custom software; Systems built from commodity servers, storage, networking, and software; Engineered Systems & Cloud.

- 6. Apps to Disk to Cloud: Strategic Investment, Uniquely Co-Engineered. Stack: Oracle Cloud, Applications, Database 12c In-Memory, Software in Silicon, Engineered Systems, Operating Systems. MORE THAN $34B IN R&D SINCE 2004.

- 7. Innovation by Combining Business & Technology: Thoughtful Innovation and Technology Investments
      - Business Drivers: Social, Mobile, Analytics, Cloud
      - Technology Directions: Cloud Everywhere, In-Memory Everything, Software in Silicon
      - Technology Enablers: DBaaS; Built In Virtualization; SDN; Built In Cloud; High Speed Networking; Software in Silicon; In-Memory; DRAM storage; DB Accelerated Storage; Engineered Systems

- 8. Oracle’s Cloud Business is Booming: Already #2 SaaS Provider in the World
      - 120K+ VMs in 19 Data Centers
      - #2 SaaS Provider in the world
      - 62M+ Users on the Oracle Cloud Every Day
      - 30B+ Transactions on the Oracle Cloud Every Day

- 9. Oracle’s Future is Software as a Service & Hybrid Cloud: Sustainable Differentiation for Mixed-model Customers and Deployments (@oracle, @customer)

- 10. Engineered Systems: Extreme Performance, Maximum Security, Lowest Cost

- 11. Oracle Engineered Systems: Run Your Business Better
      - Complete, Integrated Platform: Servers, storage, networking, virtualization, operating system, management, application & database templates, specialized management tools, expert support and Platinum Services
      - Optimized for Oracle: Ultimate OLTP & batch performance, security, reliability, horizontal & vertical scalability
      - Runs Standard Applications: Runs any standard enterprise workload, from packaged Oracle and 3rd party apps to custom Java, C++, Ruby-on-Rails, Python and more

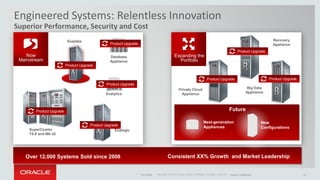

- 12. Engineered Systems: Relentless Innovation. Superior Performance, Security and Cost
      - Now Mainstream: Over 12,000 Systems Sold since 2008; Consistent XX% Growth and Market Leadership
      - Expanding the Portfolio (each with a Product Upgrade): Exalytics; Exalogic; Exadata; Database Appliance; SuperCluster T5-8 and M6-32; Big Data Appliance; Recovery Appliance; Private Cloud Appliance
      - Future: Next-generation Appliances; New Configurations

- 13. Oracle Engineered Systems Lead the Industry. Integrated Stack Systems Market Share Rankings*: #1 50%, #2 13%, #3 5%. *Source: Gartner, Inc., Market Share Analysis: Data Center Hardware Integrated Systems, Worldwide, 2013, Adrian O'Connell, 31 July 2014.

- 14. 1000s of Leading Brands Choose Engineered Systems

- 15. The Legacy Approach To Managing Data: Slower than the Speed of Business. Traditional Application Architecture:
      - Production Server with OLTP Database (Row Format)
      - Copy DB to Run Cost Management
      - Data Warehouse Server with OLAP Database (Column Format)
      - Oracle Business Intelligence Server (Analytics & Reports)
      - Periodic Batch Processes: Extract, Transform, Load
      - LATENCY

- 16. Engineered Systems Technology Innovations, 2008 to 2015: InfiniBand Scale-Out; Smart Scan; Columnar Compression; Storage Indexes; Database Aware PCI Flash; Data Mining Offload; IO Priorities; Prioritized File Recovery; Compressed Flash Cache; Multi-Tenant Aware Resource Mgmt; Exabus; JSON and XML offload; Columnar Flash Cache; Direct-to-Wire Protocol; In-Memory Fault Tolerance; M7 Software in Silicon

- 17. Exadata: Oracle Database Storage Acceleration
      - Smart Scale-Out Storage: Smart Scan query offload
      - Smart PCI Flash Cache: Transparent cache in front of disk
      - Hybrid Columnar Compression: 10x compression

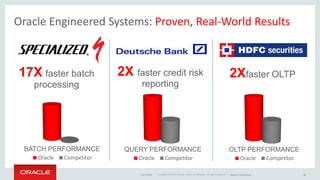

- 18. Oracle Engineered Systems: Proven, Real-World Results (Oracle vs. competitor)
      - QUERY PERFORMANCE: 2X faster credit risk reporting
      - OLTP PERFORMANCE: 2X faster OLTP
      - BATCH PERFORMANCE: 17X faster batch processing

- 19. Oracle Database 12c In-Memory Database
      - BOTH row and column In-Memory formats for same data/table
      - Simultaneously active and transactionally consistent
      - 100X Faster Analytics & reporting: column format
      - 2X Faster OLTP: row format
      - Diagram labels: Sales table in Row Format Memory (OLTP) and in Column Format Memory (Analytics)
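
The dual-format idea on this slide can be illustrated with a toy model. This is only a sketch of the general technique, not Oracle's implementation: the class `DualFormatTable`, its methods, and the sample data are all hypothetical. One logical insert updates both a row-format and a column-format copy, so record lookups and column scans always see the same data.

```python
from dataclasses import dataclass, field


@dataclass
class DualFormatTable:
    """Toy table (hypothetical) that keeps row and column copies in step."""
    columns: tuple
    rows: list = field(default_factory=list)          # row format: one tuple per record
    column_store: dict = field(default_factory=dict)  # column format: one list per column

    def __post_init__(self):
        for name in self.columns:
            self.column_store.setdefault(name, [])

    def insert(self, record: dict) -> None:
        # One logical write updates both formats, so they never diverge.
        self.rows.append(tuple(record[name] for name in self.columns))
        for name in self.columns:
            self.column_store[name].append(record[name])

    def lookup(self, row_id: int) -> dict:
        # OLTP-style access: fetch one whole record from the row format.
        return dict(zip(self.columns, self.rows[row_id]))

    def total(self, column: str):
        # Analytics-style access: scan a single column and ignore the rest.
        return sum(self.column_store[column])


# Made-up sample data for illustration only.
sales = DualFormatTable(columns=("order_id", "region", "amount"))
sales.insert({"order_id": 1, "region": "EMEA", "amount": 120})
sales.insert({"order_id": 2, "region": "APAC", "amount": 80})
print(sales.lookup(0))
print(sales.total("amount"))
```

The row copy serves single-record reads cheaply, while the column copy lets an aggregate touch only the values it needs, which is the trade-off the slide's two formats are meant to address.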

- 20. “Now we can run time-sensitive analytical queries directly against our OLTP database. This is something we wouldn't have dreamt of earlier.” – Arup Nanda, Enterprise Architect, Starwood Hotels and Resorts

- 21. E-Business Suite In-Memory Cost Management: 1000X Faster
      - Real-time Cost and Profitability Analysis
      - Optimize costs and working capital
      - Evaluate COGS and valuations
      - Maximize margins and gross profits

- 22. In-Memory Modules for Oracle Applications: 1000X Faster Than commodity x86 hardware and regular Oracle Database 12c
      - Real-time Cost and Profitability Analysis
      - Optimize costs and working capital
      - Evaluate COGS and valuations
      - Maximize margins and gross profits

- 23. Revolutionary Transformation to Real-Time Enterprise: Accelerate Your Business. No More Overnight Batch, and Real-time Analytics. In-Memory Application Architecture (INSTANT, SIMPLE): In-Memory Everything, with OLTP Schema, In-Memory Cost Mgmt Schema, and BI/Analytics

- 24. Next Generation Data Architecture. Stages: Stream, Acquire; Organize; Discover, Analyze. Systems: Big Data Appliance; Exadata, SuperCluster; Exalytics

- 25. Next Generation Data Architecture. Stages: Stream, Acquire; Organize; Discover, Analyze. Systems: Big Data Appliance; Exadata, SuperCluster; Exalytics
      - Components: Hadoop; Open Source R; Applications; Oracle NoSQL Database; Oracle Big Data Connectors; Oracle Data Integrator; In-Database Analytics; Data Warehouse; Oracle Advanced Analytics; Oracle Database; Oracle Enterprise Performance Management; Oracle Business Intelligence Applications; Oracle Business Intelligence Tools; Oracle Endeca Information Discovery

- 26. Engineered Systems: Maximum Security
      - Secure Java & Middleware
      - Secure Oracle Database
      - Secure OS Run-Time
      - Secure Boot & Firmware
      - Secure Workload Deployment
      - Secure VM Templates
      - Automated Compliance
      - End-to-End Encryption

- 27. Engineered Systems: End-to-End Multitenant Architecture
      - Metering, Limiting and Charging
      - Web-based Self Provisioning
      - Dynamic Resource Management
      - Role Based Access Control
      - Complete Workload Isolation

- 28. Engineered Systems: Lower OPEX than Multi-vendor
      - Engineered Systems: Global standard; Factory assembled and tested; Engineered for end-to-end performance, security & efficiency; Expert support & full-stack patches
      - Custom Platform: Unique platform, assembled by you, for you; Inconsistent performance; Low efficiency; Complex security; Multi-vendor finger-pointing

- 29. Oracle Platinum Support: Complete. Integrated. Proactive. High Availability Services. ORACLE PLATINUM SERVICES: Industry Leading Enterprise Support; Platinum Services at No Additional Cost
      - 24/7 support coverage
      - Specialized Support Team
      - 2-hour onsite response to hardware issues [1]
      - New updates and upgrades for Database, Server, Storage, and OS software
      - Industry-leading response times: 5 Minute Fault Notification; 15 Minute Restoration or Escalation to Development; 30 Minute Joint Debugging with Development
      - Patch deployment by Oracle engineers
      - 24/7 Oracle remote fault monitoring
      - Higher support level for Oracle stack: Server, Storage, Network, Database software
      - [1] Covered system must be within an Oracle two-hour service area to receive two-hour response as a standard service.

- 30. Oracle Platinum Services Benefits: Real World
      - 75% fewer sev1 Service Requests
      - 70% fewer escalations
      - 37% fewer bugs encountered
      - 27% shorter avg. SR resolution time
      - 86% of SRs are opened by Oracle (requires an Auto Service Request connection)

- 31. Engineered Systems: Lower OPEX and CAPEX (Oracle vs. competition)
      - OPEX: 70% OPEX Reduction
      - DATACENTER SPACE: 83% less space, 70% less power
      - TIME TO DEPLOY: 4 weeks to Production

- 32. Simultaneously Runs Batch and OLTP Anytime
      - 28 days to deploy the largest cMRO implementation in the world
      - Defect tracking reduced 36x to 5 seconds
      - Advanced Supply Chain Management savings of 320 person hours per month
      - Diagram labels: Oracle Data Guard; ZFS Replication

- 33. Success Story: Allegis Group (PROCESSING TIME, Oracle vs. competitor: 70% faster reports)
      - Large volume of paychecks as well as invoices to other agencies
      - Long batch processing times: payroll process, time & billing, GL posting, getting invoices out
      - Wanted to pay everyone weekly to obtain a marketplace advantage
        - Achieved goal of single weekly pay run for all, as a marketplace differentiator
        - Allowed business to add more functions to Apps and take on more growth; increased revenue

- 34. Fidelity: Realized Benefits with Engineered Systems

- 35. Virtual Compute Appliance X5: Lower Cost than Cisco UCS (½ Cost Year 1)
      - Cisco UCS: Total $1,281K Public Price, $200K Support/Yr.
        - Compute: 27 UCS M4 blades/972 Cores ($781K); 4 UCS 5108 Chassis ($73K); 2 Fabric Connects ($56K); Rack Infrastructure ($2K). Subtotal $912K; Support/Yr. $26K
        - Storage: Netapp FAS 8040 HA ($59K); Netapp Storage SW ($45K). Subtotal $104K; Support/Yr. $8K
        - Software: Red Hat Server; VMware vSphere & vCenter; Cisco UCS Director & UCS SW. Public prices as listed: $180K, $86K; Subtotal $265K. Support/Yr. as listed: $88K, $45K, $33K; Subtotal $166K
      - Virtual Compute Appliance X5: Total $608K List Price, $73K Support/Yr.
        - Compute Cloud: 27 X5 Servers / 972 Cores; 2 OVN Fabric Interconnects; Rack Infrastructure
        - Storage Cloud: ZFS ES HA Storage 18TB; ZFS Replication; ZFS Cloning
        - Software: Oracle Linux; VM Server; Enterprise Manager
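
The "½ Cost Year 1" headline can be checked against the totals quoted on this slide. The sketch below assumes that year-one cost means purchase price plus one year of support, which the slide does not spell out; the helper name `year_one_cost` is hypothetical.

```python
def year_one_cost(price_k: float, support_per_year_k: float) -> float:
    """Assumed definition: purchase price plus one year of support, in $K."""
    return price_k + support_per_year_k


# Totals as quoted on the slide (in thousands of dollars).
cisco = year_one_cost(price_k=1281, support_per_year_k=200)
vca = year_one_cost(price_k=608, support_per_year_k=73)
ratio = vca / cisco

# The Cisco UCS subtotals on the slide also add up to the quoted total.
assert 912 + 104 + 265 == 1281

print(f"Cisco UCS year one: ${cisco:,.0f}K")
print(f"Virtual Compute Appliance X5 year one: ${vca:,.0f}K")
print(f"Ratio: {ratio:.2f}")  # prints "Ratio: 0.46"
```

On these figures the appliance comes to a little under half of the Cisco UCS configuration in year one, consistent with the slide's claim.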

- 36. SuperCluster: Lower Cost than Multi-vendor x86 Systems (1/3 Cost Year 1)
      - Comparable Cisco/EMC/VMware/RedHat: Total $3,157K ASP, $33K Support/Yr.
        - Compute: 8 UCS M4 (256 Cores/4TB) ($194K ASP, $1K support); 1 UCS 5108 Chassis ($8K, $0K); 2 Fabric Interconnects ($26K, $1K); Rack Infrastructure ($2K, $0K). Subtotal $230K ASP; $2K Support/Yr.
        - Storage: 10 EMC X-Brick 20 (200TB) ($2,805K, $0K); 1 EMC VNX 5600 (80TB) ($65K, $4K); EMC Unisphere Unified Suite ($15K, $1K). Subtotal $2,886K ASP; $5K Support/Yr.
        - Software: Red Hat Server ($0K, $17K); VMware vSphere OC EP ($41K, $10K). Subtotal $41K ASP; $27K Support/Yr.
      - SuperCluster T5-8 Full Rack: Total $1,048K ASP, $155K Support/Yr.
        - Compute: 2 T5-8 (256 Cores/4TB); 3 InfiniBand Switches; Rack infrastructure
        - Storage: 8 Exadata HC Storage (384TB); 1 ZS3-ES Storage (80TB); ZS3 Cloning & Replication; Exadata Storage Software
        - Software: Oracle Solaris; Oracle VM for SPARC; Enterprise Manager
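
As a worked check of the "1/3 Cost Year 1" headline, assume year-one cost is ASP plus one year of support (an assumption; the slide does not define the term). Using the slide's own totals:

```latex
\[
\frac{C_{\text{SuperCluster}}}{C_{\text{multi-vendor}}}
= \frac{\$1{,}048\text{K} + \$155\text{K}}{\$3{,}157\text{K} + \$33\text{K}}
= \frac{\$1{,}203\text{K}}{\$3{,}190\text{K}}
\approx 0.38
\]
```

Under that assumption the SuperCluster comes to a little over one third of the multi-vendor configuration in year one.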

- 37. Pay for an Engineered System as a Monthly Service: Oracle IaaS Private Cloud
      - Engineered Systems for a monthly fee: Replace CAPEX with OPEX; Lower total cost than purchase
      - Private / On Customer Premise: Maintain control of business critical data
      - Elastic Compute Capacity on Demand: Pay for peak capacity, only when needed
      - Oracle PlatinumPlus Services Included: Higher level of support including patching services
      - Chart: Cumulative Cost ($,000s, $0 to $600) vs. Months (0 to 60), 3 year term. Series: IaaS with 3 Months CoD per Year; IaaS no CoD used; Purchase plus Annual Support

- 38. Oracle’s Microprocessor: 6 Processors in 5 Years
      - SPARC T3 (2010): 16 x 2nd Gen Cores, 4MB L3 Cache, 1.65 GHz
      - SPARC T4 (2011): 8 x 3rd Gen Cores, 4MB L3 Cache, 3.0 GHz
      - SPARC T5 (2013): 16 x 3rd Gen Cores, 8MB L3 Cache, 3.6 GHz
      - SPARC M5 (2013): 6 x 3rd Gen Cores, 48MB L3 Cache, 3.6 GHz
      - SPARC M6 (2013): 12 x 3rd Gen Cores, 48MB L3 Cache, 3.6 GHz
      - SPARC M7 (2015, Coming Soon): 32 x 4th Gen Cores, 64MB L3 Cache, 4.1 GHz, including Software in Silicon: Query Acceleration; App Data Integrity; More…

- 39. Software in Silicon: The Ultimate Software Optimization. Hardware Revolution, Not Evolution! (Coming in 2015)
      - Performance: DB In-Memory Acceleration Engines
      - Security: Application Data Integrity
      - Lower Cost: Compression Engines

- 40. Software in Silicon: Making In-Memory Processing Faster. Changing the rules for database processing. Available with Oracle Database 12c (Coming in 2015)
      - Performance: 10x higher performance over legacy processors
      - Efficiency: Frees CPU cores for transactional processing
      - Data Capacity: Up to 3x the data in same memory with in-line decompression

- 41. Query Acceleration: Not Just Simple Queries (Coming in 2015)
      - Real-world Enterprise analytics accelerated
        - Hardware for common range comparisons
        - “How many between start-date and end-date?”
      - The following query would run entirely in silicon. SQL: SELECT count(*) … WHERE lo_orderdate = d_datekey … AND lo_partkey = 1059538 AND d_year_monthnum BETWEEN 201311 AND 201312;
      - Results placed directly in processor cache
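
To make the query on this slide concrete, here is a software-only sketch of the same logic: an inclusive range comparison on `d_year_monthnum`, an equality filter on `lo_partkey`, and the join condition `lo_orderdate = d_datekey`. The slide elides the table names and shows no data, so the two lists, their rows, and the function `count_matching` are hypothetical. Nothing here models the hardware offload itself.

```python
# Hypothetical rows; the slide elides the table names and shows no data.
date_dim = [
    {"d_datekey": 20131105, "d_year_monthnum": 201311},
    {"d_datekey": 20131220, "d_year_monthnum": 201312},
    {"d_datekey": 20140110, "d_year_monthnum": 201401},
]
orders = [
    {"lo_orderdate": 20131105, "lo_partkey": 1059538},
    {"lo_orderdate": 20131220, "lo_partkey": 1059538},
    {"lo_orderdate": 20140110, "lo_partkey": 1059538},
    {"lo_orderdate": 20131105, "lo_partkey": 42},
]


def count_matching(orders, date_dim, partkey, month_lo, month_hi):
    # Range comparison: BETWEEN is inclusive on both ends.
    # Assumes d_datekey is unique per date row, as a dimension key would be.
    keys_in_range = {
        d["d_datekey"]
        for d in date_dim
        if month_lo <= d["d_year_monthnum"] <= month_hi
    }
    # Equality filter on the part key, plus the join lo_orderdate = d_datekey.
    return sum(
        1
        for o in orders
        if o["lo_partkey"] == partkey and o["lo_orderdate"] in keys_in_range
    )


result = count_matching(orders, date_dim, 1059538, 201311, 201312)
print(result)  # prints 2
```

The range test and the equality test are the kind of simple per-value comparisons that the slide says the hardware is built to evaluate.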

- 42. Stupendous Performance with Fewer Cores: Up to 170 Billion rows per second (Coming in 2015)
      - Query Throughput: Oracle Software in Silicon over 10x higher than the legacy approach
      - Useable Memory: Oracle Software in Silicon up to 3x more than the legacy approach
      - Frees 64 cores for decompress and frees 32 cores for query
      - Comparing current systems with equivalent CPU core counts and physical memory

- 43. Software in Silicon: Application Data Integrity. Improved Security & Reliability in Hardware (Coming in 2015)
      - First ever hardware based memory protection
      - Stops malicious programs from accessing other application memory
      - Can be always on: hardware approach has negligible performance impact
      - Results in improved developer efficiency and more secure, more highly available applications

- 44. Security, Reliability: Application Data Integrity. Revolutionary Change to Memory Architecture (Coming in 2015)
      - M7 Application Data Protection stops memory corruptions with no impact on performance
      - Hidden “color” bits added to pointers (key), and content (lock)
      - Pointer color (key) must match content color or program is aborted
      - Can be used in optimized production code
      - Prevents access off end of structure, stale pointer access, malicious attacks
      - Diagram labels: Memory Pointers; Memory Content
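
The key-and-lock rule described on this slide can be mimicked in software to show what it catches. This is a toy simulation under stated assumptions, not the M7 mechanism: `TaggedMemory`, `ColorMismatch`, and the `(address, color)` pointer tuple are hypothetical names, and real hardware performs the check on every access without any such bookkeeping in application code.

```python
class ColorMismatch(Exception):
    """Raised when a pointer's color (key) does not match the memory's color (lock)."""


class TaggedMemory:
    """Toy memory (hypothetical) where every cell carries a color tag."""

    def __init__(self, size: int):
        self.data = [0] * size
        self.colors = [0] * size  # the "lock": color stored alongside content

    def allocate(self, start: int, length: int, color: int):
        # Color the region and hand back a pointer carrying the same color (the "key").
        for addr in range(start, start + length):
            self.colors[addr] = color
        return (start, color)

    def load(self, pointer, offset: int = 0):
        base, key = pointer
        addr = base + offset
        if self.colors[addr] != key:
            # The slide says the program is aborted; here we raise instead.
            raise ColorMismatch(f"key {key} does not match lock {self.colors[addr]}")
        return self.data[addr]


mem = TaggedMemory(size=8)
buf_a = mem.allocate(start=0, length=4, color=1)
buf_b = mem.allocate(start=4, length=4, color=2)

in_bounds_ok = mem.load(buf_a, offset=3) == 0  # last cell of buf_a is accessible

try:
    mem.load(buf_a, offset=4)  # one past the end lands in buf_b's cells
    overrun_caught = False
except ColorMismatch:
    overrun_caught = True

mem.allocate(start=0, length=4, color=3)  # region reused under a new color
try:
    mem.load(buf_a)  # stale pointer still carries the old color
    stale_caught = False
except ColorMismatch:
    stale_caught = True

print(in_bounds_ok, overrun_caught, stale_caught)
```

Giving adjacent regions different colors is what turns an off-the-end access into a detectable mismatch, and recoloring on reuse does the same for stale pointers.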

- 45. Oracle Engineered Systems: Lowest Cost, Maximum Security, Extreme Performance

- 46. Apps to Disk to Cloud: Uniquely Co-Engineered, Unprecedented Capabilities. Stack: Oracle Cloud, Applications, Database 12c In-Memory, Software in Silicon, Engineered Systems, Operating Systems. Real-Time Enterprise

- 48. ZDLRA: Modern Scale-out Database Protection as a Service. Single System Scales to Protect an Entire Data Center. Zero Point-in-Time Copy Across the Enterprise. Diagram labels: Off Prem; Cloud; DBaaS

- 49. Recovery Appliance: Unique Benefits for Business and I.T.
      - Minimal Impact Backups: Production databases only send changes. All backup and tape processing offloaded
      - Eliminate Data Loss: Real-time redo transport provides instant protection of ongoing transactions
      - Cloud-Scale Protection: Easily protect all databases in the data center using massively scalable service
      - Database Level Recoverability: End-to-end reliability, visibility, and control of databases, not disjoint files

- 50. Zero Data Loss Recovery Appliance Overview
      - Protected Databases (Protects all DBs in Data Center): Petabytes of data; Oracle 10.2-12c, any platform; No expensive DB backup agents
      - Delta Push: DBs access and send only changes; Minimal impact on production; Real-time redo transport instantly protects ongoing transactions
      - Delta Store (Recovery Appliance): Stores validated, compressed DB changes on disk; Fast restores to any point-in-time using deltas; Built on Exadata scaling and resilience; Enterprise Manager end-to-end control
      - Replicates to Remote Recovery Appliance
      - Offloads Tape Backup
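
The "send only changes, restore from deltas" idea on this slide can be sketched in a few lines. This is a simplified illustration, not the appliance's design: `DeltaStore`, `changed_since`, the block-to-content dictionaries, and the integer version numbers are all hypothetical, and the sketch leaves out redo transport, validation, compression, and deleted blocks.

```python
def changed_since(previous: dict, current: dict) -> dict:
    """Delta push (sketch): keep only blocks whose content differs from the last backup."""
    return {block: content for block, content in current.items()
            if previous.get(block) != content}


class DeltaStore:
    """Toy delta store (hypothetical): an ordered list of (version, changed blocks)."""

    def __init__(self):
        self.deltas = []

    def push(self, version: int, changed_blocks: dict) -> None:
        # Assumes versions are pushed in increasing order.
        self.deltas.append((version, dict(changed_blocks)))

    def restore(self, version: int) -> dict:
        # Rebuild the image at a point in time by replaying deltas up to it.
        image = {}
        for delta_version, blocks in self.deltas:
            if delta_version <= version:
                image.update(blocks)
        return image


store = DeltaStore()

db_v1 = {0: "a", 1: "b", 2: "c"}
store.push(1, changed_since({}, db_v1))  # the first push carries every block

db_v2 = {0: "a", 1: "B", 2: "c"}
delta_v2 = changed_since(db_v1, db_v2)   # only block 1 changed
store.push(2, delta_v2)

print(delta_v2)
print(store.restore(1) == db_v1, store.restore(2) == db_v2)
```

After the initial push, each backup costs the production side only the changed blocks, while the store can still rebuild any earlier version by replaying deltas.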