[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositional n-Gram Features


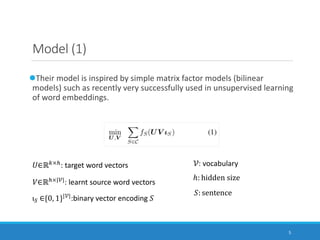

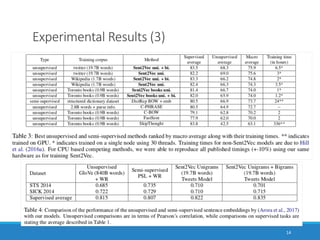

(1) The document presents an unsupervised method called Sent2Vec to learn sentence embeddings using compositional n-gram features. (2) Sent2Vec extends the continuous bag-of-words model to train sentence embeddings by composing word vectors with n-gram embeddings. (3) Experimental results show Sent2Vec outperforms other unsupervised models on most benchmark tasks, highlighting the robustness of the sentence embeddings produced.


![1

Abstract & Introduction (1)

- Unsupervised training of word representations, such as Word2Vec [Mikolov et al., 2013], is now routinely performed on very large amounts of raw text data, and such representations have become ubiquitous building blocks of a majority of current state-of-the-art NLP applications.

- While very useful semantic representations are available for words.](https://image.slidesharecdn.com/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013/85/Paper-Reading-Unsupervised-Learning-of-Sentence-Embeddings-using-Compositional-n-Gram-Features-2-320.jpg)

![4

Approach

Their proposed model (Sent2Vec) can be seen as an extension of the C-BOW [Mikolov et al., 2013] training objective to train sentence embeddings instead of word embeddings.

Sent2Vec is a simple unsupervised model that composes sentence embeddings from word vectors along with n-gram embeddings, simultaneously training the composition and the embedding vectors themselves.](https://image.slidesharecdn.com/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013/85/Paper-Reading-Unsupervised-Learning-of-Sentence-Embeddings-using-Compositional-n-Gram-Features-5-320.jpg)
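The composition described on this slide can be illustrated with a minimal Python sketch. It assumes a toy lookup table of embeddings and simply averages the vectors of all unigrams and bigrams found in a sentence. The function names `extract_ngrams` and `sentence_embedding`, the `_` separator, and the choice to skip n-grams missing from the table are illustrative assumptions, not details taken from the slides or from the Sent2Vec implementation.

```python
from typing import Dict, List


def extract_ngrams(tokens: List[str], max_n: int = 2) -> List[str]:
    """Return all n-grams of length 1..max_n, shortest first, joined with '_'."""
    ngrams: List[str] = []
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            ngrams.append("_".join(tokens[i:i + n]))
    return ngrams


def sentence_embedding(
    tokens: List[str],
    embeddings: Dict[str, List[float]],
    max_n: int = 2,
) -> List[float]:
    """Average the embeddings of every n-gram that has an entry in the table.

    N-grams without an entry are skipped (a simplification for this sketch).
    Returns an empty list when no n-gram is known.
    """
    feats = [g for g in extract_ngrams(tokens, max_n) if g in embeddings]
    if not feats:
        return []
    dim = len(embeddings[feats[0]])
    total = [0.0] * dim
    for g in feats:
        for k, x in enumerate(embeddings[g]):
            total[k] += x
    return [x / len(feats) for x in total]


# Toy, hand-written embeddings (hypothetical values for illustration only).
toy = {
    "deep": [1.0, 0.0],
    "learning": [0.0, 1.0],
    "works": [1.0, 1.0],
    "deep_learning": [2.0, 2.0],
}
print(sentence_embedding(["deep", "learning", "works"], toy))  # → [1.0, 1.0]
```

In the real model the embeddings are learned jointly with this averaging step; the sketch only shows the inference-time composition.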

![6

Model (2)

- Conceptually, Sent2Vec can be interpreted as a natural extension of the word contexts from C-BOW [Mikolov et al., 2013] to a larger sentence context.

- $S$: sentence
- $R(S)$: the list of n-grams (including unigrams)
- $\iota_{R(S)} \in \{0, 1\}^{|\mathcal{V}|}$: binary vector encoding $R(S)$
- $v_w$: source (or context) embedding](https://image.slidesharecdn.com/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013/85/Paper-Reading-Unsupervised-Learning-of-Sentence-Embeddings-using-Compositional-n-Gram-Features-7-320.jpg)
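The equation these symbols belong to does not survive in the slide text, so the following is a sketch of how they plausibly fit together rather than a transcription. It assumes $S$ is a sentence, $R(S)$ its list of n-grams (including unigrams), $\iota_{R(S)}$ the binary indicator vector of $R(S)$ over the vocabulary $\mathcal{V}$, $v_w$ the source embedding of n-gram $w$, and $V$ (an assumed name) the matrix whose columns are the $v_w$. Under those assumptions the sentence embedding $v_S$ is the average of the source embeddings of its n-grams:

```latex
v_S \;:=\; \frac{1}{|R(S)|}\, V\, \iota_{R(S)}
     \;=\; \frac{1}{|R(S)|} \sum_{w \in R(S)} v_w
```

The two forms agree because multiplying $V$ by a binary indicator vector sums exactly the columns selected by $R(S)$.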

![8

Model (4)

- Subsampling
  - To select the possible target unigrams (positives), they use subsampling as in [Joulin et al., 2017; Bojanowski et al., 2017], each word $w$ being discarded with probability $1 - q_p(w)$.

- $S$: sentence
- $N_{w_t}$: the set of words sampled negatively for the word $w_t \in S$
- $v_w$: source (or context) embedding
- $u_w$: target embedding
- $q_n(w) := \dfrac{\sqrt{f_w}}{\sum_{w_i \in \mathcal{V}} \sqrt{f_{w_i}}}$
- $f_w$: the normalized frequency of $w$ in the corpus](https://image.slidesharecdn.com/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013/85/Paper-Reading-Unsupervised-Learning-of-Sentence-Embeddings-using-Compositional-n-Gram-Features-9-320.jpg)
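The subsampling and negative-sampling steps on this slide can be sketched in a few lines of Python. Two assumptions are made here. First, the slide text does not show the definition of the keep probability $q_p(w)$; the sketch uses the fastText-style form $\min\{1, \sqrt{t/f_w} + t/f_w\}$ with a hypothetical threshold `t`. Second, the negative-sampling distribution is taken to be proportional to the square root of the normalized frequency $f_w$. All function names are illustrative.

```python
import math
import random
from typing import Dict, List


def keep_probability(freq: float, t: float = 1e-5) -> float:
    """Assumed form of q_p(w): min(1, sqrt(t / f_w) + t / f_w)."""
    return min(1.0, math.sqrt(t / freq) + t / freq)


def negative_sampling_distribution(freqs: Dict[str, float]) -> Dict[str, float]:
    """q_n(w) = sqrt(f_w) / sum_i sqrt(f_{w_i}) over the vocabulary."""
    roots = {w: math.sqrt(f) for w, f in freqs.items()}
    z = sum(roots.values())
    return {w: r / z for w, r in roots.items()}


def subsample(
    tokens: List[str], freqs: Dict[str, float], t: float, rng: random.Random
) -> List[str]:
    """Keep each token with probability q_p(w), i.e. discard it with 1 - q_p(w)."""
    return [w for w in tokens if rng.random() < keep_probability(freqs[w], t)]


def sample_negatives(
    q_n: Dict[str, float], k: int, rng: random.Random
) -> List[str]:
    """Draw k negative words (with replacement) from the distribution q_n."""
    words = list(q_n)
    weights = [q_n[w] for w in words]
    return rng.choices(words, weights=weights, k=k)


# Toy normalized frequencies (hypothetical values for illustration only).
freqs = {"the": 0.64, "cat": 0.36}
q_n = negative_sampling_distribution(freqs)
rng = random.Random(0)
print(q_n)
print(subsample(["the", "cat", "the"], freqs, t=1e-2, rng=rng))
print(sample_negatives(q_n, k=5, rng=rng))
```

Taking the square root flattens the distribution, so frequent words are still drawn more often as negatives but less dominantly than their raw frequency would suggest.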

![15

Conclusion

- In this paper, they introduce a novel, computationally efficient, unsupervised, C-BOW-inspired method to train and infer sentence embeddings.

- On supervised evaluations, their method, on average, achieves better performance than all other unsupervised competitors with the exception of Skip-Thought [Kiros et al., 2015].

- However, their model is generalizable, extremely fast to train, simple to understand and easily interpretable, showing the relevance of simple and well-grounded representation models in contrast to models using deep architectures.

- Future work could focus on augmenting the model to exploit data with ordered sentences.](https://image.slidesharecdn.com/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013/85/Paper-Reading-Unsupervised-Learning-of-Sentence-Embeddings-using-Compositional-n-Gram-Features-16-320.jpg)


Ad

More Related Content

What's hot (20)

What is word2vec?

What is word2vec?Traian Rebedea General presentation about the word2vec model, including some explanations for training and reference to the implicit factorization done by the model

Probabilistic content models,

Probabilistic content models,Bryan Gummibearehausen This document presents a probabilistic content model using a hidden Markov model to model topic structures in text. It applies this model to two tasks: sentence ordering and extractive summarization. For sentence ordering, it generates all possible orders, computes the probability of each, and ranks them to evaluate how well the original sentence order is recovered. For summarization, it assigns topic probabilities to sentences based on topic distributions and extracts sentences with high topic probabilities in summaries. Evaluation shows the content model outperforms baselines on both tasks, demonstrating it effectively captures text structure.

Nlp research presentation

Nlp research presentationSurya Sg This document provides an overview of natural language processing (NLP) research trends presented at ACL 2020, including shifting away from large labeled datasets towards unsupervised and data augmentation techniques. It discusses the resurgence of retrieval models combined with language models, the focus on explainable NLP models, and reflections on current achievements and limitations in the field. Key papers on BERT and XLNet are summarized, outlining their main ideas and achievements in advancing the state-of-the-art on various NLP tasks.

Language models

Language modelsMaryam Khordad This document discusses natural language processing and language models. It begins by explaining that natural language processing aims to give computers the ability to process human language in order to perform tasks like dialogue systems, machine translation, and question answering. It then discusses how language models assign probabilities to strings of text to determine if they are valid sentences. Specifically, it covers n-gram models which use the previous n words to predict the next, and how smoothing techniques are used to handle uncommon words. The document provides an overview of key concepts in natural language processing and language modeling.

Dual Embedding Space Model (DESM)

Dual Embedding Space Model (DESM)Bhaskar Mitra A fundamental goal of search engines is to identify, given a query, documents that have relevant text. This is intrinsically difficult because the query and the document may use different vocabulary, or the document may contain query words without being relevant. We investigate neural word embeddings as a source of evidence in document ranking. We train a word2vec embedding model on a large unlabelled query corpus, but in contrast to how the model is commonly used, we retain both the input and the output projections, allowing us to leverage both the embedding spaces to derive richer distributional relationships. During ranking we map the query words into the input space and the document words into the output space, and compute a query-document relevance score by aggregating the cosine similarities across all the query-document word pairs.

We postulate that the proposed Dual Embedding Space Model (DESM) captures evidence on whether a document is about a query term in addition to what is modelled by traditional term-frequency based approaches. Our experiments show that the DESM can re-rank top documents returned by a commercial Web search engine, like Bing, better than a term-matching based signal like TF-IDF. However, when ranking a larger set of candidate documents, we find the embeddings-based approach is prone to false positives, retrieving documents that are only loosely related to the query. We demonstrate that this problem can be solved effectively by ranking based on a linear mixture of the DESM and the word counting features.

AINL 2016: Nikolenko

AINL 2016: NikolenkoLidia Pivovarova This document provides an overview of deep learning techniques for natural language processing. It begins with an introduction to distributed word representations like word2vec and GloVe. It then discusses methods for generating sentence embeddings, including paragraph vectors and recursive neural networks. Character-level models are presented as an alternative to word embeddings that can handle morphology and out-of-vocabulary words. Finally, some general deep learning approaches for NLP tasks like text generation and word sense disambiguation are briefly outlined.

Word2Vec

Word2Vecmohammad javad hasani This document provides an overview of Word2Vec, a neural network model for learning word embeddings developed by researchers led by Tomas Mikolov at Google in 2013. It describes the goal of reconstructing word contexts, different word embedding techniques like one-hot vectors, and the two main Word2Vec models - Continuous Bag of Words (CBOW) and Skip-Gram. These models map words to vectors in a neural network and are trained to predict words from contexts or predict contexts from words. The document also discusses Word2Vec parameters, implementations, and other applications that build upon its approach to word embeddings.

GDG Tbilisi 2017. Word Embedding Libraries Overview: Word2Vec and fastText

rudolf eremyan: This presentation compares different word embedding models and libraries, such as word2vec and fastText, describing their differences and their pros and cons.

Suggestion Generation for Specific Erroneous Part in a Sentence using Deep Le...

ijtsrd: This document presents a method for generating suggestions for specific erroneous parts of sentences in Indian languages like Malayalam using deep learning. The method uses recurrent neural networks with long short-term memory layers to train a model on input-output examples of sentences and their corrections. The model takes in preprocessed sentence data and generates a set of possible corrections for erroneous parts through multiple network layers. An analysis of the model shows that it can accurately generate suggestions for a word length of three, but requires more data and study to handle the complex morphology and symbols of Malayalam. The performance of the method is limited by the hardware used, and it could be improved with a more powerful system and additional training data.

Tensorflow

Knoldus Inc.: The presentation introduces TensorFlow, NLP techniques such as CBOW and skip-gram, and Jupyter Notebook. It explains these topics through a problem statement in which we wanted to cluster the feedback from the Knolx sessions, walking through the process of problem-solving with deep learning models.

[Emnlp] what is glo ve part i - towards data science

Nikhil Jaiswal: This document introduces GloVe (Global Vectors), a method for creating word embeddings that combines global matrix factorization and local context window models. It discusses how global matrix factorization uses singular value decomposition to reduce a term-frequency matrix to learn word vectors from global corpus statistics. It also explains how local context window models like skip-gram and CBOW learn word embeddings by predicting words from a fixed-size window of surrounding context words during training. GloVe aims to learn from both global co-occurrence patterns and local context to generate word vectors.

Centroid-based Text Summarization through Compositionality of Word Embeddings

Gaetano Rossiello, PhD: MultiLing 2017 @ EACL 2017, Valencia, Spain

Summarization and summary evaluation across source types and genres

Source code: https://github.com/gaetangate/text-summarizer

5 Lessons Learned from Designing Neural Models for Information Retrieval

Bhaskar Mitra: Slides from my keynote talk at the Recherche d'Information SEmantique (RISE) workshop at the CORIA-TALN 2018 conference in Rennes, France.

(Abstract)

Neural Information Retrieval (or neural IR) is the application of shallow or deep neural networks to IR tasks. Unlike classical IR models, these machine learning (ML) based approaches are data-hungry, requiring large scale training data before they can be deployed. Traditional learning to rank models employ supervised ML techniques—including neural networks—over hand-crafted IR features. By contrast, more recently proposed neural models learn representations of language from raw text that can bridge the gap between the query and the document vocabulary.

Neural IR is an emerging field and research publications in the area have been increasing in recent years. While the community explores new architectures and training regimes, a new set of challenges, opportunities, and design principles are emerging in the context of these new IR models. In this talk, I will share five lessons learned from my personal research in the area of neural IR. I will present a framework for discussing different unsupervised approaches to learning latent representations of text. I will cover several challenges to learning effective text representations for IR and discuss how latent space models should be combined with observed feature spaces for better retrieval performance. Finally, I will conclude with a few case studies that demonstrate the application of neural approaches to IR that go beyond text matching.

Basic review on topic modeling

Hiroyuki Kuromiya: This document provides an introduction and overview of 5 papers related to topic modeling techniques. It begins with introducing the speaker and their research interests in text analysis using topic modeling. It then lists the 5 papers that will be discussed: LSA, pLSI, LDA, Gaussian LDA, and criticisms of topic modeling. The document focuses on summarizing each paper's motivation, key points, model, parameter estimation methods, and deficiencies. It provides high-level summaries of key aspects of influential topic modeling papers to introduce the topic.

Topic Modeling

Karol Grzegorczyk: Topic modeling is a technique for discovering hidden semantic patterns in large document collections. It represents documents as probability distributions over latent topics, where each topic is characterized by a distribution over words. Two common probabilistic topic models are latent Dirichlet allocation (LDA) and probabilistic latent semantic analysis (pLSA). LDA assumes each document exhibits multiple topics in different proportions, with topics modeled as distributions over words. Topic modeling provides dimensionality reduction and can be applied to problems like text classification, collaborative filtering, and computer vision tasks like image classification.

An Entity-Driven Recursive Neural Network Model for Chinese Discourse Coheren...

ijaia: Chinese discourse coherence modeling remains a challenging task in the Natural Language Processing field. Existing approaches mostly focus on feature engineering, adopting sophisticated features to capture the logical, syntactic, or semantic relationships across sentences within a text. In this paper, we present an entity-driven recursive deep model for Chinese discourse coherence evaluation, based on a current English discourse coherence neural network model. Specifically, to overcome the current model's shortcoming in identifying entity (noun) overlap across sentences, our combined model successfully integrates entity information into the recursive neural network framework. Evaluation results on both sentence ordering and machine translation coherence rating tasks show the effectiveness of the proposed model, which significantly outperforms the existing strong baseline.

Usage of word sense disambiguation in concept identification in ontology cons...

Innovation Quotient Pvt Ltd: The document discusses using word sense disambiguation (WSD) in concept identification for ontology construction. It describes implementing an approach that forms concepts from terms by meeting certain criteria, such as having an intentional definition and instances. WSD is needed to identify the sense of terms related to the domain when forming concepts. The Lesk algorithm is discussed as one method for WSD and concept disambiguation, involving calculating similarity between terms and WordNet senses. Evaluation shows the approach identified domain-specific concepts with reasonable precision and recall compared to other methods. Choosing the best WSD algorithm depends on factors like the problem nature and performance metrics.

Domain-Specific Term Extraction for Concept Identification in Ontology Constr...

Innovation Quotient Pvt Ltd: This document proposes improvements to domain-specific term extraction for ontology construction. It discusses issues with existing term extraction approaches and presents a new method that selects and organizes target and contrastive corpora. Terms are extracted using linguistic rules on part-of-speech tagged text. Statistical distributions are calculated to identify terms based on their frequency across multiple contrastive corpora. The approach achieves better precision in extracting simple and complex terms for computer science and biomedical domains compared to existing methods.

text summarization using amr

amit nagarkoti: Presentation from my thesis defense on text summarization. It discusses existing state-of-the-art models along with the efficiency of AMR (Abstract Meaning Representation) for text summarization, and shows how AMRs can be used with seq2seq models. We also discuss other techniques such as BPE (Byte Pair Encoding) and its effectiveness for the task, and how data augmentation with POS tags and AMRs affects summarization with seq2seq learning.

Word2vec: From intuition to practice using gensim

Edgar Marca: Edgar Marca gives a presentation on word2vec and its applications. He begins by introducing word2vec, a model for learning word embeddings from raw text. Word2vec maps words to vectors in a continuous vector space, where semantically similar words are mapped close together. Next, he discusses how word2vec can be used for applications like understanding Trump supporters' tweets, making restaurant recommendations, and generating song playlists. In closing, he emphasizes that pre-trained word2vec models can be used when data is limited, but results vary based on the specific dataset.

Similar to [Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositional n-Gram Features (20)

NLP Project: Paragraph Topic Classification

Eugene Nho: In this natural language understanding (NLU) project, we implemented and compared various approaches for predicting the topics of paragraph-length texts. This paper explains our methodology and results for the following approaches: Naive Bayes, One-vs-Rest Support Vector Machine (OvR SVM) with GloVe vectors, Latent Dirichlet Allocation (LDA) with OvR SVM, Convolutional Neural Networks (CNN), and Long Short Term Memory networks (LSTM).

Turkish language modeling using BERT

AbdurrahimDerric: Our project is about guessing the correct missing word in a given sentence. To guess the missing word we have two main methods: statistical language modeling and neural language modeling. Statistical language modeling depends on the frequency of the relations between words, and here we use a Markov chain. Neural language models use artificial neural networks with deep learning; here we use BERT, the state of the art in language modeling, provided by Google.

THE ABILITY OF WORD EMBEDDINGS TO CAPTURE WORD SIMILARITIES

kevig: Distributed language representation has become the most widely used technique for language representation in various natural language processing tasks. Most of the natural language processing models that are based on deep learning techniques use already pre-trained distributed word representations, commonly called word embeddings. Determining the most qualitative word embeddings is of crucial importance for such models. However, selecting the appropriate word embeddings is a perplexing task since the projected embedding space is not intuitive to humans. In this paper, we explore different approaches for creating distributed word representations. We perform an intrinsic evaluation of several state-of-the-art word embedding methods. Their performance on capturing word similarities is analysed with existing benchmark datasets for word pairs similarities. The research in this paper conducts a correlation analysis between ground truth word similarities and similarities obtained by different word embedding methods.

Deep Learning for Information Retrieval: Models, Progress, & Opportunities

Matthew Lease: Talk given at the 8th Forum for Information Retrieval Evaluation (FIRE, http://fire.irsi.res.in/fire/2016/), December 10, 2016, and at the Qatar Computing Research Institute (QCRI), December 15, 2016.

CHUNKER BASED SENTIMENT ANALYSIS AND TENSE CLASSIFICATION FOR NEPALI TEXT

SethDarren1: The article presents Sentiment Analysis (SA) and Tense Classification using the Skip-gram model for word-to-vector encoding on the Nepali language. The experiment on SA for positive-negative classification is carried out in two ways. In the first experiment, the vector representation of each sentence is generated using the Skip-gram model followed by Multi-Layer Perceptron (MLP) classification, and an F1 score of 0.6486 is achieved for positive-negative classification with an overall accuracy of 68%. In the second experiment, the verb chunks are extracted using a Nepali parser and a similar experiment is carried out on the verb chunks. An F1 score of 0.6779 is observed for positive-negative classification with an overall accuracy of 85%. Hence, Chunker based sentiment analysis is proven to be better than sentiment analysis using sentences.

This paper also proposes using a Skip-gram model to identify the tenses of Nepali sentences and verbs. In the third experiment, the vector representation of each sentence is generated using the Skip-gram model followed by Multi-Layer Perceptron (MLP) classification, and it is observed that verb chunks had a very low overall accuracy of 53%. The fourth experiment, conducted for Tense Classification using sentences, resulted in improved efficiency with an overall accuracy of 89%. Past tenses were identified and classified more accurately than other tenses. Hence, sentence based tense classification is proven to be better than verb Chunker based sentiment analysis.

Chunker Based Sentiment Analysis and Tense Classification for Nepali Text

kevig: The article presents Sentiment Analysis (SA) and Tense Classification using the Skip-gram model for word-to-vector encoding on the Nepali language. The experiment on SA for positive-negative classification is carried out in two ways. In the first experiment, the vector representation of each sentence is generated using the Skip-gram model followed by Multi-Layer Perceptron (MLP) classification, and an F1 score of 0.6486 is achieved for positive-negative classification with an overall accuracy of 68%. In the second experiment, the verb chunks are extracted using a Nepali parser and a similar experiment is carried out on the verb chunks. An F1 score of 0.6779 is observed for positive-negative classification with an overall accuracy of 85%. Hence, Chunker based sentiment analysis is proven to be better than sentiment analysis using sentences. This paper also proposes using a Skip-gram model to identify the tenses of Nepali sentences and verbs. In the third experiment, the vector representation of each sentence is generated using the Skip-gram model followed by Multi-Layer Perceptron (MLP) classification, and it is observed that verb chunks had a very low overall accuracy of 53%. The fourth experiment, conducted for Tense Classification using sentences, resulted in improved efficiency with an overall accuracy of 89%. Past tenses were identified and classified more accurately than other tenses. Hence, sentence based tense classification is proven to be better than verb Chunker based sentiment analysis.

CONTEXT-AWARE CLUSTERING USING GLOVE AND K-MEANS

ijseajournal: In this paper we propose a novel method to cluster categorical data while retaining their context. Typically, clustering is performed on numerical data. However, it is often useful to cluster categorical data as well, especially when dealing with data in real-world contexts. Several methods exist which can cluster categorical data, but our approach is unique in that we use recent text-processing and machine learning advancements like GloVe and t-SNE to develop a context-aware clustering approach (using pre-trained word embeddings). We encode words or categorical data into numerical, context-aware vectors that we use to cluster the data points using common clustering algorithms like K-means.

Knowledge distillation deeplab

Frozen Paradise: Compress a neural network using the knowledge distillation technique, reducing the number of parameters enough for it to run on embedded devices.

TEXTS CLASSIFICATION WITH THE USAGE OF NEURAL NETWORK BASED ON THE WORD2VEC’S...

ijsc: Assigning the submitted text to one of the predetermined categories is required when dealing with application-oriented texts. There are many different approaches to solving this problem, including using neural network algorithms. This article explores using neural networks to sort news articles based on their category. Two word vectorization algorithms are used: the Bag of Words (BOW) and the word2vec distributional semantic model. For this work the BOW model was applied to the FNN, whereas the word2vec model was applied to the CNN. We have measured the accuracy of the classification when applying these methods to ad text datasets. The experimental results have shown that both models achieve comparable accuracy. However, the word2vec encoding used for the CNN showed more relevant results with regard to text semantics. Moreover, the trained CNN, based on the word2vec architecture, has produced a compact feature map on its last convolutional layer, which can then be used for future text representation; that is, the CNN can be used as a text encoder and for transfer learning.

Texts Classification with the usage of Neural Network based on the Word2vec’s...

ijsc: The document summarizes research on classifying texts using neural networks with different text representation models. It explores using a bag-of-words model with a fully connected neural network and using the word2vec model with a convolutional neural network. The research tested these approaches on a dataset of news articles across 20 categories, finding the word2vec/CNN approach produced more semantically relevant results while also learning a compact text representation.

1808.10245v1 (1).pdf

KSHITIJCHAUDHARY20: The document describes a comparative study of various machine learning and neural network models for detecting abusive language on Twitter. It finds that a bidirectional GRU network trained on word-level features, with a Latent Topic Clustering module, achieves the most accurate results with an F1 score of 0.805 for detecting abusive tweets. Additionally, it explores using context tweets as additional features and finds this improves some models' performance.

Sentiment Analysis In Myanmar Language Using Convolutional Lstm Neural Network

kevig: In recent years, there has been an increasing use of social media among people in Myanmar, and writing reviews on social media pages about products, movies, and trips is also popular. Moreover, most people look for review pages about a product they want to buy before deciding whether to buy it. Extracting and receiving useful reviews of interesting products is very important and time consuming for people. Sentiment analysis is one of the important processes for extracting useful reviews of products. In this paper, the Convolutional LSTM neural network architecture is proposed to analyse the sentiment classification of cosmetic reviews written in the Myanmar language. The paper also intends to build a cosmetic reviews dataset for deep learning and a sentiment lexicon in the Myanmar language.

SENTIMENT ANALYSIS IN MYANMAR LANGUAGE USING CONVOLUTIONAL LSTM NEURAL NETWORK

ijnlc: In recent years, there has been an increasing use of social media among people in Myanmar, and writing reviews on social media pages about products, movies, and trips is also popular. Moreover, most people look for review pages about a product they want to buy before deciding whether to buy it. Extracting and receiving useful reviews of interesting products is very important and time consuming for people. Sentiment analysis is one of the important processes for extracting useful reviews of products. In this paper, the Convolutional LSTM neural network architecture is proposed to analyse the sentiment classification of cosmetic reviews written in the Myanmar language. The paper also intends to build a cosmetic reviews dataset for deep learning and a sentiment lexicon in the Myanmar language.

Natural Language Generation / Stanford cs224n 2019w lecture 15 Review

changedaeoh: This document discusses natural language generation (NLG) tasks and neural approaches. It begins with a recap of language models and decoding algorithms like beam search and sampling. It then covers NLG tasks like summarization, dialogue generation, and storytelling. For summarization, it discusses extractive vs. abstractive approaches and neural methods like pointer-generator networks. For dialogue, it discusses challenges like genericness, irrelevance and repetition that neural models face. It concludes with trends in NLG evaluation difficulties and the future of the field.

SNLI_presentation_2

Viral Gupta: This document discusses natural language inference and summarizes the key points as follows:

1. The document describes the problem of natural language inference, which involves classifying the relationship between a premise and hypothesis sentence as entailment, contradiction, or neutral. This is an important problem in natural language processing.

2. The SNLI dataset is introduced as a collection of half a million natural language inference problems used to train and evaluate models.

3. Several approaches for solving the problem are discussed, including using word embeddings, LSTMs, CNNs, and traditional bag-of-words models. Results show LSTMs and CNNs achieve the best performance.

Neural word embedding and language modelling

Riddhi Jain: This document summarizes a survey paper on neural word embeddings and language modeling. It discusses early word embedding models like word2vec and how later models targeted specific semantic relations or senses. It also describes how morpheme embeddings can capture sub-word information. The document notes datasets used to evaluate word embeddings, including similarity, analogy and synonym selection tasks. It concludes that human-level language understanding remains a challenge, but pre-trained language models have transferred knowledge through fine-tuning for specific tasks, while multi-modal models learn concepts through images like human language acquisition.

Sentence Validation by Statistical Language Modeling and Semantic Relations

Editor IJCATR: This paper deals with Sentence Validation, a sub-field of Natural Language Processing. It finds various applications in different areas as it deals with understanding natural language (English in most cases) and manipulating it. So the effort is on understanding and extracting important information delivered to the computer to make efficient human-computer interaction possible. Sentence Validation is approached in two ways: a statistical approach and a semantic approach. In both approaches, the database is trained with the help of sample sentences from the Brown corpus of NLTK. The statistical approach uses a trigram technique based on the N-gram Markov Model and modified Kneser-Ney smoothing to handle zero probabilities. As another test on a statistical basis, tagging and chunking of the sentences having named entities is carried out using pre-defined grammar rules and semantic tree parsing, and the chunked-off sentences are fed into another database, upon which testing is carried out. Finally, semantic analysis is carried out by extracting entity relation pairs, which are then tested. After the results of all three approaches are compiled, graphs are plotted and variations are studied. Hence, a comparison of three different models is calculated and formulated. Graphs pertaining to the probabilities of the three approaches are plotted, which clearly demarcate them and throw light on the findings of the project.

More from Hiroki Shimanaka (7)

[Tutorial] Sentence Representation

Hiroki Shimanaka: Tokyo Metropolitan University, Komachi Lab tutorial (a study session in which lab members introduce a field or tool they know well) (M1, first semester)

[論文紹介] Reference Bias in Monolingual Machine Translation Evaluation![[論文紹介] Reference Bias in Monolingual Machine Translation Evaluation](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingacl2016-referencebiasinmonolingualmachinetranslationevaluation-181121025921-thumbnail.jpg?width=560&fit=bounds)

Hiroki Shimanaka

This document summarizes a research paper about reference bias in monolingual machine translation evaluation. The paper presents experiments on a Chinese-English machine translation dataset from news articles. The first experiment showed that translations were rated higher when fewer reference translations were provided for comparison. The second experiment found that translations were rated lower when references were from a different domain than the translations. The conclusions are that the number and domain of reference translations can influence evaluation scores and introduce bias.

[Paper Introduction] ReVal: A Simple and Effective Machine Translation Evaluation Metric Ba...

![[Paper Introduction] ReVal: A Simple and Effective Machine Translation Evaluation Metric Ba...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingemnlp-reval-asimpleandeffectivemachinetranslationevaluationmetricbasedonrecurrentneuraln-181121025804-thumbnail.jpg?width=560&fit=bounds)

Hiroki Shimanaka

1) ReVal is a machine translation evaluation metric based on recurrent neural networks that uses an LSTM or Tree-LSTM to learn vector representations of sentences. It then calculates the similarity between the source and translated sentences using KL divergence.

2) The researchers evaluated ReVal on the WMT-13 and WMT-14 translation tasks, and it achieved high correlation with human judgments, outperforming other automatic metrics like BLEU, METEOR, and TERp.

3) ReVal was able to accurately rank translation systems and had a Kendall's Tau score over 0.7 when compared to human rankings, demonstrating it is a simple and effective automatic evaluation metric for machine translation.

[Paper Introduction] PARANMT-50M- Pushing the Limits of Paraphrastic Sentence Embeddings wi...

![[Paper Introduction] PARANMT-50M- Pushing the Limits of Paraphrastic Sentence Embeddings wi...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingother-paranmt-50m-pushingthelimitsofparaphrasticsentenceembeddingswithmillionsofmachinet-181121011322-thumbnail.jpg?width=560&fit=bounds)

Hiroki Shimanaka

The document summarizes research on PARANMT-50M, a new paraphrastic sentence embedding model. The model was created by training an LSTM on 50 million English-Czech machine translation pairs to map sentences to a shared vector space where semantic similarity is represented by vector similarity. Evaluation shows the embeddings achieve state-of-the-art results on semantic textual similarity tasks, outperforming prior models trained on smaller datasets. The researchers believe the improved performance is due to the large-scale machine translation training data used to generate the embeddings.

[Paper Introduction] AN EFFICIENT FRAMEWORK FOR LEARNING SENTENCE REPRESENTATIONS.

![[Paper Introduction] AN EFFICIENT FRAMEWORK FOR LEARNING SENTENCE REPRESENTATIONS.](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingcurrent-anefficientframeworkforlearningsentencerepresentations-181121005425-thumbnail.jpg?width=560&fit=bounds)

Hiroki Shimanaka

This document summarizes a research paper that proposes Quick-Thought, a new framework for efficiently learning sentence representations. Quick-Thought uses a GRU or Bi-GRU encoder to project sentences into a vector space, and trains the encoder on a large unsupervised text corpus. It then evaluates the trained sentence representations on eight transfer tasks, finding that Quick-Thought achieves results comparable to more complex models while being faster to train.

[Paper Introduction] Are BLEU and Meaning Representation in Opposition?

![[Paper Introduction] Are BLEU and Meaning Representation in Opposition?](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingacl2018-arebleuandmeaningrepresentationinopposition-181121001139-thumbnail.jpg?width=560&fit=bounds)

Hiroki Shimanaka

This document discusses two papers related to evaluating machine translation (MT) and neural machine translation (NMT) systems. It summarizes a paper that proposes using structured self-attentive sentence embeddings to evaluate meaning representation in addition to BLEU scores. It also summarizes a paper that examines whether BLEU scores and meaning representation are opposed, finding that while BLEU correlates with translation quality, it may not fully capture meaning.

