Parallel Processing in TM1 - QueBIT Consulting


Learn about parallel processing in TM1, brought to you by QueBIT Consulting, the trusted experts in analytics.




What’s Mule 4.3? How Does Anytime RTF Help? Our insights explain.

What’s Mule 4.3? How Does Anytime RTF Help? Our insights explain. Kellton Tech Solutions Ltd Read to learn what Mule Runtime Fabric (RTF) and Anypoint RTF are, how you can leverage these integration engines, the best adoption strategies, and the right way to conduct the risk-cost-benefit analysis for your business.

Introduction to TM1 TurboIntegrator Debugger Webinar - Quebit Consulting

Introduction to TM1 TurboIntegrator Debugger Webinar - Quebit ConsultingQueBIT Consulting Today’s webinar is part of a monthly advanced webinar series offered by QueBIT. Register for future webinars by accessing the events page on our website at quebit.com/news-events

AGENDA:

What is the TurboIntegrator Debugger?

Main Features/Main Window Walkthrough

How will the TI debugger help me?

Limitations

Installation and Configuration

TIBCO vs MuleSoft Differentiators

TIBCO vs MuleSoft DifferentiatorsSatish Nannapaneni This document discusses an agenda covering integration in the cloud era, the TIBCO ecosystem, the Anypoint Platform, and a conclusion with Q&A. It summarizes that cloud computing is changing the integration space and customers must evaluate their options. It notes limitations with TIBCO's BW5 and BW6 products and complexity when using both. The Anypoint Platform is presented as standards-based, built for APIs and integration, and providing enterprise scale and reusability through connecting to anything. Mule Runtime is discussed as optimized, performant, and built for modern DevOps. Development time and costs are argued to be lower with MuleSoft compared to TIBCO due to factors like design phases, testing,

Tyco IS Oracle Apps Support Project

Tyco IS Oracle Apps Support ProjectCHANDRASEKHAR REDROUTHU This document provides an agenda for an Oracle EBS support project at Tyco. It includes an overview of Tyco's Oracle EBS technical architecture and functional applications. It describes the finance applications and processes flows. It also details the current Oracle EBS environment, defect fix cycle, tools used, and project governance. Module specific flow diagrams are provided.

Sunny_Resume

Sunny_ResumeSunny Gupta Sunny Gupta has over 4 years of experience as a Software Engineer and ETL Developer. He currently works at HSBC Software Development India developing ETL jobs and scripts to load data into data warehouses from various source systems. Some of his skills include DataStage, Oracle, Teradata, Linux scripting, and scheduling tools like Control M. He has experience developing ETL solutions for FATCA reporting projects at HSBC.

Ask the Experts - An Informal Panel for FDMEE

Ask the Experts - An Informal Panel for FDMEEJoseph Alaimo Jr Knowledge shared about implementing and upgrading FDMEE. Tired of trying to get your FDMEE questions answered in other panels that span all the EPM technologies? Perhaps you are considering an upgrade to FDMEE, maybe you have already upgraded and are missing key functionality, maybe you are trying to understand your options for cloud integration with FDMEE, or maybe you just want to learn more about what you can do with FDMEE.

Sunny_Resume

Sunny_ResumeSunny Gupta Sunny Gupta is a software engineer with over 4 years of experience working with HSBC. He has expertise in data warehousing, ETL development using DataStage, scheduling with Control M, and reporting tools like OBIEE and Cognos. Currently he is working on FATCA projects at HSBC which involve extracting data from multiple systems, transforming it, and loading it into databases and third party reporting tools. He has experience in all phases of ETL development and providing production support.

Callidus Software On-Premise To On-Demand Migration

Callidus Software On-Premise To On-Demand MigrationCallidus Software - The document discusses migrating from an on-premise sales performance management (SPM) system to Callidus Cloud's on-demand SPM solution, including benefits of the migration, strategies and processes for migrating data, and costs.

- Key benefits of migrating include reducing total cost of ownership, focusing internal resources on core activities, and gaining access to the latest SPM features through Callidus Cloud's software updates.

- The migration process involves moving different components like data, reports, and configuration to Callidus Cloud's platforms and tools. Sample costs for migrating different amounts of data are provided.

- Callidus Cloud can help minimize migration costs and risks through standardized approaches, lever

nZDM.ppt

nZDM.pptNavin Somal nZDM (Near Zero Downtime Maintenance) and nZDT (Near Zero Downtime Technology) are methods to minimize downtime during SAP system updates and conversions. nZDM reduces downtime for SUM updates by performing more tasks while the system is still available, though it increases runtime. nZDT is a service offered by SAP that uses cloning to perform conversion tasks on a clone system during uptime and only requires a brief downtime for final switching to the new system. While both help lower downtime, nZDT can achieve even lower business downtimes of 8-16 hours depending on validation needed.

5 challenges of scaling l10n workflows KantanMT/bmmt webinar

5 challenges of scaling l10n workflows KantanMT/bmmt webinarkantanmt In this joint presentation, Tony O’Dowd, Founder and Chief Architect of KantanMT and Maxim Khalilov, Technical Lead of bmmt deliver an overview of the MT technology currently available in the language technology market, the challenges of operating MT systems at scale and speed, and their opinions on the future trajectory of MT.

Each presentation will be grounded with client examples, and how they’ve successfully integrated MT into their localization workflows.

Finally, both presenters will finish off with a 5 point checklist for successful MT deployment based on both the MT provider and LSP point of view.

If you have any questions about this presentation or want to get in touch with either company please contact:

Louise Irwin, Marketing Specialist at KantanMT ([email protected])

Peggy Linder, Operations Manager at bmmt ([email protected])

3158 - Cloud Infrastructure & It Optimization - Application Performance Manag...

3158 - Cloud Infrastructure & It Optimization - Application Performance Manag...Sandeep Chellingi IBM Performance Management 8.1.x new features, implementation practices, Monitored Enterprise Applications, and integration with Tivoli products.

Apama and Terracotta World: Getting Started in Predictive Analytics

Apama and Terracotta World: Getting Started in Predictive Analytics Software AG The document provides an overview of predictive analytics and Terracotta and Apama products. It discusses key highlights and strategic focus areas for Terracotta and Apama in 2016-2017, including delivering an in-memory data fabric platform, enhancing integration with digital business platform products, and enabling internet of things integration and streaming analytics. The document also introduces four speakers on predictive analytics.

Peteris Arajs - Where is my data

Peteris Arajs - Where is my dataAndrejs Vorobjovs Oracle Cloud ERP - where is My Data?

All about Oracle integration products and Cloud ERP:

* What are the ways to deliver it - all 3 options and obvious choice for our project

- File Based Data Import

- Web Services

* Can I trust the ERP statuses?

- Custom reporting using BI Publisher

- Security implications

* Lessons learned

- What works out of the box (provision SOA CS and, patch it)

- Security challenges

PTV Group_impact_camunda_bpm_20140122

PTV Group_impact_camunda_bpm_20140122camunda services GmbH The document discusses PTV's use of business process management (BPM) in spatial data processing. It describes PTV's motivation to introduce BPM to streamline workflows and enable parallel processing. The system architecture uses a BPM engine to control processes executed by services running on multiple workers. It demonstrates a map data processing workflow. Technical and data production BPM processes are discussed. Experiences show benefits of using BPM for transparency while pitfalls include concurrency issues. Future plans include improved modeling, statistics, and parallel processing support.

Parallel Processing in TM1 - QueBIT Consulting

- 1. Parallel Processing in TM1 2/9/2017

- 2. Presenters: Ann-Grete Tan, General Manager, FOPM; Ryan Clapp, Technology Manager

- 3. Agenda
  - Introduction to QueBIT
  - Overview of Parallel Processing in TM1
  - Things to Consider
  - Methods of Parallel Processing
  - QueBIT Parallel Processor and the REST API
  - Q&A

- 4. About QueBIT
  - 15 years in business, with managers on the team who have been working in analytics for 20+ years
  - Operating nationally in the US; headquarters in Westchester County, NY
  - Full offerings: analytics advisory and implementation services, reseller of IBM software, and developer of solutions
  - 900+ successful implementations in finance, sales, marketing and operations (55+ new analytics implementations over the past 12 months)
  - 450+ active analytics customers across all industries
  - 100 employees: 75 consultants, 10 sales, 5 product/solution development, 10 management and G&A
  - Development of analytics solutions such as Predictive Demand Planning/Assortment Planning, Predictive Claims Subrogation, etc.
  - QueBIT FrameWORQ software complements TM1/PA Local
  - Delivering analytics on-premise and in the cloud

- 5. QueBIT’s Core Capabilities
  - Areas: FOPM, Business Intelligence, Predictive Analytics, IOT Analytics, Data Management
  - Capabilities listed on the slide: Real Time Streaming, Spark 2.0, Deep Learning, Relational Data Warehouses, PDA Data Warehouses, OLAP Data Marts, Big Data, Predictive Demand Forecasting, Predictive Claims Subrogation, Predictive Assortment Planning, Predictive Fraud, Predictive Maintenance, Decision Optimization, Mobility Analytics, Reporting, Dashboards, Scorecards, Budgeting & Forecasting, Strategic Planning, Business Modeling, Financial Reporting & Consolidations, Disclosure Management

- 7. Standard TI Operation: a single-threaded TurboIntegrator process ("Load 2016 Actuals") in standard operation; server CPU at 25%

- 8. What is Parallel Processing? TurboIntegrator runs a 4-threaded load on the server CPU, one thread per quarter: Load 2016 Q1 Actuals, Load 2016 Q2 Actuals, Load 2016 Q3 Actuals, Load 2016 Q4 Actuals

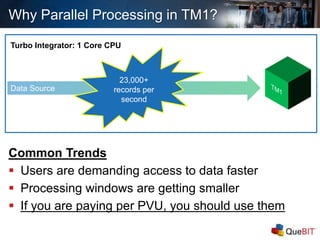
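A minimal sketch of the four-threaded load pictured on slide 8, assuming the year's actuals can be partitioned by quarter. The function `load_actuals` is a hypothetical stand-in for one TurboIntegrator load process and only returns a label; it does not talk to TM1.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

QUARTERS = ["Q1", "Q2", "Q3", "Q4"]


def load_actuals(year: int, quarter: str) -> str:
    """Hypothetical stand-in for one TI load process; returns a label only."""
    return f"Load {year} {quarter} Actuals"


def run_parallel_loads(year: int, threads: int = 4) -> List[str]:
    """Run one load per quarter, at most `threads` at a time."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        # pool.map returns results in the order of the inputs,
        # regardless of which worker finishes first.
        return list(pool.map(lambda q: load_actuals(year, q), QUARTERS))


if __name__ == "__main__":
    for label in run_parallel_loads(2016):
        print(label)
```

Each partition is independent of the others, which is what makes the work safe to spread across threads.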

- 9. Why Parallel Processing in TM1?
  - Data source to TurboIntegrator on a 1-core CPU: 23,000+ records per second
  - Common trends:
    - Users are demanding faster access to data
    - Processing windows are getting smaller
    - If you are paying per PVU, you should use them

- 10. Why Use Parallel Processing in TM1? Eight data partitions loaded by TurboIntegrator on an 8-core CPU: 11,000,000+ records per minute!
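The two throughput figures on slides 9 and 10 are consistent with each other if the single-core rate scales linearly across cores. That linear scaling is an assumption made here for illustration; the slides do not state it, and real loads rarely scale perfectly.

```python
# Figure from slide 9: one TurboIntegrator thread on one core.
RECORDS_PER_SECOND_PER_CORE = 23_000


def records_per_minute(cores: int) -> int:
    """Ideal throughput, ASSUMING perfectly linear scaling across cores."""
    return RECORDS_PER_SECOND_PER_CORE * 60 * cores


if __name__ == "__main__":
    print(records_per_minute(1))  # 1380000
    print(records_per_minute(8))  # 11040000
```

With eight cores the ideal figure is 11,040,000 records per minute, in line with the "11,000,000+" quoted on slide 10.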

- 12. Common Uses of Parallel Processing with TI
  - Detailed data loads
  - End-of-period processing
  - Data archiving
  - Allocation processes
  - Data extraction

- 13. Allocation Case Study: single-threaded process, 13+ hours; multi-threaded processes, 1.5 hours

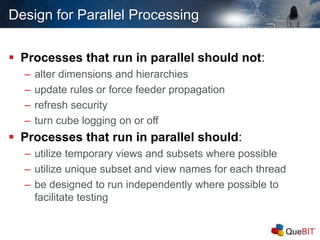

- 14. Design for Parallel Processing
  - Processes that run in parallel should not:
    - alter dimensions and hierarchies
    - update rules or force feeder propagation
    - refresh security
    - turn cube logging on or off
  - Processes that run in parallel should:
    - utilize temporary views and subsets where possible
    - utilize unique subset and view names for each thread
    - be designed to run independently where possible to facilitate testing
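One way to satisfy the "unique subset and view names for each thread" guideline is to derive the names from the process name and a thread number. The naming pattern below is hypothetical, chosen only for illustration; any scheme works as long as no two threads share a name.

```python
from typing import Dict


def thread_object_names(process_name: str, thread_id: int) -> Dict[str, str]:
    """Build per-thread view and subset names (hypothetical naming pattern)."""
    base = f"tmp_{process_name}_thread{thread_id:02d}"
    return {"view": f"{base}_view", "subset": f"{base}_subset"}


if __name__ == "__main__":
    for thread_id in range(1, 5):
        print(thread_object_names("LoadActuals", thread_id))
```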

- 15. Other Design Considerations
  - Thread and Process Management
    - Parallel processes are resource intensive; use tools to manage CPU loads.
    - Parallel Threads = CPU Cores - 1
  - Parallel threaded vs single threaded
    - Efficiency varies by process; make sure to test before finalizing your design.
  - Zero Out
    - If the entire cube is being zeroed out, it may be more efficient to use CUBECLEARDATA(). Be advised that this function also “unfeeds” all fed cells.
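
The "Parallel Threads = CPU Cores - 1" rule of thumb can be written as a small helper. The floor of one thread on a single-core machine is an added assumption, since the slide does not cover that case.

```python
import os
from typing import Optional


def parallel_thread_count(cpu_cores: Optional[int] = None) -> int:
    """Apply 'threads = cores - 1', never returning fewer than one thread."""
    cores = cpu_cores if cpu_cores is not None else (os.cpu_count() or 1)
    return max(1, cores - 1)


print(parallel_thread_count(8))  # 7
```

Leaving one core free keeps the server responsive to users and to other processes while the parallel threads are running.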

- 16. Methods of Parallel Processing
  - Using TM1RunTI.exe: could require advanced knowledge of scripting languages or additional TM1 cubes to manage
  - Using chores on a schedule or a trigger: easy to set up for consistent data set sizes
  - Cognos Command Center: powerful scheduler included with PA Cloud and some on-prem licenses
  - QueBIT’s REST based RunMultipleTI.exe: cloud friendly, designed to make parallel processing quick and easy
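
As a rough sketch of the TM1RunTI.exe approach, a script can build one command line per data partition and start them all at once. The flags used here (`-process`, `-adminhost`, `-server`, `-user`, and trailing `name=value` parameters) are taken from my reading of IBM's TM1RunTI documentation and should be verified for your version. The process name, server name, and the parameters `pYear` and `pQuarter` are hypothetical, and authentication options are left out.

```python
import subprocess
from typing import Dict, List


def build_tm1runti_command(process: str, adminhost: str, server: str,
                           user: str, params: Dict[str, str]) -> List[str]:
    """Build one TM1RunTI command line (authentication options omitted)."""
    command = ["tm1runti", "-process", process, "-adminhost", adminhost,
               "-server", server, "-user", user]
    command += [f"{name}={value}" for name, value in params.items()]
    return command


def launch(commands: List[List[str]]) -> List[int]:
    """Start every command without waiting, then collect the exit codes."""
    running = [subprocess.Popen(command) for command in commands]
    return [process.wait() for process in running]


# Hypothetical process and parameter names, one command per quarter.
commands = [
    build_tm1runti_command("Load Actuals", "localhost", "Planning", "admin",
                           {"pYear": "2016", "pQuarter": quarter})
    for quarter in ("Q1", "Q2", "Q3", "Q4")
]
print(commands[0])
```

`launch` is defined but not called here because it needs a working TM1 installation. The point of separating the two functions is that the command lines can be inspected and tested before anything is executed.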

- 17. DEMO
  - Using chores on a schedule or a trigger
  - Cognos Command Center

- 18. Chore Architecture (diagram: Thread Control Cube, Chore Threads, Load Data Process, Target Cube)
  1) Chore monitors target cube for a flag, every x seconds
  2) Process updates the flag
  3) Flag detected, data load process run
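
The flag-polling pattern on this slide can be simulated in a few lines. In this sketch a plain dictionary stands in for the control cube, and the flag values `IDLE`, `RUN`, and `RUNNING` are hypothetical. In TM1 the polling interval would come from the chore's schedule rather than from a loop.

```python
from typing import Callable, Dict


def chore_tick(control: Dict[str, str], thread: str,
               load: Callable[[str], None]) -> bool:
    """One chore execution: run the load only if this thread's flag is set."""
    if control.get(thread) != "RUN":
        return False
    control[thread] = "RUNNING"   # mark the thread busy while it loads
    load(thread)
    control[thread] = "IDLE"      # clear the flag so the load runs only once
    return True


control = {"Thread1": "IDLE", "Thread2": "IDLE"}
loaded = []

control["Thread1"] = "RUN"        # step 2: a process updates the flag
for thread in control:            # steps 1 and 3: each chore checks its flag
    chore_tick(control, thread, loaded.append)

print(loaded)  # ['Thread1']
```

Clearing the flag after the load is what stops the next scheduled chore execution from repeating the same work.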

- 19. QueBIT RunMultipleTI.exe
  - Built on top of TM1’s REST API
  - Compatible with Planning Analytics Cloud
  - Reduced locking due to login/logout
  - Designed to be simple to set up and use
  - Control the number of running threads directly in the tool
  - Diagram: RunMultipleTI.exe calling the REST API
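
The slides do not show how RunMultipleTI.exe works internally, so the following is not its implementation. It is a minimal sketch of the kind of call any REST-based launcher makes: a POST to the TM1 REST API's `tm1.Execute` action on a process. The host, port, process name, and parameter names are hypothetical, and authentication is omitted.

```python
import json
from typing import Dict
from urllib.parse import quote
from urllib.request import Request


def build_execute_request(base_url: str, process: str,
                          params: Dict[str, str]) -> Request:
    """Build (but do not send) a POST that executes one TI process."""
    url = f"{base_url}/api/v1/Processes('{quote(process)}')/tm1.Execute"
    body = {"Parameters": [{"Name": name, "Value": value}
                           for name, value in params.items()]}
    return Request(url, data=json.dumps(body).encode("utf-8"),
                   headers={"Content-Type": "application/json"}, method="POST")


# Hypothetical server address and parameters.
request = build_execute_request("https://tm1.example.com:8010", "Load Actuals",
                                {"pYear": "2016", "pQuarter": "Q1"})
print(request.full_url)
```

Sending one such request per partition from a bounded thread pool would give the same "control the number of running threads" behaviour the slide describes.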

- 20. Q&A Contact us if you want to make your TI processes faster. Contact us about implementing Cognos Command Center for your business. 1-800-QUE-BIT1 [email protected]

- 21. Q&A: Thank You For Attending
  - Join us for our next webinar: Cognos Disclosure Management, Thursday, March 9th at 2pm EST
  - Interested in moving to the cloud? Contact us for a demo and evaluation for your transition to Planning Analytics on the Cloud: [email protected]
  - TM1 Running Slow? Want a second opinion on your model? Looking to grow your TM1 footprint? Contact us today for your TM1 Health Check! [email protected]

Editor's Notes

- #4: TM1RunTI splits TIs and cuts the run time down. Read the IBM manual or call us and we can help. Our tool is cloud compatible, uses the modern REST API, and is built for parallel processing. It is easy to use, with no need to build advanced scripts or cubes.

- #7: What is your business's longest-running single-threaded process?

- #8: Graphic

- #9: Graphic

- #18, #19, #20: You can start multiple TIs from different sessions if you want a quick way to see if processes will lock each other.