Embedding Pig in scripting languages


Examples of Pig embedding in Python. Presented at the post-Hadoop Summit Pig user meetup: http://www.meetup.com/PigUser/events/21215831/


Recommended

Pig programming is fun

By DataWorks Summit

The document discusses new features in Pig including macros, debugging tools, UDF support for scripting languages, embedding Pig in other languages, and new operators like nested CROSS and FOREACH. Examples are provided for macros, debugging with Pig Illustrate, writing UDFs in Python and Ruby, running Pig from Python, and using nested operators. Future additions mentioned are RANK and CUBE operators.

Pig programming is more fun: New features in Pig

By daijy

Over the last year, we added many new language features to Pig, and Pig programming is much easier than before. With Pig macros, we can write functions for Pig and modularize Pig programs. Pig embedding allows us to embed Pig statements in Python and make use of Python's rich language features, such as loops and branches. Java is no longer the only choice for writing Pig UDFs; we can write UDFs in Python, JavaScript, and Ruby. Nested FOREACH and CROSS give us ways to manipulate data that were not possible before. We also added plenty of syntactic sugar to simplify Pig syntax, for example direct syntax support for maps, tuples, and bags, and project-range expressions in FOREACH. We also revived support for the ILLUSTRATE command to ease debugging. In this paper, I will give an overview of all these features and illustrate how to use them to program more efficiently in Pig. I will also give concrete examples to demonstrate how the Pig language has evolved over time with these improvements.

Hadoop, Pig, and Python (PyData NYC 2012)

By mortardata

Mortar CEO K Young's talk on using Python with Hadoop and Pig (vs. Jython and MapReduce), including NumPy, SciPy, and NLTK.

Big Data Hadoop Training

By stratapps

Pig is a platform for analyzing large datasets that sits on top of Hadoop. It provides a simple language called Pig Latin for expressing data analysis processes. Pig Latin scripts are compiled into a series of MapReduce jobs that process and analyze data in parallel across a Hadoop cluster. Pig aims to be easier to use than raw MapReduce programs by providing high-level operations like JOIN, FILTER, and GROUP, and by allowing analysis to be expressed without writing Java code. Common use cases for Pig include log and web data analysis, ETL processes, and quick prototyping of algorithms for large-scale data.

Running R on Hadoop - CHUG - 20120815

By Chicago Hadoop Users Group

The document summarizes a presentation on using R and Hadoop together. It includes:

1) An outline of topics to be covered including why use MapReduce and R, options for combining R and Hadoop, an overview of RHadoop, a step-by-step example, and advanced RHadoop features.

2) Code examples from Jonathan Seidman showing how to analyze airline on-time data using different R and Hadoop options - naked streaming, Hive, RHIPE, and RHadoop.

3) The analysis calculates average departure delays by year, month and airline using each method.

Apache Pig

By Shashidhar Basavaraju

This document provides an overview of Apache Pig and Pig Latin for querying large datasets. It discusses why Pig was created due to limitations in SQL for big data, how Pig scripts are written in Pig Latin using a simple syntax, and how Pig Latin scripts are compiled into MapReduce jobs and executed on Hadoop clusters. Advanced topics covered include user-defined functions in Pig Latin for custom data processing and sharing functions through Piggy Bank.

Introduction to Pig | Pig Architecture | Pig Fundamentals

By Skillspeed

This Hadoop Pig tutorial will unravel Pig Programming, Pig Commands, Pig Fundamentals, Grunt Mode, Script Mode & Embedded Mode.

At the end, you'll have a strong knowledge regarding Hadoop Pig Basics.

PPT Agenda:

✓ Introduction to BIG Data & Hadoop

✓ What is Pig?

✓ Pig Data Flows

✓ Pig Programming

----------

What is Pig?

Pig is an open-source data flow platform that handles data management operations through simple scripts written in Pig Latin. Pig works closely with MapReduce.

----------

Applications of Pig

1. Data Cleansing

2. Data Transfers via HDFS

3. Data Factory Operations

4. Predictive Modelling

5. Business Intelligence

----------

Skillspeed is a live e-learning company focusing on high-technology courses. We provide live instructor led training in BIG Data & Hadoop featuring Realtime Projects, 24/7 Lifetime Support & 100% Placement Assistance.

Website: https://www.skillspeed.com

High-level Programming Languages: Apache Pig and Pig Latin

By Pietro Michiardi

This slide deck is used as an introduction to the Apache Pig system and the Pig Latin high-level programming language, as part of the Distributed Systems and Cloud Computing course I hold at Eurecom.

Course website:

http://michiard.github.io/DISC-CLOUD-COURSE/

Sources available here:

https://github.com/michiard/DISC-CLOUD-COURSE

Python in big data world

By Rohit

Python can be used for big data applications and processing on Hadoop. Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers using simple programming models. MapReduce is a programming model used in Hadoop for processing and generating large datasets in a distributed computing environment.

Sql saturday pig session (wes floyd) v2

By Wes Floyd

Pig is a platform for analyzing large datasets that sits between low-level MapReduce programming and high-level SQL queries. It provides a language called Pig Latin that allows users to specify data analysis programs without dealing with low-level details. Pig Latin scripts are compiled into sequences of MapReduce jobs for execution. HCatalog allows data to be shared between Pig, Hive, and other tools by reading metadata about schemas, locations, and formats.

Massively Parallel Processing with Procedural Python (PyData London 2014)

By Ian Huston

The Python data ecosystem has grown beyond the confines of single machines to embrace scalability. Here we describe one of our approaches to scaling, which is already being used in production systems. The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. Using PL/Python we can run parallel queries across terabytes of data using not only pure SQL but also familiar PyData packages such as scikit-learn and nltk. This approach can also be used with PL/R to make use of a wide variety of R packages. We look at examples on Postgres compatible systems such as the Greenplum Database and on Hadoop through Pivotal HAWQ. We will also introduce MADlib, Pivotal’s open source library for scalable in-database machine learning, which uses Python to glue SQL queries to low level C++ functions and is also usable through the PyMADlib package.

Scalable Hadoop with succinct Python: the best of both worlds

By DataWorks Summit

The document discusses using Python with Hadoop frameworks. It outlines some of the benefits of Hadoop like scalability and schema flexibility, and benefits of Python like succinct code and many data science libraries. It then reviews several projects that aim to bridge Python and Hadoop, including mrjob for MapReduce jobs, Pydoop for faster MapReduce, Pig for higher-level data flows, Snakebite for a Python HDFS client, and PySpark for working with Spark. However, it notes that Python support is often an afterthought or fringe project compared to the native Java support, and lacks commercial backing or cohesive APIs.

Introduction of the Design of A High-level Language over MapReduce -- The Pig...

By Yu Liu

Pig is a platform for analyzing large datasets that uses Pig Latin, a high-level language, to express data analysis programs. Pig Latin programs are compiled into MapReduce jobs and executed on Hadoop. Pig Latin provides SQL-like data manipulation constructs as well as user-defined functions. The Pig system compiles programs through optimization, code generation, and execution on Hadoop. Future work focuses on additional optimizations, non-Java UDFs, and interfaces like SQL.

IPython Notebook as a Unified Data Science Interface for Hadoop

By DataWorks Summit

This document discusses using IPython Notebook as a unified data science interface for Hadoop. It proposes that a unified environment needs: 1) mixed local and distributed processing via Apache Spark, 2) access to languages like Python via PySpark, 3) seamless SQL integration via SparkSQL, and 4) visualization and reporting via IPython Notebook. The document demonstrates this environment by exploring open payments data between doctors/hospitals and manufacturers.

Data Science Amsterdam - Massively Parallel Processing with Procedural Languages

By Ian Huston

The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. With Procedural Languages such as PL/Python and PL/R, data parallel queries can be run across terabytes of data using not only pure SQL but also familiar Python and R packages. The Pivotal Data Science team have used this technique to create fraud behaviour models for each individual user in a large corporate network, to understand interception rates at customs checkpoints by accelerating natural language processing of package descriptions, and to reduce customer churn by building a sentiment model using customer call centre records.

http://www.meetup.com/Data-Science-Amsterdam/events/178974942/

Pig on Tez - Low Latency ETL with Big Data

By DataWorks Summit

This document discusses Pig on Tez, which runs Pig jobs on the Tez execution engine rather than MapReduce. The team introduces Pig and Tez, describes the design of Pig on Tez including logical and physical plans, custom vertices and edges, and performance optimizations like broadcast edges and object caching. Performance results show speedups of 1.5x to 6x over MapReduce. Current status is 90% feature parity with Pig on MR and future work includes supporting Tez local mode and improving stability, usability, and performance further.

Massively Parallel Processing with Procedural Python by Ronert Obst PyData Be...

By PyData

The Python data ecosystem has grown beyond the confines of single machines to embrace scalability. Here we describe one of our approaches to scaling, which is already being used in production systems. The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. Using PL/Python we can run parallel queries across terabytes of data using not only pure SQL but also familiar PyData packages such as scikit-learn and nltk. This approach can also be used with PL/R to make use of a wide variety of R packages. We look at examples on Postgres compatible systems such as the Greenplum Database and on Hadoop through Pivotal HAWQ. We will also introduce MADlib, Pivotal’s open source library for scalable in-database machine learning, which uses Python to glue SQL queries to low level C++ functions and is also usable through the PyMADlib package.

Apache Pig: Making data transformation easy

By Victor Sanchez Anguix

This is part of an introductory course to Big Data Tools for Artificial Intelligence. These slides introduce students to the use of Apache Pig as an ETL tool over Hadoop.

Pig Tutorial | Twitter Case Study | Apache Pig Script and Commands | Edureka

By Edureka!

This Edureka Pig Tutorial (Pig Tutorial Blog Series: https://goo.gl/KPE94k) will help you understand the concepts of Apache Pig in depth.

Check our complete Hadoop playlist here: https://goo.gl/ExJdZs

Below are the topics covered in this Pig Tutorial:

1) Entry of Apache Pig

2) Pig vs MapReduce

3) Twitter Case Study on Apache Pig

4) Apache Pig Architecture

5) Pig Components

6) Pig Data Model

7) Running Pig Commands and Pig Scripts (Log Analysis)

Apache Pig for Data Scientists

By DataWorks Summit

This document discusses Apache Pig and its role in data science. It begins with an introduction to Pig, describing it as a high-level scripting language for operating on large datasets in Hadoop. It transforms data operations into MapReduce/Tez jobs and optimizes the number of jobs required. The document then covers using Pig for understanding data through statistics and sampling, machine learning by sampling large datasets and applying models with UDFs, and natural language processing on large unstructured data.

Big Data Step-by-Step: Using R & Hadoop (with RHadoop's rmr package)

By Jeffrey Breen

The document describes a Big Data workshop held on March 10, 2012 at the Microsoft New England Research & Development Center in Cambridge, MA. The workshop focused on using R and Hadoop, with an emphasis on RHadoop's rmr package. The document provides an introduction to using R with Hadoop and discusses several R packages for working with Hadoop, including RHIPE, rmr, rhdfs, and rhbase. Code examples are presented demonstrating how to calculate average departure delays by airline and month from an airline on-time performance dataset using different approaches, including Hadoop streaming, Hive, RHIPE, and rmr.

Hive vs Pig for HadoopSourceCodeReading

By Mitsuharu Hamba

The document provides information about Hive and Pig, two frameworks for analyzing large datasets using Hadoop. It compares Hive and Pig, noting that Hive uses a SQL-like language called HiveQL to manipulate data, while Pig uses Pig Latin scripts and operates on data flows. The document also includes code examples demonstrating how to use basic operations in Hive and Pig like loading data, performing word counts, joins, and outer joins on sample datasets.

Hadoop interview question

By pappupassindia

Hadoop is an open source framework for distributed storage and processing of vast amounts of data across clusters of computers. It uses a master-slave architecture with a single JobTracker master and multiple TaskTracker slaves. The JobTracker schedules tasks like map and reduce jobs on TaskTrackers, which each run task instances in separate JVMs. It monitors task progress and reschedules failed tasks. Hadoop uses the MapReduce programming model, in which the input is split and mapped in parallel, then outputs are shuffled, sorted, and reduced to form the final results.

Word Embedding for Nearest Words

By EkaKurniawan40

This document summarizes Bukalapak's process for generating word embeddings from product names to find similar words. It involved preprocessing over 300 million product names, training a word2vec model on the text, exporting the model for serving, and deploying model server and client applications to retrieve similar words through an API. The preprocessing used tokenization and filtering to extract words from names, while the model was trained using the skip-gram architecture on GPUs over 1.5 months to produce a 500MB embedding.

Hadoop, Hbase and Hive- Bay area Hadoop User Group

By Hadoop User Group

This document summarizes Facebook's use cases and architecture for integrating Apache Hive and HBase. It discusses loading data from Hive into HBase tables using INSERT statements, querying HBase tables from Hive using SELECT statements, and maintaining low latency access to dimension tables stored in HBase while performing analytics on fact data stored in Hive. The architecture involves writing a storage handler and SerDe to map between the two systems and executing Hive queries by generating MapReduce jobs that read from or write to HBase.

Hadoop 31-frequently-asked-interview-questions

By Asad Masood Qazi

The document contains 31 questions and answers related to Hadoop concepts. It covers topics like common input formats in Hadoop, differences between TextInputFormat and KeyValueInputFormat, what InputSplits are and how they are created, how partitioning, shuffling, and sorting occur after the map phase, what a combiner is, functions of JobTracker and TaskTracker, how speculative execution works, using distributed cache and counters, setting the number of mappers/reducers, writing custom partitioners, debugging Hadoop jobs, and failure handling processes for production Hadoop jobs.

GPU Accelerated Machine Learning

By Sri Ambati

Deep learning algorithms have benefited greatly from the recent performance gains of GPUs. However, it has been unclear whether GPUs can speed up machine learning algorithms such as generalized linear modeling, random forests, gradient boosting machines, and clustering. H2O.ai, the leading open source AI company, is bringing the best-of-breed data science and machine learning algorithms to GPUs.

We introduce H2O4GPU, a fully featured machine learning library that is optimized for GPUs, with a robust Python API that is a drop-in replacement for scikit-learn. We'll demonstrate benchmarks for the most common algorithms relevant to enterprise AI and showcase performance gains as compared to running on CPUs.

Jon’s Bio:

https://umdphysics.umd.edu/people/faculty/current/item/337-jcm.html

Hadoop for Java Professionals

By Edureka!

With the surge in Big Data, organizations have begun to implement Big Data related technologies as a part of their systems. This has led to a huge need to update existing skillsets with Hadoop. Java professionals are among those who need to update themselves with Hadoop skills.

BioPig for scalable analysis of big sequencing data

By Zhong Wang

This document introduces BioPig, a Hadoop-based analytic toolkit for large-scale genomic sequence analysis. BioPig aims to provide a flexible, high-level, and scalable platform to enable domain experts to build custom analysis pipelines. It leverages Hadoop's data parallelism to speed up bioinformatics tasks like k-mer counting and assembly. The document demonstrates how BioPig can analyze over 1 terabase of metagenomic data using just 7 lines of code, much more simply than alternative MPI-based solutions. While challenges remain around optimization and integration, BioPig shows promise for scalable genomic analytics on very large datasets.

Poster Hadoop summit 2011: pig embedding in scripting languages

By Julien Le Dem

Pig Scripting allows Pig to be used for iterative algorithms and user-defined functions by embedding Pig within a scripting language. This allows transitive closure, an iterative process, to be computed with one file using Python functions as UDFs and Python variables in Pig scripts, rather than requiring seven files and Java UDFs as plain Pig would.

Ad

More Related Content

What's hot (20)

Python in big data world

Python in big data worldRohit Python can be used for big data applications and processing on Hadoop. Hadoop is an open-source software framework for distributed storage and processing of large datasets across clusters of computers. It allows for the distributed processing of large datasets across clusters of computers using simple programming models. MapReduce is a programming model used in Hadoop for processing and generating large datasets in a distributed computing environment.

Sql saturday pig session (wes floyd) v2

Sql saturday pig session (wes floyd) v2Wes Floyd Pig is a platform for analyzing large datasets that sits between low-level MapReduce programming and high-level SQL queries. It provides a language called Pig Latin that allows users to specify data analysis programs without dealing with low-level details. Pig Latin scripts are compiled into sequences of MapReduce jobs for execution. HCatalog allows data to be shared between Pig, Hive, and other tools by reading metadata about schemas, locations, and formats.

Massively Parallel Processing with Procedural Python (PyData London 2014)

Massively Parallel Processing with Procedural Python (PyData London 2014)Ian Huston The Python data ecosystem has grown beyond the confines of single machines to embrace scalability. Here we describe one of our approaches to scaling, which is already being used in production systems. The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. Using PL/Python we can run parallel queries across terabytes of data using not only pure SQL but also familiar PyData packages such as scikit-learn and nltk. This approach can also be used with PL/R to make use of a wide variety of R packages. We look at examples on Postgres compatible systems such as the Greenplum Database and on Hadoop through Pivotal HAWQ. We will also introduce MADlib, Pivotal’s open source library for scalable in-database machine learning, which uses Python to glue SQL queries to low level C++ functions and is also usable through the PyMADlib package.

Scalable Hadoop with succinct Python: the best of both worlds

Scalable Hadoop with succinct Python: the best of both worldsDataWorks Summit The document discusses using Python with Hadoop frameworks. It outlines some of the benefits of Hadoop like scalability and schema flexibility, and benefits of Python like succinct code and many data science libraries. It then reviews several projects that aim to bridge Python and Hadoop, including mrjob for MapReduce jobs, Pydoop for faster MapReduce, Pig for higher-level data flows, Snakebite for a Python HDFS client, and PySpark for working with Spark. However, it notes that Python support is often an afterthought or fringe project compared to the native Java support, and lacks commercial backing or cohesive APIs.

Introduction of the Design of A High-level Language over MapReduce -- The Pig...

Introduction of the Design of A High-level Language over MapReduce -- The Pig...Yu Liu Pig is a platform for analyzing large datasets that uses Pig Latin, a high-level language, to express data analysis programs. Pig Latin programs are compiled into MapReduce jobs and executed on Hadoop. Pig Latin provides data manipulation constructs like SQL as well as user-defined functions. The Pig system compiles programs through optimization, code generation, and execution on Hadoop. Future work focuses on additional optimizations, non-Java UDFs, and interfaces like SQL.

IPython Notebook as a Unified Data Science Interface for Hadoop

IPython Notebook as a Unified Data Science Interface for HadoopDataWorks Summit This document discusses using IPython Notebook as a unified data science interface for Hadoop. It proposes that a unified environment needs: 1) mixed local and distributed processing via Apache Spark, 2) access to languages like Python via PySpark, 3) seamless SQL integration via SparkSQL, and 4) visualization and reporting via IPython Notebook. The document demonstrates this environment by exploring open payments data between doctors/hospitals and manufacturers.

Data Science Amsterdam - Massively Parallel Processing with Procedural Languages

Data Science Amsterdam - Massively Parallel Processing with Procedural LanguagesIan Huston The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. With Procedural Languages such as PL/Python and PL/R data parallel queries can be run across terabytes of data using not only pure SQL but also familiar Python and R packages. The Pivotal Data Science team have used this technique to create fraud behaviour models for each individual user in a large corporate network, to understand interception rates at customs checkpoints by accelerating natural language processing of package descriptions and to reduce customer churn by building a sentiment model using customer call centre records.

http://www.meetup.com/Data-Science-Amsterdam/events/178974942/

Pig on Tez - Low Latency ETL with Big Data

Pig on Tez - Low Latency ETL with Big DataDataWorks Summit This document discusses Pig on Tez, which runs Pig jobs on the Tez execution engine rather than MapReduce. The team introduces Pig and Tez, describes the design of Pig on Tez including logical and physical plans, custom vertices and edges, and performance optimizations like broadcast edges and object caching. Performance results show speedups of 1.5x to 6x over MapReduce. Current status is 90% feature parity with Pig on MR and future work includes supporting Tez local mode and improving stability, usability, and performance further.

Massively Parallel Processing with Procedural Python by Ronert Obst PyData Be...

Massively Parallel Processing with Procedural Python by Ronert Obst PyData Be...PyData The Python data ecosystem has grown beyond the confines of single machines to embrace scalability. Here we describe one of our approaches to scaling, which is already being used in production systems. The goal of in-database analytics is to bring the calculations to the data, reducing transport costs and I/O bottlenecks. Using PL/Python we can run parallel queries across terabytes of data using not only pure SQL but also familiar PyData packages such as scikit-learn and nltk. This approach can also be used with PL/R to make use of a wide variety of R packages. We look at examples on Postgres compatible systems such as the Greenplum Database and on Hadoop through Pivotal HAWQ. We will also introduce MADlib, Pivotal’s open source library for scalable in-database machine learning, which uses Python to glue SQL queries to low level C++ functions and is also usable through the PyMADlib package.

Apache Pig: Making data transformation easy

Apache Pig: Making data transformation easyVictor Sanchez Anguix This is part of an introductory course to Big Data Tools for Artificial Intelligence. These slides introduce students to the use of Apache Pig as an ETL tool over Hadoop.

Pig Tutorial | Twitter Case Study | Apache Pig Script and Commands | Edureka

Pig Tutorial | Twitter Case Study | Apache Pig Script and Commands | EdurekaEdureka! This Edureka Pig Tutorial ( Pig Tutorial Blog Series: https://goo.gl/KPE94k ) will help you understand the concepts of Apache Pig in depth.

Check our complete Hadoop playlist here: https://goo.gl/ExJdZs

Below are the topics covered in this Pig Tutorial:

1) Entry of Apache Pig

2) Pig vs MapReduce

3) Twitter Case Study on Apache Pig

4) Apache Pig Architecture

5) Pig Components

6) Pig Data Model

7) Running Pig Commands and Pig Scripts (Log Analysis)

Apache Pig for Data Scientists

Apache Pig for Data ScientistsDataWorks Summit This document discusses Apache Pig and its role in data science. It begins with an introduction to Pig, describing it as a high-level scripting language for operating on large datasets in Hadoop. It transforms data operations into MapReduce/Tez jobs and optimizes the number of jobs required. The document then covers using Pig for understanding data through statistics and sampling, machine learning by sampling large datasets and applying models with UDFs, and natural language processing on large unstructured data.

Big Data Step-by-Step: Using R & Hadoop (with RHadoop's rmr package)

Big Data Step-by-Step: Using R & Hadoop (with RHadoop's rmr package)Jeffrey Breen The document describes a Big Data workshop held on March 10, 2012 at the Microsoft New England Research & Development Center in Cambridge, MA. The workshop focused on using R and Hadoop, with an emphasis on RHadoop's rmr package. The document provides an introduction to using R with Hadoop and discusses several R packages for working with Hadoop, including RHIPE, rmr, rhdfs, and rhbase. Code examples are presented demonstrating how to calculate average departure delays by airline and month from an airline on-time performance dataset using different approaches, including Hadoop streaming, hive, RHIPE and rmr.

Hive vs Pig for HadoopSourceCodeReading

Hive vs Pig for HadoopSourceCodeReadingMitsuharu Hamba The document provides information about Hive and Pig, two frameworks for analyzing large datasets using Hadoop. It compares Hive and Pig, noting that Hive uses a SQL-like language called HiveQL to manipulate data, while Pig uses Pig Latin scripts and operates on data flows. The document also includes code examples demonstrating how to use basic operations in Hive and Pig like loading data, performing word counts, joins, and outer joins on sample datasets.

Hadoop interview question

Hadoop interview questionpappupassindia Hadoop is an open source framework for distributed storage and processing of vast amounts of data across clusters of computers. It uses a master-slave architecture with a single JobTracker master and multiple TaskTracker slaves. The JobTracker schedules tasks like map and reduce jobs on TaskTrackers, which each run task instances in separate JVMs. It monitors task progress and reschedules failed tasks. Hadoop uses MapReduce programming model where the input is split and mapped in parallel, then outputs are shuffled, sorted, and reduced to form the final results.

Word Embedding for Nearest Words

Word Embedding for Nearest WordsEkaKurniawan40 This document summarizes Bukalapak's process for generating word embeddings from product names to find similar words. It involved preprocessing over 300 million product names, training a word2vec model on the text, exporting the model for serving, and deploying model server and client applications to retrieve similar words through an API. The preprocessing used tokenization and filtering to extract words from names, while the model was trained using skip-gram architecture on GPUs over 1.5 months to produce a 500MB embedding.

Hadoop, Hbase and Hive- Bay area Hadoop User Group

Hadoop, Hbase and Hive- Bay area Hadoop User GroupHadoop User Group This document summarizes Facebook's use cases and architecture for integrating Apache Hive and HBase. It discusses loading data from Hive into HBase tables using INSERT statements, querying HBase tables from Hive using SELECT statements, and maintaining low latency access to dimension tables stored in HBase while performing analytics on fact data stored in Hive. The architecture involves writing a storage handler and SerDe to map between the two systems and executing Hive queries by generating MapReduce jobs that read from or write to HBase.

Hadoop 31-frequently-asked-interview-questions

By Asad Masood Qazi. The document contains 31 questions and answers related to Hadoop concepts. It covers common input formats in Hadoop, the differences between TextInputFormat and KeyValueInputFormat, what InputSplits are and how they are created, how partitioning, shuffling and sorting occur after the map phase, what a combiner is, the functions of JobTracker and TaskTracker, how speculative execution works, using the distributed cache and counters, setting the number of mappers and reducers, writing custom partitioners, debugging Hadoop jobs, and failure handling for production Hadoop jobs.

GPU Accelerated Machine Learning

By Sri Ambati. Deep learning algorithms have benefited greatly from the recent performance gains of GPUs. However, it has been unclear whether GPUs can speed up machine learning algorithms such as generalized linear modeling, random forests, gradient boosting machines, and clustering. H2O.ai, the leading open source AI company, is bringing the best-of-breed data science and machine learning algorithms to GPUs.

We introduce H2O4GPU, a fully featured machine learning library that is optimized for GPUs, with a robust Python API that is a drop-in replacement for scikit-learn. We'll demonstrate benchmarks for the most common algorithms relevant to enterprise AI and showcase performance gains compared to running on CPUs.

Jon’s Bio:

https://umdphysics.umd.edu/people/faculty/current/item/337-jcm.html


Hadoop for Java Professionals

By Edureka! With the surge in Big Data, organizations have begun to implement Big Data technologies as part of their systems. This has led to a huge need to update existing skill sets with Hadoop. Java professionals are one group that needs to add Hadoop skills.

Viewers also liked (12)

BioPig for scalable analysis of big sequencing data

By Zhong Wang. This document introduces BioPig, a Hadoop-based analytic toolkit for large-scale genomic sequence analysis. BioPig aims to provide a flexible, high-level, and scalable platform to enable domain experts to build custom analysis pipelines. It leverages Hadoop's data parallelism to speed up bioinformatics tasks like k-mer counting and assembly. The document demonstrates how BioPig can analyze over 1 terabase of metagenomic data using just 7 lines of code, much more simply than alternative MPI-based solutions. While challenges remain around optimization and integration, BioPig shows promise for scalable genomic analytics on very large datasets.

Poster Hadoop summit 2011: pig embedding in scripting languages

By Julien Le Dem. Pig Scripting allows Pig to be used for iterative algorithms and user-defined functions by embedding Pig within a scripting language. Transitive closure, an iterative process, can then be computed in a single file, using Python functions as UDFs and Python variables in Pig scripts, rather than requiring seven files and Java UDFs as plain Pig would.

Data Eng Conf NY Nov 2016 Parquet Arrow

By Julien Le Dem.

- Arrow and Parquet are open source projects focused on column-oriented data formats for efficient in-memory (Arrow) and on-disk (Parquet) analytics.

- They allow for interoperability across systems by eliminating the overhead of data serialization and enabling common data representations.

- Column-oriented formats improve performance by reducing storage needs, enabling projection of only needed columns, and better utilization of CPU/memory through cache locality and vectorized processing.

Reducing the dimensionality of data with neural networks

By Hakky St.

(1) The document describes using neural networks called autoencoders to perform dimensionality reduction on data in a nonlinear way. Autoencoders use an encoder network to transform high-dimensional data into a low-dimensional code, and a decoder network to recover the data from the code.

(2) The autoencoders are trained to minimize the discrepancy between the original and reconstructed data. Experiments on image and face datasets showed autoencoders outperforming principal components analysis at reconstructing the original data from the low-dimensional code.

(3) Pretraining the autoencoder layers using restricted Boltzmann machines helps optimize the many weights in deep autoencoders and scale the approach to large datasets.

Strata NY 2016: The future of column-oriented data processing with Arrow and ...

By Julien Le Dem. In pursuit of speed, big data is evolving toward columnar execution. The solid foundation laid by Arrow and Parquet for a shared columnar representation across the ecosystem promises a great future. Julien Le Dem and Jacques Nadeau discuss the future of columnar and the hardware trends it takes advantage of, like RDMA, SSDs, and nonvolatile memory.

05 k-means clustering

By Subhas Kumar Ghosh. K-Means clustering is an algorithm that partitions data points into k clusters based on their distances from initial cluster center points. It is commonly used for classification applications on large datasets and can be parallelized by duplicating cluster centers and processing each data point independently. Mahout provides implementations of K-Means clustering and other algorithms that can operate on distributed datasets stored in Hadoop SequenceFiles.

Low Latency Execution For Apache Spark

By Jen Aman. This document describes Drizzle, a low latency execution engine for Apache Spark. It addresses the high overheads of Spark's centralized scheduling model by decoupling execution from scheduling through batch scheduling and pre-scheduling of shuffles. Microbenchmarks show Drizzle achieves millisecond latency for iterative workloads, compared to hundreds of milliseconds for Spark. End-to-end experiments show Drizzle improves latency for streaming and machine learning workloads like logistic regression. The authors are working on automatic batch tuning and an open source release of Drizzle.

Parquet Strata/Hadoop World, New York 2013

By Julien Le Dem. Parquet is a columnar storage format for Hadoop data. It was developed collaboratively by Twitter and Cloudera to address the need for efficient analytics on large datasets. Parquet provides more efficient compression and I/O compared to row-based formats by only reading and decompressing the columns needed by a query. It has been adopted by many companies for analytics workloads involving terabytes to petabytes of data. Parquet is language-independent and supports integration with frameworks like Hive, Pig, and Impala. It provides significant performance improvements and storage savings compared to traditional row-based formats.

Efficient Data Storage for Analytics with Apache Parquet 2.0

By Cloudera, Inc. Apache Parquet is an open-source columnar storage format for efficient data storage and analytics. It provides efficient compression and encoding techniques that enable fast scans and queries of large datasets. Parquet 2.0 improves on these efficiencies through enhancements like delta encoding, binary packing designed for CPU efficiency, and predicate pushdown using statistics. Benchmark results show Parquet provides much better compression and query performance than row-oriented formats on big data workloads. The project is developed as an open-source community with contributions from many organizations.

If you have your own Columnar format, stop now and use Parquet 😛

By Julien Le Dem. Lightning talk presented at HPTS 2015: http://hpts.ws/

Apache Parquet is the de facto standard columnar storage for big data. Open source and proprietary SQL engines already integrate with it, as their users don’t want to load and duplicate their data in every tool. Users want an open, interoperable, efficient format to experiment with the many options they have. The format is defined by the open source community, integrating feedback from many teams working on query engines (including but not limited to Impala, Drill, Hawq, SparkSQL, Presto, and Hive) or on infrastructure at scale (Twitter, Netflix, Stripe, Criteo, ...). Building on its initial success, the Parquet community is defining new features for the next iteration of the format, for example improved metadata layout, type system completeness, and mergeable statistics used for planning.

Choosing an HDFS data storage format- Avro vs. Parquet and more - StampedeCon...

By StampedeCon. At the StampedeCon 2015 Big Data Conference: Picking your distribution and platform is just the first decision of many you need to make in order to create a successful data ecosystem. In addition to things like replication factor and node configuration, the choice of file format can have a profound impact on cluster performance. Each of the data formats has different strengths and weaknesses, depending on how you want to store and retrieve your data. For instance, we have observed performance differences on the order of 25x between Parquet and plain text files for certain workloads. However, it isn’t the case that one is always better than the others.

Pig and Python to Process Big Data

By Shawn Hermans. Shawn Hermans gave a presentation on using Pig and Python for big data analysis. He began with an introduction and background about himself. The presentation covered what big data is, challenges in working with large datasets, and how tools like MapReduce, Pig and Python can help address these challenges. As examples, he demonstrated using Pig to analyze US Census data and propagate satellite positions from Two Line Element sets. He also showed how to extend Pig with Python user defined functions.


Similar to Embedding Pig in scripting languages (20)

BRV CTO Summit Deep Learning Talk

By Doug Chang. This document outlines an agenda for a CTO summit on machine learning and deep learning topics. It includes discussions on CNN and RNN architectures, word embeddings, entity embeddings, reinforcement learning, and tips for training deep neural networks. Specific applications mentioned include self-driving cars, image captioning, language modeling, and modeling store sales. It also includes summaries of papers and links to code examples.

Need to make a horizontal change across 100+ microservices? No worries, Sheph...

By Aori Nevo, PhD. In this talk, you’ll be introduced to an innovative, powerful, flexible, and easy-to-use tool developed by the folks at NerdWallet that simplifies some of the complexities associated with making horizontal changes in service-oriented systems via automation.

Incredible Machine with Pipelines and Generators

By dantleech. The document discusses using generators and pipelines in PHP to build a performance testing tool called J-Meter. It begins by explaining generators in PHP and how they allow yielding control and passing values between functions. This enables building asynchronous pipelines where stages can be generators. Various PHP frameworks and patterns for asynchronous programming with generators are mentioned. The document concludes by outlining how generators and pipelines could be used to build the major components of a J-Meter-like performance testing tool in PHP.

Go - techniques for writing high performance Go applications

By ss63261. High Performance Go Presentation - techniques for writing high performance Go applications.

Pig

By Vetri V. Pig is a data flow language and execution environment for exploring very large datasets on Hadoop clusters. Pig scripts are written using a scripting language that allows for rapid prototyping without compilation. Pig provides high-level operations like FILTER, FOREACH, GROUP, and JOIN that allow users to analyze data without writing MapReduce programs directly. The core abstraction in Pig is the relation, which is analogous to a table. Relations can be nested using complex data types like tuples and bags. Users can extend Pig's functionality using User Defined Functions. Key operations in Pig like GROUP and JOIN introduce "map-reduce barriers" that require a MapReduce job to be executed.

High Quality Symfony Bundles tutorial - Dutch PHP Conference 2014

By Matthias Noback. Slides for my "High Quality Symfony Bundles" tutorial at the Dutch PHP Conference 2014 (http://phpconference.nl).

Streaming Data in R

By Rory Winston. This document discusses concurrency in R and the need for multithreading capabilities. It presents a motivating example of using shared memory via the bigmemoRy package to decouple data processing across multiple R sessions for real-time streaming data analysis. While R itself is single-threaded, packages like bigmemoRy allow sharing data between processes through inter-process communication to enable concurrent processing of streaming data in R.

Introduction to Google App Engine with Python

By Brian Lyttle. Google App Engine is a cloud development platform that allows users to build and host web applications on Google's infrastructure. It provides automatic scaling for applications and manages all server maintenance. Development is done locally in Python and code is pushed to the cloud. The platform provides data storage, user authentication, URL fetching, task queues, and other services via APIs. While initially limited to Python and Java, it now supports other languages as well. Usage is free for small applications under a monthly quota, and priced based on usage for larger applications.

MobileConf 2021 Slides: Let's build macOS CLI Utilities using Swift

By Diego Freniche Brito. How to write CLI apps for macOS using Swift, which packages to use, common challenges to overcome, and how to structure your CLI app code.

Concurrent Programming OpenMP @ Distributed System Discussion

By CherryBerry2. This PowerPoint presentation discusses OpenMP, a programming interface that allows for parallel programming on shared memory architectures. It covers the basic architecture of OpenMP, its core elements like directives and runtime routines, advantages like portability, and disadvantages like potential synchronization bugs. Examples are provided of using OpenMP directives to parallelize a simple "Hello World" program across multiple threads. Fine-grained and coarse-grained parallelism are also defined.

Easy deployment & management of cloud apps

By David Cunningham. The document discusses tools for deploying and managing cloud applications including Terraform, Packer, and Jsonnet. Terraform allows declarative configuration of infrastructure resources, Packer builds machine images, and Jsonnet is a configuration language designed to generate JSON or YAML files from reusable templates. The document demonstrates how to use these tools together to deploy a sample application with load balancing and auto-scaling on Google Cloud Platform. It also proposes ways to further abstract configurations and synchronize application and infrastructure details for improved usability.

Who pulls the strings?

By Ronny. This document summarizes integrating the OpenNMS network monitoring platform with modern configuration management tools like Puppet. It discusses using Puppet to provision and automatically configure nodes in OpenNMS from Puppet's configuration data. The authors provide code for pulling node data from Puppet's REST API and generating an XML file for OpenNMS to import the nodes and their configuration. They also discuss opportunities to further improve the integration by developing a Java object model for Puppet's YAML output and filtering imports based on node attributes.

Python1

By AllsoftSolutions. This document provides an overview of learning Python in three hours. It covers installing and running Python, basic data types like integers, floats and strings. It also discusses sequence types like lists, tuples and strings, including accessing elements, slicing, and using operators like + and *. The document explains basic syntax like comments, indentation and naming conventions. It provides examples of simple functions and scripts.

High Performance NodeJS

By Dicoding. Harimurti Prasetio (CTO NoLimit) - High Performance Node.js

Dicoding Space - Bandung Developer Day 3

https://www.dicoding.com/events/76

2nd puc computer science chapter 8 function overloading

By Aahwini Esware gowda. 2nd puc computer science chapter 8: function overloading, types of function overloading, syntax of function overloading, examples of function overloading, inline functions, friend functions.

Pemrograman Python untuk Pemula

By Oon Arfiandwi. This document provides an introduction to programming with Python for beginners. It covers basic Python concepts like variables, data types, operators, conditional statements, functions, loops, strings and lists. It also demonstrates how to build simple web applications using Google App Engine and Python, including templating with Jinja2, storing data in the Datastore and handling web forms. The goal is to teach the fundamentals of Python programming and get started with cloud development on Google Cloud Platform.

The GO Language : From Beginners to Gophers

By I.I.S. G. Vallauri - Fossano. These are the slides for a seminar giving a basic overview of the Go language, by Alessandro Sanino.

They were used in a lesson at the University of Turin (Computer Science Department) on 11-06-2018.

Puppet quick start guide

By Suhan Dharmasuriya. The document provides an overview and quick start guide for learning Puppet. It discusses what Puppet is, how to install a Puppet master and agent, Puppet modules and templates, and looping elements in templates. The guide outlines three sessions: 1) configuring a master and agent, 2) using modules and templates, and 3) looping in templates. It provides configuration examples and explains how to generate files on the agent from templates using Puppet runs.


More from Julien Le Dem (18)

Data and AI summit: data pipelines observability with open lineage

By Julien Le Dem. Presentation of data lineage and observability with OpenLineage at the "Data and AI summit" (formerly Spark summit), with a focus on the Apache Spark integration for OpenLineage.

Data pipelines observability: OpenLineage & Marquez

By Julien Le Dem. This document discusses OpenLineage and Marquez, which aim to provide standardized metadata and data lineage collection for data pipelines. OpenLineage defines an open standard for collecting metadata as data moves through pipelines, similar to metadata collected by EXIF for images. Marquez is an open source implementation of this standard, which can collect metadata from various data tools and store it in a graph database for querying lineage and understanding dependencies. This collected metadata helps with tasks like troubleshooting, impact analysis, and understanding how data flows through complex pipelines over time.

Open core summit: Observability for data pipelines with OpenLineage

By Julien Le Dem. This document discusses OpenLineage and the Marquez project for collecting metadata and data lineage information from data pipelines. It describes how OpenLineage defines a standard model and protocol for instrumentation to collect metadata on jobs, datasets, and runs in a consistent way. This metadata can then provide context on the data source, schema, owners, usage, and changes. The document outlines how Marquez implements the OpenLineage standard by defining entities, relationships, and facets to store this metadata and enable use cases like data governance, discovery, and debugging. It also positions Marquez as a centralized but modular framework to integrate various data platforms and extensions like Datakin's lineage analysis tools.

Data platform architecture principles - ieee infrastructure 2020

By Julien Le Dem. This document discusses principles for building a healthy data platform, including:

1. Establishing explicit contracts between teams to define dependencies and service level agreements.

2. Abstracting the data platform into services for ingesting, storing, and processing data in motion and at rest.

3. Enabling observability of data pipelines through metadata collection and integration with tools like Marquez to provide lineage, availability, and change management visibility.

Data lineage and observability with Marquez - subsurface 2020

By Julien Le Dem. This document discusses Marquez, an open source metadata management system. It provides an overview of Marquez and how it can be used to track metadata in data pipelines. Specifically:

- Marquez collects and stores metadata about data sources, datasets, jobs, and runs to provide data lineage and observability.

- It has a modular framework to support data governance, data lineage, and data discovery. Metadata can be collected via REST APIs or language SDKs.

- Marquez integrates with Apache Airflow to collect task-level metadata, dependencies between DAGs, and link tasks to code versions. This enables understanding of operational dependencies and troubleshooting.

- The Marquez community aims to build an open

Strata NY 2018: The deconstructed database

By Julien Le Dem. The document discusses the evolution of big data systems from flat files in Hadoop to deconstructed databases. Originally, Hadoop used flat files with no schema and tied data storage to execution. This was flexible but inefficient. SQL databases provided schemas and separated storage from execution but were inflexible. Modern systems decompose databases into independent components like storage, processing, and machine learning that can be mixed and matched. Standards are emerging for columnar storage, querying, schemas, and data exchange. Future improvements include better interoperability, data abstractions, and governance.

From flat files to deconstructed database

By Julien Le Dem. From flat files to deconstructed databases:

- Originally, Hadoop used flat files and MapReduce, which was flexible but inefficient for queries.

- The database world used SQL and relational models with optimizations but was inflexible.

- Now components like storage, processing, and machine learning can be mixed and matched more efficiently with standards like Apache Calcite, Parquet, Avro and Arrow.

Strata NY 2017 Parquet Arrow roadmap

By Julien Le Dem. This document summarizes Apache Parquet and Apache Arrow, two open source projects for columnar data formats. It discusses how Parquet provides an on-disk columnar format for storage while Arrow provides an in-memory columnar format. The document outlines how Arrow builds on the success of Parquet by providing a common in-memory format that avoids serialization overhead and allows systems to share functionality. It provides examples of performance gains from the vertical integration of Parquet and Arrow.

The columnar roadmap: Apache Parquet and Apache Arrow

By Julien Le Dem. This document discusses Apache Parquet and Apache Arrow, open source projects for columnar data formats. Parquet is an on-disk columnar format that optimizes I/O performance through compression and projection pushdown. Arrow is an in-memory columnar format that maximizes CPU efficiency through vectorized processing and SIMD. It aims to serve as a standard in-memory format between systems. The document outlines how Arrow builds on Parquet's success and provides benefits like reduced serialization overhead and ability to share functionality through its ecosystem. It also describes how Parquet and Arrow representations are integrated through techniques like vectorized reading and predicate pushdown.

Improving Python and Spark Performance and Interoperability with Apache Arrow

By Julien Le Dem. This document discusses improving Python and Spark performance and interoperability with Apache Arrow. It begins with an overview of current limitations of PySpark UDFs, such as inefficient data movement and scalar computation. It then introduces Apache Arrow, an open source in-memory columnar data format, and how it can help by allowing more efficient data sharing and vectorized computation. The document shows how Arrow improved PySpark UDF performance by 53x through vectorization and reduced serialization. It outlines future plans to further optimize UDFs and integration with Spark and other projects.

Mule soft mar 2017 Parquet Arrow

By Julien Le Dem. This document discusses the future of column-oriented data processing with Apache Arrow and Apache Parquet. Arrow provides an open standard for in-memory columnar data, while Parquet provides an open standard for on-disk columnar data storage. Together they provide interoperability across systems and high performance by avoiding data copying and format conversions. The document outlines the goals and benefits of Arrow and Parquet, how they improve CPU and I/O efficiency, and examples of performance gains from integrating systems like Spark and Pandas with Arrow.

Strata London 2016: The future of column oriented data processing with Arrow ...

By Julien Le Dem. Talk given at Strata London 2016: The future of column oriented data processing with Arrow and Parquet

Sql on everything with drill

By Julien Le Dem. It’s no longer a world of just relational databases. Companies are increasingly adopting specialized datastores such as Hadoop, HBase, MongoDB, Elasticsearch, Solr and S3. Apache Drill, an open source, in-memory, columnar SQL execution engine, enables interactive SQL queries against more datastores.

How to use Parquet as a basis for ETL and analytics

By Julien Le Dem. Parquet is a columnar format designed to be extremely efficient and interoperable across the Hadoop ecosystem. Its integration in most of the Hadoop processing frameworks (Impala, Hive, Pig, Cascading, Crunch, Scalding, Spark, …) and serialization models (Thrift, Avro, Protocol Buffers, …) makes it easy to use in existing ETL and processing pipelines, while giving flexibility of choice on the query engine (whether in Java or C++). In this talk, we will describe how one can use Parquet with a wide variety of data analysis tools like Spark, Impala, Pig, Hive, and Cascading to create powerful, efficient data analysis pipelines. Data management is simplified as the format is self-describing and handles schema evolution. Support for nested structures enables more natural modeling of data for Hadoop compared to flat representations that create the need for often costly joins.

Efficient Data Storage for Analytics with Parquet 2.0 - Hadoop Summit 2014

By Julien Le Dem. Apache Parquet is an open-source columnar storage format for efficient data storage and analytics. It provides efficient compression and encoding techniques that enable fast scans and queries of large datasets. Parquet 2.0 improves on these efficiencies through techniques like delta encoding, dictionary encoding, run-length encoding and binary packing designed for CPU and cache optimizations. Benchmark results show Parquet provides much better compression and faster query performance than other formats like text, Avro and RCFile. The project is developed as an open source community with contributions from many organizations.

Parquet Hadoop Summit 2013

By Julien Le Dem. Parquet is a columnar storage format for Hadoop data. It was developed by Twitter and Cloudera to optimize storage and querying of large datasets. Parquet provides more efficient compression and I/O compared to traditional row-based formats by storing data by column. Early results show a 28% reduction in storage size and up to a 114% improvement in query performance versus the original Thrift format. Parquet supports complex nested schemas and can be used with Hadoop tools like Hive, Pig, and Impala.

Parquet Twitter Seattle open house

By Julien Le Dem. This document discusses Parquet, an open-source columnar file format for Hadoop. It was created at Twitter to optimize their analytics infrastructure, which includes several large Hadoop clusters processing data from their 200M+ users. Parquet aims to improve on existing storage formats by organizing data column-wise for better compression and scanning capabilities. It uses a row group structure and supports efficient reads of individual columns. Initial results at Twitter found a 28% space savings over existing formats and scan performance improvements. The project is open source and aims to continue optimizing column storage and enabling new execution engines for Hadoop.

Parquet overview

By Julien Le Dem. Parquet is a column-oriented storage format for Hadoop that supports efficient compression and encoding techniques. It uses a row group structure to store data in columns in a compressed and encoded column chunk format. The schema and metadata are stored in the file footer to allow for efficient reads and scans of selected columns. The format is designed to be extensible through pluggable components for schema conversion, record materialization, and encodings.

Recently uploaded (20)

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In France

By chb3. The latest updates on Manifest's pre-seed stage progress.

AI and Data Privacy in 2025: Global Trends

By InData Labs. In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding AI data privacy is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager APIUiPathCommunity Join this UiPath Community Berlin meetup to explore the Orchestrator API, Swagger interface, and the Test Manager API. Learn how to leverage these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

Perfect for developers, testers, and automation enthusiasts!

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value- driven proprietary service offering here.

Generative Artificial Intelligence (GenAI) in Business

Generative Artificial Intelligence (GenAI) in BusinessDr. Tathagat Varma My talk for the Indian School of Business (ISB) Emerging Leaders Program Cohort 9. In this talk, I discussed key issues around adoption of GenAI in business - benefits, opportunities and limitations. I also discussed how my research on Theory of Cognitive Chasms helps address some of these issues

IEDM 2024 Tutorial2_Advances in CMOS Technologies and Future Directions for C...

IEDM 2024 Tutorial2_Advances in CMOS Technologies and Future Directions for C...organizerofv IEDM 2024 Tutorial2

How analogue intelligence complements AI

How analogue intelligence complements AIPaul Rowe

Artificial Intelligence is providing benefits in many areas of work within the heritage sector, from image analysis, to ideas generation, and new research tools. However, it is more critical than ever for people, with analogue intelligence, to ensure the integrity and ethical use of AI. Including real people can improve the use of AI by identifying potential biases, cross-checking results, refining workflows, and providing contextual relevance to AI-driven results.

News about the impact of AI often paints a rosy picture. In practice, there are many potential pitfalls. This presentation discusses these issues and looks at the role of analogue intelligence and analogue interfaces in providing the best results to our audiences. How do we deal with factually incorrect results? How do we get content generated that better reflects the diversity of our communities? What roles are there for physical, in-person experiences in the digital world?

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

Special Meetup Edition - TDX Bengaluru Meetup #52.pptxshyamraj55 We’re bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

Big Data Analytics Quick Research Guide by Arthur Morgan

Big Data Analytics Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...Noah Loul Artificial intelligence is changing how businesses operate. Companies are using AI agents to automate tasks, reduce time spent on repetitive work, and focus more on high-value activities. Noah Loul, an AI strategist and entrepreneur, has helped dozens of companies streamline their operations using smart automation. He believes AI agents aren't just tools—they're workers that take on repeatable tasks so your human team can focus on what matters. If you want to reduce time waste and increase output, AI agents are the next move.

Heap, Types of Heap, Insertion and Deletion

Heap, Types of Heap, Insertion and DeletionJaydeep Kale This pdf will explain what is heap, its type, insertion and deletion in heap and Heap sort

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdf

The Evolution of Meme Coins A New Era for Digital Currency ppt.pdfAbi john Analyze the growth of meme coins from mere online jokes to potential assets in the digital economy. Explore the community, culture, and utility as they elevate themselves to a new era in cryptocurrency.

Quantum Computing Quick Research Guide by Arthur Morgan

Quantum Computing Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Web and Graphics Designing Training in Rajpura

Web and Graphics Designing Training in RajpuraErginous Technology Web & Graphics Designing Training at Erginous Technologies in Rajpura offers practical, hands-on learning for students, graduates, and professionals aiming for a creative career. The 6-week and 6-month industrial training programs blend creativity with technical skills to prepare you for real-world opportunities in design.

The course covers Graphic Designing tools like Photoshop, Illustrator, and CorelDRAW, along with logo, banner, and branding design. In Web Designing, you’ll learn HTML5, CSS3, JavaScript basics, responsive design, Bootstrap, Figma, and Adobe XD.

Erginous emphasizes 100% practical training, live projects, portfolio building, expert guidance, certification, and placement support. Graduates can explore roles like Web Designer, Graphic Designer, UI/UX Designer, or Freelancer.

For more info, visit erginous.co.in , message us on Instagram at erginoustechnologies, or call directly at +91-89684-38190 . Start your journey toward a creative and successful design career today!

TrsLabs - Fintech Product & Business Consulting

TrsLabs - Fintech Product & Business ConsultingTrs Labs Hybrid Growth Mandate Model with TrsLabs

Strategic Investments, Inorganic Growth, Business Model Pivoting are critical activities that business don't do/change everyday. In cases like this, it may benefit your business to choose a temporary external consultant.

An unbiased plan driven by clearcut deliverables, market dynamics and without the influence of your internal office equations empower business leaders to make right choices.

Getting things done within a budget within a timeframe is key to Growing Business - No matter whether you are a start-up or a big company

Talk to us & Unlock the competitive advantage

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...TrustArc Most consumers believe they’re making informed decisions about their personal data—adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, taking meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent yet fall short when it comes to consistency, accountability and transparency.

This session will explore the research findings from TrustArc’s Privacy Pulse Survey, examining consumer attitudes toward personal data collection and practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Mastering Advance Window Functions in SQL.pdf

Mastering Advance Window Functions in SQL.pdfSpiral Mantra How well do you really know SQL?📊

.

.

If PARTITION BY and ROW_NUMBER() sound familiar but still confuse you, it’s time to upgrade your knowledge

And you can schedule a 1:1 call with our industry experts: https://spiralmantra.com/contact-us/ or drop us a mail at [email protected]

Embedding Pig in scripting languages

- 1. Embedding Pig in scripting languages: What happens when you feed a Pig to a Python? Julien Le Dem – Principal Engineer - Content Platforms at Yahoo! Pig [email protected] @julienledem

- 2. Disclaimer: No animals were hurt in the making of this presentation. "I’m cute." "I’m hungry." Picture credits: OZinOH: http://www.flickr.com/photos/75905404@N00/5421543577/ Stephen & Claire Farnsworth: http://www.flickr.com/photos/the_farnsworths/4720850597/

- 3. What for? Simplifying the implementation of iterative algorithms: loop and exit criteria; simpler User Defined Functions; easier parameter passing.

- 4. Before: the implementation has the following artifacts:

- 5. Pig Script(s)

      warshall_n_minus_1 = LOAD '$workDir/warshall_0' USING BinStorage AS (id1:chararray, id2:chararray, status:chararray);
      to_join_n_minus_1 = LOAD '$workDir/to_join_0' USING BinStorage AS (id1:chararray, id2:chararray, status:chararray);
      joined = COGROUP to_join_n_minus_1 BY id2, warshall_n_minus_1 BY id1;
      followed = FOREACH joined GENERATE FLATTEN(followRel(to_join_n_minus_1,warshall_n_minus_1));
      followed_byid = GROUP followed BY id1;
      warshall_n = FOREACH followed_byid GENERATE group, FLATTEN(coalesceLine(followed.(id2, status)));
      to_join_n = FILTER warshall_n BY $2 == 'notfollowed' AND $0!=$1;
      STORE warshall_n INTO '$workDir/warshall_1' USING BinStorage;
      STORE to_join_n INTO '$workDir/to_join_1' USING BinStorage;

- 6. External loop

      #!/usr/bin/python
      import os
      num_iter=int(10)
      for i in range(num_iter):
          os.system('java -jar ./lib/pig.jar -x local plsi_singleiteration.pig')
          os.rename('output_results/p_z_u','output_results/p_z_u.'+str(i))
          os.system('cp output_results/p_z_u.nxt output_results/p_z_u')
          os.rename('output_results/p_z_u.nxt','output_results/p_z_u.'+str(i+1))
          os.rename('output_results/p_s_z','output_results/p_s_z.'+str(i))
          os.system('cp output_results/p_s_z.nxt output_results/p_s_z')
          os.rename('output_results/p_s_z.nxt','output_results/p_s_z.'+str(i+1))

- 7. Java UDF(s)

- 9. So… What happens? Credits: Mango Atchar: http://www.flickr.com/photos/mangoatchar/362439607/

- 10. After: one script (to rule them all): main program; UDFs as script functions; embedded Pig statements. All the algorithm in one place.

- 11. References: It uses JVM implementations of scripting languages (Jython, Rhino). This is a joint effort; see the following Jiras: in Pig 0.8: PIG-928 Python UDFs; in Pig 0.9: PIG-1479 embedding, PIG-1794 JavaScript support. Doc: http://pig.apache.org/docs/

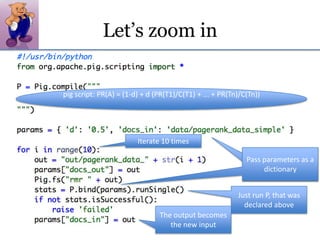

- 12. Examples: 1) Simple example: fixed loop iteration. 2) Adding convergence criteria and accessing intermediary output. 3) More advanced example with UDFs.

- 13. 1) A Simple Example. PageRank: a system of linear equations (as many as there are pages on the web, yeah, a lot). It can be approximated iteratively: compute the new page rank based on the page ranks of the previous iteration. Start with some value. Ref: http://en.wikipedia.org/wiki/PageRank

- 14. Or more visually: each page sends a fraction of its PageRank to the pages it links to, inversely proportional to its number of links.
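
The iteration described in the two slides above can be sketched in plain Python, without Pig, to make the update rule concrete. This is a minimal illustration under stated assumptions, not the code from the presentation: the function name `pagerank`, the `links` dictionary, the three-page example graph, and the damping factor of 0.85 (the conventional value from the Wikipedia article referenced above) are all hypothetical choices made here.

```python
def pagerank(links, damping=0.85, iterations=10):
    """Approximate PageRank with a fixed number of iterations.

    links maps each page to the list of pages it links to.
    damping=0.85 is an assumed, conventional value; it does not come from the slides.
    """
    # Collect every page that appears as a source or as a link target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)

    # "Start with some value": here, a uniform rank for every page.
    ranks = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page receives a base amount regardless of incoming links.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page, targets in links.items():
            if not targets:
                # Pages without outgoing links send nothing in this sketch,
                # so their rank is not redistributed.
                continue
            # Each page splits its current rank evenly among its outgoing
            # links, so the share is inversely proportional to their number.
            share = damping * ranks[page] / len(targets)
            for target in targets:
                new_ranks[target] += share
        # The ranks of this iteration feed the next one.
        ranks = new_ranks
    return ranks


# Hypothetical three-page graph: A links to B and C, B links to C, C links to A.
example_links = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(example_links)
for page in sorted(ranks):
    print(page, round(ranks[page], 4))
```

The fixed `iterations` count corresponds to the first example in the list above (fixed loop iteration); a convergence test on the difference between `ranks` and `new_ranks` would replace it in the second.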