Presentation: SQL Server to Oracle, a Database Migration Roadmap

Download & Share Technology Presentations http://goo.gl/k80oY0 Student Guide & Best http://goo.gl/6OkI77

Recommended

Introduction to Microsoft Power BI

By CCG. Business intelligence dashboards and data visualizations serve as a launching point for better business decision making. Learn how you can leverage Power BI to easily build reports and dashboards with interactive visualizations.

Microsoft SQL Server - SQL Server Migrations Presentation

By Microsoft Private Cloud. The document discusses SQL Server migrations from Oracle databases. It highlights top reasons for customers migrating to SQL Server, including lower total cost of ownership, improved performance, and increased developer productivity. It also outlines concerns about migrations and introduces the SQL Server Migration Assistant (SSMA) tool, which automates components of database migrations to SQL Server.

Presentation upgrade, migrate & consolidate to oracle database 12c &...

By solarisyougood. This document provides an overview of upgrading, migrating, and consolidating to Oracle Database 12c and 11gR2. It discusses new features in Oracle 12c such as automatic data optimization, extreme availability enhancements like Active Data Guard Far Sync, and security features. The document also covers preparing for an upgrade, migration cases, fallback strategies, performance management, and multitenant architecture concepts.

Oracle Enterprise Manager

By Bob Rhubart. Oracle Enterprise Manager (EM) provides complete lifecycle management for the cloud, from automated cloud setup to self-service delivery to cloud operations. In this session you’ll learn how to take control of your cloud infrastructure with EM features including Consolidation Planning and Self-Service provisioning with Metering and Chargeback. Come hear how Oracle is expanding its management capabilities into the cloud!

(As presented by Adeesh Fulay at Oracle Technology Network Architect Day in Chicago, October 24, 2011.)

An Enterprise Architect's View of MongoDB

By MongoDB. This document discusses how MongoDB can help enterprises meet modern data and application requirements. It outlines the many new technologies and demands placing pressure on enterprises, including big data, mobile, cloud computing, and more. Traditional databases struggle to meet these new demands due to limitations like rigid schemas and difficulty scaling. MongoDB provides capabilities like dynamic schemas, high performance at scale through horizontal scaling, and low total cost of ownership. The document examines how MongoDB has been successfully used by enterprises for use cases like operational data stores and as an enterprise data service to break down silos.

Power BI Interview Questions and Answers | Power BI Certification | Power BI ...

By Edureka! (Power BI Training: https://www.edureka.co/power-bi-training)

This Edureka "PowerBI Interview Questions and Answers" tutorial will help you unravel concepts of Power BI and touch those topics that are very vital for succeeding in Power BI Interviews.

This video helps you to learn the following topics:

1. General Power BI Questions

2. DAX

3. Power Pivot

4. Power Query

5. Power Map

6. Additional Questions

Check out our Power BI Playlist: https://goo.gl/97sJv1

Achieving Lakehouse Models with Spark 3.0

By Databricks. It’s very easy to be distracted by the latest and greatest approaches with technology, but sometimes there’s a reason old approaches stand the test of time. Star Schemas & Kimball is one of those things that isn’t going anywhere, but as we move towards the “Data Lakehouse” paradigm, how appropriate is this modelling technique, and how can we harness the Delta Engine & Spark 3.0 to maximise its performance?

JDBC Source Connector: What could go wrong? with Francesco Tisiot | Kafka Sum...

By HostedbyConfluent. When needing to source Database events into Apache Kafka, the JDBC source connector usually represents the first choice for its flexibility and the almost-zero setup required on the database side. But sometimes simplicity comes at the cost of accuracy and missing events can have catastrophic impacts on our data pipelines.

In this session we'll understand how the JDBC source connector works and explore the various modes it can operate to load data in a bulk or incremental manner. Having covered the basics, we'll analyse the edge cases causing things to go wrong like infrequent snapshot times, out of order events, non-incremental sequences or hard deletes.

Finally we'll look at other approaches, like the Debezium source connector, and demonstrate how some more configuration on the database side helps avoid problems and sets up a reliable source of events for our streaming pipeline.

Want to reliably take your Database events into Apache Kafka? This session is for you!

Azure Cosmos DB

By Mohamed Tawfik. Microsoft Azure Cosmos DB is a multi-model database that supports document, key-value, wide-column and graph data models. It provides high throughput, low latency and global distribution across multiple regions. Cosmos DB supports multiple APIs including SQL, MongoDB, Cassandra and Gremlin to allow developers to use their preferred API based on their application needs and skills. It also provides automatic scaling of throughput and storage across all data partitions.

MySQL: Indexing for Better Performance

By jkeriaki. This document discusses indexing in MySQL databases to improve query performance. It begins by defining an index as a data structure that speeds up data retrieval from databases. It then covers various types of indexes like primary keys, unique indexes, and different indexing algorithms like B-Tree, hash, and full text. The document discusses when to create indexes, such as on columns frequently used in queries like WHERE clauses. It also covers multi-column indexes, partial indexes, and indexes to support sorting, joining tables, and avoiding full table scans. The concepts of cardinality and selectivity are introduced. The document concludes with a discussion of index overhead and using EXPLAIN to view query execution plans and index usage.

SQL Server Upgrade and Consolidation - Methodology and Approach

By Indra Dharmawan. Workshop outline:

- Today’s Challenges

- What Is Consolidation?

- Consolidation Approach

- The Benefits of Consolidation

- Time to upgrade???

- Why Upgrade?

- Upgrade Methodology

- Upgrade & Consolidation Tools

- Q&A

Big data on aws

By Serkan Özal. Big data services on AWS:

- Storage (S3, Glacier)

- Analytics & Querying (DynamoDB, Redshift, RDS, Elasticsearch, CloudSearch, QuickSight)

- Processing (EMR, Kinesis, Lambda, Machine Learning)

- Flow (Firehose, Data Pipeline, DMS, Snowball)

Demo at https://github.com/serkan-ozal/ankaracloudmeetup-bigdata-demo

Rise of the Data Cloud

By Kent Graziano. This talk will introduce you to the Data Cloud, how it works, and the problems it solves for companies across the globe and across industries. The Data Cloud is a global network where thousands of organizations mobilize data with near-unlimited scale, concurrency, and performance. Inside the Data Cloud, organizations unite their siloed data, easily discover and securely share governed data, and execute diverse analytic workloads. Wherever data or users live, Snowflake delivers a single and seamless experience across multiple public clouds. Snowflake’s platform is the engine that powers and provides access to the Data Cloud.

Oracle architecture ppt

By Deepak Shetty. This document provides an overview of the Oracle database architecture. It describes the major components of Oracle's architecture, including the memory structures like the system global area and program global area, background processes, and the logical and physical storage structures. The key components are the database buffer cache, redo log buffer, shared pool, processes, tablespaces, data files, and redo log files.

The Oracle RAC Family of Solutions - Presentation

By Markus Michalewicz. Oracle RAC is an option to the Oracle Database Enterprise Edition. At least, this is what it is known for. This presentation shows the many ways in which the stack known as Oracle RAC can be used efficiently for various use cases.

Informatica PowerCenter

By Ramy Mahrous. All about Informatica PowerCenter features for both business and technical staff; it illustrates how Informatica PowerCenter solves core business challenges in data integration projects.

Azure Data Engineer Certification | How to Become Azure Data Engineer

By Intellipaat. In this Azure Data Engineer Certification video, you will learn about Azure basics, Azure Data Engineer Certification, and how to become an Azure Data Engineer. This is a must-watch video for everyone who wishes to learn Azure and make a career in it.

Power BI Architecture

By Arthur Graus. Power BI is a business analytics service that allows users to analyze data and share insights. It includes dashboards, reports, and datasets that can be viewed on mobile devices. Power BI integrates with various data sources and platforms like SQL Server, Azure, and Office 365. It provides self-service business intelligence capabilities for end users to explore and visualize data without assistance from IT departments.

Autonomous Database Explained

By Neagu Alexandru Cristian. The document discusses Oracle Autonomous Database and provides an agenda for a presentation. The agenda includes:

1. An overview of what Autonomous Database is and how it provides self-driving, self-securing, and self-repairing capabilities.

2. Key use cases for Autonomous Transaction Processing such as for transactional applications and mixed workloads.

3. How Autonomous Database can be used with microservices architectures.

4. Use cases for Autonomous Data Warehouse such as for data marts, warehouses, sandboxes, and machine learning.

5. How Autonomous Data Warehouse integrates with Oracle Analytics Cloud.

6. A demonstration of getting hands-on with

Common MongoDB Use Cases

By DATAVERSITY. This document discusses common use cases for MongoDB and why it is well-suited for them. It describes how MongoDB can handle high volumes of data feeds, operational intelligence and analytics, product data management, user data management, and content management. Its flexible data model, high performance, scalability through sharding and replication, and support for dynamic schemas make it a good fit for applications that need to store large amounts of data, handle high throughput of reads and writes, and have low latency requirements.

Vertica Analytics Database general overview

By Stratebi. Vertica is an advanced analytics platform that combines high-performance query processing with advanced analytics and machine learning capabilities. It bridges the gap between high-cost legacy data warehouses and less powerful Hadoop data lakes. Vertica uses a massively parallel processing architecture to deliver fast analytics on large datasets regardless of where the data resides. It has been implemented by various companies across industries to drive customer experience management, operational analytics, and fraud detection through applications like predictive maintenance, customer churn analysis, and network optimization.

A Cloud Journey - Move to the Oracle Cloud

By Markus Michalewicz. Presented "A Cloud Journey - Move to the Oracle Cloud" on behalf of Ricardo Gonzalez during the Bulgarian Oracle User Group Spring Conference 2019. This presentation discusses various methods for migrating to the Oracle Cloud and recommends which tool to use (and where to find it), especially when zero downtime is desired; the new Zero Downtime Migration tool is described and discussed in detail. More information: http://www.oracle.com/goto/move

MySQL Tutorial For Beginners | Relational Database Management System | MySQL ...

By Edureka! (MySQL DBA Certification Training: https://www.edureka.co/mysql-dba)

This Edureka tutorial PPT on MySQL explains all the fundamentals of MySQL with examples.

The following are the topics covered in this tutorial:

1. What is Database & DBMS?

2. Structured Query Language

3. MySQL & MySQL Workbench

4. Entity Relationship Diagram

5. Normalization

6. SQL Operations & Commands

Follow us to never miss an update in the future.

Instagram: https://www.instagram.com/edureka_learning/

Facebook: https://www.facebook.com/edurekaIN/

Twitter: https://twitter.com/edurekain

LinkedIn: https://www.linkedin.com/company/edureka

Preparing for EBS R12.2-upgrade-full

By Berry Clemens. The document provides an overview of steps that can be taken today to prepare for Oracle E-Business Suite 12.2. It discusses reviewing functional changes in 12.2, understanding technical architecture changes including the new use of WebLogic Server, and considering an upgrade of the technical infrastructure including the database. It also covers understanding the new online patching process in 12.2 including the patching cycle, edition-based redefinition, and the enablement process for online patching.

Microsoft SQL Server internals & architecture

By Kevin Kline. From noted SQL Server expert and author Kevin Kline: Let’s face it. You can effectively do many IT jobs related to Microsoft SQL Server without knowing the internals of how SQL Server works. Many great developers, DBAs, and designers get their day-to-day work completed on time and with reasonable quality while never really knowing what’s happening behind the scenes. But if you want to take your skills to the next level, it’s critical to know SQL Server’s internal processes and architecture. This session will answer questions like:

- What are the various areas of memory inside of SQL Server?

- How are queries handled behind the scenes?

- What does SQL Server do with procedural code, like functions, procedures, and triggers?

- What happens during checkpoints? Lazywrites?

- How are IOs handled with regard to transaction logs and databases?

- What happens when transaction logs and databases grow or shrink?

This fast-paced session will take you through many aspects of the internal operations of SQL Server and, for those topics we don’t cover, will point you to resources where you can get more information.

Top 10 tips for Oracle performance (Updated April 2015)

By Guy Harrison. This document provides a summary of Guy Harrison's top 10 Oracle database tuning tips presentation. The tips include being methodical and empirical in tuning, optimizing database design, indexing wisely, writing efficient code, optimizing the optimizer, tuning SQL and PL/SQL, monitoring and managing contention, optimizing memory to reduce I/O, and tuning I/O last but tuning it well. The document discusses each tip in more detail and provides examples and best practices for implementing them.

Less17 moving data

By Amit Bhalla. This document provides an overview of moving data in and out of Oracle databases. It describes SQL*Loader, external tables, Oracle Data Pump, and legacy Oracle export and import utilities. Key points include: SQL*Loader loads data from files, external tables access external file data as database objects, Data Pump provides high-speed data and metadata movement with tools like expdp and impdp, and legacy utilities can be used in Data Pump legacy mode.

How to Manage Scale-Out Environments with MariaDB MaxScale

By MariaDB plc. MariaDB MaxScale is a database proxy that provides scalability, security, and high availability for MariaDB deployments. It supports load balancing, connection routing, and replication to scale database environments without application impact. MaxScale includes features like query caching, read/write splitting, multi-tenant routing, and binlog replication to optimize performance. It can also stream change data capture to big data platforms and provides tools to help manage operations.

Database migration

By Opris Monica. This document summarizes the key aspects and process of migrating an Oracle database to SQL Server 2008/2012. It discusses the major components being migrated: schema, data, and applications. The major steps are analysis, migration, testing and deployment. It then focuses on using Microsoft's SQL Server Migration Assistant (SSMA) for Oracle tool to migrate the schema, data, and business logic between the two databases.

Database migration

By Opris Monica. This document summarizes the key aspects and process of migrating an Oracle database to SQL Server 2008/2012. It discusses the major components being migrated: schema, data, and applications. The major steps are analysis, migration, testing and deployment. It then focuses on using Microsoft's SQL Server Migration Assistant (SSMA) for Oracle tool to migrate the schema, business logic, and data types, and to validate the migrated data.

Viewers also liked (9)

Migrating Fast to Solr

By Cominvent AS. This document discusses migrating from Microsoft FAST to Apache Solr. It provides an overview of the migration process and key steps to consider, such as mapping the FAST index profile to the Solr schema, migrating content by feeding it into Solr, and options for migrating document processing and the search middleware. It also addresses operational concerns and provides additional resources for learning more about Solr.

Oracle To Sql Server migration process

Oracle To Sql Server migration processharirk1986 This document discusses key aspects of migrating a database from SQL Server to Oracle 11g. The major steps in a migration are analysis, migration, testing, and deployment. The migration process involves migrating the schema and objects, business logic, and client applications. Tools like Oracle Migration Workbench and Database Migration Verifier help automate the migration and validation of the migrated schema and data.

Database migration

Database migrationSankar Patnaik Database migration is the process of transferring data between different database systems or upgrades. It involves analyzing and mapping data from the source to the target system, transforming the data, validating data quality, and maintaining the migrated data. For example, Capital One migrated from Oracle to Teradata databases as their data volume grew too large for Oracle to efficiently handle. The migration process includes pre-migration planning, extraction, transformation, data loading, validation, and post-migration maintenance.

Agile Methodology - Data Migration v1.0

Agile Methodology - Data Migration v1.0Julian Samuels The document discusses applying an agile methodology to a data migration project from Basecamp to SharePoint. It describes agile as an iterative approach involving collaboration between cross-functional teams in sprint sessions to deliver functionality. An agile data migration would involve these teams working in sprints to map data from the old to new systems and transfer it over incrementally. The document outlines the various stages and roles needed in an agile data migration project, including planning, analysis, development, testing, deployment and closing stages.

Data migration

Data migration, by Vatsala Chauhan: This document discusses data migration in Oracle E-Business Suite. It covers migrating data to Oracle using open interfaces/APIs, Oracle utilities like FNDLOAD and iSetup, and third-party tools like DataLoad and Mercury Object Migrator. It also discusses migrating data from Oracle by creating materialized views or using the Business Event System to define custom events. The document provides an overview of different data migration scenarios and options for loading setup, master, and transactional data in Oracle E-Business Suite.

Ms sql server architecture

Ms sql server architectureAjeet Singh The document discusses various disaster recovery strategies for SQL Server including failover clustering, database mirroring, and peer-to-peer transactional replication. It provides advantages and disadvantages of each approach. It also outlines the steps to configure replication for Always On Availability Groups which involves setting up publications and subscriptions, configuring the availability group, and redirecting the original publisher to the listener name.

Preparing a data migration plan: A practical guide

Preparing a data migration plan: A practical guideETLSolutions The document provides guidance on preparing a data migration plan. It discusses the importance of project scoping, methodology, data preparation, and data security when planning a data migration. Specifically, it recommends thoroughly reviewing all aspects of the project and data in the planning stages to identify risks and issues early. This helps reduce risks and ensures the migration is completed according to best practices.

Similar to Presentation sql server to oracle a database migration roadmap (20)

Large scale, interactive ad-hoc queries over different datastores with Apache...

Large scale, interactive ad-hoc queries over different datastores with Apache...jaxLondonConference Presented at JAX London 2013

Apache Drill is a distributed system for interactive ad-hoc query and analysis of large-scale datasets. It is the open-source version of Google’s Dremel technology. Apache Drill is designed to scale to thousands of servers and to process petabytes of data in seconds, enabling SQL-on-Hadoop and supporting a variety of data sources.

Engineering practices in big data storage and processing

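Engineering practices in big data storage and processing, by Schubert Zhang

Slide excerpt: Data Preparation, Offline Data (CRM), Data Warehouse, Data Mining, Data Visualization. Primarily offline batch analysis.

Big data service platform: users; applications (web applications, mobile applications); data service platform; data sources; data warehouse; data processing; data analysis; data visualization.

Principles:

1. Provide a unified data access interface

2. Support multiple data sources and multiple applications

3. Focus on offline batch analysis, while also accommodating real-time analysis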

Oracle OpenWo2014 review part 03 three_paa_s_database

Oracle OpenWo2014 review part 03 three_paa_s_databaseGetting value from IoT, Integration and Data Analytics This document provides a summary of Oracle OpenWorld 2014 discussions on database cloud, in-memory database, native JSON support, big data, and Internet of Things (IoT) technologies. Key points include:

- Database Cloud on Oracle offers pay-as-you-go pricing and self-service provisioning similar to on-premise databases.

- Oracle Database 12c includes an in-memory option that can provide up to 100x faster analytics queries and 2-4x faster transaction processing.

- Native JSON support in 12c allows storing and querying JSON documents within the database.

- Big data technologies like Oracle Big Data SQL and Oracle Big Data Discovery help analyze large and diverse data sets from sources like

HTAP Queries

HTAP QueriesAtif Shaikh The document discusses HTAP (Hybrid Transactional/Analytical Processing), data fabrics, and key PostgreSQL features that enable data fabrics. It describes HTAP as addressing resource contention by allowing mixed workloads on the same system and analytics on inflight transactional data. Data fabrics are defined as providing a logical unified data model, distributed cache, query federation, and semantic normalization across an enterprise data fabric cluster. Key PostgreSQL features that support data fabrics include its schema store, distributed cache, query federation, optimization, and normalization capabilities as well as foreign data wrappers.

Databricks Platform.pptx

Databricks Platform.pptxAlex Ivy The document provides an overview of the Databricks platform, which offers a unified environment for data engineering, analytics, and AI. It describes how Databricks addresses the complexity of managing data across siloed systems by providing a single "data lakehouse" platform where all data and analytics workloads can be run. Key features highlighted include Delta Lake for ACID transactions on data lakes, auto loader for streaming data ingestion, notebooks for interactive coding, and governance tools to securely share and catalog data and models.

2021 04-20 apache arrow and its impact on the database industry.pptx

2021 04-20 apache arrow and its impact on the database industry.pptx, by Andrew Lamb: The talk motivates why Apache Arrow and related projects (e.g. DataFusion) are a good choice for implementing modern analytic database systems. It reviews the major components in most databases, explains where Apache Arrow fits in, and explains the additional integration benefits of using Arrow.

Denodo Partner Connect: Technical Webinar - Ask Me Anything

Denodo Partner Connect: Technical Webinar - Ask Me AnythingDenodo Watch full webinar here: https://buff.ly/47jH4lk

In this session, Denodo experts will cover a deeper dive into the top 5 differentiated use cases for Denodo by answering any questions since the previous session.

Additionally, we invite partners to bring any general questions related to Denodo, the Denodo Platform, or data management.

Prague data management meetup 2018-03-27

Prague data management meetup 2018-03-27Martin Bém This document discusses different data types and data models. It begins by describing unstructured, semi-structured, and structured data. It then discusses relational and non-relational data models. The document notes that big data can include any of these data types and models. It provides an overview of Microsoft's data management and analytics platform and tools for working with structured, semi-structured, and unstructured data at varying scales. These include offerings like SQL Server, Azure SQL Database, Azure Data Lake Store, Azure Data Lake Analytics, HDInsight and Azure Data Warehouse.

VMworld 2013: Virtualizing Databases: Doing IT Right

VMworld 2013: Virtualizing Databases: Doing IT Right VMworld

VMworld 2013

Michael Corey, Ntirety, Inc

Jeff Szastak, VMware

Learn more about VMworld and register at http://www.vmworld.com/index.jspa?src=socmed-vmworld-slideshare

Data Modeling on Azure for Analytics

Data Modeling on Azure for AnalyticsIke Ellis When you model data you are making two decisions:

* The location where data will be stored

* How the data will be organized for ease of use

5 Steps for Migrating Relational Databases to Next-Gen Architectures

5 Steps for Migrating Relational Databases to Next-Gen Architectures, by NuoDB: The current “cloud first” revolution has exposed an ugly secret: Traditional databases simply cannot meet the high-scale, high-availability, high-customer-expectation reality that businesses are facing. As more customers begin migrating mission-critical applications to database platforms that can inherently support next-generation flexibility and agility, it’s no wonder that the market for alternative database solutions is growing rapidly.

In this webinar, NayaTech CTO David Yahalom and NuoDB VP of Products Ariff Kassam discuss the primary motivators behind the growing adoption of next-generation, cloud-centric database technologies and the five steps to ensure such database migration projects are successful.

Topics include:

The main drivers behind the booming adoption of cloud-native, elastic, next-generation database technologies and the paradigm shift in the database technologies market.

The challenges for database migrations - from data movement and schema conversion to achieving feature parity with traditional commercial databases.

The five steps - from planning to execution - for a successful migration across different database platforms.

Sql Server 2005 Business Inteligence

Sql Server 2005 Business Inteligence, by abercius24: An overview of Integration Services, Analysis Services, and Reporting Services in supporting business intelligence using Microsoft SQL Server 2005.

Data Handning with Sqlite for Android

Data Handning with Sqlite for AndroidJakir Hossain This document provides an overview of SQLite, including:

- SQLite is an embedded SQL database that is not a client-server system and stores the entire database in a single disk file.

- It supports ACID transactions for reliability and data integrity.

- SQLite is used widely in applications like web browsers, Adobe software, Android, and more due to its small size and not requiring a separate database server.

- The Android SDK includes classes for managing SQLite databases like SQLiteDatabase for executing queries, updates and deletes.

Microsoft Data Integration Pipelines: Azure Data Factory and SSIS

Microsoft Data Integration Pipelines: Azure Data Factory and SSISMark Kromer The document discusses tools for building ETL pipelines to consume hybrid data sources and load data into analytics systems at scale. It describes how Azure Data Factory and SQL Server Integration Services can be used to automate pipelines that extract, transform, and load data from both on-premises and cloud data stores into data warehouses and data lakes for analytics. Specific patterns shown include analyzing blog comments, sentiment analysis with machine learning, and loading a modern data warehouse.

Cerebro: Bringing together data scientists and bi users - Royal Caribbean - S...

Cerebro: Bringing together data scientists and bi users - Royal Caribbean - S...Thomas W. Fry Cerebro: Bringing together data scientists and BI users on a common analytics platform in the cloud

https://conferences.oreilly.com/strata/strata-eu-2019/public/schedule/detail/77861

ETL 2.0 Data Engineering for developers

ETL 2.0 Data Engineering for developersMicrosoft Tech Community The document summarizes the Databricks analytics platform, which provides a unified environment powered by Apache Spark. It integrates with Azure services and provides features like interactive collaboration, native security integration, and one-click setup. It also discusses capabilities like schema management, data consistency, elastic scalability, and automatic upgrades.

Oracle's history

Oracle's history, by Georgi Sotirov: The history of a 40-year-old database. The presentation reviews Oracle's history and the evolution of its development features.

More from xKinAnx (20)

Engage for success ibm spectrum accelerate 2

Engage for success ibm spectrum accelerate 2, by xKinAnx: IBM Spectrum Accelerate is software that extends the capabilities of IBM's XIV storage system, such as consistent, tuning-free performance, to new delivery models. It provides enterprise storage capabilities deployed in minutes instead of months. Spectrum Accelerate runs the proven XIV software on commodity x86 servers and storage, providing similar features and functions to an XIV system. It offers benefits like business agility, flexibility, simplified acquisition and deployment, and lower administration and training costs.

Accelerate with ibm storage ibm spectrum virtualize hyper swap deep dive

Accelerate with ibm storage ibm spectrum virtualize hyper swap deep divexKinAnx The document provides an overview of IBM Spectrum Virtualize HyperSwap functionality. HyperSwap allows host I/O to continue accessing volumes across two sites without interruption if one site fails. It uses synchronous remote copy between two I/O groups to make volumes accessible across both groups. The document outlines the steps to configure a HyperSwap configuration, including naming sites, assigning nodes and hosts to sites, and defining the topology.

Software defined storage provisioning using ibm smart cloud

Software defined storage provisioning using ibm smart cloudxKinAnx This document provides an overview of software-defined storage provisioning using IBM SmartCloud Virtual Storage Center (VSC). It discusses the typical challenges with manual storage provisioning, and how VSC addresses those challenges through automation. VSC's storage provisioning involves three phases - setup, planning, and execution. The setup phase involves adding storage devices, servers, and defining service classes. In the planning phase, VSC creates a provisioning plan based on the request. In the execution phase, the plan is run to automatically complete all configuration steps. The document highlights how VSC optimizes placement and streamlines the provisioning process.

Ibm spectrum virtualize 101

Ibm spectrum virtualize 101 xKinAnx This document discusses IBM Spectrum Virtualize 101 and IBM Spectrum Storage solutions. It provides an overview of software defined storage and IBM Spectrum Virtualize, describing how it achieves storage virtualization and mobility. It also provides details on the new IBM Spectrum Virtualize DH8 hardware platform, including its performance improvements over previous platforms and support for compression acceleration.

Accelerate with ibm storage ibm spectrum virtualize hyper swap deep dive dee...

Accelerate with ibm storage ibm spectrum virtualize hyper swap deep dive dee...xKinAnx HyperSwap provides high availability by allowing volumes to be accessible across two IBM Spectrum Virtualize systems in a clustered configuration. It uses synchronous remote copy to replicate primary and secondary volumes between the two systems, making the volumes appear as a single object to hosts. This allows host I/O to continue if an entire system fails without any data loss. The configuration requires a quorum disk in a third site for the cluster to maintain coordination and survive failures across the two main sites.

04 empalis -ibm_spectrum_protect_-_strategy_and_directions

04 empalis -ibm_spectrum_protect_-_strategy_and_directionsxKinAnx IBM Spectrum Protect (formerly IBM Tivoli Storage Manager) provides data protection and recovery for hybrid cloud environments. This document summarizes a presentation on IBM's strategic direction for Spectrum Protect, including plans to enhance the product to better support hybrid cloud, virtual environments, large-scale deduplication, simplified management, and protection for key workloads. The presentation outlines roadmap features for 2015 and potential future enhancements.

Ibm spectrum scale fundamentals workshop for americas part 1 components archi...

Ibm spectrum scale fundamentals workshop for americas part 1 components archi...xKinAnx The document provides instructions for installing and configuring Spectrum Scale 4.1. Key steps include: installing Spectrum Scale software on nodes; creating a cluster using mmcrcluster and designating primary/secondary servers; verifying the cluster status with mmlscluster; creating Network Shared Disks (NSDs); and creating a file system. The document also covers licensing, system requirements, and IBM and client responsibilities for installation and maintenance.

Ibm spectrum scale fundamentals workshop for americas part 2 IBM Spectrum Sca...

Ibm spectrum scale fundamentals workshop for americas part 2 IBM Spectrum Sca...xKinAnx This document discusses quorum nodes in Spectrum Scale clusters and recovery from failures. It describes how quorum nodes determine the active cluster and prevent partitioning. The document outlines best practices for quorum nodes and provides steps to recover from loss of a quorum node majority or failure of the primary and secondary configuration servers.

Ibm spectrum scale fundamentals workshop for americas part 3 Information Life...

Ibm spectrum scale fundamentals workshop for americas part 3 Information Life...xKinAnx IBM Spectrum Scale can help achieve ILM efficiencies through policy-driven, automated tiered storage management. The ILM toolkit manages file sets and storage pools and automates data management. Storage pools group similar disks and classify storage within a file system. File placement and management policies determine file placement and movement based on rules.

Ibm spectrum scale fundamentals workshop for americas part 4 Replication, Str...

Ibm spectrum scale fundamentals workshop for americas part 4 Replication, Str...xKinAnx The document provides an overview of IBM Spectrum Scale Active File Management (AFM). AFM allows data to be accessed globally across multiple clusters as if it were local by automatically managing asynchronous replication. It describes the various AFM modes including read-only caching, single-writer, and independent writer. It also covers topics like pre-fetching data, cache eviction, cache states, expiration of stale data, and the types of data transferred between home and cache sites.

Ibm spectrum scale fundamentals workshop for americas part 4 spectrum scale_r...

Ibm spectrum scale fundamentals workshop for americas part 4 spectrum scale_r...xKinAnx This document provides information about replication and stretch clusters in IBM Spectrum Scale. It defines replication as synchronously copying file system data across failure groups for redundancy. While replication improves availability, it reduces performance and increases storage usage. Stretch clusters combine two or more clusters to create a single large cluster, typically using replication between sites. Replication policies and failure group configuration are important to ensure effective data duplication.

Ibm spectrum scale fundamentals workshop for americas part 5 spectrum scale_c...

Ibm spectrum scale fundamentals workshop for americas part 5 spectrum scale_c...xKinAnx This document provides information about clustered NFS (cNFS) in IBM Spectrum Scale. cNFS allows multiple Spectrum Scale servers to share a common namespace via NFS, providing high availability, performance, scalability and a single namespace as storage capacity increases. The document discusses components of cNFS including load balancing, monitoring, and failover. It also provides instructions for prerequisites, setup, administration and tuning of a cNFS configuration.

Ibm spectrum scale fundamentals workshop for americas part 6 spectrumscale el...

Ibm spectrum scale fundamentals workshop for americas part 6 spectrumscale el...xKinAnx This document provides an overview of managing Spectrum Scale opportunity discovery and working with external resources to be successful. It discusses how to build presentations and configurations to address technical and philosophical solution requirements. The document introduces IBM Spectrum Scale as providing low latency global data access, linear scalability, and enterprise storage services on standard hardware for on-premise or cloud deployments. It also discusses Spectrum Scale and Elastic Storage Server, noting the latter is a hardware building block with GPFS 4.1 installed. The document provides tips for discovering opportunities through RFPs, RFIs, events, workshops, and engaging clients to understand their needs in order to build compelling proposal information.

Ibm spectrum scale fundamentals workshop for americas part 7 spectrumscale el...

Ibm spectrum scale fundamentals workshop for americas part 7 spectrumscale el...xKinAnx This document provides guidance on sizing and configuring Spectrum Scale and Elastic Storage Server solutions. It discusses collecting information from clients such as use cases, workload characteristics, capacity and performance goals, and infrastructure requirements. It then describes using tools to help architect solutions that meet the client's needs, such as breaking the problem down, addressing redundancy and high availability, and accounting for different sites, tiers, clients and protocols. The document also provides tips for working with the configuration tool and pricing the solution appropriately.

Ibm spectrum scale fundamentals workshop for americas part 8 spectrumscale ba...

Ibm spectrum scale fundamentals workshop for americas part 8 spectrumscale ba...xKinAnx The document provides an overview of key concepts covered in a GPFS 4.1 system administration course, including backups using mmbackup, SOBAR integration, snapshots, quotas, clones, and extended attributes. The document includes examples of commands and procedures for administering these GPFS functions.

Ibm spectrum scale fundamentals workshop for americas part 5 ess gnr-usecases...

Ibm spectrum scale fundamentals workshop for americas part 5 ess gnr-usecases...xKinAnx This document provides an overview of Spectrum Scale 4.1 system administration. It describes the Elastic Storage Server options and components, Spectrum Scale native RAID (GNR), and tips for best practices. GNR implements sophisticated data placement and error correction algorithms using software RAID to provide high reliability and performance without additional hardware. It features auto-rebalancing, low rebuild overhead through declustering, and end-to-end data checksumming.

Presentation disaster recovery in virtualization and cloud

Presentation disaster recovery for oracle fusion middleware with the zfs st...

Presentation differentiated virtualization for enterprise clouds, large and...

Presentation desktops for the cloud the view rollout

Recently uploaded (20)

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager APIUiPathCommunity Join this UiPath Community Berlin meetup to explore the Orchestrator API, Swagger interface, and the Test Manager API. Learn how to leverage these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

Semantic Cultivators : The Critical Future Role to Enable AI

Semantic Cultivators : The Critical Future Role to Enable AIartmondano By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

Rusty Waters: Elevating Lakehouses Beyond Spark

Rusty Waters: Elevating Lakehouses Beyond Sparkcarlyakerly1 Spark is a powerhouse for large datasets, but when it comes to smaller data workloads, its overhead can sometimes slow things down. What if you could achieve high performance and efficiency without the need for Spark?

At S&P Global Commodity Insights, having a complete view of global energy and commodities markets enables customers to make data-driven decisions with confidence and create long-term, sustainable value. 🌍

Explore delta-rs + CDC and how these open-source innovations power lightweight, high-performance data applications beyond Spark! 🚀

"Client Partnership — the Path to Exponential Growth for Companies Sized 50-5...

"Client Partnership — the Path to Exponential Growth for Companies Sized 50-5...Fwdays Why the "more leads, more sales" approach is not a silver bullet for a company.

Common symptoms of an ineffective Client Partnership (CP).

Key reasons why CP fails.

Step-by-step roadmap for building this function (processes, roles, metrics).

Business outcomes of CP implementation based on examples of companies sized 50-500.

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and education, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

Big Data Analytics Quick Research Guide by Arthur Morgan

Big Data Analytics Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

2025-05-Q4-2024-Investor-Presentation.pptx

2025-05-Q4-2024-Investor-Presentation.pptxSamuele Fogagnolo Cloudflare Q4 Financial Results Presentation

Buckeye Dreamin 2024: Assessing and Resolving Technical Debt

Buckeye Dreamin 2024: Assessing and Resolving Technical DebtLynda Kane Slide Deck from Buckeye Dreamin' 2024 presentation Assessing and Resolving Technical Debt. Focused on identifying technical debt in Salesforce and working towards resolving it.

Network Security. Different aspects of Network Security.

Network Security. Different aspects of Network Security.gregtap1 Network Security. Different aspects of Network Security.

Cyber Awareness overview for 2025 month of security

Cyber Awareness overview for 2025 month of securityriccardosl1 Cyber awareness training educates employees on risk associated with internet and malicious emails

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

Role of Data Annotation Services in AI-Powered Manufacturing

Role of Data Annotation Services in AI-Powered ManufacturingAndrew Leo From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Dev Dives: Automate and orchestrate your processes with UiPath Maestro

Dev Dives: Automate and orchestrate your processes with UiPath MaestroUiPathCommunity This session is designed to equip developers with the skills needed to build mission-critical, end-to-end processes that seamlessly orchestrate agents, people, and robots.

📕 Here's what you can expect:

- Modeling: Build end-to-end processes using BPMN.

- Implementing: Integrate agentic tasks, RPA, APIs, and advanced decisioning into processes.

- Operating: Control process instances with rewind, replay, pause, and stop functions.

- Monitoring: Use dashboards and embedded analytics for real-time insights into process instances.

This webinar is a must-attend for developers looking to enhance their agentic automation skills and orchestrate robust, mission-critical processes.

👨🏫 Speaker:

Andrei Vintila, Principal Product Manager @UiPath

This session streamed live on April 29, 2025, 16:00 CET.

Check out all our upcoming Dev Dives sessions at https://community.uipath.com/dev-dives-automation-developer-2025/.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

Automation Hour 1/28/2022: Capture User Feedback from Anywhere

Automation Hour 1/28/2022: Capture User Feedback from AnywhereLynda Kane Slide Deck from Automation Hour 1/28/2022 presentation Capture User Feedback from Anywhere presenting setting up a Custom Object and Flow to collection User Feedback in Dynamic Pages and schedule a report to act on that feedback regularly.

"Rebranding for Growth", Anna Velykoivanenko

"Rebranding for Growth", Anna VelykoivanenkoFwdays Since there is no single formula for rebranding, this presentation will explore best practices for aligning business strategy and communication to achieve business goals.

Learn the Basics of Agile Development: Your Step-by-Step Guide

Learn the Basics of Agile Development: Your Step-by-Step GuideMarcel David New to Agile? This step-by-step guide is your perfect starting point. "Learn the Basics of Agile Development" simplifies complex concepts, providing you with a clear understanding of how Agile can improve software development and project management. Discover the benefits of iterative work, team collaboration, and flexible planning.

Presentation sql server to oracle a database migration roadmap

- 1. SQL Server to Oracle: A Database Migration Roadmap. Louis Shih, Superior Court of California, County of Sacramento. Oracle OpenWorld 2010, San Francisco, California.

- 2. DataBase Migration Roadmap: Agenda
  - Introduction
  - Institutional Background
  - Migration Criteria
  - Database Migration Methodology
  - SQL/Oracle Tool for Data Migration
  - Questions

- 3. Institutional Background. The Superior Court of California, County of Sacramento (SacCourt) is part of the statewide justice system of 58 trial courts, Appellate Courts and the California Supreme Court. Each county operates a Superior Court that adjudicates criminal, civil, small claims, landlord-tenant, traffic, family law, and juvenile dependency and delinquency matters. SacCourt has 60 judicial officers and 760 staff who processed over 400,000 new cases filed in FY 2008-09. “Our Mission is to assure justice, equality and fairness for all under the law.”

- 4. Database Environment
  - SQL Server
    - SQL Server 6.5, 2000, 2005 32-bit on Windows
    - SQL Server 2008 64-bit on VMware/physical hardware
  - Oracle
    - Oracle 10g R2, 10g R2 RAC on Sun SPARC Solaris 10
    - Oracle Enterprise Manager, Grid Control on Windows
    - Oracle Application Express
    - Oracle Migration Workbench

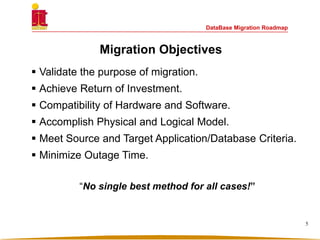

- 5. Migration Objectives
  - Validate the purpose of migration.
  - Achieve return on investment.
  - Ensure compatibility of hardware and software.
  - Accomplish the physical and logical model.
  - Meet source and target application/database criteria.
  - Minimize outage time.
  - “No single best method for all cases!”

- 6. Migration Process
  - Analyze
    - Database architecture
    - Cost-effectiveness
    - Risk mitigation plan
    - Routines
    - Downtime
  - Perform
    - Data migration
  - Verify
    - Migration success

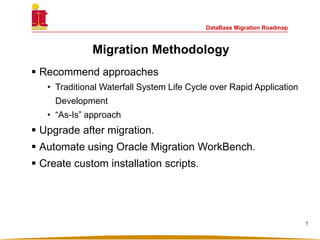

- 7. Migration Methodology
  - Recommended approaches
    - Traditional Waterfall System Life Cycle over Rapid Application Development
    - “As-Is” approach
  - Upgrade after migration.
  - Automate using Oracle Migration Workbench.
  - Create custom installation scripts.

- 8. SQL to Oracle Migration
  - 1. Physical and Logical Structure
    - 1.1 Characteristics
    - 1.2 Data Types/Storage
    - 1.3 Recommendations
  - 2. Stored Procedures
  - 3. SQL Migration
  - 4. Database Design
  - 5. Schema Design
  - 6. Data Migration
  - 7. Security

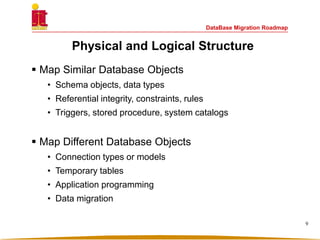

- 9. Physical and Logical Structure
  - Map similar database objects
    - Schema objects, data types
    - Referential integrity, constraints, rules
    - Triggers, stored procedures, system catalogs
  - Map different database objects
    - Connection types or models
    - Temporary tables
    - Application programming
    - Data migration

- 10. Physical and Logical Structure (diagram comparing an Oracle instance/database with SQL Server)
  - Oracle instance/database: memory; processes (SMON, PMON, MMON, ...; dedicated/shared); system tablespaces (System, Sysaux, Temp, Undo); user tablespaces (User1, User2).
  - SQL Server: memory; process; system databases (Master, Model, Msdb, Tempdb, Resource (2008)); user databases (Database1, Database2).
  - Legend: Master = System tablespace; Model = template; Tempdb = Undo; Msdb = Agent services; System = sys objects; Sysaux = 10g non-sys objects; Temp = sorting; Undo = rollback, recover.

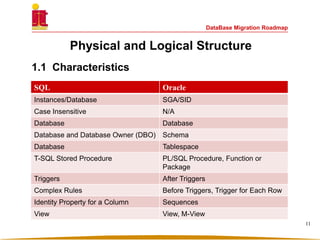

- 11. Physical and Logical Structure: 1.1 Characteristics

  | SQL Server | Oracle |
  | --- | --- |
  | Instances/Database | SGA/SID |
  | Case Insensitive | N/A |
  | Database | Database |
  | Database and Database Owner (DBO) | Schema |
  | Database | Tablespace |
  | T-SQL Stored Procedure | PL/SQL Procedure, Function or Package |
  | Triggers | After Triggers |
  | Complex Rules | Before Triggers, Trigger for Each Row |
  | Identity Property for a Column | Sequences |
  | View | View, M-View |

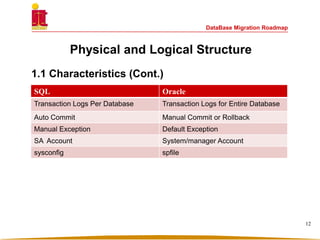
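
The "Identity Property for a Column" to "Sequences" row is the mapping that most often needs hand-written code. The following is a minimal sketch, assuming Oracle 10g (as in the deck's environment) and a hypothetical `case_file` table; the table, sequence, and trigger names are illustrative and do not come from the slides.

```sql
-- SQL Server (source): the column generates its own values.
CREATE TABLE case_file (
    case_id INT IDENTITY(1,1) PRIMARY KEY,
    title   VARCHAR(100) NOT NULL
);

-- Oracle (target): a sequence plus a BEFORE INSERT row trigger.
CREATE TABLE case_file (
    case_id NUMBER(10)    PRIMARY KEY,
    title   VARCHAR2(100) NOT NULL
);

-- After loading existing rows, START WITH should be set above the
-- highest migrated case_id (1 is only a placeholder here).
CREATE SEQUENCE case_file_seq START WITH 1 INCREMENT BY 1;

CREATE OR REPLACE TRIGGER case_file_bi
BEFORE INSERT ON case_file
FOR EACH ROW
BEGIN
    -- Fill the key only when the caller did not supply one, so that
    -- migrated rows keep their original identity values.
    IF :NEW.case_id IS NULL THEN
        -- SELECT ... INTO is used because 10g PL/SQL cannot assign
        -- a sequence NEXTVAL directly.
        SELECT case_file_seq.NEXTVAL INTO :NEW.case_id FROM dual;
    END IF;
END;
/
```

Checking for `NULL` in the trigger is a design choice: it lets the data load insert the original key values while new application inserts draw from the sequence.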

- 12. Physical and Logical Structure: 1.1 Characteristics (Cont.)

  | SQL Server | Oracle |
  | --- | --- |
  | Transaction Logs Per Database | Transaction Logs for Entire Database |
  | Auto Commit | Manual Commit or Rollback |
  | Manual Exception | Default Exception |
  | SA Account | System/manager Account |
  | sysconfig | spfile |
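
The "Auto Commit" versus "Manual Commit or Rollback" row changes how migrated application code must end its units of work. A short sketch of the difference, assuming a hypothetical `case_file` table with `case_id` and `title` columns:

```sql
-- SQL Server: each statement commits on its own unless it is
-- wrapped in an explicit transaction.
BEGIN TRANSACTION;
UPDATE case_file SET title = 'Amended complaint' WHERE case_id = 42;
COMMIT TRANSACTION;

-- Oracle: the UPDATE opens a transaction implicitly and the change
-- stays uncommitted until the session issues COMMIT or ROLLBACK.
UPDATE case_file SET title = 'Amended complaint' WHERE case_id = 42;
COMMIT;
```

Code that relied on SQL Server's per-statement commit needs an explicit `COMMIT` after migration; client tools may offer their own autocommit setting, but that is a client option and not database behavior.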

- 13. Physical and Logical Structure: 1.2 Data Types/Storage

  | SQL Server | Oracle |
  | --- | --- |
  | Integer, Small Int, Tiny Int, Bit, Money, Small Money | Number (10, 6, 3, 1, 19, 10), respectively |
  | Real, Float | Float |
  | Text | CLOB |
  | Image | BLOB |
  | Binary, VarBinary | RAW |
  | DateTime, Small DateTime | Date |
  | Varchar (max) | LONG, CLOB |
  | Varbinary (max) | LONG RAW, BLOB, BFILE |
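
Applied to a table definition, the mapping above might look like the sketch below. The `fee_schedule` table and its columns are hypothetical; the precisions follow the slide, while the scale of 4 on the two money columns and the `RAW(16)` length are assumptions added for illustration.

```sql
-- Oracle target table; each comment names the SQL Server source type.
CREATE TABLE fee_schedule (
    fee_id     NUMBER(10)   NOT NULL,  -- int
    court_code NUMBER(6),              -- smallint
    priority   NUMBER(3),              -- tinyint
    is_active  NUMBER(1),              -- bit
    amount     NUMBER(19,4),           -- money (scale 4 is an assumption)
    surcharge  NUMBER(10,4),           -- smallmoney (scale 4 is an assumption)
    notes      CLOB,                   -- text or varchar(max)
    scan       BLOB,                   -- image or varbinary(max)
    doc_hash   RAW(16),                -- binary(16)
    filed_on   DATE                    -- datetime (fractional seconds are dropped)
);
```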

- 14. Physical and Logical Structure: 1.2 Data Types/Storage (Cont.)

  | SQL Server | Oracle |
  | --- | --- |
  | Database Devices | Datafile |
  | Page | Data Block |
  | Extent | Extent and Segments |
  | Segments | Tablespace (Extent and Segments) |
  | Log Devices | Redo Log Files |
  | Data, Dump | N/A |

- 15. Physical and Logical Structure: 1.3 Recommendations

  | SQL Server | Oracle |
  | --- | --- |
  | MS applications tend to use ASP on clients. ASP uses ADO to communicate with the DB. | Use Oracle OLE/DB or migrate to JSP. |
  | DB Library | Use Oracle OCI calls. |
  | IIS/ASP | IAS/Fusion on Java 2 Platform, J2EE |
  | Embedded SQL from C/C++ | Manual conversion |
  | Stored procedures returning multiple result sets | Find a driver that supports reference cursors (e.g. DataDirect). |
  | Delphi, MS Access (Embedded SQL/C or MS Library) | Use ODBC driver. |
  | DBO.Database | Transform to single or multiple schemas. |
  | DTS/SSIS | Warehouse Builder |

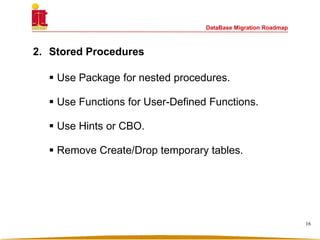

- 16. 2. Stored Procedures
  - Use Package for nested procedures.
  - Use Functions for User-Defined Functions.
  - Use Hints or CBO.
  - Remove Create/Drop temporary tables.
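
Two of the points above can be sketched together: grouping related routines in a package, and replacing temporary tables that a T-SQL procedure would create and drop at run time with a global temporary table defined once at install time. The global temporary table is one common substitute, not something the slide names; all object names, and the `status` column on `case_file`, are hypothetical.

```sql
-- Created once during installation, not inside the procedure.
-- Rows are private to each session and vanish at commit.
CREATE GLOBAL TEMPORARY TABLE tmp_open_cases (
    case_id NUMBER(10),
    title   VARCHAR2(100)
) ON COMMIT DELETE ROWS;

CREATE OR REPLACE PACKAGE case_pkg AS
    PROCEDURE load_open_cases;
    FUNCTION  open_case_count RETURN NUMBER;
END case_pkg;
/

CREATE OR REPLACE PACKAGE BODY case_pkg AS
    -- Replaces a T-SQL procedure that built a #temp table on each call.
    PROCEDURE load_open_cases IS
    BEGIN
        INSERT INTO tmp_open_cases (case_id, title)
        SELECT case_id, title FROM case_file WHERE status = 'OPEN';
    END load_open_cases;

    -- Replaces a T-SQL user-defined function.
    FUNCTION open_case_count RETURN NUMBER IS
        v_count NUMBER;
    BEGIN
        SELECT COUNT(*) INTO v_count FROM tmp_open_cases;
        RETURN v_count;
    END open_case_count;
END case_pkg;
/
```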

- 17. 3. SQL Migration
  - TOP function
  - Dynamic SQL: no conversion
  - Case statements: Decode
  - Unique identifier (GUID): ROWID or UROWID
  - Example: select newid() vs. select sys_guid() from dual
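
A hedged sketch of how two of these rewrites might look. The slide does not state an Oracle equivalent for TOP; filtering on `ROWNUM` outside an ordered subquery is a common approach on Oracle 10g and is used here as an assumption. The `case_file` table and its `filed_on` and `status` columns are hypothetical.

```sql
-- SQL Server: first ten rows by filing date.
SELECT TOP 10 case_id, title
FROM   case_file
ORDER  BY filed_on DESC;

-- Oracle: ROWNUM is assigned before ORDER BY, so the sort goes in a
-- subquery and the row limit is applied outside it.
SELECT case_id, title
FROM  (SELECT case_id, title
       FROM   case_file
       ORDER  BY filed_on DESC)
WHERE  ROWNUM <= 10;

-- SQL Server: simple CASE expression.
SELECT case_id,
       CASE status WHEN 'O' THEN 'Open'
                   WHEN 'C' THEN 'Closed'
                   ELSE 'Unknown' END AS status_name
FROM   case_file;

-- Oracle: the same lookup written with DECODE.
SELECT case_id,
       DECODE(status, 'O', 'Open', 'C', 'Closed', 'Unknown') AS status_name
FROM   case_file;
```

Oracle also accepts CASE expressions, so converting to `DECODE` is optional for simple lookups like this one.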

- 18. 4. Database Design
  - Evaluate constraints
    - Entity integrity
    - Referential integrity
    - Unique key
    - Check
  - Use table partitions.
  - Apply reverse key for sequence-generated columns.
  - Apply Flashback for restoration.
  - Use Oracle RAC, Active Data Guard for HA/DR.
  - Use Transparent Data Encryption and remove data encryptions.

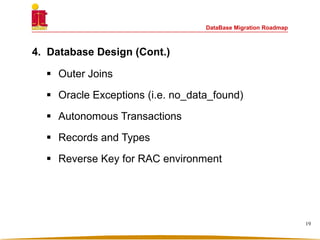
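
Three of the design points above (partitions, reverse key indexes, Flashback) can be illustrated with a short sketch. The `case_event` table, its partition boundaries, and the 15-minute window are hypothetical, and partitioning is a separately licensed option on Oracle Enterprise Edition.

```sql
-- Range-partitioned table keyed by a sequence-generated event_id.
CREATE TABLE case_event (
    event_id NUMBER(10) NOT NULL,
    case_id  NUMBER(10) NOT NULL,
    event_dt DATE       NOT NULL
)
PARTITION BY RANGE (event_dt) (
    PARTITION p2009 VALUES LESS THAN (TO_DATE('2010-01-01', 'YYYY-MM-DD')),
    PARTITION p2010 VALUES LESS THAN (TO_DATE('2011-01-01', 'YYYY-MM-DD')),
    PARTITION pmax  VALUES LESS THAN (MAXVALUE)
);

-- A reverse key index spreads consecutive sequence values across
-- index leaf blocks instead of concentrating inserts on the last one.
CREATE UNIQUE INDEX case_event_pk_ix ON case_event (event_id) REVERSE;

ALTER TABLE case_event
    ADD CONSTRAINT case_event_pk PRIMARY KEY (event_id)
    USING INDEX case_event_pk_ix;

-- Flashback query: read the rows as they were 15 minutes ago.
SELECT event_id, case_id, event_dt
FROM   case_event AS OF TIMESTAMP (SYSTIMESTAMP - INTERVAL '15' MINUTE)
WHERE  case_id = 42;
```

A reverse key index trades away index range scans on `event_id`, so it fits keys that are looked up by equality only.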

- 19. 4. Database Design (Cont.)
  - Outer joins
  - Oracle exceptions (e.g. no_data_found)
  - Autonomous transactions
  - Records and types
  - Reverse key for RAC environment
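
A minimal sketch of three of these items: Oracle's `(+)` outer join notation, the predefined `NO_DATA_FOUND` exception, and an autonomous transaction used for logging. The tables `case_file`, `case_event`, and `error_log` and both routine names are hypothetical.

```sql
-- Outer join: every case, with event dates where any exist.
-- (+) marks the side that may be missing; ANSI LEFT OUTER JOIN
-- is also accepted by Oracle.
SELECT c.case_id, e.event_dt
FROM   case_file c, case_event e
WHERE  c.case_id = e.case_id (+);

-- Autonomous transaction: the log row is committed independently
-- of whatever the calling transaction later does.
CREATE OR REPLACE PROCEDURE log_error (p_msg IN VARCHAR2) IS
    PRAGMA AUTONOMOUS_TRANSACTION;
BEGIN
    INSERT INTO error_log (logged_at, message) VALUES (SYSDATE, p_msg);
    COMMIT;
END log_error;
/

-- NO_DATA_FOUND: SELECT ... INTO raises it when no row matches,
-- where T-SQL would simply leave the variable unchanged.
CREATE OR REPLACE FUNCTION get_title (p_case_id IN NUMBER)
RETURN VARCHAR2 IS
    v_title case_file.title%TYPE;
BEGIN
    SELECT title INTO v_title FROM case_file WHERE case_id = p_case_id;
    RETURN v_title;
EXCEPTION
    WHEN NO_DATA_FOUND THEN
        log_error('No case with id ' || p_case_id);
        RETURN NULL;
END get_title;
/
```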

- 20. 5. Schema Design
  - Table (data types, constraints)
    - Numeric (10, 2) maps to Number (10, 2)
    - Datetime (Oracle 4712 BC, SQL 01/01/0001 to 12/31/9999)
  - Views (materialized views)
  - Trigger (functionality difference)
  - Synonyms (public or private)
  - Spatial
    - Oracle: Create table abc (id number (10) not null, geo mdsys.sdo_geometry)
    - SQL Server: Create table abc (id number (10) not null, geo geography)

- 21. 5. Schema Design (Cont.)
  - Data types
    - Datetime: 1/300th of a second vs. 1/100th million of a second
  - Image and text
    - Image of data is stored as pointer vs. image stored in BLOB and text in CLOB
  - User-defined
    - Equivalent to PL/SQL data type
  - Table design
    - SQL Server: Create table sample (datetime_col datetime not null, integer_col int null, text_col text null, varchar_col varchar (10) null)
    - Oracle: Create table sample (datetime_col date not null, integer_col number null, text_col long null, varchar_col varchar2 (10) null)

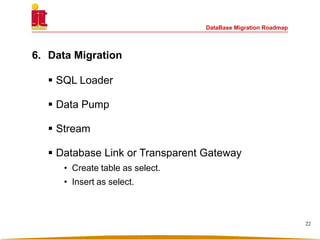
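
The table design above maps `datetime` to `DATE`, which stores whole seconds only. Where the sub-second part of the source values matters, a `TIMESTAMP` column is one alternative; this is a sketch under that assumption and not the mapping the slides use. The `sample_ts` table and the literal value are illustrative.

```sql
-- TIMESTAMP(3) keeps millisecond digits, enough to hold SQL Server
-- datetime values (which resolve to 1/300th of a second).
CREATE TABLE sample_ts (
    datetime_col TIMESTAMP(3) NOT NULL
);

INSERT INTO sample_ts (datetime_col)
VALUES (TO_TIMESTAMP('2010-09-20 14:05:33.997',
                     'YYYY-MM-DD HH24:MI:SS.FF3'));

-- Show the stored value including its fractional seconds.
SELECT TO_CHAR(datetime_col, 'YYYY-MM-DD HH24:MI:SS.FF3') AS stored_value
FROM   sample_ts;
```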

- 22. 6. Data Migration
  - SQL*Loader
  - Data Pump
  - Streams
  - Database link or Transparent Gateway
    - Create table as select.
    - Insert as select.
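
The database link route can be sketched as follows, assuming a Transparent Gateway for SQL Server is already configured. The link name `mssql_src`, the TNS alias, the credentials, and the `case_file` table are all hypothetical.

```sql
-- Database link that resolves to the gateway's TNS alias.
CREATE DATABASE LINK mssql_src
    CONNECT TO "migration_user" IDENTIFIED BY "change_me"
    USING 'mssql_gateway_alias';

-- Create table as select: copies structure and data in one step.
-- SQL Server identifiers are quoted to preserve their case, and
-- aliased so the Oracle columns get ordinary upper-case names.
CREATE TABLE case_file AS
SELECT "case_id" AS case_id,
       "title"   AS title
FROM   "case_file"@mssql_src;

-- Insert as select: later top-up of rows added since the first copy.
INSERT INTO case_file (case_id, title)
SELECT "case_id", "title"
FROM   "case_file"@mssql_src
WHERE  "case_id" > 100000;

COMMIT;
```

Create table as select does not carry over primary keys, foreign keys, or indexes, so those are added afterwards from the schema scripts.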

- 23. 7. Security
  - Create user accounts in Oracle.
  - Leverage default roles and privileges.
  - Map user accounts to roles.
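
The three steps above might translate into statements like these. The role, user, schema, and tablespace names are hypothetical, and the privileges shown are only an example of what a migrated SQL Server login might need.

```sql
-- A role collects the privileges that a group of logins shared.
CREATE ROLE court_clerk;
GRANT CREATE SESSION TO court_clerk;
GRANT SELECT, INSERT, UPDATE ON app_owner.case_file TO court_clerk;

-- One Oracle account per migrated login; the password is expired so
-- the user must choose a new one at first connection.
CREATE USER jdoe IDENTIFIED BY "ChangeMe#1"
    DEFAULT TABLESPACE users
    TEMPORARY TABLESPACE temp
    PASSWORD EXPIRE;

-- Map the account to the role.
GRANT court_clerk TO jdoe;
```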

- 24. SQL/Oracle Tool for Migration
  - Oracle Migration Workbench
  - OEM/Grid Control
  - Upgrade SQL to ver. 2005 with Transparent Gateway
  - Oracle APEX
  - Scripting

- 25. Questions. [email protected]. All Rights Reserved ©2010