Ray: A Cluster Computing Engine for Reinforcement Learning Applications with Philipp Moritz and Robert Nishihara

- 1. Ray: A Distributed Execution Framework for Emerging AI Applications ● Presenters: Philipp Moritz, Robert Nishihara ● Spark Summit West, June 6, 2017

- 3. Why build a new system?

- 10. Supervised Learning → Reinforcement Learning

- 11. Supervised Learning → Reinforcement Learning ● One prediction → Sequences of actions

- 12. Supervised Learning → Reinforcement Learning ● One prediction → Sequences of actions ● Static environments → Dynamic environments

- 13. Supervised Learning → Reinforcement Learning ● One prediction → Sequences of actions ● Static environments → Dynamic environments ● Immediate feedback → Delayed rewards

- 14. RL Application Pattern ● Process inputs from different sensors in parallel and in real time

- 15. RL Application Pattern ● Process inputs from different sensors in parallel and in real time ● Execute a large number of simulations, e.g., up to hundreds of millions

- 16. RL Application Pattern ● Process inputs from different sensors in parallel and in real time ● Execute a large number of simulations, e.g., up to hundreds of millions ● Rollout outcomes are used to update the policy (e.g., SGD) [Diagram: simulations feeding repeated "Update policy" steps]

- 17. RL Application Pattern ● Process inputs from different sensors in parallel and in real time ● Execute a large number of simulations, e.g., up to hundreds of millions ● Rollout outcomes are used to update the policy (e.g., SGD) [Diagram: a rollout feeding repeated "Update policy" steps]

- 18. RL Application Pattern ● Process inputs from different sensors in parallel and in real time ● Execute a large number of simulations, e.g., up to hundreds of millions ● Rollout outcomes are used to update the policy (e.g., SGD) ● Policies are often implemented by DNNs [Diagram labels: actions, observations]

- 19. RL Application Pattern ● Process inputs from different sensors in parallel and in real time ● Execute a large number of simulations, e.g., up to hundreds of millions ● Rollout outcomes are used to update the policy (e.g., SGD) ● Policies are often implemented by DNNs ● Most RL algorithms are developed in Python

- 20. RL Application Requirements ● Need to handle dynamic task graphs, where tasks have heterogeneous durations and heterogeneous computations ● Schedule millions of tasks/sec ● Make it easy to parallelize ML algorithms written in Python
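To make the "dynamic task graph" requirement concrete, here is a minimal sketch that uses only Python's standard library rather than Ray. The `simulate` function, its durations, and the rule for spawning follow-up tasks are all hypothetical and chosen purely for illustration. It shows tasks of different lengths being consumed as they finish, with new tasks submitted only once earlier results are known.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def simulate(task_id, duration):
    """Hypothetical task: sleep for `duration` seconds, then return the id."""
    time.sleep(duration)
    return task_id


def run_dynamic_graph():
    # Heterogeneous durations: task 0 is slower than tasks 1 and 2.
    durations = {0: 0.05, 1: 0.01, 2: 0.01}
    finished = []
    with ThreadPoolExecutor(max_workers=4) as pool:
        pending = {pool.submit(simulate, i, d) for i, d in durations.items()}
        while pending:
            # Block until at least one task is done, whichever finishes first.
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                task_id = future.result()
                finished.append(task_id)
                # Dynamic graph: a follow-up task is created only after
                # the result of an initial task is available.
                if task_id < 3:
                    pending.add(pool.submit(simulate, task_id + 10, 0.01))
    return finished


completed_ids = run_dynamic_graph()
```

The completion order depends on timing, so only the set of finished task ids is stable across runs.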

- 21. Ray API - remote functions

          def zeros(shape):
              return np.zeros(shape)

          def dot(a, b):
              return np.dot(a, b)

- 22. Ray API - remote functions

          @ray.remote
          def zeros(shape):
              return np.zeros(shape)

          @ray.remote
          def dot(a, b):
              return np.dot(a, b)

- 23. Ray API - remote functions

          @ray.remote
          def zeros(shape):
              return np.zeros(shape)

          @ray.remote
          def dot(a, b):
              return np.dot(a, b)

          id1 = zeros.remote([5, 5])
          id2 = zeros.remote([5, 5])
          id3 = dot.remote(id1, id2)
          ray.get(id3)

- 24. Ray API - remote functions

          @ray.remote
          def zeros(shape):
              return np.zeros(shape)

          @ray.remote
          def dot(a, b):
              return np.dot(a, b)

          id1 = zeros.remote([5, 5])
          id2 = zeros.remote([5, 5])
          id3 = dot.remote(id1, id2)
          ray.get(id3)

      ● Blue variables (id1, id2, id3 on the slide) are Object IDs.

- 25. Ray API - remote functions

          @ray.remote
          def zeros(shape):
              return np.zeros(shape)

          @ray.remote
          def dot(a, b):
              return np.dot(a, b)

          id1 = zeros.remote([5, 5])
          id2 = zeros.remote([5, 5])
          id3 = dot.remote(id1, id2)
          ray.get(id3)

      ● Blue variables (id1, id2, id3 on the slide) are Object IDs.

      [Task graph: zeros → id1, zeros → id2; id1 and id2 → dot → id3]

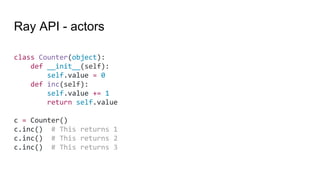
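For readers without a Ray installation, the task graph from slides 23 to 25 can be approximated with standard-library futures. This is an analogy, not Ray's API: `concurrent.futures.Future` objects stand in for Object IDs, plain nested lists stand in for NumPy arrays, and the helper `dot_of_futures` is hypothetical. In Ray itself the IDs are passed directly to `dot.remote`, as the slides show.

```python
from concurrent.futures import ThreadPoolExecutor


def zeros(shape):
    """Return a rows x cols matrix of zeros as nested lists."""
    rows, cols = shape
    return [[0.0] * cols for _ in range(rows)]


def dot(a, b):
    """Plain-Python matrix product of two nested-list matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def dot_of_futures(fa, fb):
    # Hypothetical helper: wait for both inputs, then multiply them.
    # This plays the role of a task that depends on two earlier tasks.
    return dot(fa.result(), fb.result())


with ThreadPoolExecutor(max_workers=3) as pool:
    f1 = pool.submit(zeros, [5, 5])           # analogous to id1
    f2 = pool.submit(zeros, [5, 5])           # analogous to id2
    f3 = pool.submit(dot_of_futures, f1, f2)  # analogous to id3
    result = f3.result()                      # analogous to ray.get(id3)
```

Submitting returns immediately in both models; the caller only blocks when it asks for the final value.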

- 26. Ray API - actors

          class Counter(object):
              def __init__(self):
                  self.value = 0

              def inc(self):
                  self.value += 1
                  return self.value

          c = Counter()
          c.inc()  # This returns 1
          c.inc()  # This returns 2
          c.inc()  # This returns 3

- 27. Ray API - actors

          @ray.remote
          class Counter(object):
              def __init__(self):
                  self.value = 0

              def inc(self):
                  self.value += 1
                  return self.value

          c = Counter.remote()
          id1 = c.inc.remote()
          id2 = c.inc.remote()
          id3 = c.inc.remote()
          ray.get([id1, id2, id3])  # This returns [1, 2, 3]

      ● State is shared between actor methods. ● Actor methods return Object IDs.

- 28. Ray API - actors

          @ray.remote
          class Counter(object):
              def __init__(self):
                  self.value = 0

              def inc(self):
                  self.value += 1
                  return self.value

          c = Counter.remote()
          id1 = c.inc.remote()
          id2 = c.inc.remote()
          id3 = c.inc.remote()
          ray.get([id1, id2, id3])  # This returns [1, 2, 3]

      ● State is shared between actor methods. ● Actor methods return Object IDs.

      [Diagram: one Counter actor with three inc calls producing id1, id2, id3]

- 29. Ray API - actors

          @ray.remote(num_gpus=1)
          class Counter(object):
              def __init__(self):
                  self.value = 0

              def inc(self):
                  self.value += 1
                  return self.value

          c = Counter.remote()
          id1 = c.inc.remote()
          id2 = c.inc.remote()
          id3 = c.inc.remote()
          ray.get([id1, id2, id3])  # This returns [1, 2, 3]

      ● State is shared between actor methods. ● Actor methods return Object IDs. ● Can specify GPU requirements

      [Diagram: one Counter actor with three inc calls producing id1, id2, id3]
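The actor behaviour above (calls return handles immediately, while state is updated in call order) can be imitated with the standard library. The `ActorHandle` class below is a hypothetical helper written for this sketch and is not part of Ray. It runs every method call for one object on a single worker thread, which is one simple way to get the sequential, stateful semantics the slides describe.

```python
from concurrent.futures import ThreadPoolExecutor


class Counter:
    def __init__(self):
        self.value = 0

    def inc(self):
        self.value += 1
        return self.value


class ActorHandle:
    """Hypothetical stand-in for an actor: one object, one worker thread."""

    def __init__(self, cls, *args):
        # A single worker thread means method calls run one at a time,
        # in submission order, so the object's state is never raced on.
        self._executor = ThreadPoolExecutor(max_workers=1)
        self._instance = cls(*args)

    def call(self, method_name, *args):
        # Returns a Future right away, like an actor method returning an ID.
        method = getattr(self._instance, method_name)
        return self._executor.submit(method, *args)

    def stop(self):
        self._executor.shutdown()


c = ActorHandle(Counter)
futures = [c.call("inc") for _ in range(3)]
values = [f.result() for f in futures]
c.stop()
```

Because all three calls share the same `Counter` instance and are executed in order, `values` matches the `[1, 2, 3]` shown on the slide.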

- 30. Ray architecture [Diagram: Node 1, Node 2, Node 3]

- 31. Ray architecture [Diagram: Node 1, Node 2, Node 3; 1 Driver]

- 32. Ray architecture [Diagram: Node 1, Node 2, Node 3; 1 Driver and 5 Workers]

- 33. Ray architecture [Diagram: 1 Driver and 5 Workers; 3 Object Stores]

- 34-36. Ray architecture [Diagram: 1 Driver and 5 Workers; 3 Object Stores; 3 Local Schedulers]

- 37-38. Ray architecture [Diagram: 1 Driver and 5 Workers; 3 Object Stores; 3 Local Schedulers; 4 Global Schedulers]

- 39-40. Ray architecture [Diagram: 1 Driver and 5 Workers; 3 Object Stores; 3 Local Schedulers; 4 Global Schedulers; 3 Global Control Stores]

- 41. Ray architecture [Diagram: 1 Driver and 5 Workers; 3 Object Stores; 3 Local Schedulers; 4 Global Schedulers; 3 Global Control Stores; Debugging Tools, Profiling Tools, Web UI]

- 42. Ray performance

- 43. Ray performance ● One million tasks per second

- 44. Ray performance ● One million tasks per second ● Latency of local task execution: ~300 µs ● Latency of remote task execution: ~1 ms

- 49. Evolution Strategies ● Try lots of different policies and see which one works best! [Diagram: Policy and Simulator exchanging actions, observations, and rewards]

- 50. Pseudocode [Diagram labels: actions, observations, rewards]

          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

- 51. Pseudocode [Diagram labels: actions, observations, rewards]

          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker() for i in range(20)]
          policy = initial_policy()

- 52-53. Pseudocode [Diagram labels: actions, observations, rewards]

          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker() for i in range(20)]
          policy = initial_policy()

          for i in range(200):
              seeds = generate_seeds(i)
              rewards = [workers[j].do_simulation(policy, seeds[j])
                         for j in range(20)]
              policy = compute_update(policy, rewards, seeds)

- 54. Pseudocode [Diagram labels: actions, observations, rewards]

          @ray.remote
          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker() for i in range(20)]
          policy = initial_policy()

          for i in range(200):
              seeds = generate_seeds(i)
              rewards = [workers[j].do_simulation(policy, seeds[j])
                         for j in range(20)]
              policy = compute_update(policy, rewards, seeds)

- 55. Pseudocode [Diagram labels: actions, observations, rewards]

          @ray.remote
          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker.remote() for i in range(20)]
          policy = initial_policy()

          for i in range(200):
              seeds = generate_seeds(i)
              rewards = [workers[j].do_simulation(policy, seeds[j])
                         for j in range(20)]
              policy = compute_update(policy, rewards, seeds)

- 56. Pseudocode [Diagram labels: actions, observations, rewards]

          @ray.remote
          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker.remote() for i in range(20)]
          policy = initial_policy()

          for i in range(200):
              seeds = generate_seeds(i)
              rewards = [workers[j].do_simulation.remote(policy, seeds[j])
                         for j in range(20)]
              policy = compute_update(policy, rewards, seeds)

- 57. Pseudocode [Diagram labels: actions, observations, rewards]

          @ray.remote
          class Worker(object):
              def do_simulation(self, policy, seed):
                  # perform simulation and return reward

          workers = [Worker.remote() for i in range(20)]
          policy = initial_policy()

          for i in range(200):
              seeds = generate_seeds(i)
              rewards = [workers[j].do_simulation.remote(policy, seeds[j])
                         for j in range(20)]
              policy = compute_update(policy, ray.get(rewards), seeds)
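The pseudocode leaves `initial_policy`, `generate_seeds`, `compute_update`, and the simulation itself undefined. The sketch below fills them in with a toy problem so the loop can actually run. Everything it adds is an assumption made for illustration: the quadratic reward with optimum `TARGET`, the constants `SIGMA` and `STEP`, the helpers `noise_from_seed` and `reward_of`, and the particular update rule. A standard-library thread pool is swapped in for Ray actors, so this shows the structure of the loop rather than the talk's implementation.

```python
import random
from concurrent.futures import ThreadPoolExecutor

TARGET = [1.0, -1.0]  # hypothetical optimum of the toy reward
SIGMA = 0.1           # assumed perturbation scale
STEP = 0.1            # assumed learning rate
NUM_WORKERS = 20


def noise_from_seed(seed, dim):
    # The same seed always reproduces the same perturbation, so workers
    # only need to send back a scalar reward, not the noise vector.
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def reward_of(policy):
    # Toy reward: negative squared distance to TARGET.
    return -sum((p - t) ** 2 for p, t in zip(policy, TARGET))


class Worker:
    def do_simulation(self, policy, seed):
        # Evaluate the policy after perturbing it with seeded noise.
        noise = noise_from_seed(seed, len(policy))
        perturbed = [p + SIGMA * n for p, n in zip(policy, noise)]
        return reward_of(perturbed)


def generate_seeds(i):
    # Distinct seeds for every worker in every iteration.
    return [i * NUM_WORKERS + j for j in range(NUM_WORKERS)]


def compute_update(policy, rewards, seeds):
    # Move the policy along the reward-weighted average of the noise.
    mean_reward = sum(rewards) / len(rewards)
    direction = [0.0] * len(policy)
    for reward, seed in zip(rewards, seeds):
        noise = noise_from_seed(seed, len(policy))
        for k, n in enumerate(noise):
            direction[k] += (reward - mean_reward) * n
    scale = STEP / (len(rewards) * SIGMA)
    return [p + scale * d for p, d in zip(policy, direction)]


def train(iterations=200):
    workers = [Worker() for _ in range(NUM_WORKERS)]
    policy = [0.0, 0.0]  # stands in for initial_policy()
    with ThreadPoolExecutor(max_workers=NUM_WORKERS) as pool:
        for i in range(iterations):
            seeds = generate_seeds(i)
            futures = [pool.submit(workers[j].do_simulation, policy, seeds[j])
                       for j in range(NUM_WORKERS)]
            rewards = [f.result() for f in futures]  # like ray.get(rewards)
            policy = compute_update(policy, rewards, seeds)
    return policy


final_policy = train()
```

Passing seeds instead of noise vectors mirrors the `seeds` argument in the pseudocode: the update step can regenerate each perturbation locally from its seed.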

- 58. Evolution strategies on Ray ● Simulator steps per second:

      | Implementation | 10 nodes | 20 nodes | 30 nodes | 40 nodes | 50 nodes |
      |---|---|---|---|---|---|
      | Reference | 97K | 215K | 202K | N/A | N/A |
      | Ray | 152K | 285K | 323K | 476K | 571K |

      ● The Ray implementation takes half the amount of code and was implemented in a couple of hours
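As a quick reading aid for the throughput figures on slide 58, the snippet below computes two derived ratios from the reported numbers: Ray's throughput relative to the reference implementation at each node count where both were measured, and how much Ray's throughput grows from 10 to 50 nodes. The variable names are chosen for this sketch, and "K" is taken to mean thousands of simulator steps per second.

```python
# Simulator steps per second as reported on the slide (K = thousand).
nodes = [10, 20, 30, 40, 50]
reference = [97_000, 215_000, 202_000, None, None]  # None where N/A
ray_steps = [152_000, 285_000, 323_000, 476_000, 571_000]

# Ray throughput divided by reference throughput, where both exist.
speedup_vs_reference = {
    n: round(r / ref, 2)
    for n, ref, r in zip(nodes, reference, ray_steps)
    if ref is not None
}

# Growth in Ray throughput when going from 10 nodes to 50 nodes.
scaling_10_to_50 = round(ray_steps[-1] / ray_steps[0], 2)

print(speedup_vs_reference)  # {10: 1.57, 20: 1.33, 30: 1.6}
print(scaling_10_to_50)      # 3.76
```

On these numbers Ray is roughly 1.3x to 1.6x faster than the reference where both ran, and a 5x increase in nodes yields about a 3.8x increase in Ray's throughput.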

- 59. Policy Gradients

- 60. Ray + Apache Spark ● Complementary ○ Spark handles data processing and “classic” ML algorithms ○ Ray handles emerging AI algorithms, e.g., reinforcement learning (RL) ● Interoperability through an object store based on Apache Arrow ○ Common data layout ○ Supports multiple languages

- 61. Ray is a system for AI Applications ● Ray is open source! https://github.com/ray-project/ray ● We have a v0.1 release! pip install ray ● We’d love your feedback ● Philipp, Ion, Alexey, Stephanie, Johann, Richard, William, Mehrdad, Mike, Robert


