Real-World Machine Learning - Leverage the Features of MapR Converged Data Platform


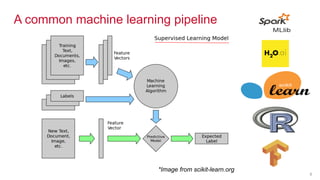

This talk examines the unique features of the MapR Converged Data Platform and how they can support production-grade enterprise machine learning. It ends with a live demo using H2O. Presented at Hadoop Summit Tokyo 2016.



MapR NFS and Volumes (slides 21-23, © 2014 MapR Technologies)

![MapR NFS and Volumes (slide 21)](https://image.slidesharecdn.com/hadoopsummittokyo2016-161028021146/85/Real-World-Machine-Learning-Leverage-the-Features-of-MapR-Converged-Data-Platform-21-320.jpg)

In the shell session on the slides, `[mapr@ip-10-0-0-110 mapr]$ pwd` prints `/mapr/hadoopsummit/user/mapr`.

![MapR NFS and Volumes (slide 22)](https://image.slidesharecdn.com/hadoopsummittokyo2016-161028021146/85/Real-World-Machine-Learning-Leverage-the-Features-of-MapR-Converged-Data-Platform-22-320.jpg)

![MapR NFS and Volumes (slide 23)](https://image.slidesharecdn.com/hadoopsummittokyo2016-161028021146/85/Real-World-Machine-Learning-Leverage-the-Features-of-MapR-Converged-Data-Platform-23-320.jpg)
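The `pwd` output suggests that the cluster is reachable through NFS as an ordinary directory tree, with `hadoopsummit` appearing as the cluster name under `/mapr`. A minimal sketch of what that enables, assuming the cluster is mounted under `/mapr` on the machine running the code: plain Python file I/O, with no Hadoop client library, can write a training file and read it back. `cluster_path`, `write_training_csv`, `read_training_csv`, and the `mount_root` parameter are hypothetical names chosen for illustration; `mount_root` exists only so the sketch can also be run against a local directory.

```python
import csv
from pathlib import Path


def cluster_path(cluster: str, relative: str, mount_root: str = "/mapr") -> Path:
    """Build the POSIX path of a file inside an NFS-mounted cluster.

    Hypothetical helper: assumes the layout <mount_root>/<cluster>/<relative>,
    as in /mapr/hadoopsummit/user/mapr on the slides.
    """
    # Strip a leading "/" so the relative part never replaces the mount root.
    return Path(mount_root) / cluster / relative.lstrip("/")


def write_training_csv(path: Path, rows: list[tuple[float, float, int]]) -> None:
    """Write feature rows with ordinary file I/O (no Hadoop client involved)."""
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["x1", "x2", "label"])
        writer.writerows(rows)


def read_training_csv(path: Path) -> list[dict[str, str]]:
    """Read the rows back as dictionaries keyed by the header names."""
    with path.open(newline="") as f:
        return list(csv.DictReader(f))


if __name__ == "__main__":
    # Only builds and prints the path; nothing is written to the cluster here.
    print(cluster_path("hadoopsummit", "user/mapr/train.csv"))
```

Because the mounted path is just a directory, the same file could in principle be opened by other POSIX tools on the client as well; the sketch deliberately avoids any MapR-specific API.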

Snapshots (slide 34)

![Snapshots (slide 34)](https://image.slidesharecdn.com/hadoopsummittokyo2016-161028021146/85/Real-World-Machine-Learning-Leverage-the-Features-of-MapR-Converged-Data-Platform-34-320.jpg)

On the slide, `[... mateusz]$ cd .snapshot` is followed by `[... .snapshot]$ ll`, which prints `total 1` and a single entry: `drwxr-xr-x. 2 mapr mapr 1 Oct 14 10:56 mateusz.snap1`.
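The listing shows snapshots exposed as subdirectories of a `.snapshot` directory (here `mateusz.snap1`), so an earlier state of the data can be browsed with ordinary tools. A minimal sketch, assuming that layout (`<volume directory>/.snapshot/<snapshot name>/...`): it lists the available snapshots and reads a file as it was when a given snapshot was taken, which is one way to refer back to the exact data a model was trained on. `list_snapshots`, `snapshot_file`, and `read_from_snapshot` are hypothetical helpers; the sketch only reads, and does not create snapshots.

```python
from pathlib import Path


def list_snapshots(volume_dir: str) -> list[str]:
    """Return the snapshot names found under <volume_dir>/.snapshot, sorted.

    Assumes the layout shown on the slide; returns [] if the directory is absent.
    """
    snap_root = Path(volume_dir) / ".snapshot"
    if not snap_root.is_dir():
        return []
    return sorted(p.name for p in snap_root.iterdir() if p.is_dir())


def snapshot_file(volume_dir: str, snapshot: str, relative: str) -> Path:
    """Path of `relative` as it existed in the named snapshot."""
    return Path(volume_dir) / ".snapshot" / snapshot / relative


def read_from_snapshot(volume_dir: str, snapshot: str, relative: str) -> str:
    """Read a file's contents from a snapshot rather than the live volume."""
    return snapshot_file(volume_dir, snapshot, relative).read_text()
```

For example, `read_from_snapshot("/mapr/hadoopsummit/user/mateusz", "mateusz.snap1", "train.csv")` would read the snapshot copy of a hypothetical `train.csv`, even if the live file has since changed.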


More Related Content

What's hot (20)

Optimal Execution Of MapReduce Jobs In Cloud - Voices 2015

Optimal Execution Of MapReduce Jobs In Cloud - Voices 2015Deanna Kosaraju Optimal Execution Of MapReduce Jobs In Cloud

Anshul Aggarwal, Software Engineer, Cisco Systems

Session Length: 1 Hour

Tue March 10 21:30 PST

Wed March 11 0:30 EST

Wed March 11 4:30:00 UTC

Wed March 11 10:00 IST

Wed March 11 15:30 Sydney

Voices 2015 www.globaltechwomen.com

We use MapReduce programming paradigm because it lends itself well to most data-intensive analytics jobs run on cloud these days, given its ability to scale-out and leverage several machines to parallel process data. Research has demonstrates that existing approaches to provisioning other applications in the cloud are not immediately relevant to MapReduce -based applications. Provisioning a MapReduce job entails requesting optimum number of resource sets (RS) and configuring MapReduce parameters such that each resource set is maximally utilized.

Each application has a different bottleneck resource (CPU :Disk :Network), and different bottleneck resource utilization, and thus needs to pick a different combination of these parameters based on the job profile such that the bottleneck resource is maximally utilized.

The problem at hand is thus defining a resource provisioning framework for MapReduce jobs running in a cloud keeping in mind performance goals such as Optimal resource utilization with Minimum incurred cost, Lower execution time, Energy Awareness, Automatic handling of node failure and Highly scalable solution.

Enterprise Scale Topological Data Analysis Using Spark

Enterprise Scale Topological Data Analysis Using SparkAlpine Data This document discusses scaling topological data analysis (TDA) using the Mapper algorithm to analyze large datasets. It describes how the authors built the first open-source scalable implementation of Mapper called Betti Mapper using Spark. Betti Mapper uses locality-sensitive hashing to bin data points and compute topological summaries on prototype points to achieve an 8-11x performance improvement over a naive Spark implementation. The key aspects of Betti Mapper that enable scaling to enterprise datasets are locality-sensitive hashing for sampling and using prototype points to reduce the distance matrix computation.

Large Scale Math with Hadoop MapReduce

Large Scale Math with Hadoop MapReduceHortonworks The document discusses using Hadoop MapReduce for large scale mathematical computations. It introduces integer multiplication algorithms like FFT, MapReduce-FFT, MapReduce-Sum and MapReduce-SSA. These algorithms can be used to solve computationally intensive problems like integer factoring, PDE solving, computing the Riemann zeta function, and calculating pi to high precision. The document focuses on integer multiplication as it is a prerequisite for many applications and explores FFT-based algorithms and the Schonhage-Strassen algorithm in particular.

Openstack

OpenstackRAKESH SHARMA The document discusses the potential for OpenStack to be the future of cloud computing. It describes how OpenStack provides an operating system for hybrid clouds that can augment and replace proprietary infrastructure software. The timing is optimal for OpenStack to accelerate the shift to cloud computing as enterprises look to adopt cloud solutions and ensure new applications can access corporate data and systems. OpenStack is an open source project that could emerge as the standard approach and prevent vendor lock-in.

Extending Hadoop for Fun & Profit

Extending Hadoop for Fun & ProfitMilind Bhandarkar Apache Hadoop project, and the Hadoop ecosystem has been designed be extremely flexible, and extensible. HDFS, Yarn, and MapReduce combined have more that 1000 configuration parameters that allow users to tune performance of Hadoop applications, and more importantly, extend Hadoop with application-specific functionality, without having to modify any of the core Hadoop code.

In this talk, I will start with simple extensions, such as writing a new InputFormat to efficiently process video files. I will provide with some extensions that boost application performance, such as optimized compression codecs, and pluggable shuffle implementations. With refactoring of MapReduce framework, and emergence of YARN, as a generic resource manager for Hadoop, one can extend Hadoop further by implementing new computation paradigms.

I will discuss one such computation framework, that allows Message Passing applications to run in the Hadoop cluster alongside MapReduce. I will conclude by outlining some of our ongoing work, that extends HDFS, by removing namespace limitations of the current Namenode implementation.

Introduction to Spark on Hadoop

Introduction to Spark on HadoopCarol McDonald This document provides an overview of Apache Spark, including:

- A refresher on MapReduce and its processing model

- An introduction to Spark, describing how it differs from MapReduce in addressing some of MapReduce's limitations

- Examples of how Spark can be used, including for iterative algorithms and interactive queries

- Resources for free online training in Hadoop, MapReduce, Hive and using HBase with MapReduce and Hive

Hadoop_Its_Not_Just_Internal_Storage_V14

Hadoop_Its_Not_Just_Internal_Storage_V14John Sing This document provides an overview of Hadoop storage perspectives from different stakeholders. The Hadoop application team prefers direct attached storage for performance reasons, as Hadoop was designed for affordable internet-scale analytics where data locality is important. However, IT operations has valid concerns about reliability, manageability, utilization, and integration with other systems when data is stored on direct attached storage instead of shared storage. There are tradeoffs to both approaches that depend on factors like the infrastructure, workload characteristics, and priorities of the organization.

Scaling hadoopapplications

Scaling hadoopapplicationsMilind Bhandarkar The document discusses best practices for scaling Hadoop applications. It covers causes of sublinear scalability like sequential bottlenecks, load imbalance, over-partitioning, and synchronization issues. It also provides equations for analyzing scalability and discusses techniques like reducing algorithmic overheads, increasing task granularity, and using compression. The document recommends using higher-level languages, tuning configuration parameters, and minimizing remote procedure calls to improve scalability.

Big Data Performance and Capacity Management

Big Data Performance and Capacity Managementrightsize A short introduction to Big Data; what it is and what are the implications for performance and capacity management.

Yahoo's Experience Running Pig on Tez at Scale

Yahoo's Experience Running Pig on Tez at ScaleDataWorks Summit/Hadoop Summit Yahoo migrated most of its Pig workload from MapReduce to Tez to achieve significant performance improvements and resource utilization gains. Some key challenges in the migration included addressing misconfigurations, bad programming practices, and behavioral changes between the frameworks. Yahoo was able to run very large and complex Pig on Tez jobs involving hundreds of vertices and terabytes of data smoothly at scale. Further optimizations are still needed around speculative execution and container reuse to improve utilization even more. The migration to Tez resulted in up to 30% reduction in runtime, memory, and CPU usage for Yahoo's Pig workload.

Hadoop, MapReduce and R = RHadoop

Hadoop, MapReduce and R = RHadoopVictoria López The document discusses linking the statistical programming language R with the Hadoop platform for big data analysis. It introduces Hadoop and its components like HDFS and MapReduce. It describes three ways to link R and Hadoop: RHIPE which performs distributed and parallel analysis, RHadoop which provides HDFS and MapReduce interfaces, and Hadoop streaming which allows R scripts to be used as Mappers and Reducers. The goal is to use these methods to analyze large datasets with R functions on Hadoop clusters.

Boston hug-2012-07

Boston hug-2012-07Ted Dunning Describes the state of Apache Mahout with special focus on the upcoming k-nearest neighbor and k-means clustering algorithms.

Goto amsterdam-2013-skinned

Goto amsterdam-2013-skinnedTed Dunning The document discusses how different technologies like Hadoop, Storm, Solr, and D3 can be integrated together using common storage platforms. It provides examples of how real-time and batch processing can be combined for applications like search and recommendations. The document advocates that hybrid systems integrating these technologies can provide benefits over traditional tiered architectures and be implemented today.

Anomaly Detection in Telecom with Spark - Tugdual Grall - Codemotion Amsterda...

Anomaly Detection in Telecom with Spark - Tugdual Grall - Codemotion Amsterda...Codemotion Telecom operators need to find operational anomalies in their networks very quickly. This need, however, is shared with many other industries as well so there are lessons for all of us here. Spark plus a streaming architecture can solve these problems very nicely. I will present both a practical architecture as well as design patterns and some detailed algorithms for detecting anomalies in event streams. These algorithms are simple but quite general and can be applied across a wide variety of situations.

Big Data and Cloud Computing

Big Data and Cloud ComputingFarzad Nozarian This document discusses cloud and big data technologies. It provides an overview of Hadoop and its ecosystem, which includes components like HDFS, MapReduce, HBase, Zookeeper, Pig and Hive. It also describes how data is stored in HDFS and HBase, and how MapReduce can be used for parallel processing across large datasets. Finally, it gives examples of using MapReduce to implement algorithms for word counting, building inverted indexes and performing joins.

Future of Data Intensive Applications

By Milind Bhandarkar. "Big Data" is a much-hyped term in business computing nowadays. However, the core concept of collaborative environments conducting experiments over large shared data repositories has existed for decades. In this talk, I will outline how recent advances in cloud computing, big data processing frameworks, and agile application development platforms enable data-intensive cloud applications. I will provide a brief history of efforts to build scalable and adaptive runtime environments, and describe the role these runtime systems will play in new cloud applications. I will present a vision for cloud platforms for science, where data-intensive frameworks such as Apache Hadoop will play a key role.

Drill lightning-london-big-data-10-01-2012

By Ted Dunning. Apache Drill is an open-source engine for interactive analysis of large-scale datasets. It was inspired by Google's Dremel, which allows interactive querying of trillions of records at high speed. Drill uses a SQL-like language called DrQL to query nested data in a columnar manner. It has a flexible architecture that allows pluggable query languages, execution engines, data formats, and data sources. Drill aims to be fast, flexible, dependable, and easy to use for interactive analysis of big data.

Neo4j vs giraph

By Nishant Gandhi. This document surveys and compares three large-scale graph processing platforms: Apache Giraph, Hadoop MapReduce, and Neo4j. It analyzes their programming models and their performance based on previous studies. Hadoop was found to have the worst performance for graph algorithms because it lacks graph-specific optimizations. Giraph was generally the fastest platform thanks to its in-memory computation and message-passing model. Neo4j performed well for small graphs because of its caching, but did not scale as well as the distributed platforms for large graphs. The document concludes that distributed graph-specific platforms like Giraph outperform generic platforms for most graph problems.

Hadoop 2 - More than MapReduce

By Uwe Printz. This talk gives an introduction to Hadoop 2 and YARN. It then explains the changes in MapReduce 2. Finally, it explains Tez and Spark and compares them in detail.

The talk was given at the Parallel 2014 conference in Karlsruhe, Germany, on 06.05.2014.

Agenda:

- Introduction to Hadoop 2

- MapReduce 2

- Tez, Hive & Stinger Initiative

- Spark

2. hadoop fundamentals

By Lokesh Ramaswamy. Hadoop is an open-source framework for distributed storage and processing of large datasets across clusters of commodity hardware. It is based on Google's MapReduce programming model and on the design of the Google File System. The Hadoop architecture includes a distributed file system (HDFS) that stores data across the cluster and a job scheduling and resource management framework (YARN) that allows large datasets to be processed in parallel. Key components include the NameNode, DataNodes, ResourceManager, and NodeManagers. Hadoop provides reliability through replication of data blocks and automatic recovery from failures.

Viewers also liked (20)

NYC Hadoop Meetup - MapR, Architecture, Philosophy and Applications

By Jason Shao. Slides from: http://www.meetup.com/Hadoop-NYC/events/34411232/

There are a number of assumptions that come with using standard Hadoop that are based on Hadoop's initial architecture. Many of these assumptions can be relaxed with more advanced architectures such as those provided by MapR. These changes in assumptions have ripple effects throughout the system architecture. This is significant because many systems like Mahout provide multiple implementations of various algorithms with very different performance and scaling implications.

I will describe several case studies and use these examples to show how these changes can simplify systems or, in some cases, make certain classes of programs run an order of magnitude faster.

About the speaker: Ted Dunning - Chief Application Architect (MapR)

Ted has held Chief Scientist positions at Veoh Networks, ID Analytics, and MusicMatch (now Yahoo Music). Ted was responsible for building the most advanced identity theft detection system on the planet, as well as one of the largest peer-assisted video distribution systems and ground-breaking music and video recommendation systems. Ted has 15 issued and 15 pending patents and contributes to several Apache open source projects, including Hadoop, ZooKeeper, and HBase. He is also a committer for Apache Mahout. Ted earned a BS in electrical engineering from the University of Colorado, an MS in computer science from New Mexico State University, and a Ph.D. in computing science from Sheffield University in the United Kingdom. Ted also bought the drinks at one of the very first Hadoop User Group meetings.

Which data should you move to Hadoop?

By Attunity. This document discusses trends driving enterprises to move cold data to Hadoop and to optimize their data warehouses. It outlines two trends: 1) collecting more customer data enables competitive advantages, and 2) big data is overwhelming traditional systems. It also discusses two realities: 1) Hadoop can relieve pressure on enterprise systems by handling data staging, archiving, and analytics, and 2) architecture matters for production success, with requirements like performance, security, and integration. The document promotes MapR and Attunity solutions for data warehouse optimization on Hadoop through real-time data movement, workload analysis, and incremental implementation.

MapR and Cisco Make IT Better

By MapR Technologies. You’re not the only one still loading your data into data warehouses and building marts or cubes out of it. But today’s data requires a much more accessible environment that delivers real-time results. Prepare for this transformation, because your data platform and storage choices are about to undergo a re-platforming that happens once every 30 years.

With the MapR Converged Data Platform (CDP) and Cisco Unified Computing System (UCS), you can optimize today’s infrastructure and grow to take advantage of what’s next. Uncover the range of possibilities that re-platforming opens up by understanding your options for density, performance, functionality, and more.

Philly DB MapR Overview

By MapR Technologies. The document discusses MapR's distribution for Apache Hadoop. It is an enterprise-grade, open source distribution that leverages open source components and makes targeted enhancements to make Hadoop more open and enterprise-ready. Key features include integration with other big data technologies like Accumulo, high availability, easy management at scale, and a storage architecture based on volumes to logically organize data and manage its placement and policies across a Hadoop cluster.

Hands on MapR -- Viadea

By viadea. The document discusses MapR cluster management using the MapR CLI. It provides examples of starting and stopping a MapR cluster and of managing nodes, volumes, mirrors, and schedules. Specific examples include creating volumes, linking mirrors to volumes, syncing mirrors, moving volumes and nodes to different topologies, and creating schedules to automate tasks.

Seattle Scalability Meetup - Ted Dunning - MapR

By clive boulton. MapR is an amazing new distributed filesystem modeled after Hadoop. It maintains API compatibility with Hadoop, but far exceeds it in performance, manageability, and more.

/* Ted's MapR meeting slides incorporated here */

SQL-on-Hadoop with Apache Drill

By MapR Technologies. The open source project Apache Drill gives you SQL-on-Hadoop, but with some big differences. The biggest difference is that Drill extends ANSI SQL from a strongly typed language into one that also supports late binding, without losing performance. This allows Drill to process complex structured data like JSON in addition to relational data. By dynamically generating a schema at read time that matches the data types and structures observed in the data, Drill gives you both self-service agility and speed.

Drill also introduces a view-based security model that uses file system permissions to control access to data at an extremely fine-grained level that makes secure access easy to control. These extensions have huge practical impact when it comes to writing real applications.

In these slides, Tugdual Grall, Technical Evangelist at MapR, gives several practical examples of how Drill makes it easy to analyze data, using SQL in your Java application with a simple JDBC driver.

MapR M7: Providing an enterprise quality Apache HBase API

By mcsrivas. The document provides an overview of MapR M7, an integrated system for structured and unstructured data. M7 combines aspects of LSM trees and B-trees to provide faster reads and writes than Apache HBase. It achieves instant recovery from failures through micro write-ahead logs and parallel region recovery. Benchmark results show MapR M7 performing 5-11x faster than HBase for common operations like reads, updates, and scans.

Map r hadoop-security-mar2014 (2)

By MapR Technologies. This document discusses securing Hadoop with MapR. It begins by explaining why Hadoop security is now important, given the sensitive data Hadoop handles. It then outlines weaknesses in typical Hadoop deployments. The rest of the document details how MapR secures Hadoop, including wire-level authentication and encryption, authorization integration, and security for ecosystem components like Hive and Oozie without requiring Kerberos.

Hadoop and Your Enterprise Data Warehouse

By Edgar Alejandro Villegas. Presentation slides by MapR and DBTA (Database Trends and Applications).

Design Patterns for working with Fast Data in Kafka

By Ian Downard. Apache Kafka is an open-source message broker project that provides a platform for storing and processing real-time data feeds. In this presentation, Ian Downard describes the concepts that are important to understand in order to use the Kafka API effectively. He describes how to prepare a development environment from scratch, how to write a basic publish/subscribe application, and how to run it on a variety of cluster types: simple single-node clusters, multi-node clusters using Heroku’s “Kafka as a Service”, and enterprise-grade multi-node clusters using MapR’s Converged Data Platform.

Video: https://vimeo.com/188045894

Ian also discusses strategies for working with "fast data" and how to maximize the throughput of your Kafka pipeline. He describes which Kafka configurations and data types have the largest impact on performance, and provides some useful JUnit tests, combined with statistical analysis in R, that can help quantify how various configurations affect throughput.

Big Data Journey

By Tugdual Grall. A general presentation about Big Data architecture and components, delivered by David Pilato and Tugdual Grall at JUG Summer Camp 2015 in La Rochelle, France.

Why Elastic? @ 50th Vinitaly 2016

By Christoph Wurm. Why do companies as different as Wikipedia, Netflix, USAA and NASA use the Elastic stack? And why should you, too?

Elastic v5.0.0 Update uptoalpha3 v0.2 - 김종민

By NAVER D2. The document summarizes the new features and improvements in Elastic Stack v5.0.0, including updates to Kibana, Elasticsearch, Logstash, and Beats. Key highlights include a redesigned Kibana interface, improved indexing performance in Elasticsearch, easier plugin development in Logstash, new data shippers and filtering capabilities in Beats, and expanded subscription support offerings. The Elastic Stack aims to help users build distributed applications and solve real problems through its integrated search, analytics, and data pipeline capabilities.

Using Familiar BI Tools and Hadoop to Analyze Enterprise Networks

By MapR Technologies. This document discusses using Apache Drill and business intelligence (BI) tools to analyze network data stored in Hadoop. It provides examples of querying network packet captures, OpenStack data, and TCP metrics using SQL with tools like Tableau and SAP Lumira. The key benefits are interacting with diverse network data sources, like JSON and CSV files, without preprocessing, and gaining insights by combining network data with other data sources in the BI tools.

Understanding Metadata: Why it's essential to your big data solution and how ...

By Zaloni. This document discusses the importance of metadata for big data solutions and data lakes. It begins by introducing the two speakers, Ben Sharma and Vikram Sreekanti. It then discusses how metadata allows you to track data in the data lake and improves change management and data visibility. The document presents considerations for metadata, such as integration with enterprise solutions and automated registration. It provides examples of using metadata for data lineage, quality, and cataloging. Finally, it discusses using metadata across storage tiers for data lifecycle management and for providing elastic compute resources.

MapR-DB Elasticsearch Integration

By MapR Technologies. This document discusses MapR's integration with Elasticsearch. It introduces MapR-DB, a scalable NoSQL database, and describes how MapR replicates data from MapR-DB tables to Elasticsearch in near real time. The replication architecture uses gateway nodes to stream data changes from MapR-DB to Elasticsearch. It also covers data type conversions and future extensions, such as supporting additional external sinks like Spark Streaming.

Handling the Extremes: Scaling and Streaming in Finance

By MapR Technologies. This document discusses how streaming platforms can handle large volumes of data for financial applications. It provides examples of messaging platforms and of use cases such as fraud detection and email filtering. The key benefits discussed are the ability to scale applications horizontally, replicate data across clusters, and index data dynamically for different consumers.

IoT and Big Data - Iot Asia 2014

By John Berns. This document discusses big data and the Internet of Things (IoT). It states that while IoT data can be big data, big data strategies and technologies apply regardless of data source or industry. It defines big data as data whose size becomes problematic to store, move, extract, analyze, etc. using traditional methods. It recommends distributing and parallelizing data using approaches like Hadoop, and discusses how technologies like SQL on Hadoop, Pig, Spark, HBase, queues, stream processing, and complex architectures can be used to handle IoT and other big data.

Spark SQL versus Apache Drill: Different Tools with Different Rules

By DataWorks Summit/Hadoop Summit. Ted Dunning presents information on Drill and Spark SQL. Drill is a query engine that operates on batches of rows in a pipelined and optimistic manner, while Spark SQL provides SQL capabilities on top of Spark's RDD abstraction. The document discusses the key differences in their approaches to optimization, execution, and security. It also explores opportunities for unification by allowing Drill and Spark to work together on the same data.


Similar to Real-World Machine Learning - Leverage the Features of MapR Converged Data Platform (20)

Real-World Machine Learning - Leverage the Features of MapR Converged Data Pl...

By DataWorks Summit/Hadoop Summit. This document discusses using the MapR Converged Data Platform for machine learning projects. It describes MapR features such as the MapR filesystem, snapshots, mirrors, and topologies that support the different phases of machine learning: data collection, preparation, modeling, evaluation, and deployment. The document also outlines how MapR can help manage machine learning projects at scale in an enterprise environment and how it integrates with common ML tools. It concludes with a demo of running H2O on MapR to show these features in action.

CEP - simplified streaming architecture - Strata Singapore 2016

By Mathieu Dumoulin. We describe an application of CEP using a microservice-based streaming architecture. We use the Drools business rule engine to apply rules in real time to an event stream of IoT traffic sensor data.

Real World Use Cases: Hadoop and NoSQL in Production

By Codemotion. "Real World Use Cases: Hadoop and NoSQL in Production" by Tugdual Grall.

What’s important about a technology is what you can use it to do. I’ve looked at what a number of groups are doing with Apache Hadoop and NoSQL in production, and I will relay what worked well for them and what did not. Drawing on real-world use cases, I show how people who understand these new approaches can employ them well alongside traditional approaches and existing applications. Threat detection, data warehouse optimization, marketing efficiency, and a biometric database are some of the examples presented.

MapR and Machine Learning Primer

By Mathieu Dumoulin. MapR is an ideal scalable platform for data science, and specifically for operationalizing machine learning in the enterprise. This presentation gives specific reasons why.

Real-time Hadoop: The Ideal Messaging System for Hadoop

By DataWorks Summit/Hadoop Summit. Ted Dunning presents on streaming architectures and MapR Technologies' streaming capabilities. He discusses MapR Streams, which implements the Kafka API with high performance and scale. MapR provides a converged data platform with files, tables, and streams managed under common security and permissions. Dunning reviews several use cases and lessons learned around real-time data processing, microservices, and global data management requirements.

Streaming in the Extreme

By Julius Remigio, CBIP.

Jim Scott, Director, Enterprise Strategy & Architecture, MapR

Have you ever heard of Kafka? Are you ready to start streaming all of the events in your business? What happens to your streaming solution when you outgrow your single data center? What happens when you are at a company that already runs multiple data centers and you need to implement streaming across them? What about when you need to scale to a trillion events per day? I will discuss technologies like Kafka that can be used to achieve real-time, lossless messaging that works both in a single data center and across multiple globally dispersed data centers. I will also describe how to handle the data coming in through these streams in both batch and real-time processes.

Video Presentation:

https://youtu.be/Y0vxLgB1u9o

Back to School - St. Louis Hadoop Meetup September 2016

By Adam Doyle. These are the slides from Matt Miller's presentation on 10 of the most useful tools in the Hadoop ecosystem.

Distributed Deep Learning on Spark

By Mathieu Dumoulin. This document provides an overview of distributed deep learning on Spark. It begins with a brief introduction to machine learning and deep learning. It then discusses why deep learning needs distributed systems, given its computational intensity. Spark is identified as a framework that can be used to build distributed deep learning systems. Two examples are described: SparkNet, developed at UC Berkeley, and CaffeOnSpark, developed at Yahoo. Both implement distributed stochastic gradient descent using a parameter server approach. The document concludes with demonstrations of Caffe and CaffeOnSpark.

MapR 5.2: Getting More Value from the MapR Converged Community Edition

By MapR Technologies. Please join us to learn about the developments of the past year in the MapR Community Edition. In these slides, we will cover the following platform updates:

- Taking cluster monitoring to the next level with the Spyglass Initiative

- Real-time streaming with MapR Streams

- MapR-DB JSON document database and application development with OJAI

- Securing your data with access control expressions (ACEs)

Big Data Everywhere Chicago: Getting Real with the MapR Platform (MapR)

By BigDataEverywhere. Jim Scott, Director of Enterprise Strategy, MapR; Cofounder, CHUG

In this talk, we will look back at the short history of Hadoop, along with the trials and tribulations that have come with this ground-breaking technology. We will explore why enterprises need to look more deeply at their wants and needs, and further into the future, to prepare for where they are going.

Is Spark Replacing Hadoop

By MapR Technologies. Spark may be replacing MapReduce as the primary execution framework for Hadoop, though Hadoop will likely continue embracing new frameworks. Spark code is easier to write, and Spark is faster for iterative algorithms. However, not all applications are faster in Spark, and it may have limitations. Hadoop also supports many other frameworks and is about more than just MapReduce, including storage, resource management, and a growing ecosystem of tools.

Streaming Architecture to Connect Everything (Including Hybrid Cloud) - Strat...

By Mathieu Dumoulin. This document summarizes a talk given by Mathieu Dumoulin of MapR Technologies about architecting hybrid cloud applications using streaming messaging systems. The talk discusses using streaming architectures to connect systems in hybrid clouds, with public and private clouds connected by streaming. It also discusses using streaming for IoT and microservices, and highlights Kafka and Spark Streaming/Flink as streaming technologies. Examples of log analysis architectures spanning hybrid clouds are presented.

MapR 5.2: Getting More Value from the MapR Converged Data Platform

By MapR Technologies. End of maintenance for MapR 4.x is coming in January, so now is a good time to plan your upgrade. Please join us to learn about the developments of the past year in the MapR Platform that will make this year's upgrade effort worthwhile.

How Spark is Enabling the New Wave of Converged Cloud Applications

By MapR Technologies. Apache Spark has become the de facto compute engine for data engineers, developers, and data scientists because it can run multiple analytic workloads with a single, general-purpose compute engine.

But is Spark alone sufficient for developing cloud-based big data applications? What are the other required components for supporting big data cloud processing? How can you accelerate the development of applications which extend across Spark and other frameworks such as Kafka, Hadoop, NoSQL databases, and more?

MapR Unique features

By Vishwas Tengse. MapR is a distribution of Apache Hadoop that includes over a dozen projects, such as HBase, Hive, Pig, and Spark. It provides big data capabilities and upgrades its bundled projects within 90 days of their release. MapR also contributes to open source. Key benefits include high availability without special configuration, superior performance that reduces costs, and data protection through snapshots. It also supports real-time applications, security, and multi-tenancy, with assistance available from MapR data scientists and engineers.

Analyzing Real-World Data with Apache Drill

By Tomer Shiran. The document describes a demo of analyzing real-world data using Apache Drill. The demo involves running Drill, configuring storage plugins for HDFS and MongoDB, and exploring sample Yelp data, including reviews, users, and businesses, stored in JSON files and MongoDB collections. SQL queries are run against this data to analyze both basic and complex data structures.

Predictive Maintenance Using Recurrent Neural Networks

By Justin Brandenburg. This document discusses using recurrent neural networks for predictive maintenance. It begins by providing context on Industry 4.0 and the growth of industrial automation. It then discusses predictive maintenance and how sensor data from industrial equipment can be used for failure prediction. The document outlines how a recurrent neural network model could be developed from streaming sensor data of manufacturing devices to identify abnormal behavior and predict needed maintenance. It describes the workflow of importing and preparing the data, developing and testing the model, and deploying it to generate alerts from new streaming data.

Keys for Success from Streams to Queries

By DataWorks Summit/Hadoop Summit. Ted Dunning is the Chief Applications Architect at MapR Technologies and a committer for Apache Drill, ZooKeeper, and other projects. The document discusses goals around real-time or near-real-time processing and microservices. It describes how to design microservices for isolation using self-describing data, private databases, and shared storage only where necessary. Scenarios involving fraud detection, IoT data aggregation, and global data recovery are presented. Lessons focus on decoupling services, propagating events rather than table updates, and how data architecture should reflect business structure.

Streaming Goes Mainstream: New Architecture & Emerging Technologies for Strea...

By MapR Technologies. This document summarizes Ellen Friedman's presentation on streaming data and architectures. The key points are:

1) Streaming data is becoming mainstream as technologies for distributed storage and stream processing mature. Real-time insights from streaming data provide more value than static batch analysis.

2) MapR Streams is part of MapR's converged data platform for message transport and can support use cases like microservices with its distributed, durable messaging capabilities.

3) Apache Flink is a popular open source stream processing framework that provides accurate, low-latency processing of streaming data through features like windowing, event-time semantics, and state management.

Converged and Containerized Distributed Deep Learning With TensorFlow and Kub...

By Mathieu Dumoulin. Docker containers running on Kubernetes, combined with the MapR Converged Data Platform, can potentially give any company the kind of sophisticated data infrastructure that enables teams to engage in transformative machine learning and deep learning for production use at scale.


More from Mathieu Dumoulin (6)

State of the Art Robot Predictive Maintenance with Real-time Sensor Data

By Mathieu Dumoulin. Our Strata Beijing 2017 presentation slides, showing how to use data from a movement sensor in real time to perform anomaly detection at scale using standard enterprise big data software.

Real world machine learning with Java for Fumankaitori.com

By Mathieu Dumoulin. This document summarizes a presentation about using machine learning in Java 8 at Fumankaitori.com. The presentation introduces the speaker and his company, which collects posts about user dissatisfaction and rewards users with points that can be exchanged for coupons. The goal was to automate point assignment for posts using machine learning instead of manual rules. The team trained an XGBoost model in DataRobot that met the goal of predicting points within 5 of the human labels. For production, they achieved similar performance by using H2O to train a gradient boosting machine model and generate a prediction POJO for low-latency predictions. The presentation emphasizes that machine learning is possible for any Java engineer and that Java 8 features like streams make it a good choice for real

MapReduce: Simplified Large-Scale Distributed Data Processing

By Mathieu Dumoulin. A presentation covering the main points of Dean and Ghemawat's seminal 2004 paper on MapReduce, "MapReduce: simplified data processing on large clusters".

Presentation Hadoop Québec

By Mathieu Dumoulin. An introduction to Hadoop and the Hadoop ecosystem, with background context and an overview of the fundamental concepts.

Recently uploaded (20)

Exceptional Behaviors: How Frequently Are They Tested? (AST 2025)

By Andre Hora. Exceptions allow developers to handle error cases expected to occur infrequently. Ideally, good test suites should test both normal and exceptional behaviors to catch more bugs and avoid regressions. While current research analyzes exceptions that propagate to tests, it does not explore other exceptions that do not reach the tests. In this paper, we provide an empirical study to explore how frequently exceptional behaviors are tested in real-world systems. We consider both exceptions that propagate to tests and those that do not reach the tests. For this purpose, we run an instrumented version of test suites, monitor their execution, and collect information about the exceptions raised at runtime. We analyze the test suites of 25 Python systems, covering 5,372 executed methods, 17.9M calls, and 1.4M raised exceptions. We find that 21.4% of the executed methods do raise exceptions at runtime. In methods that raise exceptions, on the median, 1 in 10 calls exercises exceptional behavior. Close to 80% of the methods that raise exceptions do so infrequently, but about 20% raise exceptions more frequently. Finally, we provide implications for researchers and practitioners. We suggest developing novel tools to support exercising exceptional behaviors and refactoring expensive try/except blocks. We also call attention to the fact that exception-raising behaviors are not necessarily “abnormal” or rare.

Landscape of Requirements Engineering for/by AI through Literature Review

Hironori Washizaki, "Landscape of Requirements Engineering for/by AI through Literature Review," RAISE 2025: Workshop on Requirements engineering for AI-powered SoftwarE, 2025.

Why Orangescrum Is a Game Changer for Construction Companies in 2025

By Orangescrum. Orangescrum revolutionizes construction project management in 2025 with real-time collaboration, resource planning, task tracking, and workflow automation, boosting efficiency, transparency, and on-time project delivery.

Exploring Wayland: A Modern Display Server for the Future

By ICS. Wayland is revolutionizing the way we interact with graphical interfaces, offering a modern alternative to the X Window System. In this webinar, we’ll delve into the architecture and benefits of Wayland, including its streamlined design, enhanced performance, and improved security features.

How to Optimize Your AWS Environment for Improved Cloud Performance

By ThousandEyes.

Mastering Fluent Bit: Ultimate Guide to Integrating Telemetry Pipelines with ...

By Eric D. Schabell. It's time you stopped letting your telemetry data pressure your budgets and get in the way of solving issues with agility! No more, I say! Take back control of your telemetry data as we guide you through the open source project Fluent Bit. Learn how to manage your telemetry data from source to destination using the pipeline phases covering collection, parsing, aggregation, transformation, and forwarding from any source to any destination. Buckle up for a fun ride as you learn by exploring how telemetry pipelines work, how to set up your first pipeline, and several common use cases that Fluent Bit helps solve. All this is backed by a self-paced, hands-on workshop that attendees can pursue at home after this session (https://o11y-workshops.gitlab.io/workshop-fluentbit).

Not So Common Memory Leaks in Java Webinar

By Tier1 app. This SlideShare presentation is from our May webinar, “Not So Common Memory Leaks & How to Fix Them?”, where we explored lesser-known memory leak patterns in Java applications. Unlike typical leaks, subtle issues such as thread-local misuse, inner class references, uncached collections, and misbehaving frameworks often go undetected and gradually degrade performance. This deck provides in-depth insights into identifying these hidden leaks using advanced heap analysis and profiling techniques, along with real-world case studies and practical solutions. It is ideal for developers and performance engineers aiming to deepen their understanding of Java memory management and improve application stability.

Adobe Marketo Engage Champion Deep Dive - SFDC CRM Synch V2 & Usage Dashboards

By BradBedford3. Join Ajay Sarpal and Miray Vu to learn about key Marketo Engage enhancements. Discover improved in-app Salesforce CRM connector statistics for easy monitoring of sync health and throughput. Explore new Salesforce CRM Synch Dashboards providing up-to-date insights into weekly activity usage, thresholds, and limits with drill-down capabilities. Learn about proactive notifications for both Salesforce CRM sync and product usage overages. Get an update on improved Salesforce CRM sync scale and reliability coming in Q2 2025.

Key Takeaways:

Improved Salesforce CRM User Experience: Learn how self-service visibility enhances satisfaction.

Utilize Salesforce CRM Synch Dashboards: Explore real-time weekly activity data.

Monitor Performance Against Limits: See threshold limits for each product level.

Get Usage Over-Limit Alerts: Receive notifications for exceeding thresholds.

Learn About Improved Salesforce CRM Scale: Understand upcoming cloud-based incremental sync.

Scaling GraphRAG: Efficient Knowledge Retrieval for Enterprise AI

By danshalev. If we were building a GenAI stack today, we'd start with one question: can your retrieval system handle multi-hop logic?

Trick question, because most can’t. They treat retrieval as nearest-neighbor search.

Today, we discussed scaling GraphRAG at AWS DevOps Day, and the takeaway is clear: VectorRAG is naive, lacks domain awareness, and can’t handle full dataset retrieval.

GraphRAG builds a knowledge graph from source documents, allowing for a deeper understanding of the data + higher accuracy.

Secure Test Infrastructure: The Backbone of Trustworthy Software Development

By Shubham Joshi. A secure test infrastructure ensures that the testing process doesn’t become a gateway for vulnerabilities. By protecting test environments, data, and access points, organizations can confidently develop and deploy software without compromising user privacy or system integrity.

How Valletta helped healthcare SaaS to transform QA and compliance to grow wi...

By Egor Kaleynik. This case study explores how we partnered with a mid-sized U.S. healthcare SaaS provider to help them scale from a successful pilot phase to supporting over 10,000 users, while meeting strict HIPAA compliance requirements.

Faced with slow, manual testing cycles, frequent regression bugs, and looming audit risks, their growth was at risk. Their existing QA processes couldn’t keep up with the complexity of real-time biometric data handling, and earlier automation attempts had failed due to unreliable tools and fragmented workflows.

We stepped in to deliver a full QA and DevOps transformation. Our team replaced their fragile legacy tests with Testim’s self-healing automation, integrated Postman and OWASP ZAP into Jenkins pipelines for continuous API and security validation, and leveraged AWS Device Farm for real-device, region-specific compliance testing. Custom deployment scripts gave them control over rollouts without relying on heavy CI/CD infrastructure.

The result? Test cycle times were reduced from 3 days to just 8 hours, regression bugs dropped by 40%, and they passed their first HIPAA audit without issue—unlocking faster contract signings and enabling them to expand confidently. More than just a technical upgrade, this project embedded compliance into every phase of development, proving that SaaS providers in regulated industries can scale fast and stay secure.

Who Watches the Watchmen (SciFiDevCon 2025)

By Allon Mureinik. Tests, especially unit tests, are the developers’ superheroes. They allow us to mess around with our code and keep us safe.

We often trust them with the safety of our codebase, but how do we know that we should? How do we know that this trust is well-deserved?