Scalable Machine Learning

- 2. Me: Sam Bessalah, Software Engineer, Freelance. Big Data, Distributed Computing, Machine Learning. Paris Data Geek co-organizer. @samklr, @DataParis

- 3. Machine Learning Land VOWPAL WABBIT

- 4. Some Observations in Big Data Land
     - New use cases push towards faster execution platforms and real-time prediction engines.
     - Traditional MapReduce on Hadoop is fading away, especially for Machine Learning.
     - Apache Spark has become the darling of the Big Data world, thanks to its high-level API and performance.
     - Rise of public Machine Learning APIs that make it easy to integrate models into applications and other data processing workflows.

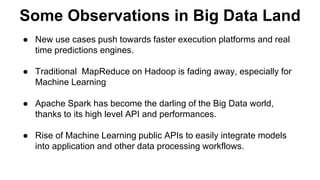

- 5. Apache Mahout
     - Used to be the only machine learning framework on Hadoop MapReduce.
     - Moved from MapReduce towards modern and faster backends.
     - Now provides a fluent DSL that integrates with Scala and Spark.

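- 7. Mahout Example: simple co-occurrence analysis in Mahout

         // Load the interaction matrix as a distributed row matrix (DRM)
         val A = drmFromHDFS("hdfs://nivdul/babygirl.txt")
         // Co-occurrence counts: A^T * A
         val cooccurencesMatrix = A.t %*% A
         // Broadcast the per-item interaction counts to the workers
         val numInteractions = drmBroadcast(A.colSums)
         // Slide pseudocode: sparse, computeLLR, row, col and nbInt are not defined on the slide
         val I = cooccurencesMatrix.mapBlock() { case (keys, block) =>
           var indicatorBlock = sparse(row, col)
           for (r <- block) indicatorBlock = computeLLR(row, nbInt)
           keys -> indicatorBlock
         }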
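To make the `A.t %*% A` step concrete, here is a minimal plain-Scala sketch on a tiny in-memory user-by-item matrix. It has no Mahout dependency: the object name `CooccurrenceSketch`, the `Matrix` alias and the sample data are assumptions for illustration only, and a naive dense product stands in for Mahout's distributed one.

```scala
// Plain-Scala illustration of co-occurrence counting as A^T * A.
// Hypothetical names and data; this is not Mahout's implementation.
object CooccurrenceSketch {
  type Matrix = Vector[Vector[Int]]

  // Naive dense matrix product, fine for a toy example.
  def multiply(a: Matrix, b: Matrix): Matrix = {
    val bCols = b.transpose
    a.map(row => bCols.map(col => row.zip(col).map { case (x, y) => x * y }.sum))
  }

  // Rows of `a` are users, columns are items, 1 means "interacted".
  // Entry (i, j) of the result counts users who touched both item i and item j.
  def cooccurrences(a: Matrix): Matrix = multiply(a.transpose, a)

  def main(args: Array[String]): Unit = {
    val a: Matrix = Vector(
      Vector(1, 1, 0),
      Vector(1, 0, 1),
      Vector(1, 1, 1)
    )
    cooccurrences(a).foreach(row => println(row.mkString(" ")))
  }
}
```

The diagonal of the result holds the number of interactions per item, which is the same quantity the slide obtains from the column sums before broadcasting it.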

- 8. Apache Spark: a dataflow system built on immutable, lazy, in-memory distributed collections, well suited to the iterative and complex transformations found in most Machine Learning algorithms. These in-memory collections are called Resilient Distributed Datasets (RDDs). They provide:
     - Partitioned data
     - High-level operations (map, filter, collect, reduce, zip, join, sample, etc.)
     - No side effects
     - Fault recovery via lineage
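The ideas of laziness and lineage can be illustrated on a single machine. The sketch below is a toy model, not Spark: `LazyData`, `fromSeq` and `LineageSketch` are hypothetical names, and the "lineage" is just a list of step names. It only shows that transformations describe work while an action such as `collect` runs it.

```scala
// Toy model of a lazy, immutable dataset that remembers its lineage.
// Hypothetical class; it only mimics the idea behind RDDs on one machine.
object LineageSketch {
  final class LazyData[A](val lineage: List[String], compute: () => Seq[A]) {
    // Transformations return a new description; nothing is computed yet.
    def map[B](f: A => B): LazyData[B] =
      new LazyData[B](lineage :+ "map", () => compute().map(f))

    def filter(p: A => Boolean): LazyData[A] =
      new LazyData[A](lineage :+ "filter", () => compute().filter(p))

    // Actions trigger the computation by replaying every recorded step.
    def collect(): Seq[A] = compute()
  }

  def fromSeq[A](data: Seq[A]): LazyData[A] =
    new LazyData[A](List("source"), () => data)

  def main(args: Array[String]): Unit = {
    val squaresOfEvens = fromSeq(1 to 10).filter(_ % 2 == 0).map(x => x * x)
    println(squaresOfEvens.lineage.mkString(" -> "))
    println(squaresOfEvens.collect().mkString(", "))
  }
}
```

Because each value keeps the recipe that produced it, a lost result can be recomputed from the source by replaying the steps, which is the intuition behind fault recovery via lineage.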

- 9. Some operations on RDDs

- 10. Spark Ecosystem

- 11. MLlib: the Machine Learning library within Spark
      - Provides an integrated predictive and data analysis workflow
      - Broad collection of algorithms and applications
      - Integrates with the whole Spark ecosystem

      Three APIs: Scala, Java and Python.

- 13. Example: Clustering via K-means

          import org.apache.spark.mllib.clustering.KMeans
          import org.apache.spark.mllib.linalg.Vectors

          // Load and parse data
          val data = sc.textFile("hdfs://bbgrl/dataset.txt")
          val parsedData = data.map { x =>
            Vectors.dense(x.split(" ").map(_.toDouble))
          }.cache()

          // Cluster data into 5 clusters using K-means (k = 5, maxIterations = 20)
          val clusters = KMeans.train(parsedData, 5, 20)

          // Evaluate model error
          val cost = clusters.computeCost(parsedData)
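For readers who want to see what the number of clusters and the iteration count control, here is a minimal single-machine sketch of Lloyd's algorithm in plain Scala. It is an assumption-laden illustration rather than MLlib's implementation: `KMeansSketch`, `Point` and the explicit `initial` centers are hypothetical, whereas MLlib chooses its own initial centers and runs distributed.

```scala
// Minimal single-machine Lloyd's algorithm.
// Hypothetical sketch, not MLlib's distributed implementation.
object KMeansSketch {
  type Point = Vector[Double]

  def squaredDistance(a: Point, b: Point): Double =
    a.zip(b).map { case (x, y) => (x - y) * (x - y) }.sum

  // Index of the center nearest to p.
  def closest(centers: Seq[Point], p: Point): Int =
    centers.indices.minBy(i => squaredDistance(centers(i), p))

  // Coordinate-wise mean of a non-empty group of points.
  def mean(points: Seq[Point]): Point =
    points.transpose.map(_.sum / points.size).toVector

  // Runs a fixed number of assignment/update rounds from the given centers.
  // A center that attracts no points is left where it is.
  def train(data: Seq[Point], initial: Seq[Point], iterations: Int): Seq[Point] =
    (1 to iterations).foldLeft(initial) { (centers, _) =>
      val groups = data.groupBy(p => closest(centers, p))
      centers.indices.map(i => groups.get(i).map(mean).getOrElse(centers(i)))
    }

  // Sum of squared distances from each point to its nearest center.
  def cost(data: Seq[Point], centers: Seq[Point]): Double =
    data.map(p => squaredDistance(centers(closest(centers, p)), p)).sum

  def main(args: Array[String]): Unit = {
    val data = Seq(Vector(0.0, 0.0), Vector(0.0, 2.0), Vector(10.0, 0.0), Vector(10.0, 2.0))
    val centers = train(data, Seq(Vector(0.0, 0.0), Vector(10.0, 0.0)), 5)
    println(centers.mkString(", "))
    println(cost(data, centers))
  }
}
```

The `cost` function here plays the same role as `computeCost` on the slide: a lower value means points sit closer to their assigned centers.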

- 15. Coming to Spark 1.2
      - Ensembles of decision trees: Random Forests
      - Boosting
      - Topic modeling
      - Streaming K-means
      - A pipeline interface for machine learning workflows

      A lot of contributions from the community.

- 16. Machine Learning Pipeline: typical machine learning workflows are complex! Coming in the next iterations of MLlib.

- 17. H2O
      - H2O is a fast (really fast) statistics, Machine Learning and maths engine on the JVM.
      - Developed by 0xdata (a commercial entity), which focuses on bringing robust and highly performant machine learning algorithms to popular Big Data workloads.
      - Has APIs in R, Java, Scala and Python, and integrates with third-party tools like Tableau and Excel.

- 19. Example in R

          library(h2o)
          localH2O = h2o.init(ip = 'localhost', port = 54321)
          irisPath = system.file("extdata", "iris.csv", package = "h2o")
          iris.hex = h2o.importFile(localH2O, path = irisPath, key = "iris.hex")
          iris.data.frame <- as.data.frame(iris.hex)
          > colnames(iris.hex)
          [1] "C1" "C2" "C3" "C4" "C5"

- 20. Simple Logistic Regression to predict prostate cancer outcomes:

          > prostate.hex = h2o.importFile(localH2O, path = "https://raw.github.com/0xdata/h2o/../prostate.csv", key = "prostate.hex")
          > prostate.glm = h2o.glm(y = "CAPSULE", x = c("AGE", "RACE", "PSA", "DCAPS"), data = prostate.hex, family = "binomial", nfolds = 10, alpha = 0.5)
          > prostate.fit = h2o.predict(object = prostate.glm, newdata = prostate.hex)

- 21. Predictions:

          > (prostate.fit)
          IP Address: 127.0.0.1
          Port      : 54321
          Parsed Data Key: GLM2Predict_8b6890653fa743be9eb3ab1668c5a6e9

            predict        X0        X1
          1       0 0.7452267 0.2547732
          2       1 0.3969807 0.6030193
          3       1 0.4120950 0.5879050
          4       1 0.3726134 0.6273866
          5       1 0.6465137 0.3534863
          6       1 0.4331880 0.5668120

- 22. Sparkling Water: transparent use of H2O data and algorithms with the Spark API. Provides a custom RDD: H2ORDD.

- 25. Query with Spark SQL

          import org.apache.spark.sql.SQLContext

          val sqlContext = new SQLContext(sc)
          import sqlContext._
          airlinesTable.registerTempTable("airlinesTable")
          // H2O methods
          val query = "SELECT * FROM airlinesTable WHERE Dest LIKE 'SFO' OR Dest LIKE 'SJC' OR Dest LIKE 'OAK'"
          val result = sql(query)
          result.count

- 26. Same but with Spark API

          // H2OContext provides useful implicits for conversions
          val h2oContext = new H2OContext(sc)
          import h2oContext._
          // Create an RDD wrapper around the DataFrame
          val airlinesTable: RDD[Airlines] = toRDD[Airlines](airlinesData)
          airlinesTable.count
          // And use the Spark RDD API directly
          val flightsOnlyToSF = airlinesTable.filter(f =>
            f.Dest == Some("SFO") || f.Dest == Some("SJC") || f.Dest == Some("OAK")
          )
          flightsOnlyToSF.count
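The comparison against `Some("SFO")` suggests that the destination column is modelled as an `Option[String]`, so missing values never match. A plain-Scala sketch of the same filter, using ordinary collections instead of an RDD, might look like this. The `Flight` case class is a hypothetical, trimmed-down stand-in for the `Airlines` type on the slide, and `OptionFilterSketch` and `bayAreaAirports` are illustrative names.

```scala
// Filtering on an optional field, as in the slide's Dest == Some("SFO") test.
// Flight is a hypothetical stand-in for the Airlines record type.
object OptionFilterSketch {
  final case class Flight(origin: Option[String], dest: Option[String])

  val bayAreaAirports: Set[String] = Set("SFO", "SJC", "OAK")

  // exists is false for None, so rows with a missing destination are dropped.
  def toBayArea(flights: Seq[Flight]): Seq[Flight] =
    flights.filter(_.dest.exists(bayAreaAirports))

  def main(args: Array[String]): Unit = {
    val flights = Seq(
      Flight(Some("JFK"), Some("SFO")),
      Flight(Some("JFK"), None),
      Flight(Some("LAX"), Some("ORD")),
      Flight(None, Some("OAK"))
    )
    toBayArea(flights).foreach(println)
  }
}
```

Testing membership in a set keeps the predicate short when the list of airports grows, and it behaves the same as the chained `||` comparisons for these three codes.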

- 27. Build a model

          import hex.deeplearning._
          import hex.deeplearning.DeepLearningModel.DeepLearningParameters

          val dlParams = new DeepLearningParameters()
          dlParams._training_frame = result('Year, 'Month, 'DayofMonth, 'DayOfWeek, 'CRSDepTime, 'CRSArrTime,
            'UniqueCarrier, 'FlightNum, 'TailNum, 'CRSElapsedTime, 'Origin, 'Dest, 'Distance, 'IsDepDelayed)
          dlParams.response_column = 'IsDepDelayed.name
          // Create a new model builder
          val dl = new DeepLearning(dlParams)
          val dlModel = dl.train.get

- 28. Predict

          // Use model to score data
          val prediction = dlModel.score(result)('predict)
          // Collect predicted values via the RDD API
          val predictionValues = toRDD[DoubleHolder](prediction)
            .collect
            .map(_.result.getOrElse("NaN"))

Editor's Notes

- #14: Where is the cat?
