Schema-on-Read vs Schema-on-Write

Download as PPTX, PDF24 likes27,845 views

This is the first time I introduced the concept of Schema-on-Read vs Schema-on-Write to the public. It was at Berkeley EECS RAD Lab retreat Open Mic Session on May 28th, 2009 at Santa Cruz, California.

1 of 4

Downloaded 296 times

Ad

Recommended

Espresso: LinkedIn's Distributed Data Serving Platform (Paper)

Espresso: LinkedIn's Distributed Data Serving Platform (Paper)Amy W. Tang This paper, written by the LinkedIn Espresso Team, appeared at the ACM SIGMOD/PODS Conference (June 2013). To see the talk given by Swaroop Jagadish (Staff Software Engineer @ LinkedIn), go here:

http://www.slideshare.net/amywtang/li-espresso-sigmodtalk

Kafka replication apachecon_2013

Kafka replication apachecon_2013Jun Rao The document discusses intra-cluster replication in Apache Kafka, including its architecture where partitions are replicated across brokers for high availability. Kafka uses a leader and in-sync replicas approach to strongly consistent replication while tolerating failures. Performance considerations in Kafka replication include latency and durability tradeoffs for producers and optimizing throughput for consumers.

Apache Flink and what it is used for

Apache Flink and what it is used forAljoscha Krettek Aljoscha Krettek is the PMC chair of Apache Flink and Apache Beam, and co-founder of data Artisans. Apache Flink is an open-source platform for distributed stream and batch data processing. It allows for stateful computations over data streams in real-time and historically. Flink supports batch and stream processing using APIs like DataSet and DataStream. Data Artisans originated Flink and provides an application platform powered by Flink and Kubernetes for building stateful stream processing applications.

Elasticsearch Tutorial | Getting Started with Elasticsearch | ELK Stack Train...

Elasticsearch Tutorial | Getting Started with Elasticsearch | ELK Stack Train...Edureka! ( ELK Stack Training - https://www.edureka.co/elk-stack-trai... )

This Edureka Elasticsearch Tutorial will help you in understanding the fundamentals of Elasticsearch along with its practical usage and help you in building a strong foundation in ELK Stack. This video helps you to learn following topics:

1. What Is Elasticsearch?

2. Why Elasticsearch?

3. Elasticsearch Advantages

4. Elasticsearch Installation

5. API Conventions

6. Elasticsearch Query DSL

7. Mapping

8. Analysis

9 Modules

Data Lakehouse, Data Mesh, and Data Fabric (r1)

Data Lakehouse, Data Mesh, and Data Fabric (r1)James Serra So many buzzwords of late: Data Lakehouse, Data Mesh, and Data Fabric. What do all these terms mean and how do they compare to a data warehouse? In this session I’ll cover all of them in detail and compare the pros and cons of each. I’ll include use cases so you can see what approach will work best for your big data needs.

Apache Iceberg - A Table Format for Hige Analytic Datasets

Apache Iceberg - A Table Format for Hige Analytic DatasetsAlluxio, Inc. Data Orchestration Summit

www.alluxio.io/data-orchestration-summit-2019

November 7, 2019

Apache Iceberg - A Table Format for Hige Analytic Datasets

Speaker:

Ryan Blue, Netflix

For more Alluxio events: https://www.alluxio.io/events/

Facebook Messages & HBase

Facebook Messages & HBase强 王 The document discusses Facebook's use of HBase to store messaging data. It provides an overview of HBase, including its data model, performance characteristics, and how it was a good fit for Facebook's needs due to its ability to handle large volumes of data, high write throughput, and efficient random access. It also describes some enhancements Facebook made to HBase to improve availability, stability, and performance. Finally, it briefly mentions Facebook's migration of messaging data from MySQL to their HBase implementation.

The Top Five Mistakes Made When Writing Streaming Applications with Mark Grov...

The Top Five Mistakes Made When Writing Streaming Applications with Mark Grov...Databricks So you know you want to write a streaming app, but any non-trivial streaming app developer would have to think about these questions:

– How do I manage offsets?

– How do I manage state?

– How do I make my Spark Streaming job resilient to failures? Can I avoid some failures?

– How do I gracefully shutdown my streaming job?

– How do I monitor and manage my streaming job (i.e. re-try logic)?

– How can I better manage the DAG in my streaming job?

– When do I use checkpointing, and for what? When should I not use checkpointing?

– Do I need a WAL when using a streaming data source? Why? When don’t I need one?

This session will share practices that no one talks about when you start writing your streaming app, but you’ll inevitably need to learn along the way.

Migrating your clusters and workloads from Hadoop 2 to Hadoop 3

Migrating your clusters and workloads from Hadoop 2 to Hadoop 3DataWorks Summit The Hadoop community announced Hadoop 3.0 GA in December, 2017 and 3.1 around April, 2018 loaded with a lot of features and improvements. One of the biggest challenges for any new major release of a software platform is its compatibility. Apache Hadoop community has focused on ensuring wire and binary compatibility for Hadoop 2 clients and workloads.

There are many challenges to be addressed by admins while upgrading to a major release of Hadoop. Users running workloads on Hadoop 2 should be able to seamlessly run or migrate their workloads onto Hadoop 3. This session will be deep diving into upgrade aspects in detail and provide a detailed preview of migration strategies with information on what works and what might not work. This talk would focus on the motivation for upgrading to Hadoop 3 and provide a cluster upgrade guide for admins and workload migration guide for users of Hadoop.

Speaker

Suma Shivaprasad, Hortonworks, Staff Engineer

Rohith Sharma, Hortonworks, Senior Software Engineer

Cloud dw benchmark using tpd-ds( Snowflake vs Redshift vs EMR Hive )

Cloud dw benchmark using tpd-ds( Snowflake vs Redshift vs EMR Hive )SANG WON PARK 몇년 전부터 Data Architecture의 변화가 빠르게 진행되고 있고,

그 중 Cloud DW는 기존 Data Lake(Hadoop 기반)의 한계(성능, 비용, 운영 등)에 대한 대안으로 주목받으며,

많은 기업들이 이미 도입했거나, 도입을 검토하고 있다.

본 자료는 이러한 Cloud DW에 대해서 개념적으로 이해하고,

시장에 존재하는 다양한 Cloud DW 중에서 기업의 환경에 맞는 제품이 어떤 것인지 성능/비용 관점으로 비교했다.

- 왜기업들은 CloudDW에주목하는가?

- 시장에는어떤 제품들이 있는가?

- 우리Biz환경에서는 어떤 제품을 도입해야 하는가?

- CloudDW솔루션의 성능은?

- 기존DataLake(EMR)대비 성능은?

- 유사CloudDW(snowflake vs redshift) 대비성능은?

앞으로도 Data를 둘러싼 시장은 Cloud DW를 기반으로 ELT, Mata Mesh, Reverse ETL등 새로운 생테계가 급속하게 발전할 것이고,

이를 위한 데이터 엔지니어/데이터 아키텍트 관점의 기술적 검토와 고민이 필요할 것 같다.

https://blog.naver.com/freepsw/222654809552

Presentation of Apache Cassandra

Presentation of Apache Cassandra Nikiforos Botis This is a presentation of the popular NoSQL database Apache Cassandra which was created by our team in the context of the module "Business Intelligence and Big Data Analysis".

Kafka presentation

Kafka presentationMohammed Fazuluddin Kafka is an open source messaging system that can handle massive streams of data in real-time. It is fast, scalable, durable, and fault-tolerant. Kafka is commonly used for stream processing, website activity tracking, metrics collection, and log aggregation. It supports high throughput, reliable delivery, and horizontal scalability. Some examples of real-time use cases for Kafka include website monitoring, network monitoring, fraud detection, and IoT applications.

Tuning Apache Kafka Connectors for Flink.pptx

Tuning Apache Kafka Connectors for Flink.pptxFlink Forward Flink Forward San Francisco 2022.

In normal situations, the default Kafka consumer and producer configuration options work well. But we all know life is not all roses and rainbows and in this session we’ll explore a few knobs that can save the day in atypical scenarios. First, we'll take a detailed look at the parameters available when reading from Kafka. We’ll inspect the params helping us to spot quickly an application lock or crash, the ones that can significantly improve the performance and the ones to touch with gloves since they could cause more harm than benefit. Moreover we’ll explore the partitioning options and discuss when diverging from the default strategy is needed. Next, we’ll discuss the Kafka Sink. After browsing the available options we'll then dive deep into understanding how to approach use cases like sinking enormous records, managing spikes, and handling small but frequent updates.. If you want to understand how to make your application survive when the sky is dark, this session is for you!

by

Olena Babenko

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the CloudNoritaka Sekiyama This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

Apache Cassandra at the Geek2Geek Berlin

Apache Cassandra at the Geek2Geek BerlinChristian Johannsen This document provides an agenda and introduction for a presentation on Apache Cassandra and DataStax Enterprise. The presentation covers an introduction to Cassandra and NoSQL, the CAP theorem, Apache Cassandra features and architecture including replication, consistency levels and failure handling. It also discusses the Cassandra Query Language, data modeling for time series data, and new features in DataStax Enterprise like Spark integration and secondary indexes on collections. The presentation concludes with recommendations for getting started with Cassandra in production environments.

Choosing an HDFS data storage format- Avro vs. Parquet and more - StampedeCon...

Choosing an HDFS data storage format- Avro vs. Parquet and more - StampedeCon...StampedeCon At the StampedeCon 2015 Big Data Conference: Picking your distribution and platform is just the first decision of many you need to make in order to create a successful data ecosystem. In addition to things like replication factor and node configuration, the choice of file format can have a profound impact on cluster performance. Each of the data formats have different strengths and weaknesses, depending on how you want to store and retrieve your data. For instance, we have observed performance differences on the order of 25x between Parquet and Plain Text files for certain workloads. However, it isn’t the case that one is always better than the others.

Top 5 Mistakes When Writing Spark Applications

Top 5 Mistakes When Writing Spark ApplicationsSpark Summit This document discusses 5 common mistakes when writing Spark applications:

1) Improperly sizing executors by not considering cores, memory, and overhead. The optimal configuration depends on the workload and cluster resources.

2) Applications failing due to shuffle blocks exceeding 2GB size limit. Increasing the number of partitions helps address this.

3) Jobs running slowly due to data skew in joins and shuffles. Techniques like salting keys can help address skew.

4) Not properly managing the DAG to avoid shuffles and bring work to the data. Using ReduceByKey over GroupByKey and TreeReduce over Reduce when possible.

5) Classpath conflicts arising from mismatched library versions, which can be addressed using sh

Apache Spark Architecture

Apache Spark ArchitectureAlexey Grishchenko This is the presentation I made on JavaDay Kiev 2015 regarding the architecture of Apache Spark. It covers the memory model, the shuffle implementations, data frames and some other high-level staff and can be used as an introduction to Apache Spark

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets with Jules ...

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets with Jules ...Databricks Of all the developers’ delight, none is more attractive than a set of APIs that make developers productive, that are easy to use, and that are intuitive and expressive. Apache Spark offers these APIs across components such as Spark SQL, Streaming, Machine Learning, and Graph Processing to operate on large data sets in languages such as Scala, Java, Python, and R for doing distributed big data processing at scale. In this talk, I will explore the evolution of three sets of APIs-RDDs, DataFrames, and Datasets-available in Apache Spark 2.x. In particular, I will emphasize three takeaways: 1) why and when you should use each set as best practices 2) outline its performance and optimization benefits; and 3) underscore scenarios when to use DataFrames and Datasets instead of RDDs for your big data distributed processing. Through simple notebook demonstrations with API code examples, you’ll learn how to process big data using RDDs, DataFrames, and Datasets and interoperate among them. (this will be vocalization of the blog, along with the latest developments in Apache Spark 2.x Dataframe/Datasets and Spark SQL APIs: https://databricks.com/blog/2016/07/14/a-tale-of-three-apache-spark-apis-rdds-dataframes-and-datasets.html)

Introduction to Apache Kafka

Introduction to Apache KafkaJeff Holoman The document provides an introduction and overview of Apache Kafka presented by Jeff Holoman. It begins with an agenda and background on the presenter. It then covers basic Kafka concepts like topics, partitions, producers, consumers and consumer groups. It discusses efficiency and delivery guarantees. Finally, it presents some use cases for Kafka and positioning around when it may or may not be a good fit compared to other technologies.

kafka

kafkaAmikam Snir Kafka is an open-source distributed commit log service that provides high-throughput messaging functionality. It is designed to handle large volumes of data and different use cases like online and offline processing more efficiently than alternatives like RabbitMQ. Kafka works by partitioning topics into segments spread across clusters of machines, and replicates across these partitions for fault tolerance. It can be used as a central data hub or pipeline for collecting, transforming, and streaming data between systems and applications.

Real-Life Use Cases & Architectures for Event Streaming with Apache Kafka

Real-Life Use Cases & Architectures for Event Streaming with Apache KafkaKai Wähner Streaming all over the World: Real-Life Use Cases & Architectures for Event Streaming with Apache Kafka.

Learn about various case studies for event streaming with Apache Kafka across industries. The talk explores architectures for real-world deployments from Audi, BMW, Disney, Generali, Paypal, Tesla, Unity, Walmart, William Hill, and more. Use cases include fraud detection, mainframe offloading, predictive maintenance, cybersecurity, edge computing, track&trace, live betting, and much more.

Building robust CDC pipeline with Apache Hudi and Debezium

Building robust CDC pipeline with Apache Hudi and DebeziumTathastu.ai We have covered the need for CDC and the benefits of building a CDC pipeline. We will compare various CDC streaming and reconciliation frameworks. We will also cover the architecture and the challenges we faced while running this system in the production. Finally, we will conclude the talk by covering Apache Hudi, Schema Registry and Debezium in detail and our contributions to the open-source community.

Hive + Tez: A Performance Deep Dive

Hive + Tez: A Performance Deep DiveDataWorks Summit This document provides a summary of improvements made to Hive's performance through the use of Apache Tez and other optimizations. Some key points include:

- Hive was improved to use Apache Tez as its execution engine instead of MapReduce, reducing latency for interactive queries and improving throughput for batch queries.

- Statistics collection was optimized to gather column-level statistics from ORC file footers, speeding up statistics gathering.

- The cost-based optimizer Optiq was added to Hive, allowing it to choose better execution plans.

- Vectorized query processing, broadcast joins, dynamic partitioning, and other optimizations improved individual query performance by over 100x in some cases.

MongoDB Fundamentals

MongoDB FundamentalsMongoDB The document discusses MongoDB concepts including:

- MongoDB uses a document-oriented data model with dynamic schemas and supports embedding and linking of related data.

- Replication allows for high availability and data redundancy across multiple nodes.

- Sharding provides horizontal scalability by distributing data across nodes in a cluster.

- MongoDB supports both eventual and immediate consistency models.

Apache Kafka Fundamentals for Architects, Admins and Developers

Apache Kafka Fundamentals for Architects, Admins and Developersconfluent This document summarizes a presentation about Apache Kafka. It introduces Apache Kafka as a modern, distributed platform for data streams made up of distributed, immutable, append-only commit logs. It describes Kafka's scalability similar to a filesystem and guarantees similar to a database, with the ability to rewind and replay data. The document discusses Kafka topics and partitions, partition leadership and replication, and provides resources for further information.

DASK and Apache Spark

DASK and Apache SparkDatabricks Gurpreet Singh from Microsoft gave a talk on scaling Python for data analysis and machine learning using DASK and Apache Spark. He discussed the challenges of scaling the Python data stack and compared options like DASK, Spark, and Spark MLlib. He provided examples of using DASK and PySpark DataFrames for parallel processing and showed how DASK-ML can be used to parallelize Scikit-Learn models. Distributed deep learning with tools like Project Hydrogen was also covered.

MySQL/MariaDB Proxy Software Test

MySQL/MariaDB Proxy Software TestI Goo Lee MySQL PowerGroup Tech Seminar (2017.1)

- 3.MySQL/MariaDB Proxy Software Test (by 이윤정)

- URL : cafe.naver.com/mysqlpg

Chapter 2 dbChapter 2 dbChapter 2 dbChapter 2 db.ppt

Chapter 2 dbChapter 2 dbChapter 2 dbChapter 2 db.pptmohammedabomashowrms This document discusses database system concepts and architecture. It describes DBMS architecture models as tightly integrated or client-server systems. It also discusses data models, schemas, database states, and the three schema architecture involving the internal, conceptual, and external levels. The document introduces the concepts of data independence and database languages including data definition languages and data manipulation languages.

Big Data_Architecture.pptx

Big Data_Architecture.pptxbetalab Les mégadonnées représentent un vrai enjeu à la fois technique, business et de société

: l'exploitation des données massives ouvre des possibilités de transformation radicales au

niveau des entreprises et des usages. Tout du moins : à condition que l'on en soit

techniquement capable... Car l'acquisition, le stockage et l'exploitation de quantités

massives de données représentent des vrais défis techniques.

Une architecture big data permet la création et de l'administration de tous les

systèmes techniques qui vont permettre la bonne exploitation des données.

Il existe énormément d'outils différents pour manipuler des quantités massives de

données : pour le stockage, l'analyse ou la diffusion, par exemple. Mais comment assembler

ces différents outils pour réaliser une architecture capable de passer à l'échelle, d'être

tolérante aux pannes et aisément extensible, tout cela sans exploser les coûts ?

Le succès du fonctionnement de la Big data dépend de son architecture, son

infrastructure correcte et de son l’utilité que l’on fait ‘’ Data into Information into Value ‘’.

L’architecture de la Big data est composé de 4 grandes parties : Intégration, Data Processing

& Stockage, Sécurité et Opération.

Ad

More Related Content

What's hot (20)

Migrating your clusters and workloads from Hadoop 2 to Hadoop 3

Migrating your clusters and workloads from Hadoop 2 to Hadoop 3DataWorks Summit The Hadoop community announced Hadoop 3.0 GA in December, 2017 and 3.1 around April, 2018 loaded with a lot of features and improvements. One of the biggest challenges for any new major release of a software platform is its compatibility. Apache Hadoop community has focused on ensuring wire and binary compatibility for Hadoop 2 clients and workloads.

There are many challenges to be addressed by admins while upgrading to a major release of Hadoop. Users running workloads on Hadoop 2 should be able to seamlessly run or migrate their workloads onto Hadoop 3. This session will be deep diving into upgrade aspects in detail and provide a detailed preview of migration strategies with information on what works and what might not work. This talk would focus on the motivation for upgrading to Hadoop 3 and provide a cluster upgrade guide for admins and workload migration guide for users of Hadoop.

Speaker

Suma Shivaprasad, Hortonworks, Staff Engineer

Rohith Sharma, Hortonworks, Senior Software Engineer

Cloud dw benchmark using tpd-ds( Snowflake vs Redshift vs EMR Hive )

Cloud dw benchmark using tpd-ds( Snowflake vs Redshift vs EMR Hive )SANG WON PARK 몇년 전부터 Data Architecture의 변화가 빠르게 진행되고 있고,

그 중 Cloud DW는 기존 Data Lake(Hadoop 기반)의 한계(성능, 비용, 운영 등)에 대한 대안으로 주목받으며,

많은 기업들이 이미 도입했거나, 도입을 검토하고 있다.

본 자료는 이러한 Cloud DW에 대해서 개념적으로 이해하고,

시장에 존재하는 다양한 Cloud DW 중에서 기업의 환경에 맞는 제품이 어떤 것인지 성능/비용 관점으로 비교했다.

- 왜기업들은 CloudDW에주목하는가?

- 시장에는어떤 제품들이 있는가?

- 우리Biz환경에서는 어떤 제품을 도입해야 하는가?

- CloudDW솔루션의 성능은?

- 기존DataLake(EMR)대비 성능은?

- 유사CloudDW(snowflake vs redshift) 대비성능은?

앞으로도 Data를 둘러싼 시장은 Cloud DW를 기반으로 ELT, Mata Mesh, Reverse ETL등 새로운 생테계가 급속하게 발전할 것이고,

이를 위한 데이터 엔지니어/데이터 아키텍트 관점의 기술적 검토와 고민이 필요할 것 같다.

https://blog.naver.com/freepsw/222654809552

Presentation of Apache Cassandra

Presentation of Apache Cassandra Nikiforos Botis This is a presentation of the popular NoSQL database Apache Cassandra which was created by our team in the context of the module "Business Intelligence and Big Data Analysis".

Kafka presentation

Kafka presentationMohammed Fazuluddin Kafka is an open source messaging system that can handle massive streams of data in real-time. It is fast, scalable, durable, and fault-tolerant. Kafka is commonly used for stream processing, website activity tracking, metrics collection, and log aggregation. It supports high throughput, reliable delivery, and horizontal scalability. Some examples of real-time use cases for Kafka include website monitoring, network monitoring, fraud detection, and IoT applications.

Tuning Apache Kafka Connectors for Flink.pptx

Tuning Apache Kafka Connectors for Flink.pptxFlink Forward Flink Forward San Francisco 2022.

In normal situations, the default Kafka consumer and producer configuration options work well. But we all know life is not all roses and rainbows and in this session we’ll explore a few knobs that can save the day in atypical scenarios. First, we'll take a detailed look at the parameters available when reading from Kafka. We’ll inspect the params helping us to spot quickly an application lock or crash, the ones that can significantly improve the performance and the ones to touch with gloves since they could cause more harm than benefit. Moreover we’ll explore the partitioning options and discuss when diverging from the default strategy is needed. Next, we’ll discuss the Kafka Sink. After browsing the available options we'll then dive deep into understanding how to approach use cases like sinking enormous records, managing spikes, and handling small but frequent updates.. If you want to understand how to make your application survive when the sky is dark, this session is for you!

by

Olena Babenko

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the Cloud

Amazon S3 Best Practice and Tuning for Hadoop/Spark in the CloudNoritaka Sekiyama This document provides an overview and summary of Amazon S3 best practices and tuning for Hadoop/Spark in the cloud. It discusses the relationship between Hadoop/Spark and S3, the differences between HDFS and S3 and their use cases, details on how S3 behaves from the perspective of Hadoop/Spark, well-known pitfalls and tunings related to S3 consistency and multipart uploads, and recent community activities related to S3. The presentation aims to help users optimize their use of S3 storage with Hadoop/Spark frameworks.

Apache Cassandra at the Geek2Geek Berlin

Apache Cassandra at the Geek2Geek BerlinChristian Johannsen This document provides an agenda and introduction for a presentation on Apache Cassandra and DataStax Enterprise. The presentation covers an introduction to Cassandra and NoSQL, the CAP theorem, Apache Cassandra features and architecture including replication, consistency levels and failure handling. It also discusses the Cassandra Query Language, data modeling for time series data, and new features in DataStax Enterprise like Spark integration and secondary indexes on collections. The presentation concludes with recommendations for getting started with Cassandra in production environments.

Choosing an HDFS data storage format- Avro vs. Parquet and more - StampedeCon...

Choosing an HDFS data storage format- Avro vs. Parquet and more - StampedeCon...StampedeCon At the StampedeCon 2015 Big Data Conference: Picking your distribution and platform is just the first decision of many you need to make in order to create a successful data ecosystem. In addition to things like replication factor and node configuration, the choice of file format can have a profound impact on cluster performance. Each of the data formats have different strengths and weaknesses, depending on how you want to store and retrieve your data. For instance, we have observed performance differences on the order of 25x between Parquet and Plain Text files for certain workloads. However, it isn’t the case that one is always better than the others.

Top 5 Mistakes When Writing Spark Applications

Top 5 Mistakes When Writing Spark ApplicationsSpark Summit This document discusses 5 common mistakes when writing Spark applications:

1) Improperly sizing executors by not considering cores, memory, and overhead. The optimal configuration depends on the workload and cluster resources.

2) Applications failing due to shuffle blocks exceeding 2GB size limit. Increasing the number of partitions helps address this.

3) Jobs running slowly due to data skew in joins and shuffles. Techniques like salting keys can help address skew.

4) Not properly managing the DAG to avoid shuffles and bring work to the data. Using ReduceByKey over GroupByKey and TreeReduce over Reduce when possible.

5) Classpath conflicts arising from mismatched library versions, which can be addressed using sh

Apache Spark Architecture

Apache Spark ArchitectureAlexey Grishchenko This is the presentation I made on JavaDay Kiev 2015 regarding the architecture of Apache Spark. It covers the memory model, the shuffle implementations, data frames and some other high-level staff and can be used as an introduction to Apache Spark

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets with Jules ...

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets with Jules ...Databricks Of all the developers’ delight, none is more attractive than a set of APIs that make developers productive, that are easy to use, and that are intuitive and expressive. Apache Spark offers these APIs across components such as Spark SQL, Streaming, Machine Learning, and Graph Processing to operate on large data sets in languages such as Scala, Java, Python, and R for doing distributed big data processing at scale. In this talk, I will explore the evolution of three sets of APIs-RDDs, DataFrames, and Datasets-available in Apache Spark 2.x. In particular, I will emphasize three takeaways: 1) why and when you should use each set as best practices 2) outline its performance and optimization benefits; and 3) underscore scenarios when to use DataFrames and Datasets instead of RDDs for your big data distributed processing. Through simple notebook demonstrations with API code examples, you’ll learn how to process big data using RDDs, DataFrames, and Datasets and interoperate among them. (this will be vocalization of the blog, along with the latest developments in Apache Spark 2.x Dataframe/Datasets and Spark SQL APIs: https://databricks.com/blog/2016/07/14/a-tale-of-three-apache-spark-apis-rdds-dataframes-and-datasets.html)

Introduction to Apache Kafka

Introduction to Apache KafkaJeff Holoman The document provides an introduction and overview of Apache Kafka presented by Jeff Holoman. It begins with an agenda and background on the presenter. It then covers basic Kafka concepts like topics, partitions, producers, consumers and consumer groups. It discusses efficiency and delivery guarantees. Finally, it presents some use cases for Kafka and positioning around when it may or may not be a good fit compared to other technologies.

kafka

kafkaAmikam Snir Kafka is an open-source distributed commit log service that provides high-throughput messaging functionality. It is designed to handle large volumes of data and different use cases like online and offline processing more efficiently than alternatives like RabbitMQ. Kafka works by partitioning topics into segments spread across clusters of machines, and replicates across these partitions for fault tolerance. It can be used as a central data hub or pipeline for collecting, transforming, and streaming data between systems and applications.

Real-Life Use Cases & Architectures for Event Streaming with Apache Kafka

Real-Life Use Cases & Architectures for Event Streaming with Apache KafkaKai Wähner Streaming all over the World: Real-Life Use Cases & Architectures for Event Streaming with Apache Kafka.

Learn about various case studies for event streaming with Apache Kafka across industries. The talk explores architectures for real-world deployments from Audi, BMW, Disney, Generali, Paypal, Tesla, Unity, Walmart, William Hill, and more. Use cases include fraud detection, mainframe offloading, predictive maintenance, cybersecurity, edge computing, track&trace, live betting, and much more.

Building robust CDC pipeline with Apache Hudi and Debezium

Building robust CDC pipeline with Apache Hudi and DebeziumTathastu.ai We have covered the need for CDC and the benefits of building a CDC pipeline. We will compare various CDC streaming and reconciliation frameworks. We will also cover the architecture and the challenges we faced while running this system in the production. Finally, we will conclude the talk by covering Apache Hudi, Schema Registry and Debezium in detail and our contributions to the open-source community.

Hive + Tez: A Performance Deep Dive

Hive + Tez: A Performance Deep DiveDataWorks Summit This document provides a summary of improvements made to Hive's performance through the use of Apache Tez and other optimizations. Some key points include:

- Hive was improved to use Apache Tez as its execution engine instead of MapReduce, reducing latency for interactive queries and improving throughput for batch queries.

- Statistics collection was optimized to gather column-level statistics from ORC file footers, speeding up statistics gathering.

- The cost-based optimizer Optiq was added to Hive, allowing it to choose better execution plans.

- Vectorized query processing, broadcast joins, dynamic partitioning, and other optimizations improved individual query performance by over 100x in some cases.

MongoDB Fundamentals

MongoDB FundamentalsMongoDB The document discusses MongoDB concepts including:

- MongoDB uses a document-oriented data model with dynamic schemas and supports embedding and linking of related data.

- Replication allows for high availability and data redundancy across multiple nodes.

- Sharding provides horizontal scalability by distributing data across nodes in a cluster.

- MongoDB supports both eventual and immediate consistency models.

Apache Kafka Fundamentals for Architects, Admins and Developers

Apache Kafka Fundamentals for Architects, Admins and Developersconfluent This document summarizes a presentation about Apache Kafka. It introduces Apache Kafka as a modern, distributed platform for data streams made up of distributed, immutable, append-only commit logs. It describes Kafka's scalability similar to a filesystem and guarantees similar to a database, with the ability to rewind and replay data. The document discusses Kafka topics and partitions, partition leadership and replication, and provides resources for further information.

DASK and Apache Spark

DASK and Apache SparkDatabricks Gurpreet Singh from Microsoft gave a talk on scaling Python for data analysis and machine learning using DASK and Apache Spark. He discussed the challenges of scaling the Python data stack and compared options like DASK, Spark, and Spark MLlib. He provided examples of using DASK and PySpark DataFrames for parallel processing and showed how DASK-ML can be used to parallelize Scikit-Learn models. Distributed deep learning with tools like Project Hydrogen was also covered.

MySQL/MariaDB Proxy Software Test

MySQL/MariaDB Proxy Software TestI Goo Lee MySQL PowerGroup Tech Seminar (2017.1)

- 3.MySQL/MariaDB Proxy Software Test (by 이윤정)

- URL : cafe.naver.com/mysqlpg

Similar to Schema-on-Read vs Schema-on-Write (20)

Chapter 2 dbChapter 2 dbChapter 2 dbChapter 2 db.ppt

Chapter 2 dbChapter 2 dbChapter 2 dbChapter 2 db.pptmohammedabomashowrms This document discusses database system concepts and architecture. It describes DBMS architecture models as tightly integrated or client-server systems. It also discusses data models, schemas, database states, and the three schema architecture involving the internal, conceptual, and external levels. The document introduces the concepts of data independence and database languages including data definition languages and data manipulation languages.

Big Data_Architecture.pptx

Big Data_Architecture.pptxbetalab Les mégadonnées représentent un vrai enjeu à la fois technique, business et de société

: l'exploitation des données massives ouvre des possibilités de transformation radicales au

niveau des entreprises et des usages. Tout du moins : à condition que l'on en soit

techniquement capable... Car l'acquisition, le stockage et l'exploitation de quantités

massives de données représentent des vrais défis techniques.

Une architecture big data permet la création et de l'administration de tous les

systèmes techniques qui vont permettre la bonne exploitation des données.

Il existe énormément d'outils différents pour manipuler des quantités massives de

données : pour le stockage, l'analyse ou la diffusion, par exemple. Mais comment assembler

ces différents outils pour réaliser une architecture capable de passer à l'échelle, d'être

tolérante aux pannes et aisément extensible, tout cela sans exploser les coûts ?

Le succès du fonctionnement de la Big data dépend de son architecture, son

infrastructure correcte et de son l’utilité que l’on fait ‘’ Data into Information into Value ‘’.

L’architecture de la Big data est composé de 4 grandes parties : Intégration, Data Processing

& Stockage, Sécurité et Opération.

From discovering to trusting data

From discovering to trusting datamarkgrover Presentation at SF Big Analytics meetup on Jan 12, 2021. https://www.meetup.com/SF-Big-Analytics/events/275217663/

MongoDB

MongoDBfsbrooke Compilation of information to provide an introduction to MongoDB with particular emphasis on its C# driver.

Big data architectures and the data lake

Big data architectures and the data lakeJames Serra The document provides an overview of big data architectures and the data lake concept. It discusses why organizations are adopting data lakes to handle increasing data volumes and varieties. The key aspects covered include:

- Defining top-down and bottom-up approaches to data management

- Explaining what a data lake is and how Hadoop can function as the data lake

- Describing how a modern data warehouse combines features of a traditional data warehouse and data lake

- Discussing how federated querying allows data to be accessed across multiple sources

- Highlighting benefits of implementing big data solutions in the cloud

- Comparing shared-nothing, massively parallel processing (MPP) architectures to symmetric multi-processing (

no sql presentation

no sql presentationchandanm2 This document provides an overview of NoSQL databases. It discusses why cloud data stores became popular due to the rise of social media sites and need for large data storage. NoSQL databases provide a mechanism for storage and retrieval of data that is not modeled in tabular relations. NoSQL databases are scalable, do not require fixed schemas, and lack ACID properties. The document also discusses the CAP theorem, which states that a distributed system cannot achieve consistency, availability, and partition tolerance simultaneously.

Master.pptx

Master.pptxKarthikR780430 NoSQL databases were developed to address the need for databases that can handle big data and scale horizontally to support massive amounts of data and high user loads. NoSQL databases are non-relational and support high availability through horizontal scaling and replication across commodity servers to allow for continuous availability. Popular types of NoSQL databases include key-value stores, document stores, column-oriented databases, and graph databases, each suited for different use cases depending on an application's data model and query requirements.

NoSQL BIg Data Analytics Mongo DB and Cassandra .pdf

NoSQL BIg Data Analytics Mongo DB and Cassandra .pdfSharmilaChidaravalli No SQL

Mongo DB and Cassandra

Cloudera Impala - San Diego Big Data Meetup August 13th 2014

Cloudera Impala - San Diego Big Data Meetup August 13th 2014cdmaxime Cloudera Impala presentation to San Diego Big Data Meetup (http://www.meetup.com/sdbigdata/events/189420582/)

Microsoft Data Integration Pipelines: Azure Data Factory and SSIS

Microsoft Data Integration Pipelines: Azure Data Factory and SSISMark Kromer The document discusses tools for building ETL pipelines to consume hybrid data sources and load data into analytics systems at scale. It describes how Azure Data Factory and SQL Server Integration Services can be used to automate pipelines that extract, transform, and load data from both on-premises and cloud data stores into data warehouses and data lakes for analytics. Specific patterns shown include analyzing blog comments, sentiment analysis with machine learning, and loading a modern data warehouse.

Migrating Oracle database to Cassandra

Migrating Oracle database to CassandraUmair Mansoob This document discusses migrating Oracle databases to Cassandra. Cassandra offers lower costs, supports more data types, and can scale to handle large volumes of data across multiple data centers. It also allows for more flexible data modeling and built-in compression. The document compares Cassandra and Oracle on features, provides examples of companies using Cassandra, and outlines best practices for data modeling in Cassandra. It also discusses strategies for migrating data from Oracle to Cassandra including using loaders, Sqoop, and Spark.

Dbms module i

Dbms module iSANTOSH RATH This document provides an overview of database management systems and related concepts. It discusses the three schema architecture including external, conceptual, and internal schemas. It also covers data models, data definition and manipulation languages, database administrators, keys such as primary keys and foreign keys, and integrity constraints including referential integrity, check constraints, and NOT NULL constraints. The goal of these concepts is to provide a structured and standardized way to define, manipulate, and manage database systems and data.

Data Engineering on GCP

Data Engineering on GCPBlibBlobb This document provides an overview of Google Cloud Platform (GCP) data engineering concepts and services. It discusses key data engineering roles and responsibilities, as well as GCP services for compute, storage, databases, analytics, and monitoring. Specific services covered include Compute Engine, Kubernetes Engine, App Engine, Cloud Storage, Cloud SQL, Cloud Spanner, BigTable, and BigQuery. The document also provides primers on Hadoop, Spark, data modeling best practices, and security and access controls.

Google Data Engineering.pdf

Google Data Engineering.pdfavenkatram Google Data Engineering Cheatsheet provides an overview of key concepts in data engineering including data collection, transformation, visualization, and machine learning. It discusses Google Cloud Platform services for data engineering like Compute, Storage, Big Data, and Machine Learning. The document also summarizes concepts like Hadoop, HDFS, MapReduce, Spark, data warehouses, streaming data, and the Google Cloud monitoring and access management tools.

Compressed Introduction to Hadoop, SQL-on-Hadoop and NoSQL

Compressed Introduction to Hadoop, SQL-on-Hadoop and NoSQLArseny Chernov This document provides a compressed introduction to Hadoop, SQL-on-Hadoop, and NoSQL technologies. It begins with welcoming remarks and then provides short overviews of key concepts in less than 3 sentences each. These include introductions to Hadoop origins and architecture, HDFS, YARN, MapReduce, Hive, and HBase. It also includes quick demos and encourages questions from the audience.

NoSQL and Couchbase

NoSQL and CouchbaseSangharsh agarwal 1. NoSQL, Why NoSQL.

2. CAP, ACID, BASE

3. Couchbase, Why Couchbase?

4. Couchbase Features

5. Couchbase Architecture.

Otimizações de Projetos de Big Data, Dw e AI no Microsoft Azure

Otimizações de Projetos de Big Data, Dw e AI no Microsoft AzureLuan Moreno Medeiros Maciel Apresentação para a Agência Nacional de Aviação Civil sobre Otimzações de Projetos de Big Data, Dw e AI

Agile data lake? An oxymoron?

Agile data lake? An oxymoron?samthemonad Agile data lake? An oxymoron?

This talk includes a set of opinions and does not necessarily represent the opinion of the creator.

Ad

More from Amr Awadallah (6)

How Apache Hadoop is Revolutionizing Business Intelligence and Data Analytics...

How Apache Hadoop is Revolutionizing Business Intelligence and Data Analytics...Amr Awadallah Apache Hadoop is revolutionizing business intelligence and data analytics by providing a scalable and fault-tolerant distributed system for data storage and processing. It allows businesses to explore raw data at scale, perform complex analytics, and keep data alive for long-term analysis. Hadoop provides agility through flexible schemas and the ability to store any data and run any analysis. It offers scalability from terabytes to petabytes and consolidation by enabling data sharing across silos.

Cloudera/Stanford EE203 (Entrepreneurial Engineer)

Cloudera/Stanford EE203 (Entrepreneurial Engineer)Amr Awadallah This is a talk that I gave at Stanford's EE203 (Entrepreneurial Engineer) on Tuesday Feb 9th, 2010. It covers my experience at Stanford, VivaSmart, Yahoo, Accel Partners, and Cloudera.

How Hadoop Revolutionized Data Warehousing at Yahoo and Facebook

How Hadoop Revolutionized Data Warehousing at Yahoo and FacebookAmr Awadallah Hadoop was developed to solve problems with data warehousing systems at Yahoo and Facebook that were limited in processing large amounts of raw data in real-time. Hadoop uses HDFS for scalable storage and MapReduce for distributed processing. It allows for agile access to raw data at scale for ad-hoc queries, data mining and analytics without being constrained by traditional database schemas. Hadoop has been widely adopted for large-scale data processing and analytics across many companies.

Service Primitives for Internet Scale Applications

Service Primitives for Internet Scale ApplicationsAmr Awadallah A general framework to describe internet scale applications and characterize the functional properties that can be traded away to improve the following operational metrics:

* Throughput (how many user requests/sec?)

* Interactivity (latency, how fast user requests finish?)

* Availability (% of time user perceives service as up), including fast recovery to improve availability

* TCO (Total Cost of Ownership)

Applications of Virtual Machine Monitors for Scalable, Reliable, and Interact...

Applications of Virtual Machine Monitors for Scalable, Reliable, and Interact...Amr Awadallah My PhD oral defense.

An overlay network of VMMs (the vMatrix) which enables backward-compatible improvement of the scalability, reliability, and interactivity of Internet services.

Three applications demonstrated:

1. Dynamic Content Distribution

2. Server Switching

3. Fair placement of Game Servers

Yahoo Microstrategy 2008

Yahoo Microstrategy 2008Amr Awadallah I was meaning to put this talk up for grabs for some time now, but kept forgetting. I was invited to give the keynote speech for the Microstrategy World 2008 conference. The talk was very well received, so here it is.

Ad

Recently uploaded (20)

Complete Guide to Advanced Logistics Management Software in Riyadh.pdf

Complete Guide to Advanced Logistics Management Software in Riyadh.pdfSoftware Company Explore the benefits and features of advanced logistics management software for businesses in Riyadh. This guide delves into the latest technologies, from real-time tracking and route optimization to warehouse management and inventory control, helping businesses streamline their logistics operations and reduce costs. Learn how implementing the right software solution can enhance efficiency, improve customer satisfaction, and provide a competitive edge in the growing logistics sector of Riyadh.

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

TrsLabs - Fintech Product & Business Consulting

TrsLabs - Fintech Product & Business ConsultingTrs Labs Hybrid Growth Mandate Model with TrsLabs

Strategic Investments, Inorganic Growth, Business Model Pivoting are critical activities that business don't do/change everyday. In cases like this, it may benefit your business to choose a temporary external consultant.

An unbiased plan driven by clearcut deliverables, market dynamics and without the influence of your internal office equations empower business leaders to make right choices.

Getting things done within a budget within a timeframe is key to Growing Business - No matter whether you are a start-up or a big company

Talk to us & Unlock the competitive advantage

IEDM 2024 Tutorial2_Advances in CMOS Technologies and Future Directions for C...

IEDM 2024 Tutorial2_Advances in CMOS Technologies and Future Directions for C...organizerofv IEDM 2024 Tutorial2

AI and Data Privacy in 2025: Global Trends

AI and Data Privacy in 2025: Global TrendsInData Labs In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in the today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Big Data Analytics Quick Research Guide by Arthur Morgan

Big Data Analytics Quick Research Guide by Arthur MorganArthur Morgan This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In France

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In Francechb3 The latest updates on Manifest's pre-seed stage progress.

Massive Power Outage Hits Spain, Portugal, and France: Causes, Impact, and On...

Massive Power Outage Hits Spain, Portugal, and France: Causes, Impact, and On...Aqusag Technologies In late April 2025, a significant portion of Europe, particularly Spain, Portugal, and parts of southern France, experienced widespread, rolling power outages that continue to affect millions of residents, businesses, and infrastructure systems.

Linux Support for SMARC: How Toradex Empowers Embedded Developers

Linux Support for SMARC: How Toradex Empowers Embedded DevelopersToradex Toradex brings robust Linux support to SMARC (Smart Mobility Architecture), ensuring high performance and long-term reliability for embedded applications. Here’s how:

• Optimized Torizon OS & Yocto Support – Toradex provides Torizon OS, a Debian-based easy-to-use platform, and Yocto BSPs for customized Linux images on SMARC modules.

• Seamless Integration with i.MX 8M Plus and i.MX 95 – Toradex SMARC solutions leverage NXP’s i.MX 8 M Plus and i.MX 95 SoCs, delivering power efficiency and AI-ready performance.

• Secure and Reliable – With Secure Boot, over-the-air (OTA) updates, and LTS kernel support, Toradex ensures industrial-grade security and longevity.

• Containerized Workflows for AI & IoT – Support for Docker, ROS, and real-time Linux enables scalable AI, ML, and IoT applications.

• Strong Ecosystem & Developer Support – Toradex offers comprehensive documentation, developer tools, and dedicated support, accelerating time-to-market.

With Toradex’s Linux support for SMARC, developers get a scalable, secure, and high-performance solution for industrial, medical, and AI-driven applications.

Do you have a specific project or application in mind where you're considering SMARC? We can help with Free Compatibility Check and help you with quick time-to-market

For more information: https://www.toradex.com/computer-on-modules/smarc-arm-family

Mobile App Development Company in Saudi Arabia

Mobile App Development Company in Saudi ArabiaSteve Jonas EmizenTech is a globally recognized software development company, proudly serving businesses since 2013. With over 11+ years of industry experience and a team of 200+ skilled professionals, we have successfully delivered 1200+ projects across various sectors. As a leading Mobile App Development Company In Saudi Arabia we offer end-to-end solutions for iOS, Android, and cross-platform applications. Our apps are known for their user-friendly interfaces, scalability, high performance, and strong security features. We tailor each mobile application to meet the unique needs of different industries, ensuring a seamless user experience. EmizenTech is committed to turning your vision into a powerful digital product that drives growth, innovation, and long-term success in the competitive mobile landscape of Saudi Arabia.

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...BookNet Canada Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, presentation slides, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...Alan Dix Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. However more profound are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI the micro-ethical issues, such as bias, are most obvious, but the macro-ethical challenges may be greater.

At a micro-ethical level AI has the potential to deepen social, ethnic and gender bias, issues I have warned about since the early 1990s! It is also being used increasingly on the battlefield. However, it also offers amazing opportunities in health and educations, as the recent Nobel prizes for the developers of AlphaFold illustrate. More radically, the need to encode ethics acts as a mirror to surface essential ethical problems and conflicts.

At the macro-ethical level, by the early 2000s digital technology had already begun to undermine sovereignty (e.g. gambling), market economics (through network effects and emergent monopolies), and the very meaning of money. Modern AI is the child of big data, big computation and ultimately big business, intensifying the inherent tendency of digital technology to concentrate power. AI is already unravelling the fundamentals of the social, political and economic world around us, but this is a world that needs radical reimagining to overcome the global environmental and human challenges that confront us. Our challenge is whether to let the threads fall as they may, or to use them to weave a better future.

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...

AI EngineHost Review: Revolutionary USA Datacenter-Based Hosting with NVIDIA ...SOFTTECHHUB I started my online journey with several hosting services before stumbling upon Ai EngineHost. At first, the idea of paying one fee and getting lifetime access seemed too good to pass up. The platform is built on reliable US-based servers, ensuring your projects run at high speeds and remain safe. Let me take you step by step through its benefits and features as I explain why this hosting solution is a perfect fit for digital entrepreneurs.

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value- driven proprietary service offering here.

Role of Data Annotation Services in AI-Powered Manufacturing

Role of Data Annotation Services in AI-Powered ManufacturingAndrew Leo From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...

Noah Loul Shares 5 Steps to Implement AI Agents for Maximum Business Efficien...Noah Loul Artificial intelligence is changing how businesses operate. Companies are using AI agents to automate tasks, reduce time spent on repetitive work, and focus more on high-value activities. Noah Loul, an AI strategist and entrepreneur, has helped dozens of companies streamline their operations using smart automation. He believes AI agents aren't just tools—they're workers that take on repeatable tasks so your human team can focus on what matters. If you want to reduce time waste and increase output, AI agents are the next move.

HCL Nomad Web – Best Practices and Managing Multiuser Environments

HCL Nomad Web – Best Practices and Managing Multiuser Environmentspanagenda Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-and-managing-multiuser-environments/

HCL Nomad Web is heralded as the next generation of the HCL Notes client, offering numerous advantages such as eliminating the need for packaging, distribution, and installation. Nomad Web client upgrades will be installed “automatically” in the background. This significantly reduces the administrative footprint compared to traditional HCL Notes clients. However, troubleshooting issues in Nomad Web present unique challenges compared to the Notes client.

Join Christoph and Marc as they demonstrate how to simplify the troubleshooting process in HCL Nomad Web, ensuring a smoother and more efficient user experience.

In this webinar, we will explore effective strategies for diagnosing and resolving common problems in HCL Nomad Web, including

- Accessing the console

- Locating and interpreting log files

- Accessing the data folder within the browser’s cache (using OPFS)

- Understand the difference between single- and multi-user scenarios

- Utilizing Client Clocking

Schema-on-Read vs Schema-on-Write

- 1. Schema-on-Read vs Schema-on-Write Amr Awadallah CTO, Cloudera, Inc. [email protected]

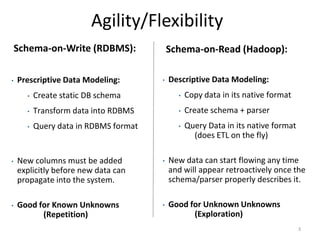

- 2. Schema-on-Read Traditional data systems require users to create a schema before loading any data into the system. This allows such systems to tightly control the placement of the data during load time hence enabling them to answer interactive queries very fast. However, this leads to loss of agility. In this talk I will demonstrate Hadoop's schema-onread capability. Using this approach data can start flowing into the system in its original form, then the schema is parsed at read time (each user can apply their own "data-lens“ to interpret the data). This allows for extreme agility while dealing with complex evolving data structures.

- 3. Agility/Flexibility Schema-on-Write (RDBMS): • Prescriptive Data Modeling: Schema-on-Read (Hadoop): • Descriptive Data Modeling: • Create static DB schema • Copy data in its native format • Transform data into RDBMS • Create schema + parser • Query data in RDBMS format • Query Data in its native format (does ETL on the fly) • New columns must be added explicitly before new data can propagate into the system. • New data can start flowing any time and will appear retroactively once the schema/parser properly describes it. • Good for Known Unknowns (Repetition) • Good for Unknown Unknowns (Exploration) 3

- 4. Traditional Data Stack Business Intelligent Software (OLAP, etc) Datamart Database 200GB/day Extract-Transform-Load Foundational Warehouse Grid Processing System (1st stage ETL) File Server Farm Log Collection Instrumentation 20TB/day