Scylla Summit 2016: Outbrain Case Study - Lowering Latency While Doing 20X IOPS of Cassandra

5 likes, 3,441 views

Outbrain is the world's largest content discovery program. Learn about their use case with Scylla where they lowered latency while doing 20X IOPS of Cassandra.

Recommended

Performance Monitoring: Understanding Your Scylla Cluster

By ScyllaDB. Learn the basics of monitoring Scylla, including monitoring infrastructure and understanding Scylla metrics.

Scylla Summit 2016: Scylla at Samsung SDS

By ScyllaDB. Learn about the challenges, solution, and technical validation for Scylla at Samsung SDS, along with use cases and future plans.

Mesosphere and Contentteam: A New Way to Run Cassandra

By DataStax Academy. We, Ben Whitehead and Robert Stupp, will show you how to run Cassandra on Mesos. We will go through all the technical steps needed to plan, set up, and operate even large-scale Cassandra clusters on Mesos. We also illustrate how the Cassandra-on-Mesos framework helps you set up Cassandra on Mesos, schedule regular maintenance tasks, and manage hardware failures in the heart of your data center.

How netflix manages petabyte scale apache cassandra in the cloud

By Vinay Kumar Chella. Netflix manages petabyte-scale Apache Cassandra databases in the cloud through declarative infrastructure and tooling. They provision new Cassandra clusters quickly using robust AMIs and pre-flight checks. Existing clusters are kept running through declarative control planes that monitor desired states and automatically remedy issues. Netflix migrates software and hardware seamlessly through immutable infrastructure, incremental backups to S3, and parallel data transfers. They apply lessons from failures like accidentally deleting real backup data through over-automation.

Scylla Summit 2018: Introducing ValuStor, A Memcached Alternative Made to Run...

By ScyllaDB. In this presentation, we share approaches to replacing RAM-only caching infrastructure while achieving high performance against a persistent datastore. Memcached has proven very popular, but it also requires its users to sacrifice reliability, scalability, redundancy, availability, and security. To address these issues, Sensaphone implemented a memcached replacement called ValuStor, an easy-to-use key-value database client layer written in C++ that works well with Scylla. ValuStor includes features like client-side write queues, multi-threading support, automatic adaptive consistency, and support for multiple data types (including JSON).

Scylla Summit 2018: Make Scylla Fast Again! Find out how using Tools, Talent,...

By ScyllaDB. Scylla strives to deliver high throughput at low, consistent latencies under any scenario. But in the field things can and do get slower than one would like. Some of those issues come from bad data modelling and anti-patterns, others from a lack of resources or bad system configuration, and in rare cases even from product malfunction.

But how to tell them apart? And once you do, how to understand how to fix your application or reconfigure your system? Scylla has a rich ecosystem of tools available to answer those questions and in this talk we’ll discuss the proper use of some of them and how to take advantage of each tool’s strength. We will discuss real examples using tools like CQL tracing, nodetool commands, the Scylla monitor and others.

Make 2016 your year of SMACK talk

By DataStax Academy. You’ve heard all of the hype, but how can SMACK work for you? In this all-star lineup, you will learn how to create a reactive, scaling, resilient and performant data processing powerhouse. Bringing Akka, Kafka and Mesos together provides a foundation to develop and operate an elastically scalable actor system. We will go through the basics of Akka, Kafka and Mesos and then deep dive into putting them together in an end-to-end (and back again) distributed transaction. Distributed transactions mean producers waiting for one or more consumers to respond. We'll also go through automated ways to induce failures in these systems (using LinkedIn Simoorg) and trace them from start to stop through each component (using Twitter's Zipkin). Finally, you will see how Apache Cassandra and Spark can be combined to add the incredibly scaling storage and data analysis needed in fast data pipelines. With these technologies as a foundation, you have the assurance that scale is never a problem and uptime is default.

Cassandra: An Alien Technology That's not so Alien

By Brian Hess. This document provides an overview of Cassandra, an open source distributed database. It discusses Cassandra's query language (CQL), which is similar to SQL but only supports Cassandra operations. It also covers Cassandra's tabular data model with rows, columns, and strong schemas. The document reviews tools for working with Cassandra and best practices for data modeling and application methodology, emphasizing denormalization and idempotency over transactions. It notes limitations of batches, secondary indices, and lightweight transactions in Cassandra.

Building Large-Scale Stream Infrastructures Across Multiple Data Centers with...

By DataWorks Summit/Hadoop Summit. This document discusses common patterns for running Apache Kafka across multiple data centers. It describes stretched clusters, active/passive, and active/active cluster configurations. For each pattern, it covers how to handle failures and recover consumer offsets when switching data centers. It also discusses considerations for using Kafka with other data stores in a multi-DC environment and future work like timestamp-based offset seeking.

Distribute Key Value Store

By Santal Li. This document provides an overview of distributed key-value stores and Cassandra. It discusses key concepts like data partitioning, replication, and consistency models. It also summarizes Cassandra's features such as high availability, elastic scalability, and support for different data models. Code examples are given to demonstrate basic usage of the Cassandra client API for operations like insert, get, multiget and range queries.

Cassandra Summit 2014: Active-Active Cassandra Behind the Scenes

By DataStax Academy. Presenter: Roopa Tangirala, Senior Cloud Data Architect at Netflix

High availability is an important requirement for any online business, and architecting around failures, expecting infrastructure to fail and remaining highly available even then, is the key to success. One such effort here at Netflix was the Active-Active implementation, where we provided region resiliency. This presentation will give a brief overview of the active-active implementation and how it leveraged Cassandra’s architecture in the backend to achieve its goal. It will cover our journey through A-A from Cassandra’s perspective and the data validation we did to prove the backend would work without impacting customer experience. The various problems we faced, like long repair times and gc_grace settings, plus lessons learned and what we would do differently next time around, will also be discussed.

NewSQL overview, Feb 2015

By Ivan Glushkov. NewSQL overview:

- History of RDBMS

- The reasons why the NoSQL concept appeared

- Why NoSQL was not enough, the necessity of NewSQL

- Characteristics of NewSQL

- 7 DBs that belong to NewSQL

- Overview Table with main properties

Client Drivers and Cassandra, the Right Way

By DataStax Academy. This document discusses Cassandra drivers and how to optimize queries. It begins with an introduction to Cassandra drivers and examples of basic usage in Java, Python and Ruby. It then covers the differences between synchronous and asynchronous queries. Prepared statements and consistency levels are also discussed. The document explores how consistency levels, driver policies and node outages impact performance and latency. Hinted handoff is described as a performance optimization that stores hints for writes missed by down nodes. Lastly, it provides best practices around driver usage.

BigData Developers MeetUp

By Christian Johannsen. Christian Johannsen presents on evaluating Apache Cassandra as a cloud database. Cassandra is optimized for cloud infrastructure with features like transparent elasticity, scalability, high availability, easy data distribution and redundancy. It supports multiple data types, is easy to manage, low cost, supports multiple infrastructures and has security features. A demo of DataStax OpsCenter and Apache Spark on Cassandra is shown.

Webinar: Getting Started with Apache Cassandra

By DataStax. Would you like to learn how to use Cassandra but don’t know where to begin? Want to get your feet wet but you’re lost in the desert? Longing for a cluster when you don’t even know how to set up a node? Then look no further! Rebecca Mills, Junior Evangelist at DataStax, will guide you in the webinar “Getting Started with Apache Cassandra...”

You'll get an overview of Planet Cassandra’s resources to get you started quickly and easily. Rebecca will take you down the path that's right for you, whether you are a developer or administrator. Join if you are interested in getting Cassandra up and working in the way that suits you best.

C* Summit 2013: Cassandra at eBay Scale by Feng Qu and Anurag Jambhekar

By DataStax Academy. We have seen rapid adoption of C* at eBay in the past two years. We have made tremendous efforts to integrate C* into existing database platforms, including Oracle, MySQL, Postgres, MongoDB, XMP, etc. We have also scaled C* to meet business requirements and encountered technical challenges you only see at eBay scale: 100 TB of data on hundreds of nodes. We will share our experience of deployment automation, management, monitoring, and reporting for both Apache Cassandra and DataStax Enterprise.

A glimpse of cassandra 4.0 features netflix

By Vinay Kumar Chella. These slides are from the recent meetup at Uber, "Apache Cassandra at Uber and Netflix", on new features in 4.0.

Abstract:

A glimpse of Cassandra 4.0 features:

There are a lot of exciting features coming in 4.0, but this talk covers some of the features that we at Netflix are particularly excited about and looking forward to. In this talk, we present an overview of just some of the many improvements shipping soon in 4.0.

Devops kc

By Philip Thompson. Philip Thompson is a software engineer at DataStax and contributor to Apache Cassandra. The document discusses Apache Cassandra, an open source, distributed database built for scalability and high availability. It describes Cassandra's architecture including data distribution across nodes, replication, consistency levels, and mechanisms for repair and anti-entropy.

Intro to cassandra

By Aaron Ploetz. An introduction to the Apache Cassandra database, as presented at the Northern Illinois Coders user group on 2014-10-22.

Scaling with sync_replication using Galera and EC2

By Marco Tusa. A challenging architecture design and proof of concept based on a real case study using a synchronous solution.

A customer asked me to investigate and design a MySQL architecture to support his application serving shops around the globe.

Scale out and scale in based on sales seasons.

Tales From The Front: An Architecture For Multi-Data Center Scalable Applicat...

By DataStax Academy.

- Quick review of Cassandra functionality that applies to this use case

- Common Data Center and application architectures for highly available inventory applications, and why they were designed that way

- Cassandra implementations vis-a-vis infrastructure capabilities

- The impedance mismatch: compromises made to fit into IT infrastructures designed and implemented with an old mindset

Operations, Consistency, Failover for Multi-DC Clusters (Alexander Dejanovski...

By DataStax. This document discusses operations, consistency, and failover for multi-datacenter Apache Cassandra clusters. It describes how to configure replication strategies to distribute data across DCs, maintain consistency levels, and handle reads and writes between DCs. It also covers adding a new DC, removing a DC, running repairs across DCs, and designing for failover between DCs in the event of network partitions or DC outages.

Zero Downtime Schema Changes - Galera Cluster - Best Practices

By Severalnines. Database schema changes are usually not popular among DBAs or sysadmins, especially when you are operating a cluster and cannot afford to switch off the service during a maintenance window. There are different ways to perform schema changes, some procedures being more complicated than others.

Galera Cluster is great at making your MySQL database highly available, but are you concerned about schema changes? Is an ALTER TABLE statement something that requires a lot of advance scheduling? What is the impact on your database uptime?

This is a common question, since ALTER operations in MySQL usually cause the table to be locked and rebuilt – which can potentially be disruptive to your live applications. Fortunately, Galera Cluster has mechanisms to replicate DDL across its nodes.

In these slides, you will learn about the following:

- How to perform Zero Downtime Schema Changes

- 2 main methods: TOI and RSU

- Total Order Isolation: predictability and consistency

- Rolling Schema Upgrades

- pt-online-schema-change

- Schema synchronization with re-joining nodes

- Recommended procedures

- Common pitfalls/user errors

The slides are courtesy of Seppo Jaakola, CEO, Codership - creators of Galera Cluster

Scylla on Kubernetes: Introducing the Scylla Operator

By ScyllaDB. The document introduces the Scylla Operator for Kubernetes, which provides a management layer for Scylla on Kubernetes. It addresses some limitations of using StatefulSets alone to run Scylla, such as safe scale down operations and tracking member identity. The operator implements the controller pattern with custom resources to deploy and manage Scylla clusters on Kubernetes. It handles tasks like cluster creation and scale up/down while addressing issues like local storage failures.

Cassandra Introduction & Features

By Phil Peace. Cassandra is an open source, distributed, decentralized, and fault-tolerant NoSQL database that is highly scalable and provides tunable consistency. It was created at Facebook based on Amazon's Dynamo and Google's Bigtable. Cassandra's key features include elastic scalability through horizontal partitioning, high availability with no single point of failure, tunable consistency levels, and a column-oriented data model with a CQL interface. Major companies like eBay, Netflix, and Apple use Cassandra for applications requiring large volumes of writes, geographical distribution, and evolving data models.

Connecting kafka message systems with scylla

By Maheedhar Gunturu. Maheedhar Gunturu presented on connecting Kafka message systems with Scylla. He discussed the benefits of message queues like Kafka, including centralized infrastructure, buffering capabilities, and streaming data transformations. He then explained Kafka Connect, which provides a standardized framework for building distributed and scalable connectors. Scylla and Cassandra connectors are available today, with a Scylla shard-aware connector being developed.

Cassandra internals

By narsiman. The document summarizes a meetup about Cassandra internals. It provides an agenda that discusses what Cassandra is, its data placement and replication, read and write paths, compaction, and repair. Key concepts covered include Cassandra being decentralized with no single point of failure, its peer-to-peer architecture, and data being eventually consistent. A demo is also included to illustrate gossip, replication, and how data is handled during node failures and recoveries.

PaaSTA: Autoscaling at Yelp

By Nathan Handler. Capacity planning is a difficult challenge faced by most companies. If you have too few machines, you will not have enough compute resources available to deal with heavy loads. On the other hand, if you have too many machines, you are wasting money. This is why companies have started investing in automatically scaling services and infrastructure to minimize the amount of wasted money and resources.

In this talk, Nathan will describe how Yelp is using PaaSTA, a PaaS built on top of open source tools including Docker, Mesos, Marathon, and Chronos, to automatically and gracefully scale services and the underlying cluster. He will go into detail about how this functionality was implemented and the design decisions that were made while architecting the system. He will also provide a brief comparison of how this approach differs from existing solutions.

M6d cassandrapresentation

By Edward Capriolo. Cassandra is used for real-time bidding in online advertising. It processes billions of bid requests per day with low latency requirements. Segment data, which assigns product or service affinity to user groups, is stored in Cassandra to reduce calculations and allow users to be bid on sooner. Tuning the cache size and understanding the active dataset helps optimize performance.

Red Hat Storage Day Seattle: Stabilizing Petabyte Ceph Cluster in OpenStack C...

By Red_Hat_Storage. Cisco uses Ceph for storage in its OpenStack cloud platform. The initial Ceph cluster design used HDDs which caused stability issues as the cluster grew to petabytes in size. Improvements included throttling client IO, upgrading Ceph versions, moving MON metadata to SSDs, and retrofitting journals to NVMe SSDs. These steps stabilized performance and reduced recovery times. Lessons included having clear stability goals and automating testing to prevent technical debt from shortcuts.

Similar to Scylla Summit 2016: Outbrain Case Study - Lowering Latency While Doing 20X IOPS of Cassandra (20)

Cpu Caches

Cpu Cachesshinolajla This document provides an overview of CPU caches, including definitions of key terms like SMP, NUMA, data locality, cache lines, and cache architectures. It discusses cache hierarchies, replacement strategies, write policies, inter-socket communication, and cache coherency protocols. Latency numbers for different levels of cache and memory are presented. The goal is to provide information to help improve application performance.

CPU Caches - Jamie Allen

CPU Caches - Jamie Allenjaxconf This document provides an overview of CPU caches, including definitions of key terms like SMP, NUMA, data locality, cache lines, and cache architectures. It discusses cache hierarchies, replacement strategies, write policies, inter-socket communication, and cache coherency protocols. Latency numbers for different levels of cache and memory are presented.

From Message to Cluster: A Realworld Introduction to Kafka Capacity Planning

From Message to Cluster: A Realworld Introduction to Kafka Capacity Planningconfluent From Message to Cluster: A Realworld Introduction to Kafka Capacity Planning, Jason Bell, Kafka DevOps Engineer @ Digitalis.io

Eventual Consistency @WalmartLabs with Kafka, Avro, SolrCloud and Hadoop

Eventual Consistency @WalmartLabs with Kafka, Avro, SolrCloud and HadoopAyon Sinha This document discusses Walmart Labs' use of eventual consistency with Kafka, SolrCloud, and Hadoop to power their large-scale ecommerce operations. It describes some of the challenges they faced, including slow query times, garbage collection pauses, and Zookeeper configuration issues. The key aspects of their solution involved using Kafka to handle asynchronous data ingestion into SolrCloud and Hadoop, batching updates for improved performance, dedicating hardware resources, and monitoring metrics to identify issues. This architecture has helped Walmart Labs scale to support their customers' high volumes of online shopping.

Memory, Big Data, NoSQL and Virtualization

Memory, Big Data, NoSQL and VirtualizationBigstep In-memory processing has started to become the norm in large scale data handling. This is a close-to-the-metal analysis of highly important but often neglected aspects of memory access times and how they impact big data and NoSQL technologies. We cover aspects such as the TLB, Transparent Huge Pages, the QPI Link, Hyperthreading and the impact of virtualization on high-memory footprint applications. We present benchmarks of various technologies ranging from Cloudera’s Impala to Couchbase and how they are impacted by the underlying hardware. The key takeaway is a better understanding of how to size a cluster, how to choose a cloud provider and an instance type for big data and NoSQL workloads and why not every core or GB of RAM is created equal.

Flink Forward Berlin 2017: Robert Metzger - Keep it going - How to reliably a...

Flink Forward Berlin 2017: Robert Metzger - Keep it going - How to reliably a...Flink Forward Let’s be honest: Running a distributed stateful stream processor that is able to handle terabytes of state and tens of gigabytes of data per second while being highly available and correct (in an exactly-once sense) does not work without any planning, configuration and monitoring. While the Flink developer community tries to make everything as simple as possible, it is still important to be aware of all the requirements and implications. In this talk, we will provide some insights into the greatest operations mysteries of Flink from a high-level perspective: - Capacity and resource planning: Understand the theoretical limits. - Memory and CPU configuration: Distribute resources according to your needs. - Setting up High Availability: Planning for failures. - Checkpointing and State Backends: Ensure correctness and fast recovery. For each of the listed topics, we will introduce the concepts of Flink and provide some best practices we have learned over the past years supporting Flink users in production.

Cистема распределенного, масштабируемого и высоконадежного хранения данных дл...

Cистема распределенного, масштабируемого и высоконадежного хранения данных дл...Ontico 1. The document discusses a distributed, scalable, and highly reliable data storage system for virtual machines and other uses called HighLoad++.

2. It proposes using a simplified design that focuses on core capabilities like data replication and recovery to achieve both low costs and high performance.

3. The design splits data into chunks that are replicated across multiple servers and includes metadata servers to track the location and versions of chunks to enable eventual consistency despite failures.

C* Summit 2013: Netflix Open Source Tools and Benchmarks for Cassandra by Adr...

C* Summit 2013: Netflix Open Source Tools and Benchmarks for Cassandra by Adr...DataStax Academy Netflix has updated and added new tools and benchmarks for Cassandra in the last year. In this talk we will cover the latest additions and recipes for the Astyanax Java client, updates to Priam to support Cassandra 1.2 Vnodes, plus newly released and upcoming tools that are all part of the NetflixOSS platform. Following on from the Cassandra on SSD on AWS benchmark that was run live during the 2012 Summit, we've been benchmarking a large write intensive multi-region cluster to see how far we can push it. Cassandra is the data storage and global replication foundation for the Cloud Native architecture that runs Netflix streaming for 36 Million users. Netflix is also offering a Cloud Prize for open source contributions to NetflixOSS, and there are ten categories including Best Datastore Integration and Best Contribution to Performance Improvements, with $10K cash and $5K of AWS credits for each winner. We'd like to pay you to use our free software!

Tuning the Kernel for Varnish Cache

Tuning the Kernel for Varnish CachePer Buer Tuning the Linux IP stack for high performance Varnish + some basic Varnish tuning tips. Talk given at Linuxcon 2016 Berlin

Journey to Stability: Petabyte Ceph Cluster in OpenStack Cloud

Journey to Stability: Petabyte Ceph Cluster in OpenStack CloudCeph Community Cisco Cloud Services provides an OpenStack platform to Cisco SaaS applications using a petabyte-scale Ceph cluster. The initial Ceph cluster design led to stability problems as usage grew past 50% capacity. Improvements such as client IO throttling, NVMe journaling, upgrading Ceph versions, and moving the MON levelDB to SSD stabilized the cluster and reduced recovery times from hardware failures. Lessons learned included the need for devops practices, knowledge sharing, performance modeling, and avoiding technical debt from shortcuts.

Journey to Stability: Petabyte Ceph Cluster in OpenStack Cloud

Journey to Stability: Petabyte Ceph Cluster in OpenStack CloudPatrick McGarry Cisco Cloud Services provides an OpenStack platform to Cisco SaaS applications using a worldwide deployment of Ceph clusters storing petabytes of data. The initial Ceph cluster design experienced major stability problems as the cluster grew past 50% capacity. Strategies were implemented to improve stability including client IO throttling, backfill and recovery throttling, upgrading Ceph versions, adding NVMe journals, moving the MON levelDB to SSDs, rebalancing the cluster, and proactively detecting slow disks. Lessons learned included the importance of devops practices, sharing knowledge, rigorous testing, and balancing performance, cost and time.

RedisConf18 - Redis at LINE - 25 Billion Messages Per Day

RedisConf18 - Redis at LINE - 25 Billion Messages Per DayRedis Labs LINE uses Redis for caching and primary storage of messaging data. It operates over 60 Redis clusters with over 1,000 machines and 10,000 nodes to handle 25 billion messages per day. LINE developed its own Redis client and monitoring system to support client-side sharding without a proxy, automated failure detection, and scalable cluster monitoring. While the official Redis Cluster was tested, it exhibited some issues around memory usage and maximum node size for LINE's large scale needs.

HBaseCon 2013: How to Get the MTTR Below 1 Minute and More

HBaseCon 2013: How to Get the MTTR Below 1 Minute and MoreCloudera, Inc. This document discusses ways to reduce the mean time to recovery (MTTR) in HBase to below 1 minute. It outlines improvements made to failure detection, region reassignment, and data recovery processes. Faster failure detection is achieved by lowering ZooKeeper timeouts to 30 seconds from 180. Region reassignment is made faster through parallelism. Data recovery is improved by rewriting the recovery process to directly write edits to regions instead of HDFS. These changes have reduced recovery times from 10-15 minutes to less than 1 minute in tests.

Building Stream Infrastructure across Multiple Data Centers with Apache Kafka

Building Stream Infrastructure across Multiple Data Centers with Apache KafkaGuozhang Wang To manage the ever-increasing volume and velocity of data within your company, you have successfully made the transition from single machines and one-off solutions to large distributed stream infrastructures in your data center, powered by Apache Kafka. But what if one data center is not enough? I will describe building resilient data pipelines with Apache Kafka that span multiple data centers and points of presence, and provide an overview of best practices and common patterns while covering key areas such as architecture guidelines, data replication, and mirroring as well as disaster scenarios and failure handling.

Flink Forward SF 2017: Stephan Ewen - Experiences running Flink at Very Large...

Flink Forward SF 2017: Stephan Ewen - Experiences running Flink at Very Large...Flink Forward This talk shares experiences from deploying and tuning Flink stream processing applications for very large scale. We share lessons learned from users, contributors, and our own experiments about running demanding streaming jobs at scale. The talk will explain what aspects currently render a job as particularly demanding, show how to configure and tune a large scale Flink job, and outline what the Flink community is working on to make the out-of-the-box experience as smooth as possible. We will, for example, dive into - analyzing and tuning checkpointing - selecting and configuring state backends - understanding common bottlenecks - understanding and configuring network parameters

Openstack meetup lyon_2017-09-28

Openstack meetup lyon_2017-09-28Xavier Lucas This document summarizes the key aspects of a public cloud archive storage solution. It offers affordable and unlimited storage using standard transfer protocols. Data is stored using erasure coding for redundancy and fault tolerance. Accessing archived data takes 10 minutes to 12 hours depending on previous access patterns, with faster access for inactive archives. The solution uses middleware to handle sealing and unsealing archives along with tracking access patterns to regulate retrieval times.

Bloomreach - BloomStore Compute Cloud Infrastructure

Bloomreach - BloomStore Compute Cloud Infrastructure bloomreacheng The document discusses BloomReach's efforts to scale their data infrastructure to support hundreds of millions of documents. They implemented an elastic infrastructure called BC2 that dynamically provisions and scales Solr and Cassandra clusters in the cloud on demand. This allows each pipeline or job to have isolated resources, improves performance and stability over sharing clusters, and provides cost savings through only provisioning necessary resources.

Hadoop 3.0 - Revolution or evolution?

Hadoop 3.0 - Revolution or evolution?Uwe Printz Updated version of my talk about Hadoop 3.0 with the newest community updates.

Talk given at the codecentric Meetup Berlin on 31.08.2017 and on Data2Day Meetup on 28.09.2017 in Heidelberg.

More from ScyllaDB (20)

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep Dive

Designing Low-Latency Systems with Rust and ScyllaDB: An Architectural Deep DiveScyllaDB Want to learn practical tips for designing systems that can scale efficiently without compromising speed?

Join us for a workshop where we’ll address these challenges head-on and explore how to architect low-latency systems using Rust. During this free interactive workshop oriented for developers, engineers, and architects, we’ll cover how Rust’s unique language features and the Tokio async runtime enable high-performance application development.

As you explore key principles of designing low-latency systems with Rust, you will learn how to:

- Create and compile a real-world app with Rust

- Connect the application to ScyllaDB (NoSQL data store)

- Negotiate tradeoffs related to data modeling and querying

- Manage and monitor the database for consistently low latencies

Powering a Billion Dreams: Scaling Meesho’s E-commerce Revolution with Scylla...

Powering a Billion Dreams: Scaling Meesho’s E-commerce Revolution with Scylla...ScyllaDB With over a billion Indians set to shop online, Meesho is redefining e-commerce by making it accessible, affordable, and inclusive at an unprecedented scale. But scaling for Bharat isn’t just about growth—it’s about building a tech backbone that can handle massive traffic surges, dynamic pricing, real-time recommendations, and seamless user experiences. In this session, we’ll take you behind the scenes of Meesho’s journey in democratizing e-commerce while operating at Monster Scale. Discover how ScyllaDB plays a crucial role in handling millions of transactions, optimizing catalog ranking, and ensuring ultra-low-latency operations. We’ll deep dive into our real-world use cases, performance optimizations, and the key architectural decisions that have helped us scale effortlessly.

Leading a High-Stakes Database Migration

Leading a High-Stakes Database MigrationScyllaDB Navigating common mistakes and critical success factors

Is your team considering or starting a database migration? Learn from the frontline experience gained guiding hundreds of high-stakes migration projects – from startups to Google and Twitter. Join us as Miles Ward and Tim Koopmans have a candid chat about what tends to go wrong and how to steer things right.

We will explore:

- What really pushes teams to the database migration tipping point

- How to scope and manage the complexity of a migration

- Proven migration strategies and antipatterns

- Where complications commonly arise and ways to prevent them

Expect plenty of war stories, along with pragmatic ways to make your own migration as “blissfully boring” as possible.

Achieving Extreme Scale with ScyllaDB: Tips & Tradeoffs

Achieving Extreme Scale with ScyllaDB: Tips & TradeoffsScyllaDB Explore critical strategies – and antipatterns – for achieving low latency at extreme scale

If you’re getting started with ScyllaDB, you’re probably intrigued by its potential to achieve predictable low latency at extreme scale. But how do you ensure that you’re maximizing that potential for your team’s specific workloads and technical requirements?

This webinar offers practical advice for navigating the various decision points you’ll face as you evaluate ScyllaDB for your project and move into production. We’ll cover the most critical considerations, tradeoffs, and recommendations related to:

- Infrastructure selection

- ScyllaDB configuration

- Client-side setup

- Data modeling

Join us for an inside look at the lessons learned across thousands of real-world distributed database projects.

Securely Serving Millions of Boot Artifacts a Day by João Pedro Lima & Matt ...

Securely Serving Millions of Boot Artifacts a Day by João Pedro Lima & Matt ...ScyllaDB Cloudflare’s boot infrastructure dynamically generates and signs boot artifacts for nodes worldwide, ensuring secure, scalable, and customizable deployments. This talk dives into its architecture, scaling decisions, and how it enables seamless testing while maintaining a strong chain of trust.

How Agoda Scaled 50x Throughput with ScyllaDB by Worakarn Isaratham

How Agoda Scaled 50x Throughput with ScyllaDB by Worakarn IsarathamScyllaDB Learn about Agoda's performance tuning strategies for ScyllaDB. Worakarn shares how they optimized disk performance, fine-tuned compaction strategies, and adjusted SSTable settings to match their workload for peak efficiency.

How Yieldmo Cut Database Costs and Cloud Dependencies Fast by Todd Coleman

How Yieldmo Cut Database Costs and Cloud Dependencies Fast by Todd ColemanScyllaDB Yieldmo processes hundreds of billions of ad requests daily with subsecond latency. Initially using DynamoDB for its simplicity and stability, they faced rising costs, suboptimal latencies, and cloud provider lock-in. This session explores their journey to ScyllaDB’s DynamoDB-compatible API.

ScyllaDB: 10 Years and Beyond by Dor Laor

ScyllaDB: 10 Years and Beyond by Dor LaorScyllaDB There’s a common adage that it takes 10 years to develop a file system. As ScyllaDB reaches that 10 year milestone in 2025, it’s the perfect time to reflect on the last decade of ScyllaDB development – both hits and misses. It’s especially appropriate given that our project just reached a critical mass with certain scalability and elasticity goals that we dreamed up years ago. This talk will cover how we arrived at ScyllaDB X Cloud achieving our initial vision, and share where we’re heading next.

Reduce Your Cloud Spend with ScyllaDB by Tzach Livyatan

Reduce Your Cloud Spend with ScyllaDB by Tzach LivyatanScyllaDB This talk will explore why ScyllaDB Cloud is a cost-effective alternative to DynamoDB, highlighting efficient design implementations like shared compute, local NVMe storage, and storage compression. It will also discuss new X Cloud features, better plans and pricing, and a direct cost comparison between ScyllaDB and DynamoDB.

Migrating 50TB Data From a Home-Grown Database to ScyllaDB, Fast by Terence Liu

Migrating 50TB Data From a Home-Grown Database to ScyllaDB, Fast by Terence LiuScyllaDB Terence shares how Clearview AI's infra needs evolved and why they chose ScyllaDB after first-principles research. From fast ingestion to production queries, the talk explores their journey with Rust, embedded DB readers, and the ScyllaDB Rust driver—plus config tips for bulk ingestion and achieving data parity.

Vector Search with ScyllaDB by Szymon Wasik

Vector Search with ScyllaDB by Szymon WasikScyllaDB Vector search is an essential element of contemporary machine learning pipelines and AI tools. This talk will share preliminary results on the forthcoming vector storage and search features in ScyllaDB. By leveraging Scylla's scalability and USearch library's performance, we have designed a system with exceptional query latency and throughput. The talk will cover vector search use cases, our roadmap, and a comparison of our initial implementation with other vector databases.

Workload Prioritization: How to Balance Multiple Workloads in a Cluster by Fe...

Workload Prioritization: How to Balance Multiple Workloads in a Cluster by Fe...ScyllaDB Workload Prioritization is a ScyllaDB exclusive feature for controlling how different workloads compete for system resources. It's used to prioritize urgent application requests that require immediate response times versus others that can tolerate slighter delays (e.g., large scans). Join this session for a demo of how applying workload prioritization reduces infrastructure costs while ensuring predictable performance at scale.

Two Leading Approaches to Data Virtualization, and Which Scales Better? by Da...

Two Leading Approaches to Data Virtualization, and Which Scales Better? by Da...ScyllaDB Should you move code to data or data to code? Conventional wisdom favors the former, but cloud trends push the latter. This session by the creator of PACELC explores the shift, its risks, and the ongoing debate in data virtualization between push- and pull-based processing.

Scaling a Beast: Lessons from 400x Growth in a High-Stakes Financial System b...

Scaling a Beast: Lessons from 400x Growth in a High-Stakes Financial System b...ScyllaDB Scaling from 66M to 25B+ records in a core financial system is tough—every number must be right, and data must be fresh. In this session, Dmytro shares real-world strategies to balance accuracy with real-time performance and avoid scaling pitfalls. Expect purely practical, no-BS insights for engineers.

Object Storage in ScyllaDB by Ran Regev, ScyllaDB

Object Storage in ScyllaDB by Ran Regev, ScyllaDBScyllaDB In this talk we take a look at how Object Storage is used by Scylla. We focus on its current use, namely for backup, and we look at the shift in implementation from an external tool to native Scylla. We take a close look at the complexity of backup and restore, mostly in the face of topology changes and token assignments. We also take a glimpse into the future and see how Scylla is going to use Object Storage as its native storage. We explore a few possible applications of it and understand the tradeoffs.

Lessons Learned from Building a Serverless Notifications System by Srushith R...

Lessons Learned from Building a Serverless Notifications System by Srushith R...ScyllaDB Reaching your audience isn’t just about email. Learn how we built a scalable, cost-efficient notifications system using AWS serverless—handling SMS, WhatsApp, and more. From architecture to throttling challenges, this talk dives into key decisions for high-scale messaging.

A Dist Sys Programmer's Journey into AI by Piotr Sarna

A Dist Sys Programmer's Journey into AI by Piotr SarnaScyllaDB This talk explores the culture shock of transitioning from distributed databases to AI. While AI operates at massive scale, distributed storage and compute remain essential. Discover key differences, unexpected parallels, and how database expertise applies in the AI world.

High Availability: Lessons Learned by Paul Preuveneers

High Availability: Lessons Learned by Paul PreuveneersScyllaDB How does ScyllaDB keep your data safe, and your mission critical applications running smoothly, even in the face of disaster? In this talk we’ll discuss what we have learned about High Availability, how it is implemented within ScyllaDB and what that means for your business. You’ll learn about ScyllaDB cloud architecture design, consistency, replication and even load balancing and much more.

How Natura Uses ScyllaDB and ScyllaDB Connector to Create a Real-time Data Pi...

How Natura Uses ScyllaDB and ScyllaDB Connector to Create a Real-time Data Pi...ScyllaDB Natura, a top global cosmetics brand with 3M+ beauty consultants in Latin America, processes massive data for orders, campaigns, and analytics. In this talk, Rodrigo Luchini & Marcus Monteiro share how Natura leverages ScyllaDB’s CDC Source Connector for real-time sales insights.

Persistence Pipelines in a Processing Graph: Mutable Big Data at Salesforce b...

Persistence Pipelines in a Processing Graph: Mutable Big Data at Salesforce b...ScyllaDB This is a case study on managing mutable big data: Exploring the evolution of the persistence layer in a processing graph, tackling design challenges, and refining key operational principles along the way.

Recently uploaded (20)

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...

Enhancing ICU Intelligence: How Our Functional Testing Enabled a Healthcare I...Impelsys Inc. Impelsys provided a robust testing solution, leveraging a risk-based and requirement-mapped approach to validate ICU Connect and CritiXpert. A well-defined test suite was developed to assess data communication, clinical data collection, transformation, and visualization across integrated devices.

Cybersecurity Identity and Access Solutions using Azure AD

Cybersecurity Identity and Access Solutions using Azure ADVICTOR MAESTRE RAMIREZ Cybersecurity Identity and Access Solutions using Azure AD

Cyber Awareness overview for 2025 month of security

Cyber Awareness overview for 2025 month of securityriccardosl1 Cyber awareness training educates employees on the risks associated with the internet and malicious emails.

Complete Guide to Advanced Logistics Management Software in Riyadh.pdf

Complete Guide to Advanced Logistics Management Software in Riyadh.pdfSoftware Company Explore the benefits and features of advanced logistics management software for businesses in Riyadh. This guide delves into the latest technologies, from real-time tracking and route optimization to warehouse management and inventory control, helping businesses streamline their logistics operations and reduce costs. Learn how implementing the right software solution can enhance efficiency, improve customer satisfaction, and provide a competitive edge in the growing logistics sector of Riyadh.

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungen

HCL Nomad Web – Best Practices und Verwaltung von Multiuser-Umgebungenpanagenda Webinar Recording: https://www.panagenda.com/webinars/hcl-nomad-web-best-practices-und-verwaltung-von-multiuser-umgebungen/

HCL Nomad Web is heralded as the next generation of the HCL Notes client and offers numerous advantages, such as eliminating the need for packaging, distribution, and installation. Nomad Web client updates are installed “automatically” in the background, which significantly reduces administrative overhead compared to traditional HCL Notes clients. However, troubleshooting in Nomad Web presents unique challenges compared to the Notes client.

Join Christoph and Marc as they demonstrate how the troubleshooting process in HCL Nomad Web can be simplified to ensure a smooth and efficient user experience.

In this webinar, we will explore effective strategies for diagnosing and resolving common problems in HCL Nomad Web, including:

- Accessing the console

- Finding and interpreting log files

- Accessing the data folder in the browser's cache (using OPFS)

- Understanding the differences between single-user and multi-user scenarios

- Using the client clocking feature

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...

Transcript: #StandardsGoals for 2025: Standards & certification roundup - Tec...BookNet Canada Book industry standards are evolving rapidly. In the first part of this session, we’ll share an overview of key developments from 2024 and the early months of 2025. Then, BookNet’s resident standards expert, Tom Richardson, and CEO, Lauren Stewart, have a forward-looking conversation about what’s next.

Link to recording, presentation slides, and accompanying resource: https://bnctechforum.ca/sessions/standardsgoals-for-2025-standards-certification-roundup/

Presented by BookNet Canada on May 6, 2025 with support from the Department of Canadian Heritage.

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager APIUiPathCommunity Join this UiPath Community Berlin meetup to explore the Orchestrator API, Swagger interface, and the Test Manager API. Learn how to leverage these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

Semantic Cultivators : The Critical Future Role to Enable AI

Semantic Cultivators : The Critical Future Role to Enable AIartmondano By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat The MCP (Model Context Protocol) is a framework designed to manage context and interaction within complex systems. This SlideShare presentation will provide a detailed overview of the MCP Model, its applications, and how it plays a crucial role in improving communication and decision-making in distributed systems. We will explore the key concepts behind the protocol, including the importance of context, data management, and how this model enhances system adaptability and responsiveness. Ideal for software developers, system architects, and IT professionals, this presentation will offer valuable insights into how the MCP Model can streamline workflows, improve efficiency, and create more intuitive systems for a wide range of use cases.

Increasing Retail Store Efficiency How can Planograms Save Time and Money.pptx

Increasing Retail Store Efficiency How can Planograms Save Time and Money.pptxAnoop Ashok In today's fast-paced retail environment, efficiency is key. Every minute counts, and every penny matters. One tool that can significantly boost your store's efficiency is a well-executed planogram. These visual merchandising blueprints not only enhance store layouts but also save time and money in the process.

Procurement Insights Cost To Value Guide.pptx

Procurement Insights Cost To Value Guide.pptxJon Hansen Procurement Insights integrated Historic Procurement Industry Archives, serves as a powerful complement — not a competitor — to other procurement industry firms. It fills critical gaps in depth, agility, and contextual insight that most traditional analyst and association models overlook.

Learn more about this value-driven proprietary service offering here.

Mobile App Development Company in Saudi Arabia

Mobile App Development Company in Saudi ArabiaSteve Jonas EmizenTech is a globally recognized software development company, proudly serving businesses since 2013. With 11+ years of industry experience and a team of 200+ skilled professionals, we have successfully delivered 1200+ projects across various sectors. As a leading mobile app development company in Saudi Arabia, we offer end-to-end solutions for iOS, Android, and cross-platform applications. Our apps are known for their user-friendly interfaces, scalability, high performance, and strong security features. We tailor each mobile application to meet the unique needs of different industries, ensuring a seamless user experience. EmizenTech is committed to turning your vision into a powerful digital product that drives growth, innovation, and long-term success in the competitive mobile landscape of Saudi Arabia.

AI and Data Privacy in 2025: Global Trends

AI and Data Privacy in 2025: Global TrendsInData Labs In this infographic, we explore how businesses can implement effective governance frameworks to address AI data privacy. Understanding it is crucial for developing effective strategies that ensure compliance, safeguard customer trust, and leverage AI responsibly. Equip yourself with insights that can drive informed decision-making and position your organization for success in the future of data privacy.

This infographic contains:

-AI and data privacy: Key findings

-Statistics on AI data privacy in the today’s world

-Tips on how to overcome data privacy challenges

-Benefits of AI data security investments.

Keep up-to-date on how AI is reshaping privacy standards and what this entails for both individuals and organizations.

Into The Box Conference Keynote Day 1 (ITB2025)

Into The Box Conference Keynote Day 1 (ITB2025)Ortus Solutions, Corp This is the keynote of the Into the Box conference, highlighting the release of the BoxLang JVM language, its key enhancements, and its vision for the future.

TrsLabs - Fintech Product & Business Consulting

TrsLabs - Fintech Product & Business ConsultingTrs Labs Hybrid Growth Mandate Model with TrsLabs

Strategic Investments, Inorganic Growth, Business Model Pivoting are critical activities that business don't do/change everyday. In cases like this, it may benefit your business to choose a temporary external consultant.

An unbiased plan driven by clearcut deliverables, market dynamics and without the influence of your internal office equations empower business leaders to make right choices.

Getting things done within a budget within a timeframe is key to Growing Business - No matter whether you are a start-up or a big company

Talk to us & Unlock the competitive advantage

Technology Trends in 2025: AI and Big Data Analytics

Technology Trends in 2025: AI and Big Data AnalyticsInData Labs At InData Labs, we have been keeping an ear to the ground, looking out for AI-enabled digital transformation trends coming our way in 2025. Our report will provide a look into the technology landscape of the future, including:

-Artificial Intelligence Market Overview

-Strategies for AI Adoption in 2025

-Anticipated drivers of AI adoption and transformative technologies

-Benefits of AI and Big data for your business

-Tips on how to prepare your business for innovation

-AI and data privacy: Strategies for securing data privacy in AI models, etc.

Download your free copy nowand implement the key findings to improve your business.

How Can I use the AI Hype in my Business Context?

How Can I use the AI Hype in my Business Context?Daniel Lehner 𝙄𝙨 𝘼𝙄 𝙟𝙪𝙨𝙩 𝙝𝙮𝙥𝙚? 𝙊𝙧 𝙞𝙨 𝙞𝙩 𝙩𝙝𝙚 𝙜𝙖𝙢𝙚 𝙘𝙝𝙖𝙣𝙜𝙚𝙧 𝙮𝙤𝙪𝙧 𝙗𝙪𝙨𝙞𝙣𝙚𝙨𝙨 𝙣𝙚𝙚𝙙𝙨?

Everyone’s talking about AI but is anyone really using it to create real value?

Most companies want to leverage AI. Few know 𝗵𝗼𝘄.

✅ What exactly should you ask to find real AI opportunities?

✅ Which AI techniques actually fit your business?

✅ Is your data even ready for AI?

If you’re not sure, you’re not alone. This is a condensed version of the slides I presented at a Linkedin webinar for Tecnovy on 28.04.2025.

tecnologias de las primeras civilizaciones.pdf

tecnologias de las primeras civilizaciones.pdffjgm517 descaripcion detallada del avance de las tecnologias en mesopotamia, egipto, roma y grecia.

Build Your Own Copilot & Agents For Devs

Build Your Own Copilot & Agents For DevsBrian McKeiver May 2nd, 2025 talk at StirTrek 2025 Conference.

What is Model Context Protocol(MCP) - The new technology for communication bw...

What is Model Context Protocol(MCP) - The new technology for communication bw...Vishnu Singh Chundawat

Scylla Summit 2016: Outbrain Case Study - Lowering Latency While Doing 20X IOPS of Cassandra

- 1. Case Study Shalom Yerushalmy - Production Engineer @ Outbrain Shlomi Livne - VP R&D @ ScyllaDB

- 2. Lowering Latency While Doing 20X IOPS of Cassandra

- 3. About Me • Production Engineer @ Outbrain, Recommendations Group. • Data Operations team. Was in charge of Cassandra, ES, MySQL, Redis, Memcache. • Previously a DevOps Engineer @ EverythingMe. ETL, chef, monitoring.

- 4. About Outbrain • Outbrain is the world’s largest content discovery platform. • Over 557 million unique visitors from across the globe. • 250 billion personalized content recommendations every month. • The Outbrain platform includes: ESPN, CNN, Le Monde, Fox News, The Guardian, Slate, The Telegraph, New York Post, India.com, Sky News and Time Inc.

- 6. Infrastructure at Outbrain • 3 Data Centers. • 7000 Servers. • 2000 Data servers. (Hadoop, Cassandra, ES, Scylla, etc.) • Petabytes of data. • Real hardcore open source devops shop. https://github.com/outbrain

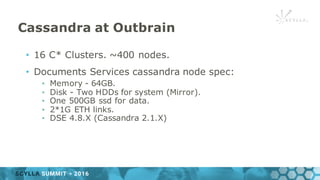

- 7. Cassandra at Outbrain • 16 C* Clusters. ~400 nodes. • Documents Services cassandra node spec: ▪ Memory - 64GB. ▪ Disk - Two HDDs for system (Mirror). ▪ One 500GB ssd for data. ▪ 2*1G ETH links. ▪ DSE 4.8.X (Cassandra 2.1.X)

- 8. Use Case

- 9. Specific use case • Documents (articles) data column family ▪ Holds data in key/value manner ▪ ~2 billion records ▪ 10 nodes in each DC, 3 DCs. ▪ RF: 3, local quorum for writes and reads • Usage: Serving our documents (articles) data to different flows. ▪ ~50K writes per minute, ~2-3M reads per minute per DC and growing… ▪ SLA - single requests in 10 ms and multi requests in 200 ms in the 99%ile. ▪ Same cluster for offline and serving use-cases ▪ Microservices: reads are done using a microservice and not directly
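As a rough illustration of what these figures imply per node, the sketch below computes the quorum size for RF 3 and the average replica-level read rate each node would see. The function names and the assumption that load spreads evenly over the 10 nodes of a DC are ours, not from the deck; read repair or speculative retries would contact more replicas than the quorum.

```python
def quorum(replication_factor: int) -> int:
    """Replicas that must answer for a (local) quorum: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def replica_reads_per_second_per_node(reads_per_minute: float,
                                      replication_factor: int,
                                      nodes_in_dc: int) -> float:
    """Average replica-level reads per second per node.

    Assumes every client read touches exactly a quorum of replicas and that
    load is spread evenly across the DC (both are idealizations).
    """
    replicas_contacted = quorum(replication_factor)
    return reads_per_minute / 60 * replicas_contacted / nodes_in_dc


if __name__ == "__main__":
    # RF 3 -> quorum of 2 replicas.
    print(quorum(3))
    # Upper figure from the slide: ~3M reads per minute per DC, 10 nodes per DC.
    print(replica_reads_per_second_per_node(3_000_000, 3, 10))
```

Under these assumptions, 3M reads per minute at LOCAL_QUORUM works out to about 10,000 replica reads per second on each node.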

- 10. Cassandra deployment V1: Cassandra only, no memcache • Performance: ▪ Scales up to ~1.5M RPM with local quorum. ▪ Quick writes (99%ile up to 5 ms), slower reads (up to 50 ms in 99%ile). • Diagram: Read Microservice, Write Process

- 11. Issues we had with V1 • Consistency vs. performance (local one vs. local quorum) • Growing vs. performance

- 12. Cassandra deployment V2: Cassandra + Memcache • Memcached DC-local cluster over C* with a 15-minute TTL. • Read from memcached first, go to C* on misses. • Read volume from C* ~500K RPM. • Reads are now within SLA (5 ms in 99%ile). • Diagram: Read Microservice, Write Process
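The read path described in this slide is a cache-aside pattern with a TTL. A minimal sketch follows, using an in-memory stand-in for memcached; `TtlCache`, `read_document`, and `fetch_from_db` are hypothetical names for illustration and not Outbrain's actual code.

```python
import time
from typing import Callable, Dict, Optional, Tuple

TTL_SECONDS = 15 * 60  # the 15-minute TTL mentioned in the slide


class TtlCache:
    """In-memory stand-in for a memcached cluster (illustration only)."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl
        self._clock = clock
        self._items: Dict[str, Tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            # Entry is past its TTL: drop it and report a miss.
            del self._items[key]
            return None
        return value

    def set(self, key: str, value: bytes) -> None:
        self._items[key] = (self._clock() + self._ttl, value)


def read_document(key: str,
                  cache: TtlCache,
                  fetch_from_db: Callable[[str], Optional[bytes]]) -> Optional[bytes]:
    """Try the cache first; on a miss, read from the database and fill the cache."""
    value = cache.get(key)
    if value is not None:
        return value
    value = fetch_from_db(key)
    if value is not None:
        cache.set(key, value)
    return value
```

Because a cached entry is served until its TTL expires, a write to the database can stay invisible to readers for up to 15 minutes, and an empty cache sends every read to the database.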

- 13. Issues we had/have with v2 • Stale data from cache • Complex solution • Cold cache -> C* gets full volume

- 14. Scylla/Cassandra side-by-side deployment: Scylla & Cassandra + Memcache • Writes are written in parallel to C* and Scylla. • Two reads are done in parallel: ▪ First: Memcached + Cassandra ▪ Second: Scylla • Diagram: Read Microservice, Write Process

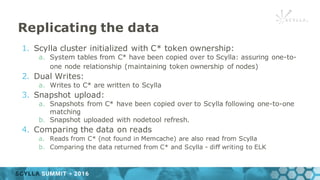

- 15. Replicating the data 1. Scylla cluster initialized with C* token ownership: a. System tables from C* have been copied over to Scylla, ensuring a one-to-one node relationship (maintaining token ownership of nodes) 2. Dual writes: a. Writes to C* are also written to Scylla 3. Snapshot upload: a. Snapshots from C* have been copied over to Scylla following one-to-one matching b. Snapshots uploaded with nodetool refresh. 4. Comparing the data on reads: a. Reads from C* (not found in Memcache) are also read from Scylla b. The data returned from C* and Scylla is compared, and diffs are written to ELK
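Steps 2 and 4 above (dual writes, then comparing reads) can be sketched roughly as follows. This is a simplified, sequential illustration: the deck says the real writes and reads run in parallel, and all function and parameter names here are hypothetical. `report_diff` stands in for whatever ships the diff to ELK.

```python
from typing import Callable, Dict, Optional

Reader = Callable[[str], Optional[bytes]]
Writer = Callable[[str, bytes], None]


def dual_write(key: str, value: bytes,
               write_cassandra: Writer, write_scylla: Writer) -> None:
    """Apply the same write to both clusters (sequentially in this sketch)."""
    write_cassandra(key, value)
    write_scylla(key, value)


def compare_read(key: str,
                 read_cassandra: Reader,
                 read_scylla: Reader,
                 report_diff: Callable[[Dict[str, object]], None]) -> Optional[bytes]:
    """Serve the Cassandra result; read Scylla as a shadow and log any mismatch."""
    primary = read_cassandra(key)
    shadow = read_scylla(key)
    if primary != shadow:
        report_diff({"key": key, "cassandra": primary, "scylla": shadow})
    # The caller always gets the Cassandra answer, so a Scylla discrepancy
    # never affects production traffic.
    return primary
```

Keeping the existing cluster as the source of truth while logging differences makes it possible to validate the new cluster under real traffic before switching reads over.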

- 16. Things we have Learnt Along the Way

- 17. Bursty clients • The cluster is running on a 1 Gb network. • Using the metrics collected on servers at 1-minute intervals, it is impossible to detect networking issues (the cluster is using ~10 MB/s). • Viewing this locally on the servers in a more fine-grained manner (quickstats): ▪ Bursts of traffic lasting less than a second saturate the network (every 5 minutes).
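To see why a 1-minute average hides such bursts, consider a small sketch with made-up traffic numbers: a 1 Gb/s link carries at most 125 MB/s, so a handful of 100 ms buckets at line rate barely moves a per-minute average. The bucket size, baseline, and burst count below are hypothetical values chosen for illustration, not measurements from the deck.

```python
from typing import List

LINK_BYTES_PER_SECOND = 125_000_000  # 1 Gb/s expressed in bytes per second


def average_rate(bytes_per_bucket: List[int], bucket_ms: int) -> float:
    """Average throughput in bytes per second over the whole window."""
    window_seconds = len(bytes_per_bucket) * bucket_ms / 1000
    return sum(bytes_per_bucket) / window_seconds


def saturated_buckets(bytes_per_bucket: List[int], bucket_ms: int,
                      capacity: int = LINK_BYTES_PER_SECOND) -> List[int]:
    """Indexes of buckets in which the link ran at (or above) line rate."""
    limit = capacity * bucket_ms / 1000
    return [i for i, sent in enumerate(bytes_per_bucket) if sent >= limit]


if __name__ == "__main__":
    bucket_ms = 100
    # One minute of traffic: 570 quiet buckets at 4 MB/s ...
    traffic = [400_000] * 570
    # ... plus 30 buckets (3 seconds in total) at full line rate.
    traffic += [12_500_000] * 30

    print(average_rate(traffic, bucket_ms) / 1_000_000, "MB/s on average")
    print(len(saturated_buckets(traffic, bucket_ms)), "saturated 100 ms buckets")
```

With these numbers the per-minute average is about 10 MB/s, under a tenth of the link capacity, even though the link was fully saturated for 3 seconds of that minute.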

- 18. Compressed Block Size • Queries return a full partition • nodetool cfstats provides info on partition size (4K) • Using the default 64 KB chunk size can be wasteful • Tune accordingly (ALTER TABLE … WITH compression = { 'sstable_compression': 'LZ4Compressor', 'chunk_length_kb': 4 };)
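The cost of a mismatched chunk size can be estimated with simple arithmetic: reading any part of a compressed chunk means reading and decompressing the whole chunk. The sketch below assumes the best case where the partition is aligned to a chunk boundary (a partition that straddles two chunks costs more); the function names are ours.

```python
import math


def bytes_decompressed_per_read(partition_bytes: int, chunk_length_kb: int) -> int:
    """Bytes decompressed to serve one partition, assuming chunk alignment."""
    chunk_bytes = chunk_length_kb * 1024
    chunks = max(1, math.ceil(partition_bytes / chunk_bytes))
    return chunks * chunk_bytes


def read_amplification(partition_bytes: int, chunk_length_kb: int) -> float:
    """Ratio of bytes decompressed to bytes the query actually needs."""
    return bytes_decompressed_per_read(partition_bytes, chunk_length_kb) / partition_bytes


if __name__ == "__main__":
    partition = 4 * 1024  # ~4K partitions, as reported by nodetool cfstats
    print(read_amplification(partition, 64))  # default 64 KB chunks
    print(read_amplification(partition, 4))   # tuned 4 KB chunks
```

For 4 KB partitions, 64 KB chunks mean roughly 16 times more data is decompressed than needed, while 4 KB chunks bring that ratio down to about 1 in the aligned case.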

- 19. Queries with CL=LOCAL_QUORUM are not local • Running with read_repair_chance > 0 adds nodes outside of the local DC to the read. • If the first returned responses (the local ones) do not match (by digest): ▪ the coordinator waits for all the responses (including the non-local ones) to compute the query result ▪ This is especially problematic in cases of read after write • Scylla 1.3 includes scylla-1250, which for LOCAL_* consistency levels detects a potential read after write and downgrades global read_repair to local read_repair.

- 20. Global read_repair when local data is the same DC1 DC2 Client

- 21. Global read_repair when local data is the same DC1 DC2 Client

- 22. Global read_repair when local data is the same DC1 DC2 Client Data is the same

- 23. Global read_repair when local data is the same DC1 DC2 Client

- 24. Global read_repair when local data is different DC1 DC2 Client

- 25. Global read_repair when local data is different DC1 DC2 Client

- 26. Global read_repair when local data is different DC1 DC2 Client Data is different

- 27. Global read_repair when local data is different DC1 DC2 Client Compute Res & Diff

- 28. Global read_repair when local data is different DC1 DC2 Client

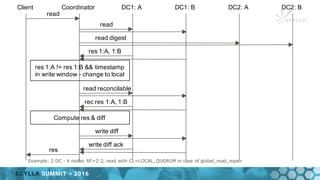

- 29. Example: 2 DC - 4 nodes RF=2:2, read with CL=LOCAL_QUORUM in case of global_read_repair • Participants: Client, Coordinator, DC1: A, DC1: B, DC2: A, DC2: B • Diagram labels: read read read digest res 1:A, 1:B res 1:A != res 1:B Compute res & diff res read reconcilable rec res 1:A, 1:B, 2:A, 2:B write diff write diff ack

- 30. Example: 2 DC - 4 nodes RF=2:2, read with CL=LOCAL_QUORUM in case of global_read_repair • Participants: Client, Coordinator, DC1: A, DC1: B, DC2: A, DC2: B • Diagram labels: read read read digest res 1:A, 1:B res 1:A != res 1:B && timestamp in write window - change to local Compute res & diff res read reconcilable rec res 1:A, 1:B write diff write diff ack
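The decision shown in the two sequence diagrams can be summarized in a short sketch. It models only the choice of which replicas the coordinator waits for once the local digests are in, and it is not Scylla's actual implementation: the names, the millisecond timestamps, and the `write_window_ms` parameter are assumptions made for illustration.

```python
from enum import Enum
from typing import Sequence


class ReconcileScope(Enum):
    NONE = "none"      # local digests agree: answer the client right away
    LOCAL = "local"    # reconcile using local-DC replicas only
    GLOBAL = "global"  # wait for replicas in every DC before answering


def choose_reconcile_scope(local_digests: Sequence[str],
                           newest_write_ts_ms: int,
                           now_ms: int,
                           write_window_ms: int) -> ReconcileScope:
    """Pick the reconciliation scope for a LOCAL_* read with global read repair.

    A digest mismatch on very recently written data is most likely a write
    still in flight, so the repair is downgraded to the local DC.
    """
    if len(set(local_digests)) <= 1:
        return ReconcileScope.NONE
    recently_written = (now_ms - newest_write_ts_ms) <= write_window_ms
    return ReconcileScope.LOCAL if recently_written else ReconcileScope.GLOBAL
```

The point of the downgrade is latency: in the read-after-write case the client no longer waits on a cross-DC round trip just because a local replica has not yet applied the latest write.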

- 31. Cold cache • Restarting a node forces all of its requests to be served from disk (in peak traffic we are bottlenecked by the disk) • DynamicSnitch in C* tries to address this, yet it assumes that all queries are the same. • We are looking into using the cache hit ratio (scylla-1455) to allow a more fine-grained evaluation

- 32. Performance

- 33. Scylla vs Cassandra + Memcached - CL:LOCAL_QUORUM • RPM: Scylla 12M, Cassandra 500K • AVG Latency: Scylla 4 ms, Cassandra 8 ms • Max RPM: 1.7 RPM • Max Latency: Scylla 8 ms, Cassandra 35 ms

- 34. Lowering Latency While Doing 20X IOPS of Cassandra

- 35. Scylla vs Cassandra - CL:LOCAL_ONE Scylla and Cassandra handling the full load (peak of ~12M RPM) 80 6

- 36. Scylla vs Cassandra - CL:LOCAL_QUORUM * Cassandra has ~500 Timeouts (1 second) per minute in peak hours Scylla and Cassandra handling the full load (peak of ~12M RPM) 200 10

- 37. So ...

- 38. Summary • Scylla handles all the traffic with better latencies • Current bottleneck is network • Scylla nodes are almost idle

- 39. Next steps • A new cluster: ▪ 3 nodes ▪ 10 Gb network ▪ Larger disks ▪ More memory • Move to production

- 40. Visibility ● Outbrain’s Cassandra Grafana dashboards. ● https://github.com/outbrain/Cassibility You are more than welcome to use this and optimize it for ScyllaDB use.

- 41. Analytics ● Redash ● http://redash.io/ ● https://github.com/getredash/redash/pull/1236 Available now on GitHub, will be part of version 0.12.

- 42. Thank You! Shalom Yerushalmy, Shlomi Livne (@slivne)