
- 1. Lemur Toolkit Introduction • Yihong HONG • Some slides from Don Metzler, Paul Ogilvie & Trevor Strohman

- 2. About The Lemur Project • The Lemur Project was started in 2000 by the Center for Intelligent Information Retrieval (CIIR) at the University of Massachusetts, Amherst, and the Language Technologies Institute (LTI) at Carnegie Mellon University. Over the years, a large number of UMass and CMU students and staff have contributed to the project. • The project's first product was the Lemur Toolkit, a collection of software tools and search engines designed to support research on using statistical language models for information retrieval tasks. Later the project added the Indri search engine for large-scale search, the Lemur Query Log Toolbar for capture of user interaction data, and the ClueWeb09 dataset for research on web search.


- 4. Installation • http://www.lemurproject.org • A Java runtime (JDK 6) is needed for the evaluation tool. • Linux, OS/X: – Extract lemur-4.12.tar.gz – ./configure --prefix=/install/path – make – make install – Modify the environment variable in ~/.bash_profile • Windows: – Run lemur-4.12-install.exe – Documentation is in windoc/index.html – Modify the environment variable

- 5. How to use Lemur Project • Indexing • Document Preparation • Indexing Parameters • Retrieval • Parameters


- 7. Two Index Formats • KeyFile – Term Positions – Metadata – Offline Incremental – InQuery Query Language • Indri – Term Positions – Metadata – Fields / Annotations – Online Incremental – InQuery and Indri Query Languages

- 8. Indexing: Document Preparation • Document Formats: The Lemur Toolkit can inherently deal with several different document format types without any modification. • Lemur – TREC Text – TREC Web – HTML • Indri – TREC Text – TREC Web – Plain Text – DOC – PPT – HTML – XML – PDF – Mbox

- 9. Indexing: Document Preparation If your documents are not in a format that the Lemur Toolkit can inherently process: • If necessary, extract the text from the document. • Wrap the plaintext in TREC-style wrappers: <DOC> <DOCNO>document_id</DOCNO> <TEXT> Index this document text. </TEXT> </DOC> • – or – For more advanced users, write your own parser to extend the Lemur Toolkit.
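The wrapping step above can be sketched in a few lines of Python. This is only an illustration, not part of the Lemur Toolkit: the helper names `wrap_trec` and `write_trec_file` are hypothetical, and the sketch assumes the text has already been extracted from the original document.

```python
def wrap_trec(doc_id: str, text: str) -> str:
    """Wrap plain text in the TREC-style <DOC> envelope shown on the slide."""
    return (
        "<DOC>\n"
        f"<DOCNO>{doc_id}</DOCNO>\n"
        "<TEXT>\n"
        f"{text.strip()}\n"
        "</TEXT>\n"
        "</DOC>\n"
    )


def write_trec_file(docs: dict, path: str) -> None:
    """Write several documents (id -> text) into one TREC Text file."""
    with open(path, "w", encoding="utf-8") as out:
        for doc_id, text in docs.items():
            out.write(wrap_trec(doc_id, text))
```

A file written this way would then be indexed with `<class>trectext</class>` in the parameter file.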

- 10. How to use Lemur Project • Indexing • Document Preparation • Indexing Parameters • Retrieval • Parameters

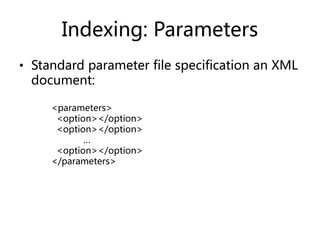

- 11. Indexing: Parameters • Basic usage to build index: – IndriBuildIndex <parameter_file> • Parameter file includes options for • Where to find your data files • Where to place the index • How much memory to use • Stopword, stemming, fields • Many other parameters.

- 12. Indexing: Parameters • The standard parameter file is an XML document: <parameters> <option></option> <option></option> … <option></option> </parameters>

- 13. Indexing: Parameters • <corpus>: where to find your source files and what type to expect • <path>: (required) the path to the source files (absolute or relative) • <class>: (optional) the document type to expect. If omitted, IndriBuildIndex will attempt to guess at the filetype based on the file’s extension. <parameters> <corpus> <path>/path/to/source/files</path> <class>trectext</class> </corpus> <index>/path/to/the/index</index> <memory>256M</memory> <stopper> <word>first_word</word> <word>next_word</word> … <word>final_word</word> </stopper> </parameters>

- 14. Indexing: Parameters • The <index> parameter tells IndriBuildIndex where to create or incrementally add to the index • If index does not exist, it will create a new one • If index already exists, it will append new documents into the index. <parameters> <corpus> <path>/path/to/source/files</path> <class>trectext</class> </corpus> <index>/path/to/the/index</index> <memory>256M</memory> <stopper> <word>first_word</word> <word>next_word</word> … <word>final_word</word> </stopper> </parameters>

- 15. Indexing: Parameters • <memory>: used to define a “soft-limit” of the amount of memory the indexer should use before flushing its buffers to disk. • Use K for kilobytes, M for megabytes, and G for gigabytes. <parameters> <corpus> <path>/path/to/source/files</path> <class>trectext</class> </corpus> <index>/path/to/the/index</index> <memory>256M</memory> <stopper> <word>first_word</word> <word>next_word</word> … <word>final_word</word> </stopper> </parameters>
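To make the K/M/G notation concrete, here is a small Python sketch that validates a `<memory>` value before it goes into a parameter file. The helper `parse_memory` is hypothetical, and the slides do not say whether the indexer counts in powers of 1024 or 1000; this sketch assumes 1024.

```python
import re

# Assumption: binary multiples. The slides only name the K, M and G suffixes.
_UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_memory(value: str) -> int:
    """Convert a string such as '256M' into a number of bytes."""
    match = re.fullmatch(r"(\d+)([KMG])", value.strip())
    if match is None:
        raise ValueError(f"expected a number followed by K, M or G: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit]
```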

- 16. Indexing: Parameters • Stopwords can be defined within a <stopper> block, with each stopword enclosed in <word> tags. <parameters> <corpus> <path>/path/to/source/files</path> <class>trectext</class> </corpus> <index>/path/to/the/index</index> <memory>256M</memory> <stopper> <word>first_word</word> <word>next_word</word> … <word>final_word</word> </stopper> </parameters>

- 17. Indexing: Parameters • Term stemming can be used while indexing as well via the <stemmer> tag. – Specify the stemmer type via the <name> tag within. – Stemmers included with the Lemur Toolkit include the Krovetz Stemmer and the Porter Stemmer. <parameters> <corpus> <path>/path/to/source/files</path> <class>trectext</class> </corpus> <index>/path/to/the/index</index> <memory>256M</memory> <stemmer> <name>krovetz</name> </stemmer> </parameters>
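The indexing options covered so far (`<corpus>`, `<index>`, `<memory>`, `<stemmer>`, `<stopper>`) can be assembled programmatically. The following Python sketch uses only the standard library; the function name `build_index_params` and its defaults are assumptions made for illustration, and the tag names are the ones shown on the slides.

```python
import xml.etree.ElementTree as ET


def build_index_params(corpus_path, index_path, doc_class="trectext",
                       memory="256M", stemmer=None, stopwords=()):
    """Return the text of an IndriBuildIndex parameter file."""
    root = ET.Element("parameters")
    corpus = ET.SubElement(root, "corpus")
    ET.SubElement(corpus, "path").text = corpus_path
    ET.SubElement(corpus, "class").text = doc_class
    ET.SubElement(root, "index").text = index_path
    ET.SubElement(root, "memory").text = memory
    if stemmer:  # e.g. "krovetz", as on the slide
        ET.SubElement(ET.SubElement(root, "stemmer"), "name").text = stemmer
    if stopwords:
        stopper = ET.SubElement(root, "stopper")
        for word in stopwords:
            ET.SubElement(stopper, "word").text = word
    return ET.tostring(root, encoding="unicode")
```

The returned string can be saved to a file and passed to `IndriBuildIndex <parameter_file>`.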

- 20. How to use Lemur Project • Indexing • Document Preparation • Indexing Parameters • Retrieval • Parameters

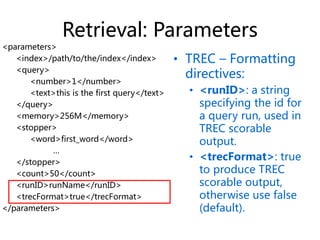

- 21. Retrieval: Parameters • Basic usage for retrieval: • IndriRunQuery/RetEval <parameter_file> • Parameter file includes options for • Where to find the index • The query or queries • How much memory to use • Formatting options • Many other parameters.

- 22. Retrieval: Parameters • Just as with indexing: • A well-formed XML document with options, wrapped by <parameters> tags: <parameters> <option></option> <option></option> … <option></option> </parameters>

- 23. Retrieval: Parameters • The <index> parameter tells IndriRunQuery/RetEval where to find the repository. <parameters> <index>/path/to/the/index</index> <query> <number>1</number> <text>this is the first query</text> </query> <memory>256M</memory> <stopper> <word>first_word</word> … </stopper> <count>50</count> <runID>runName</runID> <trecFormat>true</trecFormat> </parameters>

- 24. Retrieval: Parameters • The <query> parameter specifies a query • plain text or using the Indri query language <parameters> <index>/path/to/the/index</index> <query> <number>1</number> <text>this is the first query</text> </query> <memory>256M</memory> <stopper> <word>first_word</word> … </stopper> <count>50</count> <runID>runName</runID> <trecFormat>true</trecFormat> </parameters>

- 25. Retrieval: Parameters • To specify a maximum number of results to return, use the <count> tag <parameters> <index>/path/to/the/index</index> <query> <number>1</number> <text>this is the first query</text> </query> <memory>256M</memory> <stopper> <word>first_word</word> … </stopper> <count>50</count> <runID>runName</runID> <trecFormat>true</trecFormat> </parameters>
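A retrieval parameter file can be generated the same way as an indexing one. In this Python sketch, `build_query_params` is a hypothetical helper, and repeating one `<query>` block per query is an assumption about how several queries are supplied (the slides only say "the query or queries"). `ElementTree` escapes characters such as `<` and `&` in query text automatically.

```python
import xml.etree.ElementTree as ET


def build_query_params(index_path, queries, count=50,
                       run_id="runName", trec_format=True):
    """Return parameter-file text; queries maps query number -> query text."""
    root = ET.Element("parameters")
    ET.SubElement(root, "index").text = index_path
    for number, text in queries.items():
        query = ET.SubElement(root, "query")
        ET.SubElement(query, "number").text = str(number)
        ET.SubElement(query, "text").text = text
    ET.SubElement(root, "count").text = str(count)
    ET.SubElement(root, "runID").text = run_id
    ET.SubElement(root, "trecFormat").text = "true" if trec_format else "false"
    return ET.tostring(root, encoding="unicode")
```

For example, `build_query_params("/path/to/the/index", {1: "this is the first query", 2: "#combine(lemur toolkit)"})` mixes a plain-text query with one written in the Indri query language.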

- 26. Retrieval: Parameters • TREC – Formatting directives: • <runID>: a string specifying the id for a query run, used in TREC scorable output. • <trecFormat>: true to produce TREC scorable output, otherwise use false (default). <parameters> <index>/path/to/the/index</index> <query> <number>1</number> <text>this is the first query</text> </query> <memory>256M</memory> <stopper> <word>first_word</word> … </stopper> <count>50</count> <runID>runName</runID> <trecFormat>true</trecFormat> </parameters>
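TREC scorable output is conventionally one result per line with six whitespace-separated fields: query number, the literal `Q0`, document id, rank, score, and run id. Assuming the output follows that layout, a minimal Python reader might look like this (`TrecResult` and `parse_trec_line` are hypothetical names):

```python
from typing import NamedTuple


class TrecResult(NamedTuple):
    query_id: str
    doc_id: str
    rank: int
    score: float
    run_id: str


def parse_trec_line(line: str) -> TrecResult:
    """Split one result line of the form: qid Q0 docno rank score runID."""
    query_id, _q0, doc_id, rank, score, run_id = line.split()
    return TrecResult(query_id, doc_id, int(rank), float(score), run_id)
```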

- 29. Introducing the API • Lemur “Classic” API – Many objects, highly customizable – May want to use this when you want to change how the system works – Support for clustering, distributed IR, summarization • Indri API – Two main objects – Best for integrating search into larger applications – Supports Indri query language, XML retrieval, “live” incremental indexing, and parallel retrieval

- 30. Lemur Index Browsing • The Lemur API gives access to the index data (e.g. inverted lists, collection statistics) • IndexManager::openIndex – Returns a pointer to an index object – Detects what kind of index you wish to open, and returns the appropriate kind of index class

- 31. Lemur Index Browsing • Index::term – term( char* s ): convert term string to a number – term( int id ): convert term number to a string • Index::document – document( char* s ): convert doc string to a number – document( int id ): convert doc number to a string • Index::termCount – termCount(): total number of terms indexed – termCount( int id ): total number of occurrences of term number id • Index::documentCount – docCount(): number of documents indexed – docCount( int id ): number of documents that contain term number id

- 32. Lemur Index Browsing • Index::docLength( int docID ): the length, in number of terms, of document number docID • Index::docLengthAvg: average indexed document length • Index::termCountUnique: size of the index vocabulary
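To show what these statistics mean, the Python sketch below computes them over a tiny in-memory collection. It is a toy stand-in, not Lemur code: `ToyIndex` is hypothetical, and it looks terms up by string rather than by the integer ids the real API uses.

```python
from collections import Counter


class ToyIndex:
    """In-memory illustration of the collection statistics listed above."""

    def __init__(self, docs):
        # docs maps a document name to its list of tokens
        self.doc_terms = list(docs.values())
        # total occurrences of each term across the collection
        self.term_counts = Counter(t for terms in self.doc_terms for t in terms)
        # number of documents that contain each term
        self.doc_counts = Counter(t for terms in self.doc_terms for t in set(terms))

    def term_count(self, term=None):
        if term is None:
            return sum(self.term_counts.values())
        return self.term_counts[term]

    def doc_count(self, term=None):
        if term is None:
            return len(self.doc_terms)
        return self.doc_counts[term]

    def doc_length_avg(self):
        return self.term_count() / self.doc_count()

    def term_count_unique(self):
        return len(self.term_counts)
```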

- 33. Lemur Index Browsing • Index::docInfoList( int termID ): returns an iterator to the inverted list for termID. The list contains all documents that contain termID, including the positions where termID occurs. • Index::termInfoList( int docID ): returns an iterator to the direct list for docID. The list contains term numbers for every term contained in document docID, and the number of times each word occurs (use termInfoListSeq to get word positions).
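The difference between the inverted list and the direct list can be seen in a toy Python sketch. `build_lists` is a hypothetical helper, not the Lemur API; it keys the inverted list by term string and, as an assumption of this sketch, numbers documents from 1.

```python
from collections import Counter


def build_lists(docs):
    """docs is a list of token lists; returns (inverted, direct) structures."""
    inverted = {}  # term -> {doc_id: [positions where the term occurs]}
    direct = {}    # doc_id -> Counter mapping term -> frequency in that doc
    for doc_id, tokens in enumerate(docs, start=1):
        direct[doc_id] = Counter(tokens)
        for position, term in enumerate(tokens):
            inverted.setdefault(term, {}).setdefault(doc_id, []).append(position)
    return inverted, direct
```

Looking up a term in `inverted` plays the role of docInfoList (documents plus positions), while looking up a document in `direct` plays the role of termInfoList (terms plus counts).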

- 34. Lemur Retrieval (Class Name: Description) • TFIDFRetMethod: BM25 • SimpleKLRetMethod: KL-Divergence • InQueryRetMethod: Simplified InQuery • CosSimRetMethod: Cosine • CORIRetMethod: CORI • OkapiRetMethod: Okapi • IndriRetMethod: Indri (wraps QueryEnvironment)