Semantic segmentation

- 2. 自己紹介 2 テクニカル・ソリューション・アーキテクト 皆川 卓也(みながわ たくや) フリーエンジニア(ビジョン&ITラボ) 「コンピュータビジョン勉強会@関東」主催 博士(工学) 略歴: 1999-2003年 日本HP(後にアジレント・テクノロジーへ分社)にて、ITエンジニアとしてシステム構築、プリ セールス、プロジェクトマネジメント、サポート等の業務に従事 2004-2009年 コンピュータビジョンを用いたシステム/アプリ/サービス開発等に従事 2007-2010年 慶應義塾大学大学院 後期博士課程にて、コンピュータビジョンを専攻 単位取得退学後、博士号取得(2014年) 2009年-現在 フリーランスとして、コンピュータビジョンのコンサル/研究/開発等に従事 http://visitlab.jp

- 3. 本資料について 本資料は主に以下の2つのサーベイ論文を元に内容 をまとめました。 Matin Thoma, “A Suvey of Semantic Segmentation”, arXiv:1602.06541v2 Hongyuan Zhu, Fanman Meng, Jianfei Cai, Shijian Lu, “Beyond pixels: A comprehensive survey from bottom-up to semantic image segmentation and cosegmentation” 上記サーベイで紹介されている論文に対し、畳み込み ニューラルネットワークを用いた手法を追記しました。 本資料作成にあたり慶應義塾大学小篠裕子先生にご助 言いただきました。

- 5. タスクの分類 A.クラス数 大半が決まったクラス数によるもの。 二値(前景/背景、street/それ以外) unsuperpisedな方法(クラス数可変) それ以外をvoid型として分類できるもの B. 画素のクラス数 ほとんどが1つの画素が1つのクラス 1つの画素が複数のクラスに紐づくものもある マルチレイヤ―の領域分割

- 6. タスクの分類 C. 入力データ 色の有無 デプス情報の有無 1枚、ステレオ、Co-segmentation 2D(画像) vs 3D(ボクセル) D:オペレーション active (ロボット等) passive interactive automatic

- 7. データセット Semantic Segmentation用データセット 医療系 Cityscapes 30 5000(fine) 20000(coarse) 3(2040-2048) x (1016-1024) LabelMe + SUN SIFT-flow dataset Stanford background dataset NYU dataset PASCAL Context dataset

- 8. Cityscapes 概要 主にドイツの50の都市で取得したステレオカメラの画像シーケンス に画素単位で30クラスのラベル付けを行った URL https://www.cityscapes-dataset.com/ ライセンス アカデミックまたは非商用利用のみ https://www.cityscapes-dataset.com/license/ 例: Fine Coarse

- 9. KITTI Road 概要 自動運転に関するビジョン用データセット/ベンチマークKITTIのうち道路、白線、お よび走行レーン領域検出用データセット URL http://www.cvlibs.net/datasets/kitti/eval_road.php ライセンス Creative Commons Attribution-NonCommercial-ShareAlike 3.0 http://creativecommons.org/licenses/by-nc-sa/3.0/ 非商用利用のみ。当データセットを用いた成果は同じラインセンスで提供する必 要 例: Lane Road

- 10. MSRC v2 概要 Mircosoft Researchが提供している、鳥、車、体、顔、空、木、羊、犬、境 界付近、void等、23クラスをラベル付けしたデータセット URL https://www.microsoft.com/en-us/research/project/image- understanding/ ライセンス Microsoft Research Digital Image License Agreement 非商用利用のみ。 例:

- 11. Pascal VOC 概要 画像認識のコンペで使用されたデータセット。flickrの画像に対し人、車、犬、猫、 椅子、など20クラスのラベル付けがされている URL http://host.robots.ox.ac.uk/pascal/VOC/voc2012/ ライセンス flickr term of useに従う https://policies.yahoo.com/us/en/yahoo/terms/utos/index.htm 元々の権利は画像をflickrへアップロードした人にある。(どの写真を誰がアップ ロードしたかのリストは入手可能) 例: object class

- 12. その他のデータセット LabelMe + SUN dataset MITが公開しているLabelMeというアノテーション/ラベル付き画像 データのうち、SUNが515個のオブジェクトに対して付けた45,676枚の Semantic Segmentation用サブセットデータ 21,182枚の屋内、24,494枚の屋外 画像枚数はオブジェクト毎に偏りがある SIFT-flow dataset シーン解析用の2488枚の訓練画像、および200枚のテスト画像 33クラス Stanford background dataset LabelMeやMSRC、PASCAL VOCからサンプリングした720枚の画像 農村、都市、港湾シーンに対して、8つのsemanticラベル(sky, tree, road, grass, water, building, mountain, foreground)と幾何学的特性ラ ベル(sky, vertical, horizontal)を付与

- 13. その他のデータセット NYU dataset 屋内をRGBカメラとデプスカメラ(Kinect)で撮影したビデオ動画に対し、 1449個の密なRGBとデプス間のラベルペアを作成 3都市で撮影した464個のシーンに対し、407,024枚のラベルなしフ レーム それぞれのオブジェクトにはクラス名とID(cup1, cup2, cup3, etc) Microsoft COCO 328K画像に対し91種類の物体クラス PASCAL Context dataset PASCAL VOC2010データセットに対し、さらに520のクラスを追加し、全 部で540クラスのSemantic Segmentation用データセットを作成 10,103枚のtraining/validation用画像

- 14. 評価指標 Confusion Matrix kクラスの識別問題で、クラス𝑖に属する画素が𝑗とラベル付けさ れた数𝑛𝑖𝑗を求める。 Pixel Wise Accuracy 正解画素の比率 1 𝑁 σ𝑖=0 𝑘 𝑛𝑖𝑖 𝑁 = σ𝑖=0 𝑘 σ 𝑗=0 𝑘 𝑛𝑖𝑗 全画素数 Confusion matrixの例

- 15. 評価指標 Mean Accuracy 例えば空や地面のように大きな領域が画像中に存在した場合、 Pixel-Wise Accuracyはこれらの領域を大きく受ける。 クラスごとに個別に精度を求めて平均する。 1 𝑘 ∙ σ𝑖=0 𝑘 𝑛 𝑖𝑖 𝑡 𝑖 𝑡𝑖 = σ 𝑗=0 𝑘 𝑛𝑖𝑗 クラスiの正解画素数 Mean Intersection over Union クラスiに属する画素およびクラスiと判定された画素の総和 (Confusion Matrixの𝑛𝑖𝑖が属する行と列の和)と、クラスiに属して正し くiと判定された画素の和の比 1 𝑘 ∙ σ𝑖=0 𝑘 𝑛 𝑖𝑖 σ 𝑗=0 𝑘 𝑛 𝑖𝑗+𝑛 𝑗𝑖 −𝑛𝑖𝑖

- 16. 評価指標 Frequency Weighted Intersection over Union Mean Intersection over Unionに対しクラスごとの正解画素数 で重みをつけたもの 1 𝑁 ∙ σ𝑖=0 𝑘 𝑡𝑖 ∙ 𝑛 𝑖𝑖 σ 𝑗=0 𝑘 𝑛 𝑖𝑗+𝑛 𝑗𝑖 −𝑛 𝑖𝑖 F-measure KITTIのような二値分類問題の場合の指標。 RecallとPrecisionの調和平均で、どちらの重みを置くかを 𝛽で決定する(ほとんどの場合𝛽 = 1) 𝐹𝛽 = (1 + 𝛽)2 𝑡𝑝 1+𝛽2 ∙𝑡𝑝+𝛽2∙𝑓𝑛+𝑓𝑝 tp: true positive, fn: false negative, fp: false positive

- 17. Segmentationの流れ

- 19. Sliding Windowを用いた識別 スライディングウィンドウで画像をスキャン ウィンドウ内の画像から特徴量を抽出 特徴量を入力として、機械学習によりウィンドウ中心画素のラベルを学習/分類 × 特徴量 • Color • HOG • SIFT • BoVW • Neural Network • etc 機械学習 • SVM • Random Forest • Neural Network

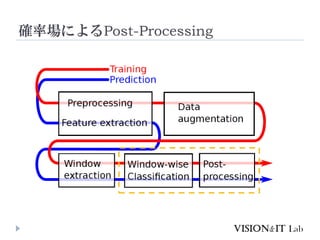

- 21. 確率場によるPost-Processing 画像の各画素をノードとし、隣接画素間にリンクを張ったグラフとみなす。 真の画素のラベルを潜在変数y 画像から取得した特徴量を観測変数x 以下の2つの条件を元に潜在変数yを推定 各画素のラベルは観測変数x「だけ」を基にした推定と一致する確率が高い 隣り合う画素は同じような潜在変数を持つ確率が高い 潜在変数 観測変数

- 22. Markov Random Fields (MRF) xとyの組み合わせ毎の確率分布を求める 𝑝 𝐱, 𝐲 ∝ exp −E 𝐱, 𝐲 E 𝐱, 𝐲 = 𝑖=0 𝑛 𝜓 𝑢 𝑥𝑖, 𝑦𝑖 + 𝑦 𝑖,𝑦 𝑗∈𝑁 𝜓𝑖𝑗 𝑑 𝑦𝑖, 𝑦𝑗 データ項 (画素ごとに独立して識別) 平滑化項 (隣り合うラベルが同じ)

- 23. Conditional Random Fields (CRF) 直接、観測xが与えられたときのyの条件付き確率を求める 𝑝 𝐲|𝐱 ∝ exp −E 𝐲; 𝐱 E 𝐲; 𝐱 = 𝑖=0 𝑛 𝜓 𝑢 𝑦𝑖; 𝐱 + 𝑦 𝑖,𝑦 𝑗∈𝑁 𝜓𝑖𝑗 𝑑 𝑦𝑖, 𝑦𝑗; 𝐱 データ項 (画素ごとに独立して識別) 平滑化項 (隣り合うラベルが同じ) 全画素の特徴量を基に推定

- 24. CRFを用いた例 CRFは認識対象クラスに関する知識をモデルの中に入れ込むこ とが可能なため、 Semantic SegmentationではCRFを用いた手法 が性能的に良い。一方、SemanticでないSegmentationではMRF が用いられることが多い。 X.He, R. S. Zemel, M. A. Carreira-Perpinan, “Multiscale Conditional Random Fields for Image Labeling”, CVPR2004 J. Shotton, J. Winn, C. Rother, A. Criminisi, “TextonBoost for Image Understanding: Multi-Class Object Recognition and Segmentation by Jointly Modeling Texture, Layout, and Context”, IJCV2009 P. Krahenbuhl, V. Koltun, “Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials”, NIPS2011 P. Arbelaez, B. Hariharan, C. Gu, S. Gupta, L. D. Bourdev, J. Malik, “Semantic Segmentation using Regions and Parts”, CVPR2012 (非CRF)

- 25. CRF for Image Labeling (He, et al., 2004) CRFをSemantic Segmentationへ適用した最初の論文 ローカル特徴、全体特徴、ラベル間の位置関係を考慮し たモデルを構築して最適化

- 26. TextonBoost (Shotton, et al., 2009) ソースコード http://jamie.shotton.org/work/code.html (C#) 各画素の周囲のテクスチャ(Texton)を元に画素のラベルを学 習(Joint Boost)することで物体のコンテクストを学習 CRFを用いてテクスチャ、色、位置、エッジを考慮した学習

- 27. Fully Connected CRFs (Krahenbuhl and Koltun, 2011) 隣接画素だけでなく、全画素同士のペアを考慮したFully Connected CRFsに対し効率的に学習させる方法を提案 ソースコード http://www.philkr.net/ (C++)

- 28. Semantic Segmentation using Regions and Parts (Arbelaez, et al., 2012) 一度ざっくりとした領域分割をして、各領域において多ク ラスに対するスコアを算出し、それらを特徴として用いて, ラベリングをしていく。 CRFを用いず、各領域のスコアを統合して画素ごとのスコ アを算出する。 SVM Part Compatibility Global Appearance Semantic Contours Geometrical properties Multi Class

- 29. ニューラルネットワークによる手法

- 30. 畳み込みニューラルネットワーク 畳み込み層とプーリング層が交互に現れる 畳み込み層: 各位置で学習した特徴との類似度を計算 プーリング層: 位置ずれなどのわずかな違いを無視 ・・・ ・・・・・ 入力画像 出力 畳み込み層 プーリング層 畳み込み層 プーリング層 全結合層

- 31. 畳み込みニューラルネットワーク 畳み込み層 各特徴毎の各場所での類似度を出力する 層が上がるほど複雑な特徴になる 入力 画像 実際はRGBの3チャネルが使われる 出力A 特徴A 特徴B 出力B ・・・

- 32. 畳み込みニューラルネットワーク プーリング層 近傍の情報を統合して、情報に不変性を加える Max Pooling、Lp Pooling、Average Poolingなどがある Max Poolingの例: 近傍領域のうち最大値を出力することで不変性を与える 畳み込み層 の出力A ・・・ ・・・ Max Max 畳み込み層 の出力B

- 33. 畳み込みニューラルネットワークの学習 誤差逆伝播法 1. ネットワークに画像を入力し出力を得る 2. 出力と教師信号を比較し、誤差が小さくなる方向へ特徴を出 力層に近い方から順に更新していく ・・・ ・・・・・ 入力画像 出力 畳み込み層 プーリング層 畳み込み層 プーリング層 全結合層 教師

- 34. Recurrent Neural Networks (RNN) 時系列データなどの「連続したデータ」を扱うための ニューラルネットワーク 音声認識、機械翻訳、動画像認識 予測先の次元数が可変 時刻tの隠れ層の出力が時刻t+1の隠れ層の入力になる ・・ ・ ・・ ・ ・・ ・ 入力 出力 隠れ層

- 35. Recurrent Neural Networks (RNN) 展開すると、静的なネットワークで表せる 通常の誤差逆伝播法でパラメータを学習できる ・・ ・ ・・ ・ ・・・ 入力 出力 隠れ層 ・・・ ・・ ・ ・・ ・ ・・・ ・・・ ・・・ t-1 t t+1 教師データ 教師データ 教師データ

- 36. ニューラルネットワークによる手法の例 P. H. Pinheiro, R. Collobert, “Recurrent Convolutional Neural Networks for Scene Labeling”, ICML2014 J. Long, E. Shelhamer, T. Darrel, “Fully Convolutional Networks for Semantic Segmentation”, CVPR2015 S. Zheng, S. Jayasumana, B. Romera-Paredes, V. Vineet, Z. Su, D. Du, C. Huang, P. H. S. Torr, “Conditional Random Fields as Recurrent Neural Networks”, ICCV2015 H. Noh, S. Hong, B. Han, “Learning Deconvolution Network for Semantic Segmentation”, ICCV2015 G. Lin, C. Shen, A. Hengel, I. Reid, “Efficient Piecewise Training of Deep Structured Models for Semantic Segmentation”, CVPR2016 P. Isola, J. Y. Zhu, T. Zhou, A. A. Efros, “Image to Image Translation with Conditional Adversarial Networks”, arXiv:1611.67004v1, 2016

- 37. RCNN for Scene Labeling (Pinheiro and Collobert, 2014) ネットワークfで各画素のラ ベルを予測し、その結果を 入力に加えて繰り返しfで 予測を行うことで、段階的 にラベルの予測精度を上 げていく CRFの平滑化項にあたるよ うな、コンテクスト(ラベル間 の位置関係)を評価してお らず、各画素ごとに特徴か らラベルを判別しているに 等しい

- 38. Fully Convolutional Networks (Long, et al., 2015) ピクセルごとにラベル付けされた教師信号を与える 最終の全結合層をアップサンプリングした畳み込み層に置き換え ソースコード https://github.com/shelhamer/fcn.berkeleyvision.org (Caffe) 他の人が実装したChainer版やTensorFlow版もあり

- 39. CRF as RNN (Zheng, et al., 2015) Fully Connected CRFの平均場近似による学習と等価なRNNを構築 特徴抽出部分にFCN(Fully Convolutional Networks)を用いることで、 end to endで誤差逆伝播法による学習が行えるネットワークを構築 平均場近似の一回のIterationを表すCNN ネットワークの全体像 ソースコード https://github.com/torrvis ion/crfasrnn (Caffe)

- 40. Deconvolution Network for Semantic Segmentation (Noh, et al., 2015) Deconvolution Networkを学習させることで、FCNよりも詳 細にラベルごとの尤度を推定し、それを元にFully Connected CRFでPost-Processing ソースコード https://github.com/HyeonwooNoh/DeconvNet (Caffe + Matlab)

- 41. Deep Structured Models for Semantic Segmentation (Lin, et al., 2016) CRFのデータ項および平滑化項のエネルギー関数をそれぞれ CNNで表現 CRFへの入力はFeatMap-NetというCNNの特徴マップ CRFの学習を通してエネルギー関数のCNNを学習 平滑化項が、よりsemanticな性質を帯びる

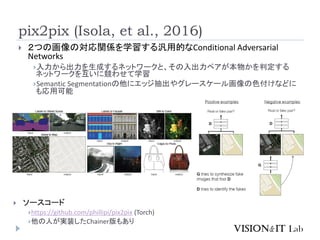

- 42. pix2pix (Isola, et al., 2016) 2つの画像の対応関係を学習する汎用的なConditional Adversarial Networks 入力から出力を生成するネットワークと、その入出力ペアが本物かを判定する ネットワークを互いに競わせて学習 Semantic Segmentationの他にエッジ抽出やグレースケール画像の色付けなどに も応用可能 ソースコード https://github.com/phillipi/pix2pix (Torch) 他の人が実装したChainer版もあり

- 43. まとめ CRFを用いた手法がほぼデファクトだったが、最近はCNN を用いた手法がデファクトになっている CNNが良い性能を上げるのは、単純に識別性能が高いためと 思われる CNN単体の手法だと境界部分や小さい領域などに弱い CNN+CRFの組み合わせによってさらに精度を上げるアプ ローチも多い 複数の特徴を簡単に入れ込みたいときはRegion and Partsを使った非CRFの手法は検討の価値がある Generative Adversarial Network (GAN)はここ数年急激に 研究が進んだ分野なので、今後も注視する必要