Session 03 - Hadoop Installation and Basic Commands


The document outlines a comprehensive guide for installing Hadoop on a virtual machine, detailing commands and steps for setting up necessary permissions, folders, and configurations. It includes instructions for installing Java, configuring environment variables, and editing various Hadoop configuration files. Additionally, it covers starting and stopping Hadoop services and notes on upcoming topics for the next training session.



Classification: Restricted

Installation Guide

• Step 1: Copy the Hadoop installation files from the local machine to the virtual machine.

Command:

hadoop@hadoop-VirtualBox:~$ sudo cp -r /media/sf_Dee/ /home/hadoop/Desktop/

• Step 2: Give all users read, write and execute permission on the copied folder on the Desktop.

Command:

hadoop@hadoop-VirtualBox:~$ sudo chmod -R 777 /home/hadoop/Desktop/sf_Dee/

[sudo] password for hadoop:

• Step 3: Create a folder named work at /usr/local/work; Hadoop and Java will be installed inside it.

Command:

hadoop@hadoop-VirtualBox:~$ sudo mkdir /usr/local/work
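The three steps on the slide can be collected into one shell function, sketched below. The function name `prepare_hadoop_dirs` and the `SUDO` override are hypothetical additions, not part of the deck; they only exist so the steps can be rerun or tried in a scratch directory. The default paths are the ones used on the slide. One deliberate difference: it uses `mkdir -p` instead of plain `mkdir`, so a second run does not fail when /usr/local/work already exists.

```bash
# Hypothetical helper (not from the slides): Steps 1-3 in one function.
# The body runs in a subshell "( ... )" so `set -eu` does not leak into the caller.
prepare_hadoop_dirs() (
  set -eu
  src="${1:-/media/sf_Dee}"             # VirtualBox shared folder (Step 1)
  desktop="${2:-/home/hadoop/Desktop}"  # destination on the VM (Step 1)
  workdir="${3:-/usr/local/work}"       # install root for Hadoop and Java (Step 3)
  sudo_cmd="${SUDO-sudo}"               # set SUDO to an empty string to run without sudo

  src="${src%/}"                        # drop a trailing slash so cp copies the folder itself
  desktop="${desktop%/}"
  if [ ! -d "$src" ] || [ ! -d "$desktop" ]; then
    echo "prepare_hadoop_dirs: missing $src or $desktop" >&2
    exit 1
  fi

  # Step 1: copy the installation files; the copy lands in $desktop/<name of src>
  $sudo_cmd cp -r "$src" "$desktop/"

  # Step 2: give every user read/write/execute on the copy, recursively (777 as on the slide)
  copied="$desktop/$(basename "$src")"
  $sudo_cmd chmod -R 777 "$copied"

  # Step 3: create the install folder; -p makes a rerun harmless if it already exists
  $sudo_cmd mkdir -p "$workdir"

  # Print the copied folder's path so the caller can use it
  printf '%s\n' "$copied"
)
```

On the VM, after sourcing the file, calling `prepare_hadoop_dirs` with no arguments runs the same commands as the slide; sudo prompts for the hadoop user's password, as shown in Step 2.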




Hadoop installation on windows

Hadoop installation on windows habeebulla g This document outlines the installation and configuration process for Hadoop on a Windows system using Ubuntu in a virtual machine. It provides detailed steps for creating a user, setting up SSH, installing Java, downloading Hadoop, configuring various XML files, and verifying the installation. It also describes how to access Hadoop services through specified ports in a web browser.

Run wordcount job (hadoop)

Run wordcount job (hadoop)valeri kopaleishvili The document provides step-by-step instructions for installing a single-node Hadoop cluster on Ubuntu Linux using VMware. It details downloading and configuring required software like Java, SSH, and Hadoop. Configuration files are edited to set properties for core Hadoop functions and enable HDFS. Finally, sample data is copied to HDFS and a word count MapReduce job is run to test the installation.

Exp-3.pptx

Exp-3.pptxPraveenKumar581409 1) The document describes the steps to install a single node Hadoop cluster on a laptop or desktop.

2) It involves downloading and extracting required software like Hadoop, JDK, and configuring environment variables.

3) Key configuration files like core-site.xml, hdfs-site.xml and mapred-site.xml are edited to configure the HDFS, namenode and jobtracker.

4) The namenode is formatted and Hadoop daemons like datanode, secondary namenode and jobtracker are started.

Hadoop installation

Hadoop installationhabeebulla g This document provides instructions for installing Hadoop on Ubuntu. It describes creating a separate user for Hadoop, setting up SSH keys for access, installing Java, downloading and extracting Hadoop, configuring core Hadoop files like core-site.xml and hdfs-site.xml, and common errors that may occur during the Hadoop installation and configuration process. Finally, it explains how to format the namenode, start the Hadoop daemons, and check the Hadoop web interfaces.

Single node hadoop cluster installation

Single node hadoop cluster installation Mahantesh Angadi This document provides instructions for installing a single-node Hadoop cluster on Ubuntu. It outlines downloading and configuring Java, installing Hadoop, configuring SSH access to localhost, editing Hadoop configuration files, and formatting the HDFS filesystem via the namenode. Key steps include adding a dedicated Hadoop user, generating SSH keys, setting properties in core-site.xml, hdfs-site.xml and mapred-site.xml, and running 'hadoop namenode -format' to initialize the filesystem.

R hive tutorial supplement 1 - Installing Hadoop

R hive tutorial supplement 1 - Installing HadoopAiden Seonghak Hong This document provides instructions for installing Hadoop on a small cluster of 4 virtual machines for testing purposes. It describes downloading and extracting Hadoop, configuring environment variables and SSH keys, editing configuration files, and checking the Hadoop status page to confirm the installation was successful.

Hadoop Installation

Hadoop InstallationAhmed Salman This document provides instructions for installing Hadoop in standalone mode. It discusses prerequisites like installing Java and configuring SSH. It then outlines the steps to download and extract Hadoop, edit configuration files, format the namenode, start daemons, and test the installation. Key configuration files include core-site.xml, mapred-site.xml, and hdfs-site.xml. The document is presented by Ahmed Shouman and provides his contact information.

Big data using Hadoop, Hive, Sqoop with Installation

Big data using Hadoop, Hive, Sqoop with Installationmellempudilavanya999 The document is a lab manual for a course on big data using Hadoop, outlining prerequisites, objectives, and outcomes. It provides a detailed outline of experiments over several weeks, including topics such as Linux commands, MapReduce programming, and weather data mining. Students will gain practical skills in data analysis and application development as part of their training.

Big Data Course - BigData HUB

Big Data Course - BigData HUBAhmed Salman This document provides an introduction to big data and Hadoop. It discusses what big data is, characteristics of big data like volume, velocity and variety. It then introduces Hadoop as a framework for storing and analyzing big data, describing its main components like HDFS and MapReduce. The document outlines a typical big data workflow and gives examples of big data use cases. It also provides an overview of setting up Hadoop on a single node, including installing Java, configuring SSH, downloading and extracting Hadoop files, editing configuration files, formatting the namenode, starting Hadoop daemons and testing the installation.

Setup and run hadoop distrubution file system example 2.2

Setup and run hadoop distrubution file system example 2.2Mounir Benhalla The document provides instructions for setting up Hadoop 2.2.0 on Ubuntu. It describes installing Java and OpenSSH, creating Hadoop user and groups, setting up SSH keys for passwordless login, configuring Hadoop environment variables, formatting the namenode, starting Hadoop services, and running a sample Pi estimation MapReduce job to test the installation.

Hadoop installation steps

Hadoop installation stepsMayank Sharma This document provides instructions to install Hadoop version 2.6.0 on an Ubuntu 12.04 LTS machine. It involves updating the system, installing Java, creating a Hadoop user, installing SSH, downloading and extracting Hadoop, configuring files and directories, and starting the Hadoop services. The installation is verified by checking running processes and accessing the NameNode and Secondary NameNode web interfaces. The document concludes by providing commands to stop the Hadoop cluster services.

Ad

More from AnandMHadoop (8)

Overview of Java

Overview of Java AnandMHadoop The document provides an overview of Java programming, including its object-oriented nature, data types, variables (local, instance, and static), methods, and constructors. It explains classes with examples, operators, and various built-in methods for string manipulation and character analysis. Additionally, it covers the structure of Java programs and how to create and use objects and methods effectively.

Session 14 - Hive

Session 14 - HiveAnandMHadoop The document provides a comprehensive overview of Hive, a data warehouse infrastructure tool for processing structured data in Hadoop. It covers topics such as HiveQL queries, metadata management, data types, table creation, views, and different types of joins used in data analysis. The document emphasizes Hive's features, such as its SQL-like querying language and integration with Hadoop for analytical processing.

Session 09 - Flume

Session 09 - FlumeAnandMHadoop The document provides an overview of Apache Flume, a tool for collecting streaming data from various sources like log files and events. It details the architecture of Flume agents, which consist of sources, sinks, and channels, and explains the installation and configuration process on a Linux environment. Additionally, it discusses the roles of netcat and telnet in sending and receiving data, along with example configurations for using Flume to log events or store data in HDFS.

Session 23 - Kafka and Zookeeper

Session 23 - Kafka and ZookeeperAnandMHadoop The document provides a comprehensive overview of Apache Kafka, a distributed publish-subscribe messaging system designed for handling high volumes of data with fault tolerance and scalability. It explains Kafka's architecture, components, the role of Zookeeper in coordination, and the messaging workflow between producers and consumers. The document outlines important topics such as message persistence, the partitioning of topics, and the leader election process in Zookeeper, emphasizing how these elements interact to maintain robust data processing in distributed systems.

Session 19 - MapReduce

Session 19 - MapReduce AnandMHadoop This document provides an overview of Hadoop MapReduce concepts including:

- The MapReduce paradigm with mappers processing input splits in parallel during the map phase and reducers processing grouped intermediate outputs in parallel during the reduce phase.

- Key classes involved include the main driver class, mapper class, reducer class, input format class, output format class, and job configuration class.

- An example word count job is described that counts the number of occurrences of each word by emitting (word, 1) pairs from mappers and summing the counts by word from reducers.

- The timeline of a MapReduce job including map and reduce phases is covered along with details of map and reduce task execution.

Session 04 -Pig Continued

Session 04 -Pig ContinuedAnandMHadoop The document provides a comprehensive overview of using Pig, a high-level platform for creating programs that run on Apache Hadoop. It covers various join operations (inner, left outer, right outer), the split operator, filter and distinct operators, the foreach operator for data transformations, and the assert operator for data validation. Additionally, it discusses the creation of reusable scripts with macros in Pig Latin and outlines topics for the next training session.

Session 04 pig - slides

Session 04 pig - slidesAnandMHadoop The document outlines an Apache Pig training session, covering its integration with Hadoop, how to install and run Pig, and the process of loading data for analysis. It describes Pig's high-level language, Pig Latin, and various operations such as loading, storing, and processing data using Pig commands. Additionally, it introduces various operators like dump and illustrate for data manipulation and analysis.

Session 02 - Yarn Concepts

Session 02 - Yarn ConceptsAnandMHadoop YARN evolved from Hadoop's original architecture to split the functionalities of resource management and job monitoring into separate daemons. The ResourceManager allocates containers of resources to ApplicationMasters, which negotiate resources and monitor application execution across NodeManagers. A container is an abstract collection of physical resources like CPU, memory, and disk. When an application is submitted, its ApplicationMaster's first container is launched, and it then requests additional containers for tasks.

Ad

Recently uploaded (20)

OpenPOWER Foundation & Open-Source Core Innovations

OpenPOWER Foundation & Open-Source Core InnovationsIBM penPOWER offers a fully open, royalty-free CPU architecture for custom chip design.

It enables both lightweight FPGA cores (like Microwatt) and high-performance processors (like POWER10).

Developers have full access to source code, specs, and tools for end-to-end chip creation.

It supports AI, HPC, cloud, and embedded workloads with proven performance.

Backed by a global community, it fosters innovation, education, and collaboration.

Powering Multi-Page Web Applications Using Flow Apps and FME Data Streaming

Powering Multi-Page Web Applications Using Flow Apps and FME Data StreamingSafe Software Unleash the potential of FME Flow to build and deploy advanced multi-page web applications with ease. Discover how Flow Apps and FME’s data streaming capabilities empower you to create interactive web experiences directly within FME Platform. Without the need for dedicated web-hosting infrastructure, FME enhances both data accessibility and user experience. Join us to explore how to unlock the full potential of FME for your web projects and seamlessly integrate data-driven applications into your workflows.

From Manual to Auto Searching- FME in the Driver's Seat

From Manual to Auto Searching- FME in the Driver's SeatSafe Software Finding a specific car online can be a time-consuming task, especially when checking multiple dealer websites. A few years ago, I faced this exact problem while searching for a particular vehicle in New Zealand. The local classified platform, Trade Me (similar to eBay), wasn’t yielding any results, so I expanded my search to second-hand dealer sites—only to realise that periodically checking each one was going to be tedious. That’s when I noticed something interesting: many of these websites used the same platform to manage their inventories. Recognising this, I reverse-engineered the platform’s structure and built an FME workspace that automated the search process for me. By integrating API calls and setting up periodic checks, I received real-time email alerts when matching cars were listed. In this presentation, I’ll walk through how I used FME to save hours of manual searching by creating a custom car-finding automation system. While FME can’t buy a car for you—yet—it can certainly help you find the one you’re after!

Creating Inclusive Digital Learning with AI: A Smarter, Fairer Future

Creating Inclusive Digital Learning with AI: A Smarter, Fairer FutureImpelsys Inc. Have you ever struggled to read a tiny label on a medicine box or tried to navigate a confusing website? Now imagine if every learning experience felt that way—every single day.

For millions of people living with disabilities, poorly designed content isn’t just frustrating. It’s a barrier to growth. Inclusive learning is about fixing that. And today, AI is helping us build digital learning that’s smarter, kinder, and accessible to everyone.

Accessible learning increases engagement, retention, performance, and inclusivity for everyone. Inclusive design is simply better design.

Smarter Aviation Data Management: Lessons from Swedavia Airports and Sweco

Smarter Aviation Data Management: Lessons from Swedavia Airports and SwecoSafe Software Managing airport and airspace data is no small task, especially when you’re expected to deliver it in AIXM format without spending a fortune on specialized tools. But what if there was a smarter, more affordable way?

Join us for a behind-the-scenes look at how Sweco partnered with Swedavia, the Swedish airport operator, to solve this challenge using FME and Esri.

Learn how they built automated workflows to manage periodic updates, merge airspace data, and support data extracts – all while meeting strict government reporting requirements to the Civil Aviation Administration of Sweden.

Even better? Swedavia built custom services and applications that use the FME Flow REST API to trigger jobs and retrieve results – streamlining tasks like securing the quality of new surveyor data, creating permdelta and baseline representations in the AIS schema, and generating AIXM extracts from their AIS data.

To conclude, FME expert Dean Hintz will walk through a GeoBorders reading workflow and highlight recent enhancements to FME’s AIXM (Aeronautical Information Exchange Model) processing and interpretation capabilities.

Discover how airports like Swedavia are harnessing the power of FME to simplify aviation data management, and how you can too.

Oh, the Possibilities - Balancing Innovation and Risk with Generative AI.pdf

Oh, the Possibilities - Balancing Innovation and Risk with Generative AI.pdfPriyanka Aash Oh, the Possibilities - Balancing Innovation and Risk with Generative AI

AI VIDEO MAGAZINE - June 2025 - r/aivideo

AI VIDEO MAGAZINE - June 2025 - r/aivideo1pcity Studios, Inc AI VIDEO MAGAZINE - r/aivideo community newsletter – Exclusive Tutorials: How to make an AI VIDEO from scratch, PLUS: How to make AI MUSIC, Hottest ai videos of 2025, Exclusive Interviews, New Tools, Previews, and MORE - JUNE 2025 ISSUE -

The Future of AI Agent Development Trends to Watch.pptx

The Future of AI Agent Development Trends to Watch.pptxLisa ward The Future of AI Agent Development: Trends to Watch explores emerging innovations shaping smarter, more autonomous AI solutions for businesses and technology.

Wenn alles versagt - IBM Tape schützt, was zählt! Und besonders mit dem neust...

Wenn alles versagt - IBM Tape schützt, was zählt! Und besonders mit dem neust...Josef Weingand IBM LTO10

2025_06_18 - OpenMetadata Community Meeting.pdf

2025_06_18 - OpenMetadata Community Meeting.pdfOpenMetadata The community meetup was held Wednesday June 18, 2025 @ 9:00 AM PST.

Catch the next OpenMetadata Community Meetup @ https://www.meetup.com/openmetadata-meetup-group/

In this month's OpenMetadata Community Meetup, "Enforcing Quality & SLAs with OpenMetadata Data Contracts," we covered data contracts, why they matter, and how to implement them in OpenMetadata to increase the quality of your data assets!

Agenda Highlights:

👋 Introducing Data Contracts: An agreement between data producers and consumers

📝 Data Contracts key components: Understanding a contract and its purpose

🧑🎨 Writing your first contract: How to create your own contracts in OpenMetadata

🦾 An OpenMetadata MCP Server update!

➕ And More!

PyCon SG 25 - Firecracker Made Easy with Python.pdf

PyCon SG 25 - Firecracker Made Easy with Python.pdfMuhammad Yuga Nugraha Explore the ease of managing Firecracker microVM with the firecracker-python. In this session, I will introduce the basics of Firecracker microVM and demonstrate how this custom SDK facilitates microVM operations easily. We will delve into the design and development process behind the SDK, providing a behind-the-scenes look at its creation and features. While traditional Firecracker SDKs were primarily available in Go, this module brings a simplicity of Python to the table.

OpenACC and Open Hackathons Monthly Highlights June 2025

OpenACC and Open Hackathons Monthly Highlights June 2025OpenACC The OpenACC organization focuses on enhancing parallel computing skills and advancing interoperability in scientific applications through hackathons and training. The upcoming 2025 Open Accelerated Computing Summit (OACS) aims to explore the convergence of AI and HPC in scientific computing and foster knowledge sharing. This year's OACS welcomes talk submissions from a variety of topics, from Using Standard Language Parallelism to Computer Vision Applications. The document also highlights several open hackathons, a call to apply for NVIDIA Academic Grant Program and resources for optimizing scientific applications using OpenACC directives.

"How to survive Black Friday: preparing e-commerce for a peak season", Yurii ...

"How to survive Black Friday: preparing e-commerce for a peak season", Yurii ...Fwdays We will explore how e-commerce projects prepare for the busiest time of the year, which key aspects to focus on, and what to expect. We’ll share our experience in setting up auto-scaling, load balancing, and discuss the loads that Silpo handles, as well as the solutions that help us navigate this season without failures.

Tech-ASan: Two-stage check for Address Sanitizer - Yixuan Cao.pdf

Tech-ASan: Two-stage check for Address Sanitizer - Yixuan Cao.pdfcaoyixuan2019 A presentation at Internetware 2025.

AI vs Human Writing: Can You Tell the Difference?

AI vs Human Writing: Can You Tell the Difference?Shashi Sathyanarayana, Ph.D This slide illustrates a side-by-side comparison between human-written, AI-written, and ambiguous content. It highlights subtle cues that help readers assess authenticity, raising essential questions about the future of communication, trust, and thought leadership in the age of generative AI.

GenAI Opportunities and Challenges - Where 370 Enterprises Are Focusing Now.pdf

GenAI Opportunities and Challenges - Where 370 Enterprises Are Focusing Now.pdfPriyanka Aash GenAI Opportunities and Challenges - Where 370 Enterprises Are Focusing Now

9-1-1 Addressing: End-to-End Automation Using FME

9-1-1 Addressing: End-to-End Automation Using FMESafe Software This session will cover a common use case for local and state/provincial governments who create and/or maintain their 9-1-1 addressing data, particularly address points and road centerlines. In this session, you'll learn how FME has helped Shelby County 9-1-1 (TN) automate the 9-1-1 addressing process; including automatically assigning attributes from disparate sources, on-the-fly QAQC of said data, and reporting. The FME logic that this presentation will cover includes: Table joins using attributes and geometry, Looping in custom transformers, Working with lists and Change detection.

"Database isolation: how we deal with hundreds of direct connections to the d...

"Database isolation: how we deal with hundreds of direct connections to the d...Fwdays What can go wrong if you allow each service to access the database directly? In a startup, this seems like a quick and easy solution, but as the system scales, problems appear that no one could have guessed.

In my talk, I'll share Solidgate's experience in transforming its architecture: from the chaos of direct connections to a service-based data access model. I will talk about the transition stages, bottlenecks, and how isolation affected infrastructure support. I will honestly show what worked and what didn't. In short, we will analyze the controversy of this talk.

Session 03 - Hadoop Installation and Basic Commands

- 1. Hadoop Developer Training Session 3 - Installation and Commands

- 2. Page 1 Classification: Restricted
  Agenda
  • Hadoop Installation and Commands

- 3. Installation Guide
  • Step 1: Copy the Hadoop installation files from the local shared folder to the virtual machine.
    Command:
    hadoop@hadoop-VirtualBox:~$ sudo cp -r /media/sf_Dee/ /home/hadoop/Desktop/
  • Step 2: Give full permissions on the copied folder on the Desktop.
    Command:
    hadoop@hadoop-VirtualBox:~$ sudo chmod -R 777 /home/hadoop/Desktop/sf_Dee/
    [sudo] password for hadoop:
  • Step 3: Create a folder named work at /usr/local/work; Hadoop and Java will be installed inside it.
    Command:
    hadoop@hadoop-VirtualBox:~$ sudo mkdir /usr/local/work

- 4. Installation Guide
  • Step 4: Copy the Hadoop and Java .tar.gz archives to the work folder.
    Commands:
    hadoop@hadoop-VirtualBox:~$ sudo cp -r /home/hadoop/Desktop/sf_Dee/jdk-8u60-linux-x64.tar.gz /usr/local/work/
    hadoop@hadoop-VirtualBox:~$ sudo cp -r /home/hadoop/Desktop/sf_Dee/de/Setups/hadoop-2.6.0.tar.gz /usr/local/work/
    hadoop@hadoop-VirtualBox:~$ cd /usr/local/work/
  • Step 5: Extract the Java and Hadoop archives inside the work folder.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo tar -xzvf jdk-8u60-linux-x64.tar.gz
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo tar -xzvf hadoop-2.6.0.tar.gz

- 5. Installation Guide
  • Step 6: Rename the extracted hadoop-2.6.0 and jdk1.8.0_60 folders to hadoop and java.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo mv hadoop-2.6.0 /usr/local/work/hadoop
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo mv jdk1.8.0_60 /usr/local/work/java
  • Step 7: Install ssh and rsync.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo apt-get install ssh
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo apt-get install rsync
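Steps 5 and 6 extract each archive and then rename the versioned folder. The sketch below combines the two into one call. It assumes GNU tar and an archive whose first listed entry is its top-level folder, which holds for tarballs packed as a single directory. `install_archive` is a hypothetical helper name and is not part of Hadoop or the JDK.

```bash
#!/usr/bin/env bash
# Sketch only: extract a .tar.gz into a work folder and rename its top-level
# directory (e.g. hadoop-2.6.0 -> hadoop), i.e. Steps 5 and 6 in one call.
# install_archive is a hypothetical helper, not a Hadoop or JDK tool.
install_archive() {
  local tarball="$1" work_dir="$2" new_name="$3" top_dir

  # Refuse to continue if the target exists: "mv src existing_dir" would
  # move the new folder *inside* the old one instead of replacing it.
  if [ -e "$work_dir/$new_name" ]; then
    echo "install_archive: $work_dir/$new_name already exists" >&2
    return 1
  fi

  # Assumption: the first entry listed by tar is the archive's top-level folder.
  top_dir="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)"
  if [ -z "$top_dir" ]; then
    echo "install_archive: cannot list $tarball" >&2
    return 1
  fi

  tar -xzf "$tarball" -C "$work_dir" || return 1
  mv "$work_dir/$top_dir" "$work_dir/$new_name"
}
```

For example, with write access to /usr/local/work, `install_archive hadoop-2.6.0.tar.gz /usr/local/work hadoop` and `install_archive jdk-8u60-linux-x64.tar.gz /usr/local/work java` reproduce Steps 5 and 6. The existence check is there so that a second run fails loudly instead of nesting a fresh copy inside the old install.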

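- 6. Installation Guide
  • Step 8: Generate an SSH key pair and authorize it, so the Hadoop start scripts can connect to localhost without a password. OpenSSH 7.0 and later disable DSA keys by default, so use an RSA key.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa
    hadoop@hadoop-VirtualBox:/usr/local/work$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
    hadoop@hadoop-VirtualBox:/usr/local/work$ chmod 0600 ~/.ssh/authorized_keys
    Check that ssh localhost now logs in without asking for a password.
  • Step 9: Register the Java binaries with the OS using update-alternatives.
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo update-alternatives --install "/usr/bin/java" "java" "/usr/local/work/java/bin/java" 1
    Output: update-alternatives: using /usr/local/work/java/bin/java to provide /usr/bin/java (java) in auto mode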

- 7. Installation Guide
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo update-alternatives --install "/usr/bin/javac" "javac" "/usr/local/work/java/bin/javac" 1
    Output: update-alternatives: using /usr/local/work/java/bin/javac to provide /usr/bin/javac (javac) in auto mode
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo update-alternatives --install "/usr/bin/javaws" "javaws" "/usr/local/work/java/bin/javaws" 1
    Output: update-alternatives: using /usr/local/work/java/bin/javaws to provide /usr/bin/javaws (javaws) in auto mode
  • Step 10: Set Java as the OS default.
    Command:

- 8. Installation Guide
  • Step 11: Add the Java and Hadoop paths to the ~/.bashrc profile (the environment variables are listed at the end of this document), then reload the profile.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ sudo nano ~/.bashrc
    hadoop@hadoop-VirtualBox:/usr/local/work$ source ~/.bashrc
  • Step 12: Check that Hadoop and Java are set up correctly.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ echo $HADOOP_PREFIX
    Expected output: /usr/local/work/hadoop/

- 9. Installation Guide
    hadoop@hadoop-VirtualBox:/usr/local/work$ echo $JAVA_HOME
    Expected output: /usr/local/work/java
  • Step 13: Copy mapred-site.xml.template to mapred-site.xml in /usr/local/work/hadoop/etc/hadoop.
    Commands:
    hadoop@hadoop-VirtualBox:/usr/local/work$ cd /usr/local/work/hadoop/etc/hadoop
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo cp mapred-site.xml.template mapred-site.xml
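Step 12 checks the two variables by eye. The sketch below performs the same check and also confirms that each variable points at a directory containing the expected launcher. It assumes the layout used in this guide (`$JAVA_HOME/bin/java` and `$HADOOP_PREFIX/bin/hadoop`); `check_hadoop_env` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Sketch: verify the environment variables set in Step 11.
# check_hadoop_env is a hypothetical helper; it only inspects the filesystem.
check_hadoop_env() {
  local status=0
  if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME is unset or has no executable bin/java: '${JAVA_HOME:-}'" >&2
    status=1
  fi
  # A trailing slash in HADOOP_PREFIX (as in the .bashrc note) is harmless:
  # "/usr/local/work/hadoop//bin/hadoop" still resolves to the same file.
  if [ -z "${HADOOP_PREFIX:-}" ] || [ ! -x "$HADOOP_PREFIX/bin/hadoop" ]; then
    echo "HADOOP_PREFIX is unset or has no executable bin/hadoop: '${HADOOP_PREFIX:-}'" >&2
    status=1
  fi
  return "$status"
}
```

After `source ~/.bashrc`, running `check_hadoop_env && java -version && hadoop version` should print both versions. If a variable is wrong, the function names it on stderr and returns a non-zero status instead.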

- 10. Installation Guide
  • Step 14: Edit hadoop-env.sh (from inside /usr/local/work/hadoop/etc/hadoop).
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo nano hadoop-env.sh
    Set JAVA_HOME to the path of your Java installation:
    export JAVA_HOME=/usr/local/work/java
  • Step 15: Edit core-site.xml (from inside /usr/local/work/hadoop/etc/hadoop).
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo nano core-site.xml

- 11. Installation Guide
    Enter the following properties:
    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:8020</value>
        <final>true</final>
      </property>
    </configuration>
  • Step 16: Edit hdfs-site.xml (from inside /usr/local/work/hadoop/etc/hadoop).
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo nano hdfs-site.xml

- 12. Installation Guide
    Enter the following properties (dfs.blocksize is in bytes: 268435456 = 256 * 1024 * 1024, i.e. 256 MB):
    <configuration>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:/usr/local/work/hadoop/hadoop_data/dfs/name</value>
      </property>
      <property>
        <name>dfs.blocksize</name>
        <value>268435456</value>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:/usr/local/work/hadoop/hadoop_data/dfs/data</value>
      </property>
    </configuration>
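dfs.blocksize is given in bytes, and the two directory properties are file: URIs. The sketch below shows both conversions, assuming bash arithmetic and URIs in the file:/path or file:///path form used above; `mib_to_bytes`, `uri_to_path` and `make_local_dirs` are hypothetical helpers. Creating the directories in advance is optional, because formatting creates the name directory. However, /usr/local/work was created with sudo, so the hadoop user may first need write access to it, for example with `sudo chown -R hadoop:hadoop /usr/local/work`. That command assumes a user and group both named hadoop on this VM.

```bash
#!/usr/bin/env bash
# Sketch: helpers for the values used in hdfs-site.xml (names are hypothetical).

# Convert mebibytes to the byte count expected by dfs.blocksize.
mib_to_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# Strip a file:// or file: scheme prefix to get a plain local path.
uri_to_path() {
  local uri="$1"
  uri="${uri#file://}"
  uri="${uri#file:}"
  printf '%s\n' "$uri"
}

# Create the local directories behind one or more file: URIs.
make_local_dirs() {
  local uri
  for uri in "$@"; do
    mkdir -p "$(uri_to_path "$uri")" || return 1
  done
}
```

For example, `mib_to_bytes 256` prints the value used for dfs.blocksize, and `make_local_dirs file:/usr/local/work/hadoop/hadoop_data/dfs/name file:/usr/local/work/hadoop/hadoop_data/dfs/data` creates both HDFS directories.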

- 13. Installation Guide
  • Step 17: Edit mapred-site.xml (from inside /usr/local/work/hadoop/etc/hadoop).
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo nano mapred-site.xml
    Enter the following properties:
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
    </configuration>
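Steps 15 to 17 all edit the same kind of file by hand in nano. The sketch below is a non-interactive alternative that writes a *-site.xml file from name=value pairs. It assumes the values need no XML escaping. `write_site_xml` is a hypothetical helper, not a Hadoop command, and writing into /usr/local/work/hadoop/etc/hadoop needs the same permissions as the nano edits.

```bash
#!/usr/bin/env bash
# Sketch: write a Hadoop *-site.xml from name=value pairs.
# write_site_xml is a hypothetical helper; values are NOT XML-escaped,
# so avoid &, < and > in them.
write_site_xml() {
  local out="$1"; shift
  {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    local pair
    for pair in "$@"; do
      # Split on the first '=' only, so a value may itself contain '='.
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "${pair%%=*}" "${pair#*=}"
    done
    printf '</configuration>\n'
  } > "$out"
}
```

For example, `write_site_xml mapred-site.xml mapreduce.framework.name=yarn` produces the same property as Step 17. `write_site_xml core-site.xml fs.defaultFS=hdfs://localhost:8020` would reproduce Step 15 without the `<final>` flag, which this sketch does not support.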

- 14. Installation Guide
  • Step 18: Edit yarn-site.xml (from inside /usr/local/work/hadoop/etc/hadoop).
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ sudo nano yarn-site.xml
    Enter the following properties:
    <configuration>
      <property>
        <name>yarn.resourcemanager.address</name>
        <value>localhost:8032</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>localhost:8030</value>
      </property>

- 15. Installation Guide
      <property>
        <name>yarn.resourcemanager.resource-tracker.address</name>
        <value>localhost:8031</value>
      </property>
      <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>localhost:8033</value>
      </property>
      <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>localhost:8088</value>
      </property>
      <property>
        <name>yarn.resourcemanager.scheduler.class</name>
        <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value>
      </property>

- 16. Installation Guide
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.nodemanager.local-dirs</name>
        <value>file:/usr/local/work/hadoop/hadoop_data/yarn/yarn.nodemanager.local-dirs</value>
      </property>
      <property>
        <name>yarn.nodemanager.log-dirs</name>
        <value>file:/usr/local/work/hadoop/hadoop_data/yarn/logs</value>
      </property>

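- 17. Installation Guide
      <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
      </property>
    </configuration>
    The class property name must match the aux-service name mapreduce_shuffle (with an underscore).
  • Step 19: Format the NameNode. This initializes the HDFS metadata directory and erases any existing HDFS metadata, so run it only once on a fresh install.
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ hdfs namenode -format
    In Hadoop 2.x, hadoop namenode -format also works but is deprecated in favor of the hdfs command.
  • Step 20: Start the Hadoop services.
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ start-all.sh
    In Hadoop 2.x, start-all.sh is deprecated; it runs start-dfs.sh and start-yarn.sh, which can also be called directly.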
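A configuration file assembled by copying XML from several slides can end up listing the same property twice. That is redundant at best and confusing when the two values differ. The sketch below reports repeated property names before the services are started. It assumes each name is written as <name>...</name> on a single line, because it is a text search rather than an XML parser. `dup_properties` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Sketch: print property names that occur more than once in a *-site.xml.
# Text-based (grep/sed), so it assumes <name>...</name> is not split across lines.
dup_properties() {
  grep -o '<name>[^<]*</name>' "$1" |   # one match per output line
    sed -e 's/<[^>]*>//g' |             # drop the <name> tags
    sort | uniq -d                      # keep names seen two or more times
}
```

Running `dup_properties yarn-site.xml` inside /usr/local/work/hadoop/etc/hadoop prints nothing when every property is unique.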

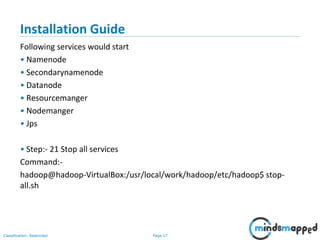

- 18. Installation Guide
    Running jps should now list the following processes (Jps is the jps tool itself):
    • NameNode
    • SecondaryNameNode
    • DataNode
    • ResourceManager
    • NodeManager
    • Jps
  • Step 21: Stop all services.
    Command:
    hadoop@hadoop-VirtualBox:/usr/local/work/hadoop/etc/hadoop$ stop-all.sh
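Checking the jps listing by eye works on one machine. The sketch below compares it against the five expected daemons instead. It assumes jps prints one `<pid> <MainClass>` line per JVM, which is its default format. `missing_daemons` is a hypothetical helper that reads the listing on stdin, so it can be tried without a running cluster.

```bash
#!/usr/bin/env bash
# Sketch: report which of the expected Hadoop daemons are absent from jps output.
# missing_daemons is a hypothetical helper; it reads "<pid> <MainClass>" lines on stdin.
missing_daemons() {
  local running daemon
  running="$(awk '{print $2}')"   # keep only the main-class column
  for daemon in NameNode SecondaryNameNode DataNode ResourceManager NodeManager; do
    # -x matches whole lines, so "NameNode" does not match "SecondaryNameNode".
    if ! grep -qx "$daemon" <<<"$running"; then
      printf '%s\n' "$daemon"
    fi
  done
}
```

For example, `missing="$(jps | missing_daemons)"; [ -z "$missing" ] || echo "Not running: $missing"`. If a daemon is missing, its log under $HADOOP_PREFIX/logs (the default log directory) usually explains why.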

- 19. Installation Guide
    Note: variables to be added to the ~/.bashrc profile
    export HADOOP_PREFIX="/usr/local/work/hadoop/"
    export PATH=$PATH:$HADOOP_PREFIX/bin
    export PATH=$PATH:$HADOOP_PREFIX/sbin
    export HADOOP_COMMON_HOME=${HADOOP_PREFIX}
    export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}
    export HADOOP_HDFS_HOME=${HADOOP_PREFIX}
    export YARN_HOME=${HADOOP_PREFIX}
    export JAVA_HOME="/usr/local/work/java"
    export PATH=$PATH:$JAVA_HOME/bin
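Appending these lines by hand in nano is easy to repeat by accident, which duplicates the PATH entries. The sketch below appends them once, guarded by a marker comment. It assumes bash and the paths used throughout this guide. The marker text and `add_hadoop_env` are hypothetical conventions, not anything Hadoop reads.

```bash
#!/usr/bin/env bash
# Sketch: append the Step 11 variables to an rc file only once.
# add_hadoop_env and the marker comment are hypothetical conventions.
add_hadoop_env() {
  local rc="$1" marker="# >>> hadoop env >>>"
  if grep -qF "$marker" "$rc" 2>/dev/null; then
    return 0   # block already present; nothing to do
  fi
  # Quoted 'EOF': $PATH etc. are written literally and expand when the rc is sourced.
  cat >> "$rc" <<'EOF'
# >>> hadoop env >>>
export HADOOP_PREFIX="/usr/local/work/hadoop/"
export PATH=$PATH:$HADOOP_PREFIX/bin
export PATH=$PATH:$HADOOP_PREFIX/sbin
export HADOOP_COMMON_HOME=${HADOOP_PREFIX}
export HADOOP_MAPRED_HOME=${HADOOP_PREFIX}
export HADOOP_HDFS_HOME=${HADOOP_PREFIX}
export YARN_HOME=${HADOOP_PREFIX}
export JAVA_HOME="/usr/local/work/java"
export PATH=$PATH:$JAVA_HOME/bin
# <<< hadoop env <<<
EOF
}
```

Usage: `add_hadoop_env ~/.bashrc && source ~/.bashrc`. Running it a second time leaves the file unchanged.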

- 20. Topics to be discussed in the next session
  • Pig
  • Pig - Overview
  • Installation and Running Pig
  • Load in Pig
  • Macros in Pig