EM Algorithm Presentation Slides (Play Tennis & Brain Tissue Segmentation)

Machine learning presentation slides on the EM algorithm (the Play Tennis case and brain tissue segmentation in MRI images)

- 1. Expectation-Maximization (EM) Algorithm. Hendri Karisma (23512060), Luqman Abdul Mushawwir (23512146)

- 2. References
  - Lecture Notes, Andrew Ng
  - Machine Learning, Tom M. Mitchell
  - Maximum Likelihood from Incomplete Data via the EM Algorithm, A. P. Dempster, N. M. Laird, D. B. Rubin, 1977
  - And others

- 3. EM Concepts
  - Maximum Likelihood Estimation (MLE)
  - Mixtures of Gaussians
  - Expectation-Maximization (EM)
  - Rate of Convergence

- 4. Maximum Likelihood Estimation (MLE)
  - A dataset with m instances.
  - The parameters of the model p(x, z) are fitted to the data; the likelihood is given as a formula (not preserved in this transcript).
  - With a mixture of Gaussians (formula not preserved in this transcript).
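
The likelihood formulas on this slide did not survive extraction. As a sketch only, assuming the deck follows the notation of the cited Andrew Ng lecture notes (an assumption, since the original images are missing), the log-likelihood of m instances with a latent variable z, and its mixture-of-Gaussians form, would read as follows. The symbols k (number of components) and φ_j (mixing weights) are introduced here for illustration.

```latex
\ell(\theta) = \sum_{i=1}^{m} \log p\big(x^{(i)}; \theta\big)
             = \sum_{i=1}^{m} \log \sum_{z^{(i)}} p\big(x^{(i)}, z^{(i)}; \theta\big)

\ell(\phi, \mu, \Sigma) = \sum_{i=1}^{m} \log \sum_{j=1}^{k}
    \phi_j \, \mathcal{N}\big(x^{(i)}; \mu_j, \Sigma_j\big)
```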

- 5. The Maximum Used in the EM Algorithm

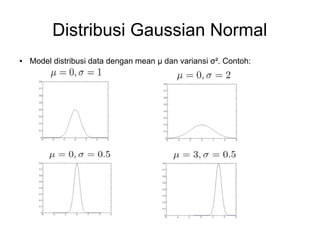

- 6. Gaussian Formulas
  - Normal (univariate) Gaussian
  - Multivariate Gaussian (extension)
    - The difference is the use of a covariance matrix to improve the model being built.
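
The slide's formula images are not part of this transcript. For reference, the standard densities of the two distributions named above are given here, with n denoting the dimension of x (a symbol not used in the slides):

```latex
p(x; \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}}
    \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)

p(x; \mu, \Sigma) = \frac{1}{(2\pi)^{n/2}\,|\Sigma|^{1/2}}
    \exp\!\left(-\tfrac{1}{2}\,(x-\mu)^{\top}\Sigma^{-1}(x-\mu)\right)
```

In the multivariate case the covariance matrix Σ replaces the scalar variance σ², which lets the model capture correlation between features.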

- 7. Normal Gaussian Distribution
  - Models the data distribution with mean μ and variance σ². Example:
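
The slide's own example was an image and is not available here. As a stand-in, the following minimal sketch evaluates a Gaussian density for an arbitrary mean and variance and cross-checks it against Python's standard library. The function name `gaussian_pdf` and the sample values are illustrative choices, not taken from the slides.

```python
import math
from statistics import NormalDist


def gaussian_pdf(x, mean, variance):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2 * variance)) / math.sqrt(2 * math.pi * variance)


# NormalDist is parameterized by the standard deviation, so variance 4.0 -> sigma 2.0.
density = gaussian_pdf(1.0, mean=0.0, variance=4.0)
reference = NormalDist(mu=0.0, sigma=2.0).pdf(1.0)
```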

- 8. 3D Gaussian Visualization with Different Means and Variances

- 9. Example of a Gaussian on a Histogram

- 10. Example Gaussian Distribution of X1 and X2
  - Example application:

- 11. 3D Gaussian Visualization
  - Example of a distribution and its Gaussian model

- 14. Mixture of Gaussians

- 15. Expectation-Maximization (EM)
  - An iteration consisting of two steps:
  - E-step (Expectation): estimates z(i)
  - M-step (Maximization): updates the parameters
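
For a mixture of Gaussians, the two steps can be written out as below. This is a sketch in the notation of the cited Andrew Ng lecture notes, which the slides are assumed to follow; w_j^{(i)} is the soft estimate of z(i), and φ_j, μ_j, Σ_j are the mixing weight, mean and covariance of component j.

```latex
\text{E-step:}\quad
w_j^{(i)} := p\big(z^{(i)} = j \mid x^{(i)}; \phi, \mu, \Sigma\big)

\text{M-step:}\quad
\phi_j := \frac{1}{m}\sum_{i=1}^{m} w_j^{(i)}, \qquad
\mu_j := \frac{\sum_{i=1}^{m} w_j^{(i)}\, x^{(i)}}{\sum_{i=1}^{m} w_j^{(i)}}, \qquad
\Sigma_j := \frac{\sum_{i=1}^{m} w_j^{(i)}\,\big(x^{(i)}-\mu_j\big)\big(x^{(i)}-\mu_j\big)^{\top}}
                 {\sum_{i=1}^{m} w_j^{(i)}}
```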

- 16. The E-Step and M-Step Functions
  - The E-step function estimates the Gaussians from the current (initially, the starting) parameters; this estimate is then maximized by the M-step.
  - The M-step function (maximization step) updates the parameters using the result of the estimation step, which shifts the position of the Gaussians in the next iteration until the likelihood reaches its maximum.
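
The interplay of the two functions can be sketched for a one-dimensional mixture of Gaussians. This is a minimal illustration, not the presenters' implementation: the function names, the fixed iteration count, the variance floor and the toy data are all assumptions made for the example.

```python
import math


def normal_pdf(x, mean, var):
    """Univariate Gaussian density with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(data, means, variances, weights, iterations=50):
    """Run EM on a 1-D Gaussian mixture and return (means, variances, weights)."""
    means, variances, weights = list(means), list(variances), list(weights)
    k, m = len(means), len(data)
    for _ in range(iterations):
        # E-step: responsibility of each component for each data point.
        resp = []
        for x in data:
            joint = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(joint)
            resp.append([p / total for p in joint])
        # M-step: re-estimate the parameters from the responsibilities,
        # which moves each Gaussian toward the points it explains best.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var, 1e-6)  # floor to avoid a collapsed component
            weights[j] = nj / m
    return means, variances, weights


# Toy data: two groups centred on 0 and 6 (hypothetical values).
data = [-1.0, -0.5, 0.0, 0.5, 1.0, 5.0, 5.5, 6.0, 6.5, 7.0]
means, variances, weights = em_gmm_1d(data, [1.0, 4.0], [1.0, 1.0], [0.5, 0.5])
```

Starting from the deliberately offset means 1.0 and 4.0, the estimated means move toward the centres of the two groups.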

- 18. EM Implementation
  - Initialization
    - Set the sense probability P(Sk) for each of the chosen number of clusters; the P(Sk) values sum to 1.
    - Set the probabilities P(Vj|Sk) to random numbers.
  - E-step
    - Calculate the posterior probability that Sk generated Ci.
  - M-step
    - Re-estimate P(Vj|Sk) and P(Sk).
  - Convergence check
    - Compute the model likelihood score: $l(C \mid \mu) = \sum_{i} \log \sum_{k} P(C_i \mid S_k)\, P(S_k)$
    - If |new model score - old model score| < threshold, the model has converged.
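
The steps above can be sketched for categorical data, where each cluster Sk holds a table P(Vj|Sk) per feature. This is a minimal sketch under stated assumptions, not the presenters' code: P(Sk) is initialized uniformly, features are treated as conditionally independent given the cluster, a small smoothing constant keeps probabilities away from zero, and the function name `em_categorical` and the toy instances are hypothetical.

```python
import math
import random


def em_categorical(instances, n_values, n_clusters, threshold=1e-6, max_iter=200, seed=0):
    """EM for a mixture over categorical features.

    instances: list of tuples of value indices; n_values[j] is the number of
    distinct values of feature j. Returns (P(Sk), P(Vj|Sk), log-likelihood).
    """
    rng = random.Random(seed)
    n_features = len(n_values)
    # Initialization: uniform P(Sk) (sums to 1) and random, normalized P(Vj|Sk).
    priors = [1.0 / n_clusters] * n_clusters
    cond = []
    for _ in range(n_clusters):
        tables = []
        for j in range(n_features):
            raw = [rng.random() + 0.1 for _ in range(n_values[j])]
            tables.append([r / sum(raw) for r in raw])
        cond.append(tables)

    old_score = -math.inf
    score = old_score
    for _ in range(max_iter):
        # E-step: posterior probability that Sk generated Ci, plus the model score.
        posteriors, score = [], 0.0
        for inst in instances:
            joint = [priors[k] * math.prod(cond[k][j][v] for j, v in enumerate(inst))
                     for k in range(n_clusters)]
            total = sum(joint)
            score += math.log(total)  # sum_i log sum_k P(Ci|Sk) P(Sk)
            posteriors.append([p / total for p in joint])
        # Convergence check on the change in log-likelihood.
        if abs(score - old_score) < threshold:
            break
        old_score = score
        # M-step: re-estimate P(Sk) and P(Vj|Sk) from the posteriors.
        for k in range(n_clusters):
            priors[k] = sum(p[k] for p in posteriors) / len(instances)
            for j in range(n_features):
                counts = [1e-3] * n_values[j]  # smoothing (an assumption)
                for p, inst in zip(posteriors, instances):
                    counts[inst[j]] += p[k]
                cond[k][j] = [c / sum(counts) for c in counts]
    return priors, cond, score


# Hypothetical toy instances with two binary features.
toy = [(0, 0), (0, 0), (0, 1), (1, 1), (1, 1), (1, 0)]
priors, cond, score = em_categorical(toy, n_values=[2, 2], n_clusters=2)
```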

- 19. Testing with PlayTennis
  - Testing with dataset 1, PlayTennis (14 instances)

- 20. Cont'd
  - Binary instance representation (0 and 1): 57% accuracy
  - Index instance representation (0-2): 50% accuracy
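
The slides do not show how the two representations were built. One plausible reading, sketched below, is that the index representation stores the position of each attribute value in its domain (0-2), while the binary representation expands each attribute into one 0/1 flag per possible value. The attribute domains follow Mitchell's PlayTennis data; the encoding functions themselves are assumptions made for illustration.

```python
# Attribute domains in the style of Mitchell's PlayTennis dataset.
DOMAINS = {
    "Outlook": ["Sunny", "Overcast", "Rain"],
    "Temperature": ["Hot", "Mild", "Cool"],
    "Humidity": ["High", "Normal"],
    "Wind": ["Weak", "Strong"],
}


def encode_index(instance):
    """Index representation: one integer per attribute (position in its domain)."""
    return [DOMAINS[attr].index(instance[attr]) for attr in DOMAINS]


def encode_binary(instance):
    """Binary representation: one 0/1 flag per possible attribute value."""
    bits = []
    for attr, values in DOMAINS.items():
        bits.extend(1 if instance[attr] == value else 0 for value in values)
    return bits


day = {"Outlook": "Rain", "Temperature": "Mild", "Humidity": "Normal", "Wind": "Weak"}
index_vector = encode_index(day)    # [2, 1, 1, 0]
binary_vector = encode_binary(day)  # [0, 0, 1, 0, 1, 0, 0, 1, 1, 0]
```

The index form is compact but imposes an artificial ordering on the values; the binary form avoids that at the cost of more features.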

- 21. EM Experiment (Partial Volume Segmentation of Brain)
  - Data description
  - Experimental results
  - Conclusions

- 22. Partial Volume Segmentation of Brain
  - The image is divided into 3 main clusters plus 3 cluster intersections.
  - The 3 clusters: White Matter, Gray Matter, CSF (Cerebrospinal Fluid).
  - The data used is the histogram of the MRI image.
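
When the input is a histogram rather than individual pixels, EM can treat each gray level as one data point weighted by its pixel count. The sketch below illustrates that idea with three components standing in for the three main clusters; it omits the three intersection clusters, uses a synthetic histogram rather than real MRI data, and all names and initial values are assumptions made for the example.

```python
import math


def normal_pdf(x, mean, var):
    """Univariate Gaussian density with the given mean and variance."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_on_histogram(counts, means, variances, weights, iterations=100):
    """Fit a 1-D Gaussian mixture to a histogram (counts[g] = pixels at gray level g)."""
    means, variances, weights = list(means), list(variances), list(weights)
    k, levels, n_pixels = len(means), range(len(counts)), sum(counts)
    for _ in range(iterations):
        # E-step: one responsibility vector per gray level, not per pixel.
        resp = []
        for g in levels:
            joint = [weights[j] * normal_pdf(g, means[j], variances[j]) for j in range(k)]
            total = sum(joint) or 1e-300  # guard against underflow far from every mean
            resp.append([p / total for p in joint])
        # M-step: each gray level contributes in proportion to its pixel count.
        for j in range(k):
            nj = max(sum(counts[g] * resp[g][j] for g in levels), 1e-12)
            means[j] = sum(counts[g] * resp[g][j] * g for g in levels) / nj
            var = sum(counts[g] * resp[g][j] * (g - means[j]) ** 2 for g in levels) / nj
            variances[j] = max(var, 1e-3)  # floor to avoid a collapsed component
            weights[j] = nj / n_pixels
    return means, variances, weights


# Synthetic 64-level histogram with three bumps (not real MRI data).
true_means = [10, 30, 50]
counts = [round(1000 * sum(normal_pdf(g, mu, 9.0) for mu in true_means)) for g in range(64)]
means, variances, weights = em_on_histogram(counts, [5.0, 32.0, 52.0], [25.0] * 3, [1 / 3] * 3)
```

Working on the histogram keeps the cost per iteration proportional to the number of gray levels instead of the number of pixels.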

- 23. Input Data

- 24. Experimental Results

- 26. Conclusions
  - In the Expectation-Maximization clustering algorithm, the data must be distributed as a mixture of Gaussians; in the second exploration, the mixture can be built from the histogram of the MRI image.
  - In the second exploration, the implementation differs from the first paper, and the results also differ significantly: the data were not distributed across every cluster. The cause is that the exploration used only 1 MRI image, whereas the main reference paper used 16 MRI images.
  - The EM algorithm applied to brain tissue segmentation has fairly high complexity.
  - No conclusion can yet be drawn about the results of the exploration, because the dataset used was not suitable for measuring the accuracy of the EM algorithm implementation in partial volume segmentation of the human brain.