
Software Process and Project Management - CS832E02 unit 3

- 1. MISSION CHRIST is a nurturing ground for an individual’s holistic development to make effective contribution to the society in a dynamic environment VISION Excellence and Service CORE VALUES Faith in God | Moral Uprightness Love of Fellow Beings Social Responsibility | Pursuit of Excellence Software Process and Project Management (CS832E02) Unit 3: Software Project Management Renaissance Mithun B N Asst. Prof Dept. of CSE

- 2. Excellence and Service CHRIST Deemed to be University Unit 3: Software Project Management Renaissance Contents: ● Conventional Software Management ● Evolution of Software Economics ● Improving Software Economics ● The old way and the new way

- 3. Unit 3: Software Project Management Renaissance, Chapter 1: Conventional Software Management

- 4. Conventional Software Management ● The best thing about software is its flexibility: it can be programmed to do almost anything. ● The worst thing about software is also its flexibility. The "anything" characteristic has made it difficult to plan, monitor, and control software development. ● Analyses of software development show that the success rate of software projects is very low. The following three observations explain the low success rate.

- 5. ● a) Software development is highly unpredictable: only about 10% of software projects are delivered successfully within budget and on time. ● b) Management discipline is more of a discriminator of success or failure than technology advances are. ● c) The level of software scrap and rework is indicative of an immature process.

- 6. The Waterfall Model

- 7. The Waterfall Model

- 8. The Waterfall Model – In Theory ● There are three primary points: ○ There are two essential steps common to the development of computer programs: analysis and coding. ○ Other steps are needed between analysis and coding: system requirements definition, software requirements definition, program design and testing. ○ The basic framework described in the waterfall model is risky and invites failure, because testing is postponed until the end of software development.

- 9. The Waterfall Model – In Theory ● Five improvements to the waterfall process are as follows: ○ Program design comes first ○ Document the design ○ Do it twice ○ Plan, control and monitor testing ○ Involve the customer

- 10. The Waterfall Model – In Theory ● Program design comes first ○ Insert a preliminary program design phase between the software requirements generation phase and the analysis phase. ○ This lets the program designer ensure that the software will not fail because of storage, timing and data flux. ○ The program designer must impose the storage, timing and operational constraints on the analyst in such a way that the analyst senses the consequences. ○ The steps required to add the program design phase are as follows: ■ Begin the design process with program designers, not analysts or programmers. ■ Design, define and allocate the data processing modes: allocate processing functions, design the database, allocate execution time, define interfaces and processing modes with the operating system, describe input and output processing, and define preliminary operating procedures. ■ Write an overview document that is understandable, informative and current so that every worker on the project can gain an elemental understanding of the system.

- 11. The Waterfall Model – In Theory ● Document the design ○ Documentation is needed for the following reasons: ■ Each designer must communicate with interfacing designers, managers and customers. ■ During early phases, the documentation is the design. ■ The real monetary value of documentation is to support later modifications by a separate test team, a separate maintenance team, and operations personnel who are not software literate. ● Note: Major advances in notations, languages, browsers, tools and methods have rendered many of these documents obsolete.

- 12. The Waterfall Model – In Theory ● Do it twice: ○ The version finally delivered to the customer should effectively be the second version as far as critical design and operations are concerned. ○ In the first version, the team must have a special broad competence so that they can quickly sense trouble spots in the design, model them, model alternatives, forget the straightforward aspects of the design that aren't worth studying at this early point, and finally arrive at an error-free program. ○ This is architecture-first development: an architecture team is responsible for the initial engineering. "Do it N times" is a principle of modern-day iterative development.

- 13. The Waterfall Model – In Theory ● Plan, control and monitor testing: ○ The test phase is the biggest user of project resources (manpower, computer time and management judgment) and carries the greatest risk in terms of cost and schedule. ○ The previous three recommendations were aimed at uncovering and solving problems before testing begins. ○ Improvements in the testing phase: ■ Employ a team of test specialists who were not responsible for the original design. ■ Employ visual inspections to spot the obvious errors. ■ Test every logic path. ■ Employ the final checkout on the target computer.

- 14. The Waterfall Model – In Theory ● Involve the customer ○ It is important to involve the customer in a formal way so that the customer has made commitments at earlier points, before final delivery. ○ There are three points following requirements definition where the insight, judgement and commitment of the customer can bolster the development effort: ■ a preliminary software review after preliminary program design ■ a sequence of critical software design reviews during program design ■ a final software acceptance review after testing ○ Involving the customer with early demonstrations and planned alpha/beta releases is a proven, valuable technique.

- 15. The Waterfall Model – In Practice ● Projects destined for trouble frequently exhibit the following symptoms: ○ Protracted integration and late design breakage ○ Late risk resolution ○ Requirements-driven functional decomposition ○ Adversarial stakeholder relationships ○ Focus on documents and review meetings

- 16. The Waterfall Model – In Practice ● Protracted Integration and Late Design Breakage ○ Progress is measured as percent coded, that is, demonstrable in its target form. ○ The typical sequence is: ■ Early success via paper designs and thorough briefings ■ Commitment to code late in the life cycle ■ Integration nightmares due to unforeseen implementation issues and interface ambiguities ■ Heavy budget and schedule pressure to get the system working ■ Late shoehorning of nonoptimal fixes, with no time for redesign ■ A very fragile, unmaintainable product delivered late

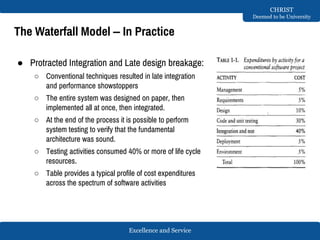

- 17. The Waterfall Model – In Practice ● Protracted Integration and Late Design Breakage: ○ Conventional techniques resulted in late integration and performance showstoppers. ○ The entire system was designed on paper, then implemented all at once, then integrated. ○ Only at the end of this process was it possible to perform system testing to verify that the fundamental architecture was sound. ○ Testing activities consumed 40% or more of life-cycle resources. ○ The table provides a typical profile of cost expenditures across the spectrum of software activities.

- 18. The Waterfall Model – In Practice ● Late risk resolution ○ A serious issue associated with the waterfall life cycle was the lack of early risk resolution. ○ Risk is defined as the probability of missing a cost, schedule, feature or quality goal. ○ Early in the life cycle, as the requirements were being specified, the actual risk exposure was highly unpredictable. ○ As the system was coded, some of the individual component risks became tangible. ○ Projects tended to have a protracted integration phase as major redesign initiatives were implemented. ○ These redesigns resolved important risks, but not without sacrificing the quality of the end product.

- 19. The Waterfall Model – In Practice ● Requirements-driven functional decomposition ○ The software development process has traditionally been requirements-driven. ○ This approach depends on specifying requirements completely and unambiguously before other development activities begin. ○ Specification of requirements is a difficult and important part of the software development process. ○ Treating all requirements equally drains substantial engineering hours away from the driving requirements and wastes that effort on paperwork associated with traceability, testability, logistics support and so on. ○ In the conventional approach, requirements were typically specified in a functional manner. Built into the classic waterfall process was the fundamental assumption that the software itself was decomposed into functions; requirements were then allocated to the resulting components.

- 20. The Waterfall Model – In Practice ● Adversarial stakeholder relationships ○ The conventional process tended to result in adversarial stakeholder relationships, in large part because of the difficulties of requirements specification and the exchange of information through paper documents that captured engineering information in ad hoc formats. ○ The following sequence of events was typical for most contractual software efforts: ■ The contractor prepared a draft contract-deliverable document that captured an intermediate artifact and delivered it to the customer for approval. ■ The customer was expected to provide comments. ■ The contractor incorporated these comments and submitted a final version for approval. ■ This approach resulted in customer-contractor relationships degenerating into mutual distrust, making it difficult to achieve a balance among requirements, schedule and cost.

- 21. The Waterfall Model – In Practice ● Focus on documents and review meetings ○ The conventional process focused on producing various documents, with insufficient focus on producing tangible increments of the products themselves. ○ Contractors produced literally tons of paper to meet milestones and demonstrate progress to stakeholders. ○ A typical design review is shown in the figure.

- 22. Conventional software management performance ● Barry Boehm's top 10 list is as follows: ○ Finding and fixing a software problem after delivery costs 100 times more than finding and fixing the problem in early design phases. ○ You can compress software development schedules up to 25% of nominal, but no more. ○ For every $1 you spend on development, you will spend $2 on maintenance. ○ Software development and maintenance costs are primarily a function of the number of source lines of code. ○ Variations among people account for the biggest differences in software productivity. ○ The overall ratio of software to hardware costs is still growing: in 1955 it was 15:85; in 1985, 85:15. ○ Only about 15% of software development effort is devoted to programming.

- 23. Conventional software management performance ● Software systems and products typically cost 3 times as much per SLOC as individual software programs. Software-system products (that is, systems of systems) cost 9 times as much. ● Walkthroughs catch 60% of the errors. ● 80% of the contribution comes from 20% of the contributors.
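
Three of Boehm's rules of thumb are simple enough to express as arithmetic. The following Python sketch encodes them as constants; the function names are hypothetical, and the rules are heuristics from the list above rather than precise laws.

```python
# Hypothetical helpers applying three of Boehm's rules of thumb.
MAX_SCHEDULE_COMPRESSION = 0.25   # schedules compress up to 25% of nominal, no more
MAINTENANCE_PER_DEV_DOLLAR = 2.0  # $2 of maintenance for every $1 of development
LATE_FIX_MULTIPLIER = 100         # a post-delivery fix costs ~100x an early-design fix


def shortest_feasible_schedule(nominal_months: float) -> float:
    """Shortest schedule the rule of thumb allows for a given nominal schedule."""
    return nominal_months * (1 - MAX_SCHEDULE_COMPRESSION)


def life_cycle_cost(development_cost: float) -> float:
    """Development cost plus the maintenance cost predicted by the 1:2 ratio."""
    return development_cost * (1 + MAINTENANCE_PER_DEV_DOLLAR)


def post_delivery_fix_cost(early_fix_cost: float) -> float:
    """Cost of a fix after delivery, given what it would have cost in early design."""
    return early_fix_cost * LATE_FIX_MULTIPLIER


print(shortest_feasible_schedule(20))  # 15.0 (months)
print(life_cycle_cost(1_000_000))      # 3000000.0
print(post_delivery_fix_cost(500))     # 50000
```

A nominal 20-month schedule therefore cannot credibly be promised in less than 15 months, however many people are added.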

- 24. Unit 3: Software Project Management Renaissance, Chapter 2: Evolution of Software Economics

- 25. Evolution of software economics ● Economic results of conventional software projects reflect an industry dominated by custom development, ad hoc processes and diseconomies of scale. ● Today's cost models are based primarily on empirical project databases with very few modern iterative development success stories. ● Good software cost estimates are difficult to attain; decision makers must deal with highly imprecise estimates. ● A modern process framework attacks the primary sources of the diseconomy of scale inherent in the conventional software process.

- 26. Evolution of software economics ● Five basic parameters of software cost models are as follows: ○ Size: the size of the end product, typically quantified in terms of the number of source instructions or the number of function points required to develop the required functionality. ○ Process: the process used to produce the end product, in particular its ability to avoid non-value-adding activities. ○ Personnel: the capabilities of software engineering personnel, particularly their experience with the computer science issues and the application domain issues of the project. ○ Environment: the tools and techniques available to support efficient software development and to automate the process. ○ Quality: the required quality of the product, including its features, performance, reliability and adaptability.

- 27. Software Economics ● The relationship among these parameters and the estimated cost can be written as: Effort = (Personnel) × (Environment) × (Quality) × (Size^Process), where the process parameter acts as an exponent on size. ● An important aspect of software economics is that the relationship between effort and size exhibits a diseconomy of scale: the process exponent is greater than 1.0, so effort grows faster than size. ○ Example: for a given application, a 10,000-line software solution will cost less per line than a 100,000-line solution. How much less? ○ Assume that a 100,000-line system requires 900 staff-months for development, or about 111 lines per staff-month, or 1.37 hours per line. ○ If this same system were only 10,000 lines and all other parameters were held constant, the project would be estimated at 62 staff-months, or about 161 lines per staff-month, or roughly 0.94 hour per line (at the same 152 hours per staff-month implied by the 100,000-line figures). ○ The per-line cost for the smaller application is much less than for the larger application.
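
The diseconomy-of-scale arithmetic can be checked with a short Python sketch. It fits the exponent p in Effort = k × Size^p through the two (size, effort) points quoted on the slide and recomputes the per-line figures from the staff-month totals. The 152 hours per staff-month is an assumption, chosen because it reproduces the 1.37 hours per line quoted for the 100,000-line case; the function names are hypothetical.

```python
import math

# Assumption: 152 hours per staff-month (900 SM * 152 h / 100,000 lines ~= 1.37 h/line).
HOURS_PER_STAFF_MONTH = 152


def implied_exponent(size_a, effort_a, size_b, effort_b):
    """Exponent p of Effort = k * Size**p passing through two (size, effort) points."""
    return math.log(effort_b / effort_a) / math.log(size_b / size_a)


def per_line_figures(size_lines, effort_staff_months):
    """Return (lines per staff-month, hours per line) for one project."""
    lines_per_sm = size_lines / effort_staff_months
    hours_per_line = effort_staff_months * HOURS_PER_STAFF_MONTH / size_lines
    return lines_per_sm, hours_per_line


p = implied_exponent(10_000, 62, 100_000, 900)
print(f"implied exponent: {p:.2f}")  # greater than 1.0 means diseconomy of scale

for size, effort in [(100_000, 900), (10_000, 62)]:
    lpsm, hpl = per_line_figures(size, effort)
    print(f"{size:>7} lines: {lpsm:.0f} lines/staff-month, {hpl:.2f} h/line")
```

Run as written, it reports an exponent of about 1.16, which is why the smaller system is cheaper per line even though every other parameter is held constant.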

- 28.

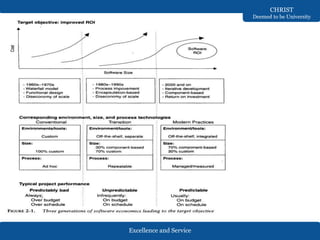

- 29. Software Economics ● The figure shows three generations of basic technology advancement in tools, components and processes. ● The required levels of quality and personnel are assumed to be constant. ● The ordinate of the graph refers to software unit costs realized by an organization. ● The three generations of software development are defined as follows: ○ Conventional ○ Transition ○ Modern practices

- 30. Software Economics ● Conventional: 1960s and 1970s, craftsmanship ○ Organizations used custom tools, custom processes and virtually all custom components built in primitive languages. ○ Project performance was highly predictable in that cost, schedule and quality objectives were almost always underachieved. ● Transition: 1980s and 1990s, software engineering ○ Organizations used more-repeatable processes and off-the-shelf tools. ○ Commercial products (operating systems, DBMSs, networking and graphics) became available. ○ Some organizations began achieving economies of scale, but the growth in application complexity outpaced the languages, techniques and technologies of the time.

- 31. Software Economics ● Modern practices: 2000 and later, software production ○ Only about 30% of the components are custom built. ○ With advances in software technology and integrated production environments, these component-based systems can be produced very rapidly.

- 32. Pragmatic Software Cost Estimation ● One critical problem in software cost estimation is a lack of well-documented case studies of projects that used an iterative development approach. ● Data from actual projects are highly suspect in terms of consistency and comparability, because the software industry has inconsistently defined metrics or atomic units of measure. ● Three questions must be settled when estimating software cost: ○ Which cost estimation model should be used? ○ Should software size be measured in source lines of code or function points? ○ What constitutes a good estimate?

- 33. Pragmatic Software Cost Estimation ● Popular cost estimation models are: ○ COCOMO ○ CHECKPOINT ○ ESTIMACS ○ KnowledgePlan ○ Price-S ○ ProQMS ○ SEER ○ SLIM ○ SOFTCOST ○ SPQR/20

- 34. Pragmatic Software Cost Estimation ● There are two objective points of view on measuring software size: source lines of code (SLOC) and function points. ● Many software experts have argued that SLOC is a lousy measure of size. ● Ex: when a code segment is described as a 1,000-source-line program, most people feel comfortable with its general "mass". ● If the description were 20 function points, 6 classes, 5 use cases, 4 object points, 6 files, 2 subsystems, 1 component or 6,000 bytes, most people, including software experts, would need to ask further questions to understand how big the code is. ● SLOC works well as an objective measure for custom-built software because it is easy to automate and instrument. ● Language advances and the use of components, automatic source code generation and object orientation have made SLOC a much more ambiguous measure.
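
Part of SLOC's appeal is that counting is easy to automate. The Python sketch below assumes one hypothetical counting convention (skip blank lines and comment-only lines, count everything else); other conventions give different totals for the same code, which is one reason SLOC figures are hard to compare across organizations.

```python
def count_sloc(source: str, comment_prefix: str = "#") -> int:
    """Count non-blank lines that are not comment-only (a hypothetical SLOC rule)."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


sample = """
# compute a total
def total(xs):
    return sum(xs)   # a trailing comment does not stop the line counting

"""
print(count_sloc(sample))  # 2
```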

- 35. Pragmatic Software Cost Estimation ● The use of function points has a large following. ● The International Function Point Users Group, formed in 1984, is the dominant software measurement association in the industry. ● Function points are independent of technology and are a better primitive unit for comparisons among projects and organizations. ● However, their primitive definitions are abstract, and measurements are not easily derived directly from the evolving artifacts. ● Anyone making cross-project or cross-organization comparisons should use function points as the measure of size. ● The general accuracy of conventional cost models has been described as within 20% of actuals, 70% of the time, which reflects a high level of unpredictability in the conventional software development process.
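
The "within 20% of actuals, 70% of the time" figure is a statement about the share of estimates that land inside a tolerance band. A minimal Python sketch of that measurement follows; the function name is hypothetical and the (estimate, actual) pairs are made-up illustrative values, not project data.

```python
def fraction_within(pairs, tolerance=0.20):
    """Share of (estimate, actual) pairs whose estimate is within +/- tolerance of the actual."""
    hits = sum(1 for estimate, actual in pairs
               if abs(estimate - actual) <= tolerance * actual)
    return hits / len(pairs)


# Made-up (estimate, actual) effort pairs in staff-months, for illustration only.
history = [(100, 110), (80, 120), (50, 52), (200, 150), (30, 33)]
print(fraction_within(history))  # 0.6: three of the five estimates are within 20%
```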

- 36. Pragmatic Software Cost Estimation ● In most real-world use, software cost estimation is bottom-up: the estimate is built to substantiate a target cost. ● The software project manager defines the target cost of the software, then manipulates the parameters and sizing until the target cost can be justified. ● It is therefore necessary to analyse the cost risks and to understand the sensitivities and trade-offs objectively. ● This provides a good vehicle for a basis of estimate and an overall cost analysis. ● Independent cost estimates (made by people outside the development team) are usually inaccurate. ● The only way to produce a credible estimate is for the software project manager and the software architecture, development and test managers to iterate through several estimates and sensitivity analyses.
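
A sensitivity analysis of the kind described can be sketched by perturbing one parameter of the cost relationship Effort = (Personnel)(Environment)(Quality)(Size^Process) at a time. The baseline values, the use of KSLOC for size, and the function names below are hypothetical; the sketch only shows how to see which parameters an estimate is most sensitive to.

```python
def effort(personnel, environment, quality, size_ksloc, process_exponent):
    """Effort = personnel * environment * quality * size**process (arbitrary units).
    The multipliers are hypothetical, dimensionless scaling factors."""
    return personnel * environment * quality * size_ksloc ** process_exponent


# Hypothetical baseline: neutral multipliers, 100 KSLOC, process exponent 1.1.
BASELINE = dict(personnel=1.0, environment=1.0, quality=1.0,
                size_ksloc=100.0, process_exponent=1.1)


def sensitivity(baseline, delta=0.10):
    """Relative change in effort when each parameter alone is raised by delta."""
    base = effort(**baseline)
    results = {}
    for name, value in baseline.items():
        perturbed = dict(baseline, **{name: value * (1 + delta)})
        results[name] = effort(**perturbed) / base - 1
    return results


for name, change in sensitivity(BASELINE).items():
    print(f"+10% {name:<17} -> {change:+.1%} effort")
```

Under these assumptions a 10% change in the process exponent moves the estimate far more than a 10% change in any single multiplier or in size, which is the kind of insight the iteration between managers is meant to surface.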

- 37. Pragmatic Software Cost Estimation ● A good cost estimate has the following attributes: ○ It is conceived and supported by the project manager, architecture team, development team and test team accountable for performing the work. ○ It is accepted by all stakeholders as ambitious but realizable. ○ It is based on a well-defined software cost model with a credible basis. ○ It is based on a database of relevant project experience that includes similar processes, similar technologies, similar environments, similar quality requirements and similar people. ○ It is defined in enough detail that its key risk areas are understood and the probability of success is objectively assessed.


- 39. Unit 3: Software Project Management Renaissance, Chapter 3: Improving Software Economics

- 40. Improving Software Economics ● Improvements in the economics of software development have been not only difficult to achieve but also difficult to measure and substantiate. ● The key to substantial improvement is a balanced attack across several interrelated dimensions. ● The five basic parameters of the software cost model suggest five areas for improvement: ○ Reducing the size or complexity of what needs to be developed ○ Improving the development process ○ Using more-skilled personnel and better teams ○ Using better environments ○ Trading off or backing off on quality thresholds

- 41. Important trends in improving software economics

- 42. Improving software economics ● Graphical user interface (GUI) technology is a good example of tools enabling a new and different process. ● GUI builder tools permitted engineering teams to construct an executable user interface faster and at less cost. ● The new process was geared toward taking the user interface through a few realistic versions, incorporating user feedback, and achieving a stable understanding of the requirements and the design issues. ● Improvements in hardware performance have also influenced software technology.

- 43. Reducing software product size ● The way to improve affordability and return on investment (ROI) is to produce a product that achieves the design goals with the minimum amount of human-generated source material. ● Component-based development (CBD) is introduced as the general term for reducing the source language size necessary to achieve a software solution. ● The use of newer, higher-level programming languages has contributed to reducing software product size.

- 44. Reducing software product size ● Languages: ○ Universal function points (UFPs) are useful estimators for language-independent, early life-cycle estimates. ○ The basic units of function points are external user inputs, external outputs, internal logical data groups, external data interfaces and external inquiries. ○ Observe the difference between Ada 83 and Ada 95. ○ Observe the difference between C and C++. ● UFPs are used to indicate the relative program sizes required to implement a given functionality.
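
An unadjusted function point count can be built from the five basic units listed above. In this minimal Python sketch the weights are an assumption: they are the average-complexity weights commonly used in IFPUG counting, not values given in these slides, and a real count would also rate each item's complexity. The dictionary keys and the function name are hypothetical.

```python
# Assumed average-complexity weights per function type (IFPUG-style counting).
AVERAGE_WEIGHTS = {
    "external_inputs": 4,
    "external_outputs": 5,
    "external_inquiries": 4,
    "internal_logical_data_groups": 10,
    "external_data_interfaces": 7,
}


def unadjusted_function_points(counts: dict) -> int:
    """Weighted sum of the counted items of each function type."""
    return sum(AVERAGE_WEIGHTS[kind] * n for kind, n in counts.items())


example = {
    "external_inputs": 6,
    "external_outputs": 4,
    "external_inquiries": 3,
    "internal_logical_data_groups": 2,
    "external_data_interfaces": 1,
}
print(unadjusted_function_points(example))  # 24 + 20 + 12 + 20 + 7 = 83
```

Because the count depends only on what the system does, the same total can be compared across projects written in different languages.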

- 45. Reducing software product size ● For the same functionality, program sizes are as follows: ○ 1,000,000 lines of assembly language ○ 400,000 lines of C ○ 220,000 lines of Ada 83 ○ 175,000 lines of Ada 95 or C++ ● The difference between large and small projects has a greater-than-linear impact on life-cycle cost.
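
The program sizes above can be turned into relative figures with a short Python sketch. The sizes come from the slide; the exponent of 1.2 is a hypothetical value chosen only to illustrate the greater-than-linear effect of size on cost.

```python
# Sizes from the slide for the same functionality.
SIZES = {
    "Assembly": 1_000_000,
    "C": 400_000,
    "Ada 83": 220_000,
    "Ada 95 / C++": 175_000,
}
HYPOTHETICAL_EXPONENT = 1.2  # assumed process exponent > 1 (diseconomy of scale)


def relative_size(lines, baseline=SIZES["Assembly"]):
    """Size as a fraction of the assembly-language size."""
    return lines / baseline


def relative_effort(lines, baseline=SIZES["Assembly"], exponent=HYPOTHETICAL_EXPONENT):
    """Effort as a fraction of the assembly effort, if Effort grows as Size**exponent."""
    return (lines / baseline) ** exponent


for language, lines in SIZES.items():
    print(f"{language:<13} {relative_size(lines):6.1%} of the assembly size, "
          f"{relative_effort(lines):6.1%} of the assembly effort")
```

With an exponent above 1, each reduction in size saves proportionally more effort than it removes lines.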

- 46. Reducing software product size ● Object-oriented methods and visual modelling ○ There was a widespread movement in the 1990s towards object-oriented technology. ● Three reasons for the success of the object-oriented approach are as follows: ○ An object-oriented model of the problem and its solution encourages a common vocabulary between the end users of a system and its developers, thus creating a shared understanding of the problem being solved. ○ The use of continuous integration creates opportunities to recognize risk early and make incremental corrections without destabilizing the entire development effort. ○ An object-oriented architecture provides a clear separation of concerns among disparate elements of a system, creating firewalls that prevent a change in one part of the system from rending the fabric of the entire architecture.
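
The "firewall" idea in the third reason can be illustrated with a small Python sketch; all class names are hypothetical. OrderService depends only on the OrderStore interface, so replacing one store implementation with another requires no change to the service.

```python
from typing import Dict, List, Protocol


class OrderStore(Protocol):
    """The boundary ("firewall") between business logic and persistence."""

    def save(self, order_id: str, total: float) -> None: ...

    def total_for(self, order_id: str) -> float: ...


class InMemoryStore:
    """One concrete store: keeps totals in a dictionary."""

    def __init__(self) -> None:
        self._totals: Dict[str, float] = {}

    def save(self, order_id: str, total: float) -> None:
        self._totals[order_id] = total

    def total_for(self, order_id: str) -> float:
        return self._totals[order_id]


class LoggingStore:
    """A replacement store that also records each save."""

    def __init__(self) -> None:
        self._inner = InMemoryStore()
        self.log: List[str] = []

    def save(self, order_id: str, total: float) -> None:
        self.log.append(f"save {order_id}")
        self._inner.save(order_id, total)

    def total_for(self, order_id: str) -> float:
        return self._inner.total_for(order_id)


class OrderService:
    """Business logic that knows only the OrderStore interface."""

    def __init__(self, store: OrderStore) -> None:
        self._store = store

    def place(self, order_id: str, prices: List[float]) -> float:
        total = round(sum(prices), 2)
        self._store.save(order_id, total)
        return total


service = OrderService(LoggingStore())  # InMemoryStore() works with no other change
service.place("A1", [1.5, 2.25])
```

The change of storage strategy stays behind the interface; nothing in OrderService has to be edited or retested for it.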

- 47. Reducing software product size ● Booch describes five characteristics of a successful object-oriented project: ○ A ruthless focus on the development of a system that provides a well-understood collection of essential minimal characteristics ○ The existence of a culture that is centered on results, encourages communication, and yet is not afraid to fail ○ The effective use of object-oriented modelling ○ The existence of a strong architectural vision ○ The application of a well-managed iterative and incremental development life cycle

- 48. Reducing software product size ● Reuse: ○ Reusing existing components and building reusable components have been natural software engineering activities since the earliest improvements in programming languages. ○ Reuse has achieved undeserved importance within the software engineering community. ○ Reusable components of value are transitioned to commercial products supported by organizations with the following characteristics: ■ They have an economic motivation for continued support. ■ They take ownership of improving product quality, adding new features and transitioning to new technologies. ■ They have a sufficiently broad customer base to be profitable.

- 49. Reducing software product size ● Commercial Components ○ A common approach being pursued today in many domains is to maximize integration of commercial components and off-the-shelf products.

- 50. Improving Software Process

- 51. Improving Team Effectiveness ● Teamwork is much more important than the sum of the individuals. ● With software teams, a project manager needs to configure a balance of solid talent with highly skilled people in the leverage positions. ● Some maxims of team management include the following: ○ A well-managed project can succeed with a nominal engineering team. ○ A mismanaged project will almost never succeed, even with an expert team of engineers. ○ A well-architected system can be built by a nominal team of software builders. ○ A poorly architected system will flounder even with an expert team of builders.

- 52. Improving team effectiveness ● To improve the staffing of software projects, Boehm offered the following staffing principles: ○ The principle of top talent: use better and fewer people ○ The principle of job matching: fit the tasks to the skills and motivation of the people available ○ The principle of career progression: an organization does best in the long run by helping its people to self-actualize ○ The principle of team balance: select people who will complement and harmonize with one another ○ The principle of phaseout: keeping a misfit on the team doesn't benefit anyone

- 53. Improving team effectiveness ● Software development is a team sport. ● Managers must nurture a culture of teamwork and results rather than individual accomplishment. ● Team balance and job matching are the primary objectives. ● Software project managers need many leadership qualities in order to enhance team effectiveness. ● The following are crucial attributes of successful software project managers: ○ Hiring skills ○ Customer interface skills ○ Decision-making skills ○ Team-building skills ○ Selling skills

- 54. Improving Automation through software environments ● The tools and environment used in the software process generally have a linear effect on the productivity of the process. ● Planning tools, requirements management tools, visual modelling tools, compilers, editors, debuggers, quality assurance analysis tools, test tools and user interfaces provide crucial automation support for evolving the software engineering artifacts. ● An environment that supports incremental compilation, automated system builds and integrated regression testing can provide rapid turnaround for iterative development and allow development teams to iterate more freely. ● In the modern approach, the development and maintenance environment is defined as a first-class artifact of the process.

- 55. Improving Automation through software environments ● Round-trip engineering is a term used to describe the key capability of environments that support iterative development. ● Automation support is required to ensure efficient and error-free transition of data from one artifact to another. ● Forward engineering is the automation of one engineering artifact from another, more abstract representation (for example, compilers and linkers transform source code into executable code). ● Reverse engineering is the generation or modification of a more abstract representation from an existing artifact (for example, creating a visual design model from source code).
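
Forward and reverse engineering can be illustrated with a toy round trip in Python. The "design model" here is a hypothetical dictionary mapping class names to constructor attributes; `forward()` generates source code from it, and `reverse()` uses the standard `ast` module to recover the model from that source. A real environment would work with visual design models rather than dictionaries; this is only a sketch of the round-trip idea.

```python
import ast
from typing import Dict, List


def forward(model: Dict[str, List[str]]) -> str:
    """Forward engineering: generate class source code from an abstract model."""
    lines: List[str] = []
    for class_name, attributes in model.items():
        lines.append(f"class {class_name}:")
        lines.append(f"    def __init__({', '.join(['self', *attributes])}):")
        for attr in attributes:
            lines.append(f"        self.{attr} = {attr}")
        if not attributes:
            lines.append("        pass")
        lines.append("")
    return "\n".join(lines)


def reverse(source: str) -> Dict[str, List[str]]:
    """Reverse engineering: recover the abstract model from existing source code."""
    model: Dict[str, List[str]] = {}
    for node in ast.parse(source).body:
        if isinstance(node, ast.ClassDef):
            attributes: List[str] = []
            for item in node.body:
                if isinstance(item, ast.FunctionDef) and item.name == "__init__":
                    attributes = [arg.arg for arg in item.args.args[1:]]  # skip self
            model[node.name] = attributes
    return model


design = {"Account": ["owner", "balance"], "Ledger": []}
code = forward(design)
print(code)
print(reverse(code) == design)  # the round trip preserves the model
```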

- 56. Improving Automation through software environments ● Tool vendors often make relatively accurate individual assessments of life-cycle activities to support claims about the potential economic impact of their tools. ● Some of the claims are: ○ Requirements analysis and evolution activities consume 40% of life-cycle costs. ○ Software design activities have an impact on more than 50% of the resources. ○ Coding and unit testing activities consume about 50% of software development effort and schedule. ○ Test activities can consume as much as 50% of a project's resources. ○ Configuration control and change management are critical activities that can consume as much as 25% of resources on a large-scale project. ○ Documentation activities can consume more than 30% of project engineering resources. ○ Project management, business administration and progress assessment can consume as much as 30% of project budgets. ● Each claim may be plausible on its own, but together they add up to roughly 275% of a project's resources, so they cannot all be taken at face value when judging a tool's economic impact.

- 57. Achieving required quality ● Key practices that improve overall software quality: ○ Focusing on driving requirements and critical use cases early in the life cycle, focusing on requirements completeness and traceability late in the life cycle, and focusing throughout the life cycle on a balance between requirements evolution, design evolution and plan evolution ○ Using metrics and indicators to measure the progress and quality of an architecture as it evolves from a high-level prototype into a fully compliant product ○ Providing integrated life-cycle environments that support early and continuous configuration control, change management, rigorous design methods, document automation and regression test automation ○ Using visual modeling and higher-level languages that support architectural control, abstraction, reliable programming, reuse and self-documentation ○ Gaining early and continuous insight into performance issues through demonstration-based evaluations

- 58. Achieving required quality

- 59. Achieving required quality ● The typical chronology of events in performance assessment is as follows: ○ Project inception ○ Initial design review ○ Mid-life-cycle design review ○ Integration and test ● This sequence occurred because early performance insight was based on naive engineering judgment of innumerable criteria. ● Early performance issues are typical. ● They tend to expose architectural flaws or weaknesses in commercial components.

- 60. Peer Inspections: A pragmatic view ● Peer inspections are frequently overhyped as the key aspect of a quality system. Peer reviews are valuable as secondary mechanisms, but they are rarely significant contributors to quality compared with the following primary quality mechanisms and indicators: ○ Transitioning engineering information from one artifact set to another, thereby assessing the consistency, feasibility, understandability, and technology constraints inherent in the engineering artifacts ○ Major milestone demonstrations that force the artifacts to be assessed against tangible criteria in the context of relevant use cases ○ Environment tools that ensure representation rigor, consistency, completeness, and change control ○ Life-cycle testing for detailed insight into critical trade-offs, acceptance criteria, and requirements compliance ○ Change management metrics for objective insight into multiple-perspective change trends and convergence or divergence from quality and progress goals

- 61. Peer Inspections: A pragmatic view ● Inspections are a good vehicle for holding authors accountable for quality products. ● The coverage of inspections should be across all authors rather than across all components. ● Junior authors need to have a random component inspected periodically, and they can learn by inspecting the products of senior authors. ● Varying levels of informal inspection are performed continuously when developers are reading or integrating software with another author’s software, and during testing by independent test teams. ● A critical component deserves to be inspected by several people, preferably those who have a stake in its quality, performance or feature set.
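The rule that inspection coverage should span all authors rather than all components can be illustrated with a small sampling routine. This is a minimal sketch, not a prescribed procedure: the component-to-author mapping, the example names, and the "one random component per author" policy are all hypothetical choices made for illustration.

```python
import random
from collections import defaultdict


def pick_inspection_sample(component_authors, rng=None):
    """Pick one random component per author so every author is covered.

    component_authors: dict mapping component name -> author (hypothetical model).
    Returns a dict mapping each author to the component chosen for inspection.
    """
    rng = rng if rng is not None else random.Random()
    by_author = defaultdict(list)
    for component, author in component_authors.items():
        by_author[author].append(component)
    # Sorting first makes the choice reproducible for a seeded rng.
    return {author: rng.choice(sorted(comps)) for author, comps in by_author.items()}


# Hypothetical project: three authors, five components.
components = {
    "parser": "asha",
    "lexer": "asha",
    "scheduler": "ravi",
    "ui_forms": "meera",
    "ui_reports": "meera",
}
sample = pick_inspection_sample(components, random.Random(42))
print(sample)
```

Sampling by author keeps the inspection load proportional to the number of people rather than the size of the code base, while still giving every author (including junior ones) periodic feedback.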

- 62. Peer Inspections: A pragmatic view ● Significant or substantial design errors or architecture issues are rarely obvious. ● Random human inspections tend to degenerate into comments on style and first-order semantic issues. ● Architectural issues are exposed through more rigorous engineering activities such as the following: ○ Analysis, prototyping, or experimentation ○ Constructing design models ○ Committing the current state of the design model to an executable implementation ○ Demonstrating the current implementation’s strengths and weaknesses in the context of critical subsets of the use cases and scenarios ○ Incorporating lessons learned back into the models, use cases, implementations, and plans.

- 63. Unit 3: Software Project Management Renaissance Chapter 4: The old way and the new way

- 64. The old way and the new way

- 65. The principles of conventional software engineering ● Davis’s top 30 principles of conventional software engineering are as follows: ● Make quality #1: quality must be quantified and mechanisms put into place to motivate its achievement. ● High-quality software is possible: techniques that have been demonstrated to increase quality include involving the customer, prototyping, simplifying design, conducting inspections, and hiring the best people. ● Give products to customers early: No matter how hard you try to learn users’ needs during the requirements phase, the most effective way to determine real needs is to give users a product and let them play with it. ● Determine the problem before writing the requirements: when faced with what they believe is a problem, most engineers rush to offer a solution. Before you try to solve a problem, be sure to explore all the alternatives and don’t be blinded by the obvious solution.

- 66. The principles of conventional software engineering ● Evaluate design alternatives: after the requirements are agreed upon, you must examine a variety of architectures and algorithms. You certainly do not want to use an ‘architecture’ simply because it was used in the requirements specification. ● Use an appropriate process model: each project must select a process that makes the most sense for that project on the basis of corporate culture, willingness to take risks, application area, volatility of requirements, and the extent to which requirements are well understood. ● Use different languages for different phases: our industry’s eternal thirst for simple solutions to complex problems has driven many to declare that the best development method is one that uses the same notation throughout the life cycle. Why should software engineers use Ada for requirements, design, and code unless Ada were optimal for all these phases?

- 67. The principles of conventional software engineering ● Minimize intellectual distance: to minimize intellectual distance, the software’s structure should be as close as possible to the real-world structure. ● Put techniques before tools: an undisciplined software engineer with a tool becomes a dangerous, undisciplined software engineer. ● Get it right before you make it faster: it is far easier to make a working program run faster than it is to make a fast program work. Don’t worry about optimization during initial coding. ● Inspect code: inspecting the detailed design and code is a much better way to find errors than testing. ● Good management is more important than good technology: the best technology will not compensate for poor management, and a good manager can produce great results even with meagre resources. Good management motivates people to do their best, but there are no universal ‘right’ styles of management.

- 68. The principles of conventional software engineering ● People are the key to success: highly skilled people with appropriate experience, talent, and training are key. The right people with insufficient tools, languages, and process will succeed. The wrong people with appropriate tools, languages, and process will probably fail. ● Follow with care: just because everybody is doing something does not make it right for you. It may be right, but you must carefully assess its applicability to your environment. Object orientation, measurement, reuse, process improvement, CASE, prototyping: all these might increase quality, decrease cost, and increase user satisfaction. The potential of such techniques is often oversold, and benefits are by no means guaranteed or universal. ● Take responsibility: When a bridge collapses we ask, “What did the engineers do wrong?” Even when software fails, we rarely ask this. The fact is that in any engineering discipline, the best methods can be used to produce awful designs, and the most antiquated methods to produce elegant designs.

- 69. The principles of conventional software engineering ● Understand the customer’s priorities: It is possible the customer would tolerate 90% of the functionality delivered late if they could have 10% of it on time. ● The more they see, the more they need: the more functionality (or performance) you provide a user, the more functionality (or performance) the user wants. ● Plan to throw one away: One of the most important critical success factors is whether or not a product is entirely new. Such brand-new applications, architectures, interfaces, or algorithms rarely work the first time. ● Design for change: The architectures, components, and specification techniques you use must accommodate change. ● Design without documentation is not design: I have often heard software engineers say, “I have finished the design. All that is left is the documentation.”

- 70. The principles of conventional software engineering ● Use tools, but be realistic: software tools make their users more efficient. ● Avoid tricks: Many programmers love to create programs with tricks: constructs that perform a function correctly, but in an obscure way. Show the world how smart you are by avoiding tricky code. ● Encapsulate: Information hiding is a simple, proven concept that results in software that is easier to test and much easier to maintain. ● Use coupling and cohesion: Coupling and cohesion are the best ways to measure software’s inherent maintainability and adaptability. ● Use the McCabe complexity measure: Although there are many metrics available to report the inherent complexity of software, none is as intuitive and easy to use as Tom McCabe’s. ● Don’t test your own software: Software developers should never be the primary testers of their own software.
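McCabe’s measure (cyclomatic complexity) is computed from a routine’s control-flow graph as V(G) = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components (1 for a single routine). A minimal sketch, assuming the graph is supplied as a list of (source, target) edge pairs; this input format and the node labels are hypothetical:

```python
def cyclomatic_complexity(edges, components=1):
    """Return McCabe's V(G) = E - N + 2P for a control-flow graph.

    edges: list of (source, target) node-label pairs (hypothetical input format).
    components: P, the number of connected components (1 for one routine).
    """
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# if/else: one decision, so two independent paths.
if_else = [("cond", "then"), ("cond", "else"), ("then", "exit"), ("else", "exit")]

# while loop containing an if/else: two decisions, so three independent paths.
loop_with_if = [
    ("start", "loop"), ("loop", "if"), ("loop", "end"),
    ("if", "a"), ("if", "b"), ("a", "loop"), ("b", "loop"),
]

print(cyclomatic_complexity(if_else))       # 2
print(cyclomatic_complexity(loop_with_if))  # 3
```

For structured code with binary decisions, V(G) equals the number of decisions plus one, which is much of what makes the measure intuitive.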

- 71. The principles of conventional software engineering ● Analyze causes for errors: it is far more cost-effective to reduce the effect of an error by preventing it than it is to find and fix it. One way to do this is to analyze the causes of errors as they are detected. ● Realize that software’s entropy increases: Any software system that undergoes continuous change will grow in complexity and will become more and more disorganized. ● People and time are not interchangeable: Measuring a project solely by person-months makes little sense. ● Expect excellence: Your employees will do much better if you have high expectations for them.

- 72. The principles of modern software management ● The top 10 principles of modern software management are as follows: ● Base the process on an architecture-first approach: this requires that a demonstrable balance be achieved among the driving requirements, the architecturally significant design decisions, and the life-cycle plans before resources are committed for full-scale development. ● Establish an iterative life-cycle process that confronts risk early: with today’s systems, it is not possible to define the entire problem, design the entire solution, build the software, and then test the end product in sequence. Instead, use an iterative process. ● Transition design methods to emphasize component-based development: Moving from a line-of-code mentality to component-based development (CBD) is necessary. A component is a cohesive set of pre-existing lines of code, either in source or executable format, with a defined interface and behaviour.

- 73. The principles of modern software management ● Establish a change management environment: The dynamics of iterative development, with different teams working on shared artifacts, necessitate objectively controlled baselines. ● Enhance change freedom through tools that support round-trip engineering: Round-trip engineering (RTE) is the environment support necessary to automate and synchronize engineering information in different formats. ● Capture design artifacts in rigorous, model-based notation: A model-based approach supports the evolution of semantically rich graphical and textual design notations. ● Instrument the process for objective quality control and progress assessment: Life-cycle assessment of the progress and the quality of all intermediate products must be integrated into the process.

- 74. The principles of modern software management ● Use a demonstration-based approach to assess intermediate artifacts: Transitioning the current state-of-the-product artifacts into an executable demonstration of relevant scenarios stimulates earlier convergence on integration, a more tangible understanding of design trade-offs, and earlier elimination of architectural defects. ● Plan intermediate releases in groups of usage scenarios with evolving levels of detail: The software management process should drive toward early and continuous demonstrations within the operational context of the system and its use cases. ● Establish a configurable process that is economically scalable: No single process is suitable for all software developments.

- 75. The principles of modern software management

- 76. The principles of modern software management

- 77. Transitioning to an iterative process ● Modern software development processes have moved away from the conventional waterfall model, in which each stage of the development process depends on completion of the previous stage. ● Development proceeds as a series of iterations, building on the core architecture until the desired levels of functionality, performance, and robustness are achieved. ● The economic benefits inherent in transitioning from the conventional waterfall model to an iterative development process are significant but difficult to quantify. ● The top 10 principles are combined into the following five principles:

- 78. Transitioning to iterative process ● Application precedentedness: The modern software industry has moved to an iterative life-cycle process. Early iterations in the life cycle establish precedents from which the product, the process, and the plans can be elaborated in evolving levels of detail. ● Process flexibility: Project artifacts must be supported by efficient change management commensurate with project needs. A configurable process that allows a common framework to be adapted across a range of projects is necessary to achieve a software return on investment.

- 79. Transitioning to iterative process ● Architecture risk resolution: Architecture-first development is a crucial theme underlying a successful iterative development process. An architecture-first and component-based development approach forces the infrastructure, common mechanisms, and control mechanisms to be elaborated early in the life cycle as the verification activity of the design process and products. It also ensures early attention to testability and provides a foundation for demonstration-based assessment. ● Team cohesion: Successful teams are cohesive, and cohesive teams are successful. The model-based formats have also enabled the round-trip engineering support needed to establish change freedom sufficient for evolving design representations.

- 80. Transitioning to iterative process ● Software process maturity: The Software Engineering Institute’s Capability Maturity Model (CMM) is a well-accepted benchmark for software process assessment. One of the key themes is that truly mature processes are enabled through an integrated