Apache Spark and DataStax Enablement

- 1. Introduction to Apache Spark and Spark in DataStax Enterprise. Vincent Poncet, DataStax Solution Engineer, 25/01/2016

- 2. Agenda
  - Introduction and background
  - Spark RDD API
  - Introduction to Scala
  - Spark DataFrames API + SparkSQL
  - Spark Execution Model
  - Spark Shell & Application Deployment
  - Spark Extensions (Spark Streaming, MLlib, ML)
  - Spark & DataStax Enterprise Integration
  - Demos

- 4. Apache Spark is a fast, general-purpose, easy-to-use cluster computing system for large-scale data processing
  - Fast
    - Leverages aggressively cached in-memory distributed computing and dedicated Executor processes, even when no jobs are running
    - Faster than MapReduce
  - General purpose
    - Covers a wide range of workloads
    - Provides SQL, streaming and complex analytics
  - Flexible and easier to use than MapReduce
    - Spark is written in Scala, an object-oriented, functional programming language
    - APIs in Scala, Java, Python and R
    - Scala and Python interactive shells
    - Runs on Hadoop, Mesos, standalone or cloud
  - Figures: "Logistic regression in Hadoop and Spark", "Spark Stack"
  - WordCount: `val wordCounts = sc.textFile("README.md").flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey((a, b) => a + b)`

- 5. Brief History of Spark
  - 2002 – MapReduce @ Google
  - 2004 – MapReduce paper
  - 2006 – Hadoop @ Yahoo
  - 2008 – Hadoop Summit
  - 2010 – Spark paper
  - 2013 – Spark 0.7 Apache Incubator
  - 2014 – Apache Spark top-level
  - 2014 – 1.2.0 release in December
  - 2015 – 1.3.0 release in March
  - 2015 – 1.4.0 release in June
  - 2015 – 1.5.0 release in September
  - 2016 – 1.6.0 release in January
  - Most active project in the Hadoop ecosystem
  - One of the top 3 most active Apache projects
  - Databricks was founded by the creators of Spark from UC Berkeley's AMPLab
  - In June 2015, the code base was about 400K lines
  - Figure: activity for 6 months in 2014 (from Matei Zaharia, 2014 Spark Summit, Databricks)

- 6. 6 Apache Spark Market Adoption DataBricks / Spark Summit 2015

- 7. 7 Large Scale Usage DataBricks / Spark Summit 2015

- 8. 8 Why Spark on Cassandra? § Analytics on transactional data and operational applications § Data model independent queries § Cross-table operations (JOIN, UNION, etc.) § Complex analytics (e.g. machine learning) § Data transformation, aggregation, etc. § Stream processing

- 9. DataStax Involvement in Spark
  - Apache Spark has been embedded in DataStax Enterprise since DSE 4.5, released in July 2014
  - DataStax provides enterprise support for the embedded Apache Spark
  - DataStax released an open source Spark Cassandra Connector: https://github.com/datastax/spark-cassandra-connector

  | Connector | Spark | Cassandra | Cassandra Java Driver | DSE |
  |---|---|---|---|---|
  | 1.5 | 1.5 | 2.1.5+ | 2.2 | |
  | 1.4 | 1.4 | 2.1.5+ | 2.1 | 4.8 |
  | 1.3 | 1.3 | 2.1.5+ | 2.1 | |
  | 1.2 | 1.2 | 2.1, 2.0 | 2.1 | 4.7 |
  | 1.1 | 1.1, 1.0 | 2.1, 2.0 | 2.1 | 4.6 |
  | 1.0 | 1.0, 0.9 | 2.0 | 2.0 | 4.5 |

- 10. 10 Spark RDD API

- 11. 11 § An RDD is a distributed collection of Scala/Python/Java objects of the same type: – RDD of strings – RDD of integers – RDD of (key, value) pairs – RDD of class Java/Python/Scala objects § An RDD is physically distributed across the cluster, but manipulated as one logical entity: – Spark will “distribute” any required processing to all partitions where the RDD exists and perform necessary redistributions and aggregations as well. – Example: Consider a distributed RDD “Names” made of names Resilient Distributed Dataset (RDD): definition Vincent Victor Pascal Steve Dani Nate Matt Piotr Alice Partition 1 Partition 2 Partition 3 Names

- 12. Resilient Distributed Dataset: definition
  - Suppose we want to know the number of names in the RDD "Names" (Partition 1: Vincent, Victor, Pascal; Partition 2: Steve, Dani, Nate; Partition 3: Matt, Piotr, Alice)
  - The user simply requests: Names.count()
    - Spark will "distribute" the count processing to all partitions so as to obtain:
      - Partition 1: Vincent (1), Victor (1), Pascal (1) → 3
      - Partition 2: Steve (1), Dani (1), Nate (1) → 3
      - Partition 3: Matt (1), Piotr (1), Alice (1) → 3
    - Local counts are subsequently aggregated: 3 + 3 + 3 = 9
  - To look up the first element in the RDD: Names.first()
  - To display all elements of the RDD: Names.collect() (careful with this: it brings the whole RDD back to the driver)
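The per-partition counting described on this slide can be sketched as follows. This is only an illustration under stated assumptions: it runs Spark in local mode with three threads, builds the "Names" RDD with `parallelize` and three partitions, and the object and method names (`NamesCount`, `partitionCounts`) are made up for the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object NamesCount {
  // The nine names shown in the "Names" RDD on the slide.
  val names = Seq("Vincent", "Victor", "Pascal", "Steve", "Dani",
                  "Nate", "Matt", "Piotr", "Alice")

  // Number of elements held by each partition, in partition order.
  // Each partition is counted locally, then the small results are collected.
  def partitionCounts(sc: SparkContext): Array[Int] =
    sc.parallelize(names, numSlices = 3)
      .mapPartitions(it => Iterator(it.size))
      .collect()

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[3]").setAppName("NamesCount"))
    try {
      val counts = partitionCounts(sc)
      println(counts.mkString(" + ") + " = " + counts.sum)

      val rdd = sc.parallelize(names, 3)
      println(rdd.count())  // same aggregation, done internally by Spark
      println(rdd.first())  // first element of the first partition
    } finally sc.stop()
  }
}
```

`count()` is the call to use in practice; the `mapPartitions` version only makes the "local count, then aggregate" step visible.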

- 13. Resilient Distributed Datasets: Creation and Manipulation
  - Three methods for creation
    - Distributing a collection of objects from the driver program (using the parallelize method of the Spark context): `val rddNumbers = sc.parallelize(1 to 10)` and `val rddLetters = sc.parallelize(List("a", "b", "c", "d"))`
    - Loading an external dataset (file): `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
    - Transformation from another existing RDD: `val rddNumbers2 = rddNumbers.map(x => x + 1)`
  - Dataset from any storage: CFS, HDFS, Cassandra, Amazon S3
  - File types supported: text files, SequenceFiles, Parquet, JSON, and any Hadoop InputFormat

- 14. Resilient Distributed Datasets: Properties
  - Immutable
  - Two types of operations
    - Transformations ~ DDL (Create View V2 as…)
      - `val rddNumbers = sc.parallelize(1 to 10)`: numbers from 1 to 10
      - `val rddNumbers2 = rddNumbers.map(x => x + 1)`: numbers from 2 to 11
      - The LINEAGE describing how to obtain rddNumbers2 from rddNumbers is recorded
      - It is a Directed Acyclic Graph (DAG)
      - No actual data processing takes place → lazy evaluation
    - Actions ~ DML (Select * From V2…)
      - `rddNumbers2.collect()`: Array [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
      - Performs the recorded transformations and the action
      - Returns a value (or writes to a file)
  - Fault tolerance
    - If data in memory is lost, it is recreated from the lineage
  - Caching, persistence (memory, spilling, disk) and checkpointing
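A minimal sketch of the transformation/action split, assuming a local-mode SparkContext. The object and method names are illustrative only. The `map` call merely records lineage; the computation runs when `collect()` is called.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object LazyEvaluation {
  // Builds the lineage (parallelize -> map) and triggers it with an action.
  def numbersPlusOne(sc: SparkContext): Array[Int] = {
    val rddNumbers = sc.parallelize(1 to 10)       // source RDD
    val rddNumbers2 = rddNumbers.map(x => x + 1)   // transformation: recorded, not executed

    // The recorded lineage can be inspected before anything has run.
    println(rddNumbers2.toDebugString)

    rddNumbers2.collect()                          // action: the job actually runs here
  }

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("LazyEvaluation"))
    try println(numbersPlusOne(sc).mkString(", "))
    finally sc.stop()
  }
}
```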

- 15. RDD Transformations
  - Transformations are lazily evaluated
  - Each returns a transformed RDD
  - Pair RDD (K, V) functions provide MapReduce-style transformations

  | Transformation | Meaning |
  |---|---|
  | map(func) | Return a new dataset formed by passing each element of the source through a function func. |
  | filter(func) | Return a new dataset formed by selecting those elements of the source on which func returns true. |
  | flatMap(func) | Similar to map, but each input item can be mapped to 0 or more output items, so func should return a Seq rather than a single item. |

  Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.RDD

  | Pair RDD transformation | Meaning |
  |---|---|
  | join(otherDataset, [numTasks]) | When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key. |
  | reduceByKey(func) | When called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduce function func. |
  | sortByKey([ascending], [numTasks]) | When called on a dataset of (K, V) pairs where K implements Ordered, returns a dataset of (K, V) pairs sorted by keys in ascending or descending order. |
  | combineByKey[C](createCombiner, mergeValue, mergeCombiners) | Generic function to combine the elements for each key using a custom set of aggregation functions. Turns an RDD[(K, V)] into a result of type RDD[(K, C)], for a "combined type" C. createCombiner: (V) ⇒ C, mergeValue: (C, V) ⇒ C, mergeCombiners: (C, C) ⇒ C |

  Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.PairRDDFunctions
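The pair RDD functions listed above can be illustrated with a short sketch: a word count with `reduceByKey`, and a per-key average with `combineByKey`. The input data and the names `PairRddExample`, `wordCounts` and `averages` are invented for the example, and a local-mode SparkContext is assumed.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.SparkContext._ // pair RDD implicits; only required before Spark 1.3

object PairRddExample {
  // flatMap + map + reduceByKey: the classic word count.
  def wordCounts(sc: SparkContext, lines: Seq[String]): Map[String, Int] =
    sc.parallelize(lines)
      .flatMap(_.split(" "))
      .map(word => (word, 1))
      .reduceByKey(_ + _)
      .collect()
      .toMap

  // combineByKey with a (sum, count) combiner, then mapValues to get the mean.
  def averages(sc: SparkContext, scores: Seq[(String, Double)]): Map[String, Double] =
    sc.parallelize(scores)
      .combineByKey(
        (v: Double) => (v, 1),                                        // createCombiner
        (acc: (Double, Int), v: Double) => (acc._1 + v, acc._2 + 1),  // mergeValue
        (a: (Double, Int), b: (Double, Int)) => (a._1 + b._1, a._2 + b._2)) // mergeCombiners
      .mapValues { case (sum, n) => sum / n }
      .collect()
      .toMap

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("PairRddExample"))
    try {
      println(wordCounts(sc, Seq("spark is fast", "spark is fun")))
      println(averages(sc, Seq(("a", 1.0), ("b", 4.0), ("a", 3.0))))
    } finally sc.stop()
  }
}
```

`reduceByKey` is enough when the result has the same type as the values; `combineByKey` is the more general form when the accumulated type differs, as with the (sum, count) pair here.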

- 16. RDD and PairRDD methods
  - RDD: https://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.RDD
    - reduce, aggregate, sample, randomSplit
    - cartesian
    - count, countApprox, countByValue
    - distinct, top
    - map, flatMap, filter, foreach, fold, mapPartitions, groupBy
    - repartition, coalesce
    - first
    - keyBy
    - union, intersection, subtract
    - min, max
    - cache, persist, unpersist
    - sortBy
    - collect, take, takeOrdered
    - zip, zipPartitions
  - PairRDDFunctions: https://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.PairRDDFunctions
    - reduceByKey, aggregateByKey, sampleByKey, cogroup
    - combineByKey
    - countByKey, countByKeyApprox
    - mapValues, flatMapValues
    - foldByKey, groupByKey, groupWith
    - join, fullOuterJoin, leftOuterJoin, rightOuterJoin, subtractByKey
    - keys, values

- 17. RDD Actions
  - Actions return values or save an RDD to disk

  | Action | Meaning |
  |---|---|
  | collect() | Return all the elements of the dataset as an array at the driver program. This is usually useful after a filter or another operation that returns a sufficiently small subset of data. |
  | count() | Return the number of elements in a dataset. |
  | first() | Return the first element of the dataset. |
  | take(n) | Return an array with the first n elements of the dataset. |
  | foreach(func) | Run a function func on each element of the dataset. |
  | saveAsTextFile | Save the RDD as a text file. |

  Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.RDD

- 18. RDD Persistence
  - Each node stores in memory any partitions of the persisted RDD that it computes
  - It reuses them in other actions on that dataset (or datasets derived from it)
    - Future actions are much faster (often by more than 10x)
  - Two methods for RDD persistence: persist() and cache()

  | Storage Level | Meaning |
  |---|---|
  | MEMORY_ONLY | Store as deserialized Java objects in the JVM. If the RDD does not fit in memory, part of it will be cached and the rest will be recomputed as needed. This is the default, and what the cache() method uses. |
  | MEMORY_AND_DISK | Same, except partitions that do not fit in memory are stored on disk and read from there when needed. |
  | MEMORY_ONLY_SER | Store as serialized Java objects (one byte array per partition). Space efficient, but more CPU intensive to read. |
  | MEMORY_AND_DISK_SER | Similar to MEMORY_AND_DISK, but stored as serialized objects. |
  | DISK_ONLY | Store only on disk. |
  | MEMORY_ONLY_2, MEMORY_AND_DISK_2, etc. | Same as above, but replicate each partition on two cluster nodes. |
  | OFF_HEAP (experimental) | Store the RDD in serialized format in Tachyon. |
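As a hedged illustration of `persist()`, the sketch below marks an RDD for MEMORY_AND_DISK storage and then runs two actions on it, so the second action can reuse the stored partitions. The data, the object name and the local master are assumptions made for the example, and no timing claim is made.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

object PersistExample {
  // Sum of the squares 1^2 + ... + n^2, computed on a persisted RDD.
  def sumOfSquares(sc: SparkContext, n: Int): Long = {
    val squares = sc.parallelize(1 to n).map(x => x.toLong * x)

    // Only marks the RDD; partitions are stored when the first action runs.
    squares.persist(StorageLevel.MEMORY_AND_DISK)

    val count = squares.count()        // first action: computes and stores the partitions
    val total = squares.reduce(_ + _)  // second action: can read the stored partitions
    println(s"$count squares, total $total")

    squares.unpersist()                // release the storage once it is no longer needed
    total
  }

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("PersistExample"))
    try sumOfSquares(sc, 100000)
    finally sc.stop()
  }
}
```

Calling `cache()` instead of `persist(StorageLevel.MEMORY_AND_DISK)` would select the default MEMORY_ONLY level from the table above.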

- 20. 20 Introduction to Scala What is Scala? Functions in Scala Operating on collections in Scala Scala Crash Course, Holden Karau, DataBricks

- 21. 21 About Scala High-level language for the JVM ● Object oriented + functional programming Statically typed ● Comparable in speed to Java ● Type inference saves us from having to write explicit types most of the time Interoperates with Java ● Can use any Java class (inherit from, etc.) ● Can be called from Java code Scala Crash Course, Holden Karau, DataBricks

- 22. Quick Tour of Scala
  - Declaring variables:
    - `var x: Int = 7`
    - `var x = 7  // type inferred`
    - `val y = "hi"  // read-only`
  - Java equivalent: `int x = 7;` and `final String y = "hi";`
  - Functions:
    - `def square(x: Int): Int = x*x`
    - `def square(x: Int): Int = { x*x }`
    - `def announce(text: String) = { println(text) }`
  - Java equivalent: `int square(int x) { return x*x; }` and `void announce(String text) { System.out.println(text); }`
  - Scala Crash Course, Holden Karau, DataBricks

- 26. Scala functions (closures)
  - `(x: Int) => x + 2  // full version`
  - `x => x + 2  // type inferred`
  - `_ + 2  // placeholder syntax (each argument must be used exactly once)`
  - `x => { val numberToAdd = 2; x + numberToAdd }  // body is a block of code`
  - `def addTwo(x: Int): Int = x + 2  // regular function`
  - Scala Crash Course, Holden Karau, DataBricks

- 27. Quick Tour of Scala Part 2: processing collections with functional programming
  - `val list = List(1, 2, 3)`
  - `list.foreach(x => println(x))  // prints 1, 2, 3`
  - `list.foreach(println)  // same`
  - `list.map(x => x + 2)  // returns a new List(3, 4, 5)`
  - `list.map(_ + 2)  // same`
  - `list.filter(x => x % 2 == 1)  // returns a new List(1, 3)`
  - `list.filter(_ % 2 == 1)  // same`
  - `list.reduce((x, y) => x + y)  // => 6`
  - `list.reduce(_ + _)  // same`
  - All of these leave the list unchanged, as it is immutable.
  - Scala Crash Course, Holden Karau, DataBricks

- 28. Functional methods on collections

  | Method on Seq[T] | Explanation |
  |---|---|
  | map(f: T => U): Seq[U] | Each element is the result of f |
  | flatMap(f: T => Seq[U]): Seq[U] | One-to-many map |
  | filter(f: T => Boolean): Seq[T] | Keep elements passing f |
  | exists(f: T => Boolean): Boolean | True if one element passes f |
  | forall(f: T => Boolean): Boolean | True if all elements pass |
  | reduce(f: (T, T) => T): T | Merge elements using f |
  | groupBy(f: T => K): Map[K, List[T]] | Group elements by f |
  | sortBy(f: T => K): Seq[T] | Sort elements |
  | … | |

  There are many more methods on Scala collections; search for "Scala Seq" or see http://www.scala-lang.org/api/2.10.4/index.html#scala.collection.Seq

  Scala Crash Course, Holden Karau, DataBricks
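The collection methods in the table can be tried without Spark. The following sketch uses only the Scala standard library; the object name `CollectionsTour` and the sample values are illustrative.

```scala
object CollectionsTour {
  val list = List(1, 2, 3)

  val plusTwo     = list.map(_ + 2)                     // List(3, 4, 5)
  val expanded    = list.flatMap(x => List(x, x * 10))  // List(1, 10, 2, 20, 3, 30)
  val odds        = list.filter(_ % 2 == 1)             // List(1, 3)
  val hasEven     = list.exists(_ % 2 == 0)             // true
  val allPositive = list.forall(_ > 0)                  // true
  val total       = list.reduce(_ + _)                  // 6
  val byParity    = list.groupBy(_ % 2)                 // Map(1 -> List(1, 3), 0 -> List(2))
  val descending  = list.sortBy(x => -x)                // List(3, 2, 1)

  def main(args: Array[String]): Unit = {
    println(plusTwo)
    println(byParity)
    // The original list is untouched: every method returned a new collection.
    println(list)
  }
}
```

The same method names (`map`, `flatMap`, `filter`, `reduce`, `groupBy`, `sortBy`) reappear on RDDs, which is why this style carries over directly to Spark code.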

- 29. Code Execution (1)
  - Create RDD: `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
  - Transformations: `val vpnQuotes = quotes.filter(_.startsWith("VPN"))` and `val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))`
  - Action: `vpnSpark.filter(_.contains("Spark")).count()`
  - File sparkQuotes.txt: "VPN Spark is cool", "BOB Spark is fun", "BRIAN Spark is great", "VPN Scala is awesome", "BOB Scala is flexible"
  - 'spark-shell' provides the Spark context as 'sc'

- 30. Code Execution (2)
  - Create RDD: `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
  - Transformations: `val vpnQuotes = quotes.filter(_.startsWith("VPN"))` and `val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))`
  - Action: `vpnSpark.filter(_.contains("Spark")).count()`
  - File sparkQuotes.txt: "VPN Spark is cool", "BOB Spark is fun", "BRIAN Spark is great", "VPN Scala is awesome", "BOB Scala is flexible"
  - RDD quotes: the same five lines as the file

- 31. Code Execution (3)
  - Create RDD: `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
  - Transformations: `val vpnQuotes = quotes.filter(_.startsWith("VPN"))` and `val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))`
  - Action: `vpnSpark.filter(_.contains("Spark")).count()`
  - File sparkQuotes.txt and RDD quotes: "VPN Spark is cool", "BOB Spark is fun", "BRIAN Spark is great", "VPN Scala is awesome", "BOB Scala is flexible"
  - RDD vpnQuotes: "VPN Spark is cool", "VPN Scala is awesome"

- 32. Code Execution (4)
  - Create RDD: `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
  - Transformations: `val vpnQuotes = quotes.filter(_.startsWith("VPN"))` and `val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))`
  - Action: `vpnSpark.filter(_.contains("Spark")).count()`
  - File sparkQuotes.txt and RDD quotes: "VPN Spark is cool", "BOB Spark is fun", "BRIAN Spark is great", "VPN Scala is awesome", "BOB Scala is flexible"
  - RDD vpnQuotes: "VPN Spark is cool", "VPN Scala is awesome"
  - RDD vpnSpark: "Spark", "Scala"

- 33. Code Execution (5)
  - Create RDD: `val quotes = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt")`
  - Transformations: `val vpnQuotes = quotes.filter(_.startsWith("VPN"))` and `val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))`
  - Action: `vpnSpark.filter(_.contains("Spark")).count()`
  - File sparkQuotes.txt and RDD quotes: "VPN Spark is cool", "BOB Spark is fun", "BRIAN Spark is great", "VPN Scala is awesome", "BOB Scala is flexible"
  - RDD vpnQuotes: "VPN Spark is cool", "VPN Scala is awesome"
  - RDD vpnSpark: "Spark", "Scala"
  - Result of the action: 1
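The five execution steps can be reproduced end to end with a small sketch. To stay self-contained it replaces the HDFS file with an in-memory copy of the five lines of sparkQuotes.txt (an assumption of this example, as are the object name and the local master); the transformations and the action are the ones from the slides.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object CodeExecution {
  // In-memory stand-in for hdfs:/sparkdata/sparkQuotes.txt.
  val fileLines = Seq(
    "VPN Spark is cool",
    "BOB Spark is fun",
    "BRIAN Spark is great",
    "VPN Scala is awesome",
    "BOB Scala is flexible")

  def countVpnSparkQuotes(sc: SparkContext): Long = {
    // Create RDD
    val quotes = sc.parallelize(fileLines)
    // Transformations (lazy)
    val vpnQuotes = quotes.filter(_.startsWith("VPN"))          // 2 lines kept
    val vpnSpark = vpnQuotes.map(_.split(" ")).map(x => x(1))   // "Spark", "Scala"
    // Action: triggers the whole chain
    vpnSpark.filter(_.contains("Spark")).count()
  }

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("CodeExecution"))
    try println(countVpnSparkQuotes(sc)) // prints 1
    finally sc.stop()
  }
}
```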

- 34. 34 Spark DataFrame API + SparkSQL

- 35. 35 DataFrames § A DataFrame is a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database, an R dataframe or Python Pandas, but in a distributed manner and with query optimizations and predicate pushdown to the underlying storage using a DataSource connector. § DataFrames can be constructed from a wide array of sources such as: structured data files, tables in Hive, external databases, or existing RDDs. § Released in Spark 1.3 DataBricks / Spark Summit 2015

- 36. DataFrames Examples
  - Show the content of the DataFrame (first 20 rows by default; use show(int) to specify the number): `df.show()`
  - Print the schema in a tree format: `df.printSchema()`
    - `root`
    - `|-- age: long (nullable = true)`
    - `|-- name: string (nullable = true)`
  - Select only the "name" column
    - `df.select(df("name")).show()` (full statement using Column)
    - `df.select($"name").show()` (`$` is an implicit conversion to Column; needs `import sqlContext.implicits._`)
    - `df.select("name").show()` (String expression, related to SparkSQL expressions)
  - Select everybody, but increment the age by 1
    - `df.select(df("name"), df("age") + 1).show()`
    - `df.select($"name", $"age" + 1).show()`
    - `df.selectExpr("name", "age + 1").show()`
  - Select people older than 21
    - `df.filter(df("age") > 21).show()`
    - `df.filter($"age" > 21).show()`
    - `df.filter("age > 21").show()`
  - Count people by age: `df.groupBy("age").count().show()`
  - Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.sql.DataFrame

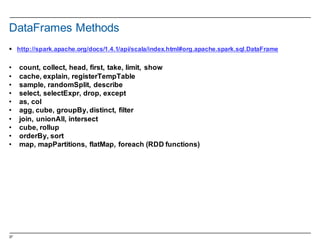

- 37. DataFrames Methods
  - http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.sql.DataFrame
  - count, collect, head, first, take, limit, show
  - cache, explain, registerTempTable
  - sample, randomSplit, describe
  - select, selectExpr, drop, except
  - as, col
  - agg, groupBy, distinct, filter
  - join, unionAll, intersect
  - cube, rollup
  - orderBy, sort
  - map, mapPartitions, flatMap, foreach (RDD functions)

- 38. DataFrames Functions
  - http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.sql.functions$
  - `import org.apache.spark.sql.functions._`
    - min, max, avg, count, sum, …
    - cos, sin, tan, acos, asin, atan, cosh, sinh, tanh, …
    - exp, log, log10, pow, cbrt
    - floor, ceil
    - toDegrees, toRadians
    - abs, sqrt, rand
    - not
    - upper, lower
    - asc, desc
    - lead, lag, rank, denseRank, nTile, rowNumber, cumeDist, percentRank
    - udf
  - Window functions
    - `import org.apache.spark.sql.expressions.Window`
    - `val overCategory = Window.partitionBy("category").orderBy(desc("revenue"))`
    - `val rank = denseRank.over(overCategory)`

- 39. Comparison between Spark DataFrames and Python Pandas
  - https://databricks.com/blog/2015/08/12/from-pandas-to-apache-sparks-dataframe.html
  - https://medium.com/@chris_bour/6-differences-between-pandas-and-spark-dataframes-1380cec394d2#.n1rcu0imr
  - https://lab.getbase.com/pandarize-spark-dataframes/

- 40. 40 DataFrames - Getting Started § SQLContext created from SparkContext // An existing SparkContext, sc val sqlContext = new org.apache.spark.sql.SQLContext(sc) § HiveContext created from SparkContext (better SQL support) // An existing SparkContext, sc val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc) § Import a library to convert an RDD to a DataFrame – Scala: import sqlContext.implicits._ § Creating a DataFrame – From RDD – Inferring the schema using reflection – Programmatic Interface – Using a DataSource connector

- 41. DataFrames – creating a DataFrame from RDD
  - Inferring the schema using reflection
    - The case class in Scala defines the schema of the table: `case class Person(name: String, age: Int)`
    - The arguments of the case class become the names of the columns
    - Create the RDD of Person objects and convert it to a DataFrame: `val people = sc.textFile("examples/src/main/resources/people.txt").map(_.split(",")).map(p => Person(p(0), p(1).trim.toInt)).toDF()`
  - Programmatic interface
    - Schema encoded as a String, with the SparkSQL struct types imported: `val schemaString = "name age"`, `import org.apache.spark.sql.Row` and `import org.apache.spark.sql.types.{StructType, StructField, StringType}`
    - Create the schema, represented by a StructType matching the structure of the Rows in the RDD: `val schema = StructType(schemaString.split(" ").map(fieldName => StructField(fieldName, StringType, true)))`
    - Build an RDD of Rows from the raw text file (not from the `people` DataFrame above): `val rowRDD = sc.textFile("examples/src/main/resources/people.txt").map(_.split(",")).map(p => Row(p(0), p(1).trim))`
    - Apply the schema to the RDD of Rows using the createDataFrame method: `val peopleDataFrame = sqlContext.createDataFrame(rowRDD, schema)`

- 42. DataFrames - DataSources (Spark 1.4+)
  - Generic load/save
    - `val df = sqlContext.read.format("<datasource type>").options(<datasource-specific options, i.e. connection parameters, filename, table, …>).load("<datasource-specific option: table, filename, or nothing>")`
    - `df.write.format("<datasource type>").options(<datasource-specific options, i.e. connection parameters, filename, table, …>).save("<datasource-specific option: table, filename, or nothing>")`
  - JSON
    - `val df = sqlContext.read.format("json").load("people.json")`
    - `df.select("name", "age").write.format("json").save("namesAndAges.json")`
  - CSV (external package)
    - `val df = sqlContext.read.format("com.databricks.spark.csv").option("header", "true").load("cars.csv")`
    - `df.select("year", "model").write.format("com.databricks.spark.csv").save("newcars.csv")`
  - The Spark Cassandra Connector is a DataSource API compliant connector.
  - The DataSource API provides generic methods to manage connectors to any data source (file, JDBC, Cassandra, MongoDB, etc.).
  - Since Spark 1.3, the DataSource API provides predicate pushdown capabilities to leverage the performance of the backend.
  - Most connectors are available at http://spark-packages.org/

- 43. Spark SQL
  - Processes relational queries expressed in SQL (HiveQL)
  - Seamlessly mixes SQL queries with Spark programs
    - Register the DataFrame as a table: `people.registerTempTable("people")`
    - Run SQL statements using the sql method provided by the SQLContext: `val teenagers = sqlContext.sql("SELECT name FROM people WHERE age >= 13 AND age <= 19")`
  - Provides a single interface for working with structured data, including Apache Hive, Parquet, JSON files and any DataSource
  - Leverages the Hive frontend and metastore
    - Compatibility with Hive data, queries and UDFs
    - HiveQL limitations may apply
    - Not ANSI SQL compliant
  - Connectivity through JDBC/ODBC using the SparkSQL Thrift Server
  - Ad hoc queries and reports using BI tools: Tableau, Cognos, QlikView, MicroStrategy, …
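A sketch of mixing the DataFrame API and SQL, written against the Spark 1.4 to 1.6 API used throughout these slides (`SQLContext`, `registerTempTable`); later Spark versions replace these entry points. The sample people, the object name and the local master are assumptions made for the example.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.sql.SQLContext

// The case class defines the schema; it must live outside the method using toDF().
case class Person(name: String, age: Int)

object TeenagersExample {
  def teenagerNames(sqlContext: SQLContext): Set[String] = {
    import sqlContext.implicits._ // enables .toDF() and the $"column" syntax

    val people = sqlContext.sparkContext
      .parallelize(Seq(Person("Alice", 15), Person("Bob", 34), Person("Carol", 19)))
      .toDF()

    // SQL route: register the DataFrame as a temporary table, then query it.
    people.registerTempTable("people")
    val teenagers = sqlContext.sql(
      "SELECT name FROM people WHERE age >= 13 AND age <= 19")

    // DataFrame route: the same query expressed with Column expressions.
    val sameWithApi = people.filter($"age" >= 13 && $"age" <= 19).select("name")
    sameWithApi.show()

    teenagers.collect().map(_.getString(0)).toSet
  }

  def main(args: Array[String]): Unit = {
    // Local master is an assumption made for this sketch only.
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("TeenagersExample"))
    try println(teenagerNames(new SQLContext(sc)))
    finally sc.stop()
  }
}
```

Both routes go through the same optimizer, so choosing between them is mostly a matter of style and of who writes the query.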

- 45. Components (diagram, DataBricks)
  - Your program: `sc = new SparkContext`, `f = sc.textFile("…")`, `f.filter(…).count()`, ...
  - Spark client (app master): RDD graph, Scheduler, Block tracker, Shuffle tracker
  - Cluster manager: YARN, Mesos Master, Spark Master
  - Spark worker: Task threads, Block manager
  - Storage: CFS, Cassandra, HDFS, …

- 46. Scheduling Process (diagram, DataBricks)
  - RDD Objects: `rdd1.join(rdd2).groupBy(…).filter(…)`; build the operator DAG
  - DAGScheduler: receives the DAG; splits the graph into stages of tasks; submits each stage as ready; agnostic to operators
  - TaskScheduler: receives a TaskSet; launches tasks via the cluster manager; retries failed or straggling tasks; doesn't know about stages; reports "stage failed" back to the DAGScheduler
  - Worker: receives Tasks; threads execute the tasks; the Block manager stores and serves blocks

- 47. DAGScheduler Optimizations
  - Pipelines narrow operations within a stage
  - Picks join algorithms based on partitioning (minimizes shuffles)
  - Reuses previously cached data
  - Figure (DataBricks): RDDs A to G combined through groupBy, map, union and join across Stage 1, Stage 2 and Stage 3, with previously computed partitions highlighted

- 48. RDD Directed Acyclic Graph (DAG)
  - View the lineage with toDebugString
    - `scala> vpnSpark.toDebugString`
    - `res1: String =`
    - `(2) MappedRDD[4] at map at <console>:16`
    - `| MappedRDD[3] at map at <console>:16`
    - `| FilteredRDD[2] at filter at <console>:14`
    - `| hdfs:/sparkdata/sparkQuotes.txt MappedRDD[1] at textFile at <console>:12`
    - `| hdfs:/sparkdata/sparkQuotes.txt HadoopRDD[0] at textFile at <console>:12`
  - The transformations could also be issued as one continuous chain: `val vpnSpark = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt").filter(_.startsWith("VPN")).map(_.split(" ")).map(x => x(1)).filter(_.contains("Spark"))`, followed by `vpnSpark.count()`

- 49. DataFrame Execution
  - DataFrame API calls are transformed into a physical plan by Catalyst
  - Catalyst provides numerous optimisation schemes
    - Join strategy selection through cost model evaluation
    - Operation fusion
    - Predicate pushdown to the DataSource
    - Code generation to reduce virtual function calls (Spark 1.5)
  - The physical execution is carried out as RDDs
  - Benefits are better performance, better use of the data source, and consistent performance across languages

- 50. Showing Multiple Apps
  - Diagram (DataBricks): for each App, a Driver Program holding the SparkContext, a Cluster Manager, and Worker Nodes each running an Executor with a Cache and Tasks
  - Each Spark application runs as a set of processes coordinated by the Spark context object (driver program)
    - Spark context connects to the Cluster Manager (standalone, Mesos/YARN)
    - Spark context acquires executors (JVM instances) on worker nodes
    - Spark context sends tasks to the executors

- 51. 51 Spark Terminology § Context (Connection): – Represents a connection to the Spark cluster. The Application which initiated the context can submit one or several jobs, sequentially or in parallel, batch or interactively, or long running server continuously serving requests. § Driver (Coordinator agent) – The program or process running the Spark context. Responsible for running jobs over the cluster and converting the App into a set of tasks § Job (Query / Query plan): – A piece of logic (code) which will take some input from HDFS (or the local filesystem), perform some computations (transformations and actions) and write some output back. § Stage (Subplan) – Jobs are divided into stages § Tasks (Sub section) – Each stage is made up of tasks. One task per partition. One task is executed on one partition (of data) by one executor § Executor (Sub agent) – The process responsible for executing a task on a worker node § Resilient Distributed Dataset

- 52. 52 Spark Shell & Application Deployment

- 53. 53 Spark’s Scala and Python Shell § Spark comes with two shells – Scala – Python § APIs available for Scala, Python and Java § Appropriate versions for each Spark release § Spark’s native language is Scala, more natural to write Spark applications using Scala. § This presentation will focus on code examples in Scala

- 54. 54 Spark’s Scala and Python Shell § Powerful tool to analyze data interactively § The Scala shell runs on the Java VM – Can leverage existing Java libraries § Scala: – To launch the Scala shell (from Spark home directory): dse spark – To read in a text file: scala> val textFile = sc.textFile("file:///Users/vincentponcet/toto") § Python: – To launch the Python shell (from Spark home directory): dse pyspark – To read in a text file: >>> textFile = sc.textFile("file:///Users/vincentponcet/toto")

- 55. 55 SparkContext in Applications § The main entry point for Spark functionality § Represents the connection to a Spark cluster § Create RDDs, accumulators, and broadcast variables on that cluster § In the Spark shell, the SparkContext, sc, is automatically initialized for you to use § In a Spark program, import some classes and implicit conversions into your program: import org.apache.spark.SparkContext import org.apache.spark.SparkContext._ import org.apache.spark.SparkConf

- 56. 56 A Spark Standalone Application in Scala Import statements SparkConf and SparkContext Transformations and Actions
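The slide shows the application only as an image, so the following is a hedged sketch of what such a standalone application typically looks like, in the spirit of the Spark quick start, with the three parts named on the slide marked in comments. The input path, the helper `countLinesContaining` and the searched letters are assumptions made for the example. The master is deliberately not set in code, on the assumption that it is supplied by spark-submit.

```scala
// Import statements
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.rdd.RDD

object SimpleApp {
  // Transformation (filter) followed by an action (count).
  def countLinesContaining(lines: RDD[String], needle: String): Long =
    lines.filter(line => line.contains(needle)).count()

  def main(args: Array[String]): Unit = {
    // Hypothetical input: first argument, or README.md in the working directory.
    val logFile = if (args.nonEmpty) args(0) else "README.md"

    // SparkConf and SparkContext
    val conf = new SparkConf().setAppName("Simple Application")
    val sc = new SparkContext(conf)

    try {
      // Transformations and actions; cache() because the RDD is scanned twice.
      val logData = sc.textFile(logFile, 2).cache()
      val numAs = countLinesContaining(logData, "a")
      val numBs = countLinesContaining(logData, "b")
      println(s"Lines with a: $numAs, lines with b: $numBs")
    } finally sc.stop()
  }
}
```

Packaged with `sbt package`, this would be launched through spark-submit (or `dse spark-submit` on DSE), which provides the master URL and the Spark classes at runtime.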

- 57. Running Standalone Applications
  - Define the dependencies
    - Scala → simple.sbt
  - Create the typical directory structure with the files
    - Scala: ./simple.sbt, ./src, ./src/main, ./src/main/scala, ./src/main/scala/SimpleApp.scala
  - Create a JAR package containing the application's code
    - Scala: sbt package
  - Use spark-submit to run the program

- 58. Spark Properties
  - Set application properties via the SparkConf object: `val conf = new SparkConf().setMaster("local").setAppName("CountingSheep").set("spark.executor.memory", "1g")`, then `val sc = new SparkContext(conf)`
  - Dynamically setting Spark properties
    - Create the SparkContext with an empty conf: `val sc = new SparkContext(new SparkConf())`
    - Supply the configuration values at runtime: `./bin/spark-submit --name "My app" --master local[4] --conf spark.shuffle.spill=false --conf "spark.executor.extraJavaOptions=-XX:+PrintGCDetails -XX:+PrintGCTimeStamps" myApp.jar`
    - Or set them in conf/spark-defaults.conf

- 59. 59 Spark Configuration § Three locations for configuration: – Spark properties – Environment variables conf/spark-env.sh – Logging log4j.properties § Override default configuration directory (SPARK_HOME/conf) – SPARK_CONF_DIR • spark-defaults.conf • spark-env.sh • log4j.properties • etc.

- 60. 60 Spark Monitoring § Three ways to monitor Spark applications 1. Web UI • Default port 4040 • Available for the duration of the application • Jobs and stages metrics, stage execution plan and tasks timeline 2. Metrics API • Report to a variety of sinks (HTTP, JMX, and CSV) • /conf/metrics.properties

- 62. 62 Spark Streaming § Scalable, high-throughput, fault-tolerant stream processing of live data streams § Write Spark streaming applications like Spark applications § Recovers lost work and operator state (sliding windows) out-of-the- box § Uses HDFS and Zookeeper for high availability § Data sources also include TCP sockets, ZeroMQ or other customized data sources

- 63. Spark Streaming - Internals
  - The input stream goes into Spark Streaming
  - Spark Streaming breaks it up into batches of input data
  - It feeds the batches into the Spark engine for processing
  - The engine generates the final results as a stream of batches
  - DStream (Discretized Stream)
    - Represents a continuous stream of data created from the input streams
    - Internally represented as a sequence of RDDs

- 64. 64 Spark Streaming - Getting Started § Count the number of words coming in from the TCP socket § Import the Spark Streaming classes import org.apache.spark._ import org.apache.spark.streaming._ import org.apache.spark.streaming.StreamingContext._ § Create the StreamingContext object val conf = new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount") val ssc = new StreamingContext(conf, Seconds(1)) § Create a DStream val lines = ssc.socketTextStream("localhost", 9999) § Split the lines into words val words = lines.flatMap(_.split(" ")) § Count the words val pairs = words.map(word => (word, 1)) val wordCounts = pairs.reduceByKey(_ + _) § Print to the console wordCounts.print()

- 65. 65 Spark Streaming - Continued § No real processing happens until you tell it ssc.start() // Start the computation ssc.awaitTermination() // Wait for the computation to terminate § Code and application can be found in the NetworkWordCount example § To run the example: – Invoke netcat to start the data stream – In a different terminal, run the application ./bin/run-example streaming.NetworkWordCount localhost 9999

- 66. 66 SparkMLlib § SparkMLlib for machine learning library § Since Spark 0.8 § Provides common algorithms and utilities • Classification • Regression • Clustering • Collaborative filtering • Dimensionality reduction § Leverages in-memory cache of Spark to speed up iteration processing

- 67. SparkMLlib - Getting Started
  - Use k-means clustering on a set of latitudes and longitudes
  - Import the SparkMLlib classes: `import org.apache.spark.mllib.clustering.KMeans` and `import org.apache.spark.mllib.linalg.Vectors`
  - Create the SparkContext object: `val conf = new SparkConf().setAppName("KMeans")` and `val sc = new SparkContext(conf)`
  - Create a data RDD: `val taxifile = sc.textFile("user/spark/sparkdata/nyctaxisub/*")`
  - Create Vectors as input to the algorithm: `val taxi = taxifile.map { line => Vectors.dense(line.split(",").slice(3, 5).map(_.toDouble)) }`
  - Run the k-means algorithm with 3 clusters and 10 iterations: `val model = KMeans.train(taxi, 3, 10)` and `val clusterCenters = model.clusterCenters.map(_.toArray)`
  - Print to the console: `clusterCenters.foreach(lines => println(lines(0), lines(1)))`

- 68. 68 SparkML § SparkML provides an API to build ML pipeline (since Spark 1.3) § Similar to Python scikit-learn § SparkML provides abstraction for all steps of an ML workflow Generic ML Workflow Real Life ML Workflow § Transformer: A Transformer is an algorithm which can transform one DataFrame into another DataFrame. E.g., an ML model is a Transformer which transforms an RDD with features into an RDD with predictions. § Estimator: An Estimator is an algorithm which can be fit on a DataFrame to produce a Transformer. E.g., a learning algorithm is an Estimator which trains on a dataset and produces a model. § Pipeline: A Pipeline chains multiple Transformers and Estimators together to specify an ML workflow. § Param: All Transformers and Estimators now share a common API for specifying parameters. Xebia HUG France 06/2015

- 69. 69 Spark & DataStax Enterprise Integration

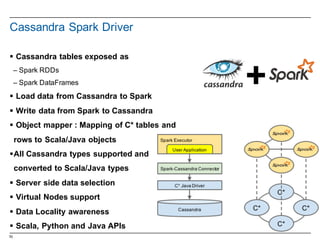

- 70. 70 Cassandra Spark Driver
  § Cassandra tables exposed as
    – Spark RDDs
    – Spark DataFrames
  § Load data from Cassandra to Spark
  § Write data from Spark to Cassandra
  § Object mapper: mapping of C* tables and rows to Scala/Java objects
  § All Cassandra types supported and converted to Scala/Java types
  § Server-side data selection
  § Virtual nodes support
  § Data locality awareness
  § Scala, Python and Java APIs

- 71. 71 DSE Spark Interactive Shell
      $ dse spark
      ...
      Spark context available as sc.
      HiveSQLContext available as hc.
      CassandraSQLContext available as csc.
      scala> sc.cassandraTable("test", "kv")
      res5: com.datastax.spark.connector.rdd.CassandraRDD[com.datastax.spark.connector.CassandraRow] = CassandraRDD[2] at RDD at CassandraRDD.scala:48
      scala> sc.cassandraTable("test", "kv").collect
      res6: Array[com.datastax.spark.connector.CassandraRow] = Array(CassandraRow{k: 1, v: foo})
      cqlsh> select * from test.kv;
       k | v
      ---+-----
       1 | foo
      (1 rows)

- 72. 72 Connecting to Cassandra
      import org.apache.spark.{SparkConf, SparkContext}
      // Import Cassandra-specific functions on SparkContext and RDD objects
      import com.datastax.spark.connector._
      // Spark connection options
      val conf = new SparkConf(true)
        .setMaster("spark://192.168.123.10:7077")
        .setAppName("cassandra-demo")
        .set("spark.cassandra.connection.host", "192.168.123.10") // initial contact
        .set("spark.cassandra.auth.username", "cassandra")
        .set("spark.cassandra.auth.password", "cassandra")
      val sc = new SparkContext(conf)

- 73. 73 Reading Data as RDD
      val table = sc
        .cassandraTable[CassandraRow]("db", "tweets")
        .select("user_name", "message")
        .where("user_name = ?", "ewa")
  § CassandraRow: row representation
  § "db": keyspace; "tweets": table
  § select and where: server-side column and row selection

- 74. 74 Writing RDD to Cassandra
      CREATE TABLE test.words(word TEXT PRIMARY KEY, count INT);
      val collection = sc.parallelize(Seq(("foo", 2), ("bar", 5)))
      collection.saveToCassandra("test", "words", SomeColumns("word", "count"))
      cqlsh:test> select * from words;
       word | count
      ------+-------
        bar |     5
        foo |     2
      (2 rows)

- 75. 75 Mapping Rows to Objects
      CREATE TABLE test.cars (
        id text PRIMARY KEY,
        model text,
        fuel_type text,
        year int
      );
      case class Vehicle(
        id: String,
        model: String,
        fuelType: String,
        year: Int
      )
      sc.cassandraTable[Vehicle]("test", "cars").collect
      // Array(Vehicle(KF334L, Ford Mondeo, Petrol, 2009),
      //       Vehicle(MT8787, Hyundai x35, Diesel, 2011))
  • Mapping rows to Scala case classes
  • CQL underscore-case columns are mapped to Scala camel-case properties
  • Custom mapping functions (see docs)
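
The underscore-to-camel-case convention (fuel_type becomes fuelType) can be illustrated with a small plain-Scala sketch. This is not the connector's implementation: `ColumnNames`, `toCamelCase` and `toVehicle` are hypothetical helpers, and a row is modelled here as a simple Map rather than a CassandraRow.

```scala
object ColumnNames {
  case class Vehicle(id: String, model: String, fuelType: String, year: Int)

  // Converts a CQL-style underscore name to a Scala-style camel case name.
  def toCamelCase(column: String): String = {
    val parts = column.split("_").filter(_.nonEmpty)
    if (parts.isEmpty) "" else parts.head + parts.tail.map(_.capitalize).mkString
  }

  // Builds a Vehicle from a row keyed by CQL column names
  // (a Map stands in for a Cassandra row in this sketch).
  def toVehicle(row: Map[String, Any]): Vehicle = {
    val byProperty = row.map { case (column, value) => toCamelCase(column) -> value }
    Vehicle(
      byProperty("id").toString,
      byProperty("model").toString,
      byProperty("fuelType").toString,
      byProperty("year").asInstanceOf[Int]
    )
  }
}
```

For example, `ColumnNames.toCamelCase("fuel_type")` returns `"fuelType"`, so the `fuel_type` column lines up with the `fuelType` field of the case class.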

- 76. 76 Type Mapping

  | CQL Type | Scala Type |
  | --- | --- |
  | ascii | String |
  | bigint | Long |
  | boolean | Boolean |
  | counter | Long |
  | decimal | BigDecimal, java.math.BigDecimal |
  | double | Double |
  | float | Float |
  | inet | java.net.InetAddress |
  | int | Int |
  | list | Vector, List, Iterable, Seq, IndexedSeq, java.util.List |
  | map | Map, TreeMap, java.util.HashMap |
  | set | Set, TreeSet, java.util.HashSet |
  | text, varchar | String |
  | timestamp | Long, java.util.Date, java.sql.Date, org.joda.time.DateTime |
  | timeuuid | java.util.UUID |
  | uuid | java.util.UUID |
  | varint | BigInt, java.math.BigInteger |

  Nullable values map to Option.

- 77. 77 Usage of Spark DataFrames
  § Mapping of Cassandra keyspaces and tables
  § Read and write on Cassandra tables
      import org.apache.spark.sql.SaveMode
      import org.apache.spark.sql.functions._ // for floor
      import com.datastax.spark.connector._
      // Connect to the Spark cluster
      val conf = new SparkConf(true)...
      val sc = new SparkContext(conf)
      // Create the SQL context (a HiveContext)
      val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)
      // Cassandra table as a Spark DataFrame
      val df = sqlContext.read.format("org.apache.spark.sql.cassandra")
        .options(Map("keyspace" -> "music", "table" -> "tracks_by_album"))
        .load()
      // Some DataFrame transformations
      val tmp = df.groupBy((floor(df("album_year") / 10) * 10).cast("int").alias("decade")).count
      val count_by_decade = tmp.select(tmp("decade"), tmp("count").alias("album_count"))
      count_by_decade.show
      // Save the resulting DataFrame into a Cassandra table
      count_by_decade.write.format("org.apache.spark.sql.cassandra")
        .options(Map("table" -> "albums_by_decade", "keyspace" -> "steve"))
        .mode(SaveMode.Overwrite).save()

- 78. 78 Usage of Spark SQL
  § Mapping of Cassandra keyspaces and tables
  § Read and write on Cassandra tables
      import com.datastax.spark.connector._
      // Connect to the Spark cluster
      val conf = new SparkConf(true)...
      val sc = new SparkContext(conf)
      // Create the SQL context (a HiveContext)
      val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)
      // Create the SparkSQL alias to the Cassandra table
      sqlContext.sql("""CREATE TEMPORARY TABLE tmp_tracks_by_album
        USING org.apache.spark.sql.cassandra
        OPTIONS (keyspace "music", table "tracks_by_album")""")
      // Execute the SparkSQL query. The result of the query is a DataFrame
      val track_count_by_year = sqlContext.sql(
        "select 'dummy' as dummy, album_year as year, count(*) as track_count from tmp_tracks_by_album group by album_year")
      // Register the DataFrame as a SparkSQL alias
      track_count_by_year.registerTempTable("tmp_track_count_by_year")
      // Save the DataFrame using SparkSQL "insert into"
      sqlContext.sql("insert into table music.tracks_by_year select dummy, track_count, year from tmp_track_count_by_year")

- 79. 79 DataStax Enterprise Max - Spark Special Features
  § Easy setup and config
    – No need to set up a separate Spark cluster
    – No need to tweak classpaths or config files
  § High availability of Spark Master
  § Enterprise security
    – Password / Kerberos / LDAP authentication
    – SSL for all Spark to Cassandra connections
  § Included support of Apache Spark in DSE Max subscription

- 80. 80 DataStax Enterprise - High Availability
  § All nodes are Spark Workers
  § By default resilient to Worker failures
  § First Spark node promoted as Spark Master (state saved in Cassandra, no SPOF)
  § Standby Master promoted on failure (the new Spark Master reconnects to the Workers and the driver app and continues the job)
  § No need for ZooKeeper, unlike
    – Spark Standalone
    – Mesos
    – YARN

- 81. 81 DataStax Enterprise (architecture diagram)
  § OpsCenter services: monitoring, operations
  § Workloads: operational application, real-time search, real-time analytics, batch analytics, analytics transformations
  § Cassandra cluster: nodes ring, column family storage
  § High performance, always available, massive scalability
  § Advanced security, in-memory support

- 82. 82 Demos

- 83. 83 Demos
  § RDD & DataFrames examples in Scala and Python
  § https://github.com/datastax-training/justenoughspark
    – Scala
      • JustEnoughScalaForSpark
      • MoreScalaExamples
    – Scala Spark
      • JustEnoughtSpark
    – Spark Cassandra connector Scala
      • Move Data withRDD
      • DataFrames Scala
      • Move it with SparkSQL
    – Spark Cassandra connector Python
      • DataFrames Python
      • Move it with SparkSQL Python

![14

Resilient Distributed Datasets: Properties

§ Immutable

§ Two types of operations

– Transformations ~ DDL (Create View V2 as…)

• val rddNumbers = sc.parallelize(1 to 10): Numbers from 1 to 10

• val rddNumbers2 = rddNumbers.map (x => x+1): Numbers from 2 to 11

• The lineage describing how to obtain rddNumbers2 from rddNumbers is recorded

• It’s a Directed Acyclic Graph (DAG)

• No actual data processing takes place ⇒ lazy evaluation

– Actions ~ DML (Select * From V2…)

• rddNumbers2.collect(): Array [2, 3, 4, 5, 6, 7, 8, 9, 10, 11]

• Performs transformations and action

• Returns a value (or writes to a file)

§ Fault tolerance

– If data in memory is lost it will be recreated from lineage

§ Caching, persistence (memory, spilling, disk) and check-pointing](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-14-320.jpg)
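
The lazy-transformation / eager-action split described on this slide can be imitated with a plain Scala collection view. This is an analogy rather than Spark code: a `view` stands in for an RDD, `toList` stands in for an action such as collect(), and the `LazyDemo` object and its counter are assumptions introduced for illustration.

```scala
object LazyDemo {
  // Returns (evaluations before the action, the result,
  //          evaluations after one action, evaluations after a second action).
  def run(): (Int, List[Int], Int, Int) = {
    var evaluations = 0 // counts how often the mapping function actually runs

    // "Transformation": only describes the computation, nothing runs yet.
    val numbers = (1 to 10).view
    val plusOne = numbers.map { x => evaluations += 1; x + 1 }
    val before = evaluations

    // "Action": forces the computation and returns a value.
    val result = plusOne.toList
    val afterFirst = evaluations

    // A second action recomputes everything, because nothing was cached.
    val again = plusOne.toList
    val afterSecond = evaluations

    (before, result, afterFirst, afterSecond)
  }
}
```

The recomputation on the second action is the behaviour that caching and persistence are meant to avoid when an RDD is reused.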

![15

RDD Transformations

§ Transformations are lazy evaluations

§ Returns a transformed RDD

§ Pair RDD (K,V) functions for MapReduce style transformations

Transformation: Meaning

map(func): Return a new dataset formed by passing each element of the source through a function func.

filter(func): Return a new dataset formed by selecting those elements of the source on which func returns true.

flatMap(func): Similar to map, but each input item can be mapped to 0 or more output items, so func should return a Seq rather than a single item.

Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.RDD

join(otherDataset, [numTasks]): When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key.

reduceByKey(func): When called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduce function func.

sortByKey([ascending], [numTasks]): When called on a dataset of (K, V) pairs where K implements Ordered, returns a dataset of (K, V) pairs sorted by keys in ascending or descending order.

combineByKey[C](createCombiner, mergeValue, mergeCombiners): Generic function to combine the elements for each key using a custom set of aggregation functions. Turns an RDD[(K, V)] into a result of type RDD[(K, C)], for a "combined type" C. createCombiner: (V) ⇒ C, mergeValue: (C, V) ⇒ C, mergeCombiners: (C, C) ⇒ C.

Full documentation at http://spark.apache.org/docs/1.4.1/api/scala/index.html#org.apache.spark.rdd.PairRDDFunctions](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-15-320.jpg)
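
The three functions taken by combineByKey are easier to follow on a small local example. The sketch below imitates the semantics on plain Scala collections, with a `Seq[Seq[(K, V)]]` standing in for the partitions of a pair RDD; `CombineByKeySketch` and its method signatures are illustrative assumptions, not Spark's API.

```scala
object CombineByKeySketch {
  def combineByKey[K, V, C](partitions: Seq[Seq[(K, V)]])(
      createCombiner: V => C,
      mergeValue: (C, V) => C,
      mergeCombiners: (C, C) => C): Map[K, C] = {
    // Step 1: inside each partition, fold the values of every key into a combiner.
    val perPartition = partitions.map { part =>
      part.foldLeft(Map.empty[K, C]) { case (acc, (k, v)) =>
        val combined = acc.get(k) match {
          case Some(c) => mergeValue(c, v)   // key already seen in this partition
          case None    => createCombiner(v)  // first value for this key
        }
        acc.updated(k, combined)
      }
    }
    // Step 2: merge the per-partition combiners of each key.
    perPartition.foldLeft(Map.empty[K, C]) { (acc, partial) =>
      partial.foldLeft(acc) { case (merged, (k, c)) =>
        val combined = merged.get(k) match {
          case Some(prev) => mergeCombiners(prev, c)
          case None       => c
        }
        merged.updated(k, combined)
      }
    }
  }

  // reduceByKey is the special case where the combined type C equals V.
  def reduceByKey[K, V](partitions: Seq[Seq[(K, V)]])(func: (V, V) => V): Map[K, V] =
    combineByKey[K, V, V](partitions)(v => v, func, func)

  // Example: per-key average, using (sum, count) as the combined type.
  def averageByKey(partitions: Seq[Seq[(String, Int)]]): Map[String, Double] =
    combineByKey[String, Int, (Int, Int)](partitions)(
      v => (v, 1),
      (c, v) => (c._1 + v, c._2 + 1),
      (x, y) => (x._1 + y._1, x._2 + y._2)
    ).map { case (k, (sum, count)) => k -> sum.toDouble / count }
}
```

The average cannot be written with reduceByKey alone, because the intermediate (sum, count) pair has a different type from the input values; that is the case combineByKey is designed for.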

![28

Functional methods on collections

Method on Seq[T] Explanation

map(f: T => U): Seq[U] Each element is result of f

flatMap(f: T => Seq[U]): Seq[U] One to many map

filter(f: T => Boolean): Seq[T] Keep elements passing f

exists(f: T => Boolean): Boolean True if one element passes f

forall(f: T => Boolean): Boolean True if all elements pass

reduce(f: (T, T) => T): T Merge elements using f

groupBy(f: T => K): Map[K, List[T]] Group elements by f

sortBy(f: T => K): Seq[T] Sort elements

…..

There are many more methods on Scala collections; search for "Scala Seq" or see http://www.scala-lang.org/api/2.10.4/index.html#scala.collection.Seq

Scala Crash Course, Holden Karau, DataBricks](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-28-320.jpg)
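
As a quick illustration of the methods in the table above, the following plain-Scala snippet applies each of them to a small list of words. The `SeqMethodsDemo` object and the sample data are made up for this example.

```scala
object SeqMethodsDemo {
  val words = Seq("spark", "scala", "cassandra", "sql")

  val lengths    = words.map(_.length)                    // Seq(5, 5, 9, 3)
  val firstLast  = words.flatMap(w => Seq(w.head, w.last)) // two characters per word
  val startsS    = words.filter(_.startsWith("s"))        // Seq("spark", "scala", "sql")
  val anyLong    = words.exists(_.length > 8)             // true ("cassandra")
  val allLong    = words.forall(_.length > 3)             // false ("sql" has 3 letters)
  val totalChars = lengths.reduce(_ + _)                  // 22
  val byInitial  = words.groupBy(_.head)                  // Map('s' -> ..., 'c' -> ...)
  val byLength   = words.sortBy(_.length)                 // Seq("sql", "spark", "scala", "cassandra")
}
```

The same method names (map, flatMap, filter, reduce, groupBy, sortBy) reappear on Spark RDDs, which is why familiarity with the Scala collections API carries over directly.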

![48

RDD Directed Acyclic Graph (DAG)

§ View the lineage with toDebugString

§ The chained calls could also be written on a single line

scala> vpnSpark.toDebugString

res1: String =

(2) MappedRDD[4] at map at <console>:16

| MappedRDD[3] at map at <console>:16

| FilteredRDD[2] at filter at <console>:14

| hdfs:/sparkdata/sparkQuotes.txt MappedRDD[1] at textFile at <console>:12

| hdfs:/sparkdata/sparkQuotes.txt HadoopRDD[0] at textFile at <console>:12

val vpnSpark = sc.textFile("hdfs:/sparkdata/sparkQuotes.txt").

filter(_.startsWith("VPN")).

map(_.split(" ")).

map(x => x(1)).

filter(_.contains("Spark"))

vpnSpark.count()](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-48-320.jpg)

![58

Spark Properties

§ Set application properties via the SparkConf object

val conf = new SparkConf()

.setMaster("local")

.setAppName("CountingSheep")

.set("spark.executor.memory", "1g")

val sc = new SparkContext(conf)

§ Dynamically setting Spark properties

– SparkContext with an empty conf

val sc = new SparkContext(new SparkConf())

– Supply the configuration values during runtime

./bin/spark-submit --name "My app" --master local[4] --conf spark.shuffle.spill=false --conf "spark.executor.extraJavaOptions=-XX:+PrintGCDetails -XX:+PrintGCTimeStamps" myApp.jar

– conf/spark-defaults.conf](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-58-320.jpg)
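
When the same property is set in more than one of these places, Spark resolves the conflict by precedence: values set directly on the SparkConf win over spark-submit flags, which in turn win over conf/spark-defaults.conf. The plain-Scala sketch below models only that merge order; `ConfPrecedence` and `resolve` are hypothetical names, and the maps stand in for the three configuration sources.

```scala
object ConfPrecedence {
  // With ++, entries from the right-hand map override the left-hand one,
  // so the sources are listed from lowest to highest precedence.
  def resolve(defaultsFile: Map[String, String],
              submitFlags: Map[String, String],
              sparkConf: Map[String, String]): Map[String, String] =
    defaultsFile ++ submitFlags ++ sparkConf
}
```

For instance, if spark-defaults.conf sets spark.executor.memory to 512m and the application code sets it to 1g on the SparkConf, the effective value in this model is 1g.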

![64

Spark Streaming - Getting Started

§ Count the number of words coming in from the TCP socket

§ Import the Spark Streaming classes

import org.apache.spark._

import org.apache.spark.streaming._

import org.apache.spark.streaming.StreamingContext._

§ Create the StreamingContext object

val conf =

new SparkConf().setMaster("local[2]").setAppName("NetworkWordCount")

val ssc = new StreamingContext(conf, Seconds(1))

§ Create a DStream

val lines = ssc.socketTextStream("localhost", 9999)

§ Split the lines into words

val words = lines.flatMap(_.split(" "))

§ Count the words

val pairs = words.map(word => (word, 1))

val wordCounts = pairs.reduceByKey(_ + _)

§ Print to the console

wordCounts.print()](https://image.slidesharecdn.com/sparkdseenablementin4hoursvp201601252350-160126143807/85/Apache-Spark-and-DataStax-Enablement-64-320.jpg)
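
Spark Streaming applies the same flatMap / map / reduceByKey steps to each micro-batch of lines received during the batch interval (one second in this example). As a rough sketch of what is computed for a single batch, here is the equivalent logic on plain Scala collections; `BatchWordCount` and `countWords` are illustrative names, and no streaming context is involved.

```scala
object BatchWordCount {
  // Word count for one batch of lines, mirroring the DStream operations:
  // split into words, pair each word with 1, then sum the counts per word.
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" ").toSeq)
      .map(word => (word, 1))
      .groupBy(_._1)
      .map { case (word, pairs) => word -> pairs.map(_._2).sum }

  // Each batch is counted independently of the others.
  def countBatches(batches: Seq[Seq[String]]): Seq[Map[String, Int]] =
    batches.map(countWords)
}
```

Because each batch is counted on its own, a word that appears in two different batches is reported twice, once per batch, rather than as a running total.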



