Spark Core


When learning Apache Spark, where should you begin, and what are the key fundamentals? They are Resilient Distributed Datasets (RDDs), the Spark driver and SparkContext, transformations, and actions.



![Spark RDDs, Transformations, Actions Diagram](https://image.slidesharecdn.com/spark-core-presentation-pdf-160122104822/85/Spark-Core-10-320.jpg)

Diagram (slide dated Friday, January 22, 2016): data is loaded from an external source (example: `textFile`) into RDDs; transformations produce new RDDs, and actions (examples: `count`, `collect`) return output values such as `5` or `['a', 'b', 'c']`.
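To make the diagram concrete, here is a minimal single-process sketch of the RDD workflow in plain Python. `ToyContext` and `ToyRDD` are hypothetical names invented for illustration, not PySpark; they only mimic the roles of the driver's SparkContext and of an RDD. Transformations (`map`, `filter`) return a new dataset without doing any work, while actions (`count`, `collect`) trigger the computation. The even split into partitions is a simplification, not how Spark itself slices data.

```python
# Toy, single-process model of the RDD workflow: load -> transform -> act.
# ToyContext/ToyRDD are hypothetical illustration classes, NOT the PySpark API.


class ToyRDD:
    """An immutable, lazily evaluated collection split into partitions."""

    def __init__(self, compute_partitions):
        # A zero-argument function returning a list of partitions (lists).
        # It is only called when an action runs.
        self._compute = compute_partitions

    # Transformations: build a new ToyRDD that remembers how to compute itself.
    def map(self, f):
        return ToyRDD(lambda: [[f(x) for x in part] for part in self._compute()])

    def filter(self, pred):
        return ToyRDD(lambda: [[x for x in part if pred(x)] for part in self._compute()])

    # Actions: run the whole chain and return a plain value to the caller.
    def collect(self):
        return [x for part in self._compute() for x in part]

    def count(self):
        return sum(len(part) for part in self._compute())


class ToyContext:
    """Plays the role of the driver's entry point (SparkContext in Spark)."""

    def parallelize(self, data, num_partitions=2):
        items = list(data)
        size = max(1, -(-len(items) // num_partitions))  # ceiling division
        return ToyRDD(lambda: [items[i:i + size] for i in range(0, len(items), size)])

    def text_file(self, path):
        # "Load from external source": one element per line of a text file.
        def load():
            with open(path, encoding="utf-8") as fh:
                return [[line.rstrip("\n") for line in fh]]
        return ToyRDD(load)


sc = ToyContext()
letters = sc.parallelize(["a", "b", "c", "dd", "eee"], num_partitions=2)
singles = letters.filter(lambda s: len(s) == 1)  # lazy: nothing has run yet
print(letters.count())    # action -> 5
print(singles.collect())  # action -> ['a', 'b', 'c']
```

In PySpark the corresponding calls are `sc.textFile(path)`, `rdd.map(...)`, `rdd.filter(...)`, `rdd.count()` and `rdd.collect()`; the real versions run partitions as tasks on executors instead of evaluating them in one process.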


Recommended

An Overview of Apache Spark

Yasoda Jayaweera: This lecture was intended to introduce Apache Spark's features and functionality, and the importance of Spark as a distributed data processing framework compared with Hadoop MapReduce. The target audience was MSc students with beginner-to-intermediate programming skills.

Introduction to apache spark

UserReport: Spark is a general-purpose cluster computing framework that provides high-level APIs and is faster than Hadoop for iterative jobs and interactive queries. It keeps cached data in memory across cluster nodes for faster performance. Spark supports various higher-level tools, including SQL, machine learning, graph processing, and streaming.
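The benefit of caching for iterative jobs can be sketched in plain Python. `LazyDataset`, `expensive_load` and `run_iterations` are hypothetical names, not Spark APIs; the counter simply records how often the "load" step runs. In PySpark the analogous call is `rdd.cache()` (or `persist()`), which, like this sketch, only stores the data the first time an action computes it.

```python
# Toy illustration of why caching helps iterative jobs.
# All names are hypothetical; this is not the Spark API.

load_calls = 0


def expensive_load():
    """Pretend to read and parse a dataset; counts how often it runs."""
    global load_calls
    load_calls += 1
    return [x * 0.5 for x in range(1_000)]


class LazyDataset:
    def __init__(self, compute):
        self._compute = compute
        self._cached = None
        self._use_cache = False

    def cache(self):
        # Only marks the dataset; nothing is computed until first use.
        self._use_cache = True
        return self

    def materialize(self):
        if self._use_cache:
            if self._cached is None:
                self._cached = self._compute()
            return self._cached
        return self._compute()  # uncached: recompute from the source every time


def run_iterations(ds, iterations=10):
    total = 0.0
    for _ in range(iterations):
        total += sum(ds.materialize())  # each iteration acts like an "action"
    return total


run_iterations(LazyDataset(expensive_load))
uncached_loads = load_calls

load_calls = 0
run_iterations(LazyDataset(expensive_load).cache())
cached_loads = load_calls

print(uncached_loads, cached_loads)  # 10 1
```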

Introduction to Apache Spark

datamantra: Apache Spark is a fast, general engine for large-scale data processing. It provides a unified analytics engine for batch, interactive, and stream processing using an in-memory abstraction called resilient distributed datasets (RDDs). Spark's speed comes from its ability to run computations directly on data stored in cluster memory and to optimize performance through caching. It also integrates well with other big data technologies like HDFS, Hive, and HBase. Many large companies use Spark for its speed, ease of use, and support for multiple workloads and languages.

Introduction to Apache Spark

Juan Pedro Moreno: Introduction to Apache Spark workshop at Lambda World 2015, held in Cádiz on October 23rd and 24th, 2015. Speakers: @fperezp and @juanpedromoreno

GitHub repo: https://github.com/47deg/spark-workshop

Apache Spark Briefing

Thomas W. Dinsmore: Apache Spark is rapidly emerging as the prime platform for advanced analytics in Hadoop. This briefing is updated to reflect news and announcements as of July 2014.

Apache spark - History and market overview

Martin Zapletal: This document provides a history and market overview of Apache Spark. It discusses the motivation for distributed data processing due to increasing data volumes, velocities and varieties. It then covers brief histories of Google File System, MapReduce, BigTable, and other technologies. Hadoop and MapReduce are explained. Apache Spark is introduced as a faster alternative to MapReduce that keeps data in memory. Competitors like Flink, Tez and Storm are also mentioned.

Introduction to apache spark

Muktadiur Rahman: My presentation at the Java User Group BD Meetup #5.0 (JUGBD#5.0).

Apache Spark™ is a fast and general engine for large-scale data processing. Run programs up to 100x faster than Hadoop MapReduce in memory, or 10x faster on disk.

Big Data Processing with Apache Spark 2014

mahchiev: This document provides an overview of Apache Spark, a framework for large-scale data processing. It discusses what big data is, the history and advantages of Spark, and Spark's execution model. Key concepts explained include Resilient Distributed Datasets (RDDs), transformations, actions, and MapReduce algorithms like word count. Examples are provided to illustrate Spark's use of RDDs and how it can improve on Hadoop MapReduce.
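Word count, mentioned in this summary, is the standard first Spark program. Below is a plain-Python sketch that mirrors its three steps (flatMap, map, reduceByKey) on a single machine; the function name `word_count` is hypothetical. The PySpark form in the comment uses real RDD methods but is not taken from the presentation itself.

```python
# Word count in plain Python, structured like the classic Spark pipeline.
# Single-machine sketch, not PySpark. The usual PySpark form is:
#   counts = (sc.textFile(path)
#               .flatMap(lambda line: line.split())
#               .map(lambda word: (word, 1))
#               .reduceByKey(operator.add))
from collections import defaultdict
from itertools import chain


def word_count(lines):
    # flatMap: one line -> many words
    words = chain.from_iterable(line.split() for line in lines)
    # map: word -> (word, 1)
    pairs = ((word, 1) for word in words)
    # reduceByKey: sum the 1s for each distinct word
    counts = defaultdict(int)
    for word, one in pairs:
        counts[word] += one
    return dict(counts)


print(word_count(["to be or", "not to be"]))
```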

Introduction to Apache Spark

Samy Dindane: Apache Spark is a fast distributed data processing engine that runs in memory. It can be used with Java, Scala, Python and R. Spark uses resilient distributed datasets (RDDs) as its main data structure. RDDs are immutable and partitioned collections of elements that allow transformations like map and filter. Spark is 10-100x faster than Hadoop for iterative algorithms and can be used for tasks like ETL, machine learning, and streaming.
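What "immutable and partitioned" means for `map` and `filter` can be shown with a small plain-Python sketch. Partitions are tuples so they cannot be changed in place, and each transformation builds new partitions one-to-one from the old ones. The helper names and the round-robin split are assumptions for illustration; Spark's real partitioning depends on the data source or the partitioner in use.

```python
# Sketch of an immutable, partitioned collection (hypothetical helpers).
from typing import Callable, Tuple

Partitions = Tuple[tuple, ...]


def partition(data, num_partitions: int) -> Partitions:
    """Round-robin split of a list into num_partitions immutable tuples."""
    return tuple(tuple(data[i::num_partitions]) for i in range(num_partitions))


def map_partitions(parts: Partitions, f: Callable) -> Partitions:
    # Narrow transformation: each output partition depends on exactly one
    # input partition, so partitions could be processed on different workers.
    return tuple(tuple(f(x) for x in p) for p in parts)


def filter_partitions(parts: Partitions, pred: Callable) -> Partitions:
    return tuple(tuple(x for x in p if pred(x)) for p in parts)


nums = partition(list(range(10)), 3)
squares = map_partitions(nums, lambda x: x * x)
even_squares = filter_partitions(squares, lambda x: x % 2 == 0)

print(nums)          # the original partitions are left unchanged
print(even_squares)
```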

Spark as a Platform to Support Multi-Tenancy and Many Kinds of Data Applicati...

Spark Summit: This document summarizes Uber's use of Spark as a data platform to support multi-tenancy and various data applications. Key points include:

- Uber uses Spark on YARN for resource management and isolation between teams/jobs. Parquet is used as the columnar file format for performance and schema support.

- Challenges include sharing infrastructure between many teams with different backgrounds and use cases. Spark provides a common platform.

- An Uber Development Kit (UDK) is used to help users get Spark jobs running quickly on Uber's infrastructure, with templates, defaults, and APIs for common tasks.

Introduction to apache spark

Aakashdata: This presentation gives an overview of Spark in big data. It starts with an introduction to Apache Spark programming, then covers Spark's history and why Spark is needed. After that it covers the fundamental Spark components, Spark's core abstraction (the RDD), and, for more detailed insight, Spark's features, limitations, and use cases.

Spark Streaming and MLlib - Hyderabad Spark Group

Phaneendra Chiruvella:

- A brief introduction to Spark Core

- Introduction to Spark Streaming

- A Demo of Streaming that evaluates the top hashtags being used

- Introduction to Spark MLlib

- A Demo of MLlib by building a simple movie recommendation engine

Apache spark on Hadoop Yarn Resource Manager

haridasnss: How to configure Spark in an Apache Hadoop environment, and why you would do so rather than use the standalone cluster manager.

The slides also include a Docker-based demo for running Hadoop and Spark on your own laptop. See the demo code and other documentation here: https://github.com/haridas/hadoop-env

Building end to end streaming application on Spark

datamantra: This document discusses building a real-time streaming application on Spark to analyze sensor data. It describes collecting data from servers through Flume into Kafka and processing it using Spark Streaming to generate analytics stored in Cassandra. The stages involve using files, then Kafka, and finally Cassandra for input/output. Testing streaming applications and redesigning for testability is also covered.

Apache Spark Fundamentals

Zahra Eskandari: This document provides an overview of the Apache Spark framework. It covers Spark fundamentals, including the Spark execution model using Resilient Distributed Datasets (RDDs), basic Spark programming, and common Spark libraries and use cases. Key topics include how Spark improves on MapReduce by operating in memory and by supporting general execution graphs through its directed acyclic graph (DAG) execution model. The document also reviews Spark installation and provides examples of basic Spark programs in Scala.
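In the DAG execution model, a job is split into stages at shuffle boundaries: narrow operations such as `map` and `filter` are pipelined within one stage, while a wide operation such as `reduceByKey` needs data from every upstream partition and so starts a new stage. The sketch below applies that rule to a straight chain of operation names. The `WIDE_OPS` set and `split_into_stages` are hypothetical simplifications: real Spark works on a graph of RDD dependencies, and some of these operations can skip the shuffle when the data is already suitably partitioned.

```python
# Cut a linear chain of RDD operations into stages at shuffle boundaries.
# Hypothetical simplification: real Spark inspects RDD dependencies in a DAG.
WIDE_OPS = {"reduceByKey", "groupByKey", "join", "distinct", "repartition"}


def split_into_stages(ops):
    stages = [[]]
    for op in ops:
        if op in WIDE_OPS and stages[-1]:
            # A wide op reads shuffled data from all upstream partitions,
            # so it cannot be pipelined with the ops before it.
            stages.append([])
        stages[-1].append(op)
    return [stage for stage in stages if stage]


job = ["textFile", "flatMap", "map", "reduceByKey", "filter"]
for i, stage in enumerate(split_into_stages(job)):
    print(f"stage {i}: {' -> '.join(stage)}")
```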

Lambda architecture: from zero to One

Serg Masyutin: The story of how one project's architecture evolved from zero to a Lambda Architecture. It also describes how the cluster was scaled once the architecture was set up.

Includes performance charts after every architecture change.

Spark - The Ultimate Scala Collections by Martin Odersky

Spark Summit: Spark is a domain-specific language for working with collections that is implemented in Scala and runs on a cluster. While similar to Scala collections, Spark differs in that it is lazy and supports additional functionality for paired data. Scala can learn from Spark by adding views to make laziness clearer, caching for persistence, and pairwise operations. Types are important for Spark as they prevent logic errors and help with programming complex functional operations across a cluster.
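The "additional functionality for paired data" refers to operations on (key, value) pairs. Here is a plain-Python sketch of three of them, with semantics modeled on Spark's `groupByKey`, `reduceByKey` and inner `join`. The snake_case functions are hypothetical single-machine stand-ins, and sorting by key is added only to make the output deterministic.

```python
# Single-machine stand-ins for three pair-RDD operations (hypothetical names).
from collections import defaultdict
from functools import reduce


def group_by_key(pairs):
    groups = defaultdict(list)
    for k, v in pairs:
        groups[k].append(v)
    return sorted(groups.items())


def reduce_by_key(pairs, f):
    return [(k, reduce(f, vs)) for k, vs in group_by_key(pairs)]


def inner_join(left, right):
    # Every combination of left and right values that share a key.
    right_groups = dict(group_by_key(right))
    return [(k, (lv, rv))
            for k, lvs in group_by_key(left)
            for lv in lvs
            for rv in right_groups.get(k, [])]


sales = [("apple", 3), ("pear", 1), ("apple", 2)]
prices = [("apple", 0.5), ("pear", 0.25)]
print(reduce_by_key(sales, lambda a, b: a + b))  # [('apple', 5), ('pear', 1)]
print(inner_join(sales, prices))
```

One simplification: this `reduce_by_key` groups first and then reduces, whereas Spark's `reduceByKey` combines values within each partition before the shuffle, which is why it is usually preferred over `groupByKey` followed by a reduce.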

Spark meetup TCHUG

Ryan Bosshart: This document discusses Apache Spark, an open-source cluster computing framework. It describes how Spark allows for faster iterative algorithms and interactive data mining by keeping working sets in memory. The document also provides an overview of Spark's ease of use in Scala and Python, built-in modules for SQL, streaming, machine learning, and graph processing, and compares Spark's machine learning library MLlib to other frameworks.

Introduction to Apache Spark

Rahul Jain: Apache Spark is an in-memory data processing solution that can work with existing data sources like HDFS and can make use of your existing compute infrastructure like YARN/Mesos. This talk gives a basic introduction to Apache Spark and its components, such as MLlib, Shark, and GraphX, with a few examples.

Hadoop and Spark

Shravan (Sean) Pabba: This document provides an overview and introduction to Spark, including:

- Spark is a general purpose computational framework that provides more flexibility than MapReduce while retaining properties like scalability and fault tolerance.

- Spark concepts include resilient distributed datasets (RDDs), transformations that create new RDDs lazily, and actions that run computations and return values to materialize RDDs.

- Spark can run on standalone clusters or as part of Cloudera's Enterprise Data Hub, and examples of its use include machine learning, streaming, and SQL queries.

Intro to Apache Spark

Robert Sanders: An introduction to Apache Spark, with an emphasis on the RDD API, Spark SQL (DataFrame and Dataset API), and Spark Streaming.

Presented at the Desert Code Camp:

http://oct2016.desertcodecamp.com/sessions/all

Bigdata and Hadoop with Docker

haridasnss: This document provides an introduction to big data and Hadoop. It explains how distributed systems can scale to handle large data volumes and describes Hadoop's architecture. It also provides instructions for setting up a Hadoop cluster on a laptop and summarizes Hadoop's MapReduce programming model and the YARN framework. Finally, it announces an upcoming workshop on Spark and PySpark.

Big Data visualization with Apache Spark and Zeppelin

prajods: This presentation gives an overview of Apache Spark and explains the features of Apache Zeppelin (incubator). Zeppelin is an open-source tool for data discovery, exploration, and visualization. It supports REPLs for shell, Spark SQL, Spark (Scala), Python, and Angular. This presentation was given on Big Data Day at the Great Indian Developer Summit, Bangalore, April 2015.

Apache Spark on Kubernetes

haridasnss: How to use Kubernetes as the resource manager for Spark. The slides and the associated tutorial discuss the pros and cons of this Spark resource manager.

Refer to this GitHub project for more details and code samples: https://github.com/haridas/hadoop-env

Kafka website activity architecture

Omid Vahdaty: Using Kafka in a website activity use case, covering disaster recovery, multi-DC clusters, and multi-cloud clusters.

Productionizing Spark and the REST Job Server- Evan Chan

Spark Summit: The document discusses productionizing Apache Spark and using the Spark REST Job Server. It provides an overview of Spark deployment options such as YARN, Mesos, and Spark Standalone mode. It also covers Spark configuration topics such as JAR management, classpath configuration, and garbage collection tuning. The document then discusses running Spark applications in a cluster using tools such as spark-submit and the Spark Job Server. It highlights features of the Spark Job Server, such as enabling low-latency Spark queries and sharing cached RDDs across jobs. Finally, it provides examples of using the Spark Job Server in production environments.

Building a REST API with Cassandra on Datastax Astra Using Python and Node

Anant Corporation: DataStax Astra provides the ability to develop and deploy data-driven applications with a cloud-native service, without the hassles of database and infrastructure administration. In this webinar, we are going to walk you through creating a REST API and exposing that to your Cassandra database.

Webinar Link: https://www.youtube.com/watch?v=O64pJa3eLqs&t=20s

Hands on with Apache Spark

Dan Lynn: This document provides an overview of Apache Spark and a hands-on workshop for using Spark. It begins with a brief history of Spark and how it evolved from Hadoop to address limitations in processing iterative tasks and keeping data in memory. Key Spark concepts are explained including RDDs, transformations, actions and Spark's execution model. New APIs in Spark SQL, DataFrames and Datasets are also introduced. The workshop agenda includes an overview of Spark followed by a hands-on example to rank Colorado counties by gender ratio using census data and both RDD and DataFrame APIs.

Apache Spark - Lightning Fast Cluster Computing - Hyderabad Scalability Meetup

Hyderabad Scalability Meetup: This document provides an introduction and overview of Apache Spark, a lightning-fast cluster computing framework. It discusses Spark's ecosystem, how it differs from Hadoop MapReduce, where it shines, and how easy it is to install and start learning; it also includes some small code demos and points to additional resources. The presentation introduces Spark and its core concepts, compares it to Hadoop MapReduce in areas like speed, usability, tools, and deployment, demonstrates how to use Spark SQL with an example, and shows a visualization demo. It aims to give attendees a high-level understanding of Spark without being a training class or workshop.

Dec6 meetup spark presentation

Ramesh Mudunuri: This document provides an introduction and overview of Apache Spark, a lightning-fast cluster computing framework. It discusses Spark's ecosystem, how it differs from Hadoop MapReduce, where it shines, and how easy it is to install and start learning; it also includes some small code demos and points to additional resources. The presentation introduces Spark and its core concepts, compares it to Hadoop MapReduce in areas like speed, usability, tools, and deployment, demonstrates how to use Spark SQL with an example, and shows a visualization demo. It aims to give attendees a high-level understanding of Spark without being a training class or workshop.


Similar to Spark Core


Big_data_analytics_NoSql_Module-4_Session

RUHULAMINHAZARIKA:

- Apache Spark is an open-source cluster computing framework that is faster than Hadoop for batch processing and also supports real-time stream processing.

- Spark was created to be faster than Hadoop for interactive queries and iterative algorithms by keeping data in-memory when possible.

- Spark consists of Spark Core for the basic RDD API and also includes modules for SQL, streaming, machine learning, and graph processing. It can run on several cluster managers including YARN and Mesos.

Data processing with spark in r & python

Maloy Manna, PMP®: In this webinar, we'll see how to use Spark to process data from various sources in R and Python and how new tools like Spark SQL and data frames make it easy to perform structured data processing.

Unit II Real Time Data Processing tools.pptx

Rahul Borate: Apache Spark is a lightning-fast cluster computing framework designed for real-time processing. It overcomes limitations of Hadoop, running up to 100 times faster in memory and 10 times faster on disk. Spark uses resilient distributed datasets (RDDs), which allow data to be partitioned across a cluster and cached in memory for faster processing.

Spark Workshop

Juan Pedro Moreno: This document provides an overview of the Spark workshop agenda. It will introduce Big Data and Spark architecture and cover Resilient Distributed Datasets (RDDs), including transformations and actions on data. It will also give an overview of Spark SQL and DataFrames, Spark Streaming, and Spark architecture and cluster deployment. The workshop will be led by Juan Pedro Moreno and Fran Perez from 47Degrees and will use the Spark workshop repository on GitHub.

Introduction to Big Data Analytics using Apache Spark and Zeppelin on HDInsig...

Alex Zeltov: Introduction to Big Data Analytics using Apache Spark on HDInsight on Azure (SaaS) and/or HDP on Azure (PaaS)

This workshop will provide an introduction to Big Data Analytics with Apache Spark, using HDInsight on Azure (SaaS) and/or an HDP deployment on Azure (PaaS). There will be a short lecture that introduces Spark and its components.

Spark is a unified framework for big data analytics. Spark provides one integrated API that developers, data scientists, and analysts can use to perform diverse tasks, such as batch analytics, stream processing, and statistical modeling, that previously required separate processing engines. Spark supports a wide range of popular languages, including Python, R, Scala, SQL, and Java. Spark can read from diverse data sources and scale to thousands of nodes.

The lecture will be followed by a demo. There will be a short lecture on Hadoop and how Spark and Hadoop interact and complement each other. You will learn how to move data into HDFS using Spark APIs, create a Hive table, explore the data with Spark and SQL, transform the data, and then issue some SQL queries. We will be using Scala and/or PySpark for the labs.

Apache Spark Core

Girish Khanzode: Spark is an open-source cluster computing framework that uses in-memory processing to allow data sharing across jobs for faster iterative queries and interactive analytics. It uses Resilient Distributed Datasets (RDDs), which can survive failures through lineage tracking, and supports programming in Scala, Java, and Python for batch, streaming, and machine learning workloads.
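Recovery through lineage can be sketched in a few lines of plain Python: each partition keeps a reference to its input and to the function that produced it, so if its in-memory copy is lost it is recomputed rather than restored from a replica. The `Partition` class and the `lose()` failure simulation are hypothetical; in Spark the lineage is the chain of RDD dependencies, and lost partitions are recomputed as new tasks.

```python
# Toy model of lineage-based recovery (hypothetical names, not Spark APIs).


class Partition:
    def __init__(self, parent, fn):
        self.parent = parent  # stable source data this partition derives from
        self.fn = fn          # transformation recorded in the lineage
        self.data = None      # in-memory result, which may be lost
        self.recomputations = 0

    def get(self):
        if self.data is None:
            # First use or lost data: replay the lineage from the source.
            self.data = [self.fn(x) for x in self.parent]
            self.recomputations += 1
        return self.data

    def lose(self):
        # Simulate an executor failure that drops the in-memory copy.
        self.data = None


source = [[1, 2], [3, 4], [5]]  # e.g. blocks of a file on HDFS
parts = [Partition(p, lambda x: x * 10) for p in source]
before = [p.get() for p in parts]
parts[1].lose()                 # one partition's memory is lost
after = [p.get() for p in parts]
print(before == after, [p.recomputations for p in parts])  # True [1, 2, 1]
```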

Spark SQL

Caserta: The document provides an agenda and overview for a Big Data Warehousing meetup hosted by Caserta Concepts. The meetup agenda includes an introduction to SparkSQL with a deep dive on SparkSQL and a demo. Elliott Cordo from Caserta Concepts will provide an introduction and overview of Spark as well as a demo of SparkSQL. The meetup aims to share stories in the rapidly changing big data landscape and provide networking opportunities for data professionals.

Apache Spark for Beginners

Anirudh: Spark is an open-source cluster computing framework that provides high performance for both batch and streaming data processing. It addresses limitations of other distributed processing systems like MapReduce by providing in-memory computing capabilities and supporting a more general programming model. Spark Core provides basic functionality and serves as the foundation for higher-level modules like Spark SQL, MLlib, GraphX, and Spark Streaming. RDDs are Spark's basic abstraction for distributed datasets, allowing immutable distributed collections to be operated on in parallel. Key benefits of Spark include speed through in-memory computing, ease of use through its APIs, and a unified engine supporting multiple workloads.
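Operating on a distributed collection "in parallel" means running one task per partition at the same time and then combining the results on the driver. This sketch imitates that pattern with a thread pool from Python's standard library; `run_tasks` is a hypothetical helper, and the threads stand in for executor cores only loosely.

```python
# Sketch of the task-per-partition pattern using a thread pool.
from concurrent.futures import ThreadPoolExecutor


def run_tasks(partitions, task, max_workers=4):
    # Executor.map returns results in the same order as the partitions.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(task, partitions))


partitions = [list(range(0, 5)), list(range(5, 10)), list(range(10, 15))]
partial_sums = run_tasks(partitions, sum)  # one "task" per partition
total = sum(partial_sums)                  # combine the results on the "driver"
print(partial_sums, total)                 # [10, 35, 60] 105
```

Threads keep the sketch simple; for CPU-bound Python functions, `ProcessPoolExecutor` would give real parallelism because of the global interpreter lock.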

Programming in Spark using PySpark

Programming in Spark using PySpark (Mostafa): This session covers how to use the PySpark interface to develop Spark applications, from loading and ingesting data to applying transformations. It covers working with different data sources, applying transformations, and Python best practices for developing Spark apps. The demo covers integrating Apache Spark apps, in-memory processing capabilities, working with notebooks, and integrating analytics tools into Spark applications.

Big Data training

Big Data training (vishal192091): The document provides an overview of big data concepts and frameworks. It discusses the dimensions of big data, including volume, velocity, variety, veracity, value, and variability. It then describes the traditional approach to data processing and its limitations in dealing with large, complex data. Hadoop and its core components HDFS and YARN are introduced as the solution. Spark is presented as a faster alternative to Hadoop for processing large datasets in memory. Other frameworks like Hive, Pig, and Presto are also briefly mentioned.

Introduction to spark

Introduction to spark (Home): Spark was introduced by the Apache Software Foundation to speed up Hadoop's computational processing.

Introduction To Big Data with Hadoop and Spark - For Batch and Real Time Proc...

Introduction To Big Data with Hadoop and Spark - For Batch and Real Time Proc... (Agile Testing Alliance): Introduction To Big Data with Hadoop and Spark - For Batch and Real Time Processing, by Sampat Kumar from Harman. The presentation was given at the #doppa17 DevOps++ Global Summit 2017. All copyrights are reserved by the author.

Apache Spark on HDinsight Training

Apache Spark on HDinsight Training (Synergetics Learning and Cloud Consulting): An engine to process big data in a faster (than MapReduce), easier, and extremely scalable way. An open-source, parallel, in-memory cluster computing framework. A solution for loading, processing, and end-to-end analysis of large-scale data. Iterative and interactive: Scala, Java, Python, R, and a command-line interface.

Spark from the Surface

Spark from the Surface (Josi Aranda): Apache Spark is an open-source distributed processing engine that is up to 100 times faster than Hadoop for processing data stored in memory and 10 times faster for data stored on disk. It provides high-level APIs in Java, Scala, Python, and SQL and supports batch processing, streaming, and machine learning. Spark runs on Hadoop, Mesos, Kubernetes, or standalone and can access diverse data sources using its core abstraction called resilient distributed datasets (RDDs).

Spark Advanced Analytics NJ Data Science Meetup - Princeton University

Spark Advanced Analytics NJ Data Science Meetup - Princeton University (Alex Zeltov): Workshop: How to Build a Recommendation Engine using Spark 1.6 and HDP

a) Hands-on: Build a data analytics application using Spark, Hortonworks, and Zeppelin. The session explains RDD concepts, DataFrames, and sqlContext, uses Spark SQL to work with DataFrames, and explores the graphical abilities of Zeppelin.

b) Follow along: Build a Recommendation Engine. This will show how to build a predictive analytics (MLlib) recommendation engine with scoring, giving a better understanding of architecture and coding in Spark for ML.

Enkitec E4 Barcelona : SQL and Data Integration Futures on Hadoop :

Enkitec E4 Barcelona: SQL and Data Integration Futures on Hadoop (Mark Rittman): Mark Rittman gave a presentation on the future of analytics on Oracle Big Data Appliance. He discussed how Hadoop has enabled highly scalable and affordable cluster computing using technologies like MapReduce, Hive, Impala, and Parquet. Rittman also talked about how these technologies have improved query performance and made Hadoop suitable for both batch and interactive/ad-hoc querying of large datasets.

Apache Spark - Lightning Fast Cluster Computing - Hyderabad Scalability Meetup

Apache Spark - Lightning Fast Cluster Computing - Hyderabad Scalability Meetup (Hyderabad Scalability Meetup)

Spark Core

- 1. Introducing Spark Core Friday, January 22, 16

- 2. Agenda • Assumptions • Why Spark? • What do you need to know to begin?

- 3. Assumptions (one or more of the following) • You want to learn Apache Spark but need to know where to begin • You need to know the fundamentals of Spark in order to progress in your learning • You need to evaluate whether Spark could be an appropriate fit for your use cases or career growth

- 4. In a nutshell, why Spark? • An engine for efficient large-scale processing. It's faster than Hadoop MapReduce, especially for iterative and interactive jobs, because it can keep intermediate data in memory instead of writing it to disk between steps • Spark can complement your existing Hadoop investments such as HDFS and Hive • A rich ecosystem, including support for SQL, machine learning, streaming, and language APIs for Scala, Python, and Java

- 5. Introduction • OK, so where should I start?

- 6. Spark Essentials • To begin, you need to know: • Resilient Distributed Datasets (RDDs) • Transformations • Actions • Spark Driver Programs and SparkContext

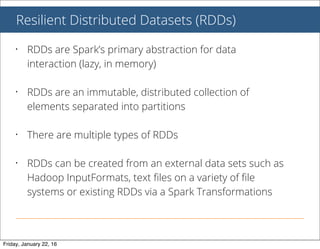

- 7. Resilient Distributed Datasets (RDDs) • RDDs are Spark's primary abstraction for working with data (lazily evaluated, and can be kept in memory) • An RDD is an immutable, distributed collection of elements split into partitions • There are multiple types of RDDs (for example, pair RDDs of key-value elements) • RDDs can be created from external datasets, such as Hadoop InputFormats or text files on a variety of file systems, or from existing RDDs via Spark transformations
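Slide 7 describes an RDD as an immutable collection split into partitions. As a rough illustration only, the plain-Python toy below models that shape; `ToyRDD` and `toy_parallelize` are hypothetical names invented for this sketch, not PySpark API. In PySpark the nearest real calls would be `sc.parallelize(data, numSlices)`, `rdd.getNumPartitions()` and `rdd.glom()`, and Spark may distribute elements across partitions differently from the even split assumed here.

```python
from dataclasses import FrozenInstanceError, dataclass
from typing import Any, List, Sequence, Tuple


@dataclass(frozen=True)
class ToyRDD:
    """Hypothetical stand-in for an RDD: an immutable tuple of partitions."""
    partitions: Tuple[Tuple[Any, ...], ...]

    def num_partitions(self) -> int:
        return len(self.partitions)

    def glom(self) -> List[List[Any]]:
        # Like RDD.glom(): view each partition as its own list.
        return [list(p) for p in self.partitions]


def toy_parallelize(data: Sequence[Any], num_slices: int) -> ToyRDD:
    """Hypothetical helper: split `data` into `num_slices` contiguous partitions."""
    n = len(data)
    return ToyRDD(tuple(
        tuple(data[i * n // num_slices:(i + 1) * n // num_slices])
        for i in range(num_slices)
    ))


rdd = toy_parallelize(list(range(10)), 3)
print(rdd.num_partitions())  # 3
print(rdd.glom())            # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]

try:
    rdd.partitions = ()      # frozen dataclass: in-place mutation is rejected
except FrozenInstanceError:
    print("immutable: derive a new ToyRDD instead")
```

Because the object cannot be changed in place, any "modification" has to produce a new object, which is the same discipline Spark's transformations follow.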

- 8. Transformations • RDD functions that return new RDDs (remember: lazy, so nothing is computed until an action runs) • map, flatMap, filter, etc.
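Slide 8's point that transformations are lazy can be demonstrated without a cluster. The sketch below is a hypothetical single-process toy (`LazyRDD` is not Spark's implementation, though its method names mirror the slide's `map`, `flatMap` and `filter`): each transformation only records a step and returns a new object, and the recorded functions run only when the `collect` action walks the chain.

```python
from typing import Any, Callable, Iterable, Iterator, List, Optional


class LazyRDD:
    """Toy single-process model of lazy transformations (not Spark's implementation)."""

    def __init__(self, source: Iterable[Any] = (),
                 parent: Optional["LazyRDD"] = None,
                 step: Optional[Callable[[Iterator[Any]], Iterator[Any]]] = None):
        self._source = source   # only meaningful on the root "loaded" dataset
        self._parent = parent   # lineage: the dataset this one was derived from
        self._step = step       # how to turn the parent's elements into ours

    def _derive(self, step):
        # A transformation only records a step and returns a NEW object.
        return LazyRDD(parent=self, step=step)

    def map(self, f):
        return self._derive(lambda it: (f(x) for x in it))

    def flatMap(self, f):
        return self._derive(lambda it: (y for x in it for y in f(x)))

    def filter(self, pred):
        return self._derive(lambda it: (x for x in it if pred(x)))

    def _iterate(self) -> Iterator[Any]:
        if self._parent is None:
            return iter(self._source)
        return self._step(self._parent._iterate())

    def collect(self) -> List[Any]:
        # An action: only now is the whole chain walked and every function run.
        return list(self._iterate())


calls: List[str] = []


def shout(word: str) -> str:
    calls.append(word)      # records when the function actually executes
    return word.upper()


lines = LazyRDD(["a b", "c", ""])
words = lines.flatMap(str.split).map(shout).filter(lambda w: w != "B")
print(calls)            # [] (three transformations declared, nothing executed)
print(words.collect())  # ['A', 'C']
print(calls)            # ['a', 'b', 'c']
```

Calling `collect()` a second time re-runs the whole chain, which loosely mirrors how Spark recomputes an RDD from its lineage unless it has been persisted.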

- 9. Actions • RDD functions that trigger computation and return values to the driver • reduce, collect, count, etc.
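Slide 9 says actions return values to the driver. The hypothetical functions below (`toy_count`, `toy_collect`, `toy_reduce`; not PySpark API) sketch that idea on plain Python lists standing in for partitions: each partition does its share of the work, and only the per-partition results are combined on the "driver". The reduce step assumes the operator is associative and commutative, which is also what Spark's documentation requires for `reduce`.

```python
from functools import reduce
from typing import Any, Callable, List, Sequence

Partitions = Sequence[Sequence[Any]]


def toy_collect(parts: Partitions) -> List[Any]:
    # Ships every element back to the driver: only safe for small results.
    return [x for part in parts for x in part]


def toy_count(parts: Partitions) -> int:
    # Each partition counts locally; the driver only adds up small integers.
    return sum(len(part) for part in parts)


def toy_reduce(parts: Partitions, op: Callable[[Any, Any], Any]) -> Any:
    # Reduce inside each non-empty partition, then combine the partial results.
    # Assumes `op` is associative and commutative.
    partials = [reduce(op, part) for part in parts if part]
    if not partials:
        raise ValueError("cannot reduce an empty dataset")
    return reduce(op, partials)


numbers = [[1, 2], [3], [], [4, 5]]
letters = [["a", "b"], ["c"]]
print(toy_count(numbers))                       # 5
print(toy_collect(letters))                     # ['a', 'b', 'c']
print(toy_reduce(numbers, lambda a, b: a + b))  # 15
```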

- 10. Spark RDDs, Transformations, Actions (diagram): load from an external source (example: textFile) into RDDs, apply transformations to derive new RDDs, then call actions (examples: count, collect) to produce output value(s) such as 5 or ['a', 'b', 'c']
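The diagram's flow (load from an external source such as textFile, apply transformations, finish with an action such as count or collect) can be traced end to end without a cluster. This sketch uses a temporary local file and Python generators as a stand-in for an RDD; `toy_text_file` and `words_rdd` are hypothetical names, and a real Spark job would instead start from `sc.textFile(path)` and chain RDD methods.

```python
import os
import tempfile
from typing import Iterator, List


def toy_text_file(path: str) -> Iterator[str]:
    """Hypothetical stand-in for textFile: lazily yield one line at a time."""
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            yield line.rstrip("\n")


def words_rdd(path: str) -> Iterator[str]:
    # Load + flatMap-style transformation; nothing is read until iterated.
    return (w for line in toy_text_file(path) for w in line.split())


# Throwaway file standing in for the "external source" in the diagram.
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False,
                                 encoding="utf-8") as tmp:
    tmp.write("a b\nc\n\nd e\n")
    path = tmp.name

try:
    # Each action re-runs the pipeline from the file, much as Spark recomputes
    # an RDD's lineage for each action unless the RDD is cached.
    total: int = sum(1 for _ in words_rdd(path))  # count-like action
    abc: List[str] = [w for w in words_rdd(path) if w in {"a", "b", "c"}]  # filter + collect
finally:
    os.remove(path)

print(total)  # 5
print(abc)    # ['a', 'b', 'c']
```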

- 11. Spark Driver Programs and Context • A Spark driver is a program that declares transformations and actions on RDDs of data • The driver creates a SparkContext, its entry point for connecting to the cluster and creating RDDs • A driver submits the serialized RDD graph to the master, where the master creates tasks. These tasks are delegated to the workers for execution. • Workers are where the tasks are actually executed.
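To make the driver / task / worker split on slide 11 more concrete, the sketch below uses a thread pool as stand-in workers. It is a deliberately simplified toy: `run_job` is a hypothetical name, there is no serialization, network, or separate master process, and a "task" is simply "apply this function to one partition". What it does show is the shape of the idea: the driver defines the work, one task is created per partition, workers execute the tasks, and results flow back to the driver.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, List, Sequence


def run_job(partitions: Sequence[Sequence[Any]],
            task_fn: Callable[[Sequence[Any]], Any],
            num_workers: int = 2) -> List[Any]:
    """Toy 'driver': one task per partition, executed by a pool of 'workers'."""
    with ThreadPoolExecutor(max_workers=num_workers) as workers:
        futures = [workers.submit(task_fn, part) for part in partitions]
        # The driver waits for every task and gathers results in partition order.
        return [f.result() for f in futures]


partitions = [[1, 2, 3], [4, 5], [6]]
# Task: sum of squares inside one partition.
partials = run_job(partitions, lambda part: sum(x * x for x in part))
print(partials)       # [14, 41, 36]
print(sum(partials))  # 91
```

Results come back in partition order because the driver waits on the futures in submission order, regardless of which worker finishes first.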

- 12. Driver Program and SparkContext (image borrowed from http://spark.apache.org/docs/latest/cluster-overview.html)

- 13. References • For course information and discount coupons, visit http://www.supergloo.com/ • Learning Spark Book Summary: http://www.amazon.com/Learning-Spark-Summary-Lightning-Fast-Deconstructed-ebook/dp/B019HS7USA/

- 14. Next Steps