Spark Streaming Intro @KTech

- 1. Intro to Spark Streaming (pandemic edition) Oleg Korolenko for RSF Talks @Ktech, March 2020

- 2. image credits: @Matt Turck - Big Data Landscape 2017

- 3. Agenda 1. Some streaming concepts (quickly) 2. Streaming models: microbatching vs one-record-at-a-time 3. Windowing, watermarks, state management 4. Operations on state and joins 5. Sources and sinks

- 4. Not in this talk » Spark as a distributed compute engine » I will not cover specific integrations (like with Kafka) » I will not compare it to other specific streaming solutions

- 5. API hell - DStreams (deprecated) - Continuous mode (experimental since 2.3) - Structured Streaming (the way to go, covered in this talk)

- 6. Streaming concepts: Data » Data in motion vs data at rest (in the past) » Potentially unbounded vs known size

- 7. Spark streaming - Concept » serves small batches of data collected from stream » provides them at fixed time intervals (from 0.5 secs) » performs computation image credits: Spark official doc

- 8. Microbatching: an application of the Bulk Synchronous Parallelism (BSP) model. Consists of: 1. A split distribution of asynchronous work (tasks) 2. A synchronous barrier, coming in at fixed intervals (stages)

- 9. Model: Microbatching Transforms a batch-like query into a series of incremental execution plans
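A plain-Scala sketch of the microbatching idea, without any Spark dependency: records are cut into batches at a fixed interval, and a running result is carried from one batch to the next, which plays the role of the incremental execution. The names `Record`, `toBatches` and `runningCounts` are hypothetical, and arrival times are assumed to be non-negative milliseconds.

```scala
object MicroBatchSketch {
  // A record tagged with the (processing) time at which it arrived.
  final case class Record(arrivalMs: Long, value: String)

  // Assign each record to the micro-batch whose interval contains its arrival time.
  def toBatches(records: Seq[Record], intervalMs: Long): Seq[(Long, Seq[Record])] =
    records.groupBy(r => r.arrivalMs / intervalMs).toSeq.sortBy(_._1)

  // Process the batches in order. Only the running total is carried across
  // the batch boundary, so each step is an incremental update.
  def runningCounts(records: Seq[Record], intervalMs: Long): Seq[(Long, Long)] =
    toBatches(records, intervalMs)
      .scanLeft((-1L, 0L)) { case ((_, total), (batchId, batch)) =>
        (batchId, total + batch.size)
      }
      .tail // drop the artificial initial element
}
```

Each element of the result is a pair of batch id and the count of all records seen up to the end of that batch.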

- 10. One-record-at-a-time processing Dataflow programming - computation is a graph of data flowing between operations - computations are black boxes to each other (vs Catalyst in Spark, which sees and optimizes the whole query) In: Apache Flink, Google Dataflow

- 11. Model: One-record-at-a-time processing Processes user functions by pipelining - deploys functions as pipelines in a cluster - flows data through the pipelines - pipeline steps are parallelized (differently, depending on the operators)
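As a rough single-process illustration of pipelining, the sketch below composes three opaque functions and pushes each record through the whole chain before the next record is pulled. It is not how Flink or Dataflow are implemented; the stage names `parse`, `double` and `render` are made up for the example.

```scala
object PipelineSketch {
  // Each stage is a black box to the others: only its input and output types are known.
  val parse: String => Int = _.trim.toInt
  val double: Int => Int = _ * 2
  val render: Int => String = n => s"value=$n"

  // The pipeline is just the composition of the stages.
  val pipeline: String => String = parse andThen double andThen render

  // Iterator.map is lazy, so records flow through the pipeline one at a time.
  def run(source: Iterator[String]): Iterator[String] = source.map(pipeline)
}
```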

- 12. Microbatching vs one-record-at-a-time: pros of microbatching, despite higher latency 1. Sync boundaries give the ability to adapt (for instance, recovering tasks after an executor failure, scaling executors, etc.) 2. Data is available as a set at every microbatch (we can inspect, adapt, drop, get stats) 3. Easier model that looks like data at rest

- 13. Spark Streaming API » API on top of the Spark SQL DataFrame and Dataset APIs // Read text from socket val socketDF = spark.readStream.format("socket").option(...).load() socketDF.isStreaming // Returns true for DataFrames that have streaming sources
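A minimal runnable variant, assuming Spark SQL (2.4 or 3.x) is on the classpath. It swaps the socket source for the built-in `rate` source so that no external server is needed; the object name, app name and `rowsPerSecond` value are arbitrary choices for the sketch.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object StreamingSourceSketch {
  // Build a streaming DataFrame from the built-in "rate" test source,
  // which emits rows with a `timestamp` and a `value` column.
  def rateStream(spark: SparkSession): DataFrame =
    spark.readStream
      .format("rate")
      .option("rowsPerSecond", "5")
      .load()

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("streaming-source-sketch")
      .getOrCreate()

    val rateDF = rateStream(spark)
    println(rateDF.isStreaming) // true: the DataFrame has a streaming source
    rateDF.printSchema()

    spark.stop()
  }
}
```

Nothing is read at this point: the DataFrame is only a description of the stream until a query is started on it.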

- 14. Spark Streaming API, behind the scenes [DataFrame/Dataset] => [Logical plan] => [Optimized plan] => [Series of incremental execution plans]

- 15. Triggering Run only once: val onceStream = data.writeStream.format("console").queryName("Once").trigger(Trigger.Once())

- 16. Triggering Scheduled execution based on processing time: val processingTimeStream = data.writeStream.format("console").trigger(Trigger.ProcessingTime("20 seconds")) If processing of the previous batch has not finished when the interval elapses, the next batch starts immediately after it completes.
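The trigger only takes effect once the query is started. A small end-to-end sketch, assuming Spark SQL on the classpath and again using the `rate` test source as a stand-in for `data`; the 5-second interval, the query name and the 20-second run time are arbitrary.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.streaming.Trigger

object TriggerSketch {
  // Fire a micro-batch every 5 seconds of processing time.
  val everyFiveSeconds: Trigger = Trigger.ProcessingTime("5 seconds")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("trigger-sketch")
      .getOrCreate()

    // Stand-in for the `data` stream of the slides.
    val data = spark.readStream.format("rate").option("rowsPerSecond", "1").load()

    val query = data.writeStream
      .format("console")
      .queryName("EveryFiveSeconds")
      .trigger(everyFiveSeconds)
      .start() // nothing runs until start() is called

    query.awaitTermination(20000L) // let a few batches run, then shut down
    query.stop()
    spark.stop()
  }
}
```

Replacing `everyFiveSeconds` with `Trigger.Once()` would process whatever is available in a single batch and then stop.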

- 17. Processing We can use the usual Spark transformation and aggregation APIs, but where are the streaming semantics?

- 19. Processing: Windowing API val avgBySensorTypeOverTime = sensorStream.select($"timestamp", $"sensorType").groupBy(window($"timestamp", "1 minute", "1 minute"), $"sensorType").count()
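A self-contained version of a windowed count, assuming Spark SQL on the classpath. The `sensorStream` here is simulated from the `rate` source, and `sensorType` is derived as `value % 2`; both are assumptions made only so the sketch can run without real sensors. A 10-second window is used so that results appear quickly.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, window}

object WindowedCountSketch {
  // Count events per sensor type in 10-second tumbling windows of event time.
  def windowedCounts(spark: SparkSession): DataFrame = {
    val sensorStream = spark.readStream
      .format("rate")
      .option("rowsPerSecond", "10")
      .load()
      .withColumn("sensorType", col("value") % 2) // fake sensor type: 0 or 1

    sensorStream
      .groupBy(window(col("timestamp"), "10 seconds"), col("sensorType"))
      .count()
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("windowed-count-sketch")
      .getOrCreate()

    val query = windowedCounts(spark).writeStream
      .format("console")
      .outputMode("complete") // re-emit the full aggregation table every batch
      .option("truncate", "false")
      .start()

    query.awaitTermination(30000L)
    query.stop()
    spark.stop()
  }
}
```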

- 20. Tumbling window eventsDF.groupBy(window($"eventTime", "5 minutes")).count() image credits: @DataBricks Engineering blog
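What a tumbling window does to each event can be sketched in plain Scala: every event time falls into exactly one fixed-size, non-overlapping window. Timestamps are plain non-negative numbers here (think minutes), and the function names are hypothetical.

```scala
object TumblingWindowSketch {
  // Start of the tumbling window that contains `ts` (assumes ts >= 0).
  def windowStart(ts: Long, size: Long): Long = ts - (ts % size)

  // Count events per window; each window is the half-open range [start, end).
  def countPerWindow(eventTimes: Seq[Long], size: Long): Map[(Long, Long), Int] =
    eventTimes
      .groupBy(ts => windowStart(ts, size))
      .map { case (start, events) => (start, start + size) -> events.size }
}
```

With a window size of 5, an event at time 5 belongs to the window starting at 5, not to the one ending at 5, because window ends are exclusive.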

- 21. Sliding window eventsDF.groupBy(window($"eventTime", "10 minutes", "5 minutes")).count() image credits: @DataBricks Engineering blog
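With a slide shorter than the window size, windows overlap and one event is counted in several of them. A plain-Scala sketch of that assignment, using non-negative numeric timestamps and hypothetical function names:

```scala
object SlidingWindowSketch {
  // All windows [start, start + size) that contain `ts`, in ascending order.
  // Window starts are multiples of `slide`; assumes ts >= 0 and slide <= size.
  def windowsFor(ts: Long, size: Long, slide: Long): Seq[(Long, Long)] = {
    val lastStart = ts - (ts % slide) // latest window start that is <= ts
    Iterator
      .iterate(lastStart)(_ - slide)  // walk back one slide at a time
      .takeWhile(start => start > ts - size) // stop once the window no longer covers ts
      .map(start => (start, start + size))
      .toList
      .reverse
  }

  // Count events per window; an event contributes to every window that contains it.
  def countPerWindow(eventTimes: Seq[Long], size: Long, slide: Long): Map[(Long, Long), Int] =
    eventTimes
      .flatMap(ts => windowsFor(ts, size, slide))
      .groupBy(identity)
      .map { case (w, hits) => w -> hits.size }
}
```

With a size of 10 and a slide of 5, every event lands in two windows, which is why the per-window counts add up to twice the number of events.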

- 22. Late events image credits: @DataBricks Engineering blog

- 23. Watermarks "all input data with event times less than X have been observed" eventsDF.withWatermark("eventTime", "10 minutes").groupBy(window($"eventTime", "10 minutes", "5 minutes")).count()
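The watermark mechanics can be sketched without Spark: the watermark trails the maximum event time seen so far by a fixed delay, it is advanced at the end of each batch, and events older than the current watermark are dropped. This is a simplification (Spark applies the check to window state rather than to each raw event), and the names `State` and `processBatch` are hypothetical.

```scala
object WatermarkSketch {
  // Highest event time observed so far and the watermark derived from it.
  final case class State(maxEventTime: Long, watermark: Long)

  // Process one batch of event times:
  //  1. keep only events that are not older than the current watermark,
  //  2. advance the watermark to (max event time seen - allowed delay);
  //     the watermark never moves backwards.
  def processBatch(state: State, batch: Seq[Long], delay: Long): (State, Seq[Long]) = {
    val accepted = batch.filter(_ >= state.watermark)
    val newMax = (state.maxEventTime +: batch).max
    val newWatermark = math.max(state.watermark, newMax - delay)
    (State(newMax, newWatermark), accepted)
  }
}
```

Note that the watermark computed from a batch only affects the following batches, so a late event arriving in the same batch as a much newer one is still accepted.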

- 24. Watermarks image credits: @DataBricks Engineering blog

- 25. Stateful processing Work with data in the context of what we have already seen in the stream

- 26. State management image credits: @DataBricks Engineering blog

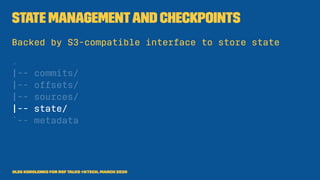

- 27. State management and checkpoints Backed by an HDFS-compatible file system (for example HDFS or S3) to store state. Checkpoint directory layout: commits/, offsets/, sources/, state/, metadata

- 28. Operations - State mapGroupsWithState // we produce a single result per group flatMapGroupsWithState // we produce 0 or N results per group

- 29. Example: Domain // Input events val weatherEvents: Dataset[WeatherEvent] // Weather station event case class WeatherEvent(stationId: String, timestamp: Timestamp, temp: Double) // Weather avg temp output case class WeatherEventAvg(stationId: String, start: Timestamp, end: Timestamp, avgTemp: Double)

- 30. Compute using state val weatherEventsMovingAvg = weatherEvents // group by station .groupByKey(_.stationId) // processing-time timeout .mapGroupsWithState(GroupStateTimeout.ProcessingTimeTimeout)(mappingFunction)

- 31. Mapping function def mappingFunction(key: String, values: Iterator[WeatherEvent], groupState: GroupState[List[WeatherEvent]]): WeatherEventAvg = { // update the state with the new events val updatedState = ... // update the group state groupState.update(updatedState) // compute new event output using updated state WeatherEventAvg(key, ts1, ts2, tempAvg) }
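The state update and the output computation are left open on the slide. One possible way to fill them in, shown here as plain Scala with no Spark dependency, is to keep the most recent events per station and average over them. The `maxEvents` limit and the helper names `updateState` and `average` are assumptions for this sketch, not the talk's actual implementation.

```scala
import java.sql.Timestamp

object MovingAvgSketch {
  final case class WeatherEvent(stationId: String, timestamp: Timestamp, temp: Double)
  final case class WeatherEventAvg(stationId: String, start: Timestamp, end: Timestamp, avgTemp: Double)

  // Hypothetical bound on how many events are kept per station.
  val maxEvents = 3

  // Merge the new events into the previous state, keeping only the latest `maxEvents`.
  def updateState(previous: List[WeatherEvent], incoming: Iterator[WeatherEvent]): List[WeatherEvent] =
    (previous ++ incoming).sortBy(_.timestamp.getTime).takeRight(maxEvents)

  // Average temperature over the events currently held in state, if any.
  def average(key: String, state: List[WeatherEvent]): Option[WeatherEventAvg] =
    if (state.isEmpty) None
    else Some(
      WeatherEventAvg(
        key,
        state.head.timestamp, // oldest event kept
        state.last.timestamp, // newest event kept
        state.map(_.temp).sum / state.size
      )
    )
}
```

Inside a mapping function, the previous state would come from `groupState.getOption.getOrElse(Nil)` and the result of `updateState` would be passed to `groupState.update`. Bounding the list matters because state is checkpointed on every batch.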

- 32. Write to a sink and start the stream // define the sink for the stream weatherEventsMovingAvg.writeStream.format("kafka") // determines that the kafka sink is used .option("kafka.bootstrap.servers", kafkaBootstrapServer) .option("checkpointLocation", "/path/checkpoint") // stream will start processing events from sources and write to sink .start()

- 33. Operations - Joins » stream join stream » stream join batch
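A sketch of the stream-to-batch case, assuming Spark SQL on the classpath: a simulated stream of readings is enriched with a small static lookup table. The `stations` table, the `region` column and the way `stationId` is derived from the `rate` source are all invented for the example.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

object StreamStaticJoinSketch {
  // Join a streaming DataFrame with a static one on `stationId`.
  def enrich(spark: SparkSession): DataFrame = {
    import spark.implicits._

    // Static (batch) side: a small lookup table.
    val stations = Seq((0L, "north"), (1L, "south"), (2L, "east"))
      .toDF("stationId", "region")

    // Streaming side: fake readings spread over three station ids.
    val readings = spark.readStream
      .format("rate")
      .option("rowsPerSecond", "3")
      .load()
      .withColumn("stationId", col("value") % 3)

    readings.join(stations, Seq("stationId")) // inner join, evaluated per micro-batch
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("stream-static-join-sketch")
      .getOrCreate()

    val query = enrich(spark).writeStream
      .format("console")
      .outputMode("append")
      .start()

    query.awaitTermination(10000L)
    query.stop()
    spark.stop()
  }
}
```

A stream-to-stream join looks the same at the API level, but both sides then need to buffer rows as state, which is where watermarks become important.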

- 34. Sources » File-based: JSON, CSV, Parquet, ORC, and plain text » Kafka, Kinesis, Flume » TCP sockets

- 35. Working with sources image credits: Stream Processing with Apache Spark @OReilly

- 36. Offsets in checkpoints Checkpoint directory layout: commits/, offsets/, sources/, state/, metadata

- 37. Sinks » File-based: JSON, CSV, Parquet, ORC, and plain text » Kafka, Kinesis, Flume Experimentation: - Memory, Console Custom: - foreach (implement ForeachWriter to integrate with anything)
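A custom sink is a subclass of Spark's `ForeachWriter` with three callbacks. The sketch below, which assumes Spark SQL on the classpath, simply prints each value; the class name `StdoutWriter` and the `rowsWritten` counter are illustrative additions, and a real writer would open a connection in `open` and release it in `close`.

```scala
import org.apache.spark.sql.ForeachWriter

class StdoutWriter extends ForeachWriter[String] {
  private var written = 0L

  // Called once per partition and epoch; returning false skips the partition.
  override def open(partitionId: Long, epochId: Long): Boolean = {
    written = 0L
    true
  }

  // Called for every row of the partition.
  override def process(value: String): Unit = {
    println(s"sink <- $value")
    written += 1
  }

  // Called at the end, with the error if processing failed (null otherwise).
  override def close(errorOrNull: Throwable): Unit =
    if (errorOrNull != null) println(s"partition failed: ${errorOrNull.getMessage}")

  // Number of rows processed since the last open().
  def rowsWritten: Long = written
}
```

It would be attached to a `Dataset[String]` with `ds.writeStream.foreach(new StdoutWriter).start()`. The `partitionId` and `epochId` pair identifies a unit of output, which a writer can use to skip data it has already written after a restart.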

- 38. Failure recovery » Spark uses checkpoints and a Write-Ahead Log (WAL) » in Spark Streaming, when we receive data from sources we buffer it » we need to store additional metadata to register offsets etc. » we save the offsets and the data to be able to replay it from the sources

- 39. "Exactly once" delivery guarantee Combination of: replayable sources, idempotent sinks, processing checkpoints
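How the three ingredients combine can be shown with a toy model in plain Scala: the source can be re-read from any offset, the sink overwrites by offset instead of appending, and the committed offset plays the role of the checkpoint. All names here are hypothetical and the failure is simulated by a flag.

```scala
import scala.collection.mutable

object ExactlyOnceSketch {
  // Replayable source: any range of offsets can be read again.
  val source: Vector[String] = Vector("a", "b", "c", "d")

  // Idempotent sink: writing the same offset twice leaves a single row.
  final class IdempotentSink {
    private val rows = mutable.Map.empty[Int, String]
    def write(offset: Int, value: String): Unit = rows(offset) = value
    def contents: Seq[String] = rows.toSeq.sortBy(_._1).map(_._2)
  }

  // Process offsets [from, until) and return the new committed offset.
  // If the batch "fails" after writing but before committing, the checkpoint
  // stays at `from`, so the same range is replayed on the next attempt.
  def runBatch(sink: IdempotentSink, from: Int, until: Int, failBeforeCommit: Boolean): Int = {
    (from until until).foreach(i => sink.write(i, source(i).toUpperCase))
    if (failBeforeCommit) from else until
  }
}
```

Replaying the failed range writes the same offsets again, and because the sink is keyed by offset the output ends up with each record exactly once even though it was processed twice.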

- 40. Reads and refs 1. Streaming 102: The World Beyond Batch (article) by Tyler Akidau, 2016 2. Stream Processing with Apache Flink by Fabian Hueske and Vasiliki Kalavri, O'Reilly, April 2019 3. Stream Processing with Apache Spark by Francois Garillot and Gerard Maas, O'Reilly, 2019 4. Discretized Streams: Fault-Tolerant Streaming Computation at Scale (whitepaper) by Matei Zaharia et al., Berkeley 5. Event-time Aggregation and Watermarking in Apache Spark's Structured Streaming by Tathagata Das, Databricks engineering blog

- 41. Thanks!


