Spectrum Scale - Diversified analytic solution based on various storage services - public

- 1. A Diversified Analytic Solution Based on Various Storage Services. Sep 21, 2016. #ibmedge, © 2016 IBM Corporation

- 2. Agenda
  - Spectrum Scale Deliveries for Data Analytics
  - Spectrum Scale HDFS Transparency Hadoop Connector
  - Advanced Data Management and Protection for Analytics
  - Spectrum Scale Data Analytics Deployment Models
  - Solutions Dedicated to Client's Success

- 3. Hadoop Data Analytics with IBM Spectrum Scale
  - IBM Spectrum Scale is a proven, enterprise-ready alternative to HDFS for Hadoop data analytics
  - Completely compatible with the HDFS client API and Hadoop applications
  - Unified file, object and HDFS access interface
  - Mature enterprise features make it easy to manage and protect data

- 4. IBM Spectrum Storage and Computing for Data Analytics
  - The IBM Spectrum Storage family and IBM Spectrum Computing provide an integrated solution for data analytics workloads
  - Mature, proven, enterprise-class features in Spectrum Storage provide rich data management, monitoring and security capabilities
  - Spectrum Computing provides high-performance, cost-effective services for data analytics workloads
  - IBM Spectrum Storage: IBM Spectrum Control, IBM Spectrum Protect, IBM Spectrum Archive, IBM Spectrum Virtualize, IBM Spectrum Accelerate, IBM Spectrum Scale
  - IBM Spectrum Computing: IBM Spectrum Conductor, IBM Spectrum Conductor with Spark, IBM Spectrum LSF

- 5. Spectrum Scale - Unified Software Defined Storage
  - Unified scale-out data lake
    - File in/out, object in/out; analytics on demand
    - High-performance native protocols
    - Single management plane
    - Cluster replication and global namespace
    - Enterprise storage features across file, object and HDFS
  - Access interfaces shown in the diagram
    - POSIX file-system API
    - SMB: Windows file sharing
    - NFS: Linux / UNIX file sharing
    - Object: Web and Swift / S3 compatible
    - iSCSI: block storage (experimental)
    - HDFS Transparency
    - Protocol nodes are managed by the CES framework
  - Clients shown in the diagram: general applications, and an external Hadoop / Spark cluster
  - Storage shown in the diagram
    - Elastic Storage Server
    - Expand to other storage systems (DeepFlash 150 or others)
    - Expand to server local disk drives
    - Cloud, through Spectrum Scale TCT
  - Network: FDR/QDR IB or 10/40 GbE

- 6. IBM Elastic Storage Server and IBM Data Engine
  - IBM Elastic Storage Server (ESS), powered by IBM Spectrum Scale RAID, delivers:
    - Optimal solution for all data needs: a single repository of data with unified file, Hadoop and object support
    - Highest reliability: mean time to failure many orders of magnitude longer than the life of the hardware
    - Highest performance: faster than rated disk speeds
    - Cost effectiveness: less than 1 cent per GB per month
  - IBM Data Engine for:
    - Analytics: ESS, Spectrum Computing, IOP with Hadoop & Spark, Watson Explorer
    - Hadoop & Spark: Spectrum Scale, Spectrum Symphony, Platform Cluster Manager, IOP with Hadoop and Spark
    - NoSQL: CAPI-enabled Power System
    - SAP HANA: ESS certified, IBM Power certified

- 7. Spectrum Scale HDFS Transparency Hadoop Connector

- 8. We are transforming
  - Before: the connector runs inside every Hadoop node
    - Storage and compute are integrated in the same node
    - The compute node has to access the Spectrum Scale file system directly
    - The system has to be built from scratch
    - Stack on each node: Hadoop client, Hadoop FileSystem API, connector on Spectrum Scale API & POSIX, Spectrum Scale
  - After: HDFS Transparency connector service
    - Stack on each compute node: Hadoop client, Hadoop FileSystem API, HDFS client
    - Compute nodes talk HDFS RPC over the network to Spectrum Scale connector service nodes
    - Stack on each connector service node: connector on Spectrum Scale API & POSIX, Spectrum Scale
  - Benefits
    - Dedicated Hadoop compute cluster
    - Compatible with more Hadoop components and features, such as Impala, distcp and webhdfs
    - Enhanced security, with full Kerberos support
    - Improved performance by leveraging the HDFS client cache

- 9. Based on Hadoop RPC
  - Integrated system for data analytics
    - Seamlessly supports Hadoop applications without any changes
    - No need to mount the file system on compute nodes
    - Leverages the HDFS client cache to improve performance and security
  - How each HDFS RPC is served:

  | HDFS RPC | Similar FileSystem method | Implemented by |
  | --- | --- | --- |
  | `rpc getBlockLocations(GetBlockLocationsRequestProto);` | getFileBlockLocations | Spectrum Scale API |
  | `rpc getServerDefaults(GetServerDefaultsRequestProto);` | get default value from configuration file | Spectrum Scale API |
  | `rpc create(CreateRequestProto) returns(CreateResponseProto);` | create | POSIX I/O interface |
  | `rpc append(AppendRequestProto) returns(AppendResponseProto);` | append | POSIX I/O interface |
  | `rpc setReplication(SetReplicationRequestProto)` | setReplication | Spectrum Scale API |
  | `rpc setPermission(SetPermissionRequestProto)` | setPermission | Spectrum Scale API |
  | `rpc setOwner(SetOwnerRequestProto) returns(SetOwnerResponseProto);` | setOwner | Spectrum Scale API |
  | `rpc setSafeMode(SetSafeModeRequestProto)` | HDFS specific, can skip | |
  | …… | …… | |
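
The RPC mapping on this slide can be read as a dispatch table: each HDFS RPC is served by the Spectrum Scale API, served by POSIX I/O, or skipped as HDFS specific. The following is a minimal Python sketch of that idea only. The names `Backend`, `RPC_BACKENDS` and `backend_for` are hypothetical and are not part of HDFS Transparency; the table covers just the RPCs listed on the slide.

```python
from enum import Enum


class Backend(Enum):
    """Where a request is served, using the slide's three categories."""
    SPECTRUM_SCALE_API = "Spectrum Scale API"
    POSIX_IO = "POSIX I/O interface"
    SKIPPED = "HDFS specific, can skip"


# RPC name -> backend, copied from the slide (hypothetical data structure).
RPC_BACKENDS = {
    "getBlockLocations": Backend.SPECTRUM_SCALE_API,
    "getServerDefaults": Backend.SPECTRUM_SCALE_API,
    "create": Backend.POSIX_IO,
    "append": Backend.POSIX_IO,
    "setReplication": Backend.SPECTRUM_SCALE_API,
    "setPermission": Backend.SPECTRUM_SCALE_API,
    "setOwner": Backend.SPECTRUM_SCALE_API,
    "setSafeMode": Backend.SKIPPED,
}


def backend_for(rpc_name: str) -> Backend:
    """Look up which backend serves an RPC; unknown RPCs are not guessed."""
    try:
        return RPC_BACKENDS[rpc_name]
    except KeyError:
        raise NotImplementedError(
            f"RPC not covered by this sketch: {rpc_name}"
        ) from None
```

For example, `backend_for("create")` returns `Backend.POSIX_IO`, while an RPC that the slide elides raises `NotImplementedError` instead of being assigned a backend.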

- 10. Data Integration with HDFS
  - distcp (https://hadoop.apache.org/docs/r1.2.1/distcp.html)
    - Uses MapReduce for large inter/intra-cluster data copying between native HDFS and Spectrum Scale
    - Uses the same semantics as copying data between HDFS clusters
    - Locality-aware, and the copied data is also balanced
  - Federation (https://hadoop.apache.org/docs/r2.7.2/hadoop-project-dist/hadoop-hdfs/Federation.html)
    - Spectrum Scale storage can coexist with native HDFS storage to serve the same Hadoop workload
    - Spectrum Scale storage can be added to existing HDFS storage without any change
    - viewFs is used to provide a single namespace view
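
To illustrate the single namespace view that viewFs provides in a federated setup, here is a minimal Python sketch. It builds the `fs.viewfs.mounttable.<table>.link.<path>` properties that Hadoop's viewFs reads, and resolves a client path to its backing namespace. The host names `nn-native` and `nn-scale`, the mount points, and the helper names are assumptions made for the example, not values from the presentation.

```python
def viewfs_mount_table(links, table="default"):
    """Build viewFs link properties for a client-side mount table."""
    return {
        f"fs.viewfs.mounttable.{table}.link.{mount}": target
        for mount, target in links.items()
    }


def resolve(path, links):
    """Map a path in the unified view to the URI of its backing namespace."""
    for mount, target in links.items():
        prefix = mount.rstrip("/")
        if path == prefix or path.startswith(prefix + "/"):
            # Keep the part of the path below the mount point.
            return target.rstrip("/") + path[len(prefix):]
    raise FileNotFoundError(f"no mount point covers {path}")


# Hypothetical layout: one native HDFS namespace and one namespace served
# by an HDFS Transparency NameNode in front of Spectrum Scale.
LINKS = {
    "/native": "hdfs://nn-native:8020/native",
    "/scale": "hdfs://nn-scale:8020/scale",
}
```

With this layout, `resolve("/scale/logs/a.txt", LINKS)` yields a URI on the `nn-scale` NameNode, so applications keep using one namespace while the data lives on either storage system.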

- 11. Advanced Data Management and Protection for Analytics

- 12. Locality Awareness Data Recovery
  - Map/Reduce jobs
    - A Map/Reduce job comprises tasks, and each task processes one HDFS data block
    - Disk or node failures have little impact on data locality in native HDFS
  - HBase HFile data locality
    - If a node fails, the HBase region server on that node goes down
    - Its region files are assigned to other region servers for service
    - With native HDFS, the data locality of region files is restored only when a major compaction happens
  - Hive table files
    - Native HDFS has no easy way to restore or control the data locality of Hive table files
  - Easy data locality restore in Spectrum Scale
    - WADFG / mmchattr / mmrestripefile
  - Diagram (nodes A, B, C, D)
    - Node A holds all data for an HBase region or Hive table file, for better performance
    - When node A fails, the data has to migrate to other nodes to stay safe
    - Native HDFS does not recover the data to the original node
    - Spectrum Scale: locality-aware data recovery

- 13. Rack-awareness Data Locality for Shared Storage, IBM DeepFlash and ESS
  - Benefits configurations with:
    - More than one rack in the file system
    - One shared storage system (or IBM DeepFlash or ESS) per rack
    - A top-of-rack switch network
  - Advantages:
    - Map/Reduce tasks can be scheduled to the rack where the input data is located
    - Less network traffic across the inter-rack switch
    - Performance gain from fast reads within the local rack
  - Configuration: `<property><name>gpfs.storage.type</name><value>rackaware</value></property>`
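
The scheduling benefit described on this slide can be sketched as a simple preference rule: run a task in the rack that holds its input, and fall back to another rack only when no local node is free. The Python below is an illustration under that assumption, not the actual Hadoop scheduler or the HDFS Transparency implementation; `pick_node` and the node and rack names are hypothetical.

```python
def pick_node(input_rack, free_nodes):
    """Choose a compute node for a task, preferring the input data's rack.

    free_nodes is a list of (node_name, rack_name) pairs.
    Returns (node_name, locality), where locality is "rack-local",
    "off-rack", or "none" when no node is free.
    """
    for node, rack in free_nodes:
        if rack == input_rack:
            return node, "rack-local"
    if free_nodes:
        # No free node in the data's rack: the read crosses the
        # inter-rack switch, which rack awareness tries to avoid.
        return free_nodes[0][0], "off-rack"
    return None, "none"


free = [("node-1", "rack-a"), ("node-7", "rack-b")]
choice = pick_node("rack-b", free)
```

Here `choice` is `("node-7", "rack-local")`, because `node-7` sits in the rack whose shared storage holds the input.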

- 14. Transparent Data Compression
  - Transparent compression for the HDFS Transparency, Object, NFS, SMB and POSIX interfaces
  - Improved storage efficiency
    - Typically 2x improvement in storage efficiency
  - Improved I/O bandwidth
    - Reading and writing compressed data reduces load on storage
  - Improved client-side caching
    - Caching compressed data increases apparent cache size
  - Vision
    - Per-file compression
    - Use policies
    - Compress low-use data
  - Data path shown in the diagram: traditional applications (POSIX), Object/NFS/SMB and HDFS Transparency, then the Spectrum Scale node (native compression), then the block device / NSD disk

- 15. Transparent Data Encryption
  - Native encryption of data at rest
    - Files are encrypted before they are stored on disk
    - Keys are never written to disk
    - No data leakage if disks are stolen or improperly decommissioned
  - Secure deletion
    - Ability to destroy arbitrarily large subsets of a file system
    - No "digital shredding", no overwriting: secure deletion is a cryptographic operation
  - Use Spectrum Scale policy to encrypt (or exclude) files in a fileset or file system
  - Generally < 5% performance impact (chart: sequential read, sequential write)
  - Data path shown in the diagram: traditional applications (POSIX), Object/NFS/SMB and HDFS Transparency, then the Spectrum Scale node (encryption/decryption), then the block device / NSD disk
  - Key managers shown: IBM Security Key Lifecycle Manager, Vormetric Key Manager

- 16. Spectrum Scale for Hadoop Security and Audit
  - Spectrum Scale HDFS Transparency + IBM Security Guardium Data Activity Monitor
    - Spectrum Scale enables IBM Security Guardium Data Activity Monitor for auditing Hadoop data access
  - Spectrum Scale HDFS Transparency supports Ranger
    - Ranger is open source and is used for security and audit
    - IBM IOP 4.2 includes this component
  - Spectrum Scale HDFS Transparency fully supports Kerberos
  - ACL
  - Supports NameNode Block Access Token
  - Stack shown in the diagram: Spectrum Scale, Ranger, HDFS Transparency, and the Hadoop platform (YARN, MapReduce, Spark, HBase, Zookeeper, Flume, Hive, Pig, Sqoop, HCatalog, Solr/Lucene, Kafka)

- 17. Transparent Cloud Tiering: Expanding Storage to the Cloud
  - Archive to object or tape
  - Tier to Spectrum Archive, including dual copies for long-term retention
  - Tier to Cleversafe as an option for disk at lower TCO
  - Cognitive engine to move data based on "heat" or policy, even across the Internet
  - High-capacity tiered storage with mixed data access requirements for high performance and retention
    - Media storage for raw, processing, storage
    - Digital surveillance for ingest, plus retention
    - Technical computing for clusters, data analytics and aged results
  - Tiers shown in the diagram: system pool (flash), gold pool (disk), then tape library or cloud object storage
  - One cloud or tape per file system

- 18. Data DR Derived from Spectrum Scale
  - HBase cluster replication
    - WAL-based and asynchronous
    - All nodes in both clusters must be reachable from each other
    - Both clusters can provide HBase service at the same time
    - Only available for HBase
    - Supported over HDFS Transparency + Spectrum Scale
  - Spectrum Scale AFM IW-based replication
    - AFM IW-based replication from the production cluster (cache site) to the standby cluster (home site)
    - Both sites should be Spectrum Scale clusters for Hadoop application failover
    - Only one cluster can provide HBase service (region server assignment conflicts if HBase is up on both clusters)
  - Spectrum Scale Active-Active DR
    - Two replicas in the production cluster and another replica in the standby cluster
    - Dedicated network between the two clusters (10 Gb bandwidth)
    - Distance between the two clusters is less than 100 km
    - Can achieve RPO=0 in DR
  - Diagram: two Spectrum Scale + HDFS Transparency sites connected by AFM over a WAN, and two sites connected by a dedicated network

- 19. Spectrum Scale Data Analytics Solution Deployment Models

- 20. In-place Data Analytics
  - Traditional analytics solution: data is ingested from the existing system into a separate analytics system, and results are exported back
    - The analytics system is built from scratch, for storage as well as compute
    - Storage and compute resources are added at the same time, whether or not both are required
    - Native HDFS does not support native POSIX
    - Lacks enterprise data management and protection capabilities
  - In-place analytics solution: the analytics system reads the existing Spectrum Scale file system (file and object) through HDFS Transparency
    - Can leverage existing Spectrum Scale storage
    - Unified interface for file and object analytics
    - POSIX compatibility
    - Mature enterprise data management and protection solutions derived from the Spectrum Storage family and third-party components
    - Transparent Cloud Tiering

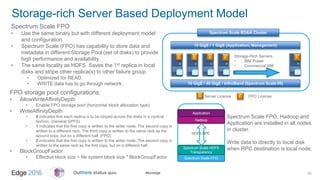

- 21. Storage-rich Server Based Deployment Model
  - Spectrum Scale BD&A cluster (from the diagram)
    - Storage-rich servers: IBM Power or commercial x86
    - Licenses: S = Server License, F = FPO License
    - Networks: 10 GigE / 40 GigE / InfiniBand for Spectrum Scale I/O; 10 GigE / 1 GigE for application and management
    - Stack on each node: Hadoop application, HDFS RPC, Spectrum Scale HDFS Transparency, Spectrum Scale FPO
  - Spectrum Scale FPO, Hadoop and the application are installed on all nodes in the cluster
  - Data is written directly to local disk when the RPC destination is the local node
  - Spectrum Scale FPO
    - Uses the same binary, but with a different deployment model and configuration
    - Can store data and metadata in different storage pools (sets of disks) to provide high performance and availability
    - Same locality as HDFS: saves the first replica on local disks and stripes the other replica(s) to other failure groups
    - Optimized for READ
    - WRITE data has to go through the network
  - FPO storage pool configurations
    - allowWriteAffinity
      - Enables the FPO storage pool (horizontal block allocation type)
    - writeAffinityDepth
      - 0: each replica is striped across the disks in a cyclical fashion (general GPFS)
      - 1: the first copy is written to the writer node, the second copy to a different rack, and the third copy to the same rack as the second copy but on a different half (FPO)
      - 2: the first copy is written to the writer node, and the second copy to the same rack as the first copy but on a different half
    - blockGroupFactor
      - Effective block size = file system block size * blockGroupFactor
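
The FPO pool settings on this slide lend themselves to a small worked example. The Python sketch below computes the effective block size from the slide's formula and returns the replica placement the slide gives for each write affinity depth. The function names and the sample values (a 1 MiB file system block size and a block group factor of 128) are assumptions chosen for illustration, not recommendations from the presentation.

```python
MIB = 1024 * 1024


def effective_block_size(fs_block_size, block_group_factor):
    """Effective block size = file system block size * block group factor."""
    if fs_block_size <= 0 or block_group_factor <= 0:
        raise ValueError("both values must be positive")
    return fs_block_size * block_group_factor


# Replica placement per write affinity depth, paraphrased from the slide.
REPLICA_PLACEMENT = {
    0: ["every replica: striped across the disks in a cyclical fashion"],
    1: [
        "copy 1: writer node",
        "copy 2: a different rack",
        "copy 3: same rack as copy 2, on a different half",
    ],
    2: [
        "copy 1: writer node",
        "copy 2: same rack as copy 1, on a different half",
    ],
}


def describe_placement(depth):
    """Return the placement rules for a write affinity depth of 0, 1 or 2."""
    if depth not in REPLICA_PLACEMENT:
        raise ValueError("write affinity depth must be 0, 1 or 2")
    return REPLICA_PLACEMENT[depth]


# Sample values only: 1 MiB blocks grouped 128 at a time.
size_in_mib = effective_block_size(1 * MIB, 128) // MIB
```

With these sample values `size_in_mib` is 128, so data is laid out in 128 MiB chunks, which is in the range of block sizes commonly configured for HDFS.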

- 22. SAN Shared Storage Based Deployment Model
  - Compute nodes only need Hadoop components (such as HBase) and the HDFS client installed to serve analytics workloads
  - The Spectrum Scale cluster is built on SAN shared storage
  - The Spectrum Scale HDFS Transparency connector (NameNode and DataNode) is deployed on Spectrum Scale NSD servers or on dedicated client nodes
  - Software-defined storage supports various platforms, such as IBM Power and commercial x86, and various storage systems, such as IBM DeepFlash 150 and other RAID systems
  - No data recovery is needed when a compute node or a Spectrum Scale Transparency node (NameNode or DataNode) fails
  - Storage capacity, I/O performance and analytics performance are easy to extend separately
  - SAN shared storage provides high performance and high availability through FC and InfiniBand (with RDMA) and Spectrum Scale replication
  - Diagram
    - Compute nodes: IBM Power or x86, running only Hadoop services and the HDFS client
    - Spectrum Scale BD&A cluster: HDFS Transparency NameNode/DataNode on IBM Power or x86
    - RAID storage attached through an FC switch / InfiniBand (RDMA); 10 GigE / 1 GigE toward the compute nodes
    - Licenses: S = Server License, C = Client License

- 23. IBM DeepFlash 150 Based Deployment Model
  - IBM DeepFlash 150
    - 3U chassis starting at 128TB up to 512TB
    - 8 to 64 8TB flash cards
    - Up to 2M raw IOPS
    - Sub-1ms latency
    - 12GB/s throughput
  - Data platform for analytics (Hadoop, Spark, SAP HANA)
    - Faster time to insights
    - Load and offload data to and from memory faster
    - Shared data platform for multiple instances and forms of analytics
  - Diagram
    - Compute nodes: IBM Power or x86, running only Hadoop services and the HDFS client
    - Spectrum Scale BD&A cluster: HDFS Transparency NameNode/DataNode on IBM Power or x86 (S = Server License)
    - IBM DeepFlash 150 (RAID storage) attached through SAS connections; 10 GigE / 1 GigE toward the compute nodes

- 24. ESS Based Deployment Model
  - Compute nodes only need Hadoop components (such as HBase) and the HDFS client installed to serve analytics workloads
  - The Spectrum Scale cluster is built on IBM Elastic Storage Server (ESS)
  - The Spectrum Scale HDFS Transparency connector (NameNode and DataNode) is deployed on dedicated client nodes that have joined the Spectrum Scale cluster in ESS
  - ESS provides high performance and reliability, powered by Spectrum Scale RAID
    - Software declustered RAID
    - Erasure code ready
    - End-to-end checksum
    - Supports FDR/QDR InfiniBand and RDMA
  - ESS can also serve other workloads, such as OpenStack, at the same time
  - Diagram
    - Compute nodes: IBM Power or x86, running only Hadoop services and the HDFS client
    - Spectrum Scale BD&A cluster nodes (C = Client License) connect to ESS (E) over InfiniBand (RDMA) / 40 GigE / 10 GigE; 10 GigE / 1 GigE toward the compute nodes

- 25. Solutions dedicated to Client's Success

- 26. Analytics on Docker with Spectrum Scale
  - Unified data plane for Docker's orchestration
  - Multi-tenant, storage-centric services in the cloud, managed by Docker's orchestration
  - A GPFS file (with file clone) serves as the runtime image
  - A GPFS fileset serves as a shared volume between Docker containers
  - Shared filesets build up the virtual data planes across containers
  - The Spectrum Scale control plane can transparently control the virtual data plane
  - Archive, snapshot and DR can be done at the fileset level
  - Diagram labels: IBM Spectrum Computing; image files A, B, C; Docker containers A, B, C, each with shared and private storage; filesets X, Y, Z; virtual data plane across Docker instances; single namespace over disk, tape, ESS and a share-nothing cluster; Spectrum Scale AFM; Spectrum Scale TCT

- 27. Solutions for Data Warehouse - SAP HANA
  - Spectrum Scale FPO + SAP HANA
    - Three nodes (node01, node02, node03), each with HDD and flash, share one GPFS file system
    - DB partition 1 (data01, log01): index server, statistic server, SAP HANA® studio, SAP HANA® DB worker node
    - DB partition 2 (data02, log02): index server, statistic server, SAP HANA® DB worker node
    - DB partition 3 (data03, log03): index server, statistic server, SAP HANA® DB worker node
  - Key advantages from Spectrum Scale
    - Disk failure tolerance
    - Node failure tolerance
    - Data locality and data locality restore
    - Fast repair when bringing a down disk back
  - IBM Elastic Storage Server is SAP HANA certified enterprise storage

- 28. Solutions for Data Warehouse - IBM DB2
  - Spectrum Scale + IBM DB2 DPF / DashDB
  - Each data module
    - Has a set of local disks
    - Hosts a set of partitions (1-N)
  - Three-module building block
    - GPFS file system over the building block
    - One or two replicas
    - Failure of any one failure group does not affect GPFS operation
  - High availability
    - Distributed or mutual failover
    - Data fully visible across the DPF cluster
    - Data locally available within the building block
  - Optional: local RAID for disks
    - Avoids rebuilds due to disk failure

- 29. Bill Analytics System for a Telecom Operator
  - Data flow (from the diagram)
    - Data collection sub-system: network equipment data collection; Internet activity capture and pre-processing (merge, etc.)
    - ETL system
    - Two Power Linux clusters running Spectrum Computing and Spectrum Scale FPO, joined by Spectrum Scale multi-cluster: one built in 2013 running Hadoop 1.x, one built in 2015 running Hadoop 2.x
    - Workloads: HBase, MapReduce, Spark, Hive
    - Aggregated results are imported into a NoSQL database
    - Consumers: real-time sales/marketing sub-system, customer behavior subsystem, other business sub-systems
  - Scale
    - More than 100 Power Linux nodes
    - Processes 40 TB of data each day
    - Spectrum Scale FPO manages more than 2600 disks (2.6 PB raw capacity) in this system
  - Client IT Architecture: "Spectrum Scale and Spectrum Computing is the most stable Big Data system we have. Spectrum Scale POSIX access interface and Multi-cluster feature resolves data balance issue and provides 5X performance improvement in data loading phase."

- 30. ESS Services Hadoop and HPC Mixed Workload
  - Diagram labels: IBM Power E880; IBM Elastic Storage Server (ESS); compute nodes; HDFS Transparency nodes; Hadoop Dev cluster, Hadoop Test cluster, Hadoop PROD cluster and HPC cluster; Spectrum Scale cluster
  - Key advantages
    - IOP with value-add (Spectrum Scale + Spectrum Computing)
    - The same ESS storage is shared by the Hadoop clusters and the HPC cluster
    - Several Hadoop clusters are deployed over one file system
    - Mixed workloads, with storage and computing capability scaled separately

- 33. Thank You