
SplunkLive! Frankfurt 2018 - Monitoring the End User Experience with Splunk

- 1. Monitoring the End User Experience with Splunk: Gain insight into both the experience and the “why” behind the experience. Dirk Nitschke | Senior Sales Engineer | 10 April 2018 | Frankfurt

- 2. During the course of this presentation, we may make forward-looking statements regarding future events or the expected performance of the company. We caution you that such statements reflect our current expectations and estimates based on factors currently known to us and that actual events or results could differ materially. For important factors that may cause actual results to differ from those contained in our forward-looking statements, please review our filings with the SEC. The forward-looking statements made in this presentation are being made as of the time and date of its live presentation. If reviewed after its live presentation, this presentation may not contain current or accurate information. We do not assume any obligation to update any forward-looking statements we may make. In addition, any information about our roadmap outlines our general product direction and is subject to change at any time without notice. It is for informational purposes only and shall not be incorporated into any contract or other commitment. Splunk undertakes no obligation either to develop the features or functionality described or to include any such feature or functionality in a future release. Splunk, Splunk>, Listen to Your Data, The Engine for Machine Data, Splunk Cloud, Splunk Light and SPL are trademarks and registered trademarks of Splunk Inc. in the United States and other countries. All other brand names, product names, or trademarks belong to their respective owners. ©2018 Splunk Inc. All rights reserved. Forward-Looking Statements

- 4. Complexity – Difficult Issues for Everyone. APP MANAGERS / OPERATIONS: ▶ Is the problem with the app, the network or the backend system? ▶ Why are my specialists all saying “it works” but the application is down? ▶ How does performance compare mobile vs. web vs. desktop? DEVELOPERS: ▶ How can I deliver new releases faster? ▶ How can I see how my applications are working in production? ▶ How can other developer, test and monitoring tools improve my coding? DEVOPS, SRE, PERF MANAGER: ▶ How do I ensure new releases don’t break critical apps? ▶ How can I do “full stack” monitoring easily? ▶ What changes will optimize application and infrastructure performance? LINE OF BUSINESS: ▶ How are customers using my app? How is it impacting my business? ▶ Which features should I prioritize for future versions? ▶ Are my customers impacted by outages and performance issues?

- 5. Infrastructure and Application Silos: Web Servers, Legacy Systems, End Users, Network/Load Balancing, Messaging, Databases, Java, .NET, PHP, etc., App Servers, Security, Virtualization, Containers, Servers, Storage

- 6. What Is Needed? Web Servers, Legacy Systems, End Users, Network/Load Balancing, Messaging, Databases, Java, .NET, PHP, etc., App Servers, Security, Virtualization, Containers, Servers, Storage. KPIs, SLOs, service visualization, notable events affecting SLAs. Mobile intelligence, wire data, deep integration w/ AWS. Correlation with business data to enable context. Platform: Universal indexing + analytics of data across silos

- 7. ▶ Ingest data once – single source of truth across teams ▶ Analyze machine data across entire stack ▶ Integrate data from other management tools ▶ Connect machine data to business services ▶ Identify root cause of problems quickly ▶ Apply best practices in analytics to predict changes in reliability and service usage Reliability Requires a Platform Approach OTHER TEAMS PRODUCT MANAGERS/ BUSINESS OWNERS DEVOPS, SRE PERF MANAGER APP MANAGERS/ OPERATIONS DEVELOPERS

- 8. A Platform Approach for Application Performance Analytics. Infrastructure Layer: Network (Packet, Payload, Traffic, Utilization, Perf); Storage (Utilization, Capacity, Performance); Server (Performance, Usage, Dependency). Application Layer: User Experience (Usage, Response Time, Failed Interactions); Byte Code Instrumentation (Usage, Experience, Performance, Quality). Business Performance: Corporate Data, Intake, Output, Throughput. Splunk Approach: ▶ Single repository for ALL data ▶ Data in original raw format ▶ Machine learning ▶ Simplified architecture ▶ Fewer resources to manage ▶ Collaborative approach. MACHINE DATA

- 9. Apps for Application Monitoring: *nix, Splunk Stream, Real User Monitoring, 300+ IT Ops and App Delivery Apps and Add-Ons, Splunk for Mobile Intelligence, Splunk Apps for Amazon Web Services and Microsoft Exchange

- 10. Gaining Transaction Insight From Your Network: Splunk Stream ▶ Gain real-time insight into application performance and customer experience ▶ Attain visibility into cloud services ▶ Deliver immediate insights from streaming network data ▶ Network-based packet capture does not require DBA or other admin tools and doesn’t affect performance

- 11. HTTP Event Collector – Agentless Fast Insight ▶ Immediate visibility to mobile app crashes ▶ Insight into mobile app use – MAU/DAU, device usage, network insight ▶ Transaction performance insight curl -k https://<host>:8088/services/collector -H 'Authorization: Splunk <token>' -d '{"event":"Hello Event Collector"}' Applications IoT Devices Agentless, direct data onboarding via a standard API Scales to Millions of Events/Second
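The curl call on slide 11 can also be issued from application code. Below is a minimal Python sketch that uses only the standard library. It builds the same POST to `/services/collector` with the `Authorization: Splunk <token>` header. The host name, the token, the optional `sourcetype` value and the helper name `build_hec_request` are placeholders or hypothetical, not part of the talk.

```python
import json
import urllib.request
from typing import Any, Optional


def build_hec_request(host: str, token: str, event: Any, port: int = 8088,
                      sourcetype: Optional[str] = None) -> urllib.request.Request:
    """Build, but do not send, a POST request for the HTTP Event Collector."""
    payload = {"event": event}
    if sourcetype is not None:
        payload["sourcetype"] = sourcetype  # optional HEC metadata field
    return urllib.request.Request(
        f"https://{host}:{port}/services/collector",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # Same header format as the curl example: "Splunk <token>"
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder host and token; replace them with your own values.
req = build_hec_request("splunk.example.com", "<token>", "Hello Event Collector")
# urllib.request.urlopen(req)  # sends the event; needs a reachable HEC endpoint
```

To send the event, pass the request to `urllib.request.urlopen`. The slide's `curl -k` skips TLS certificate verification. This sketch leaves verification on, which is the safer default outside a lab setup.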

- 12. ▶ Immediate visibility to mobile app crashes ▶ Insight into mobile app use – MAU/DAU, device usage, network insight ▶ Transaction performance insights ▶ Correlate mobile with other data types for complete insight Gaining Insight on Your Mobile Apps

- 13. Splunk IT Service Intelligence Data-driven service monitoring and analytics Splunk IT Service Intelligence Time-Series Index Platform for Operational Intelligence Dynamic Service Models Schema-on-Read Data Model Common Information Model At-a-Glance Problem Analysis Early Warning on Deviations Event Analytics Simplified Incident Workflows

- 14. Splunk: Application Performance Analytics. End Users, Networking/Load Balancing, Web Servers, App Servers, Legacy Systems, Messaging, Databases, Security, Virtualization, Containers, Servers, Storage, Java, .NET, PHP, etc. ▶ Manage to KPIs, SLOs: isolate root cause and service impact ▶ Analytics for hybrid and cloud environments + microservices stacks ▶ Full stack monitoring that integrates your APM tool’s data ▶ Platform approach that spans technology and team silos

- 15. Splunk and APM

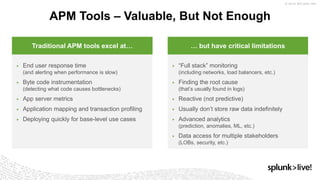

- 16. APM Tools – Valuable, But Not Enough. Traditional APM tools excel at… ▶ End user response time (and alerting when performance is slow) ▶ Byte code instrumentation (detecting what code causes bottlenecks) ▶ App server metrics ▶ Application mapping and transaction profiling ▶ Deploying quickly for base-level use cases. … but have critical limitations: ▶ “Full stack” monitoring (including networks, load balancers, etc.) ▶ Finding the root cause (that’s usually found in logs) ▶ Reactive (not predictive) ▶ Usually don’t store raw data indefinitely ▶ Advanced analytics (prediction, anomalies, ML, etc.) ▶ Data access for multiple stakeholders (LOBs, security, etc.)

- 17. Covering APM “Blind Spots”. Without Splunk: ▶ Some, but not all, of your apps are instrumented ▶ Other “off-the-shelf” apps can’t be instrumented with traditional APM ▶ Non-instrumented parts of your stack can’t be “seen”. Stack: Applications, business/mission services (APM-instrumented Application A, APM-instrumented Application B, Application C (not APM-instrumented), Application D (not APM-instrumented), Packaged Apps (SAP, PeopleSoft, etc.), Legacy Environments (AS400, Mainframe, ESBs, others)); App Server (WebLogic, JBoss EAP, WebSphere); Web Server (Apache, Tomcat); Database (Oracle, SQL Server, MySQL); Guest OS (Windows/Linux/*nix); Hypervisor (ESX, Hyper-V, Citrix); Physical Server (Dell, HP, Cisco blades or servers); SAN/NAS Storage (EMC, NetApp); Network; Load Balancers; Firewalls; AWS; Akamai; Log Analysis (System, Application, Security, etc.). With Splunk: ▶ End-to-end, holistic visibility into the complete service ▶ Insight across ALL data sources and applications ▶ PREDICTIVE analysis, before issues occur

- 18. ▶ Pull data from APM tools and provide events to APM tools ▶ Gain insight into EUM, application requests, app errors and correlate with logs all in one platform ▶ Reduce the “clicks” between spotting problems and finding root cause ▶ Forecast, predict and detect anomalies in APM data ▶ Integrate triage with non-application layers of the stack APM as a Data Source for Splunk

- 19. APM Tools ▶ Splunk Add-on and App for New Relic ▶ Splunk Add-on and App for AppDynamics ▶ Dynatrace App (provided by Dynatrace) Other Notable APM Apps ▶ Web Performance (based on boomerang.js) ▶ Splunk Mobile Intelligence (Splunk MINT) ▶ Splunk Stream splunkbase.splunk.com Splunk Apps for APM

- 20. Splunk Demo Presented by Buttercup Splunker

- 21. © 2018 SPLUNK INC. ▶ Ensures continuous uptime thanks to real-time operational insights into service and quality metrics ▶ Gains digital intelligence by comparing customer engagement across Zillow sites ▶ Improves DevOps collaboration for faster release cycles Gaining Real-Time Visibility Into Site Operations “Being part of the larger Splunk ecosystem is extremely valuable to us. We couldn't replicate that if we tried to build something on our own… We have given teams enough autonomy to create their own solutions on top of the Splunk platform. It's all predicated on the fact that Splunk is an enterprise-wide self-service utility now within Zillow.” – Director of Site Operations, Zillow ONLINE SERVICES – IT OPERATIONS, APPLICATION DELIVERY

- 22. ▶ Improving website uptime with real-time notifications ▶ Quickly and reliably delivering application features to users ▶ Uncovering business insights and improving the customer experience Democratizing Data to Ensure Great Customer Experience “I don’t believe there is any other product on the market that is able to quickly bring together diverse data sets, offer a powerful language to engineers for data analysis and then ultimately deliver beautiful, visual, actionable reports to the business users.” – Vice President of Engineering, Yelp Reservations TECHNOLOGY – IT OPERATIONS, BUSINESS ANALYTICS

- 23. Key Takeaways: 1. Transcend the silos 2. Ask any question of your data 3. Liberate your APM data

- 24. Thank You! Don't forget to rate this session on Pony Poll: https://ponypoll.com/frankfurt

Editor's Notes

- #2: Hello, my name is Dirk Nitschke and I work as a Sales Engineer at Splunk. This talk is titled “Monitoring the End User Experience with Splunk”. It is about more than gaining insight into the user experience. It is also about investigating the causes, that is, asking why an end user experiences something like an unpleasant response time when using an application. Based on these findings, we can try to improve the user experience. Where possible, we can also act proactively before the end user notices that a service is degraded. Why do this? The same service is often offered by several companies, and I will use the one that is easiest for me to use.

- #4: What do we need for this? First, of course, we need data about the user's experience. When we then ask why the experience is what it is, we also need data about the application itself. But what information and insights do we actually expect from this data?

- #5: As usual, it depends on your point of view. Here we list four groups of people who care about application performance.

As an application manager responsible for running an application, I need to make sure it does what it is supposed to do. When it doesn't, I want to detect the problem as quickly as possible and find out who is affected and how. Then I want to identify the cause, find a fix quickly and restore normal operation.

As an application developer, I want to finish new versions quickly, find bugs quickly and make sure test/build cycles run smoothly. I may also want to know how current versions actually behave in production, not just in my usually limited test environment. Or do you test new software versions in your production environment?

As a site reliability engineer, I look at the entire technology stack. My decisions must account for the effect a new release has on the entire production environment, and for which code changes will improve performance and experience, and by how much. So I need a view that covers more than a single application. It must also include the other applications it depends on, the endpoints where the users sit, and the infrastructure: both the hardware and the little helpers such as DNS. What happens when DNS is slow?

As the business owner, I want to know how many users use my service (the entire service, not just the application!) and how they use it. Are there features that nobody uses at all? What financial impact do poor response times, or even an outage of the service, have on my business?

- #6: The complexity of IT environments has always been a challenge. Current developments, such as containers or environments that combine on-premises and cloud components, don't make it any easier. Your IT environment probably only looks as tidy as this slide if you abstract it heavily and view it from cruising altitude. What matters is that an end user of an application or service today interacts, directly or indirectly, with components from all of these areas. Operating and monitoring these components is often organized in silos. Each silo has its own tools, and each faces major challenges when solving problems: identifying the root cause, sharing information between teams, and working without a common view of the overall environment, including the components in the cloud.

- #7: So what is needed? What would we like to have? First, a platform that can process and analyze any kind of machine data across the old silo boundaries. Based on this machine data, the platform assesses the health of entire services and reports deviations from the target state and outliers. Direct access to the machine data enables root cause analysis (RCA) when problems occur. The solution integrates data collected on-premises as well as data from cloud environments or mobile devices. It can also link this data to business data, which puts the data collected in IT systems into a business context. (WEB STORE EXAMPLE: the prices come from the database. From the IT data we can then see how much revenue was generated, and also how much revenue is sitting in filled shopping carts that were never checked out!)
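The web store example in note #7, with prices from the database and cart activity from IT data, comes down to a simple join. Below is a minimal sketch. It assumes hypothetical events with `session`, `action` (`add_to_cart` or `checkout`) and `product` fields, plus a price lookup in cents that stands in for the database. As a simplification, every cart in a session that reached checkout counts as revenue.

```python
from collections import defaultdict


def revenue_and_abandoned(events, prices):
    """Return (revenue, abandoned_cart_value) in the same unit as `prices`.

    events: dicts with 'session' and 'action' ('add_to_cart' or 'checkout');
            add_to_cart events also carry 'product' (hypothetical schema).
    prices: product -> price, standing in for the price table in the database.
    """
    carts = defaultdict(list)   # session -> products added to the cart
    checked_out = set()         # sessions that reached checkout
    for e in events:
        if e["action"] == "add_to_cart":
            carts[e["session"]].append(e["product"])
        elif e["action"] == "checkout":
            checked_out.add(e["session"])

    revenue = abandoned = 0
    for session, products in carts.items():
        value = sum(prices[p] for p in products)
        if session in checked_out:
            revenue += value
        else:
            abandoned += value  # filled cart that was never checked out
    return revenue, abandoned
```

Integer cents avoid floating-point rounding when the totals are compared or summed further.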

- #8: A platform approach like this has several advantages: * Data is ingested only once instead of being sent to several systems, so all teams share a single “source of truth”. * Data can be analyzed across the entire technology stack, and data from tools already in place can be integrated as well. * A central view usually makes root causes faster to find than switching between several different tools.

- #9: Applied to application performance analytics, this means the following. In the application layer, we need data about the user experience: how the application is used, what response times occur and which interactions fail. Data captured through byte code instrumentation gives deeper insight into how often individual methods are called and how long they run. On the infrastructure side, we are talking about data from servers, storage and network components. All of this is collected centrally in Splunk. Splunk stores the data in its original format and keeps it for as long as you want. Different groups can then use and analyze the data for different use cases, and each user gets the view of the shared data that is relevant to them. This can be a simple query, such as the number of users who visited my web shop in the last hour. More complex analyses are possible too, such as predicting values (for example, the expected number of users based on historical trends) or classifying users by their purchasing behavior. Overall, this reduces the number of tools in use and therefore simplifies the overall architecture.
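Note #9 gives two example questions: how many distinct users visited in the last hour, and how many users to expect based on history. Both can be sketched as follows. This assumes hypothetical `(timestamp, user)` events and a list of hourly counts. The forecast is a plain seasonal-naive average of the same hour on previous days. It is an illustration only, not the algorithm behind Splunk's own forecasting features.

```python
from datetime import datetime, timedelta
from statistics import mean


def distinct_users_last_hour(events, now):
    """Count distinct users among (timestamp, user) events in (now - 1h, now]."""
    cutoff = now - timedelta(hours=1)
    return len({user for ts, user in events if cutoff < ts <= now})


def seasonal_naive_forecast(hourly_counts, season=24, periods=7):
    """Forecast the next hour as the mean of the same hour in up to `periods` past days.

    hourly_counts is ordered oldest to newest, one value per hour.
    """
    n = len(hourly_counts)  # index of the hour being forecast
    samples = [hourly_counts[n - k * season]
               for k in range(1, periods + 1) if n - k * season >= 0]
    if not samples:
        raise ValueError("need at least one full season of history")
    return mean(samples)


now = datetime(2018, 4, 10, 12, 0)
events = [
    (now - timedelta(minutes=10), "alice"),
    (now - timedelta(minutes=20), "alice"),
    (now - timedelta(minutes=30), "bob"),
    (now - timedelta(hours=2), "carol"),  # outside the one-hour window
]
print(distinct_users_last_hour(events, now))  # 2
```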

- #10: Which tools can I use to monitor applications with Splunk? Put differently, how do we get the required data into Splunk? We want to monitor the entire technology stack, not just a single application but also the components it depends on. Typically that means databases and middleware, but also infrastructure components such as the operating system, virtualization, network and storage. And don't forget information about any cloud service in use. For many of these components, Splunk offers prebuilt extensions, called apps or add-ons, that simplify both collecting and analyzing the data. On the left, for example, is the Splunk Add-on for Amazon Web Services with its corresponding app, which collect and visualize data from AWS. On the far right are example extensions for VMware, databases, Windows and UNIX operating systems, and the typical web and application servers. And yes, data generated by specialized APM tools can also be used in Splunk. For applications whose source code you can access, the Splunk HTTP Event Collector helps, and for mobile apps specifically there is Splunk MINT (Splunk for Mobile Intelligence). It is not always possible to install software such as the Splunk Universal Forwarder on a device or to query data remotely. Some applications cannot or should not be instrumented, and you may prefer to collect data passively rather than interfere with the application. In that case, Splunk Stream may be of interest.

- #11: Who already knows Splunk Stream? Splunk Stream lets you use the content of network packets as a data source. Network traffic is arguably the ultimate source for examining how components communicate with each other. Sometimes it is the only data source we have, for example when we cannot install software like the Splunk Universal Forwarder on a system. Network data contains a lot of information. From HTTP connections, for instance, we can extract information useful for operations, such as performance metrics like round-trip time and request response times. The developers of a web application care more about which pages are requested and in what order. The owner of the whole web shop, in turn, is more interested in which products were sold (or not sold), which shopping carts were abandoned, and which customers are browsing the shop.
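Note #11 describes two views of the same HTTP transaction records: the developer's view (which pages are requested, in what order) and the operations view (response times). Below is a minimal sketch that derives both. It assumes hypothetical records with `session`, `time`, `uri` and `response_ms` fields. The field names that Splunk Stream actually produces may differ.

```python
from collections import defaultdict


def page_paths(http_events):
    """Per session, the requested URIs in time order (the developer's view)."""
    hits = defaultdict(list)
    for e in http_events:
        hits[e["session"]].append((e["time"], e["uri"]))
    return {s: [uri for _, uri in sorted(h)] for s, h in hits.items()}


def mean_response_ms_by_uri(http_events):
    """Average response time per URI (the operations view)."""
    totals = defaultdict(lambda: [0, 0])  # uri -> [sum of ms, request count]
    for e in http_events:
        totals[e["uri"]][0] += e["response_ms"]
        totals[e["uri"]][1] += 1
    return {uri: s / c for uri, (s, c) in totals.items()}
```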

- #12: The Splunk HTTP Event Collector makes it easy to send data to Splunk over HTTP or HTTPS without an additional agent or forwarder, and developers can integrate it into applications with little effort. It is easy to use, and it is also efficient, secure and highly scalable.

- #13: Suppose we distribute a mobile app. Then we care about the user experience. First, of course, the app's performance: what latency does network traffic show, and how does the user move through the app? What do the crash reports look like? When problems occur, do they correlate with the app version, the device, the device firmware or the mobile carrier? With Splunk MINT we provide an SDK for Android and iOS that makes it easy to send data from mobile apps to Splunk.
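Note #13 talks about correlating problems with the app version, which the demo later shows as "Error Rate by App Version". This is essentially a grouped ratio. Below is a minimal sketch. It assumes hypothetical per-session records with an `app_version` and an `error` flag, not the actual Splunk MINT data format.

```python
from collections import defaultdict


def error_rate_by_version(sessions):
    """Share of sessions with an error, per app version."""
    counts = defaultdict(lambda: [0, 0])  # version -> [errors, sessions]
    for s in sessions:
        c = counts[s["app_version"]]
        c[0] += 1 if s["error"] else 0
        c[1] += 1
    return {v: errors / total for v, (errors, total) in counts.items()}
```

The same grouping works for any other dimension from the note, such as device, firmware or carrier: swap the key.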

- #14: OK, now we have the data in Splunk. What do we do with it? As mentioned, an application does not live on its own. It is part of one or more business services, and it makes sense to monitor them end to end. Splunk IT Service Intelligence, an extension of Splunk, offers exactly these capabilities. We create a service model containing the individual components of a service, their dependencies and their key performance indicators. From these KPIs we calculate the health (or quality) of the service, and thresholds trigger notifications. Adaptive thresholds... outlier detection, and event grouping by service to prioritize notable events better. Splunk as the foundation also gives us seamless access to the raw events for root cause analysis when problems occur.
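Note #14 describes KPIs, thresholds and a service health score. The idea can be illustrated with a toy model. This is a hypothetical sketch, not the scoring used by Splunk IT Service Intelligence. In this model, adaptive thresholds are the mean plus 2 and 3 standard deviations of a KPI's history. Each KPI maps to a score of 100, 50 or 0, and service health is the importance-weighted average of those scores.

```python
from statistics import mean, pstdev


def adaptive_thresholds(history, warn_k=2.0, crit_k=3.0):
    """Derive (warning, critical) thresholds from a KPI's history: mean + k * stdev."""
    mu, sigma = mean(history), pstdev(history)
    return mu + warn_k * sigma, mu + crit_k * sigma


def kpi_score(value, warning, critical):
    """Map a KPI value (higher is worse) to a score: 100 normal, 50 warning, 0 critical."""
    if value >= critical:
        return 0
    if value >= warning:
        return 50
    return 100


def service_health(kpis):
    """Importance-weighted average of KPI scores.

    kpis: list of (value, warning, critical, importance) tuples.
    """
    total_importance = sum(imp for _, _, _, imp in kpis)
    weighted = sum(kpi_score(v, w, c) * imp for v, w, c, imp in kpis)
    return weighted / total_importance
```

Weighting by importance lets one critical KPI on a core component pull the service score down more than a warning on a minor one.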

- #15: To summarize: Splunk provides a platform for collecting and analyzing diverse machine data centrally, across teams. You can model key performance indicators and service level targets, including dependencies and their impact on services. You still have direct access to the collected raw events for root cause analysis. Data can be collected on-premises and from cloud environments, which gives insight into hybrid environments as well. Central data collection provides a view across the entire technology stack, including the data that APM tools or other systems collect.

- #17: APM tools are good at byte code instrumentation, application mapping and measuring end user response times. However, they do not cover the entire technology stack. That coverage matters, because only about 40% of outages are caused by application errors. Another 40% originate in the infrastructure, and 20% have other causes, such as power failures, DDoS attacks or outages of key services like DNS.

- #18: Not all of your applications are instrumented, and some cannot be instrumented at all, so they remain blank spots on the map. Splunk helps you fill in these blank spots and gain an end-to-end view across the entire technology stack, with a central view of all data sources. This data is useful for more than showing the current health of services or supporting root cause analysis. Splunk keeps historical data at full granularity for as long as you want. That lets you become proactive: you can run predictive analysis on the history and address problems before end users notice them.

- #19: For a complete picture, it therefore makes sense to bring data from APM tools into Splunk as well. Most of these tools have an interface for querying or exporting data directly. The data is then indexed in Splunk and can be correlated with other data, for example during root cause analysis. You can also analyze the APM data in more depth, make predictions, or find outliers in it.
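Note #19 mentions finding outliers in APM data. In its simplest form this is a z-score test. Below is a minimal sketch that runs on a hypothetical series of response times. The threshold of 3 standard deviations is a common convention, not a value from the talk.

```python
from statistics import mean, pstdev


def zscore_outliers(values, threshold=3.0):
    """Indices of values more than `threshold` standard deviations from the mean."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # constant series: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

With a single extreme point among n values, that point's z-score can be at most sqrt(n - 1) with the population standard deviation used here. A threshold of 3 therefore needs at least 11 values before it can fire. A median-absolute-deviation test is a more robust alternative for short series.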

- #20: For APM tools such as New Relic, AppDynamics or Dynatrace, ready-made integrations already exist that make it easy to bring their data into Splunk. The corresponding apps and add-ons are available free of charge on splunkbase.splunk.com. As described earlier, Splunk Stream, Splunk MINT and Web Performance (based on boomerang.js) also provide useful information.

- #21: Now let's move on to a demonstration. What could monitoring a web store with Splunk look like? This web store is currently being migrated to the cloud, and the person responsible for it is quite nervous about that. He looks at his Executive View -> sees a low number of successful purchases, poor revenue figures and a mediocre Apdex (who knows what Apdex is?). Apdex = (#good + 0.5 × #tolerated) / #total. Since we are in the middle of a migration, let's check whether anything stands out between on-premises and the cloud. The IT colleagues take a look. Everything looks fine: no differences between the cloud and the VMware environment. So we rule out the migration as the cause. How is the web shop doing? Long response times... in all tiers, above the previous day's average. I see errors in the Tomcat server's DB connections. On the DB side, I see errors saying that log files could not be written. Now I can take a closer look at the database (click on Database Tier!!!). Here I can confirm that free disk space is running out. The logs should actually be deleted regularly. But I see that the MySQL server has a problem with a locked account. Hmm, but the last errors were a while ago. Is there more? Let's look at the mobile app. How does it look there? End User Performance Metrics (MINT): Error Rate by App Version -> only 6.0! Latency per Platform -> Android. Latency per App Version -> 6.0!!! At the end: Mobile App Health, Latency by App Version -> version 6.0 has long response times.
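The Apdex score in the demo follows the usual Apdex convention. With a target response time T, requests at or below T count as satisfied ("good"), requests above T and up to 4T count as tolerating, and slower requests count as frustrated. Below is a minimal sketch. The 500 ms target in the example call is an arbitrary value, not one from the demo.

```python
def apdex(response_times_ms, t_ms):
    """Apdex = (satisfied + 0.5 * tolerating) / total, with target time t_ms."""
    if not response_times_ms:
        raise ValueError("no samples")
    satisfied = sum(1 for r in response_times_ms if r <= t_ms)
    tolerating = sum(1 for r in response_times_ms if t_ms < r <= 4 * t_ms)
    return (satisfied + 0.5 * tolerating) / len(response_times_ms)


print(apdex([100, 400, 900, 1500, 3000], t_ms=500))  # 0.6
```

Here two requests are satisfied, two are tolerating (both at or below 2000 ms) and one is frustrated, so the score is (2 + 0.5 × 2) / 5 = 0.6.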

- #22: Industry: Online services, Real estate. Splunk Use Cases: • Business analytics • IT operations • Application delivery. Challenges: Third-party and homegrown open-source solutions could not keep up with data volume; Needed to ensure uptime and maintain SLAs for issue resolution; Log files were not standardized and contained unnecessary information; Required robust monitoring and reporting solution; Lacked visibility into vast volumes of siloed log data; Needed the ability to create ad hoc reporting and provide visibility into the health of key transactions, end-to-end, in real time. Additional Business Impact: Provides self-service to teams across the enterprise to create their own solutions; Faster incident isolation and mitigation; Correlates user experience metrics with application performance for improved customer website experience. Splunk Products: • Splunk Enterprise • Splunk Cloud (Planned: Trulia,® Retsly®) • Splunk SDK. Data Sources: • Application logs • Server logs • Website logs including property listings • Data from API endpoints (JSON) • Mobile application data • Website performance data. Case Study: http://www.splunk.com/en_us/customers/success-stories/zillow.html Video: http://www.splunk.com/en_us/resources/video.psbW41MzE6QgFDBeMDL0VtdskHezTBDw.html Blog Post: http://blogs.splunk.com/2016/05/10/zillow-finds-its-way-home-with-splunk/?awesm=splk.it_w0S Sales Email template: https://splunk.my.salesforce.com/06933000001O5t0 SplunkLive! Seattle presentation: http://www.slideshare.net/Splunk/zillow-35018327 Splunk blog by Grigori Melnick: http://blogs.splunk.com/2015/05/13/zillow-developing-on-splunk/

- #23: Industry: Technology. Splunk Use Cases: IT operations, Application delivery, Business analytics. Challenges: Difficulty accessing and managing data across the enterprise; Open source platform lacked the stability and scalability needed to accommodate large and growing data volume; Accessing data to make actionable decisions took up to weeks; Developers lacked the infrastructure visibility needed to ensure smooth application delivery. Splunk Products: Splunk Enterprise, Splunk App for Unix and Linux, Splunk Machine Learning Toolkit, Splunk App for AWS. Data Sources: Application, Database, Third-party. Case Study: https://www.splunk.com/en_us/customers/success-stories/yelp.html

- #24: To summarize: monitoring a single application is not enough to monitor the end user experience. Break down the silos in your monitoring landscape and collect your data centrally in Splunk. That way you can use the full information content of your machine data. This also applies to data you currently collect with other tools: bring it into Splunk as well.

- #25: Thank you! As with the other sessions, you can give us feedback through our Pony Poll. The URL is in this QR code.