Spotfire Integration & Dynamic Output Creation


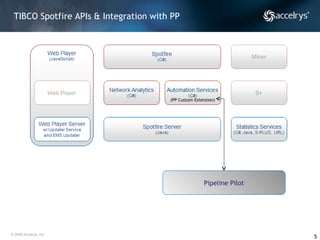

This document proposes a solution for dynamically creating or modifying Spotfire output (DXP) files through integration with Pipeline Pilot. The key points are:

1. Spotfire Automation Services and its APIs would allow a custom task to create or modify DXP files.
2. A request XML format would let Pipeline Pilot specify which type of DXP file to produce and which updates to apply.
3. The custom task would interpret the XML and use the Spotfire APIs to generate the requested DXP file.
4. A proof of concept uses sample data and components to demonstrate the end-to-end workflow between Pipeline Pilot and Spotfire.
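The request-and-interpret step described in points 2 and 3 can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the proposal's actual format: the `DxpRequest`, `DataTable`, and `Visualization` element names, their attributes, and the `parse_request` helper are all hypothetical, invented for illustration. The sketch only validates a request and turns it into a plan; it makes no Spotfire API calls, and the step that would apply the plan inside Automation Services is deliberately left out.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical request format: every element and attribute name here is an
# illustrative assumption, not a schema defined by Spotfire or Pipeline Pilot.
SAMPLE_REQUEST = """
<DxpRequest mode="create" template="templates/base.dxp" output="out/report.dxp">
  <DataTable name="Compounds" source="data/compounds.csv"/>
  <Visualization type="ScatterPlot" page="Overview" table="Compounds" x="LogP" y="MolWeight"/>
  <Visualization type="BarChart" page="Summary" table="Compounds" x="Series" y="Count()"/>
</DxpRequest>
"""


@dataclass
class Visualization:
    kind: str    # e.g. "ScatterPlot" or "BarChart"
    page: str    # page of the analysis that should hold the visual
    table: str   # name of a DataTable declared in the same request
    x: str
    y: str


@dataclass
class DxpPlan:
    mode: str                          # "create" a new DXP or "modify" an existing one
    output: str                        # where the resulting DXP should be saved
    template: Optional[str] = None     # optional starting template for "create"
    input_path: Optional[str] = None   # existing DXP to change for "modify"
    tables: Dict[str, str] = field(default_factory=dict)
    visualizations: List[Visualization] = field(default_factory=list)


def parse_request(xml_text: str) -> DxpPlan:
    """Validate a (hypothetical) request and turn it into an executable plan."""
    root = ET.fromstring(xml_text.strip())
    if root.tag != "DxpRequest":
        raise ValueError(f"unexpected root element: {root.tag}")

    mode = root.get("mode", "create")
    if mode not in ("create", "modify"):
        raise ValueError(f"unsupported mode: {mode}")
    if mode == "modify" and root.get("input") is None:
        raise ValueError("modify requests must name an existing DXP via 'input'")

    plan = DxpPlan(
        mode=mode,
        output=root.get("output", "output.dxp"),
        template=root.get("template"),
        input_path=root.get("input"),
    )
    for table in root.findall("DataTable"):
        plan.tables[table.get("name")] = table.get("source")
    for vis in root.findall("Visualization"):
        table_name = vis.get("table")
        # This sketch only accepts tables declared in the same request.
        if table_name not in plan.tables:
            raise ValueError(f"visualization refers to unknown table: {table_name}")
        plan.visualizations.append(
            Visualization(
                kind=vis.get("type"),
                page=vis.get("page", "Page"),
                table=table_name,
                x=vis.get("x"),
                y=vis.get("y"),
            )
        )
    return plan


if __name__ == "__main__":
    plan = parse_request(SAMPLE_REQUEST)
    print(plan.mode, "->", plan.output)
    for v in plan.visualizations:
        # A real custom task would call the Spotfire APIs here; this only reports.
        print(f"{v.page}: {v.kind} of {v.y} vs {v.x} from {v.table}")
```

Keeping validation separate from execution means a malformed request from Pipeline Pilot can be rejected before any Spotfire document is opened or changed.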



Ad

More Related Content

What's hot (20)

Hortonworks DataFlow (HDF) 3.3 - Taking Stream Processing to the Next Level

Hortonworks DataFlow (HDF) 3.3 - Taking Stream Processing to the Next LevelHortonworks The HDF 3.3 release delivers several exciting enhancements and new features. But, the most noteworthy of them is the addition of support for Kafka 2.0 and Kafka Streams.

https://hortonworks.com/webinar/hortonworks-dataflow-hdf-3-3-taking-stream-processing-next-level/

From an experiment to a real production environment

From an experiment to a real production environmentDataWorks Summit Rabobank is a worldwide food- and agri-bank from the Netherlands. Rabobank wants to make a substantial contribution to welfare and prosperity in the Netherlands and to feeding the world sustainably. Rabobank Group operates through Rabobank and its subsidiaries in 40 countries.

Rabobank is active in both retail and wholesale banking. For our wholesale clients we provide real-time business insight information by making use of Cloudera and Hortonworks technology. An example is our recently launched service that gives insight in market performance of Rabobank customers, starting with the dairy farmers market segment, by making use of benchmark information. Our current technology stack contains Hortonworks Data Flow (HDF) and Cloudera Hadoop (CDH). Our real-time data stream is implemented by making use of Kafka and Nifi from HDF. Cloudera is used to store the data needed for the business insight information, mainly in HDFS and HBase.

During our presentation we will provides insight about the project approach, the architecture and actual implementation.

Speaker

Jeroen Wolffensperger, Solution Architect Data, Rabobank

Martijn Groen, Delivery Manager Data , Rabobank Netherlands

Microsoft SQL Server - Parallel Data Warehouse Presentation

Microsoft SQL Server - Parallel Data Warehouse PresentationMicrosoft Private Cloud The document discusses Microsoft's SQL Server 2008 R2 Parallel Data Warehouse, which offers massively scalable data warehousing capabilities. It provides an appliance-based architecture that can scale from tens to hundreds of terabytes in size on industry-standard hardware. The Parallel Data Warehouse uses a hub-and-spoke architecture to integrate traditional SMP data warehousing with new massively parallel processing capabilities. Early testing programs are underway to get customer feedback on the new technology.

IBM Spectrum Scale ECM - Winning Combination

IBM Spectrum Scale ECM - Winning CombinationSasikanth Eda This presentation describes various deployment options to configure IBM enterprise content management (ECM) FileNet® Content Manager components to use IBM Spectrum Scale™ (formerly known as IBM GPFS™) as back-end storage. It also describes various IBM Spectrum Scale value-added features with FileNet Content Manager

to facilitate an efficient and effective data-management solution.

Breathing New Life into Apache Oozie with Apache Ambari Workflow Manager

Breathing New Life into Apache Oozie with Apache Ambari Workflow ManagerDataWorks Summit Running scheduled, long-running or repetitive workflows on Hadoop clusters, especially secure clusters, is the domain of Apache Oozie. Oozie, however, suffers from XML for job configuration and a dated UI -- very bad usability in all. Apache Ambari, in its quest to make cluster management easier, has branched out to offering views for user services. This talk covers the Ambari Workflow Manager view which provides a GUI to author and visualize Oozie jobs.

To provide an example of Workflow Manager, Oozie jobs for log management and HBase compactions will be demonstrated showing off how easy Oozie can now be and what the exciting future for Oozie and Workflow Manager holds.

Apache Oozie is the long-time incumbent in big data processing. It is known to be hard to use and the interface is not aesthetically pleasing -- Oozie suffers from a dated UI. However, for secure Hadoop clusters, Oozie is the most readily available, obvious and full featured solution.

Apache Ambari is a deployment and configuration management tool used to deploy Hadoop clusters. Ambari Workflow Manager is a new Ambari view that helps address the usability and UI appeal of Apache Oozie.

In this talk, we’re going to leverage the stable foundation of Apache Oozie and clarity of Workflow Manager to demonstrate how one can build powerful batch workflows on top of Apache Hadoop. We’re also going to cover future roadmap and vision for both Apache Oozie and Workflow Manager. We will finish off with a live demo of Workflow Manager in action.

Speaker

Artem Ervits, Solutions Engineer, Hortonworks

Clay Baenziger, Hadoop Infrastructure, Bloomberg

The Future of Data Warehousing, Data Science and Machine Learning

The Future of Data Warehousing, Data Science and Machine LearningModusOptimum Watch the on-demand recording here:

https://event.on24.com/wcc/r/1632072/803744C924E8BFD688BD117C6B4B949B

Evolution of Big Data and the Role of Analytics | Hybrid Data Management

IBM, Driving the future Hybrid Data Warehouse with IBM Integrated Analytics System.

SAP PI 71 EHP1 Feature Highlights

SAP PI 71 EHP1 Feature Highlightshanishjohn This document summarizes new features and enhancements in SAP PI 7.1 EHP1. It covers improvements to design/development, configuration, and SOA/governance capabilities. Key enhancements include new folders, usage profiles, and function libraries for mapping; message prioritization, routing, and splitting on the AAE; integrated configuration; and expanded service registry functionality including usage profiles, cleanup, and automated interface creation.

Oracle Data Integration Presentation

Oracle Data Integration Presentationkgissandaner Oracle Data Integrator is an ETL tool that has three main differentiators: 1) It uses a declarative, set-based design approach which allows for shorter implementation times and reduced learning curves compared to specialized ETL skills. 2) It can transform data directly in the existing RDBMS for high performance and lower costs versus using a separate ETL server. 3) It has hot-pluggable knowledge modules that provide a library of reusable templates to standardize best practices and reduce costs.

Big Data, Big Thinking: Simplified Architecture Webinar Fact Sheet

Big Data, Big Thinking: Simplified Architecture Webinar Fact SheetSAP Technology This webinar discusses how to simplify IT architecture for handling big data. It explains that SAP's HANA platform allows consolidating transactional and analytical systems onto one platform to process and deliver data in real-time. The webinar also outlines the benefits of Cloudera's Hadoop working with SAP HANA, including keeping historical or unstructured IoT data in Hadoop without duplicating it, and enhancing security and performance through Intel partnerships.

Why and how to leverage the simplicity and power of SQL on Flink

Why and how to leverage the simplicity and power of SQL on FlinkDataWorks Summit SQL is the lingua franca of data processing, and everybody working with data knows SQL. Apache Flink provides SQL support for querying and processing batch and streaming data. Flink's SQL support powers large-scale production systems at Alibaba, Huawei, and Uber. Based on Flink SQL, these companies have built systems for their internal users as well as publicly offered services for paying customers.

In our talk, we will discuss why you should and how you can (not being Alibaba or Uber) leverage the simplicity and power of SQL on Flink. We will start exploring the use cases that Flink SQL was designed for and present real-world problems that it can solve. In particular, you will learn why unified batch and stream processing is important and what it means to run SQL queries on streams of data. After we explored why you should use Flink SQL, we will show how you can leverage its full potential.

Since recently, the Flink community is working on a service that integrates a query interface, (external) table catalogs, and result serving functionality for static, appending, and updating result sets. We will discuss the design and feature set of this query service and how it can be used for exploratory batch and streaming queries, ETL pipelines, and live updating query results that serve applications, such as real-time dashboards. The talk concludes with a brief demo of a client running queries against the service.

Speaker

Timo Walther, Software Engineer, Data Artisans

Database@Home - Data Driven : Loading, Indexing, and Searching with Text and ...

By Tammy Bednar. This session covers loading large JSON datasets into Oracle Database 19c, indexing the content, and providing a RESTful search interface, all using Oracle Cloud features.

Time to Talk about Data Mesh

By LibbySchulze. This document discusses data mesh, a distributed data management approach for microservices. It outlines the challenges of implementing a microservice architecture, including data decoupling, sharing data across domains, and data consistency. It then introduces data mesh as a solution, describing how to build the necessary infrastructure using technologies like Kubernetes and YAML to quickly deploy data pipelines and provision data across services and applications in a distributed manner. The document provides examples of how data mesh can improve legacy system integration, batch processing efficiency, multi-source data aggregation, and cross-cloud/cross-environment integration.

Hybrid Cloud Keynote

By gcamarda.

- CIOs need to improve data center operations and support new workloads while reducing costs. They must embrace both evolutionary and transformative approaches such as virtualization, private cloud, and public cloud.

- Cloud adoption is increasing, both in external public clouds and internal private clouds. Hybrid cloud approaches that leverage both internal and external options are becoming standard.

- Oracle provides integrated private and public cloud solutions from chips to cloud, allowing workloads to move seamlessly between on-premise and public cloud deployments.

ETL Market Webcast

By mark madsen. A short overview of the components of the data integration market, some statistics and trends related to ETL, and how ETL products are evolving.

Real Time Streaming Architecture at Ford

By DataWorks Summit. Ford Motor Company's mission to become both an automotive and a mobility company has required an evolution in our analytics data flow, from traditional batch processing systems to dynamically routed, stream-processing-based systems. Valuable data is continually being generated across the enterprise, from consumer WiFi in dealerships, robots working on the assembly line, and vehicle diagnostic data, and is now flowing into Ford's Real Time Streaming Architecture (RTSA). Our goal was to develop a provider-agnostic, end-to-end solution to ingest and dynamically route individual streams of data in less than one second from an edge node to Ford's on-premise data center, or vice versa. The architecture dynamically scales in the cloud to reliably handle thousands of outbound and inbound transactions per second, with data provenance capabilities to audit data flow from end to end.

OpenPOWER Roadmap Toward CORAL

By inside-BigData.com. Klaus Gottschalk from IBM presented this deck at the 2016 HPC Advisory Council Switzerland Conference.

"Last year IBM, together with partners from the OpenPOWER Foundation, won two of the multi-year contracts of the US CORAL program. Within these contracts, IBM is developing an accelerated HPC infrastructure and software development ecosystem that will be a major step towards Exascale Computing. We believe that the CORAL roadmap will enable a massive pull for the transformation of HPC codes for accelerated systems. The talk will discuss the IBM HPC strategy, explain the OpenPOWER Foundation, and show the IBM OpenPOWER roadmap for CORAL and beyond."

Watch the video presentation: http://wp.me/p3RLHQ-f9x

Learn more: http://e.huawei.com/us/solutions/business-needs/data-center/high-performance-computing

See more talks from the Switzerland HPC Conference:

http://insidehpc.com/2016-swiss-hpc-conference/


Make streaming processing towards ANSI SQL

By DataWorks Summit. SQL is the most widely used language for data processing. It allows users to declare their business logic concisely and easily. Data analysts usually do not have complex software programming backgrounds, but they can program in SQL and use it regularly to analyze data and power business decisions. Apache Flink is one of the streaming engines that support SQL. Besides Flink, some other stream processing frameworks, like Kafka and Spark Structured Streaming, have SQL-like DSLs, but they do not have the same semantics as Flink. Flink's SQL implementation follows the ANSI SQL standard, while the others do not.

In this talk, we will present why following the ANSI SQL standard is an essential characteristic of Flink SQL and how we achieved this. The core business of Alibaba is now fully driven by the data processing engine Blink, a project based on Flink with Alibaba's improvements. About 90% of Blink jobs are written in Flink SQL. We will show the use cases and the experience of running large-scale Flink SQL jobs at Alibaba in the talk.

Speakers

Shaoxuan Wang, Senior Engineering Manager, Alibaba

Xiaowei Jiang, Senior Director, Alibaba

Database@Home : The Future is Data Driven

By Tammy Bednar. These slides were presented during the Database@Home : Data-Driven Apps event. This session will discuss the importance of data to an organisation and the need to build applications where the value within that data can easily be exploited. To achieve that aim, we need to start building applications that benefit from the flexibility of new development paradigms but don't create artificial barriers of complexity that stop us from easily responding to change within our organisations.

SQLAnywhere 16.0 and Odata

By SAP Technology. OData is becoming the "lingua franca" for data exchange across the internet. Serving OData web services from most relational database backends requires a web server and third-party custom components that translate OData calls into SQL statements (and vice versa). SQLAnywhere 16.0 introduced a new OData Producer that does all this work for you automatically. This session will walk through the setup and configuration of this new server process and show real-world examples of how to use it.

DBCS Office Hours - Modernization through Migration

By Tammy Bednar. Speakers:

Kiran Tailor - Cloud Migration Director, Oracle

Kevin Lief – Partnership and Alliances Manager - (EMEA), Advanced

Modernisation of mainframe and other legacy systems allows organizations to capitalise on existing assets as they move toward more agile, cost-effective and open technology environments. Do you have legacy applications and databases that you could modernise with Oracle, allowing you to apply cutting edge technologies, like machine learning, or BI for deeper insights about customers or products? Come to this webcast to learn about all this and how Advanced can help to get you on the path to modernisation.

AskTOM Office Hours offers free, open Q&A sessions with Oracle Database experts. Join us to get answers to all your questions about Oracle Database Cloud Service.

Viewers also liked (20)

Advanced Use of Properties and Scripts in TIBCO Spotfire

By Herwig Van Marck. The document discusses advanced uses of properties and scripts in TIBCO Spotfire, including:

1) Using properties to control trellis simulations and page selections.

2) Using the $map and $csearch functions to search data and summarize results.

3) Parsing comma-separated tag columns and dynamically updating list box content using scripts.

TIBCO Spotfire

By Varun Varghese. The document provides information on TIBCO Spotfire, an in-memory analytics tool. It discusses Spotfire's architecture, including its server, web player, and ability to connect to different databases. It then covers various data modeling techniques in Spotfire, such as importing data, creating calculated columns, filters, and visualizations. Different types of charts are demonstrated, including bar charts, line charts, scatter plots, and more. It also shows how to customize visualizations and use property controls to make dashboards interactive.

Extending the Reach of R to the Enterprise with TERR and Spotfire

By Lou Bajuk. An overview of how TIBCO integrates dynamic, interactive visual applications in Spotfire with predictive and advanced analytics in the R language, using TIBCO Enterprise Runtime for R (TERR), our R-compatible, enterprise-grade platform for the R language.

Advantages of Spotfire

By Intellipaat. Advantages of Spotfire:

1. Easily provide targeted, relevant predictive analytics to business users

2. Increase confidence and effectiveness in decision-making

3. Reduce/manage risk

4. Forecast specific behavior and preemptively act on it

5. Anticipate and react to emerging trends

Source URL: https://intellipaat.com/spotfire-training/

TIBCO Spotfire deck

By syncsite1. 1) The document discusses enterprise optimization through analytics that go beyond traditional business intelligence (BI) and spreadsheets.

2) It promotes the benefits of TIBCO's analytics solutions, including clarity of visualization, freedom of spreadsheets, relevance of applications, and confidence in statistics.

3) TIBCO's analytics can help organizations better analyze processes and events in real-time to improve decision making and business outcomes.

Getting the most out of Tibco Spotfire

By Herwig Van Marck. 1. The document discusses various techniques for getting the most out of Tibco Spotfire software, including formatting visualizations, using custom expressions and functions, linking multiple data tables, and creating interactive structure viewers.

2. It provides examples of custom expressions, functions, and visualization techniques like details views, formatting options, and linking selections across visualizations.

3. The presentation aims to demonstrate how to apply advanced Tibco Spotfire features to improve data analysis and visualization.

Using the R Language in BI and Real Time Applications (useR 2015)

By Lou Bajuk. R provides tremendous value to statisticians and data scientists; however, they are often challenged to integrate their work and extend that value to the rest of their organization. This presentation will demonstrate how the R language can be used in Business Intelligence applications (such as Financial Planning and Budgeting, Marketing Analysis, and Sales Forecasting) to put advanced analytics into the hands of a wider pool of decision makers. We will also show how R can be used in streaming applications (such as TIBCO Streambase) to rapidly build, deploy, and iterate predictive models for real-time decisions. TIBCO's enterprise platform for the R language, TIBCO Enterprise Runtime for R (TERR), will be discussed, and examples will include fraud detection, marketing upsell, and predictive maintenance.

TibcoSpotfire@VGSoM

By Nilesh Kumar. Tibco Spotfire is a business intelligence tool that allows users to quickly analyze data and visualize it in interactive charts and graphs. Data can be imported from files, databases, or other sources. Spotfire provides various visualization types like bar charts, scatter plots, and tree maps. Visualizations can be customized through properties and shared as images, PDFs, or presentations. The document then demonstrates how to install Spotfire, load sample sales data, perform linear regressions on the data, and create pie charts and stacked bar charts to visualize goods purchased by gender and total sales by age group. Results can be exported from Spotfire using various file formats.

Validation

By Boris Mergold בוריס מרגולד. Spotfire is an analytics platform that provides value across use cases from data discovery to predictive analytics. It offers advanced analytics capabilities like predictive modeling, big data handling, and real-time monitoring. Manufacturing customers use Spotfire for applications such as quality control, reliability analysis, equipment monitoring, and supply chain optimization.

TIBCO Advanced Analytics Meetup (TAAM) November 2015

By Bipin Singh. This document provides an agenda and overview for a TIBCO Advanced Analytics Meetup. The meetup will cover various topics related to TIBCO analytics products and data science, including data analysis pipelines, visual analytics/dashboards, predictive analytics, data access/APIs, and geoanalytics. Speakers will discuss TIBCO Analytics & Data Science, building dashboards, predictive modeling for customer analytics, IronPython, advanced geoanalytics, and resources/training. The meetup aims to increase productivity, grow revenue, and reduce risk through analytics.

Adopting Tibco Spotfire in Bio-Informatics

By Herwig Van Marck. The document discusses adopting Tibco Spotfire software for bioinformatics analysis at Virco, a company that applies technologies to infectious diseases like HIV. It highlights advantages of Spotfire over other options, including improved formatting, custom expressions, details visualizations, and handling multiple data tables. It also provides examples of using custom expressions, details visualizations, linking and refreshing multiple data tables, and using "column from marked" to integrate visualizations.

Real time applications using the R Language

By Lou Bajuk. TIBCO Enterprise Runtime for R (TERR) allows for real-time analytics using the R language within TIBCO's Complex Event Processing platforms. TERR provides a faster R engine that can be embedded in TIBCO products like Spotfire and CEP workflows. This enables rapid prototyping and deployment of predictive models to monitor real-time data streams and trigger automated actions. Example use cases discussed include predictive maintenance, customer loyalty analytics, and severe weather alert tracking.

Two-Way Integration with Writable External Objects

By Salesforce Developers. Do you want to integrate external systems with Salesforce without copying the data, and still be able to write back to those systems? Join us to go through several techniques that will allow you to leverage Lightning Connect's new write capability to its fullest potential. We'll show you how to build robust two-way integrations using a variety of declarative and programmatic tools and techniques. In addition, we'll explore common pitfalls like high operation latency and transaction semantics to help you avoid potential failures.

100 days of Spotfire - Tips & Tricks

By PerkinElmer Informatics. This document provides 18 tips for using TIBCO Spotfire, a data visualization and analysis software, including adding comments to visualizations, advanced filtering of data, customizing the formatting of legends, data points, and color schemes, recommending visualizations for different data types, filtering data by chemical structure, adjusting table layouts, calculating dose curves, viewing multiple formats at once, analyzing data alongside visualizations in real time, and calculating averages, minimums, and maximums. It concludes by inviting the reader to follow for more information on using Spotfire in scientific applications.

Deploying R in BI and Real time Applications

By Lou Bajuk. An overview of how Spotfire and TERR enable the deployment of R language analytics into Business Intelligence and real-time applications, including several examples. Presented at useR 2014 at UCLA on 7/2/14.

Webinar: SAP BW Dinosaur to Agile Analytics Powerhouse

By Agilexi. Organisations that can quickly harness their corporate data to make optimum decisions will outperform their competitors. Use the corporate data in your SAP Business Warehouse to create significant business value and competitive advantage for your organisation.

View this webinar presentation to learn about:

• The business imperative to run analytics directly on SAP BW

• How you can rapidly, and at low cost, turn SAP BW into an analytics powerhouse

• Comprehensive and market leading integration of SAP BW with TIBCO Spotfire Analytics

See how SAP BW can be used to deliver analytics with speed and power not seen before. Empower your business and delight your users with beautifully presented insights.

The webinar can be viewed online at: http://bit.ly/sapbwanalytics1

Building a Perfect Strategic Partnership in 5 Stages

By Ogilvy. The document outlines a 15-step process for creating successful strategic partnerships, presented in the book "Strategic Partnering" by Luc, Raphaël, and Guillaume Bardin. It begins by noting that over 70% of strategic partnerships fail and less than 10% deliver their original expectations, highlighting the need for an effective process. The 15-step process involves selecting potential partners based on strategic fit, defining a business case, appointing partnership managers, agreeing internally on a partnership strategy, engaging the partner, formalizing cooperation agreements, setting up joint workstreams, executing together, and expanding the partnership over time.

Fundamental JavaScript [UTC, March 2014]

By Aaron Gustafson. The document provides an overview of fundamental JavaScript concepts such as variables, data types, operators, control structures, functions, and objects. It also covers DOM manipulation and interacting with HTML elements. Code examples are provided to demonstrate JavaScript syntax and how to define and call functions, work with arrays and objects, and select and modify elements of a web page.

Analysis and visualization of microarray experiment data integrating Pipeline...

By Vladimir Morozov. This document summarizes the analysis and visualization of microarray experiment data using Pipeline Pilot, Spotfire, and R. Key points:

- More than 30 public and proprietary microarray experiments were analyzed using in-house software workflows in Pipeline Pilot.

- Pipeline Pilot workflows retrieve gene annotation from NCBI and produce visualizations of differential expression statistics and biological pathway regulation in Spotfire.

- The gene expression values are analyzed via custom R scripts and plotted using the R connector. Results are integrated into the company's knowledge platform.

JavaScript Programming

By Sehwan Noh. The document provides an overview of JavaScript programming. It discusses the history and components of JavaScript, including ECMAScript, the DOM, and the BOM. It also covers JavaScript basics like syntax, data types, operators, and functions. Finally, it introduces object-oriented concepts in JavaScript like prototype-based programming and early vs. late binding.


Similar to Spotfire Integration & Dynamic Output creation (20)

Ten Steps To Empowerment

By Mohan Dutt. Ten Steps To Empowerment: convert custom reports to Oracle Business Intelligence Publisher and empower your IT organization as well as your business users.

Dxl As A Lotus Domino Integration Tool

By dominion. The document discusses using DXL (Domino XML Language) to integrate a Domino application called "Customer Orders" with other systems. It provides an overview of several demos that use DXL and XSLT to export order data to formats like PDF and Excel, get shipping rates from a USPS API, and search products on Amazon. Code examples demonstrate transforming XML data between DXL, JSON, and other formats using XSLT.

Automation Techniques In Documentation

By Sujith Mallath. This document discusses automation techniques for documentation, including what automation is, its scope, case studies, and benefits. Automation means using programming to alter document structure and content based on requirements. It can be used to convert file formats or to insert or delete text based on criteria. Tool automation focuses on enhancing specific tools like FrameMaker; FrameMaker automation options include the FDK, FrameScript, and fmPython plugins. Automation can also convert documents to open standards like XML for processing with scripts. A case study describes automating production editing by converting files to XML and using JavaScript for validation. Benefits of automation include increased accuracy, consistency, standardized processes, and improved productivity.

OPEN TEXT ADMINISTRATION

By SUMIT KUMAR. This document provides an overview of OpenText and its product landscape. It discusses the typical 3-tier architecture with database, application, and presentation layers. It describes the Livelink and Archive Server applications, their architecture, administration tools, and typical document workflows. Key components include the Archive Server, Livelink, Pipeline Server, and various administration tools for managing the OpenText landscape.

Autoconfig r12

By techDBA. Autoconfig is a tool that automates the configuration of Oracle E-Business Suite instances. It works by maintaining a central context file containing configuration parameters. Template files include tags that are replaced with values from the context file. Running adconfig generates configured files. Customizations can be made by copying templates to a custom directory. Some files are locked from customization. In R12.2, some Apache files are managed by WebLogic while others remain under Autoconfig control, and the context file must be synced when changing the port.

ebs xml.ppt

By shubhtomar5. This document provides an overview of Oracle XML Publisher and its integration with PeopleTools. Key points include:

- XML Publisher allows separation of data, layout, and translations to provide flexibility and reduced maintenance.

- PeopleTools embeds the XML Publisher formatting engine and provides APIs for template management and report generation.

- The process involves registering data sources, defining reports, adding templates, and publishing reports for viewing.

- Advanced options like bursting, content libraries, and translations are described.

Cetas - Application Development Services

By Kabilan D. CETAS offers application development services on web, client/server, and mobile platforms using Microsoft technologies. A home-grown application development framework enables us to develop applications faster and error-free. We use MVC and NHibernate to provide hardware- and database-agnostic solutions.

PLAT-13 Metadata Extraction and Transformation

By Alfresco Software. In this session, we will look first at the rich metadata that documents in your repository have, how to control the mapping of this onto your content model, and some of the interesting things this can deliver. We'll then move on to the content transformation and rendition services, and see how you can easily and powerfully generate a wide range of media from the content you already have. Finally, we'll look at how to extend these services to support additional formats.

Filemaker Pro in the Pre-Media environment

By Tom Langton. FileMaker Pro works best in a client/server environment and now has a cloud-based version. It handles record locking automatically to prevent simultaneous modifications by multiple users. FileMaker Pro also works well in a Macintosh environment due to its familiar interface and ability to connect to the MacOS through plugins and AppleScript. The document discusses several ways the author has used FileMaker Pro for tasks like automating file processing, integrating with accounting systems, remote time tracking, and generating reports.

Tutorial_Python1.pdf

By MuzamilFaiz. This document provides a step-by-step tutorial for scripting with Python in PowerFactory. It begins with an introduction to importing a sample project and creating a Python command object to contain Python scripts. Basic examples are shown for accessing PowerFactory functions like working with parameters and objects, executing calculations, and plotting results. More advanced topics are then introduced. The tutorial focuses on PowerFactory-specific scripting methods and assumes basic Python knowledge. Example scripts are provided and discussed to demonstrate executing a load flow calculation, accessing network objects and their parameters, and the anatomy of a Python command object.

Best Implementation Practices with BI Publisher

By Mohan Dutt. The document discusses best practices for implementing Oracle Business Intelligence Publisher (BI Publisher). It provides an overview of BI Publisher and discusses tips like getting to the latest BI Publisher version, understanding delivery options, using the correct tools, knowing what BI Publisher can do in different applications, and how to troubleshoot issues. It also describes an implementation case study of converting Oracle E-Business Suite reports to BI Publisher.

Ibm

By techbed. The document discusses setting up a development environment for Java web applications using frameworks like Struts, including installing Java, Tomcat, and Struts. It also provides an overview of developing a basic "Hello World" Struts application, including creating an action class and configuring it in the struts.xml file to return the view page. The document includes code samples for configuring filters in web.xml and implementing a basic action class and JSP view page.

Intro to flask2

By Mohamed Essam. Flask is a micro web framework written in Python. It is based on the Werkzeug WSGI toolkit and the Jinja2 template engine. Flask implements the Model-View-Controller (MVC) architectural pattern. All Flask applications must create an application instance by instantiating the Flask class, which acts as a central dispatcher for requests. Flask uses routes defined with the @app.route decorator to map URLs to view functions that generate responses. Templates are rendered with the Jinja2 templating language by passing context variables. Static files are served from the /static route.

Intro to flask

By Mohamed Essam. Flask is a micro web framework written in Python. It is based on the Werkzeug WSGI toolkit and the Jinja2 templating engine. Flask implements the model-view-controller (MVC) pattern, where the controller is the Python code, views are templates, and models are the application's data. All Flask apps must create a Flask object instance, which acts as a central registry and WSGI application object. Flask uses route decorators to map URLs to view functions that generate responses. Templates are rendered with the Jinja2 engine by passing context variables.

Dost.jar and fo.jar

By Suite Solutions. This document provides an overview of the dost.jar and fo.jar files in the DITA-OT. It explains that dost.jar holds the Java code for the overall DITA-OT framework, while fo.jar holds the code for the FO plugin that generates PDF output. It then describes how to build a customized version of these jars by modifying the source code, and outlines some of the key functions contained within each jar file, such as the Java invoker, integrator, and processing pipeline modules.

Adobe Flex4

By Rich Helton. This document provides an overview of Rich Internet Applications (RIA) and the Adobe Flex software development kit. It discusses how Flex uses MXML and ActionScript to create RIA applications that interact with the Flash plugin. It also covers related technologies like Adobe AIR, BlazeDS, and LifeCycle Data Services that allow Flex applications to communicate with backend services. Examples of MXML code and Flex application architecture are provided.

Understanding and Configuring the FO Plug-in for Generating PDF Files: Part I...

By Suite Solutions.

- The document provides an overview of the FO plug-in for generating PDF files from DITA content in DITA-OT. It discusses the components involved in PDF generation, including style sheets, XML files, and rendering engines.

- It describes how to customize the FO plug-in by overriding or adding to existing XSLT templates and attribute sets. Customizations can be made by copying files to a customization directory or directly editing files.

- Tips are provided for debugging customizations using intermediate XML files and utilizing features of XSL-FO rendering engines like Antenna House. Specialization support and backwards compatibility are also addressed.


Design document Report Of Project

By sayan1998. This document provides specifications for developing a robotic process automation (RPA) bot that is activated by an email containing an input document attachment. The bot will save the attachment and enter text from it into a web form. It was developed using UiPath software over 210 hours. The bot saves email attachments to a folder and uses activities from packages like UiPath.Word and UiPath.UIAutomation to read text from attachments and enter it into a web form. The project files, including the main workflow file and packages, are stored on GitHub. The bot is designed to be an attended robot that a human can trigger to help speed up data entry tasks.


More from Ambareesh Kulkarni (20)

Travel Management Dashboard application

By Ambareesh Kulkarni. Carlson Wagonlit Travel is the #2 travel firm in the world, with over $11 billion in revenue. They were lagging behind competitors with an outdated dashboard solution that lacked self-service capabilities and aesthetic appeal. Carlson Wagonlit Travel engaged Actuate Professional Services to design a new dashboard solution on the Actuate platform. The new Program Management Center dashboard was rolled out to over 12,000 users across Carlson Wagonlit Travel's 9,000 clients and leverages open source technology and a metadata-driven architecture for flexibility and scalability.

Carlson Wagonlit: Award winning application

Carlson Wagonlit Travel (CWT) has launched the CWT Program Management Center, a global web-based tool that provides travel managers and buyers with central access to all the information needed to simplify, prioritize, and optimize their travel programs. The tool was rolled out to over 10,000 corporate clients and provides data views in nine languages and multiple currencies. It includes dashboard features that were tested with over 100 clients and allows travel managers to easily monitor key metrics and trends.

Analyze Optimize Realize - Business Value Analysis

1E uses its Analyze, Optimize, Realize (AOR) methodology to reduce IT waste for clients through rigorous analysis of technical infrastructure and ongoing assessment. 1E's experienced consultants and analysts have a proven record of minimizing client spending while improving efficiency. The AOR process ensures all parties are satisfied with results and has helped 1E build deep, lasting relationships with its customers.

Evolution of Client Services functions

The document discusses the evolution of Expert Services from 2011 to 2016, when the focus was on margins, backlog, and knowledge accumulation, to 2017 onward, when the focus shifts to maintaining backlog, speed to value, growth through product sales, and knowledge distribution. It outlines plans to build a partner community that takes over repetitive implementation work through a subcontracting model, with partners trained in 1E's SuccessNow methodology and oversight gradually reduced. By the end of 2017, 1E consultants will transition from projects to pilots and software sales, 30-70% of implementation work will be subcontracted to partners, the methodology will be documented and published, and partners will be integrated into the sales process, all while maintaining a project success rate above 95%.

Building the Digital Bank

This document discusses automating the migration of ATMs from Windows XP to Windows 7/8/10. A regional US bank had significantly delayed and overspent on a manual migration with no results, and asked the team to remotely migrate all ATMs with zero downtime. The document outlines challenges such as extended support costs for Windows XP. It proposes using 1E's zero-touch deployment methodology to pre-cache software updates before remotely installing them, minimizing downtime. A case study shows how 1E helped a bank pilot and fully deploy the migration on thousands of ATMs over WAN links within time and budget.

Packaged Dashboard Reporting Solution

This document provides examples of dashboard reports created with Actuate software. It includes a user-friendly menu for selecting dashboard reports, as well as the ability to hyperlink from dashboards to more detailed reports or between multiple pages of a dashboard report. The dashboards and reports allow users to easily access and navigate essential business information.

Actuate Certified Business Solutions for SAP

Actuate provides business intelligence solutions that can transform operational ERP data from systems such as SAP and PeopleSoft into actionable business insights. These solutions provide access to both structured and unstructured ERP data without requiring a data warehouse, and offer consolidated reporting across multiple ERP systems through a single interface. The document discusses the market potential for SAP and PeopleSoft implementations, describes their product architectures and reporting capabilities, identifies limitations in their existing BI solutions, and outlines how Actuate's solutions address these gaps with enhanced reporting and analytics.

Professional Services Project Delivery Methodology

The 1E project delivery methodology is a structured process designed to overcome common IT project failures. It involves key stages such as training, discovery, health checks, design, testing, implementation, pre-piloting, piloting, and closeout. By following this standardized methodology, projects benefit from lessons learned on over 100 previous projects. It aims to address issues early and manage expectations to help ensure project success.

Windows 10 Migration

This document discusses concerns and processes around migrating to Windows 10 using zero-touch deployment. It outlines business concerns such as cost, application testing, and infrastructure readiness. Process concerns include minimizing disruption, handling different user types, reducing the number of images, and tracking progress. The document then describes how 1E can help with automation, self-service scheduling, infrastructure optimization, and migrating over 2,000 machines per day. It provides an example project timeline and discusses planning, deployment engineering, training, and piloting the 1E Windows 10 solution.

Actuate BI implementation for MassMutual's SAP BW

A Fortune 100 insurance company needed to consolidate financial data from nine business units, generate GAAP-audited reports, and provide secure web-based access to reports for management. It previously used a manual Excel-based process that took 20-30 days to close the books. After implementing Actuate, the company could close its books much faster, reports were more accurate, and 12 employees were freed from manual review work. Actuate integrated with the company's SAP systems using custom ABAP functions and single sign-on for secure access.

Professional Services packaged solutions for SAP

After a successful SAP implementation, organizations need to deliver business information to various users. This document describes Actuate's solution for integrating SAP data into interactive reports accessible inside and outside the organization. Actuate provides tools for connecting to SAP data sources, developing personalized reports, and delivering them securely. Actuate experts help customers implement the solution using a proven methodology over 6 weeks, covering planning, design, development, testing, and deployment.

SAP R3 SQL Query Builder

The document discusses Actuate's SAP R/3 Connector, which provides access to SAP R/3 data without requiring ABAP expertise. Developers can leverage their SQL skills and build reports using a graphical query editor. The connector was built on Actuate's Open Data Access framework using Java and is integrated with Actuate's reporting tools. It eliminates the need to program in ABAP and allows direct table access using Open SQL.

Zero Touch Operating Systems Deployment

Integrate ServiceNow, SCCM, and 1E to deliver 100% automated Windows 10 migrations.

Ambareesh Kulkarni, Professional background

In this presentation, I describe the management philosophy and methods that I have developed and actively use with my teams to deliver impact and customer success.

Professional Services Roadmap 2011 and beyond

This document provides an overview of 1E Professional Services. It discusses who the team is, why customers should use its services, its goals for growth, and how sales and professional services can work together. Key points include that the team has 16 consultants with experience across 25+ projects, its focus on customer satisfaction and flawless execution, and how packaged offerings can help customers deploy solutions more quickly and profitably through reuse of best practices.

1E and Servicenow integration

This document discusses the integration of 1E Shopping and AppClarity with ServiceNow for complete software lifecycle automation. A tight integration between these platforms ensures that application requests are validated, license compliant, and delivered immediately. ServiceNow provides ITSM capabilities, while 1E Shopping enables self-service access to applications and AppClarity manages software asset and license information. Their integration streamlines software delivery and asset management processes.

Enterprise BI & SOA

Actuate provides information delivery and business intelligence solutions through packaged offerings and services. It has adopted a service-oriented architecture with over 60 web services APIs that allow reporting and dashboard capabilities to be integrated into and consumed by various applications. Actuate services can integrate with enterprise systems and messaging buses to enable real-time access to critical business data and indicators across different platforms.

Professional Services Automation

Most well-run professional services organizations (PSOs) should consider implementing a professional services automation (PSA) system to streamline and automate their core operations. Automating core business processes enables PSOs to maximize revenue and to focus on more important business functions such as customer success.

Storage Provisioning for Enterprise Information Applications

This document discusses various storage provisioning options for Actuate implementations, including server-attached storage, network-attached storage (NAS), and storage area networks (SANs). It describes the components, protocols, and benefits of each approach. NAS and SAN solutions using NFS are recommended over server-attached storage because they provide a common storage file system and avoid disk management overhead. The document also covers backup and replication technologies.

Professional Services Sales Techniques & Methodology

A prescribed, simple sales process is key to positioning Professional Services accurately and in a timely manner. This presentation describes a simple process and techniques that have worked well for medium-sized to large enterprise software companies.
