2015 SQL PASS Summit Breakfast Session #1

- 1. Breakfast Session #1: Amazon RDS for SQL Server: Optimizing Cost & Data Migration. Ghim-Sim Chua, Sr. Technical Product Manager, AWS

- 2. What to expect from this session: a quick overview of Amazon RDS for SQL Server; lowering the costs of running Amazon RDS for SQL Server; migrating your data into and out of Amazon RDS for SQL Server.

- 3. What is Amazon RDS for SQL Server? Tasks handled by Amazon RDS for SQL Server: power, HVAC, and networking; rack and stack; server maintenance; OS installation and patches; DB software installs and patches; database backups; high availability; scaling.

- 4. Amazon RDS for SQL Server options: Express, Web, Standard, and Enterprise editions; License Included or Bring Your Own License.

- 6. Picking the right instance

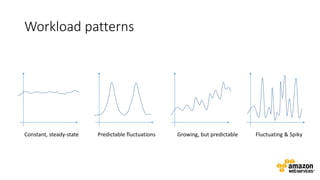

- 7. Workload patterns: constant, steady-state; predictable fluctuations; growing, but predictable; fluctuating & spiky.

- 8. Amazon RDS for SQL Server instance type: T2 (diagram of vCPUs)

- 9. Understanding T2 credits

| Instance type | vCPUs | Initial CPU credits | CPU credits earned per hour | Base performance | Maximum CPU credit balance |
|---|---|---|---|---|---|
| t2.micro | 1 | 30 | 6 | 10% | 144 |
| t2.small | 1 | 30 | 12 | 20% | 288 |
| t2.medium | 2 | 60 | 24 | 40% | 576 |
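The last two columns of the table follow from the credits-earned column. Since one CPU credit is one vCPU at 100% for one minute, earning c credits per hour sustains c/60 of one vCPU, and a balance limited to 24 hours of earnings tops out at 24 × c. The sketch below checks that arithmetic using only the figures in the table; the function names are illustrative, not an AWS API.

```python
# Figures copied from the "Understanding T2 credits" table above:
# name -> (vCPUs, initial credits, credits earned per hour, base %, max balance)
T2_TABLE = {
    "t2.micro": (1, 30, 6, 10, 144),
    "t2.small": (1, 30, 12, 20, 288),
    "t2.medium": (2, 60, 24, 40, 576),
}

MINUTES_PER_HOUR = 60
CREDIT_LIFETIME_HOURS = 24  # credits expire 24 hours after they are earned


def base_performance_pct(credits_per_hour: int) -> float:
    """Share of ONE vCPU (in %) that the hourly earnings can sustain.

    One credit buys one vCPU-minute at 100%, so c credits per hour
    pay for c of every 60 vCPU-minutes.
    """
    return 100.0 * credits_per_hour / MINUTES_PER_HOUR


def max_credit_balance(credits_per_hour: int) -> int:
    """Largest balance reachable when credits expire after 24 hours."""
    return credits_per_hour * CREDIT_LIFETIME_HOURS


for name, (_vcpus, _initial, per_hour, base_pct, max_bal) in T2_TABLE.items():
    assert base_performance_pct(per_hour) == base_pct, name
    assert max_credit_balance(per_hour) == max_bal, name
    print(f"{name}: base {base_performance_pct(per_hour):.0f}% of one vCPU, "
          f"max balance {max_credit_balance(per_hour)} credits")
```

Note that for t2.medium the 40% is measured against a single vCPU's capacity, which is what 24 credits per hour pays for; spread over its two vCPUs it amounts to 20% each.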

- 10. Other Amazon RDS instance types: M3 (Standard), 1 to 8 vCPUs and 3 GB to 30 GB of RAM; R3 (Memory Optimized), 2 to 32 vCPUs and 15 GB to 244 GB of RAM.

- 11. Optimize your RDS SQL Server for cost: region, instance, storage type, Multi-AZ, pricing model, licensing model.

- 12. On-Demand vs. Reserved Instances: On-Demand means you pay by the hour with no term commitment; Reserved Instances come as No Upfront, Partial Upfront, or All Upfront.

- 13. Import/Export data options: 1. Import and Export Wizard; 2. Bulk Copy (bcp utility); 3. AWS Database Migration Service. Importing and Exporting SQL Server Data: http://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/SQLServer.Procedural.Importing.html

- 14. AWS Database Migration Service: start your first migration in 10 minutes or less; keep your apps running during the migration; replicate within, to, or from Amazon EC2 or RDS; move data to the same or a different database engine. Sign up for the preview at aws.amazon.com/dms.

- 15. Using the AWS Database Migration Service (diagram: application users and the customer premises connected to AWS over the Internet or a VPN): start a replication instance; connect to source and target databases; select tables, schemas, or databases. Let the AWS Database Migration Service create tables, load data, and keep them in sync, then switch applications over to the target at your convenience.

- 16. Replication and data integration: replicate data in on-premises databases to AWS; replicate OLTP data to Amazon Redshift; integrate tables from third-party software into your reporting or core OLTP systems. Hybrid cloud is a stepping stone in migration to AWS.

- 17. Cost effective and no upfront costs: T2 pricing starts at $0.018 per hour for t2.micro; C4 pricing starts at $0.154 per hour for c4.large; 50 GB of GP2 storage is included with T2 instances and 100 GB with C4 instances, covering swap, logs, and cache; inbound data transfer and transfer within an AZ are free; data transfer across AZs starts at $0.01 per GB.

- 18. Q&A: Check out Amazon RDS for SQL Server and AWS Database Migration Service! Thank you!

Editor's Notes

- #3: In today's session, I want to give a quick overview of what Amazon RDS for SQL Server is, as well as talk about how you can lower the cost of running SQL Server on RDS and how to migrate your data into and out of Amazon RDS for SQL Server.

- #4: For those who are not familiar, Amazon RDS for SQL Server is a fully managed SQL Server database service. By managed service, we mean that you can launch a wide variety of SQL Server databases with a few clicks, and that it is easy to set up, operate, and scale them. Amazon RDS takes care of mundane DBA tasks such as patching and backups, and automatically replaces failed hosts for you. You can also easily monitor, manage, and scale your SQL Server databases and create highly available ones.

- #5: RDS for SQL Server offers many flavors of SQL Server: SQL Server 2008 R2, SQL Server 2012, and SQL Server 2014, which we launched earlier this week. You can also pick from the Express, Web, Standard, and Enterprise editions, in License Included and Bring-Your-Own-License offerings. With the License Included offering, you pay an hourly rate that covers the underlying hardware operating costs as well as the software license fees needed to run SQL Server. If you have already purchased SQL Server licenses, you can bring your own license through License Mobility under Software Assurance and pay only for the cost of operating the hardware.

- #6: A lot of our customers come to us and ask us how they can reduce their RDS bill while not sacrificing performance, manageability, scalability and durability.

- #7: One of the most important cost considerations is picking the right instance size for your workload. Not only are larger instances more costly to run, but SQL Server license costs are also based on the number of cores or vCPUs. If you select an instance that is bigger than you need, a disproportionate share of what you pay to run SQL Server goes to license fees for vCPUs you don't use. We often see customers overprovisioning their SQL Server instances, resulting in a much higher RDS bill than necessary.

- #8: So how do you pick the right instance to run your SQL Server? One way is to understand what the demand pattern of your workload looks like. In talking to our customers, we found four generic patterns. Constant, steady-state: your workload requires the same performance characteristics throughout its lifetime; some always-on, mission-critical enterprise systems fit in this category. Predictable fluctuations: over the lifetime of the workload, the load on the database fluctuates over predictable time intervals. This can be seen in line-of-business systems that receive increased load during business hours and less outside them. Growing, but predictable: these workloads grow over time, as more data accumulates or as the business itself grows in a fairly predictable way. Fluctuating and spiky: these workloads have an unpredictable curve and can see unexpected spikes in load. We see this pattern in web-based consumer workloads that experience viral spikes. Your workload may fit more than one of these patterns at different times. If you're a startup, you may see a combination of the last two, caused by organic business growth and viral events.
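The four patterns are described qualitatively. One way to make them concrete is a heuristic over hourly CPU samples (for example, exported from CloudWatch): a strong trend suggests growth, low relative variation suggests steady-state, a high correlation with the series shifted by one day suggests predictable fluctuations, and anything else is treated as spiky. This is a hypothetical sketch; the thresholds and function names are assumptions chosen for illustration, not AWS guidance.

```python
from statistics import mean, pstdev
from typing import Sequence

# Hypothetical thresholds chosen for illustration only; tune them on your own data.
CONSTANT_CV = 0.10    # coefficient of variation below this looks steady-state
GROWTH_RATIO = 0.50   # trend adding >= 50% of the mean over the window looks like growth
PERIODIC_CORR = 0.70  # correlation with the series shifted by one period


def trend_per_sample(ys: Sequence[float]) -> float:
    """Least-squares slope of ys against sample index 0, 1, 2, ..."""
    n = len(ys)
    if n < 2:
        return 0.0
    x_bar, y_bar = (n - 1) / 2, mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(ys))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den


def lag_correlation(ys: Sequence[float], lag: int) -> float:
    """Pearson correlation between the series and itself shifted by `lag` samples."""
    if lag < 1 or len(ys) <= lag + 1:
        return 0.0
    a, b = ys[:-lag], ys[lag:]
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / (va * vb) ** 0.5


def classify_workload(hourly_cpu: Sequence[float], period: int = 24) -> str:
    """Map hourly CPU samples to one of the four patterns from the talk (heuristic)."""
    m = mean(hourly_cpu)
    if m == 0:
        return "constant, steady-state"
    growth = trend_per_sample(hourly_cpu) * len(hourly_cpu) / m
    if growth >= GROWTH_RATIO:
        return "growing, but predictable"
    if pstdev(hourly_cpu) / m < CONSTANT_CV:
        return "constant, steady-state"
    if lag_correlation(hourly_cpu, period) >= PERIODIC_CORR:
        return "predictable fluctuations"
    return "fluctuating and spiky"


# Three days of a line-of-business load: 80% CPU from 09:00 to 17:00, 20% otherwise.
business_hours = [80 if 9 <= h % 24 < 17 else 20 for h in range(72)]
print(classify_workload(business_hours))
```

Growth is checked first because a growing series also has high variation; with the example above, the daily repetition makes the shifted correlation 1.0, so it is reported as predictable fluctuations.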

- #9: Amazon RDS for SQL Server offers a variety of instance types to meet the needs of customers with all sorts of workloads. For example, T2 instances are generally less expensive than other instance types, and because they are burst capable, they are great for fluctuating and spiky workloads or workloads with predictable fluctuations. They have a decent base performance, with the ability to burst up to 100% vCPU utilization when the workload needs it. You earn credits every hour; whatever you don't use while running below base performance is banked, up to 24 hours' worth of credits. You can use Amazon CloudWatch metrics to see your credit balance and usage. (CLICK) T2 credits are earned during low CPU utilization and used up during heavy utilization. For T2, 1 CPU credit is equal to 100% utilization of a vCPU for 1 minute, or 50% of a vCPU for 2 minutes, or 25% of a vCPU for 4 minutes.
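Because a credit is simply one vCPU-minute at full utilization, the equivalences above reduce to multiplying utilization by minutes (and by the number of vCPUs in use). A minimal sketch; the function name is ours, not an AWS API.

```python
def credits_consumed(utilization: float, minutes: float, vcpus: int = 1) -> float:
    """CPU credits spent when `vcpus` vCPUs each run at `utilization` (0 to 1) for `minutes`.

    One T2 credit = one vCPU at 100% utilization for one minute.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return utilization * minutes * vcpus


# The three equivalent ways to spend one credit, from the speaker notes.
for util, mins in [(1.0, 1), (0.5, 2), (0.25, 4)]:
    print(f"{util:.0%} of a vCPU for {mins} min -> {credits_consumed(util, mins)} credit")
```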

- #10: For instance, if you use a t2.micro instance, you are given 30 initial CPU credits to ensure decent startup performance. What are 30 credits good for? They let you run the instance for an hour using half of the vCPU. Thereafter, you earn 6 CPU credits per hour, with a maximum CPU credit balance of 144 credits. Six credits per hour means you can consume 10% of the vCPU over the course of each hour. If you consume less, the remainder is added to your balance, up to 144 credits. You can spend these credits to burst your workload up to 100% utilization of the vCPU. Once your credits are exhausted, you drop back to your base performance of 10% utilization. CPU credits expire 24 hours after they are earned, and they do not persist across shutdowns and startups. However, you are given the initial CPU credits again on startup.
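The t2.micro accounting above can be simulated hour by hour. This sketch uses the figures from the notes (30 initial credits, 6 earned per hour, a 144-credit cap) but simplifies two things, both labeled in the code: credits are tallied per hour rather than per minute, and the 24-hour expiry is approximated by the balance cap. `simulate_t2` is a hypothetical helper, not an AWS API.

```python
from typing import List, Tuple

MINUTES_PER_HOUR = 60


def simulate_t2(demand: List[float], earn_per_hour: int = 6, max_balance: int = 144,
                initial_credits: int = 30) -> List[Tuple[float, float]]:
    """Hour-by-hour credit model for a single-vCPU T2 instance (t2.micro defaults).

    demand[i] is the share of the vCPU (0 to 1) the workload wants in hour i.
    Returns (delivered utilization, end-of-hour credit balance) for each hour.
    Simplifications: credits are accounted per hour rather than per minute, and
    the 24-hour expiry is approximated by capping the balance at max_balance.
    """
    balance = float(initial_credits)
    history = []
    for wanted in demand:
        available = balance + earn_per_hour  # this hour's earnings are spendable
        spent = min(wanted * MINUTES_PER_HOUR, available)
        balance = min(available - spent, max_balance)  # bank the rest, up to the cap
        history.append((spent / MINUTES_PER_HOUR, balance))
    return history


# Ten quiet hours at 5% CPU, then four hours where the workload wants 100%.
for hour, (cpu, bal) in enumerate(simulate_t2([0.05] * 10 + [1.0] * 4)):
    print(f"hour {hour:2d}: delivered {cpu:.0%} CPU, balance {bal:.0f} credits")
```

In this run the quiet hours bank 3 credits each, so the balance reaches 60 credits. That pays for one full hour at 100%; the next hour gets only 20%, and after that the instance is held at its 10% base performance.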

- #11: Other instance types that RDS offers include the standard M3, which has 1 to 8 vCPUs and 3 GB to 30 GB of memory, and the memory-optimized R3, which has 2 to 32 vCPUs and 15 GB to 244 GB of memory. If you have a memory-intensive workload, you may want to pick R3 over M3. If your workload is less memory intensive but constant and steady-state, you might want to consider M3.
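Since SQL Server licensing scales with vCPUs, a right-sizing rule of thumb is to pick the class with the fewest vCPUs that still meets your memory need, which is exactly when R3 beats M3. The sketch below assumes the published EC2 vCPU and memory figures for the matching m3/r3 types; which db.* classes are offered for your SQL Server edition and region is an assumption to verify in the RDS documentation. `smallest_fit` is a hypothetical helper.

```python
from typing import Optional

# vCPUs and memory (GiB) for the M3 and R3 classes discussed above, taken from the
# EC2 specs for the matching m3/r3 types. Availability for each SQL Server edition
# and region is not checked here.
INSTANCE_CLASSES = {
    "db.m3.medium": (1, 3.75),
    "db.m3.large": (2, 7.5),
    "db.m3.xlarge": (4, 15.0),
    "db.m3.2xlarge": (8, 30.0),
    "db.r3.large": (2, 15.25),
    "db.r3.xlarge": (4, 30.5),
    "db.r3.2xlarge": (8, 61.0),
    "db.r3.4xlarge": (16, 122.0),
    "db.r3.8xlarge": (32, 244.0),
}


def smallest_fit(min_vcpus: int, min_memory_gib: float) -> Optional[str]:
    """Pick the class with the fewest vCPUs (then least memory) that meets both needs.

    vCPUs are minimized first because SQL Server license costs scale with them.
    """
    fits = [(vcpus, mem, name) for name, (vcpus, mem) in INSTANCE_CLASSES.items()
            if vcpus >= min_vcpus and mem >= min_memory_gib]
    return min(fits)[2] if fits else None


print(smallest_fit(2, 4))   # 2 vCPUs, light on memory
print(smallest_fit(2, 12))  # 2 vCPUs, memory-heavy
```

With these figures the first call returns db.m3.large. The second returns db.r3.large rather than db.m3.xlarge, because the R3 class meets the memory need with half the vCPUs to license.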

- #12: So your workload pattern may give you one avenue to save on costs. If your growth is predictable, you don't need to run overprovisioned instances from the get-go. You can run on a smaller instance type until you outgrow it, then simply restart into a larger instance type. If your workload has known fluctuations, you can scale down and back up the same way as needed. If you know you won't need the instance for a while, you can even stop it, or terminate it and re-provision from a snapshot. Beyond leveraging the workload pattern, here are some other factors you can consider to optimize your costs. For example, instances in different regions have different prices, so if network latency is not an issue, you may want to compare instance prices across regions and pick a cheaper one. Multi-AZ also doubles the cost of your database instance because you are paying for two instances, so we generally recommend Multi-AZ for production workloads.
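The region and Multi-AZ factors come down to simple arithmetic on instance-hours. The sketch below uses hypothetical hourly prices for two unnamed regions (not actual AWS rates) and the common 730-hours-per-month approximation; it counts instance hours only, not storage or I/O.

```python
HOURS_PER_MONTH = 730  # common approximation of 24 * 365 / 12


def monthly_instance_cost(hourly_rate: float, multi_az: bool = False,
                          hours: float = HOURS_PER_MONTH) -> float:
    """Instance-hours cost; Multi-AZ bills for a primary plus a standby instance."""
    instances = 2 if multi_az else 1
    return hourly_rate * instances * hours


def cheapest_region(hourly_rates: dict, multi_az: bool = False) -> str:
    """Region whose instance price gives the lowest monthly cost."""
    return min(hourly_rates, key=lambda r: monthly_instance_cost(hourly_rates[r], multi_az))


# Hypothetical prices for the same instance class in two regions.
rates = {"region-a": 0.50, "region-b": 0.56}
best = cheapest_region(rates)
print(best, monthly_instance_cost(rates[best]), monthly_instance_cost(rates[best], multi_az=True))
```

Here region-a comes out at 365.0 per month Single-AZ and 730.0 with Multi-AZ, which is why Multi-AZ is usually reserved for production.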

- #13: You can also run your RDS SQL Server instance under the On-Demand or Reserved Instance pricing model. On-Demand is great if you don't want a long-term commitment: you pay by the hour, and when you are done, you can simply turn your SQL Server database off and charges stop accumulating. If you want to save even more and can commit to a 1- or 3-year term, consider purchasing a 1- or 3-year Reserved Instance (RI) and save up to 60% over On-Demand costs. There are three types of RIs: No Upfront, Partial Upfront, and All Upfront. Generally, savings are greatest with All Upfront RIs.
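To compare the RI options, spread any upfront payment over every hour of the term and add the recurring hourly charge. Because an RI is billed for the whole term whether or not the instance runs, the same number also gives the share of hours the instance must run before the RI beats On-Demand. All prices below are hypothetical placeholders, not AWS rates.

```python
HOURS_PER_YEAR = 8760


def effective_hourly(upfront: float, hourly: float, term_years: int) -> float:
    """Reserved Instance cost spread over every hour of the term."""
    return upfront / (term_years * HOURS_PER_YEAR) + hourly


def savings_pct(on_demand_hourly: float, upfront: float, hourly: float, term_years: int) -> float:
    """Percent saved versus On-Demand, assuming the instance runs 24x7 for the whole term."""
    return 100.0 * (1 - effective_hourly(upfront, hourly, term_years) / on_demand_hourly)


def break_even_usage(on_demand_hourly: float, upfront: float, hourly: float, term_years: int) -> float:
    """Fraction of term hours the instance must run before the RI beats On-Demand.

    An RI is billed for every hour of the term; On-Demand charges stop when the
    instance is turned off.
    """
    return effective_hourly(upfront, hourly, term_years) / on_demand_hourly


# Hypothetical 1-year prices for one instance class (not actual AWS rates).
ON_DEMAND = 1.00
OPTIONS = {"No Upfront": (0, 0.70), "Partial Upfront": (2600, 0.30), "All Upfront": (5000, 0.0)}
for name, (upfront, hourly) in OPTIONS.items():
    print(f"{name}: {savings_pct(ON_DEMAND, upfront, hourly, 1):.1f}% saved, "
          f"break-even at {break_even_usage(ON_DEMAND, upfront, hourly, 1):.0%} usage")
```

With these made-up prices the savings rise from No Upfront to All Upfront, matching the ordering in the talk, and an instance that runs less than roughly 57% to 70% of the year would be cheaper On-Demand.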

- #14: Next, I'd like to talk about how you can import data into and export data out of RDS SQL Server. There are currently three options you can choose from: the Import and Export Wizard, the bulk copy utility, and the recently announced AWS Database Migration Service, together with the AWS Schema Conversion Tool. So when do you use which tool? Use the Import and Export Wizard if you want to transfer small- to medium-size tables to another DB instance. Use the bulk copy utility if you have a large quantity of data to move, say more than 1 GB, because it is more efficient. Use the Database Migration Service if you cannot afford a long downtime for your database. It keeps your database online while it makes an initial copy to the target instance, then continually replicates changes until you schedule a short outage to cut over from one database to the other. Use the Schema Conversion Tool if you want to migrate between different database engines, for example from MySQL, Oracle, or PostgreSQL to SQL Server, or the other way around. The tool helps you transform tables, partitions, sequences, views, stored procedures, triggers, and functions. It also automatically finds equivalent functions when converting from one engine to another; if it cannot, it gives you links explaining how to do the conversion manually. By the way, I highly recommend reading the Importing and Exporting SQL Server Data guide listed here for more information.
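The "which tool when" guidance can be written down as a small decision function. The ordering of the checks and the function name are our own reading of the rules of thumb above; the 1 GB cut-off is the speaker's example, not a hard limit.

```python
def choose_transfer_tool(data_gb: float, same_engine: bool, can_tolerate_downtime: bool) -> str:
    """Encode the talk's rules of thumb (the 1 GB cut-off is the speaker's example)."""
    if not same_engine:
        # The Schema Conversion Tool converts the schema; DMS can then move the data.
        return "AWS Schema Conversion Tool + AWS Database Migration Service"
    if not can_tolerate_downtime:
        # DMS copies while the source stays online, then replicates changes until cut-over.
        return "AWS Database Migration Service"
    if data_gb > 1:
        return "bcp (bulk copy utility)"
    return "SQL Server Import and Export Wizard"


print(choose_transfer_tool(0.2, same_engine=True, can_tolerate_downtime=True))
print(choose_transfer_tool(50, same_engine=True, can_tolerate_downtime=True))
print(choose_transfer_tool(50, same_engine=True, can_tolerate_downtime=False))
```

Engine compatibility is checked first because it decides whether schema conversion is needed at all; downtime tolerance comes next because it rules out the offline copy tools regardless of size.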

- #15: Like all AWS services, it is easy and straightforward to get started with the AWS Database Migration Service, or DMS. * You can get started with your first migration task in 10 minutes or less. You simply connect it to your source and target databases, and it copies the data over and begins replicating changes from source to target. * That means you can keep your apps running during the migration, then switch over at a time that is convenient for your business. * In addition to one-time database migration, you can also use DMS for ongoing data replication within, to, or from Amazon EC2 or RDS databases. For instance, after migrating your database, you can use DMS to replicate data into your Amazon Redshift data warehouses, across regions to other RDS instances, or back to your on-premises database. * With DMS, you can move data between engines. DMS supports Oracle, Microsoft SQL Server, MySQL, PostgreSQL, MariaDB, Amazon Aurora, and Amazon Redshift. * If you would like to sign up for the DMS preview, go to aws.amazon.com/dms.

- #16: Using the AWS Database Migration Service to migrate data to AWS is simple. (CLICK) Start by spinning up a DMS replication instance in your AWS environment. (CLICK) Next, from within DMS, connect to both your source and target databases. (CLICK) Choose what data you want to migrate; DMS lets you migrate tables, schemas, or whole databases. Then sit back and let DMS do the rest. (CLICK) It creates the tables, loads the data, and, best of all, keeps them synchronized for as long as you need. That replication capability, which keeps the source and target data in sync, allows customers to switch applications (CLICK) over to point to the AWS database at their leisure. DMS eliminates the need for high-stakes extended outages to migrate production data into the cloud and provides a graceful switchover capability.
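The "choose what data" and "keep them synchronized" steps map onto a DMS replication task: a table-mapping document with selection rules, plus a migration type that does a full load followed by ongoing change capture. The sketch below is written against today's boto3 DMS client, which post-dates this 2015 preview and may differ from it. It assumes the replication instance and the source and target endpoints already exist (created, for example, with `create_replication_instance` and `create_endpoint`). The task identifier and ARNs are placeholders you supply, and the helper names are hypothetical.

```python
import json
from typing import Any, Iterable, Tuple


def build_table_mappings(selections: Iterable[Tuple[str, str]]) -> str:
    """DMS table-mapping JSON with one 'include' selection rule per (schema, table).

    Use "%" as a wildcard, e.g. ("dbo", "%") selects every table in dbo.
    """
    rules = []
    for i, (schema, table) in enumerate(selections, start=1):
        rules.append({
            "rule-type": "selection",
            "rule-id": str(i),
            "rule-name": str(i),
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": "include",
        })
    return json.dumps({"rules": rules})


def start_full_load_and_cdc(dms: Any, task_id: str, source_arn: str, target_arn: str,
                            instance_arn: str, table_mappings: str) -> str:
    """Create a task that loads existing data, then replicates ongoing changes, and start it.

    `dms` is expected to be a boto3 DMS client, e.g. boto3.client("dms").
    """
    task = dms.create_replication_task(
        ReplicationTaskIdentifier=task_id,
        SourceEndpointArn=source_arn,
        TargetEndpointArn=target_arn,
        ReplicationInstanceArn=instance_arn,
        MigrationType="full-load-and-cdc",  # initial copy, then keep source and target in sync
        TableMappings=table_mappings,
    )
    task_arn = task["ReplicationTask"]["ReplicationTaskArn"]
    # A newly created task must reach the "ready" state before it can be started.
    dms.get_waiter("replication_task_ready").wait(
        Filters=[{"Name": "replication-task-arn", "Values": [task_arn]}])
    dms.start_replication_task(ReplicationTaskArn=task_arn,
                               StartReplicationTaskType="start-replication")
    return task_arn


print(build_table_mappings([("dbo", "%")]))
```

Switching applications over is then a matter of repointing connection strings once the task has caught up, which the task does not do for you.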

- #17: But DMS is for much more than just migration. DMS enables customers to adopt a hybrid approach to the cloud, maintaining some applications on premises and others within AWS. There are dozens of compelling use cases for a hybrid cloud approach using DMS. For customers just getting their feet wet, AWS is a great place to keep up-to-date, read-only copies of on-premises data for reporting purposes. AWS services like Aurora, Redshift, and RDS are great platforms for this. With DMS, you can maintain copies of critical business data from third-party or ERP applications, like employee data from PeopleSoft or financial data from Oracle E-Business Suite, in the databases used by the other applications in your enterprise. In this way, it enables application integration in the enterprise. Another nice thing about the hybrid cloud approach is that it lets customers become familiar with AWS technology and services gradually. Moving to the cloud is much simpler if you have a way to link the data and applications that have moved to AWS with those that haven't.

- #18: AWS Database Migration Service currently supports the T2 and C4 instance classes. T2 instances are suitable for developing, configuring, and testing your database migration process, and for periodic data migration tasks that can benefit from the CPU burst capability. C4 instances are designed to deliver the highest level of processor performance and achieve significantly higher packets per second (PPS) performance, lower network jitter, and lower network latency. You should use C4 instances if you are migrating large databases and want to minimize migration time. With the AWS Database Migration Service, you pay for the migration instance that moves your data from your source database to your target database. Each database migration instance includes enough storage to support the needs of the replication engine, such as swap space, logs, and cache. (CLICK) Inbound data transfer is free. (CLICK) Additional charges apply only if you allocate additional storage for data migration logs or replicate your data to a database in another region or on premises.
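A rough migration budget follows from the starting prices on the cost slide: replication-instance hours plus any cross-AZ transfer. The sketch below uses those 2015 "starts at" figures, which vary by region and change over time. It deliberately ignores extra log storage and cross-region or on-premises transfer, which are billed separately; the function name is hypothetical.

```python
# Starting prices quoted on the "Cost effective and no upfront costs" slide (2015).
# Actual prices vary by region and change over time.
HOURLY_RATE = {"t2.micro": 0.018, "c4.large": 0.154}
CROSS_AZ_PER_GB = 0.01  # inbound and same-AZ transfer are free


def dms_migration_cost(instance: str, hours: float, cross_az_gb: float = 0.0) -> float:
    """Replication-instance hours plus cross-AZ transfer.

    Ignores extra log storage and cross-region or on-premises transfer,
    which are billed separately.
    """
    return HOURLY_RATE[instance] * hours + CROSS_AZ_PER_GB * cross_az_gb


# Two days on a c4.large, sending 200 GB to a target in another AZ.
print(f"${dms_migration_cost('c4.large', 48, cross_az_gb=200):.2f}")
```

That example works out to about $9.39: $7.39 of instance time and $2.00 of cross-AZ transfer.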

- #19: So thank you for attending this breakfast session on RDS SQL Server and the AWS Database Migration Service. I hope you will check out both services. I have a few $50 credit codes here for anyone interested in trying them out; come by after the Q&A session to pick one up.
