SQL Server 2008

- 2. SQL Server 2008 for relational and multidimensional solution developers
  - Silvano Coriani, Developer Evangelist, Microsoft

- 3. Agenda
  - SQL Server 2008 support for next-generation application development
    - Geospatial data types
    - Filestream
    - Date and time
    - Large UDTs
  - Simplifying existing application scenarios
    - Table-valued parameters
    - Change tracking
    - HierarchyID
  - Going multidimensional
    - A developer's roadmap to SSIS, SSAS, and SSRS

- 4. Relational and non-relational data
  - Relational data uses simple data types
    - Each type holds a single value
    - Generic operations work well with these types
  - Relational storage and queries may not be optimal for:
    - Hierarchical data
    - Spatial data
    - Sparse, variable property bags
  - Some types benefit from:
    - A custom library
    - An extended type system (complex types, inheritance)
    - Custom storage and non-SQL APIs
    - Non-relational queries and indexing

- 5. Spatial data
  - Spatial data answers location-based queries:
    - Which roads intersect the Microsoft campus?
    - Does my land claim overlap yours?
    - List all the Italian restaurants within 5 kilometers.
  - Spatial data is part of almost every database: if your database includes an address, it includes spatial data

- 6. SQL Server 2008 and spatial data
  - SQL Server supports two spatial data types:
    - GEOMETRY: flat-earth (planar) model
    - GEOGRAPHY: round-earth (ellipsoidal) model
  - Both types support all of the instantiable OGC types
    - The InstanceOf method distinguishes between them
  - Two-dimensional data
    - X and Y (or Lat and Long) members
    - Z member: elevation (user-defined semantics)
    - M member: measure (user-defined semantics)
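
A minimal sketch of the flat-earth versus round-earth distinction and of InstanceOf, using arbitrary sample coordinates (SRID 4326 for GEOGRAPHY, SRID 0 for GEOMETRY are assumptions chosen for illustration). For SRID 4326, GEOGRAPHY distances come back in meters; GEOMETRY distances are plain Euclidean distances in coordinate units.

```sql
-- Arbitrary sample coordinates: two points 1 degree of longitude apart at latitude 45
DECLARE @geoA  geography = geography::Point(45.0, 9.0, 4326);   -- Point(Lat, Long, SRID)
DECLARE @geoB  geography = geography::Point(45.0, 10.0, 4326);
DECLARE @flatA geometry  = geometry::Point(9.0, 45.0, 0);       -- Point(X, Y, SRID)
DECLARE @flatB geometry  = geometry::Point(10.0, 45.0, 0);

SELECT @geoA.STDistance(@geoB)   AS RoundEarthMeters,  -- distance on the ellipsoid, in meters
       @flatA.STDistance(@flatB) AS FlatEarthUnits;    -- Euclidean distance: 1

-- InstanceOf tests an instance against an OGC type, including its supertypes
DECLARE @shape geometry = geometry::STGeomFromText('POLYGON((0 0, 4 0, 4 4, 0 4, 0 0))', 0);
SELECT @shape.STGeometryType()      AS OgcType,    -- 'Polygon'
       @shape.InstanceOf('Polygon') AS IsPolygon,  -- 1
       @shape.InstanceOf('Surface') AS IsSurface,  -- 1 (a Polygon is a Surface)
       @shape.InstanceOf('Curve')   AS IsCurve;    -- 0

-- Z and M are carried along with X and Y; their meaning is up to the application
DECLARE @pt geometry = geometry::STGeomFromText('POINT(1 2 3 4)', 0);
SELECT @pt.STX AS X, @pt.STY AS Y, @pt.Z AS Z, @pt.M AS M;  -- 1, 2, 3, 4
```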

- 7. Sample query: which roads intersect Microsoft's main campus?

```sql
SELECT *
FROM roads
WHERE roads.geom.STIntersects(@ms) = 1;
```
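
The "Italian restaurants within 5 kilometers" question from the spatial data slide can be sketched the same way with GEOGRAPHY and STDistance. The table dbo.Restaurants, its columns, and all rows and coordinates below are hypothetical sample data.

```sql
-- Hypothetical table and sample rows
CREATE TABLE dbo.Restaurants (
    RestaurantId INT IDENTITY PRIMARY KEY,
    Name         NVARCHAR(100) NOT NULL,
    Cuisine      NVARCHAR(50)  NOT NULL,
    Location     geography     NOT NULL
);
INSERT INTO dbo.Restaurants (Name, Cuisine, Location) VALUES
    (N'Trattoria Uno', N'Italian',  geography::Point(47.6420, -122.1300, 4326)),  -- a few hundred meters away
    (N'Far Pizzeria',  N'Italian',  geography::Point(47.9000, -122.1300, 4326)),  -- roughly 29 km away
    (N'Sushi Bar',     N'Japanese', geography::Point(47.6400, -122.1290, 4326));  -- close, but not Italian

-- Hypothetical current position
DECLARE @here geography = geography::Point(47.6397, -122.1284, 4326);

SELECT Name, Location.STDistance(@here) AS DistanceMeters
FROM dbo.Restaurants
WHERE Cuisine = N'Italian'
  AND Location.STDistance(@here) <= 5000   -- 5 km, since SRID 4326 distances are in meters
ORDER BY DistanceMeters;
```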

- 8. Filestream storage
  - Storing large binary objects in databases is suboptimal:
    - Large objects take up buffers in database memory
    - Updating large objects causes database fragmentation
  - In the file system, however, an "update" is a delete plus an insert
    - The "before image" of an update is not deleted immediately
  - Storing all related data in a database adds:
    - Transactional consistency
    - Integrated, point-in-time backup and restore
    - A single storage and query vehicle

- 9. SQL Server 2008 Filestream implementation
  - A filegroup for filestream storage is declared using DDL
    - Filestream storage is tied to a database
  - The filegroup is mapped to a directory
    - It must be on an NTFS file system
    - Caution: anyone with the appropriate permissions can delete the files from the file system
  - VARBINARY(MAX) columns can be defined with the FILESTREAM attribute
    - The table must also have a UNIQUEIDENTIFIER ROWGUIDCOL column with a UNIQUE or PRIMARY KEY constraint
    - Filestream storage is not available for other large types
  - The data is stored in the file system
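
A minimal sketch of the DDL described above: a database with a FILESTREAM filegroup and a table with a FILESTREAM column. The database name FilestreamDemo, the table dbo.Documents, and the C:\FSData paths are hypothetical; the parent directory must already exist on an NTFS volume, while the FILESTREAM container folder itself must not.

```sql
-- Prerequisite (assumed already done): enable FILESTREAM for the instance in
-- SQL Server Configuration Manager, then allow T-SQL and Win32 streaming access:
-- EXEC sp_configure 'filestream access level', 2; RECONFIGURE;

CREATE DATABASE FilestreamDemo
ON PRIMARY (NAME = FsDemo_data, FILENAME = 'C:\FSData\FsDemo.mdf'),
FILEGROUP FsGroup CONTAINS FILESTREAM (NAME = FsDemo_fs, FILENAME = 'C:\FSData\FsDemoFS')
LOG ON (NAME = FsDemo_log, FILENAME = 'C:\FSData\FsDemo.ldf');
GO
USE FilestreamDemo;
GO
CREATE TABLE dbo.Documents (
    -- FILESTREAM tables need a UNIQUEIDENTIFIER ROWGUIDCOL with a UNIQUE or PRIMARY KEY constraint
    DocId   UNIQUEIDENTIFIER ROWGUIDCOL NOT NULL UNIQUE DEFAULT NEWID(),
    Title   NVARCHAR(200) NOT NULL,
    -- Stored as files in the FsGroup directory, but queried like VARBINARY(MAX)
    Content VARBINARY(MAX) FILESTREAM NULL
);
```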

- 10. Programming with Filestreams
  - Filestream columns are available to SQL statements
    - When accessed through SQL, they are indistinguishable from VARBINARY(MAX)
  - Filestream data can be accessed and modified using file I/O
    - The PathName function retrieves a symbolic path name
    - Acquire a transaction context with GET_FILESTREAM_TRANSACTION_CONTEXT
    - Use OpenSqlFilestream to get a file handle based on:
      - File name
      - Required access
      - Access options
      - Filestream transaction context

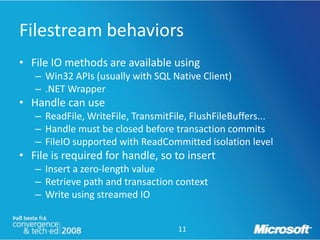

- 11. Filestream behaviors
  - File I/O methods are available through:
    - Win32 APIs (usually with SQL Native Client)
    - A .NET wrapper
  - The handle can be used with:
    - ReadFile, WriteFile, TransmitFile, FlushFileBuffers...
    - The handle must be closed before the transaction commits
    - File I/O is supported at the READ COMMITTED isolation level
  - A file must exist to obtain a handle, so to insert:
    - Insert a zero-length value
    - Retrieve the path and the transaction context
    - Write using streamed I/O
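
The server-side half of the insert steps above can be sketched in T-SQL. This assumes a table with a FILESTREAM column, here called dbo.Documents with columns DocId, Title, and Content (all hypothetical names). The streamed write itself happens on the client through OpenSqlFilestream and WriteFile, so it appears only as a comment.

```sql
BEGIN TRANSACTION;

DECLARE @id UNIQUEIDENTIFIER = NEWID();

-- 1. Insert a zero-length value (0x) so that a file exists; a NULL value has no file
INSERT INTO dbo.Documents (DocId, Title, Content)
VALUES (@id, N'Report.pdf', 0x);

-- 2. Retrieve the symbolic path and the transaction context
--    (GET_FILESTREAM_TRANSACTION_CONTEXT returns NULL outside a transaction)
SELECT Content.PathName()                   AS FilePath,
       GET_FILESTREAM_TRANSACTION_CONTEXT() AS TxContext
FROM dbo.Documents
WHERE DocId = @id;

-- 3. The client passes FilePath and TxContext to OpenSqlFilestream, writes with
--    WriteFile, and closes the handle; only then is the transaction committed.
COMMIT TRANSACTION;
```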

- 12. Demo: spatial data and Filestream

- 13. New SQL Server 2008 date types
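
SQL Server 2008 adds the DATE, TIME, DATETIME2, and DATETIMEOFFSET types, plus functions such as SYSDATETIME, SYSDATETIMEOFFSET, and SWITCHOFFSET. A minimal sketch with arbitrary sample values:

```sql
DECLARE @d   DATE              = '2009-02-24';                          -- date only
DECLARE @t   TIME(3)           = '13:45:30.123';                        -- time only, millisecond precision
DECLARE @dt2 DATETIME2(7)      = '2009-02-24 13:45:30.1234567';         -- 100 ns precision
DECLARE @dto DATETIMEOFFSET(7) = '2009-02-24 13:45:30.1234567 +01:00';  -- time zone offset aware

SELECT @d   AS JustDate,
       @t   AS JustTime,
       @dt2 AS HighPrecision,
       @dto AS WithOffset,
       SWITCHOFFSET(@dto, '+00:00') AS SameInstantInUtc,  -- 12:45:30.1234567 +00:00
       SYSDATETIME()       AS NowDatetime2,
       SYSDATETIMEOFFSET() AS NowWithOffset;
```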

- 14. Table-valued parameters (TVP)
  - Input parameters of table type on stored procedures and functions
  - Optimized to scale and perform better for large data
  - Behave like BCP inside the server
  - Simple programming model
  - Strongly typed
  - Reduce client/server round trips
  - Do not cause a statement to recompile

```sql
CREATE TYPE myTableType AS TABLE (id INT, name NVARCHAR(100), qty INT);
GO
CREATE PROCEDURE myProc (@tvp myTableType READONLY) AS
    UPDATE i
    SET qty += tvp.qty          -- add the quantities passed in the TVP
    FROM Inventory AS i
    INNER JOIN @tvp AS tvp ON i.id = tvp.id;
GO
```
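
TVPs can also be passed to functions, and from T-SQL a TVP is supplied by filling a variable of the table type. A minimal sketch; dbo.OrderLineType and dbo.TotalQuantity are hypothetical names.

```sql
-- Hypothetical table type; TVPs must be declared READONLY in the routine
CREATE TYPE dbo.OrderLineType AS TABLE (id INT PRIMARY KEY, qty INT NOT NULL);
GO
CREATE FUNCTION dbo.TotalQuantity (@lines dbo.OrderLineType READONLY)
RETURNS INT
AS
BEGIN
    RETURN (SELECT SUM(qty) FROM @lines);
END;
GO
-- Fill a variable of the table type and pass the whole set in a single call
DECLARE @lines dbo.OrderLineType;
INSERT INTO @lines (id, qty) VALUES (1, 5), (2, 3), (3, 2);

SELECT dbo.TotalQuantity(@lines) AS TotalQty;  -- 10
```

From ADO.NET, the same parameter would be sent as SqlDbType.Structured instead of a table variable.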

- 15. Table-valued parameters: client stack support
  - Fully supported in ADO.NET 3
  - New parameter type: SqlDbType.Structured
  - Parameters can be passed in multiple ways:
    - DataTable
    - IEnumerable<SqlDataRecord> (fully streamed)
    - DbDataReader

- 16. Hierarchical data
  - Hierarchical data consists of nodes and edges
    - In an employee-boss relationship, the employee and the boss are nodes, and the relationship between them is an edge
  - Hierarchical data can be modeled relationally as:
    - Adjacency model: a separate column for the edge
      - The most common; the column can be in the same table or in a separate one
    - Path enumeration model: a column holding the hierarchical path
    - Nested set model: adds "left" and "right" columns to represent edges, which must be maintained separately
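
For comparison with HierarchyID, here is a minimal sketch of the adjacency model: each row stores its boss as the edge, and a recursive common table expression walks the tree. The table dbo.EmployeesAdj and its rows are hypothetical.

```sql
-- Adjacency model: the edge is the BossId column on each employee row
CREATE TABLE dbo.EmployeesAdj (
    EmployeeId INT PRIMARY KEY,
    Name       NVARCHAR(50) NOT NULL,
    BossId     INT NULL          -- NULL marks the root
);
INSERT INTO dbo.EmployeesAdj (EmployeeId, Name, BossId) VALUES
    (1, N'Anna', NULL), (2, N'Bruno', 1), (3, N'Carla', 1), (4, N'Dario', 2);

-- Walk the tree top-down, computing each node's depth
WITH Tree AS (
    SELECT EmployeeId, Name, BossId, 0 AS Depth
    FROM dbo.EmployeesAdj
    WHERE BossId IS NULL                          -- anchor: the root
    UNION ALL
    SELECT e.EmployeeId, e.Name, e.BossId, t.Depth + 1
    FROM dbo.EmployeesAdj AS e
    INNER JOIN Tree AS t ON e.BossId = t.EmployeeId  -- follow one edge per recursion step
)
SELECT EmployeeId, Name, Depth
FROM Tree
ORDER BY Depth, EmployeeId;
```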

- 17. SQL Server 2008 and hierarchical data
  - New built-in data type: HierarchyID
  - A SQLCLR-based system UDT
    - Usable on .NET clients directly as SqlHierarchyId
  - An implementation of the path enumeration model
    - Uses ORDPATH internally for speed

- 18. HierarchyID
  - Depth-first indexing
  - The level (GetLevel) allows breadth-first indexing
  - Methods for common hierarchical operations:
    - GetRoot
    - GetLevel
    - IsDescendantOf
    - GetDescendant, GetAncestor
    - GetReparentedValue (reparenting)
  - Does not enforce a tree structure
    - A tree can be enforced using constraints
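
A minimal sketch of the HierarchyID methods listed above, including a depth-first primary key and a breadth-first index on a computed level column. The table dbo.EmployeesH and the employee names are hypothetical.

```sql
CREATE TABLE dbo.EmployeesH (
    Node      HIERARCHYID PRIMARY KEY,   -- clustered on Node: depth-first order
    NodeLevel AS Node.GetLevel(),        -- computed level for breadth-first indexing
    Name      NVARCHAR(50) NOT NULL
);
CREATE UNIQUE INDEX IX_EmployeesH_BreadthFirst ON dbo.EmployeesH (NodeLevel, Node);

DECLARE @root  HIERARCHYID = HIERARCHYID::GetRoot();              -- /
DECLARE @bruno HIERARCHYID = @root.GetDescendant(NULL, NULL);     -- /1/
DECLARE @carla HIERARCHYID = @root.GetDescendant(@bruno, NULL);   -- /2/ (after Bruno)
DECLARE @dario HIERARCHYID = @bruno.GetDescendant(NULL, NULL);    -- /1/1/

INSERT INTO dbo.EmployeesH (Node, Name) VALUES
    (@root, N'Anna'), (@bruno, N'Bruno'), (@carla, N'Carla'), (@dario, N'Dario');

-- Bruno's subtree (IsDescendantOf also returns the node itself)
SELECT Node.ToString() AS NodePath, NodeLevel, Name
FROM dbo.EmployeesH
WHERE Node.IsDescendantOf(@bruno) = 1;

-- Dario's boss: one level up
SELECT Name FROM dbo.EmployeesH WHERE Node = @dario.GetAncestor(1);

-- Move Dario under Carla by replacing the /1/ prefix with /2/
UPDATE dbo.EmployeesH
SET Node = Node.GetReparentedValue(@bruno, @carla)
WHERE Node = @dario;
```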

- 19. Demo: HierarchyID

- 20. Sparse properties
  - Many designs require sparse properties:
    - A hardware store has different attributes for each product
    - Lab tests have different readings for each test
    - Directory systems have different attributes for each item
  - These are name-value pairs (property bags)
  - Because they don't appear in every tuple (row), they are difficult to model

- 21. Modeling sparse properties
  - Sparse properties are often modeled as a separate table
    - The base table has one row per item, with the common properties
    - The property table has N rows per item, one per property
    - Known as Entity-Attribute-Value (EAV)
  - Can be modeled as sparse tables
    - Bounded by the 256-table limit in a SQL Server JOIN
  - Can be modeled as sparse columns
    - Bounded by the 1,024-column limit in SQL Server tables
  - Can be modeled as XML
    - Common properties are elements, sparse ones are attributes
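
A minimal sketch of the Entity-Attribute-Value design for the hardware store example. The tables dbo.Items and dbo.ItemProperties and their rows are hypothetical; values are stored as text, which is one common EAV simplification.

```sql
-- Base table: one row per item, common properties only
CREATE TABLE dbo.Items (ItemId INT PRIMARY KEY, Name NVARCHAR(100) NOT NULL);

-- Property table: N rows per item, one per property
CREATE TABLE dbo.ItemProperties (
    ItemId        INT NOT NULL REFERENCES dbo.Items (ItemId),
    PropertyName  NVARCHAR(50)  NOT NULL,
    PropertyValue NVARCHAR(100) NULL,     -- stored as text, so the original type is lost
    PRIMARY KEY (ItemId, PropertyName)
);

INSERT INTO dbo.Items (ItemId, Name) VALUES (1, N'Hammer'), (2, N'Paint');
INSERT INTO dbo.ItemProperties (ItemId, PropertyName, PropertyValue) VALUES
    (1, N'WeightKg', N'0.8'), (1, N'HandleMaterial', N'Wood'),
    (2, N'Color', N'Red'),    (2, N'Liters', N'2.5');

-- Rebuilding a "wide" row needs one outer join per property requested
SELECT i.Name, w.PropertyValue AS WeightKg, c.PropertyValue AS Color
FROM dbo.Items AS i
LEFT JOIN dbo.ItemProperties AS w ON w.ItemId = i.ItemId AND w.PropertyName = N'WeightKg'
LEFT JOIN dbo.ItemProperties AS c ON c.ItemId = i.ItemId AND c.PropertyName = N'Color';
```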

- 22. SQL Server 2008 and sparse columns
  - Sparse columns extend the column limit
    - Still a 1,024-column limit for non-sparse columns
    - Up to 30,000 columns in a wide table that uses sparse columns
  - A column is marked as SPARSE in the table definition
  - An optional column set (an XML column) represents all non-NULL sparse column name-value pairs together as a single XML fragment
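
The same hardware store data can be sketched with sparse columns and a column set. The table dbo.ItemsSparse and its columns are hypothetical. Sparse columns must be nullable, and with a column set defined, SELECT * returns the column set instead of the individual sparse columns, so the queries name their columns explicitly.

```sql
CREATE TABLE dbo.ItemsSparse (
    ItemId         INT PRIMARY KEY,
    Name           NVARCHAR(100) NOT NULL,
    WeightKg       DECIMAL(6,2)  SPARSE NULL,   -- NULLs in sparse columns take no space
    HandleMaterial NVARCHAR(50)  SPARSE NULL,
    Color          NVARCHAR(20)  SPARSE NULL,
    Liters         DECIMAL(6,2)  SPARSE NULL,
    Properties     XML COLUMN_SET FOR ALL_SPARSE_COLUMNS  -- all non-NULL sparse values as XML
);

INSERT INTO dbo.ItemsSparse (ItemId, Name, WeightKg, HandleMaterial) VALUES (1, N'Hammer', 0.8, N'Wood');
INSERT INTO dbo.ItemsSparse (ItemId, Name, Color, Liters)            VALUES (2, N'Paint', N'Red', 2.5);

-- Sparse columns are still queried by name like ordinary columns
SELECT ItemId, Name, Color FROM dbo.ItemsSparse WHERE Color IS NOT NULL;

-- The column set returns each row's non-NULL sparse values as one XML fragment
SELECT ItemId, Name, Properties FROM dbo.ItemsSparse;
```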

- 23. Change tracking
  - SQL Server 2008 offers three different flavors of tracking data changes:
    - Change Tracking, Change Data Capture (CDC, used in data warehousing), and Auditing (security-oriented)
  - Change Tracking keeps track of data modifications in a table
    - Lightweight (no triggers, no schema changes)
    - Overhead similar to a traditional index
    - Synchronous at commit time
    - Gives you access to the "net changes" since a given version (T0)
    - Doesn't keep track of "historical" changes
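
A minimal sketch of Change Tracking's "net changes" behavior. The table dbo.ProductsCT and its rows are hypothetical, and the retention settings are arbitrary. A row updated twice after the last sync is reported once, and the query returns the current operation per key rather than a history.

```sql
-- Enable change tracking for the current database once (dynamic SQL so it works in any database)
IF NOT EXISTS (SELECT 1 FROM sys.change_tracking_databases WHERE database_id = DB_ID())
BEGIN
    DECLARE @sql NVARCHAR(MAX) = N'ALTER DATABASE ' + QUOTENAME(DB_NAME())
        + N' SET CHANGE_TRACKING = ON (CHANGE_RETENTION = 2 DAYS, AUTO_CLEANUP = ON);';
    EXEC (@sql);
END;

-- Change tracking requires a primary key on the table
CREATE TABLE dbo.ProductsCT (ProductId INT PRIMARY KEY, Name NVARCHAR(100) NOT NULL, Price MONEY NOT NULL);
ALTER TABLE dbo.ProductsCT ENABLE CHANGE_TRACKING WITH (TRACK_COLUMNS_UPDATED = ON);

INSERT INTO dbo.ProductsCT VALUES (1, N'Hammer', 10), (2, N'Saw', 20);

-- Version a client would store after its last synchronization (T0)
DECLARE @last_sync BIGINT = CHANGE_TRACKING_CURRENT_VERSION();

UPDATE dbo.ProductsCT SET Price = 11 WHERE ProductId = 1;
UPDATE dbo.ProductsCT SET Price = 12 WHERE ProductId = 1;   -- second change to the same row
DELETE FROM dbo.ProductsCT WHERE ProductId = 2;
INSERT INTO dbo.ProductsCT VALUES (3, N'Drill', 50);

-- One row per changed key since T0: U for 1, D for 2, I for 3
SELECT ct.ProductId, ct.SYS_CHANGE_OPERATION, ct.SYS_CHANGE_VERSION
FROM CHANGETABLE(CHANGES dbo.ProductsCT, @last_sync) AS ct
ORDER BY ct.ProductId;
```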

- 24. Why go multidimensional?
  - Organizations have large volumes of related data stored in a variety of data systems, often in different formats
  - Data systems may not:
    - Be optimized for analytical queries
    - Contain all the data required, by design or over time
    - Manage historical context
    - Be available or accessible
  - Non-technical employees and managers may not have sufficient skills, tools, or permissions to query data systems
  - Systems may not share universal definitions of an entity
  - Analytical queries and reporting can impact operational system performance

- 25. A realistic scenario
  - Data source independence
    - Can survive OLTP system changes
    - Heterogeneous data sources
  - Single version of the truth
    - Data warehouse: data centralization
    - Data mart: a specific model for analysis
    - The data mart, not the data warehouse, is user-oriented
  - Some tools can also be used by OLTP solutions
    - Reporting Services
    - OLTP queries

- 26. The Microsoft BI platform: SQL Server 2008 (Integrate, Store, Analyze, Report)

- 27. New with Microsoft SQL Server 2008: integration and data warehousing
  - Scale and manage large numbers of users and large volumes of data
    - Enhanced Partitioning: improved query performance on large tables
    - DW Query Optimizations: queries optimized for data warehousing scenarios
    - Data Compression: increased I/O performance with efficient, cost-effective data storage
    - Resource Governor: manage concurrent workloads of ad-hoc queries, reporting, and analysis
  - Integrate growing volumes of data
    - Persistent Lookups: optimize ETL performance by identifying data in your largest tables
    - Change Data Capture: reduce data load volumes by capturing operational changes in data
    - MERGE SQL statement: simplify insert and update data processing
    - Data Profiling: profile your information to identify dirty data
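
A minimal sketch of how the MERGE statement folds the insert and update steps of a load into one statement. The dimension table dbo.DimCustomer, the staging table dbo.StagingCustomer, and their rows are hypothetical.

```sql
CREATE TABLE dbo.DimCustomer     (CustomerKey INT PRIMARY KEY, Name NVARCHAR(100) NOT NULL, City NVARCHAR(50) NOT NULL);
CREATE TABLE dbo.StagingCustomer (CustomerKey INT PRIMARY KEY, Name NVARCHAR(100) NOT NULL, City NVARCHAR(50) NOT NULL);

INSERT INTO dbo.DimCustomer     VALUES (1, N'Contoso', N'Milano'), (2, N'Fabrikam', N'Roma');
INSERT INTO dbo.StagingCustomer VALUES (2, N'Fabrikam', N'Torino'), (3, N'Litware', N'Napoli');

MERGE dbo.DimCustomer AS tgt
USING dbo.StagingCustomer AS src
    ON tgt.CustomerKey = src.CustomerKey
WHEN MATCHED AND (tgt.Name <> src.Name OR tgt.City <> src.City) THEN
    UPDATE SET Name = src.Name, City = src.City          -- changed rows: update in place
WHEN NOT MATCHED BY TARGET THEN
    INSERT (CustomerKey, Name, City)
    VALUES (src.CustomerKey, src.Name, src.City)          -- new rows: insert
OUTPUT $action AS MergeAction, inserted.CustomerKey;      -- report what happened per row
```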

- 28. Enterprise-class data integration with SQL Server Integration Services
  - Scalable integrations
    - Connect to data
    - Multi-threaded architecture
    - Comprehensive transformations
    - Profile your data
    - Cleanse your data
  - Data quality
    - Cleanse data
    - Text mining
    - Identify dirty data

- 29. Rich connectivity
  - Extensive connectivity
    - Standards-based support
    - XML, flat files, and Excel
    - Binary files
    - BizTalk, Microsoft Message Queuing
    - Oracle, DB2, and SQL Server
    - Partner ecosystem
  - Change Data Capture
    - Transparently capture changes
    - Real-time integration
  - (Diagram labels: unstructured data; legacy data: binary files; application database; OLTP; change tables; DW)
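
Unlike Change Tracking, Change Data Capture runs asynchronously: a capture job reads the transaction log and fills change tables. A minimal sketch, assuming an edition that supports CDC with SQL Server Agent running; the table dbo.Orders and its rows are hypothetical.

```sql
-- Enable CDC for the current database once
IF (SELECT is_cdc_enabled FROM sys.databases WHERE database_id = DB_ID()) = 0
    EXEC sys.sp_cdc_enable_db;
GO
CREATE TABLE dbo.Orders (OrderId INT PRIMARY KEY, Amount MONEY NOT NULL);

-- Creates the capture instance dbo_Orders and its change table
EXEC sys.sp_cdc_enable_table
    @source_schema = N'dbo',
    @source_name   = N'Orders',
    @role_name     = NULL;          -- no gating role
GO
INSERT INTO dbo.Orders VALUES (1, 100), (2, 250);
UPDATE dbo.Orders SET Amount = 300 WHERE OrderId = 2;
GO
-- Changes appear only after the capture job has processed the log; until then
-- the LSN range below may be invalid and the function can raise an error.
DECLARE @from BINARY(10) = sys.fn_cdc_get_min_lsn(N'dbo_Orders');
DECLARE @to   BINARY(10) = sys.fn_cdc_get_max_lsn();

-- __$operation: 1 = delete, 2 = insert, 4 = update (after image)
SELECT __$operation, OrderId, Amount
FROM cdc.fn_cdc_get_all_changes_dbo_Orders(@from, @to, N'all');
```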

- 30. Rich connectivity: data providers
  - ODBC, OLE DB, XML, SQL Server, Oracle, DB2, Teradata, SAP NetWeaver BI, MySAP, Hyperion Essbase, SQL Server Report Server Models, SQL Server Integration Services, SQL Server Analysis Services, SQL Server Data Mining Models

- 31. Analysis Services 2008: drive pervasive insights
  - Design scalable solutions
    - Productivity-enhancing designers
    - Scalable infrastructure
    - Superior performance
  - Extend usability
    - Unified metadata model
    - Central KPI manageability
    - Predictive analysis
  - Deliver actionable insight
    - Optimized Office interoperability
    - Rich partner extensibility
    - Open, embeddable architecture

- 32. New with Microsoft SQL Server 2008 Analysis Services
  - Innovative cube designer
  - Best-practice design alerts
  - Enhanced dimension design
  - Enhanced aggregation design
  - New subspace computations
  - MOLAP-enabled write-back
  - Enhanced backup scalability
  - New resource monitor
  - Execution plan

- 33. Reporting Services 2008: deliver enterprise reports
  - Author impactful reports
    - Powerful designers
    - Flexible report layout
    - Rich visualizations
  - Manage enterprise workload
    - Enterprise-scale platform
    - Central deployment
    - Strong manageability
  - Deliver personalized reports
    - Interactive reports
    - Rendering in the format users want
    - Delivery to the location users want

- 34. New with Microsoft SQL Server 2008 Reporting Services
  - New report designer
  - Enhanced data visualization
  - New flexible report layout
  - Scalable report engine
  - Single-service architecture
  - New Word rendering
  - Improved Excel rendering
  - New end-user design experience
  - SharePoint integration

- 35. The complete flow
  - (Architecture diagram. Sources: OLTP, CRM, ERP, LOB. Integration Services (ETL), ODS, and DW. Reporting and analysis components (OLAP, DM). Consumers: client portal, Office/SharePoint/PPS, analytical applications (MBS, third-party), query and reporting, devices. Analytic platform: .NET Framework (IIS, ASP.NET, CLR) and SQL Server (relational, multidimensional, XML), with BI development and management tools and SQL Server management tools.)

- 36. Languages, APIs, and SDKs
  - MDX and DMX
  - ADOMD.NET (AdomdClient and AdomdServer)
  - XMLA (XML for Analysis)
  - AMO
  - RDL
  - Report Server Web Service, RS URL Access, and RS Extensions

- 37. Develop custom client applications
  - Using ADOMD.NET, AMO, and XMLA in your own applications
  - Front-ending RS and ProClarity
  - Integrating with AdomdServer and server-side assemblies
  - Using Data Mining Model Viewer controls
  - Visualization with WPF and Silverlight

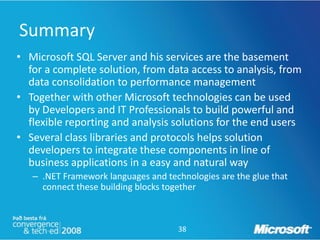

- 38. Summary
  - Microsoft SQL Server and its services are the foundation for a complete solution, from data access to analysis and from data consolidation to performance management
  - Together with other Microsoft technologies, they can be used by developers and IT professionals to build powerful, flexible reporting and analysis solutions for end users
  - Several class libraries and protocols help solution developers integrate these components into line-of-business applications easily and naturally
    - .NET Framework languages and technologies are the glue that connects these building blocks

- 39. Don't forget the evaluations!
  - Fill in the evaluations and you'll get:
    - Windows Home Server (1st day)
    - Windows 7 Beta (2nd day)

- 40. © 2009 Microsoft Corporation. All rights reserved. Microsoft, Hyper-V, RemoteApp, Windows logo, Windows Start button, Windows Server Windows, Windows Vista and other product names are or may be registered trademarks and/or trademarks in the U.S. and/or other countries. All other trademarks are property of their respective owners. The information herein is for informational purposes only and represents the current view of Microsoft Corporation as of the date of this presentation. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information provided after the date of this presentation. MICROSOFT MAKES NO WARRANTIES, EXPRESS, IMPLIED OR STATUTORY, AS TO THE INFORMATION IN THIS PRESENTATION.