
Get started with Microsoft SQL Polybase

- 1. SQL Server 2016 PolyBase. Henk van der Valk, Oct. 15, 2016. Level: Beginner. www.Henkvandervalk.com

- 2. Starting SQL Server 2016 on a server with 24 TB of RAM

- 3. Thanks to our platinum sponsors: PASS SQL Saturday Holland 2016

- 4. Thanks to our gold and silver sponsors: PASS SQL Saturday Holland 2016. APS Onsite!

- 5. Speaker introduction
  - 10+ years active in the SQLPass community!
  - 10 years at the Unisys EMEA Performance Center:
    - 2002: largest SQL DWH in the world (SQL 2000)
    - Project REAL (SQL 2005)
    - ETL world record: loading 1 TB within 30 minutes (SQL 2008)
    - Contributor to SQL performance whitepapers
  - Perf tips & tricks: www.henkvandervalk.com
  - Schuberg Philis: 100% uptime for mission-critical apps
  - Since April 1st, 2011: Microsoft Data Platform!
  - All info represents my own personal opinion (based upon my own experience) and not that of Microsoft.
  - @HenkvanderValk

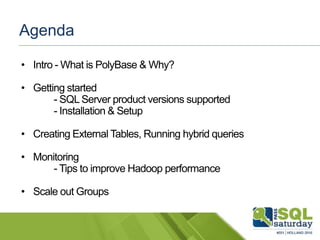

- 6. Agenda

- 7. SQL Server 2016 as a fraud detection scoring engine: https://blogs.technet.microsoft.com/machinelearning/2016/09/22/predictions-at-the-speed-of-data/ HTAP (Hybrid Transactional/Analytical Processing)

- 8. The big data lake challenge: how to orchestrate?
  - Different types of data: webpages, logs, and clicks; hardware and software sensors; semi-structured/unstructured data
  - Large scale: hundreds of servers
  - Advanced data analysis: integration between structured and unstructured data; the power of both

- 9. PolyBase builds the bridge: Access any data
  - Azure Blob Storage
  - Just-in-time data integration across relational and non-relational data
  - Fast, simple data loading
  - Best of both worlds
  - T-SQL compatible
  - Uses computational power at the source
  - Opportunity for new types of analysis

- 10. PolyBase view in SQL Server 2016
  - Execute T-SQL queries against relational data in SQL Server and 'semi-structured' data in HDFS and/or Azure
  - Leverage existing T-SQL skills and BI tools to gain insights from different data stores
  - Expand the reach of SQL Server to Hadoop (HDFS & WASB)

- 11. Remove the complexity of big data
  - T-SQL over Hadoop, JSON support and PolyBase (each marked NEW)
  - Simple T-SQL to query Hadoop data (HDFS)
  - Diagram: a PolyBase T-SQL query submitted to SQL Server returns results that combine SQL Server and Hadoop data.

- 12. PolyBase use cases

- 13. PolyBase: turning raw tweet data into information. Query and store Hadoop data: bi-directional, seamless and fast.

- 16. Set up & query SQL Server 2016 & SQL DW PolyBase!

- 17. Prerequisites
  - An instance of SQL Server (64-bit), Enterprise or Developer Edition
  - Microsoft .NET Framework 4.5
  - Oracle Java SE Runtime Environment (JRE) version 7.51 or higher (64-bit); either JRE or Server JRE will work. Go to Java SE downloads.
    - Note: the installer will fail if the JRE is not present.
  - Minimum memory: 4 GB
  - Minimum hard disk space: 2 GB
  - TCP/IP connectivity must be enabled.

- 18. Step 2: Install SQL Server with the PolyBase DLLs
  - Install one or more SQL Server instances with PolyBase.
  - The PolyBase DLLs (Engine and DMS) are installed and registered as Windows services.
  - Prerequisite: the user must download and install the Oracle JRE.
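To confirm that setup actually installed the feature, a quick check from SSMS can help. A minimal sketch: `SERVERPROPERTY('IsPolyBaseInstalled')` is a documented server property in SQL Server 2016, and `sys.dm_exec_dms_services` is one of the PolyBase DMVs listed on slide 39; nothing else is assumed.

```sql
-- Returns 1 when the PolyBase feature is installed on this instance, 0 otherwise.
SELECT SERVERPROPERTY('IsPolyBaseInstalled') AS IsPolyBaseInstalled;

-- Shows the PolyBase Data Movement Service (DMS) instances and their status.
SELECT * FROM sys.dm_exec_dms_services;
```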

- 19. Components introduced in SQL Server 2016
  - PolyBase Engine service
  - PolyBase Data Movement Service (with HDFS Bridge)
  - External table constructs
  - MapReduce (MR) pushdown computation support

- 20. How to use PolyBase in SQL Server 2016
  1. Set up a Hadoop cluster or Azure Storage blob
  2. Install SQL Server
  3. Configure a PolyBase group
  4. Choose a Hadoop flavor
  5. Attach a Hadoop cluster or Azure Storage

  Diagram: PolyBase T-SQL queries are submitted to the head node and can only refer to tables on the head node and/or external tables; the compute nodes do the scale-out processing.

- 21. Step 1: Set up a Hadoop cluster…
  - Hortonworks or Cloudera distributions
  - Hadoop 2.0 or above
  - Linux or Windows
  - On-premises or in Azure

- 22. Step 1: …or set up an Azure Storage blob
  - Azure Storage blob (ASB) exposes an HDFS layer.
  - PolyBase reads from and writes to ASB using the Hadoop RecordReader/RecordWriter.
  - No compute pushdown support for ASB.

- 23. Step 3: Configure a PolyBase group
  - A PolyBase scale-out group consists of one head node and one or more compute nodes.
  - The head node is the SQL Server instance to which queries are submitted.
  - Compute nodes are used for scale-out query processing for data in HDFS or Azure.
  - Diagram: the head node runs the PolyBase Engine and a PolyBase DMS; each compute node runs a PolyBase DMS.
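Joining a compute node to the group is done with a system stored procedure, run on the compute node itself. A sketch, assuming a head node machine named `HEADNODE01` (hypothetical) running the default instance `MSSQLSERVER` and the default DMS control channel port 16450:

```sql
-- Run on the SQL Server instance that should become a compute node.
-- HEADNODE01 is a placeholder for your head node's machine name.
EXEC sp_polybase_join_group
    @head_node_address = N'HEADNODE01',
    @dms_control_channel_port = 16450,
    @head_node_sql_server_instance_name = N'MSSQLSERVER';
-- Afterwards, restart the PolyBase services on this node as described
-- in the PolyBase scale-out group documentation.
```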

- 24. Step 4: Choose/select a Hadoop flavor
  - Supported Hadoop distributions:
    - Cloudera CDH 5.x on Linux
    - Hortonworks 2.x on Linux and Windows Server
  - What happens under the covers? The right client JARs are loaded to connect to the Hadoop distribution.
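The flavor is a server-wide setting chosen with `sp_configure`. A sketch; the mapping of values to distributions in the comments follows the SQL Server 2016 documentation (7 for Hortonworks HDP 2.x and Azure Blob Storage, 6 for Cloudera CDH 5.x on Linux), but check the exact version list for your release before relying on it:

```sql
-- Select the Hadoop flavor; this decides which Hadoop client JARs PolyBase loads.
-- 7 = Hortonworks HDP 2.x and Azure Blob Storage (WASB[S]); 6 = Cloudera CDH 5.x on Linux.
EXEC sp_configure @configname = 'hadoop connectivity', @configvalue = 7;
RECONFIGURE;
-- The new value takes effect after restarting the SQL Server service,
-- which also restarts the PolyBase Engine and Data Movement services.
```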

- 25. Step 5: Attach a Hadoop cluster or Azure Storage
  - Diagram: the PolyBase Engine on the head node and a PolyBase DMS on each node connect to Azure Storage volumes.
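For a Hadoop cluster, the attach step is an external data source of type `HADOOP` (the Azure Storage variant appears on slide 28). A sketch with placeholder values: the name `MyHadoopCluster`, the address `10.10.10.10` and the ports 8020 and 8050 are assumptions, so use your cluster's NameNode and YARN ResourceManager addresses. `RESOURCE_MANAGER_LOCATION` is optional, but without it PolyBase cannot push computation to Hadoop.

```sql
CREATE EXTERNAL DATA SOURCE MyHadoopCluster   -- hypothetical name
WITH (
    TYPE = HADOOP,
    -- HDFS NameNode URI (placeholder address and port).
    LOCATION = 'hdfs://10.10.10.10:8020',
    -- YARN ResourceManager (placeholder); enables MapReduce pushdown.
    RESOURCE_MANAGER_LOCATION = '10.10.10.10:8050'
);
```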

- 26. After setup
  - Compute nodes are used for scale-out query processing on external tables in HDFS.
  - Tables on compute nodes cannot be referenced by queries submitted to the head node.
  - The number of compute nodes can be dynamically adjusted by the DBA.
  - Hadoop clusters can be shared between multiple SQL Server 2016 PolyBase groups.
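Checking and adjusting group membership can also be done in T-SQL. A sketch: the first query runs on the head node, while `sp_polybase_leave_group` runs on the compute node that should leave the group.

```sql
-- On the head node: list the head and compute nodes of the scale-out group.
SELECT compute_node_id, type, name, address
FROM sys.dm_exec_compute_nodes;

-- On a compute node: remove this instance from its scale-out group.
EXEC sp_polybase_leave_group;
```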

- 27. PolyBase configuration

```sql
--1: Create a master key on the database.
-- Required to encrypt the credential secret.
CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'SQLSat#551';
-- select * from sys.symmetric_keys

-- Create a database scoped credential for Azure blob storage.
-- IDENTITY: any string (this is not used for authentication to Azure storage).
-- SECRET: your Azure storage account key.
CREATE DATABASE SCOPED CREDENTIAL AzureStorageCredential
WITH IDENTITY = 'wasbuser',
     SECRET = '1abcdEFGb3Mcn0F9UdJS/10taXmr5L17xrEO17rlMRL8SNYg==';
```

- 28. Create an external data source

```sql
--2: Create an external data source.
-- LOCATION: Azure storage account name and blob container name.
-- CREDENTIAL: the database scoped credential created above.
CREATE EXTERNAL DATA SOURCE AzureStorage
WITH (
    TYPE = HADOOP,
    -- Replace <container_name> and <storage_account_name> with your own values.
    LOCATION = 'wasbs://<container_name>@<storage_account_name>.blob.core.windows.net',
    CREDENTIAL = AzureStorageCredential
);

-- View the list of external data sources.
SELECT * FROM sys.external_data_sources;
```

- 29. Create an external file format

```sql
-- select * from sys.external_file_formats
--3: Create an external file format.
-- FORMAT_TYPE: type of format in Hadoop
-- (DELIMITEDTEXT, RCFILE, ORC, PARQUET).
-- With GZIP:
CREATE EXTERNAL FILE FORMAT TextDelimited_GZIP
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (FIELD_TERMINATOR = '|', USE_TYPE_DEFAULT = TRUE),
    DATA_COMPRESSION = 'org.apache.hadoop.io.compress.GzipCodec'
);
```
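The external table on slide 30 refers to a file format named `TextFileFormat`, which is not defined in the deck. A sketch of what it might look like, assuming uncompressed, pipe-delimited files (the same options as `TextDelimited_GZIP`, minus compression):

```sql
-- Assumption: plain (uncompressed) pipe-delimited text files.
CREATE EXTERNAL FILE FORMAT TextFileFormat
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (FIELD_TERMINATOR = '|', USE_TYPE_DEFAULT = TRUE)
);
```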

- 30. Create an external table

```sql
--4: Create an external table.
-- The external table points to data stored in Azure storage.
-- LOCATION: path to a file or directory that contains the data (relative to the blob container).
-- To point to all files under the blob container, use LOCATION='/'
CREATE EXTERNAL TABLE [dbo].[lineitem4] (
    [ROWID1] [bigint] NULL,
    [L_SHIPDATE] [smalldatetime] NOT NULL,
    [L_ORDERKEY] [bigint] NOT NULL,
    [L_DISCOUNT] [smallmoney] NOT NULL,
    -- ..
    [L_COMMENT] [varchar](44) NOT NULL
)
WITH (
    LOCATION = '/',
    DATA_SOURCE = AzureStorage,
    FILE_FORMAT = TextFileFormat,
    REJECT_TYPE = VALUE,
    REJECT_VALUE = 0
);
```
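Because the optimizer decides cost-based which operators to push down (see query example #2), statistics on external tables are worth creating explicitly. A sketch for one column of `lineitem4`; the statistics name is hypothetical, and `FULLSCAN` reads the whole external data set, which can take a while on large files:

```sql
-- Statistics help the optimizer estimate row counts for data outside SQL Server.
CREATE STATISTICS stat_lineitem4_shipdate   -- hypothetical name
ON [dbo].[lineitem4] ([L_SHIPDATE])
WITH FULLSCAN;
```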

- 31. Import

```sql
------------------------------------
-- IMPORT data from WASB into a NEW table:
------------------------------------
SELECT *
INTO [dbo].[LINEITEM_MO_final_temp]
FROM (
    SELECT * FROM [dbo].[lineitem1]
) AS Import;
```

- 32. Export data (gzipped)

```sql
-- Enable export / INSERT into an external table
EXEC sp_configure 'allow polybase export', 1;
RECONFIGURE;

CREATE EXTERNAL TABLE [dbo].[lineitem_export] (
    [ROWID1] [bigint] NULL,
    -- ..
    [L_SHIPINSTRUCT] [varchar](25) NOT NULL,
    [L_COMMENT] [varchar](44) NOT NULL
)
WITH (
    LOCATION = '/gzipped',
    DATA_SOURCE = AzureStorage,
    FILE_FORMAT = TextDelimited_GZIP,
    REJECT_TYPE = VALUE,
    REJECT_VALUE = 0
);
```
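The slide only creates the export table; the export itself is an `INSERT INTO ... SELECT` against it. A sketch, assuming `[dbo].[LINEITEM_MO_final_temp]` (created on slide 31) has the same columns, in the same order, as `lineitem_export`:

```sql
-- Writes gzip-compressed, pipe-delimited files under /gzipped in the blob container.
-- Assumes both tables have identical column lists.
INSERT INTO [dbo].[lineitem_export]
SELECT * FROM [dbo].[LINEITEM_MO_final_temp];
```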

- 33. Manage external resources in SSMS / VSTS
  - New: External Tables
  - New: External Resources (External Data Sources, External File Formats)

- 34. PolyBase query example #1

```sql
-- SELECT on an external table (data in HDFS)
SELECT * FROM Customer WHERE c_nationkey = 3 AND c_acctbal < 0;
```

A possible execution plan:
1. CREATE temp table T (execute on compute nodes)
2. IMPORT FROM HDFS: the HDFS Customer file is read into T
3. EXECUTE QUERY: `SELECT * FROM T WHERE T.c_nationkey = 3 AND T.c_acctbal < 0`

- 35. PolyBase query example #2

```sql
-- SELECT and aggregate on an external table (data in HDFS)
SELECT AVG(c_acctbal) FROM Customer WHERE c_acctbal < 0 GROUP BY c_nationkey;
```

Execution plan, step 1: run an MR job on Hadoop that applies the filter and computes the aggregate on Customer. What happens here?
- Step 1: the query optimizer (QO) compiles the predicate into Java and generates a MapReduce (MR) job.
- Step 2: the engine submits the MR job to the Hadoop cluster. The output is left in hdfsTemp, e.g. `<US, $-975.21>`, `<FRA, $-119.13>`, `<UK, $-63.52>`.

- 36. PolyBase query example #2

```sql
-- SELECT and aggregate on an external table (data in HDFS)
SELECT AVG(c_acctbal) FROM Customer WHERE c_acctbal < 0 GROUP BY c_nationkey;
```

Execution plan:
1. Run an MR job on Hadoop: apply the filter and compute the aggregate on Customer; the output is left in hdfsTemp (`<US, $-975.21>`, `<FRA, $-119.13>`, `<UK, $-63.52>`).
2. CREATE temp table T on the DW compute nodes.
3. IMPORT hdfsTemp: read hdfsTemp into T.
4. RETURN OPERATION: `SELECT * FROM T`.

Notes:
- The predicate and aggregate are pushed into the Hadoop cluster as a MapReduce job.
- The query optimizer makes a cost-based decision on which operators to push down.
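The cost-based pushdown decision can be overridden per query with hints. A sketch on the same query: `FORCE EXTERNALPUSHDOWN` only works when the Hadoop data source has `RESOURCE_MANAGER_LOCATION` set, and `DISABLE EXTERNALPUSHDOWN` yields a plan like example #1, where the data is imported first and filtered in SQL Server.

```sql
-- Always run the filter and aggregate on Hadoop as a MapReduce job.
SELECT AVG(c_acctbal) FROM Customer WHERE c_acctbal < 0 GROUP BY c_nationkey
OPTION (FORCE EXTERNALPUSHDOWN);

-- Never push down: import the HDFS data and evaluate the query in SQL Server.
SELECT AVG(c_acctbal) FROM Customer WHERE c_acctbal < 0 GROUP BY c_nationkey
OPTION (DISABLE EXTERNALPUSHDOWN);
```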

- 37. Summary: PolyBase. Query relational and non-relational data, on-premises and in Azure, with T-SQL.
  - Diagram: apps send a T-SQL query to SQL Server, which reads from both SQL Server and Hadoop.
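Combining both worlds in one statement is an ordinary join. A sketch, assuming a hypothetical local SQL Server table `dbo.Nation (n_nationkey, n_name)` next to the external `Customer` table from the query examples:

```sql
-- Local relational table joined with external HDFS data in a single T-SQL query.
SELECT n.n_name, AVG(c.c_acctbal) AS avg_negative_balance
FROM Customer AS c                -- external table (HDFS)
JOIN dbo.Nation AS n              -- hypothetical local table
    ON n.n_nationkey = c.c_nationkey
WHERE c.c_acctbal < 0
GROUP BY n.n_name;
```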

- 39. Lots of new DMVs

```sql
----------------------------------------
-- Monitoring PolyBase / all DMVs:
----------------------------------------
-- Catalog views
SELECT * FROM sys.external_tables;
SELECT * FROM sys.external_data_sources;
SELECT * FROM sys.external_file_formats;
-- Dynamic management views
SELECT * FROM sys.dm_exec_compute_node_errors;
SELECT * FROM sys.dm_exec_compute_node_status;
SELECT * FROM sys.dm_exec_compute_nodes;
SELECT * FROM sys.dm_exec_distributed_request_steps;
SELECT * FROM sys.dm_exec_dms_services;
SELECT * FROM sys.dm_exec_distributed_requests;
SELECT * FROM sys.dm_exec_distributed_sql_requests;
SELECT * FROM sys.dm_exec_dms_workers;
SELECT * FROM sys.dm_exec_external_operations;
SELECT * FROM sys.dm_exec_external_work;
```

- 40. Find the longest-running query

```sql
-- Find the longest-running query
SELECT dr.execution_id, st.text, dr.total_elapsed_time
FROM sys.dm_exec_distributed_requests AS dr
CROSS APPLY sys.dm_exec_sql_text(dr.sql_handle) AS st
ORDER BY dr.total_elapsed_time DESC;
```

- 41. Find the longest-running step of the distributed query plan

```sql
-- Find the longest-running step of the distributed query plan
SELECT execution_id, step_index, operation_type, distribution_type,
       location_type, status, total_elapsed_time, command
FROM sys.dm_exec_distributed_request_steps
WHERE execution_id = 'QID1120'
ORDER BY total_elapsed_time DESC;
```

- 42. Details on a step_index

```sql
SELECT execution_id, step_index, dms_step_index, compute_node_id,
       type, input_name, length, total_elapsed_time, status
FROM sys.dm_exec_external_work
WHERE execution_id = 'QID1120' AND step_index = 7
ORDER BY total_elapsed_time DESC;
```
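The two previous queries hard-code the execution ID `QID1120`. A sketch that chains them instead: it looks up the longest-running request first and then lists its steps, using only DMV columns that already appear on slides 40 and 41.

```sql
-- Steps of the longest-running distributed request, slowest step first.
WITH longest AS (
    SELECT TOP (1) execution_id
    FROM sys.dm_exec_distributed_requests
    ORDER BY total_elapsed_time DESC
)
SELECT s.execution_id, s.step_index, s.operation_type, s.location_type,
       s.status, s.total_elapsed_time
FROM sys.dm_exec_distributed_request_steps AS s
JOIN longest AS l
    ON l.execution_id = s.execution_id
ORDER BY s.total_elapsed_time DESC;
```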

- 43. Optimizations

- 44. PolyBase: data compression to minimize data movement. http://henkvandervalk.com/aps-polybase-for-hadoop-and-windows-azure-blob-storage-wasb-integration

- 45. Enable pushdown configuration (Hadoop); improves query performance
  1. Find the file yarn-site.xml in the installation path of SQL Server, e.g. `C:\Program Files\Microsoft SQL Server\MSSQL13.SQL2016RTM\MSSQL\Binn\Polybase\Hadoop\conf\yarn-site.xml`.
  2. On the Hadoop machine, open yarn-site.xml in the Hadoop configuration directory and copy the value of the configuration key `yarn.application.classpath`.
  3. On the SQL Server machine, in the yarn-site.xml file, find the `yarn.application.classpath` property. Paste the value from the Hadoop machine into the value element.
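Besides the classpath, pushdown needs the external data source to know where the YARN ResourceManager lives. For an existing Hadoop data source this can be added afterwards with `ALTER EXTERNAL DATA SOURCE`; a sketch, assuming a data source named `MyHadoopCluster` and a ResourceManager at `10.10.10.10:8050` (both placeholders):

```sql
-- Point an existing Hadoop data source at its YARN ResourceManager to enable pushdown.
-- MyHadoopCluster and 10.10.10.10:8050 are placeholders.
ALTER EXTERNAL DATA SOURCE MyHadoopCluster
SET RESOURCE_MANAGER_LOCATION = '10.10.10.10:8050';
```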

- 46. Time to insights: APS cybercrime video & demo! Various sources, single query.

- 47. Further reading
  - Get started with PolyBase: https://msdn.microsoft.com/en-us/library/mt163689.aspx
  - Data compression tests: http://henkvandervalk.com/aps-polybase-for-hadoop-and-windows-azure-blob-storage-wasb-integration

- 49. Please fill in the evaluation forms

Editor's Notes

- #2: http://www.sqlsaturday.com/551/Sessions/Schedule.aspx SQL PolyBase has been a high-end feature of SQL APS and is now also available in SQL 2016, SQL DB and SQL DW! It allows you to use regular T-SQL statements to access data stored in Hadoop and/or Azure Blob Storage ad hoc from within SQL Server. This session will show you how it works and how to get started!

- #4: Please add this slide at the start of your presentation, after the first welcome slide.

- #5: Please add this slide at the start of your presentation, after the first welcome slide.

- #17: BCP out vs RTC

- #27: - Improved PolyBase query performance with scale-out computation on external data (PolyBase scale-out groups) - Improved PolyBase query performance with faster data movement from HDFS to SQL Server and between PolyBase Engine and SQL Server

- #35: Additionally - there is… - Support for exporting data to external data source via INSERT INTO EXTERNAL TABLE SELECT FROM TABLE - Support for push-down computation to Hadoop for string operations (compare, LIKE) - Support for ALTER EXTERNAL DATA SOURCE statement

- #38: When it comes to key BI investments, we are making it much easier to manage relational and non-relational data. PolyBase technology allows you to query Hadoop data and SQL Server relational data through a single T-SQL query. One of the challenges we see with Hadoop is there are not enough people knowledgeable in Hadoop and MapReduce, and this technology simplifies the skill set needed to manage Hadoop data. This can also work across your on-premises environment or SQL Server running in Azure.

- #50: Please add this slide at the end of your presentation to get feedback from the audience.
