stable_diffusion_a_tutorial, How stable_diffusion works, build stable_diffusion from scratch

- 1. Stable Diffusion Binxu Wang, John Vastola Machine Learning from Scratch Nov.1st 2022

- 2. What’s the deal with all these pictures?

- 3. These pictures were generated by Stable Diffusion, a recent diffusion generative model. You may have also heard of DALL·E 2, which works in a similar way. It can turn text prompts (e.g. “an astronaut riding a horse”) into images. It can also do a variety of other things!

- 4. Could be a model of imagination. Why should we care? Similar techniques could be used to generate any number of things (e.g. neural data). It’s cool! "a lovely cat running in the desert in Van Gogh style, trending art."

- 5. It’s complicated… but here’s the high-level idea. How does it work? “Batman eating pizza in a diner"

- 6. What do we need? 1. Method of learning to generate new stuff given many examples Example pictures of people “bad stick figure drawing"

- 7. What do we need? “cool professor person” 3. Way to compress images (for speed in training and generation) 2. Way to link text and images 𝑧[0: 3, : , : ]

- 8. What do we need? …since when you’re generating something new, you need a way to safely go beyond the images you’ve seen before. 4. Way to add in good image-related inductive biases…

- 9. What do we need? 4. Way to add in good inductive biases 1. Method of learning to generate new stuff 3. Way to compress images 2. Way to link text and images Forward/reverse dffusion Text-image representation model Autoencoder U-net architecture Making a ‘good’ generative model is about making all these parts work together well! + ‘attention’

- 11. Stable Diffusion in Action

- 12. Cartoon with StableDiffusion + Cartoon https://www.reddit.com/r/Sta bleDiffusion/comments/xcjj7u /sd_img2img_after_effects_i _generated_2_images_and/

- 13. Some Resources • Diffusion model in general • What are Diffusion Models? | Lil'Log • Generative Modeling by Estimating Gradients of the Data Distribution | Yang Song • Stable diffusion • Annotated & simplified code: U-Net for Stable Diffusion (labml.ai) • Illustrations: The Illustrated Stable Diffusion – Jay Alammar • Attention & Transformers • The Illustrated Transformer

- 14. Outline • Stable Diffusion is cool! • Build Stable Diffusion “from Scratch” • Principle of Diffusion models (sampling, learning) • Diffusion for Images – UNet architecture • Understanding prompts – Word as vectors, CLIP • Let words modulate diffusion – Conditional Diffusion, Cross Attention • Diffusion in latent space – AutoEncoderKL • Training on Massive Dataset. – LAION 5Billion • Let’s try ourselves.

- 15. Principle of Diffusion Models Learning to generate by iterative denoising.

- 16. “Creating noise from data is easy; Creating data from noise is generative modeling.” -- Song, Yang

- 17. Diffusion models • Forward diffusion (noising) • 𝑥0 → 𝑥1 → ⋯ 𝑥𝑇 • Take a data distribution 𝑥0~𝑝(𝑥), turn it into noise by diffusion 𝑥𝑇~𝒩 0, 𝜎2𝐼 • Reverse diffusion (denoising) • 𝑥𝑇 → 𝑥𝑇−1 → ⋯ 𝑥0 • Sample from the noise distribution 𝑥𝑇~𝒩(0, 𝜎2𝐼), reverse the diffusion process to generate data 𝑥0~𝑝(𝑥) 𝒙𝟎 𝒙𝟏 𝒙𝑻 𝒙𝑻−𝟏

- 18. Math Formalism • For a forward diffusion process 𝑑𝒙 = 𝑓 𝒙, 𝑡 𝑑𝑡 + 𝑔 𝑡 𝑑𝒘 • There is a backward diffusion process that reverse the time 𝑑𝒙 = 𝑓 𝑥, 𝑡 − 𝑔 𝑡 2∇𝑥 log 𝑝(𝒙, 𝑡) 𝑑𝑡 + 𝑔 𝑡 𝑑𝒘 • If we know the time-dependent score function ∇𝑥 log 𝑝(𝒙, 𝑡) • Then we can reverse the diffusion process.

- 19. Animation for the Reverse Diffusion Score Vector Field Reverse Diffusion guided by the score vector field https://yang-song.net/blog/2021/score/

- 20. Training diffusion model = Learning to denoise • If we can learn a score model 𝑓𝜃 𝑥, 𝑡 ≈ ∇ log 𝑝(𝑥, 𝑡) • Then we can denoise samples, by running the reverse diffusion equation. 𝑥𝑡 → 𝑥𝑡−1 • Score model 𝑓𝜃: 𝒳 × 0,1 → 𝒳 • A time dependent vector field over 𝑥 space. • Training objective: Infer noise from a noised sample 𝑥 ∼ 𝑝 𝑥 , 𝜖 ∼ 𝒩 0, 𝐼 , 𝑡 ∈ [0,1] min 𝜖 + 𝑓𝜃 𝑥 + 𝜎𝑡 𝜖, 𝑡 2 2 • Add Gaussian noise 𝜖 to an image 𝑥 with scale 𝜎𝑡, learn to infer the noise 𝜎.

- 21. Conditional denoising • Infer noise from a noised sample, based on a condition 𝑦 • 𝑥, 𝑦 ∼ 𝑝 𝑥, 𝑦 , 𝜖 ∼ 𝒩 0, 𝐼 , 𝑡 ∈ [0,1] • min 𝜖 − 𝑓𝜃 𝑥 + 𝜎𝑡 𝜖, 𝑦, 𝑡 2 2 • Conditional score model 𝑓𝜃: 𝒳 × 𝒴 × 0,1 → 𝒳 • Use Unet as to model image to image mapping • Modulate the Unet with condition (text prompt).

- 23. GAN • One shot generation. Fast. • Harder to control in one pass. • Adversarial min-max objective. Can collapse. Diffusion • Multi-iteration generation. Slow. • Easier to control during generation. • Simple objective, no adversary in training. Diffusion vs GAN / VAE

- 24. Activation maximization ~ Reverse Diffusion • For a neuron, activation maximization can be realized by gradient ascent 𝑧𝑡+1 ← 𝑧𝑡 + ∇𝑓 𝐺 𝑧𝑡 + 𝜖 • Homologous to the reverse diffusion equation. • Idea: Neuron activation defines a Generative model on image space.

- 25. Modelling Score function over Image Domain Introducing UNet

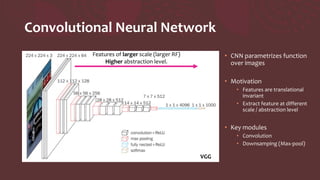

- 26. Convolutional Neural Network • CNN parametrizes function over images • Motivation • Features are translational invariant • Extract feature at different scale / abstraction level • Key modules • Convolution • Downsamping (Max-pool) VGG Features of larger scale (larger RF) Higher abstraction level.

- 27. CNN + inverted CNN ⇒ UNet • Inverted CNN (generator) can generate images. • CNN + inverted CNN could model Image → Image function. Down Sampling Up Sampling Convolution TransposedConvolution

- 28. UNet: a natural architecture for image-to- image function Skip connection Transporting information at the same resolution. Down (sampling) side Encoder Up (sampling) side Decoder

- 29. Key Ingredients of UNet • Convolution operation • Save parameter, spatial invariant • Down/Up sampling • Multiscale / Hierarchy • Learn modulation at multi scale and multi-abstraction levels. • Skip connection • No bottleneck • Route feature of the same scaledirectly. • Cf. AutoEncoder has bottleneck

- 30. Note: Add Time Dependency • The score function is time-dependent. • Target: 𝑠 𝑥, 𝑡 = ∇𝑥 log 𝑝(𝑥, 𝑡) • Add time dependency • Assume time dependency is spatially homogeneous. • Add one scalar value per channel 𝑓(𝑡) • Parametrize 𝑓(𝑡) by MLP / linear of Fourier basis. Conv tensor 𝒕 ⊕ Linear/ MLP 𝑡 embedding [𝐬𝐢𝐧 𝝎𝒊𝒕 , 𝐜𝐨𝐬 𝝎𝒊𝒕 , … ]

- 31. Unet in Stable Diffusion (conv_in): Conv2d(4, 320, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)) (time_proj): Timesteps() (time_embedding): TimestepEmbedding (linear_1): Linear(in_features=320, out_features=1280, bias=True) (act): SiLU() (linear_2): Linear(in_features=1280, out_features=1280, bias=True) (down_blocks): (0): CrossAttnDownBlock2D (1): CrossAttnDownBlock2D (2): CrossAttnDownBlock2D (3): DownBlock2D (up_blocks): (0): UpBlock2D (1): CrossAttnUpBlock2D (2): CrossAttnUpBlock2D (3): CrossAttnUpBlock2D (mid_block): UNetMidBlock2DCrossAttn (attentions): (resnets): (conv_norm_out): GroupNorm(32, 320, eps=1e-05, affine=True) (conv_act): SiLU() (conv_out): Conv2d(320, 4, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

- 32. How to understand prompts? Language / Multimodal Transformer, CLIP!

- 33. Word as Vectors: Language Model 101 • Unlike pixel, meaning of word are not explicitly in the characters. • Word can be represented as index in dictionary • But index is also meaning less. • Represent words in a vector space • Vector geometry => semantic relation. I love cats and dogs . Words in a sentence 328, 793, 3989, 537, 3255, 269 Token Index Word Vectors

- 34. Word Vector in Context: RNN / Transformers • Meaning of word depends on context, not always the same. • “I book a ticket to buy that book.” • Word vectors should depend on context. • Transformers let each word “absorb” influence from other words to be “contextualized” I love cats and dogs . Transformer Block Transformer Block N layers …… More on attention later…

- 35. Learning Word Vectors: GPT & BERT & CLIP • Self-supervised learning of word representation • Predicting missing / next words in a sentence. (BERT, GPT) • Contrastive Learning, matching image and text. (CLIP) MLM — Sentence-Transformers documentation (sbert.net) Downstream Classifier can decode: Part of speech, Sentiment, …

- 36. Joint Representation for Vision and Language : CLIP • Learn a joint encoding space for text caption and image • Maximize representation similarity between an image and its caption. • Minimize other pairs Vision Transformer Transformer CLIP paper 2021

- 37. Choice of text encoding • Encoder in Stable Diffusion: pre-trained CLIP ViT-L/14 text encoder • Word vector can be randomly initialized and learned online. • Representing other conditional signals • Object categories (e.g. Shark, Trout, etc.): • 1 vector per class • Face attributes (e.g. {female, blonde hair, with glasses, …}, {male, short hair, dark skin}): • set of vectors, 1 vector per attributes • Time to be creative!!

- 38. How does text affect diffusion? Incoming Cross Attention

- 39. I love cats and dogs . Original sentence Origin of Attention: Machine Translation (Seq2Seq) • Use Attention to retrieve useful info from a batch of vectors. 𝑒1 𝑒2 𝑒3 𝑒4 𝑒5 𝑒6 Encoder hidden state (Word Vectors) ℎ1 ℎ2 ℎ3 Decoder hidden state (Word Vectors) J'adore les chats et les chiens. French Translation

- 40. From Dictionary to Attention Dictionary: Hard-indexing • `dic = {1 : 𝑣1, 2 : 𝑣2, 3 : 𝑣3}` • Keys 1,2,3 • Values 𝑣1, 𝑣2, 𝑣3 • `dic[2]` • Query 2 • Find 2 in keys • Get corresponding value. • Retrieving values as matrix vector product • One hot vector over the keys • Matrix vector product 𝑣1 𝑣2 𝑣3 1 0 0 × = 𝑣2 𝟏 𝟐 𝟑

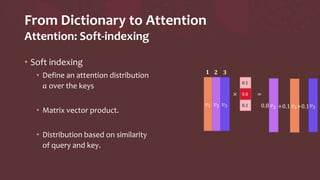

- 41. From Dictionary to Attention Attention: Soft-indexing • Soft indexing • Define an attention distribution 𝑎 over the keys • Matrix vector product. • Distribution based on similarity of query and key. 𝑣1 𝑣2 𝑣3 0.8 0.1 0.1 × = 𝟏 𝟐 𝟑 𝑣2 𝑣1 𝑣3 0.8 +0.1 +0.1

- 42. QKV attention • Query : what I need (J’adore : “I want subject pronoun & verb”) • Key : what the target provide (I : “Here is the subject”) • Value : the information to be retrieved (latent related to Je or J’ ) • Linear projection of “word vector” • Query 𝑞𝑖 = 𝑊 𝑞ℎ𝑖 • Key 𝑘𝑗 = 𝑊𝑘𝑒𝑗 • Value 𝑣𝑗 = 𝑊 𝑣𝑒𝑗 • 𝑒𝑗 hidden state of encoder (English, source) • ℎ𝑖 hidden state of decoder (French, target)

- 43. Attention mechanism • Compute the inner product (similarity) of key 𝑘 and query 𝑞 • SoftMax the normalized score as attention distribution. 𝑎𝑖𝑗 = SoftMax 𝑘𝑗 𝑇 𝑞𝑖 𝑙𝑒𝑛(𝑞) , 𝑗 𝑎𝑖𝑗 = 1 • Use attention distribution to weighted average values 𝑣. 𝑐𝑖 = 𝑗 𝑎𝑖𝑗𝑣𝑗

- 44. Visualizing Attention matrix 𝒂𝒊𝒋 • French 2 English • “Learnt to pay Attention” • “la zone economique europeenne” -> “the European Economic Area” • “a ete signe” -> “was signed” https://jalammar.github.io/visualizing-neural-machine- translation-mechanics-of-seq2seq-models-with-attention/ Attention + RNN

- 45. Cross & Self Attention • Cross Attention • Tokens in one language pay attention to tokens in another. • Self Attention (𝑒𝑖 = ℎ𝑖) • Tokens in a language pay attention to each other. ℎ1 ℎ2 ℎ3 Decoder hidden state (Word Vectors) J'adore les chats French Translation

- 46. https://jalammar.github.io/illustrated-gpt2/ “A robot must obey the order given it.”

- 47. https://jalammar.github.io/illustrated-gpt2/ “A robot must obey the order given it.”

- 49. Note: Feed Forward network • Attention is usually followed by a 2-layer MLP and Normalization • Learn nonlinear transform.

- 50. Text2Image as translation “ A ballerina chasing her cat running on the grass in the style of Monet " Encoded Word Vectors Latent State of Image Spatial Dimensions Channel Dimensions Sequence Dimensions Patch Vectors! Target language: Images Source language: Words

- 51. Text2Image as translation “ A ballerina chasing her cat running on the grass in the style of Monet " Encoded Word Vectors Latent State of Image Spatial Dimensions Channel Dimensions Sequence Dimensions Cross Attention: Image to Words Self Attention: Image to Image

- 52. Spatial Transformer • Rearrange spatial tensor to sequence. • Cross Attention • Self Attention • FFN • Rearrange back to spatial tensor (same shape)

- 53. Tips: Implementing attention `einops` lib • `einops.rearrange` function • Shift order of axes • Split / combine dimension. • `torch.einsum` function • Multiply & sum tensors along axes.

- 54. • Down blocks ResBlock SpatialTransformer ResBlock SpatialTransformer DownSample ResBlock SpatialTransformer ResBlock SpatialTransformer DownSample ResBlock SpatialTransformer ResBlock SpatialTransformer DownSample ResBlock ResBlock • Up blocks ResBlock ResBlock ResBlock UpSample ResBlock SpatialTransformer ResBlock SpatialTransformer ResBlock SpatialTransformer UpSample ResBlock SpatialTransformer ResBlock SpatialTransformer ResBlock SpatialTransformer UpSample ResBlock SpatialTransformer ResBlock SpatialTransformer ResBlock SpatialTransformer UNet = Giant Sandwich of Spatial transformer + ResBlock (Conv layer)

- 55. Spatial transformer + ResBlock (Conv layer) • Alternating Time and Word Modulation • Alternating Local and Nonlocal operation Resblock Spatial Transformer Resblock Spatial Transformer Latent tensor 𝟒, 𝟔𝟒, 𝟔𝟒 Time embedding 𝟏𝟐𝟖𝟎 Word Vectors 𝑳𝒔𝒆𝒒, 𝟕𝟖𝟒

- 56. Diffusion in Latent Space Adding in AutoEncoder Rombach, R., Blattmann, A., Lorenz, D., Esser, P., & Ommer, B. (2022). High- resolution image synthesis with latent diffusion models, CVPR

- 57. Diffusion in latent space • Motivation: • Natural images are high dimensional • but have many redundant details that could be compressed / statistically filled out • Division of labor • Diffusion model -> Generate low resolution sketch • AutoEncoder -> Fill out high resolution details • Train a VAE model to compress images into latent space. • 𝑥 → 𝑧 → 𝑥 • Train diffusion models in latent space of 𝑧. 32 pix 180 pix 𝑑 = 97200 𝑑 = 2352 DownSampling 𝑥 [3,512,512] 𝑧 [4,512/𝑓, 512/𝑓] ො 𝑥 [3,512,512]

- 58. Spatial Compression Tradeoff • LDM-{𝑓}. 𝑓 = Spatial downsampling factor • Higher 𝑓 leads to faster sampling, with degraded image quality (FID ↑) • Fewer sampling steps leads to faster sampling, with lower quality (FID ↑) Face CelebA-HQ ImageNet

- 59. Spatial Compression Tradeoff • LDM-{𝑓}. 𝑓 = Spatial downsampling factor • Too little compression 𝑓 = 1,2 or too much compression 𝑓 = 32, makes diffusion hard to train.

- 60. Details in Stable Diffusion • In stable diffusion, spatial downsampling 𝑓 = 8 • 𝑥 is (3, 512, 512) image tensor • 𝑧 is (4, 64, 64) latent tensor

- 61. Regularizing the Latent Space • KL regularizer • Similar to VAE, make latent distribution like Gaussian distribution. • VQ regularizer • Make the latent representation quantized to be a set of discrete tokens.

- 62. Let the GPUs roar! Training data & details.

- 63. Large Data Training • SD is trained on ~ 2 Billion image – caption (English) pairs. • Scraped from web, filtered by CLIP. • https://laion.ai/blog/laion-5b/

- 66. Meaning of latent space • Latent state contains a “sketch version” of the image. 𝑧[0: 3, : , : ]

![What do we need?

“cool professor person”

3. Way to compress images

(for speed in training and generation)

2. Way to link text and images

𝑧[0: 3, : , : ]](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-7-320.jpg)

![Training diffusion model =

Learning to denoise

• If we can learn a score model

𝑓𝜃 𝑥, 𝑡 ≈ ∇ log 𝑝(𝑥, 𝑡)

• Then we can denoise samples, by running the reverse diffusion equation. 𝑥𝑡 → 𝑥𝑡−1

• Score model 𝑓𝜃: 𝒳 × 0,1 → 𝒳

• A time dependent vector field over 𝑥 space.

• Training objective: Infer noise from a noised sample

𝑥 ∼ 𝑝 𝑥 , 𝜖 ∼ 𝒩 0, 𝐼 , 𝑡 ∈ [0,1]

min 𝜖 + 𝑓𝜃 𝑥 + 𝜎𝑡

𝜖, 𝑡 2

2

• Add Gaussian noise 𝜖 to an image 𝑥 with scale 𝜎𝑡, learn to infer the noise 𝜎.](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-20-320.jpg)

![Conditional denoising

• Infer noise from a noised sample, based on a condition 𝑦

• 𝑥, 𝑦 ∼ 𝑝 𝑥, 𝑦 , 𝜖 ∼ 𝒩 0, 𝐼 , 𝑡 ∈ [0,1]

• min 𝜖 − 𝑓𝜃 𝑥 + 𝜎𝑡

𝜖, 𝑦, 𝑡 2

2

• Conditional score model 𝑓𝜃: 𝒳 × 𝒴 × 0,1 → 𝒳

• Use Unet as to model image to image mapping

• Modulate the Unet with condition (text prompt).](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-21-320.jpg)

![Note: Add Time Dependency

• The score function is time-dependent.

• Target: 𝑠 𝑥, 𝑡 = ∇𝑥 log 𝑝(𝑥, 𝑡)

• Add time dependency

• Assume time dependency is spatially

homogeneous.

• Add one scalar value per channel 𝑓(𝑡)

• Parametrize 𝑓(𝑡) by MLP / linear of Fourier basis.

Conv

tensor

𝒕

⊕

Linear/

MLP

𝑡 embedding

[𝐬𝐢𝐧 𝝎𝒊𝒕 ,

𝐜𝐨𝐬 𝝎𝒊𝒕 ,

… ]](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-30-320.jpg)

![From Dictionary to Attention

Dictionary: Hard-indexing

• `dic = {1 : 𝑣1, 2 : 𝑣2, 3 : 𝑣3}`

• Keys 1,2,3

• Values 𝑣1, 𝑣2, 𝑣3

• `dic[2]`

• Query 2

• Find 2 in keys

• Get corresponding value.

• Retrieving values as matrix vector product

• One hot vector over the keys

• Matrix vector product

𝑣1 𝑣2 𝑣3

1

0

0

× =

𝑣2

𝟏 𝟐 𝟑](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-40-320.jpg)

![Diffusion in latent space

• Motivation:

• Natural images are high dimensional

• but have many redundant details that could be

compressed / statistically filled out

• Division of labor

• Diffusion model -> Generate low resolution sketch

• AutoEncoder -> Fill out high resolution details

• Train a VAE model to compress images into latent

space.

• 𝑥 → 𝑧 → 𝑥

• Train diffusion models in latent space of 𝑧.

32 pix 180 pix

𝑑 = 97200

𝑑 = 2352

DownSampling

𝑥

[3,512,512]

𝑧

[4,512/𝑓, 512/𝑓]

ො

𝑥

[3,512,512]](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-57-320.jpg)

![Meaning of latent space

• Latent state contains a “sketch version” of the image.

𝑧[0: 3, : , : ]](https://image.slidesharecdn.com/stablediffusionatutorial-250221112958-c16597e8/85/stable_diffusion_a_tutorial-How-stable_diffusion-works-build-stable_diffusion-from-scratch-66-320.jpg)

![[系列活動] 一日搞懂生成式對抗網路](https://cdn.slidesharecdn.com/ss_thumbnails/gan-170813004356-thumbnail.jpg?width=560&fit=bounds)

![Canva Pro 2025 PC Crack Latest Version [New Updated]](https://cdn.slidesharecdn.com/ss_thumbnails/presentation-droneinspectioninpakistaninnovationsahead-250524055147-e9fe5536-250524070606-11136562-thumbnail.jpg?width=560&fit=bounds)

![WinX HD Video Converter Deluxe 5.16.7.342 Crack + Key [Latest]](https://cdn.slidesharecdn.com/ss_thumbnails/artificialintelligence14-250526150454-fabc75ca-thumbnail.jpg?width=560&fit=bounds)

![AVS Video Converter 12.1.5.673 Full Crack Download [Latest]](https://cdn.slidesharecdn.com/ss_thumbnails/artificialintelligence20-250528093742-69471b40-thumbnail.jpg?width=560&fit=bounds)

![GlarySoft Malware Hunter Pro 1.117.0.710 with Crack [Latest]](https://cdn.slidesharecdn.com/ss_thumbnails/artificialintelligence15-250529072518-7f8c1b72-thumbnail.jpg?width=560&fit=bounds)