Moving Towards a Streaming Architecture

- 1. Moving Towards a Streaming Architecture
  - Garry Turkington (CTO), Gabriele Modena (Data Scientist)
  - Improve Digital

- 6. Data sources
  - A geographically distributed fleet of ad servers pushes out several types of data, primarily:
    - 1-minute metrics packets
    - 15-minute full log files
  - We previously used an ad hoc system to process the former and Hadoop for the latter

- 7. Conceptualizing the data
  - We think of event streams when considering the operational system
  - But have this sliced into many log files for practical reasons
  - Recreating the stream-based view gets us back to what is happening
  - And brings data creation and processing closer together

- 8. How did we get here
  - Need to process more data, faster
    - Reporting
    - Decision making
  - Evolution of the system to meet scalability requirements
  - Stream processing by definition
  - It is changing the way we think about data collection and distribution
  - Not everything is obvious
  - It opened new doors

- 9. Let’s take a step back
  - Aggregates grow in number and size
  - Keep a copy in HDFS
  - Run job, then go and make coffee
  - Generate new / other aggregates / ad hoc datasets
  - Analytics data warehouse (column store), denormalized data model
  - BI, reporting
  - Hadoop for logs and event processing (e.g. ETL, simulation, learning, optimization)

- 10. Hadoop workflows

- 11. Incremental processing
  - Ingest data every e.g. 24 hours (/data/raw/event/d=YYYY-MM-DD)
  - Scheduling and partitioning are straightforward
  - Schema migrations: Avro + HCatalog
  - Fixed length time windows
    - What is a good length?
    - Ok if it takes 1 hour to process 15 minutes of data
  - Need to reprocess data
  - It can be made efficient (e.g. DataFu Hourglass)
  - MapReduce

- 12. A more interactive system
  - We added tools to circumvent MapReduce
    - Latency, really
    - Still a “batch” mindset
  - Cloudera Impala (+ Parquet)
    - Fast, familiar
  - Move computation closer to data
    - Spark (among other things) for prototyping
    - Python/R integration, batch and “streaming”

- 13. Outcome
  - Multiple overlapping data sources, processing tools
    - point-to-point
  - Different pipelines for analytics and production models
    - Divide
    - Where is the “correct” data?

- 14. All in all
  - Data collection and distribution is critical
  - We are still looking at “yesterday’s” data
  - We need a different level of abstraction

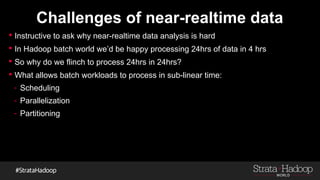

- 16. Challenges of near-realtime data
  - Instructive to ask why near-realtime data analysis is hard
  - In the Hadoop batch world we’d be happy processing 24hrs of data in 4hrs
  - So why do we flinch at processing 24hrs in 24hrs?
  - What allows batch workloads to process in sub-linear time:
    - Scheduling
    - Parallelization
    - Partitioning

- 17. The streaming view
  - Consider these from the streaming perspective:
    - Scheduling is challenging because of unknown and variable data rates
    - Parallelization breaks our nice logical model of a single data stream
    - Partitioning can’t simply be by file size or number of blocks
    - The method of partitioning the stream is fundamental

- 18. Kafka
  - We use Kafka (http://kafka.apache.org) for all data we view as streams
  - It is resilient, performant and provides a very clear topic-based interface
  - Producers write to topics, consumers read from topics
  - Topics can be partitioned based on a producer-provided key
  - Provides at-least-once message delivery semantics
  - Messages are persistently stored, so topics can be re-read
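
A minimal sketch of what partitioning on a producer-provided key implies: every record with the same key lands in the same partition. The class and method names are hypothetical, and the hash used here is Java's `String.hashCode()`, chosen only for illustration; it is not the hash Kafka's own partitioner uses.

```java
/** Illustrative key-to-partition mapping; not Kafka's actual partitioner. */
final class KeyPartitionerSketch {
    private KeyPartitionerSketch() {}

    /** Maps a key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        // floorMod keeps the result non-negative even when hashCode() is negative.
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    public static void main(String[] args) {
        String[] keys = {"server-1", "server-2", "server-1"};
        for (String key : keys) {
            System.out.println(key + " -> partition " + partitionFor(key, 4));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, a consumer of one partition sees every record for the keys assigned to it, which is what per-key processing downstream relies on.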

- 19. Samza
  - We use Samza (http://samza.incubator.apache.org) for stream processing
  - Analogous to MapReduce atop YARN atop HDFS
  - We have Samza atop YARN atop Kafka
  - [Figure: Apache Samza stack, with the Samza API atop YARN atop Kafka. Kafka for streaming; YARN for resource management and exec; Samza API for processing. Sweet spot: second, minutes]

- 20. Samza API
  - A simple call-back API that should be familiar to MapReduce developers:
  - public interface StreamTask { void process(IncomingMessageEnvelope envelope, MessageCollector collector, TaskCoordinator coordinator); }
  - public interface WindowableTask { void window(MessageCollector collector, TaskCoordinator coordinator); }

- 21. Kafka/Samza integration
  - A Samza job is composed of multiple tasks
  - Each Kafka topic partition is consumed by only one Samza task
  - Each YARN container runs multiple Samza tasks
  - The output of a task is often written to a different topic
  - Overall applications are then constructed by composition of jobs

- 22. Stream job output
  - You’ve processed the data, now what?
  - Need to consider how the output of each stream partition will be processed
  - A downstream destination with an idempotent interface is ideal
  - Otherwise try to have expressions of unique state
  - Hardest is when the outputs need to be applied in sequence
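
To illustrate why an idempotent downstream interface is ideal under at-least-once delivery, here is a small sketch contrasting "set" and "increment" updates when the same message arrives twice. The store, its method names and the `key@windowStart` addressing are assumptions made for the example, not part of any system described in the talk.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical downstream store addressed by (key, window start). */
final class IdempotentSinkSketch {
    private final Map<String, Long> state = new HashMap<>();

    /** "Set" semantics: replaying the same message leaves the state unchanged. */
    void applySet(String key, long windowStart, long value) {
        state.put(key + "@" + windowStart, value);
    }

    /** "Increment" semantics: replaying the same message counts it twice. */
    void applyIncrement(String key, long windowStart, long delta) {
        state.merge(key + "@" + windowStart, delta, Long::sum);
    }

    /** Returns the stored value, or 0 if nothing was written for this slot. */
    long get(String key, long windowStart) {
        return state.getOrDefault(key + "@" + windowStart, 0L);
    }
}
```

With `applySet`, a redelivered message is harmless. With `applyIncrement`, the consumer would need some form of deduplication to stay correct.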

- 23. Example: log data
  - It’s a common model: a server process rolls a log every x minutes
  - Each resultant file is ingested into Kafka and read by multiple Samza jobs
  - Each Samza task receives a series of potentially interleaved log chunks
  - Consider a task receiving data from servers S1, S2 and S3 across time periods T1..T3
  - Its view may become something like: S1[T1..T2], S2[T1..T2], S1[T2..T3], S3[T1..T2]…

- 24. Example - Streaming aggregation
  - Input is a stream of records of the form (key, value)
  - Output is a series of (key, aggregate)
  - 3 approaches to producing these outputs:
    - Each task pushes local counts downstream
    - Each task pushes to another stream aggregated by a different job
    - Each task uses local state to produce final aggregate values

- 25. Comparing stream aggregation
  - Option 1: each stream partition outputs local values for each key it sees across a time window
    - Each partial result is an update record (e.g. increment key k at time t by x)
    - Downstream consumer has the responsibility of combining partial results
  - Option 2: aggregate partial results in a second stream processing job
    - The output becomes a series of state changes (set the value of key k at time t to x)
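
The first two options can be sketched in plain Java, without any Samza dependency: each task buffers local sums for a window and emits "increment" update records, and a combiner sums the partial results into one value per key and window. All class and method names below are hypothetical, and the combiner stands in for either the downstream consumer (option 1) or a second stream job (option 2).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class PartialAggregationSketch {
    private PartialAggregationSketch() {}

    /** Update record: "increment key at windowStart by delta". */
    static final class Update {
        final String key;
        final long windowStart;
        final long delta;

        Update(String key, long windowStart, long delta) {
            this.key = key;
            this.windowStart = windowStart;
            this.delta = delta;
        }
    }

    /** Per-task accumulator holding partial sums for the current window. */
    static final class LocalCounter {
        private final Map<String, Long> sums = new HashMap<>();

        /** Called once per incoming (key, value) record. */
        void process(String key, long value) {
            sums.merge(key, value, Long::sum);
        }

        /** Emits one update per key seen in the window, then resets. */
        List<Update> flush(long windowStart) {
            List<Update> out = new ArrayList<>();
            for (Map.Entry<String, Long> e : sums.entrySet()) {
                out.add(new Update(e.getKey(), windowStart, e.getValue()));
            }
            sums.clear();
            return out;
        }
    }

    /** Sums partial results from all tasks into one total per key and window. */
    static Map<String, Long> combine(List<Update> updates) {
        Map<String, Long> totals = new HashMap<>();
        for (Update u : updates) {
            totals.merge(u.key + "@" + u.windowStart, u.delta, Long::sum);
        }
        return totals;
    }
}
```

In a real job, `process` and `flush` would roughly correspond to the per-message and per-window callbacks of the stream task, but that mapping is an assumption of this sketch.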

- 26. Comparing stream aggregation contd
  - Option 3: push state into the state processors
    - Samza tasks can hold persistent local state
    - Creates a key/value store modelled as a Kafka topic
    - Ensure that all records for each key are sent to the same partition
    - State processing task can then create unique state aggregates locally

- 27. A side effect of Kafka
  - A firehose for event data
    - Can tap into with Samza
    - Can tap into with Spark
  - Unifies how we access and publish data
    - Streams are defined by application / use case
    - These can come from other teams
    - Single entry point, multiple outputs

- 28. Analytics on streams
  - Incremental processing pipelines as a basis
  - Tried to reuse logic
  - Not that simple
  - Conceptualization vs. implementation

- 29. Time windows
  - Temporal constraints
  - When is data ready?
    - The key is defining “ready”, which is weaker than “complete”
    - Enough to make an informed decision (this varies case by case)
    - We can update the dataset to improve a confidence interval
  - When are we done processing?
    - Tradeoff between timeliness and accuracy
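
One possible way to make "ready" weaker than "complete" concrete is to call a window ready once a chosen fraction of the expected sources has reported. This is only a sketch under that assumption: the class name, the notion of a fixed number of expected sources and the `readyFraction` parameter are all hypothetical, and the right criterion varies case by case.

```java
import java.util.HashSet;
import java.util.Set;

/** Tracks which sources have reported for a single time window. */
final class WindowReadinessSketch {
    private final int expectedSources;
    private final double readyFraction;
    private final Set<String> reported = new HashSet<>();

    WindowReadinessSketch(int expectedSources, double readyFraction) {
        if (expectedSources <= 0 || readyFraction <= 0.0 || readyFraction > 1.0) {
            throw new IllegalArgumentException("invalid readiness parameters");
        }
        this.expectedSources = expectedSources;
        this.readyFraction = readyFraction;
    }

    /** Records that a source (e.g. an ad server) delivered data for this window. */
    void record(String sourceId) {
        reported.add(sourceId);
    }

    /** Fraction of expected sources seen so far. */
    double coverage() {
        return (double) reported.size() / expectedSources;
    }

    /** Ready: enough data to act on, possibly before every source has reported. */
    boolean isReady() {
        return coverage() >= readyFraction;
    }

    /** Complete: every expected source has reported. */
    boolean isComplete() {
        return reported.size() >= expectedSources;
    }
}
```

Raising `readyFraction` trades timeliness for accuracy; results published at "ready" can be revised when late sources arrive.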

- 30. Schema evolution
  - Schema changes appear within / across time windows
    - Not a solved problem
  - Stop streaming, propagate changes, replay or resume
  - SAMZA-317
    - Avro SerDe
    - Schema stream

- 31. Challenges
  - Data completeness
  - Window length
  - Tradeoff between finding answers / satisfying temporal constraints
  - Need to adjust our approach (representation and data structures)
    - approximate
    - probabilistic
    - iterations to convergence
  - This is a work in progress

- 32. Model based outlier detection
  - Previously: batch and real time datasets = different approaches
  - Now: same dataset
  - Model based anomaly detection
  - Reuse knowledge and infrastructure
  - What is normal?
  - How do we set thresholds?
  - Literature review (q-digest, t-digest)
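
As a baseline for the threshold question, an outlier threshold can be taken from an empirical quantile of recent history. The sketch below computes an exact nearest-rank quantile by sorting; it is not the authors' model. Structures such as q-digest and t-digest approximate the same quantity in bounded memory, which matters on an unbounded stream. Class and method names are hypothetical.

```java
import java.util.Arrays;

final class QuantileThresholdSketch {
    private QuantileThresholdSketch() {}

    /** Nearest-rank empirical quantile of values, for q in (0, 1]. */
    static double quantile(double[] values, double q) {
        if (values.length == 0 || q <= 0.0 || q > 1.0) {
            throw new IllegalArgumentException("need non-empty values and 0 < q <= 1");
        }
        double[] sorted = values.clone();
        Arrays.sort(sorted);
        // 1-based rank of the smallest value with at least a fraction q at or below it.
        int rank = (int) Math.ceil(q * sorted.length);
        return sorted[rank - 1];
    }

    /** Flags an observation that exceeds the q-quantile of the history. */
    static boolean isOutlier(double observation, double[] history, double q) {
        return observation > quantile(history, q);
    }
}
```

Choosing `q` is exactly the "how do we set thresholds" question; this code only makes the mechanics explicit.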

- 33. Testing and alerting
  - Some features are hard to test
  - Functionally correct but unexpected consequences
    - unexpected ~ hard to predict
    - advertising is a complex system
  - Bugs are outliers we can detect (sort of)

- 34. Testing as predictive modelling
  - Monitoring (next to testing)
  - Make software monitorable
  - Think of testing as an experiment
  - Establish short, continuous feedback loops

- 35. Implications
  - How do we use real time output
    - “live” dashboards
    - Instrumentation and alerting (predict failure)
    - feed optimization models
    - back propagating methods into ETL
  - How we think about feedback loops
  - Make data more consumable

- 36. Conclusion
  - Streaming is part of the broader system
  - We are fine tuning how the two fit together
  - Data collection and distribution is important
    - publish results follows
  - Kafka + Samza
    - data and processing model that fits our applications well
  - Conceptualization vs. implementation
  - Real time != incremental
  - A certain degree of uncertainty
  - Tradeoffs
