Ad

Streaming Big Data & Analytics For Scale

- 1. STREAMING BIG DATA & ANALYTICS FOR SCALE Helena Edelson 1 @helenaedelson

- 2. @helenaedelson Who Is This Person? • VP of Product Engineering @Tuplejump • Big Data, Analytics, Cloud Engineering, Cyber Security • Committer / Contributor to FiloDB, Spark Cassandra Connector, Akka, Spring Integration 2 • @helenaedelson • github.com/helena • linkedin.com/in/helenaedelson • slideshare.net/helenaedelson

- 4. @helenaedelson Topics • The Problem Domain - What needs to be solved • The Stack (Scala,Akka,Spark Streaming,Kafka,Cassandra) • Simplifying the architecture • The Pipeline - integration 4

- 5. @helenaedelson THE PROBLEM DOMAIN Delivering Meaning From A Flood Of Data 5 @helenaedelson

- 6. @helenaedelson The Problem Domain Need to build scalable, fault tolerant, distributed data processing systems that can handle massive amounts of data from disparate sources, with different data structures. 6

- 7. @helenaedelson Delivering Meaning • Deliver meaning in sec/sub-sec latency • Disparate data sources & schemas • Billions of events per second • High-latency batch processing • Low-latency stream processing • Aggregation of historical from the stream 7

- 8. I need fast access to historical data on the fly for predictive modeling with real time data from the stream

- 9. @helenaedelson It's Not A Stream It's A Flood • Netflix • 50 - 100 billion events per day • 1 - 2 million events per second at peak • LinkedIn • 500 billion write events per day • 2.5 trillion read events per day • 4.5 million events per second at peak with Kafka • 1 PB of stream data 9

- 10. @helenaedelson Reality Check • Massive event spikes & bursty traffic • Fast producers / slow consumers • Network partitioning & out of sync systems • DC down • Wait, we've DDOS'd ourselves from fast streams? • Autoscale issues – When we scale down VMs how do we not lose data? 10

- 11. @helenaedelson And stay within our cloud hosting budget 11

- 12. @helenaedelson Oh, and don't loose data 12

- 14. @helenaedelson About The Stack An ensemble of technologies enabling a data pipeline, storage and analytics. 14

- 16. @helenaedelson Scala and Spark Scala is now becoming this glue that connects the dots in big data. The emergence of Spark owes directly to its simplicity and the high level of abstraction of Scala. • Distributed collections that are functional by default • Apply transformations to them • Spark takes that exact idea and puts it on a cluster 16

- 18. @helenaedelson Pick Technologies Wisely Based on your requirements • Latency • Real time / Sub-Second: < 100ms • Near real time (low): > 100 ms or a few seconds - a few hours • Consistency • Highly Scalable • Topology-Aware & Multi-Datacenter support • Partitioning Collaboration - do they play together well 18

- 19. @helenaedelson Strategies 19 • Partition For Scale & Data Locality • Replicate For Resiliency • Share Nothing • Fault Tolerance • Asynchrony • Async Message Passing • Memory Management • Data lineage and reprocessing in runtime • Parallelism • Elastically Scale • Isolation • Location Transparency

- 20. @helenaedelson Strategy Technologies Scalable Infrastructure / Elastic Spark, Cassandra, Kafka Partition For Scale, Network Topology Aware Cassandra, Spark, Kafka, Akka Cluster Replicate For Resiliency Spark,Cassandra, Akka Cluster all hash the node ring Share Nothing, Masterless Cassandra, Akka Cluster both Dynamo style Fault Tolerance / No Single Point of Failure Spark, Cassandra, Kafka Replay From Any Point Of Failure Spark, Cassandra, Kafka, Akka + Akka Persistence Failure Detection Cassandra, Spark, Akka, Kafka Consensus & Gossip Cassandra & Akka Cluster Parallelism Spark, Cassandra, Kafka, Akka Asynchronous Data Passing Kafka, Akka, Spark Fast, Low Latency, Data Locality Cassandra, Spark, Kafka Location Transparency Akka, Spark, Cassandra, Kafka My Nerdy Chart 20

- 21. @helenaedelson 21 few years in Silicon Valley Cloud Engineering team @helenaedelson

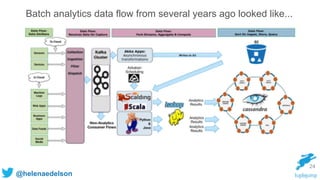

- 22. @helenaedelson 22 Batch analytics data flow from several years ago looked like...

- 24. @helenaedelson 24 Batch analytics data flow from several years ago looked like...

- 26. @helenaedelson 26 Transforming data multiple times, multiple ways

- 27. @helenaedelson 27 Sweet, let's triple the code we have to update and regression test every time our analytics logic changes

- 28. @helenaedelson AND THEN WE GREEKED OUT 28 Lambda

- 29. @helenaedelson Lambda Architecture A data-processing architecture designed to handle massive quantities of data by taking advantage of both batch and stream processing methods. 29 • Or, "How to beat the CAP theorum" • An approach coined by Nathan Mars • This was a huge stride forward

- 31. @helenaedelson Implementing Is Hard • Real-time pipeline backed by KV store for updates • Many moving parts - KV store, real time, batch • Running similar code in two places • Still ingesting data to Parquet/HDFS • Reconcile queries against two different places 31

- 32. @helenaedelson Performance Tuning & Monitoring on so many disparate systems 32 Also Hard

- 33. @helenaedelson 33 λ: Streaming & Batch Flows Evolution Or Just Addition? Or Just Technical Debt?

- 34. @helenaedelson Lambda Architecture Ingest an immutable sequence of records is captured and fed into • a batch system • and a stream processing system in parallel 34

- 35. @helenaedelson WAIT, DUAL SYSTEMS? 35 Challenge Assumptions

- 36. @helenaedelson Which Translates To • Performing analytical computations & queries in dual systems • Duplicate Code • Untyped Code - Strings • Spaghetti Architecture for Data Flows • One Busy Network 36

- 37. @helenaedelson Architectyr? "This is a giant mess" - Going Real-time - Data Collection and Stream Processing with Apache Kafka, Jay Kreps 37

- 38. @helenaedelson 38 These are not the solutions you're looking for

- 39. @helenaedelson Escape from Hadoop? Hadoop • MapReduce - very powerful, no longer enough • It’s Batch • Stale data • Slow, everything written to disk • Huge overhead • Inefficient with respect to memory use • Inflexible vs Dynamic 39

- 40. @helenaedelson One Pipeline 40 • A unified system for streaming and batch • Real-time processing and reprocessing • Code changes • Fault tolerance http://radar.oreilly.com/2014/07/questioning-the-lambda-architecture.html - Jay Kreps

- 41. @helenaedelson THE PIPELINE 41 Stream Integration, IoT, Timeseries and Data Locality

- 42. @helenaedelson 42 KillrWeather http://github.com/killrweather/killrweather A reference application showing how to easily integrate streaming and batch data processing with Apache Spark Streaming, Apache Cassandra, Apache Kafka and Akka for fast, streaming computations on time series data in asynchronous event-driven environments. http://github.com/databricks/reference-apps/tree/master/timeseries/scala/timeseries-weather/src/main/scala/com/ databricks/apps/weather

- 43. @helenaedelson val context = new StreamingContext(conf, Seconds(1)) val stream = KafkaUtils.createDirectStream[Array[Byte], Array[Byte], DefaultDecoder, DefaultDecoder]( context, kafkaParams, kafkaTopics) stream.flatMap(func1).saveToCassandra(ks1,table1) stream.map(func2).saveToCassandra(ks1,table1) context.start() 43 Kafka, Spark Streaming and Cassandra

- 44. @helenaedelson class KafkaProducerActor[K, V](config: ProducerConfig) extends Actor { override val supervisorStrategy = OneForOneStrategy(maxNrOfRetries = 10, withinTimeRange = 1.minute) { case _: ActorInitializationException => Stop case _: FailedToSendMessageException => Restart case _: ProducerClosedException => Restart case _: NoBrokersForPartitionException => Escalate case _: KafkaException => Escalate case _: Exception => Escalate } private val producer = new KafkaProducer[K, V](producerConfig) override def postStop(): Unit = producer.close() def receive = { case e: KafkaMessageEnvelope[K,V] => producer.send(e) } } 44 Kafka, Spark Streaming, Cassandra & Akka

- 45. Training Data Feature Extraction Model Training Model Testing Test Data Your Data Extract Data To Analyze Train your model to predict Spark Streaming ML, Kafka & Cassandra

- 46. @helenaedelson Spark Streaming, ML, Kafka & C* val ssc = new StreamingContext(new SparkConf()…, Seconds(5) val testData = ssc.cassandraTable[String](keyspace,table).map(LabeledPoint.parse) val trainingStream = KafkaUtils.createStream[K, V, KDecoder, VDecoder]( ssc, kafkaParams, topicMap, StorageLevel.MEMORY_ONLY) .map(_._2).map(LabeledPoint.parse) trainingStream.saveToCassandra("ml_keyspace", "raw_training_data") val model = new StreamingLinearRegressionWithSGD() .setInitialWeights(Vectors.dense(weights)) .trainOn(trainingStream) //Making predictions on testData model .predictOnValues(testData.map(lp => (lp.label, lp.features))) .saveToCassandra("ml_keyspace", "predictions") 46

- 48. @helenaedelson 48 class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext) extends AggregationActor(settings: Settings) { import settings._ val stream = KafkaUtils.createStream( ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2) .map(_._2.split(",")) .map(RawWeatherData(_)) stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw) stream .map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip)) .saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip) } Kafka, Spark Streaming, Cassandra & Akka

- 49. @helenaedelson class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext) extends AggregationActor(settings: Settings) { import settings._ val stream = KafkaUtils.createStream( ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2) .map(_._2.split(",")) .map(RawWeatherData(_)) stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw) stream .map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip)) .saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip) } 49 Now we can replay • On failure • Reprocessing on code changes • Future computation...

- 50. @helenaedelson 50 Here we are pre-aggregating to a table for fast querying later - in other secondary stream aggregation computations and scheduled computing class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext) extends AggregationActor(settings: Settings) { import settings._ val stream = KafkaUtils.createStream( ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2) .map(_._2.split(",")) .map(RawWeatherData(_)) stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw) stream .map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip)) .saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip) }

- 51. @helenaedelson CREATE TABLE weather.raw_data ( wsid text, year int, month int, day int, hour int, temperature double, dewpoint double, pressure double, wind_direction int, wind_speed double, one_hour_precip PRIMARY KEY ((wsid), year, month, day, hour) ) WITH CLUSTERING ORDER BY (year DESC, month DESC, day DESC, hour DESC); CREATE TABLE daily_aggregate_precip ( wsid text, year int, month int, day int, precipitation counter, PRIMARY KEY ((wsid), year, month, day) ) WITH CLUSTERING ORDER BY (year DESC, month DESC, day DESC); Data Model (simplified) 51

- 52. @helenaedelson 52 class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext) extends AggregationActor(settings: Settings) { import settings._ val stream = KafkaUtils.createStream( ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2) .map(_._2.split(",")) .map(RawWeatherData(_)) stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw) stream .map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip)) .saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip) } Gets the partition key: Data Locality Spark C* Connector feeds this to Spark Cassandra Counter column in our schema, no expensive `reduceByKey` needed. Simply let C* do it: not expensive and fast.

- 53. @helenaedelson CREATE TABLE weather.raw_data ( wsid text, year int, month int, day int, hour int, temperature double, dewpoint double, pressure double, wind_direction int, wind_speed double, one_hour_precip PRIMARY KEY ((wsid), year, month, day, hour) ) WITH CLUSTERING ORDER BY (year DESC, month DESC, day DESC, hour DESC); C* Clustering Columns Writes by most recent Reads return most recent first Timeseries Data 53 Cassandra will automatically sort by most recent for both write and read

- 54. @helenaedelson 54 val multipleStreams = for (i <- numstreams) { streamingContext.receiverStream[HttpRequest](new HttpReceiver(port)) } streamingContext.union(multipleStreams) .map { httpRequest => TimelineRequestEvent(httpRequest)} .saveToCassandra("requests_ks", "timeline") CREATE TABLE IF NOT EXISTS requests_ks.timeline ( timesegment bigint, url text, t_uuid timeuuid, method text, headers map <text, text>, body text, PRIMARY KEY ((url, timesegment) , t_uuid) ); Record Every Event In The Order In Which It Happened, Per URL timesegment protects from writing unbounded partitions. timeuuid protects from simultaneous events over-writing one another.

- 55. @helenaedelson val stream = KafkaUtils.createDirectStream(...) .map(_._2.split(",")) .map(RawWeatherData(_)) stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw) stream .map(hour => (hour.id, hour.year, hour.month, hour.day, hour.oneHourPrecip)) .saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip) 55 Replay and Reprocess - Any Time Data is on the nodes doing the querying - Spark C* Connector - Partitions • Timeseries data with Data Locality • Co-located Spark + Cassandra nodes • S3 does not give you Cassandra & Spark Streaming: Data Locality For Free®

- 56. @helenaedelson class PrecipitationActor(ssc: StreamingContext, settings: Settings) extends AggregationActor { import akka.pattern.pipe def receive : Actor.Receive = { case GetTopKPrecipitation(wsid, year, k) => topK(wsid, year, k, sender) } /** Returns the 10 highest temps for any station in the `year`. */ def topK(wsid: String, year: Int, k: Int, requester: ActorRef): Unit = { val toTopK = (aggregate: Seq[Double]) => TopKPrecipitation(wsid, year, ssc.sparkContext.parallelize(aggregate).top(k).toSeq) ssc.cassandraTable[Double](keyspace, dailytable) .select("precipitation") .where("wsid = ? AND year = ?", wsid, year) .collectAsync().map(toTopK) pipeTo requester } } 56 Queries pre-aggregated tables from the stream Compute Isolation: Actor

- 57. @helenaedelson 57 class TemperatureActor(sc: SparkContext, settings: Settings) extends AggregationActor { import akka.pattern.pipe def receive: Actor.Receive = { case e: GetMonthlyHiLowTemperature => highLow(e, sender) } def highLow(e: GetMonthlyHiLowTemperature, requester: ActorRef): Unit = sc.cassandraTable[DailyTemperature](keyspace, daily_temperature_aggr) .where("wsid = ? AND year = ? AND month = ?", e.wsid, e.year, e.month) .collectAsync() .map(MonthlyTemperature(_, e.wsid, e.year, e.month)) pipeTo requester } C* data is automatically sorted by most recent - due to our data model. Additional Spark or collection sort not needed. Efficient Batch Analysis

- 58. @helenaedelson TCO: Cassandra, Hadoop • Compactions vs file management and de-duplication jobs • Storage cost, cloud providers / hardware, storage format, query speeds, cost of maintaining • Hiring talent to manage Cassandra vs Hadoop • Hadoop has some advantages if used with AWS EMR or other hosted solutions (What about HCP?) • HDFS vs Cassandra is not a fair comparison – You have to decide first if you want file vs DB – Where in the stack – Then you can compare • See http://velvia.github.io/presentations/2015-breakthrough-olap-cass-spark/ index.html#/15/3 58

- 60. @helenaedelson

- 62. Analytic Analytic Search • Fast, distributed, scalable and fault tolerant cluster compute system • Enables Low-latency with complex analytics • Developed in 2009 at UC Berkeley AMPLab, open sourced in 2010, and became a top-level Apache project in February, 2014

- 63. @helenaedelson Spark Streaming • One runtime for streaming and batch processing • Join streaming and static data sets • No code duplication • Easy, flexible data ingestion from disparate sources to disparate sinks • Easy to reconcile queries against multiple sources • Easy integration of KV durable storage 63

- 64. @helenaedelson Training Data Feature Extraction Model Training Model Testing Test Data Your Data Extract Data To Analyze Train your model to predict 64 val context = new StreamingContext(conf, Milliseconds(500)) val model = KMeans.train(dataset, ...) // learn offline val stream = KafkaUtils .createStream(ssc, zkQuorum, group,..) .map(event => model.predict(event.feature))

- 65. @helenaedelson Apache Cassandra • Extremely Fast • Extremely Scalable • Multi-Region / Multi-Datacenter • Always On • No single point of failure • Survive regional outages • Easy to operate • Automatic & configurable replication 65

- 66. @helenaedelson 66 The one thing in your infrastructure you can always rely on

- 67. •Massively Scalable • High Performance • Always On • Masterless

- 69. IoT

- 71. Science Physics: Astro Physics / Particle Physics..

- 72. Genetics / Biological Computations

- 73. • High Throughput Distributed Messaging • Decouples Data Pipelines • Handles Massive Data Load • Support Massive Number of Consumers • Distribution & partitioning across cluster nodes • Automatic recovery from broker failures

- 75. @helenaedelson 75 High performance concurrency framework for Scala and Java • Fault Tolerance • Asynchronous messaging and data processing • Parallelization • Location Transparency • Local / Remote Routing • Akka: Cluster / Persistence / Streams

- 76. @helenaedelson Akka Actors 76 A distribution and concurrency abstraction • Compute Isolation • Behavioral Context Switching • No Exposed Internal State • Event-based messaging • Easy parallelism • Configurable fault tolerance

- 77. @helenaedelson Some Resources • http://www.planetcassandra.org/blog/equinix-evaluates-hbase-and-cassandra-for-the-real-time- billing-and-fraud-detection-of-hundreds-of-data-centers-worldwide • Building a scalable platform for streaming updates and analytics • @SAPdevs tweet: Watch us take a dive deeper and explore the benefits of using Akka and Scala https://t.co/M9arKYy02y • https://mesosphere.com/blog/2015/07/24/learn-everything-you-need-to-know-about-scala-and- big-data-in-oakland/ • https://www.youtube.com/watch?v=buszgwRc8hQ • https://www.youtube.com/watch?v=G7N6YcfatWY • http://noetl.org 77

![@helenaedelson

val context = new StreamingContext(conf, Seconds(1))

val stream = KafkaUtils.createDirectStream[Array[Byte],

Array[Byte], DefaultDecoder, DefaultDecoder](

context, kafkaParams, kafkaTopics)

stream.flatMap(func1).saveToCassandra(ks1,table1)

stream.map(func2).saveToCassandra(ks1,table1)

context.start()

43

Kafka, Spark Streaming and Cassandra](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-43-320.jpg)

extends Actor {

override val supervisorStrategy =

OneForOneStrategy(maxNrOfRetries = 10, withinTimeRange = 1.minute) {

case _: ActorInitializationException => Stop

case _: FailedToSendMessageException => Restart

case _: ProducerClosedException => Restart

case _: NoBrokersForPartitionException => Escalate

case _: KafkaException => Escalate

case _: Exception => Escalate

}

private val producer = new KafkaProducer[K, V](producerConfig)

override def postStop(): Unit = producer.close()

def receive = {

case e: KafkaMessageEnvelope[K,V] => producer.send(e)

}

} 44

Kafka, Spark Streaming, Cassandra & Akka](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-44-320.jpg)

.map(LabeledPoint.parse)

val trainingStream = KafkaUtils.createStream[K, V, KDecoder, VDecoder](

ssc, kafkaParams, topicMap, StorageLevel.MEMORY_ONLY)

.map(_._2).map(LabeledPoint.parse)

trainingStream.saveToCassandra("ml_keyspace", "raw_training_data")

val model = new StreamingLinearRegressionWithSGD()

.setInitialWeights(Vectors.dense(weights))

.trainOn(trainingStream)

//Making predictions on testData

model

.predictOnValues(testData.map(lp => (lp.label, lp.features)))

.saveToCassandra("ml_keyspace", "predictions")

46](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-46-320.jpg)

![@helenaedelson

48

class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext)

extends AggregationActor(settings: Settings) {

import settings._

val stream = KafkaUtils.createStream(

ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2)

.map(_._2.split(","))

.map(RawWeatherData(_))

stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw)

stream

.map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip))

.saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip)

}

Kafka, Spark Streaming, Cassandra & Akka](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-48-320.jpg)

![@helenaedelson

class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext)

extends AggregationActor(settings: Settings) {

import settings._

val stream = KafkaUtils.createStream(

ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2)

.map(_._2.split(","))

.map(RawWeatherData(_))

stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw)

stream

.map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip))

.saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip)

}

49

Now we can replay

• On failure

• Reprocessing on code changes

• Future computation...](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-49-320.jpg)

![@helenaedelson

50

Here we are pre-aggregating to a table for fast querying later -

in other secondary stream aggregation computations and scheduled computing

class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext)

extends AggregationActor(settings: Settings) {

import settings._

val stream = KafkaUtils.createStream(

ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2)

.map(_._2.split(","))

.map(RawWeatherData(_))

stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw)

stream

.map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip))

.saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip)

}](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-50-320.jpg)

![@helenaedelson

52

class KafkaStreamingActor(params: Map[String, String], ssc: StreamingContext)

extends AggregationActor(settings: Settings) {

import settings._

val stream = KafkaUtils.createStream(

ssc, params, Map(KafkaTopicRaw -> 1), StorageLevel.DISK_ONLY_2)

.map(_._2.split(","))

.map(RawWeatherData(_))

stream.saveToCassandra(CassandraKeyspace, CassandraTableRaw)

stream

.map(hour => (hour.wsid, hour.year, hour.month, hour.day, hour.oneHourPrecip))

.saveToCassandra(CassandraKeyspace, CassandraTableDailyPrecip)

}

Gets the partition key: Data Locality

Spark C* Connector feeds this to Spark

Cassandra Counter column in our schema,

no expensive `reduceByKey` needed. Simply

let C* do it: not expensive and fast.](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-52-320.jpg)

)

}

streamingContext.union(multipleStreams)

.map { httpRequest => TimelineRequestEvent(httpRequest)}

.saveToCassandra("requests_ks", "timeline")

CREATE TABLE IF NOT EXISTS requests_ks.timeline (

timesegment bigint, url text, t_uuid timeuuid, method text, headers map <text, text>, body text,

PRIMARY KEY ((url, timesegment) , t_uuid)

);

Record Every Event In The Order In

Which It Happened, Per URL

timesegment protects from writing

unbounded partitions.

timeuuid protects from simultaneous

events over-writing one another.](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-54-320.jpg)

![@helenaedelson

class PrecipitationActor(ssc: StreamingContext, settings: Settings) extends AggregationActor {

import akka.pattern.pipe

def receive : Actor.Receive = {

case GetTopKPrecipitation(wsid, year, k) => topK(wsid, year, k, sender)

}

/** Returns the 10 highest temps for any station in the `year`. */

def topK(wsid: String, year: Int, k: Int, requester: ActorRef): Unit = {

val toTopK = (aggregate: Seq[Double]) => TopKPrecipitation(wsid, year,

ssc.sparkContext.parallelize(aggregate).top(k).toSeq)

ssc.cassandraTable[Double](keyspace, dailytable)

.select("precipitation")

.where("wsid = ? AND year = ?", wsid, year)

.collectAsync().map(toTopK) pipeTo requester

}

}

56

Queries pre-aggregated

tables from the stream

Compute Isolation: Actor](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-56-320.jpg)

.where("wsid = ? AND year = ? AND month = ?", e.wsid, e.year, e.month)

.collectAsync()

.map(MonthlyTemperature(_, e.wsid, e.year, e.month)) pipeTo requester

}

C* data is automatically sorted by most recent - due to our data model.

Additional Spark or collection sort not needed.

Efficient Batch Analysis](https://image.slidesharecdn.com/streaming-big-data-analytics-for-scale-160111203421/85/Streaming-Big-Data-Analytics-For-Scale-57-320.jpg)