Real-Time Streaming using Spark and Kafka: Course Materials

- 1. Real-Time Big Data Processing with Spark and Kafka (Spark 및 Kafka를 이용한 빅데이터 실시간 처리 기술), 2024.4, 윤형기

- 2. Course schedule (day / module / details)
  • Day 1 (AM)
    ▪ Welcome, Big Data: course introduction; offline big data --> streaming big data; underlying technologies
    ▪ Apache Spark, lab environment setup, Spark API: Spark architecture; installation & programming languages (Scala, Java, Python); Structured API
  • Day 1 (PM)
    ▪ Spark SQL (1): Spark SQL & DataFrame
    ▪ Spark SQL (2): Spark SQL & Dataset
  • Day 2 (AM)
    ▪ Spark Streaming (1): Spark Structured Streaming
  • Day 2 (PM)
    ▪ Spark Streaming (2), Spark Connect, Spark ML: Event-time & Stateful Processing; Spark Connect; Data Lake, Spark MLlib
  • Day 3 (AM)
    ▪ Apache Kafka: Kafka overview and architecture; Kafka Connect
  • Day 3 (PM)
    ▪ Data engineering, Wrap-up: Data Lakehouse; Wrap-up
  • Note: for the sources of the figures, tables, and code in these materials, see the references at the end of the deck.

- 4. Intro: Big Data and Data Engineering

- 6. Hadoop & ecosystems
  ▪ "function-to-data model vs. data-to-function" (Locality)
  ▪ KVP (Key-Value Pair)

- 7. GFS
  • Figure source: Ghemawat et al., "The Google File System", SOSP, 2003

- 8. Spark
  • Architecture:
    ▪ Developed in 2009 at UC Berkeley's AMPLab
    ▪ In-memory processing: cached intermediate data sets
    ▪ Multi-step DAG execution engine
    ▪ …

- 9. Stream Big Data
  • Streams via Message Brokers
    ▪ Apache Kafka
    ▪ Apache Pulsar
    ▪ AMQP Based Brokers
  • Streams via Stream Engines
    ▪ Apache Flink
    ▪ Apache Storm
    ▪ Apache Heron
    ▪ Spark Streaming
  • Source: https://hazelcast.com/glossary/real-time-stream-processing/

- 10. Data Engineering & Analytics
  • Log Collection
    ▪ Apache Flume, Fluentd
  • Transferring Big Data Sets
    ▪ Reloading/Partition Loading
    ▪ Streaming
  • Data Pipeline Scheduler
    ▪ Jenkins
    ▪ Azkaban
    ▪ Airflow
  • Source: https://hackr.io/blog/what-is-data-engineering

- 11. Real-time analytics
  • Two approaches: analytics on fresh data at rest vs. on data in motion.
  • Source: https://www.striim.com/blog/an-in-depth-guide-to-real-time-analytics/

- 13. Apache Spark: Unified Analytics Engine
  • Background to Spark's development
    ▪ Big data at Google and Hadoop at Yahoo!
    ▪ MapReduce framework on HDFS
    ▪ Extensions and varied experiments: Apache Hive, Storm, Impala, Giraph, Drill, etc., each with its own API and cluster configuration → operational complexity
  • What Is Apache Spark?
    ▪ Unified Analytics
    ▪ Spark Components as a Unified Stack
    ▪ Spark's Distributed Execution
  • Figure: Intermittent iteration of reads and writes between map and reduce computations

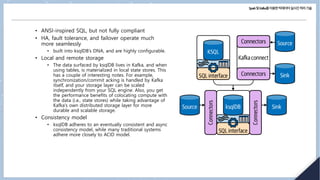

- 14. Apache Spark?
  • Speed
    ▪ DAG-based query computations
    ▪ The DAG scheduler and query optimizer construct an efficient computational graph that can usually be decomposed into tasks executed in parallel across workers on the cluster.
    ▪ Tungsten (whole-stage code generation)
  • Ease of use
    ▪ RDD + operations (transformations + actions)
  • Modularity
  • Extensibility
    ▪ Spark decouples storage and compute: it reads data stored in myriad sources (Hadoop, Cassandra, HBase, MongoDB, Hive, RDBMSs, and more) and processes it all in memory.
    ▪ (cf. Hadoop included both)
    ▪ External sources can be used through Spark's DataFrameReader and DataFrameWriter
    ▪ Examples: Kafka, Kinesis, Azure Storage, Amazon S3

- 15. Unified Analytics Platform
  • Overview
    ▪ Spark replaces separate batch processing, graph, stream, and query engines such as Storm, Impala, Dremel, and Pregel with a unified stack of components that addresses diverse workloads under a single fast, distributed engine.
  • Apache Spark Components as a Unified Stack (figure: Apache Spark components and API stack)
  • Spark SQL

      // In Scala
      // Read data off Amazon S3 bucket into a Spark DataFrame
      spark.read.json("s3://apache_spark/data/committers.json")
        .createOrReplaceTempView("committers")
      // Issue a SQL query and return the result as a Spark DataFrame
      val results = spark.sql("""SELECT name, org, module, release, num_commits
          FROM committers WHERE module = 'mllib' AND num_commits > 10
          ORDER BY num_commits DESC""")

- 16.
  • Spark MLlib

      # In Python
      from pyspark.ml.classification import LogisticRegression
      ...
      # Load training and test data
      training = spark.read.csv("s3://...")
      test = spark.read.csv("s3://...")
      # Configure the estimator
      lr = LogisticRegression(maxIter=10, regParam=0.3, elasticNetParam=0.8)
      # Fit the model
      lrModel = lr.fit(training)
      # Predict
      lrModel.transform(test)
      ...

  • GraphX
    ▪ Graph-parallel computations

      // In Scala
      val graph = Graph(vertices, edges)
      val messages = spark.textFile("hdfs://...")
      val graph2 = graph.joinVertices(messages) {
        (id, vertex, msg) => ...
      }

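- 17. Spark Structured Streaming
  • Spark 2.0 introduced an experimental Continuous Streaming model and the Structured Streaming APIs, built atop the Spark SQL engine and DataFrame-based APIs.
  • By Spark 2.2, Structured Streaming was generally available. It views a stream as a continually growing table, with new rows of data appended at the end.

      # In Python
      # Read a stream from a local host
      from pyspark.sql.functions import explode, split
      lines = (spark
        .readStream
        .format("socket")
        .option("host", "localhost")
        .option("port", 9999)
        .load())
      # Perform transformation
      # Split the lines into words
      words = lines.select(explode(split(lines.value, " ")).alias("word"))
      # Generate running word count
      word_counts = words.groupBy("word").count()
      # Write out the stream to Kafka.
      # The Kafka sink expects a "value" column, a broker address, and a
      # checkpoint location; the broker address and checkpoint path below
      # are assumed values, not part of the original slide.
      query = (word_counts
        .selectExpr("word AS key", "CAST(count AS STRING) AS value")
        .writeStream
        .format("kafka")
        .option("kafka.bootstrap.servers", "localhost:9092")
        .option("topic", "output")
        .option("checkpointLocation", "/tmp/checkpoint")
        .outputMode("complete")
        .start())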
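The "continually growing table" view of a stream can be sketched without a cluster. The following plain-Python model is only an illustration, not Spark code: the function name `running_word_counts` and the sample batches are made up for this sketch. Each micro-batch of lines is appended to the input, and the complete word-count result table is re-emitted after every batch, which is roughly what a running aggregation in complete output mode produces.

```python
from collections import Counter

def running_word_counts(batches):
    """Yield the full word-count table after each micro-batch of lines arrives."""
    counts = Counter()
    for lines in batches:
        for line in lines:
            # Same splitting rule as split(value, " ") in the slide's example
            counts.update(line.split(" "))
        # Emit a snapshot of the whole result table, not just the new rows
        yield dict(counts)

# Hypothetical input: two micro-batches arriving one after the other
batches = [["spark kafka"], ["spark streaming", "kafka"]]
tables = list(running_word_counts(batches))
for table in tables:
    print(table)
```

The second snapshot contains the counts accumulated over both batches, which is the state a stateful streaming aggregation has to keep between triggers.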

- 18. Key concepts (terminology)
  • Application
    ▪ A user program built on Spark using its APIs. It consists of a driver program and executors on the cluster.
  • SparkSession
    ▪ An object that provides a point of entry to interact with underlying Spark functionality and allows programming Spark with its APIs.
    ▪ In the Spark shell, the Spark driver instantiates a SparkSession for you, while in a Spark application, you create a SparkSession object yourself.
  • Job
    ▪ A parallel computation consisting of multiple tasks that gets spawned in response to a Spark action (e.g., save(), collect()).
  • Stage
    ▪ Each job gets divided into smaller sets of tasks called stages that depend on each other.
  • Task
    ▪ A single unit of work or execution that will be sent to a Spark executor.

- 19. Apache Spark's "Distributed Execution" model
  • Figure: Spark components and architecture

- 20. Spark driver
  • SparkSession
    ▪ A unified conduit to all Spark operations and data (since Spark 2.0)
    ▪ Subsumes the earlier entry points: SparkContext, SQLContext, HiveContext, SparkConf, and StreamingContext

      // In Scala
      import org.apache.spark.sql.SparkSession
      // Build SparkSession
      val spark = SparkSession
        .builder
        .appName("LearnSpark")
        .config("spark.sql.shuffle.partitions", 6)
        .getOrCreate()
      ...
      // Use the session to read JSON
      val people = spark.read.json("...")
      ...
      // Use the session to issue a SQL query
      val resultsDF = spark.sql("SELECT city, pop, state, zip FROM table_name")

- 21.
  • Cluster manager
    ▪ 4 cluster managers: standalone cluster manager, Hadoop YARN, Mesos, and Kubernetes.
  • Spark executor
  • Deployment modes

  | Mode | Spark driver | Spark executor | Cluster manager |
  | --- | --- | --- | --- |
  | Local | Runs on a single JVM, like a laptop or single node | Runs on the same JVM as the driver | Runs on the same host |
  | Standalone | Can run on any node in the cluster | Each node runs its own executor JVM | Can be allocated arbitrarily to any host in the cluster |
  | YARN (client) | Runs on a client, not part of the cluster | YARN's NodeManager's container | YARN's ResourceManager (RM) works with the ApplicationMaster (AM) to allocate containers on NodeManagers for executors |
  | YARN (cluster) | Runs with the YARN ApplicationMaster | Same as YARN client mode | Same as YARN client mode |
  | Kubernetes | Runs in a Kubernetes pod | Each worker runs within its own pod | Kubernetes Master |

- 22. Distributed data and partitions
  • Data is distributed across the servers of the cluster in the form of partitions → parallelism
  • Spark treats each partition as a high-level logical data abstraction: a DataFrame in memory.

- 24.
  • (ex) Break the data into 8 partitions and distribute them across the executors:

      # In Python
      log_df = spark.read.text("path_to_large_text_file").repartition(8)
      print(log_df.rdd.getNumPartitions())

  • (ex) Create a DataFrame (10,000 integers distributed over 8 partitions in memory):

      # In Python
      df = spark.range(0, 10000, 1, 8)
      print(df.rdd.getNumPartitions())

  • Both code snippets will print out 8.
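To make the partition count above concrete without a cluster, the plain-Python sketch below cuts a range of 10,000 integers into 8 contiguous slices. The helper `split_range` is hypothetical and only approximates how a call such as `spark.range(0, 10000, 1, 8)` could divide its rows; it is not Spark's implementation.

```python
def split_range(start, end, num_partitions):
    """Split [start, end) into num_partitions contiguous slices of near-equal size."""
    total = end - start
    # Partition i covers [bounds[i], bounds[i + 1])
    bounds = [start + (total * i) // num_partitions for i in range(num_partitions + 1)]
    return [range(bounds[i], bounds[i + 1]) for i in range(num_partitions)]

partitions = split_range(0, 10000, 8)
print(len(partitions))                # number of partitions
print([len(p) for p in partitions])   # rows per partition
```

Each slice can then be handed to a different executor core, which is where the parallelism comes from.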

- 25. Spark RDDs
  • Characteristics
    ▪ Distributed data (Distributed Data Collection): spread across multiple worker nodes. The driver node is responsible for creating and overseeing this distribution.
    ▪ Resilience to faults: the capacity to regenerate RDDs when an RDD is corrupted (by memory volatility), lost during computation, etc.
    ▪ Immutability: helps preserve the data lineage, a concept covered later in this session.
    ▪ Parallel processing: although an RDD is distributed, processing proceeds concurrently. Multiple worker nodes collaborate simultaneously to execute the entire task.
    ▪ Versatility in data sources: RDDs are adaptable and can be constructed from a variety of sources.

- 26. RDD lineage, maintained in the Directed Acyclic Graph (DAG) Scheduler within SparkContext
  • Source: https://pub.aimind.so/pyspark-everything-you-need-to-know-24f87d12bfe1
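How lineage and immutability combine to give fault tolerance can be sketched with a toy model. The class `LineageDataset` below is hypothetical and written in plain Python; it assumes the source data can be re-read and that every transformation is a deterministic per-element function. It only illustrates the idea of replaying recorded steps to rebuild a lost partition and is not how Spark is implemented.

```python
class LineageDataset:
    """A toy, immutable dataset that remembers how each partition is derived."""

    def __init__(self, source_partitions, steps=()):
        self.source_partitions = source_partitions  # original input, assumed re-readable
        self.steps = tuple(steps)                   # lineage: ordered per-element functions

    def map(self, fn):
        # Transformations never modify data in place; they return a new
        # dataset whose lineage is one step longer.
        return LineageDataset(self.source_partitions, self.steps + (fn,))

    def compute_partition(self, index):
        # Rebuild a single partition by replaying the lineage on its source data.
        rows = list(self.source_partitions[index])
        for fn in self.steps:
            rows = [fn(r) for r in rows]
        return rows

base = LineageDataset([[1, 2], [3, 4], [5, 6]])
derived = base.map(lambda x: x * 10).map(lambda x: x + 1)

cache = {i: derived.compute_partition(i) for i in range(3)}
del cache[1]                                # simulate losing one partition
cache[1] = derived.compute_partition(1)     # recover only that partition
print(cache[1])
```

Only the lost partition is recomputed; the other partitions and the original `base` dataset are untouched.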

- 27. Installing and Running Spark
  • Step 1: Installation
    ▪ Download the Apache Spark files
    ▪ Set environment variables
    ▪ Spark's Directories and Files
  • Step 2: Using the Scala or PySpark Shell
    ▪ Using Local Machine
  • Step 3: Understanding Spark Application Concepts
    ▪ Spark Application and SparkSession
    ▪ Spark Jobs
    ▪ Spark Stages
    ▪ Spark Tasks
  • Transformations, Actions, and Lazy Evaluation
    ▪ Narrow and Wide Transformations
  • Spark UI

- 28. Spark Application and SparkSession
  • Figure: Spark's distributed architecture

- 29.
  • Spark Jobs
    ▪ In the Spark shell, the driver breaks the application into one or more Spark jobs and then transforms each job into a DAG.
    ▪ The DAG is Spark's execution plan, where each node within a DAG could be a single or multiple Spark stages.
  • Spark Stages
    ▪ Stages are created as DAG nodes, based on which operations can be performed serially or in parallel.

- 30. Spark Tasks
  • Each stage is comprised of Spark tasks (a unit of execution), which are then federated across each Spark executor; each task maps to a single core and works on a single partition of data.
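The one-task-per-core, one-task-per-partition rule above leads to a simple back-of-the-envelope calculation. The sketch below is plain Python; the function `task_waves` and the cluster sizes are made up for illustration, and it assumes every task needs exactly one core and that all executors are identical.

```python
import math

def task_waves(num_partitions, num_executors, cores_per_executor):
    """Return (tasks, parallel slots, waves) for one stage, assuming one core per task."""
    tasks = num_partitions                       # one task per partition
    slots = num_executors * cores_per_executor   # tasks that can run at the same time
    waves = math.ceil(tasks / slots)             # rounds needed to finish the stage
    return tasks, slots, waves

small = task_waves(8, 2, 4)
large = task_waves(200, 4, 16)
print(small)
print(large)
```

With 8 partitions on 2 executors of 4 cores each, all tasks fit in a single wave; with 200 partitions on 64 cores, the stage needs several waves, and the last wave leaves some cores idle.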

- 31. Job execution in Spark
  • Source: https://avinash333.com/spark-2-2/

- 32. Transformations and Actions
  • Spark operations come in two types: transformations and actions
  • Transformations
    ▪ Transform a Spark DataFrame into a new DataFrame without altering the original data (immutability).
  • Actions
    ▪ Trigger the evaluation of all recorded transformations (e.g., show(), count(), collect()).
  • Lazy Evaluation
    ▪ All transformations are evaluated lazily, so Spark can optimize a query by looking at the whole chain of transformations before running it.
    ▪ Lineage and data immutability provide fault tolerance.
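Lazy evaluation can be illustrated with a toy class in plain Python. `LazyFrame` below is a hypothetical stand-in, not a Spark API: its transformations only record what should be done, and nothing runs until the `collect` action is called. The `calls` list is there purely to make the moment of execution observable.

```python
class LazyFrame:
    """Toy stand-in for a DataFrame: transformations are recorded, actions execute."""

    def __init__(self, rows, plan=()):
        self._rows = rows
        self.plan = tuple(plan)   # recorded (kind, function) pairs

    # Transformations: return a new LazyFrame and do no work yet.
    def filter(self, predicate):
        return LazyFrame(self._rows, self.plan + (("filter", predicate),))

    def map(self, fn):
        return LazyFrame(self._rows, self.plan + (("map", fn),))

    # Action: run the recorded plan and return the results.
    def collect(self):
        rows = iter(self._rows)
        for kind, fn in self.plan:
            rows = filter(fn, rows) if kind == "filter" else map(fn, rows)
        return list(rows)

calls = []

def double(x):
    calls.append(x)   # side effect that shows when the function really runs
    return x * 2

frame = LazyFrame(range(10)).filter(lambda x: x % 2 == 0).map(double)
calls_before_action = len(calls)   # still 0: only the plan exists
result = frame.collect()           # the action triggers execution
print(calls_before_action, result)
```

Because the whole plan is known before anything runs, an engine has the chance to reorder or fuse steps; this toy version simply replays them in order.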

- 33. Narrow and Wide Transformations
  • Narrow transformation
    ▪ A transformation where a single output partition can be computed from a single input partition (e.g., filter()).
  • Wide transformation
    ▪ Data from other partitions is read in, combined, and written to disk (e.g., groupBy(), orderBy()); this forces a shuffle.
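The difference between the two kinds of transformation can be modelled on plain Python lists, where each inner list plays the role of one partition. The helpers `narrow_map` and `wide_sum_by_key` and the toy partitioner are assumptions made for this sketch; Spark's real shuffle is considerably more involved.

```python
from collections import defaultdict

partitions = [[("a", 1), ("b", 2)], [("a", 3), ("c", 4)], [("b", 5), ("c", 6)]]

def narrow_map(parts, fn):
    """Narrow: output partition i depends only on input partition i."""
    return [[fn(row) for row in part] for part in parts]

def wide_sum_by_key(parts, num_output_partitions):
    """Wide: rows are redistributed by key, so an output partition may read every input."""
    shuffled = [defaultdict(int) for _ in range(num_output_partitions)]
    for part in parts:
        for key, value in part:
            # Toy, deterministic partitioner: all rows with the same key meet in one place
            target = sum(key.encode()) % num_output_partitions
            shuffled[target][key] += value
    return [dict(sorted(p.items())) for p in shuffled]

doubled = narrow_map(partitions, lambda kv: (kv[0], kv[1] * 2))
totals = wide_sum_by_key(doubled, 2)
print(doubled)
print(totals)
```

The narrow step keeps the three partitions exactly as they were laid out, while the wide step has to move rows between partitions before it can add them up.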

- 35. Apache Spark's Structured APIs
  • Spark & RDD
  • Structuring Spark
  • DataFrame API
    ▪ Spark Data Types
    ▪ Schemas and creating DataFrames
    ▪ Columns and Expressions, Rows
    ▪ Common DataFrame Operations
    ▪ End-to-End DataFrame Example
  • Dataset API
    ▪ Typed Objects, Untyped Objects, and Generic Rows
    ▪ Creating Datasets and Dataset Operations
  • DataFrames vs. Datasets
  • Spark SQL and the SQL Engine
    ▪ Catalyst Optimizer

- 36. Spark & RDD?
  • RDD
    ▪ The basic abstraction in Spark
  • Characteristics
    ▪ Dependencies
    ▪ Partitions (with some locality information)
    ▪ Compute function: Partition => Iterator[T]
  • Problems with the original model
    ▪ (i) The compute function is opaque to Spark. Spark only sees it as a lambda expression.
    ▪ (ii) The Iterator[T] data type is also opaque for Python RDDs.
    ▪ (iii) Spark has no way to optimize the expression.
    ▪ (iv) Spark has no knowledge of the specific data type in T.

- 37. Structuring Spark
  • Advantages
  • Low-level RDD API vs. high-level DSL

      # In Python
      # Create an RDD of tuples (name, age)
      dataRDD = sc.parallelize([("Brooke", 20), ("Denny", 31), ("Jules", 30),
        ("TD", 35), ("Brooke", 25)])
      # Use map and reduceByKey transformations with lambda
      # expressions to aggregate and then compute average
      agesRDD = (dataRDD
        .map(lambda x: (x[0], (x[1], 1)))
        .reduceByKey(lambda x, y: (x[0] + y[0], x[1] + y[1]))
        .map(lambda x: (x[0], x[1][0]/x[1][1])))

      # In Python
      from pyspark.sql import SparkSession
      from pyspark.sql.functions import avg
      # Create a DataFrame using SparkSession
      spark = (SparkSession
        .builder
        .appName("AuthorsAges")
        .getOrCreate())
      # Create a DataFrame
      data_df = spark.createDataFrame([("Brooke", 20), ("Denny", 31), ("Jules", 30),
        ("TD", 35), ("Brooke", 25)], ["name", "age"])
      # Group the same names together, aggregate, and average
      avg_df = data_df.groupBy("name").agg(avg("age"))
      # Show the results of the final execution
      avg_df.show()

      +------+--------+
      |  name|avg(age)|
      +------+--------+
      |Brooke|    22.5|
      | Jules|    30.0|
      |    TD|    35.0|
      | Denny|    31.0|
      +------+--------+
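The three steps of the low-level RDD version are easier to follow when traced on ordinary Python lists. The sketch below mirrors the map, reduceByKey, and map steps with the same sample data; `reduce_by_key` is a hypothetical single-machine helper written for this illustration, not the Spark method of a similar name.

```python
from functools import reduce
from itertools import groupby

data = [("Brooke", 20), ("Denny", 31), ("Jules", 30), ("TD", 35), ("Brooke", 25)]

# Step 1 (map): attach a count of 1 to every age -> (name, (age, 1))
pairs = [(name, (age, 1)) for name, age in data]

# Step 2 (reduceByKey): add up ages and counts for each name
def reduce_by_key(records, fn):
    ordered = sorted(records, key=lambda kv: kv[0])
    return [(key, reduce(fn, (value for _, value in group)))
            for key, group in groupby(ordered, key=lambda kv: kv[0])]

sums = reduce_by_key(pairs, lambda x, y: (x[0] + y[0], x[1] + y[1]))

# Step 3 (map): divide the summed ages by the count
averages = [(name, total / count) for name, (total, count) in sums]
print(averages)
```

The averages match the DataFrame output, but the intent ("average age per name") is buried in tuple indexing; the DataFrame version states it directly with `groupBy("name").agg(avg("age"))`.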

- 38.

      // In Scala
      import org.apache.spark.sql.functions.avg
      import org.apache.spark.sql.SparkSession
      // Create a DataFrame using SparkSession
      val spark = SparkSession
        .builder
        .appName("AuthorsAges")
        .getOrCreate()
      // Create a DataFrame of names and ages
      val dataDF = spark.createDataFrame(Seq(("Brooke", 20), ("Brooke", 25),
        ("Denny", 31), ("Jules", 30), ("TD", 35))).toDF("name", "age")
      // Group the same names together, aggregate their ages, and compute an average
      val avgDF = dataDF.groupBy("name").agg(avg("age"))
      // Show the results of the final execution
      avgDF.show()

      +------+--------+
      |  name|avg(age)|
      +------+--------+
      |Brooke|    22.5|
      | Jules|    30.0|
      |    TD|    35.0|
      | Denny|    31.0|
      +------+--------+

- 39. DataFrame API
  • Spark's Basic Data Types
  • Spark's Structured and Complex Data Types
  • Schema
    ▪ Advantages of schema-on-read
  • Creating a DataFrame

      $SPARK_HOME/bin/spark-shell
      scala> import org.apache.spark.sql.types._
      import org.apache.spark.sql.types._
      scala> val nameTypes = StringType
      nameTypes: org.apache.spark.sql.types.StringType.type = StringType
      scala> val firstName = nameTypes
      firstName: org.apache.spark.sql.types.StringType.type = StringType
      scala> val lastName = nameTypes
      lastName: org.apache.spark.sql.types.StringType.type = StringType

- 40. Basic Scala data types in Spark

  | Data type | Value assigned in Scala | API to instantiate |
  | --- | --- | --- |
  | ByteType | Byte | DataTypes.ByteType |
  | ShortType | Short | DataTypes.ShortType |
  | IntegerType | Int | DataTypes.IntegerType |
  | LongType | Long | DataTypes.LongType |
  | FloatType | Float | DataTypes.FloatType |
  | DoubleType | Double | DataTypes.DoubleType |
  | StringType | String | DataTypes.StringType |
  | BooleanType | Boolean | DataTypes.BooleanType |
  | DecimalType | java.math.BigDecimal | DecimalType |

- 41. Basic Python data types in Spark

  | Data type | Value assigned in Python | API to instantiate |
  | --- | --- | --- |
  | ByteType | int | ByteType() |
  | ShortType | int | ShortType() |
  | IntegerType | int | IntegerType() |
  | LongType | int | LongType() |
  | FloatType | float | FloatType() |
  | DoubleType | float | DoubleType() |
  | StringType | str | StringType() |
  | BooleanType | bool | BooleanType() |
  | DecimalType | decimal.Decimal | DecimalType() |

- 42. Spark's Structured and Complex Data Types
  • Scala structured data types in Spark

  | Data type | Value assigned in Scala | API to instantiate |
  | --- | --- | --- |
  | BinaryType | Array[Byte] | DataTypes.BinaryType |
  | TimestampType | java.sql.Timestamp | DataTypes.TimestampType |
  | DateType | java.sql.Date | DataTypes.DateType |
  | ArrayType | scala.collection.Seq | DataTypes.createArrayType(ElementType) |
  | MapType | scala.collection.Map | DataTypes.createMapType(keyType, valueType) |
  | StructType | org.apache.spark.sql.Row | StructType(ArrayType[fieldTypes]) |
  | StructField | A value type corresponding to the type of this field | StructField(name, dataType, [nullable]) |

- 43. Python structured data types in Spark

  | Data type | Value assigned in Python | API to instantiate |
  | --- | --- | --- |
  | BinaryType | bytearray | BinaryType() |
  | TimestampType | datetime.datetime | TimestampType() |
  | DateType | datetime.date | DateType() |
  | ArrayType | list, tuple, or array | ArrayType(dataType, [nullable]) |
  | MapType | dict | MapType(keyType, valueType, [nullable]) |
  | StructType | list or tuple | StructType([fields]) |
  | StructField | A value type corresponding to the type of this field | StructField(name, dataType, [nullable]) |

- 44. Two ways to define a schema
  • (i) Define the schema for a DataFrame programmatically:

      // In Scala
      import org.apache.spark.sql.types._
      val schema = StructType(Array(StructField("author", StringType, false),
        StructField("title", StringType, false),
        StructField("pages", IntegerType, false)))

      # In Python
      from pyspark.sql.types import *
      schema = StructType([StructField("author", StringType(), False),
        StructField("title", StringType(), False),
        StructField("pages", IntegerType(), False)])

  • (ii) Use a DDL string (simpler):

      // In Scala
      val schema = "author STRING, title STRING, pages INT"

      # In Python
      schema = "author STRING, title STRING, pages INT"

- 45.

      # In Python
      from pyspark.sql import SparkSession

      # Define schema for our data using DDL
      schema = "`Id` INT, `First` STRING, `Last` STRING, `Url` STRING, `Published` STRING, `Hits` INT, `Campaigns` ARRAY<STRING>"

      # Create our static data
      data = [[1, "Jules", "Damji", "https://tinyurl.1", "1/4/2016", 4535, ["twitter", "LinkedIn"]],
        [2, "Brooke", "Wenig", "https://tinyurl.2", "5/5/2018", 8908, ["twitter", "LinkedIn"]],
        [3, "Denny", "Lee", "https://tinyurl.3", "6/7/2019", 7659, ["web", "twitter", "FB", "LinkedIn"]],
        [4, "Tathagata", "Das", "https://tinyurl.4", "5/12/2018", 10568, ["twitter", "FB"]],
        [5, "Matei", "Zaharia", "https://tinyurl.5", "5/14/2014", 40578, ["web", "twitter", "FB", "LinkedIn"]],
        [6, "Reynold", "Xin", "https://tinyurl.6", "3/2/2015", 25568, ["twitter", "LinkedIn"]]
      ]

      if __name__ == "__main__":
        spark = (SparkSession
          .builder
          .appName("Example-3_6")
          .getOrCreate())
        # Create a DataFrame using the schema defined above
        blogs_df = spark.createDataFrame(data, schema)
        # Show the DataFrame; it should reflect our table above
        blogs_df.show()
        # Print the schema used by Spark to process the DataFrame
        # (printSchema() prints directly and returns None, so it is not wrapped in print())
        blogs_df.printSchema()

- 46. Reading data from a JSON file

      // In Scala
      package main.scala.chapter3

      import org.apache.spark.sql.SparkSession
      import org.apache.spark.sql.types._

      object Example3_7 {
        def main(args: Array[String]) {
          val spark = SparkSession
            .builder
            .appName("Example-3_7")
            .getOrCreate()

          if (args.length <= 0) {
            println("usage Example3_7 <file path to blogs.json>")
            System.exit(1)
          }
          // Get the path to the JSON file
          val jsonFile = args(0)
          // Define our schema programmatically
          val schema = StructType(Array(StructField("Id", IntegerType, false),
            StructField("First", StringType, false),
            StructField("Last", StringType, false),
            StructField("Url", StringType, false),
            StructField("Published", StringType, false),
            // ...

- 47. Using Columns and Expressions

      // In Scala
      scala> import org.apache.spark.sql.functions._
      scala> blogsDF.columns
      res2: Array[String] = Array(Campaigns, First, Hits, Id, Last, Published, Url)

      // Access a particular column with col and it returns a Column type
      scala> blogsDF.col("Id")
      res3: org.apache.spark.sql.Column = id

      // Use an expression to compute a value
      scala> blogsDF.select(expr("Hits * 2")).show(2)
      // or use col to compute value
      scala> blogsDF.select(col("Hits") * 2).show(2)

      +----------+
      |(Hits * 2)|
      +----------+
      |      9070|
      |     17816|
      +----------+

- 48.

      // Use an expression to compute big hitters for blogs
      // This adds a new column, Big Hitters, based on the conditional expression
      blogsDF.withColumn("Big Hitters", (expr("Hits > 10000"))).show()

      +---+---------+-------+---+---------+-----+--------------------+-----------+
      | Id|    First|   Last|Url|Published| Hits|           Campaigns|Big Hitters|
      +---+---------+-------+---+---------+-----+--------------------+-----------+
      |  1|    Jules|  Damji|...| 1/4/2016| 4535| [twitter, LinkedIn]|      false|
      |  2|   Brooke|  Wenig|...| 5/5/2018| 8908| [twitter, LinkedIn]|      false|
      |  3|    Denny|    Lee|...| 6/7/2019| 7659|[web, twitter, FB...|      false|
      |  4|Tathagata|    Das|...|5/12/2018|10568|       [twitter, FB]|       true|
      |  5|    Matei|Zaharia|...|5/14/2014|40578|[web, twitter, FB...|       true|
      |  6|  Reynold|    Xin|...| 3/2/2015|25568| [twitter, LinkedIn]|       true|
      +---+---------+-------+---+---------+-----+--------------------+-----------+

- 49.

      // Concatenate three columns, create a new column, and show the
      // newly created concatenated column
      blogsDF
        .withColumn("AuthorsId", (concat(expr("First"), expr("Last"), expr("Id"))))
        .select(col("AuthorsId"))
        .show(4)

      +-------------+
      |    AuthorsId|
      +-------------+
      |  JulesDamji1|
      | BrookeWenig2|
      |    DennyLee3|
      |TathagataDas4|
      +-------------+

      // These statements return the same value, showing that
      // expr is the same as a col method call
      blogsDF.select(expr("Hits")).show(2)
      blogsDF.select(col("Hits")).show(2)
      blogsDF.select("Hits").show(2)

      +-----+
      | Hits|
      +-----+
      | 4535|
      | 8908|
      +-----+

- 50.

      // Sort by column "Id" in descending order
      blogsDF.sort(col("Id").desc).show()
      blogsDF.sort($"Id".desc).show()

      +--------------------+---------+-----+---+-------+---------+-----------------+
      |           Campaigns|    First| Hits| Id|   Last|Published|              Url|
      +--------------------+---------+-----+---+-------+---------+-----------------+
      | [twitter, LinkedIn]|  Reynold|25568|  6|    Xin| 3/2/2015|https://tinyurl.6|
      |[web, twitter, FB...|    Matei|40578|  5|Zaharia|5/14/2014|https://tinyurl.5|
      |       [twitter, FB]|Tathagata|10568|  4|    Das|5/12/2018|https://tinyurl.4|
      |[web, twitter, FB...|    Denny| 7659|  3|    Lee| 6/7/2019|https://tinyurl.3|
      | [twitter, LinkedIn]|   Brooke| 8908|  2|  Wenig| 5/5/2018|https://tinyurl.2|
      | [twitter, LinkedIn]|    Jules| 4535|  1|  Damji| 1/4/2016|https://tinyurl.1|
      +--------------------+---------+-----+---+-------+---------+-----------------+

- 51. Rows

      // In Scala
      import org.apache.spark.sql.Row
      // Create a Row
      val blogRow = Row(6, "Reynold", "Xin", "https://tinyurl.6", 255568, "3/2/2015",
        Array("twitter", "LinkedIn"))
      // Access using index for individual items
      blogRow(1)
      res62: Any = Reynold

      # In Python
      from pyspark.sql import Row
      blog_row = Row(6, "Reynold", "Xin", "https://tinyurl.6", 255568, "3/2/2015",
        ["twitter", "LinkedIn"])
      # Access using index for individual items
      blog_row[1]
      'Reynold'

  • Row objects can be used to create DataFrames if you need quick interactivity and exploration:

      # In Python
      rows = [Row("Matei Zaharia", "CA"), Row("Reynold Xin", "CA")]
      authors_df = spark.createDataFrame(rows, ["Author", "State"])
      authors_df.show()

      // In Scala
      val rows = Seq(("Matei Zaharia", "CA"), ("Reynold Xin", "CA"))
      val authorsDF = rows.toDF("Author", "State")
      authorsDF.show()

      +-------------+-----+
      |       Author|State|
      +-------------+-----+
      |Matei Zaharia|   CA|
      |  Reynold Xin|   CA|
      +-------------+-----+

- 52. Common DataFrame Operations
  • DataFrameReader and DataFrameWriter
    ▪ Saving a DataFrame as a Parquet file or SQL table
    ▪ ((code))
  • Transformations and actions
  • Projections and filters
    ▪ A projection returns only the selected columns; a filter returns only the rows matching a certain condition.
    ▪ Projections are done with the select() method, filters with filter() or where().
  • Renaming, adding, and dropping columns
  • Aggregation
  • Other common DataFrame operations
    ▪ ((code))
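The distinction between projecting columns and filtering rows can be shown on plain Python dictionaries. The helpers `select` and `where` below are hypothetical stand-ins named after the DataFrame methods; the rows reuse three fields of the blog sample data from the earlier schema example.

```python
blogs = [
    {"Id": 1, "First": "Jules", "Hits": 4535},
    {"Id": 2, "First": "Brooke", "Hits": 8908},
    {"Id": 3, "First": "Denny", "Hits": 7659},
    {"Id": 4, "First": "Tathagata", "Hits": 10568},
    {"Id": 5, "First": "Matei", "Hits": 40578},
    {"Id": 6, "First": "Reynold", "Hits": 25568},
]

def select(rows, *columns):
    """Projection: keep every row, but only the named columns."""
    return [{c: row[c] for c in columns} for row in rows]

def where(rows, predicate):
    """Filter: keep every column, but only the rows that satisfy the predicate."""
    return [row for row in rows if predicate(row)]

big_hitters = select(where(blogs, lambda r: r["Hits"] > 10000), "First", "Hits")
print(big_hitters)
```

Filtering reduces the number of rows from six to three, and the projection then narrows each remaining row to two columns.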

- 53. Dataset API
  • Spark 2.0 unified the DataFrame and Dataset APIs as Structured APIs
  • DataFrame = an alias for a collection of generic objects, Dataset[Row], where a Row is a generic untyped JVM object that may hold different types of fields.
  • Dataset = a collection of strongly typed JVM objects in Scala or a class in Java.
    ▪ A strongly typed collection of domain-specific objects that can be transformed in parallel using functional or relational operations.
    ▪ Each Dataset [in Scala] also has an untyped view called a DataFrame, which is a Dataset of Row.

- 54. Typed Objects, Untyped Objects, and Generic Rows
  • Typed and untyped objects in Spark
    ▪ Internally, Spark manipulates Row objects, converting them to equivalent types.
  • Creating Datasets
  • Dataset Operations

  | Language | Typed and untyped main abstraction | Typed or untyped |
  | --- | --- | --- |
  | Scala | Dataset[T] and DataFrame (alias for Dataset[Row]) | Both typed and untyped |
  | Java | Dataset<T> | Typed |
  | Python | DataFrame | Generic Row, untyped |
  | R | DataFrame | Generic Row, untyped |

- 55. DataFrames vs. Datasets
  • General points
  • Examples
  • …
  • When to Use RDDs: when you
    ▪ Are using a third-party package that's written using RDDs
    ▪ Can forgo the code optimization, efficient space utilization, and performance benefits available with DataFrames and Datasets
    ▪ Want to precisely instruct Spark how to do a query

- 56. Spark SQL (Preview)
  • (Spark SQL and its engine)

- 57. Catalyst Optimizer
  • Phase 1: Analysis
  • Phase 2: Logical optimization
  • Phase 3: Physical planning
  • Phase 4: Code generation
  • ((code: M&Ms example))

- 58. Spark SQL and DataFrames
  • Using Spark SQL
  • SQL Tables and Views
    ▪ Managed vs. Unmanaged Tables
    ▪ Creating SQL Databases and Tables
    ▪ Creating Views
    ▪ Viewing the Metadata
    ▪ Caching SQL Tables
    ▪ Reading Tables into DataFrames
  • Data Sources for DataFrames and SQL Tables
    ▪ DataFrameReader and DataFrameWriter
    ▪ Parquet
    ▪ JSON, CSV
    ▪ Avro
    ▪ ORC
    ▪ Image and Binary Files

- 60. Using Spark SQL
  • Query example

      // In Scala
      import org.apache.spark.sql.SparkSession
      val spark = SparkSession
        .builder
        .appName("SparkSQLExampleApp")
        .getOrCreate()
      // Path to data set
      val csvFile = "/databricks-datasets/learning-spark-v2/flights/departuredelays.csv"
      // Read and create a temporary view
      // Infer schema (note that for larger files you may want to specify the schema)
      val df = spark.read.format("csv")
        .option("inferSchema", "true")
        .option("header", "true")
        .load(csvFile)
      // Create a temporary view
      df.createOrReplaceTempView("us_delay_flights_tbl")

- 61.

      # In Python
      from pyspark.sql import SparkSession
      # Create a SparkSession
      spark = (SparkSession
        .builder
        .appName("SparkSQLExampleApp")
        .getOrCreate())
      # Path to data set
      csv_file = "/databricks-datasets/learning-spark-v2/flights/departuredelays.csv"
      # Read and create a temporary view
      # Infer schema (note that for larger files you
      # may want to specify the schema)
      df = (spark.read.format("csv")
        .option("inferSchema", "true")
        .option("header", "true")
        .load(csv_file))
      df.createOrReplaceTempView("us_delay_flights_tbl")

  • To specify a schema instead of inferring it, use a DDL-formatted string:

      // In Scala
      val schema = "date STRING, delay INT, distance INT, origin STRING, destination STRING"

      # In Python
      schema = "`date` STRING, `delay` INT, `distance` INT, `origin` STRING, `destination` STRING"

- 62. SQL Tables and Views
  • Managed vs. Unmanaged Tables
    ▪ Managed table: Spark manages both the metadata and the data in the file store (a local filesystem, HDFS, or an object store).
    ▪ Unmanaged table: Spark only manages the metadata, while you manage the data yourself in an external data source (e.g., Cassandra).
  • Creating SQL Databases and Tables

      // In Scala/Python
      spark.sql("CREATE DATABASE learn_spark_db")
      spark.sql("USE learn_spark_db")

  • Creating a managed table

      // In Scala/Python
      spark.sql("CREATE TABLE managed_us_delay_flights_tbl (date STRING, delay INT, distance INT, origin STRING, destination STRING)")

      # You can do the same thing using the DataFrame API like this:
      # In Python
      # Path to our US flight delays CSV file
      csv_file = "/databricks-datasets/learning-spark-v2/flights/departuredelays.csv"
      # Schema as defined in the preceding example
      schema = "date STRING, delay INT, distance INT, origin STRING, destination STRING"
      flights_df = spark.read.csv(csv_file, schema=schema)
      flights_df.write.saveAsTable("managed_us_delay_flights_tbl")

- 63.
  • Creating an unmanaged table

      # To create an unmanaged table from a data source such as a CSV file, in SQL use:
      spark.sql("""CREATE TABLE us_delay_flights_tbl(date STRING, delay INT,
        distance INT, origin STRING, destination STRING)
        USING csv OPTIONS (PATH
        '/databricks-datasets/learning-spark-v2/flights/departuredelays.csv')""")

      # And within the DataFrame API use:
      (flights_df
        .write
        .option("path", "/tmp/data/us_flights_delay")
        .saveAsTable("us_delay_flights_tbl"))

  • Creating Views
    ▪ Temporary views vs. global temporary views
    ▪ A temporary view is tied to a single SparkSession within a Spark application.
    ▪ A global temporary view is visible across multiple SparkSessions within a Spark application.
    ▪ Multiple SparkSessions can be created within one application.
    ▪ Example: cases where you want to access (and combine) data from two different SparkSessions that don't share the same Hive metastore configurations.

- 64.
  • Viewing Metadata

      // In Scala/Python
      spark.catalog.listDatabases()
      spark.catalog.listTables()
      spark.catalog.listColumns("us_delay_flights_tbl")

  • Caching SQL Tables

      -- In SQL
      CACHE [LAZY] TABLE <table-name>
      UNCACHE TABLE <table-name>

  • Reading Tables into DataFrames

      // In Scala
      val usFlightsDF = spark.sql("SELECT * FROM us_delay_flights_tbl")
      val usFlightsDF2 = spark.table("us_delay_flights_tbl")

      # In Python
      us_flights_df = spark.sql("SELECT * FROM us_delay_flights_tbl")
      us_flights_df2 = spark.table("us_delay_flights_tbl")

- 65. Data Sources for DataFrames and SQL Tables
  • DataFrameReader
  • DataFrameReader methods, arguments, and options

  | Method | Arguments | Description |
  | --- | --- | --- |
  | format() | "parquet", "csv", "text", "json", "jdbc", "orc", "avro", etc. | If you don't specify this method, the default is Parquet or whatever is set in spark.sql.sources.default. |
  | option() | ("mode", PERMISSIVE, FAILFAST, or DROPMALFORMED); ("inferSchema", true or false); ("path", "path_file_data_source") | A series of key/value pairs and options. The default mode is PERMISSIVE. The "inferSchema" and "mode" options are specific to the JSON and CSV file formats. |
  | schema() | DDL String or StructType, e.g., 'A INT, B STRING' or StructType(...) | For the JSON or CSV format, you can instead ask Spark to infer the schema in the option() method. |
  | load() | "/path/to/data/source" | The path to the data source. |
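The three read modes differ only in what happens when a record does not fit the schema. The plain-Python sketch below models that behaviour for a two-column `name,age` input in which the age must be an integer. The function `parse_rows` and the sample lines are made up for illustration; the model is simplified (for example, it ignores the corrupt-record column that Spark can populate in PERMISSIVE mode).

```python
def parse_rows(lines, mode="PERMISSIVE"):
    """Parse 'name,age' lines where age must be an int, honoring the given mode."""
    parsed = []
    for line in lines:
        name, _, raw_age = line.partition(",")
        try:
            parsed.append((name, int(raw_age)))
        except ValueError:
            if mode == "FAILFAST":
                # Abort the whole read on the first malformed record
                raise ValueError(f"malformed record: {line!r}")
            if mode == "DROPMALFORMED":
                # Silently skip the malformed record
                continue
            # PERMISSIVE: keep the row and null out the field that failed to parse
            parsed.append((name, None))
    return parsed

lines = ["Ann,31", "Bob,abc", "Cy,40"]
permissive = parse_rows(lines, "PERMISSIVE")
dropped = parse_rows(lines, "DROPMALFORMED")
print(permissive)
print(dropped)
```

PERMISSIVE is the forgiving default, DROPMALFORMED trades completeness for clean rows, and FAILFAST is the choice when a bad record should stop the pipeline.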

- 66.
  • DataFrameWriter
  • DataFrameWriter methods, arguments, and options

  | Method | Arguments | Description |
  | --- | --- | --- |
  | format() | "parquet", "csv", "text", "json", "jdbc", "orc", "avro", etc. | If you don't specify this method, the default is Parquet or whatever is set in spark.sql.sources.default. |
  | option() | ("mode", append, overwrite, ignore, error or errorifexists); ("mode", SaveMode.Overwrite, SaveMode.Append, SaveMode.Ignore, or SaveMode.ErrorIfExists); ("path", "path_to_write_to") | A series of key/value pairs and options. This is an overloaded method. The default mode options are error or errorifexists and SaveMode.ErrorIfExists; they throw an exception at runtime if the data already exists. |
  | bucketBy() | (numBuckets, col, col..., coln) | The number of buckets and the names of the columns to bucket by. Uses Hive's bucketing scheme on a filesystem. |
  | save() | "/path/to/data/source" | The path to save to. |
  | saveAsTable() | "table_name" | The table to save to. |
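The four save modes in the table can be summarised as a small decision function. The sketch below is a plain-Python model in which a dictionary stands in for the storage layer; the function `save` and the path `/tmp/out` are hypothetical and exist only to illustrate what each mode does when data is already present.

```python
def save(storage, path, rows, mode="errorifexists"):
    """Write rows to storage[path], following the semantics of the given save mode."""
    exists = path in storage
    if mode in ("error", "errorifexists"):
        if exists:
            raise FileExistsError(path)       # default: refuse to touch existing data
        storage[path] = list(rows)
    elif mode == "append":
        storage.setdefault(path, []).extend(rows)   # add to whatever is there
    elif mode == "overwrite":
        storage[path] = list(rows)                  # replace existing data
    elif mode == "ignore":
        if not exists:
            storage[path] = list(rows)              # silently do nothing if data exists
    else:
        raise ValueError(f"unknown save mode: {mode}")

storage = {}
save(storage, "/tmp/out", [1, 2])                   # first write succeeds
save(storage, "/tmp/out", [3], mode="append")       # -> [1, 2, 3]
save(storage, "/tmp/out", [9], mode="ignore")       # unchanged
after_ignore = list(storage["/tmp/out"])
save(storage, "/tmp/out", [7], mode="overwrite")    # -> [7]
print(after_ignore, storage["/tmp/out"])
```

Calling `save` again with the default mode on the same path would raise an error, which mirrors the runtime exception described in the table.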

- 67.

      // In Scala
      // Use Parquet
      val file = """/databricks-datasets/learning-spark-v2/flights/summary-data/parquet/2010-summary.parquet"""
      val df = spark.read.format("parquet").load(file)
      // Use Parquet; you can omit format("parquet") if you wish as it's the default
      val df2 = spark.read.load(file)
      // Use CSV
      val df3 = spark.read.format("csv")
        .option("inferSchema", "true")
        .option("header", "true")
        .option("mode", "PERMISSIVE")
        .load("/databricks-datasets/learning-spark-v2/flights/summary-data/csv/*")
      // Use JSON
      val df4 = spark.read.format("json")
        .load("/databricks-datasets/learning-spark-v2/flights/summary-data/json/*")

- 68. Parquet
  • Reading Parquet files into a DataFrame
  • Reading Parquet files into a Spark SQL table
  • Writing DataFrames to Parquet files
  • Writing DataFrames to Spark SQL tables
  • ((code))

- 69. JSON
  • Reading JSON files into a DataFrame
  • Reading JSON files into a Spark SQL table
  • Writing DataFrames to JSON files
  • JSON data source options
  • JSON options for DataFrameReader and DataFrameWriter

  | Property name | Values | Meaning | Scope |
  |---|---|---|---|
  | compression | none, uncompressed, bzip2, deflate, gzip, lz4, or snappy | Compression codec used for writing. On read, the codec is only detected from the file extension. | Write |
  | dateFormat | yyyy-MM-dd or DateTimeFormatter | Use this format or any format from Java's DateTimeFormatter. | Read/write |
  | multiLine | true, false | Default is false (single-line mode). | Read |
  | allowUnquotedFieldNames | true, false | Allow unquoted JSON field names. Default is false. | Read |

- 70. CSV and Avro
  • CSV
    • Reading a CSV file into a DataFrame
    • Reading a CSV file into a Spark SQL table
    • Writing DataFrames to CSV files
    • CSV data source options
  • Avro
    • Reading an Avro file into a DataFrame
    • Reading an Avro file into a Spark SQL table
    • Writing DataFrames to Avro files
    • Avro data source options

- 71. ORC, images, and binary files
  • ORC
    • Reading an ORC file into a DataFrame
    • Reading an ORC file into a Spark SQL table
    • Writing DataFrames to ORC files
  • Images
    • Reading an image file into a DataFrame
  • Binary Files
    • Reading a binary file into a DataFrame

- 72. Spark SQL (1) – Spark SQL & DataFrame

- 73. Spark SQL and DataFrames
  • Spark SQL and Apache Hive
  • User-Defined Functions
  • Querying with the Spark SQL Shell and Beeline
  • External Data Sources
  • JDBC and SQL Databases
  • Other External Sources
  • Higher-Order Functions in DataFrames and Spark SQL
  • Option 1: Explode and Collect
  • Option 2: User-Defined Function
  • Built-in Functions for Complex Data Types
  • Higher-Order Functions
  • Common DataFrame and Spark SQL Operations
  • Unions, Joins, Windowing, Modifications

- 74. Spark SQL and Apache Hive
  • User-Defined Functions
  • Spark SQL UDFs
      // In Scala
      // Create cubed function
      val cubed = (s: Long) => { s * s * s }

      // Register UDF
      spark.udf.register("cubed", cubed)

      // Create temporary view
      spark.range(1, 9).createOrReplaceTempView("udf_test")

      # In Python
      from pyspark.sql.types import LongType

      # Create cubed function
      def cubed(s):
          return s * s * s

      # Register UDF
      spark.udf.register("cubed", cubed, LongType())

      # Generate temporary view
      spark.range(1, 9).createOrReplaceTempView("udf_test")

- 75. Querying the cubed UDF
      // In Scala/Python
      // Query the cubed UDF
      spark.sql("SELECT id, cubed(id) AS id_cubed FROM udf_test").show()

      +---+--------+
      | id|id_cubed|
      +---+--------+
      |  1|       1|
      |  2|       8|
      |  3|      27|
      |  4|      64|
      |  5|     125|
      |  6|     216|
      |  7|     343|
      |  8|     512|
      +---+--------+

- 76. Speeding up and distributing PySpark UDFs with Pandas UDFs
  • Issue: PySpark UDFs are slower than Scala UDFs.
  • Solution: Pandas UDFs (also called vectorized UDFs), introduced in Spark 2.3.
  • From Spark 3.0 (with Python 3.6 and above), Pandas UDFs are split into two API categories:
    • Pandas UDFs
      • Spark 3.0 infers the Pandas UDF type from Python type hints such as pandas.Series, pandas.DataFrame, Tuple, and Iterator.
      • Previously, each Pandas UDF type had to be defined and specified manually.
      • Supported type-hint combinations: Series to Series, Iterator of Series to Iterator of Series, Iterator of Multiple Series to Iterator of Series, and Series to Scalar (a single value).
    • Pandas Function APIs
      • These let you apply a local Python function directly to a PySpark DataFrame, where both the input and the output are Pandas instances. In Spark 3.0 the supported Pandas Function APIs are grouped map, map, and co-grouped map.

- 77. Querying with the Spark SQL shell and Beeline
  • Using the Spark SQL shell
    • (…)
    • The Spark SQL CLI communicates with the Hive metastore service in local mode; it does not talk to the Thrift JDBC/ODBC server (a.k.a. Spark Thrift Server, or STS).
    • STS lets JDBC/ODBC clients execute SQL queries on Apache Spark over the JDBC and ODBC protocols.
    • Start the Spark SQL CLI:
        ./bin/spark-sql
    • Create a table:
        spark-sql> CREATE TABLE people (name STRING, age INT);
    • Insert data into the table (general form: INSERT INTO people SELECT name, age FROM ...):
        spark-sql> INSERT INTO people VALUES ("Michael", NULL);
        Time taken: 1.696 seconds
    • Run Spark SQL queries:
        spark-sql> SHOW TABLES;
        spark-sql> SELECT * FROM people WHERE age < 20;

- 78. Using Beeline
  • (…)
  • Beeline is a JDBC client based on the SQLLine CLI.
  • You can use it to execute Spark SQL queries against the Spark Thrift server.
  • Start the Thrift server (if the Spark driver and workers are not already running, start them with start-all.sh):
      ./sbin/start-thriftserver.sh
      ./sbin/start-all.sh
  • Connect to the Thrift server via Beeline:
      ./bin/beeline
      !connect jdbc:hive2://localhost:10000
  • Execute a Spark SQL query with Beeline:
      0: jdbc:hive2://localhost:10000> SHOW tables;
  • Stop the Thrift server:
      ./sbin/stop-thriftserver.sh

- 79. External Data Sources
  • JDBC and SQL databases
    • Specify the JDBC driver for your JDBC data source and make it available on the Spark classpath:
        ./bin/spark-shell --driver-class-path $database.jar --jars $database.jar

  | Property name | Description |
  |---|---|
  | user, password | Normally provided as connection properties for logging into the data source. |
  | url | JDBC connection URL, e.g., jdbc:postgresql://localhost/test?user=fred&password=secret. |
  | dbtable | JDBC table to read from or write to. You can't specify the dbtable and query options at the same time. |
  | query | Query used to read data into Apache Spark, e.g., SELECT column1, column2, ..., columnN FROM [table or subquery]. You can't specify the query and dbtable options at the same time. |
  | driver | Class name of the JDBC driver used to connect to the specified URL. |

- 80. The importance of partitioning
  • When transferring large amounts of data between Spark SQL and an external JDBC source, partition the data source.

  | Property name | Description |
  |---|---|
  | numPartitions | The maximum number of partitions that can be used for parallelism in table reading and writing. This also determines the maximum number of concurrent JDBC connections. |
  | partitionColumn | When reading an external source, the column used to determine the partitions. It must be a numeric, date, or timestamp column. |
  | lowerBound | Sets the minimum value of partitionColumn for the partition stride. |
  | upperBound | Sets the maximum value of partitionColumn for the partition stride. |

  • Example settings:
      numPartitions: 10
      lowerBound: 1000
      upperBound: 10000
  • Conceptually, the read is then split into per-partition queries such as:
      SELECT * FROM table WHERE partitionColumn BETWEEN 1000 and 2000
      SELECT * FROM table WHERE partitionColumn BETWEEN 2000 and 3000
      ...
      SELECT * FROM table WHERE partitionColumn BETWEEN 9000 and 10000
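The stride idea above can be sketched in plain Python. This is a simplified illustration, not Spark's actual implementation: the helper name `partition_predicates` and the even integer stride are assumptions made for the example. It uses half-open ranges and leaves the first and last partitions open-ended, reflecting the documented behavior that lowerBound and upperBound only decide the stride and do not filter rows.

```python
def partition_predicates(column, lower_bound, upper_bound, num_partitions):
    """Build one WHERE predicate per partition (hypothetical helper, simplified)."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    if lower_bound >= upper_bound:
        raise ValueError("lower_bound must be smaller than upper_bound")
    if num_partitions == 1:
        return ["1 = 1"]  # a single partition reads the whole table

    # Assumption: an even integer stride; real Spark computes this more carefully.
    stride = max((upper_bound - lower_bound) // num_partitions, 1)

    predicates = []
    start = lower_bound
    for i in range(num_partitions):
        end = start + stride
        if i == 0:
            # First partition is open below and also picks up NULL keys.
            predicates.append(f"{column} < {end} OR {column} IS NULL")
        elif i == num_partitions - 1:
            # Last partition is open above, so no rows are dropped.
            predicates.append(f"{column} >= {start}")
        else:
            predicates.append(f"{column} >= {start} AND {column} < {end}")
        start = end
    return predicates


preds = partition_predicates("partitionColumn", 1000, 10000, 10)
for p in preds:
    print("SELECT * FROM table WHERE " + p)
```

With the slide's settings this yields ten non-overlapping predicates with a stride of 900, so the exact boundaries differ from the rounded BETWEEN ranges shown on the slide. A badly skewed partitionColumn would still produce unevenly sized partitions.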

- 81. Other External Sources
  • PostgreSQL
  • MySQL
  • Azure Cosmos DB
  • MS SQL Server

- 82. Higher-Order Functions in DataFrames and Spark SQL
  • Two typical solutions for manipulating complex data types:
    • (i) Explode the nested structure into individual rows, apply a function, then re-create the nested structure.
    • (ii) Build a user-defined function.
    • Both rely on built-in utilities such as get_json_object(), from_json(), to_json(), explode(), and selectExpr().
  • Option 1: Explode and Collect
      -- In SQL
      SELECT id, collect_list(value + 1) AS values
      FROM (SELECT id, EXPLODE(values) AS value
            FROM table) x
      GROUP BY id
  • Option 2: User-Defined Function
      // In Scala
      def addOne(values: Seq[Int]): Seq[Int] = {
        values.map(value => value + 1)
      }
      val plusOneInt = spark.udf.register("plusOneInt", addOne(_: Seq[Int]): Seq[Int])
    • Then use this UDF in Spark SQL:
      spark.sql("SELECT id, plusOneInt(values) AS values FROM table").show()
  • Trade-offs: with a UDF, the serialization and deserialization process itself may be expensive. However, collect_list() may cause executors to run out of memory on large data sets, whereas a UDF alleviates that issue.

- 83. Built-in functions for complex data types
  • Array type functions

  | Function/Description | Query | Output |
  |---|---|---|
  | array_distinct(array<T>): array<T> | SELECT array_distinct(array(1, 2, 3, null, 3)); | [1,2,3,null] |
  | array_intersect(array<T>, array<T>): array<T> | SELECT array_intersect(array(1, 2, 3), array(1, 3, 5)); | [1,3] |
  | array_union(array<T>, array<T>): array<T> | SELECT array_union(array(1, 2, 3), array(1, 3, 5)); | [1,2,3,5] |
  | array_except(array<T>, array<T>): array<T> | SELECT array_except(array(1, 2, 3), array(1, 3, 5)); | [2] |
  | array_join(array<String>, String[, String]): String | SELECT array_join(array('hello', 'world'), ' '); | hello world |
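To make the semantics of these array functions concrete, here is a plain-Python emulation (a sketch only; these helper functions are written for illustration and are not part of Spark or PySpark). The element order of the results is an assumption that matches the outputs in the table above.

```python
def array_distinct(xs):
    """Remove duplicates, keeping the first occurrence (None counts as a value)."""
    seen = []
    for x in xs:
        if x not in seen:
            seen.append(x)
    return seen


def array_intersect(a, b):
    """Distinct elements of a that also appear in b."""
    return [x for x in array_distinct(a) if x in b]


def array_union(a, b):
    """Distinct elements of a followed by the new elements of b."""
    return array_distinct(list(a) + list(b))


def array_except(a, b):
    """Distinct elements of a that do not appear in b."""
    return [x for x in array_distinct(a) if x not in b]


def array_join(xs, delimiter, null_replacement=None):
    """Concatenate elements; None is skipped unless a replacement is given."""
    parts = []
    for x in xs:
        if x is None:
            if null_replacement is not None:
                parts.append(null_replacement)
        else:
            parts.append(str(x))
    return delimiter.join(parts)


print(array_distinct([1, 2, 3, None, 3]))
print(array_intersect([1, 2, 3], [1, 3, 5]))
print(array_union([1, 2, 3], [1, 3, 5]))
print(array_except([1, 2, 3], [1, 3, 5]))
print(array_join(["hello", "world"], " "))
```

In Spark these run as native expressions on array columns, so no Python round trip is involved; the emulation is only meant to show what each function returns.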

- 84. Built-in functions for complex data types (continued)
  • Map functions

  | Function/Description | Query | Output |
  |---|---|---|
  | map_from_arrays(array<K>, array<V>): map<K, V> | SELECT map_from_arrays(array(1.0, 3.0), array('2', '4')); | {"1.0":"2", "3.0":"4"} |
  | map_from_entries(array<struct<K, V>>): map<K, V> | SELECT map_from_entries(array(struct(1, 'a'), struct(2, 'b'))); | {"1":"a", "2":"b"} |
  | map_concat(map<K, V>, ...): map<K, V> | SELECT map_concat(map(1, 'a', 2, 'b'), map(2, 'c', 3, 'd')); | {"1":"a", "2":"c", "3":"d"} |
  | element_at(map<K, V>, K): V | SELECT element_at(map(1, 'a', 2, 'b'), 2); | b |
  | cardinality(map<K, V>): Int | SELECT cardinality(map(1, 'a', 2, 'b')); | 2 |

  • Note: the map_concat output above keeps the last value for the duplicate key 2. In Spark 3.0 and later, duplicate map keys raise an error by default unless spark.sql.mapKeyDedupPolicy is set to LAST_WIN.

- 85. Higher-Order Functions
  • (…)
  • Besides the built-in functions, there are higher-order functions that take lambda expressions.
  • Example:
      -- In SQL
      transform(values, value -> lambda expression)

      # In Python
      from pyspark.sql.types import *
      schema = StructType([StructField("celsius", ArrayType(IntegerType()))])
      t_list = [[35, 36, 32, 30, 40, 42, 38]], [[31, 32, 34, 55, 56]]
      t_c = spark.createDataFrame(t_list, schema)
      t_c.createOrReplaceTempView("tC")

      # Show the DataFrame
      t_c.show()

      // In Scala
      // Create DataFrame with two rows of two arrays (tempc1, tempc2)
      val t1 = Array(35, 36, 32, 30, 40, 42, 38)
      val t2 = Array(31, 32, 34, 55, 56)
      val tC = Seq(t1, t2).toDF("celsius")
      tC.createOrReplaceTempView("tC")

      // Show the DataFrame
      tC.show()

      +--------------------+
      |             celsius|
      +--------------------+
      |[35, 36, 32, 30, ...|
      |[31, 32, 34, 55, 56]|
      +--------------------+

- 86. transform() and filter()
  • transform(): transform(array<T>, function<T, U>): array<U>
  • filter(): filter(array<T>, function<T, Boolean>): array<T>
      // In Scala/Python
      // Calculate Fahrenheit from Celsius for an array of temperatures
      spark.sql("""
      SELECT celsius,
             transform(celsius, t -> ((t * 9) div 5) + 32) as fahrenheit
      FROM tC
      """).show()

      +--------------------+--------------------+
      |             celsius|          fahrenheit|
      +--------------------+--------------------+
      |[35, 36, 32, 30, ...|[95, 96, 89, 86, ...|
      |[31, 32, 34, 55, 56]|[87, 89, 93, 131,...|
      +--------------------+--------------------+

      // In Scala/Python
      // Filter temperatures > 38C for array of temperatures
      spark.sql("""
      SELECT celsius,
             filter(celsius, t -> t > 38) as high
      FROM tC
      """).show()

      +--------------------+--------+
      |             celsius|    high|
      +--------------------+--------+
      |[35, 36, 32, 30, ...|[40, 42]|
      |[31, 32, 34, 55, 56]|[55, 56]|
      +--------------------+--------+

- 87. exists() and reduce()
  • exists(): exists(array<T>, function<T, Boolean>): Boolean
  • reduce(): reduce(array<T>, B, function<B, T, B>, function<B, R>)
    • Note: Apache Spark exposes this operation as aggregate(); depending on the Spark version, reduce() may not be available as an alias.
      // In Scala/Python
      // Is there a temperature of 38C in the array of temperatures
      spark.sql("""
      SELECT celsius,
             exists(celsius, t -> t = 38) as threshold
      FROM tC
      """).show()

      +--------------------+---------+
      |             celsius|threshold|
      +--------------------+---------+
      |[35, 36, 32, 30, ...|     true|
      |[31, 32, 34, 55, 56]|    false|
      +--------------------+---------+

      // In Scala/Python
      // Calculate average temperature and convert to F
      spark.sql("""
      SELECT celsius,
             reduce(
               celsius,
               0,
               (acc, t) -> acc + t,
               acc -> (acc div size(celsius) * 9 div 5) + 32
             ) as avgFahrenheit
      FROM tC
      """).show()

      +--------------------+-------------+
      |             celsius|avgFahrenheit|
      +--------------------+-------------+
      |[35, 36, 32, 30, ...|           96|
      |[31, 32, 34, 55, 56]|          105|
      +--------------------+-------------+
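The four higher-order functions can be cross-checked against their outputs with a small plain-Python emulation. This is a sketch for intuition only: the function names are made up for the example, and Python's `//` stands in for SQL's `div`, which is equivalent here because all values are non-negative.

```python
from functools import reduce

# The two rows of the tC view from the slides.
celsius_rows = [[35, 36, 32, 30, 40, 42, 38], [31, 32, 34, 55, 56]]


def to_fahrenheit(temps):
    """transform(celsius, t -> ((t * 9) div 5) + 32)"""
    return [(t * 9) // 5 + 32 for t in temps]


def high(temps):
    """filter(celsius, t -> t > 38)"""
    return [t for t in temps if t > 38]


def has_38(temps):
    """exists(celsius, t -> t = 38)"""
    return any(t == 38 for t in temps)


def avg_fahrenheit(temps):
    """reduce(celsius, 0, merge, finish) with integer division throughout."""
    total = reduce(lambda acc, t: acc + t, temps, 0)   # merge step
    return (total // len(temps) * 9 // 5) + 32         # finish step


for row in celsius_rows:
    print(to_fahrenheit(row), high(row), has_38(row), avg_fahrenheit(row))
```

Because every division is integral, the average is truncated before the Fahrenheit conversion, which is why the first row gives 96 rather than a fractional value.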

- 88. Common DataFrame and Spark SQL Operations
  • (…)
  • Aggregate functions
  • Collection functions
  • Datetime functions
  • Math functions
  • Miscellaneous functions
  • Non-aggregate functions
  • Sorting functions
  • String functions
  • UDF functions
  • Window functions
  • For the full list, see the Spark SQL documentation.
  • Creating data for the code exercises ((code))

- 89. Preparing the data (Scala)
      // In Scala
      import org.apache.spark.sql.functions._

      // Set file paths
      val delaysPath = "/databricks-datasets/learning-spark-v2/flights/departuredelays.csv"
      val airportsPath = "/databricks-datasets/learning-spark-v2/flights/airport-codes-na.txt"

      // Obtain airports data set (tab-delimited)
      val airports = spark.read
        .option("header", "true")
        .option("inferSchema", "true")
        .option("delimiter", "\t")
        .csv(airportsPath)
      airports.createOrReplaceTempView("airports_na")

      // Obtain departure Delays data set
      val delays = spark.read
        .option("header", "true")
        .csv(delaysPath)
        .withColumn("delay", expr("CAST(delay as INT) as delay"))
        .withColumn("distance", expr("CAST(distance as INT) as distance"))
      delays.createOrReplaceTempView("departureDelays")

      // Create temporary small table
      val foo = delays.filter(
        expr("""origin == 'SEA' AND destination == 'SFO' AND
                date like '01010%' AND delay > 0"""))
      foo.createOrReplaceTempView("foo")

- 90. Preparing the data (Python)
      # In Python
      # Set file paths
      from pyspark.sql.functions import expr
      tripdelaysFilePath = "/databricks-datasets/learning-spark-v2/flights/departuredelays.csv"
      airportsnaFilePath = "/databricks-datasets/learning-spark-v2/flights/airport-codes-na.txt"

      # Obtain airports data set (tab-delimited)
      airportsna = (spark.read
        .format("csv")
        .options(header="true", inferSchema="true", sep="\t")
        .load(airportsnaFilePath))
      airportsna.createOrReplaceTempView("airports_na")

      # Obtain departure delays data set
      departureDelays = (spark.read
        .format("csv")
        .options(header="true")
        .load(tripdelaysFilePath))
      departureDelays = (departureDelays
        .withColumn("delay", expr("CAST(delay as INT) as delay"))
        .withColumn("distance", expr("CAST(distance as INT) as distance")))
      departureDelays.createOrReplaceTempView("departureDelays")

      # Create temporary small table
      foo = (departureDelays
        .filter(expr("""origin == 'SEA' and destination == 'SFO' and
                        date like '01010%' and delay > 0""")))
      foo.createOrReplaceTempView("foo")

- 91. Inspecting the three views
      // Scala/Python
      spark.sql("SELECT * FROM airports_na LIMIT 10").show()

      +-----------+-----+-------+----+
      |       City|State|Country|IATA|
      +-----------+-----+-------+----+
      | Abbotsford|   BC| Canada| YXX|
      |   Aberdeen|   SD|    USA| ABR|
      …
      | Alexandria|   LA|    USA| AEX|
      |  Allentown|   PA|    USA| ABE|
      +-----------+-----+-------+----+

      spark.sql("SELECT * FROM departureDelays LIMIT 10").show()

      +--------+-----+--------+------+-----------+
      |    date|delay|distance|origin|destination|
      +--------+-----+--------+------+-----------+
      |01011245|    6|     602|   ABE|        ATL|
      |01020600|   -8|     369|   ABE|        DTW|
      …
      |01051245|   88|     602|   ABE|        ATL|
      |01050605|    9|     602|   ABE|        ATL|
      +--------+-----+--------+------+-----------+

      spark.sql("SELECT * FROM foo").show()

      +--------+-----+--------+------+-----------+
      |    date|delay|distance|origin|destination|
      +--------+-----+--------+------+-----------+
      |01010710|   31|     590|   SEA|        SFO|
      |01010955|  104|     590|   SEA|        SFO|
      |01010730|    5|     590|   SEA|        SFO|
      +--------+-----+--------+------+-----------+

- 92. Unions, Joins, Windowing, Modifications
  • Unions
    • union() keeps duplicate rows (it behaves like SQL UNION ALL), so the three rows of foo appear twice in the result below.
      // Scala
      // Union two tables
      val bar = delays.union(foo)
      bar.createOrReplaceTempView("bar")
      bar.filter(expr("""origin == 'SEA' AND destination == 'SFO'
        AND date LIKE '01010%' AND delay > 0""")).show()

      # In Python
      # Union two tables
      bar = departureDelays.union(foo)
      bar.createOrReplaceTempView("bar")

      # Show the union (filtering for SEA and SFO in a specific time range)
      bar.filter(expr("""origin == 'SEA' AND destination == 'SFO'
        AND date LIKE '01010%' AND delay > 0""")).show()

      -- In SQL
      spark.sql("""
      SELECT *
      FROM bar
      WHERE origin = 'SEA'
        AND destination = 'SFO'
        AND date LIKE '01010%'
        AND delay > 0
      """).show()

      +--------+-----+--------+------+-----------+
      |    date|delay|distance|origin|destination|
      +--------+-----+--------+------+-----------+
      |01010710|   31|     590|   SEA|        SFO|
      |01010955|  104|     590|   SEA|        SFO|
      |01010730|    5|     590|   SEA|        SFO|
      |01010710|   31|     590|   SEA|        SFO|
      |01010955|  104|     590|   SEA|        SFO|
      |01010730|    5|     590|   SEA|        SFO|
      +--------+-----+--------+------+-----------+

- 93. Joins
  • Join types: inner (default), cross, outer, full, full_outer, left, left_outer, right, right_outer, left_semi, and left_anti.
  • More in the documentation.
      // In Scala
      foo.join(
        airports.as('air),
        $"air.IATA" === $"origin"
      ).select("City", "State", "date", "delay", "distance", "destination").show()

      # In Python
      # Join departure delays data (foo) with airport info
      foo.join(
        airportsna,
        airportsna.IATA == foo.origin
      ).select("City", "State", "date", "delay", "distance", "destination").show()

      -- In SQL
      spark.sql("""
      SELECT a.City, a.State, f.date, f.delay, f.distance, f.destination
      FROM foo f
      JOIN airports_na a
        ON a.IATA = f.origin
      """).show()

      +-------+-----+--------+-----+--------+-----------+
      |   City|State|    date|delay|distance|destination|
      +-------+-----+--------+-----+--------+-----------+
      |Seattle|   WA|01010710|   31|     590|        SFO|
      |Seattle|   WA|01010955|  104|     590|        SFO|
      |Seattle|   WA|01010730|    5|     590|        SFO|
      +-------+-----+--------+-----+--------+-----------+

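- 94. Windowing
  • A window function uses values from the rows in a window (a range of input rows) to return a set of values, typically in the form of another row.
  • This makes it possible to operate on a group of rows while still returning a single value for every input row.
  • This section shows how to use the dense_rank() window function; there are many other functions:

  | Category | SQL | DataFrame API |
  |---|---|---|
  | Ranking functions | rank() | rank() |
  | | dense_rank() | denseRank() |
  | | percent_rank() | percentRank() |
  | | ntile() | ntile() |
  | | row_number() | rowNumber() |
  | Analytic functions | cume_dist() | cumeDist() |
  | | first_value() | firstValue() |
  | | last_value() | lastValue() |
  | | lag() | lag() |
  | | lead() | lead() |

  • Note: the camelCase DataFrame names come from the Spark 1.x API. In Spark 2.0 and later the DataFrame functions use the same snake_case names as SQL, e.g. dense_rank(), percent_rank(), row_number(), and cume_dist().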

- 95. Windowing: preparing the departureDelaysWindow table
      -- In SQL
      DROP TABLE IF EXISTS departureDelaysWindow;

      CREATE TABLE departureDelaysWindow AS
      SELECT origin, destination, SUM(delay) AS TotalDelays
      FROM departureDelays
      WHERE origin IN ('SEA', 'SFO', 'JFK')
        AND destination IN ('SEA', 'SFO', 'JFK', 'DEN', 'ORD', 'LAX', 'ATL')
      GROUP BY origin, destination;

      SELECT * FROM departureDelaysWindow

      +------+-----------+-----------+
      |origin|destination|TotalDelays|
      +------+-----------+-----------+
      |   JFK|        ORD|       5608|
      |   SEA|        LAX|       9359|
      |   JFK|        SFO|      35619|
      |   SFO|        ORD|      27412|
      …
      |   JFK|        SEA|       7856|
      |   JFK|        LAX|      35755|
      |   SFO|        JFK|      24100|
      |   SFO|        LAX|      40798|
      |   SEA|        JFK|       4667|
      +------+-----------+-----------+

- 96. Windowing: top three destinations per origin
  • Goal: for each origin airport, find the three destinations that experienced the most delays.
  • A first attempt runs one query per origin, replacing [ORIGIN] each time:
      -- In SQL
      SELECT origin, destination, SUM(TotalDelays) AS TotalDelays
      FROM departureDelaysWindow
      WHERE origin = '[ORIGIN]'
      GROUP BY origin, destination
      ORDER BY SUM(TotalDelays) DESC
      LIMIT 3
  • A better approach uses the dense_rank() window function to answer the question for all origins in one query:
      -- In SQL
      spark.sql("""
      SELECT origin, destination, TotalDelays, rank
      FROM (
        SELECT origin, destination, TotalDelays,
               dense_rank() OVER (PARTITION BY origin ORDER BY TotalDelays DESC) as rank
        FROM departureDelaysWindow
      ) t
      WHERE rank <= 3
      """).show()

      +------+-----------+-----------+----+
      |origin|destination|TotalDelays|rank|
      +------+-----------+-----------+----+
      |   SEA|        SFO|      22293|   1|
      |   SEA|        DEN|      13645|   2|
      |   SEA|        ORD|      10041|   3|
      |   SFO|        LAX|      40798|   1|
      |   SFO|        ORD|      27412|   2|
      |   SFO|        JFK|      24100|   3|
      |   JFK|        LAX|      35755|   1|
      |   JFK|        SFO|      35619|   2|
      |   JFK|        ATL|      12141|   3|
      +------+-----------+-----------+----+
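What dense_rank() OVER (PARTITION BY origin ORDER BY TotalDelays DESC) computes can be sketched in plain Python. This is an emulation for intuition only, not how Spark executes the query; the function name `top_n_per_origin` is made up for the example, and the rows are only those totals visible on the slides.

```python
from itertools import groupby
from operator import itemgetter

# (origin, destination, TotalDelays) rows that appear on the slides.
rows = [
    ("JFK", "ORD", 5608), ("SEA", "LAX", 9359), ("JFK", "SFO", 35619),
    ("SFO", "ORD", 27412), ("JFK", "SEA", 7856), ("JFK", "LAX", 35755),
    ("SFO", "JFK", 24100), ("SFO", "LAX", 40798), ("SEA", "JFK", 4667),
    ("SEA", "SFO", 22293), ("SEA", "DEN", 13645), ("SEA", "ORD", 10041),
    ("JFK", "ATL", 12141),
]


def top_n_per_origin(rows, n=3):
    """Dense-rank destinations within each origin and keep ranks <= n."""
    result = []
    # PARTITION BY origin, ORDER BY TotalDelays DESC
    ordered = sorted(rows, key=lambda r: (r[0], -r[2]))
    for origin, group in groupby(ordered, key=itemgetter(0)):
        rank = 0
        previous_total = None
        for _, destination, total in group:
            # Dense rank: ties share a rank and the next rank is not skipped.
            if total != previous_total:
                rank += 1
                previous_total = total
            if rank <= n:
                result.append((origin, destination, total, rank))
    return result


for row in top_n_per_origin(rows):
    print(row)
```

The difference from rank() only shows up with ties: after two rows tied at rank 1, dense_rank() continues with 2, whereas rank() would continue with 3.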

- 97. Modifications
  • Adding new columns
      // In Scala/Python
      foo.show()

      +--------+-----+--------+------+-----------+
      |    date|delay|distance|origin|destination|
      +--------+-----+--------+------+-----------+
      |01010710|   31|     590|   SEA|        SFO|
      |01010955|  104|     590|   SEA|        SFO|
      |01010730|    5|     590|   SEA|        SFO|
      +--------+-----+--------+------+-----------+

      // In Scala
      import org.apache.spark.sql.functions.expr
      val foo2 = foo.withColumn(
        "status",
        expr("CASE WHEN delay <= 10 THEN 'On-time' ELSE 'Delayed' END")
      )

      # In Python
      from pyspark.sql.functions import expr
      foo2 = (foo.withColumn(
        "status",
        expr("CASE WHEN delay <= 10 THEN 'On-time' ELSE 'Delayed' END")
      ))

      // In Scala/Python
      foo2.show()

      +--------+-----+--------+------+-----------+-------+
      |    date|delay|distance|origin|destination| status|
      +--------+-----+--------+------+-----------+-------+
      |01010710|   31|     590|   SEA|        SFO|Delayed|
      |01010955|  104|     590|   SEA|        SFO|Delayed|
      |01010730|    5|     590|   SEA|        SFO|On-time|
      +--------+-----+--------+------+-----------+-------+

- 98. Dropping and renaming columns
  • Dropping columns
      // In Scala
      val foo3 = foo2.drop("delay")
      foo3.show()

      # In Python
      foo3 = foo2.drop("delay")
      foo3.show()

      +--------+--------+------+-----------+-------+
      |    date|distance|origin|destination| status|
      +--------+--------+------+-----------+-------+
      |01010710|     590|   SEA|        SFO|Delayed|
      |01010955|     590|   SEA|        SFO|Delayed|
      |01010730|     590|   SEA|        SFO|On-time|
      +--------+--------+------+-----------+-------+
  • Renaming columns
      // In Scala
      val foo4 = foo3.withColumnRenamed("status", "flight_status")
      foo4.show()

      # In Python
      foo4 = foo3.withColumnRenamed("status", "flight_status")
      foo4.show()

      +--------+--------+------+-----------+-------------+
      |    date|distance|origin|destination|flight_status|
      +--------+--------+------+-----------+-------------+
      |01010710|     590|   SEA|        SFO|      Delayed|
      |01010955|     590|   SEA|        SFO|      Delayed|
      |01010730|     590|   SEA|        SFO|      On-time|
      +--------+--------+------+-----------+-------------+

- 99. Pivoting
      -- In SQL
      SELECT destination, CAST(SUBSTRING(date, 0, 2) AS int) AS month, delay
      FROM departureDelays
      WHERE origin = 'SEA'

      +-----------+-----+-----+
      |destination|month|delay|
      +-----------+-----+-----+
      |        ORD|    1|   92|
      |        JFK|    1|   -7|
      …
      |        DFW|    1|   -2|
      |        ORD|    1|   -3|
      +-----------+-----+-----+
      only showing top 10 rows

- 100. Pivoting (continued)
  • Pivoting lets you place names in the month column (Jan and Feb instead of 1 and 2) and perform aggregate calculations (here, average and maximum) on the delays by destination and month:
      -- In SQL
      SELECT * FROM (
        SELECT destination, CAST(SUBSTRING(date, 0, 2) AS int) AS month, delay
        FROM departureDelays
        WHERE origin = 'SEA'
      )
      PIVOT (
        CAST(AVG(delay) AS DECIMAL(4, 2)) AS AvgDelay,
        MAX(delay) AS MaxDelay
        FOR month IN (1 JAN, 2 FEB)
      )
      ORDER BY destination

      +-----------+------------+------------+------------+------------+
      |destination|JAN_AvgDelay|JAN_MaxDelay|FEB_AvgDelay|FEB_MaxDelay|
      +-----------+------------+------------+------------+------------+
      |        ABQ|       19.86|         316|       11.42|          69|
      |        ANC|        4.44|         149|        7.90|         141|
      …
      |        GEG|        2.28|          63|        2.87|          60|
      |        HDN|       -0.44|          27|       -6.50|           0|
      +-----------+------------+------------+------------+------------+
      only showing top 20 rows

- 101. Spark SQL (2) – Spark SQL & Dataset

- 102. Spark SQL and Datasets
  • Single API for Java and Scala
  • Scala Case Classes and JavaBeans for Datasets
  • Working with Datasets
  • Creating Sample Data
  • Transforming Sample Data
  • Higher-order functions and functional programming
  • Converting DataFrames to Datasets
  • Memory Management for Datasets and DataFrames
  • Dataset Encoders
  • Spark's Internal Format vs. Java Object Format
  • Serialization and Deserialization (SerDe)
  • Costs of Using Datasets
  • Strategies to Mitigate Costs

- 103. Single API for Java and Scala
  • Scala case classes and JavaBeans for Datasets
    • Spark has internal data types such as StringType, BinaryType, IntegerType, BooleanType, and MapType, which it maps seamlessly to the language-specific data types in Scala and Java during Spark operations. This mapping is done via encoders.
    • To create a Dataset[T], where T is a typed object in Scala, declare each field through a Scala case class (a blueprint or schema).
    • Sample JSON records:
      {id: 1, first: "Jules", last: "Damji", url: "https://tinyurl.1", date: "1/4/2016", hits: 4535, campaigns: {"twitter", "LinkedIn"}},
      ...
      {id: 87, first: "Brooke", last: "Wenig", url: "https://tinyurl.2", date: "5/5/2018", hits: 8908, campaigns: {"twitter", "LinkedIn"}}

      // In Scala
      case class Bloggers(id: Int, first: String, last: String, url: String,
                          date: String, hits: Int, campaigns: Array[String])

    • We can now read the file from the data source:
      val bloggers = "../data/bloggers.json"
      val bloggersDS = spark
        .read
        .format("json")
        .option("path", bloggers)
        .load()
        .as[Bloggers]

- 104. Creating a Dataset[Bloggers]
  • To create a distributed Dataset[Bloggers], define a Scala case class that declares each individual field of the Scala object. The Bloggers case class shown on the previous slide serves as the blueprint, or schema, for the typed object Bloggers.
  • Reading the JSON file with .as[Bloggers] then yields a distributed data collection in which each row is of type Bloggers.

- 105. JavaBeans for Datasets
  • Similarly, you can create a JavaBean class of type Bloggers in Java and then use encoders to create a Dataset<Bloggers>:
      // In Java
      import org.apache.spark.sql.Dataset;
      import org.apache.spark.sql.Encoder;
      import org.apache.spark.sql.Encoders;
      import java.io.Serializable;

      public class Bloggers implements Serializable {
          private int id;
          private String first;
          private String last;
          private String url;
          private String date;
          private int hits;
          private String[] campaigns;

          // JavaBean getters and setters (public so the bean encoder can find them)
          public int getID() { return id; }
          public void setID(int i) { id = i; }
          public String getFirst() { return first; }
          public void setFirst(String f) { first = f; }
          public String getLast() { return last; }
          public void setLast(String l) { last = l; }
          public String getURL() { return url; }
          public void setURL(String u) { url = u; }
          public String getDate() { return date; }
          public void setDate(String d) { date = d; }
          public int getHits() { return hits; }
          public void setHits(int h) { hits = h; }
          public String[] getCampaigns() { return campaigns; }
          public void setCampaigns(String[] c) { campaigns = c; }
      }

      // Create Encoder
      Encoder<Bloggers> bloggerEncoder = Encoders.bean(Bloggers.class);
      String bloggers = "../bloggers.json";
      Dataset<Bloggers> bloggersDS = spark
          .read()
          .format("json")
          .option("path", bloggers)
          .load()
          .as(bloggerEncoder);

- 106. Working with Datasets
  • Creating Sample Data
    • ((code in scala))
    • ((code in Java))
  • Transforming Sample Data
    • Transformations: map(), reduce(), filter(), select(), aggregate()
    • Higher-order functions can take lambdas, closures, or functions as arguments, which enables a functional programming style.
      // Create a Dataset of Usage typed data
      val dsUsage = spark.createDataset(data)
      dsUsage.show(10)

      +---+----------+-----+
      |uid|     uname|usage|
      +---+----------+-----+
      |  0|user-Gpi2C|  525|
      |  1|user-DgXDi|  502|
      |  2|user-M66yO|  170|
      |  3|user-xTOn6|  913|
      |  4|user-3xGSz|  246|
      |  5|user-2aWRN|  727|
      |  6|user-EzZY1|   65|
      |  7|user-ZlZMZ|  935|
      |  8|user-VjxeG|  756|
      |  9|user-iqf1P|    3|
      +---+----------+-----+
      only showing top 10 rows

- 107. Higher-order functions and functional programming
  • Example: filter()
      // In Scala
      import org.apache.spark.sql.functions._
      dsUsage
        .filter(d => d.usage > 900)
        .orderBy(desc("usage"))
        .show(5, false)

      // Another way: pass a named function
      def filterWithUsage(u: Usage) = u.usage > 900
      dsUsage.filter(filterWithUsage(_)).orderBy(desc("usage")).show(5)

      +---+----------+-----+
      |uid|     uname|usage|
      +---+----------+-----+
      |561|user-5n2xY|  999|
      |113|user-nnAXr|  999|
      |605|user-NL6c4|  999|
      |634|user-L0wci|  999|
      |805|user-LX27o|  996|
      +---+----------+-----+
      only showing top 5 rows

- 108. filter() in Java
      // In Java
      // Define a Java filter function
      FilterFunction<Usage> f = new FilterFunction<Usage>() {
          public boolean call(Usage u) {
              return (u.usage > 900);
          }
      };

      // Use filter with our function and order the results in descending order
      dsUsage.filter(f).orderBy(col("usage").desc()).show(5);

      +---+----------+-----+
      |uid|uname     |usage|
      +---+----------+-----+
      |67 |user-qCGvZ|997  |
      |878|user-J2HUU|994  |
      |668|user-pz2Lk|992  |
      |750|user-0zWqR|991  |
      |242|user-g0kF6|989  |
      +---+----------+-----+
      only showing top 5 rows

- 109. Lambdas that return computed values
  • Lambdas can return computed values too.
      // In Scala
      // Use an if-then-else lambda expression and compute a value
      dsUsage.map(u => { if (u.usage > 750) u.usage * .15 else u.usage * .50 })
        .show(5, false)

      // Define a function to compute the usage
      def computeCostUsage(usage: Int): Double = {
        if (usage > 750) usage * 0.15 else usage * 0.50
      }

      // Use the function as an argument to map()
      dsUsage.map(u => { computeCostUsage(u.usage) }).show(5, false)

      +------+
      |value |
      +------+
      |262.5 |
      |251.0 |
      |85.0  |
      |136.95|
      |123.0 |
      +------+
      only showing top 5 rows

- 110. map() in Java
  • To use map() in Java, define a MapFunction<T, U>.
  • This can be either an anonymous class or a defined class that implements MapFunction<T, U>.
      // In Java
      // Define an inline MapFunction
      dsUsage.map((MapFunction<Usage, Double>) u -> {
          if (u.usage > 750)
              return u.usage * 0.15;
          else
              return u.usage * 0.50;
      }, Encoders.DOUBLE()).show(5); // We need to explicitly specify the Encoder

      +------+
      |value |
      +------+
      |65.0  |
      |114.45|
      |124.0 |
      |132.6 |
      |145.5 |
      +------+
      only showing top 5 rows

- 111. Keeping the user with the computed value
  • The computed values alone do not show which users they belong to. To keep that association, return a richer object:
      // In Scala
      // Create a new case class with an additional field, cost
      case class UsageCost(uid: Int, uname: String, usage: Int, cost: Double)

      // Compute the usage cost with Usage as a parameter
      // Return a new object, UsageCost
      def computeUserCostUsage(u: Usage): UsageCost = {
        val v = if (u.usage > 750) u.usage * 0.15 else u.usage * 0.50
        UsageCost(u.uid, u.uname, u.usage, v)
      }

      // Use map() on our original Dataset
      dsUsage.map(u => { computeUserCostUsage(u) }).show(5)

      +---+----------+-----+------+
      |uid|     uname|usage|  cost|
      +---+----------+-----+------+
      |  0|user-Gpi2C|  525| 262.5|
      |  1|user-DgXDi|  502| 251.0|
      |  2|user-M66yO|  170|  85.0|
      |  3|user-xTOn6|  913|136.95|
      |  4|user-3xGSz|  246| 123.0|
      +---+----------+-----+------+
      only showing top 5 rows

- 112. Keeping the user with the computed value (Java)
      // In Java
      // Get the Encoder for the JavaBean class
      Encoder<UsageCost> usageCostEncoder = Encoders.bean(UsageCost.class);

      // Apply map() function to our data
      dsUsage.map((MapFunction<Usage, UsageCost>) u -> {
          double v = 0.0;
          if (u.usage > 750) v = u.usage * 0.15; else v = u.usage * 0.50;
          return new UsageCost(u.uid, u.uname, u.usage, v);
      }, usageCostEncoder).show(5);

      +------+---+----------+-----+
      |  cost|uid|     uname|usage|
      +------+---+----------+-----+
      |  65.0|  0|user-xSyzf|  130|
      |114.45|  1|user-iOI72|  763|
      | 124.0|  2|user-QHRUk|  248|
      | 132.6|  3|user-8GTjo|  884|
      | 145.5|  4|user-U4cU1|  970|
      +------+---+----------+-----+
      only showing top 5 rows
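The typed map from Usage to UsageCost can also be pictured without Spark. The following plain-Python sketch mirrors the Scala and Java versions with dataclasses; it is only an analogy, since Python has no Dataset API, and the two sample records are taken from the Scala output above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    uid: int
    uname: str
    usage: int


@dataclass(frozen=True)
class UsageCost:
    uid: int
    uname: str
    usage: int
    cost: float


def compute_user_cost_usage(u: Usage) -> UsageCost:
    """Heavy users (usage > 750) pay 0.15 per unit, everyone else 0.50."""
    rate = 0.15 if u.usage > 750 else 0.50
    return UsageCost(u.uid, u.uname, u.usage, u.usage * rate)


# Two records from the sample output shown on the slides.
sample = [Usage(0, "user-Gpi2C", 525), Usage(3, "user-xTOn6", 913)]
costs = list(map(compute_user_cost_usage, sample))

for c in costs:
    print(c.uid, c.uname, c.usage, round(c.cost, 2))
```

As in the Dataset version, returning a full record instead of a bare number keeps each cost attached to its user.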

- 113. Notes on using higher-order functions (HOFs) with Datasets
  • Spark provides equivalents of map() and filter() without HOFs, so you are not forced to use functional programming (FP) with Datasets or DataFrames. Instead, you can use conditional DSL operators or SQL expressions.
    • Example: dsUsage.filter("usage > 900") or dsUsage.filter($"usage" > 900).
  • For Datasets we use encoders, a mechanism to efficiently convert data between the JVM and Spark's internal binary format for its data types.
  • (Note) HOFs and FP are not unique to Datasets; you can use them with DataFrames too.
    • A DataFrame is a Dataset[Row], where Row is a generic untyped JVM object that can hold different types of fields. The method signatures take expressions or functions that operate on Row.
  • Converting DataFrames to Datasets
    • For strong type checking of queries and constructs, you can convert DataFrames to Datasets. To convert an existing DataFrame df to a Dataset of type SomeCaseClass, use df.as[SomeCaseClass]:
      // In Scala
      val bloggersDS = spark
        .read
        .format("json")
        .option("path", "/data/bloggers/bloggers.json")
        .load()
        .as[Bloggers]

- 114. Memory Management for Datasets and DataFrames
  • How Spark's memory management has evolved
    • Spark 1.0 used RDD-based Java objects for memory storage, serialization, and deserialization, which was slow and expensive in terms of resources. Storage was also allocated on the Java heap, so large data sets were at the mercy of the JVM's garbage collection (GC).
    • Spark 1.x introduced Project Tungsten.
      • It added a new internal row-based format that lays out Datasets and DataFrames in off-heap memory, using offsets and pointers. Spark uses an efficient mechanism called encoders to serialize and deserialize between the JVM and its internal Tungsten format.
      • Allocating memory off-heap means that Spark is less encumbered by GC.
    • Spark 2.x introduced the second-generation Tungsten engine, featuring whole-stage code generation and a vectorized column-based memory layout.
      • It also takes advantage of modern CPU and cache architectures for fast parallel data access with "single instruction, multiple data" (SIMD).

- 115. Dataset Encoders
  • Encoders
    • convert data in off-heap memory from Spark's internal Tungsten format to JVM Java objects.
    • That is, they serialize and deserialize Dataset objects between Spark's internal format and JVM objects, including primitive data types.
    • Example: an Encoder[T] converts from the internal Tungsten format to Dataset[T].
    • Spark can automatically generate encoders for primitive types, Scala case classes, and JavaBeans.
    • They are significantly faster than Java and Kryo serialization/deserialization.
    • In Java, you create the encoder explicitly:

      Encoder<UsageCost> usageCostEncoder = Encoders.bean(UsageCost.class);

    • For Scala, however, Spark automatically generates the bytecode for these efficient converters.
  • Spark's internal format vs. the Java object format
    • Java objects have large overheads: header info, hashcode, Unicode info, etc.
    • Instead of creating JVM-based objects for Datasets or DataFrames, Spark allocates off-heap Java memory to lay out their data and employs encoders to convert the data from its in-memory representation to JVM objects.

- 116. • Serialization and Deserialization (SerDe)
    • The JVM's built-in Java serializer/deserializer is slow, which is why Datasets rely on encoders instead.
    • Spark's Tungsten binary format stores objects off the Java heap, in a compact form.
    • Encoders can serialize quickly by traversing memory with simple pointer arithmetic.
    • On the receiving end, encoders quickly deserialize the binary representation into Spark's internal representation, unhindered by the JVM's GC pauses.

- 117. Costs of Using Datasets
  • Strategies to mitigate costs
    • Strategy 1
      • Use DSL expressions in queries, and avoid passing lambdas (anonymous functions) to higher-order functions more than necessary, to cut down on serialization and deserialization.
    • Strategy 2
      • Chain queries together so that deserialization is minimized.
      • Chaining queries together is a common practice in Spark.
  • Example: a Dataset of type Person, defined as a Scala case class:

      // In Scala
      case class Person(id: Integer, firstName: String, middleName: String, lastName: String,
        gender: String, birthDate: String, ssn: String, salary: String)

  • Queries using FP
    • Inefficient query, with repeated serialization and deserialization: ((code))
    • In contrast, the following query uses only the DSL (no lambdas), so the entire composed and chained query needs no serialization/deserialization:

      // Assumes import spark.implicits._ and import org.apache.spark.sql.functions.year,
      // and that earliestYear is defined beforehand (not shown on this slide).
      personDS
        .filter(year($"birthDate") > earliestYear) // Birth year later than earliestYear
        .filter($"salary" > 80000) // Everyone earning more than 80K
        .filter($"lastName".startsWith("J")) // Last name starts with J
        .filter($"firstName".startsWith("D")) // First name starts with D
        .count()

- 119. Optimizing and Tuning Spark
  • Using Apache Spark configuration
  • Scaling for large workloads
    • Static vs. dynamic resource allocation
    • Configuring Spark executors' memory and the shuffle service
    • Maximizing Spark parallelism
  • Caching and data persistence
    • DataFrame.cache()
    • DataFrame.persist()
    • When to and when not to cache and persist
  • Various Spark joins
    • Broadcast Hash Join
    • Shuffle Sort Merge Join
  • Inspecting the Spark UI

- 120. • Ways to read or set Spark configuration
    • (i) Through a set of configuration files
      • conf/spark-defaults.conf.template, conf/log4j.properties.template, and conf/spark-env.sh.template. (Change the defaults, then save the files without the .template suffix.)
      • (Note) Changes in the conf/spark-defaults.conf file apply to the Spark cluster and all Spark applications submitted to the cluster.
    • (ii) Specify them in the Spark application or on the command line when submitting
      • spark-submit --conf spark.sql.shuffle.partitions=5 --conf "spark.executor.memory=2g" --class main.scala.chapter7.SparkConfig_7_1 jars/main-scala-chapter7_2.12-1.0.jar
      • Example: in the Spark application itself ((code))
    • (iii) Through a programmatic interface via the Spark shell
      • Example: show the Spark configs on a local host where Spark is launched in local mode: ((code))
      • You can also view only the Spark SQL-specific Spark configs.
    • The current configuration is also visible in the Spark UI's Environment tab (Figure 7-1).
    • To set or modify an existing configuration programmatically, first check whether the property is modifiable: spark.conf.isModifiable("<config_name>")

- 121. • Scaling for large workloads
    • Static versus dynamic resource allocation
      • By default, spark.dynamicAllocation.enabled is set to false. To enable and configure dynamic allocation, use settings such as:

        spark.dynamicAllocation.enabled true
        spark.dynamicAllocation.minExecutors 2
        spark.dynamicAllocation.schedulerBacklogTimeout 1m
        spark.dynamicAllocation.maxExecutors 20
        spark.dynamicAllocation.executorIdleTimeout 2min

    • Configuring Spark executors' memory and the shuffle service

- 122.
  | Configuration | Default value, recommendation, and description |
  | --- | --- |
  | spark.driver.memory | Default = 1g (1 GB). The amount of memory allocated to the Spark driver to receive data from executors. |
  | spark.shuffle.file.buffer | Default = 32 KB. Recommended = 1 MB. |
  | spark.file.transferTo | Default = true. Setting it to false will force Spark to use the file buffer to transfer files before finally writing to disk; this will decrease the I/O activity. |
  | spark.shuffle.unsafe.file.output.buffer | Default = 32 KB. Controls the amount of buffering possible when merging files during shuffle operations. |
  | spark.io.compression.lz4.blockSize | Default = 32 KB. Increase to 512 KB. You can decrease the size of the shuffle file by increasing the compressed size of the block. |
  | spark.shuffle.service.index.cache.size | Default = 100m. Cache entries are limited to the specified memory footprint in bytes. |
  | spark.shuffle.registration.timeout | Default = 5000 ms. Increase to 120000 ms. |
  | spark.shuffle.registration.maxAttempts | Default = 3. Increase to 5 if needed. |

- 123. • Maximizing Spark parallelism
    • A partition is a way to arrange data into a subset of configurable and readable chunks or blocks of contiguous data on disk.
    • These subsets of data can be read or processed independently and in parallel, if necessary, by more than a single thread in a process.
    • Partitions are the atomic units of parallelism: a single thread running on a single core can work on a single partition.
    • The size of a partition is set by spark.sql.files.maxPartitionBytes (default: 128 MB).
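
To make the link between partition size and partition count concrete, here is a minimal sketch in plain Scala. It is not Spark's own logic: the names PartitionEstimate and estimatePartitions are hypothetical, and Spark's real file splitting also weighs other factors (for example spark.sql.files.openCostInBytes and the number of available cores), so the result is only a rough estimate.

```scala
// Hypothetical helper: rough estimate of how many read partitions a file yields.
object PartitionEstimate {
  // Default of spark.sql.files.maxPartitionBytes: 128 MB.
  val DefaultMaxPartitionBytes: Long = 128L * 1024 * 1024

  // Ceiling division: every started chunk of maxPartitionBytes needs its own partition.
  def estimatePartitions(totalBytes: Long,
                         maxPartitionBytes: Long = DefaultMaxPartitionBytes): Long = {
    require(totalBytes >= 0, "totalBytes must be non-negative")
    require(maxPartitionBytes > 0, "maxPartitionBytes must be positive")
    (totalBytes + maxPartitionBytes - 1) / maxPartitionBytes
  }
}
```

Under these assumptions a 1 GB file maps to 8 partitions, so up to 8 cores can each work on one partition at the same time.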

- 124. • Partitions are also created when you explicitly use certain methods of the DataFrame API.

      // In Scala (REPL output shown as comments)
      val ds = spark.read.textFile("../README.md").repartition(16)
      // ds: org.apache.spark.sql.Dataset[String] = [value: string]
      ds.rdd.getNumPartitions
      // res5: Int = 16

      val numDF = spark.range(1000L * 1000 * 1000).repartition(16)
      // numDF: org.apache.spark.sql.Dataset[Long] = [id: bigint]
      numDF.rdd.getNumPartitions
      // res12: Int = 16

  • Shuffle partitions are created during the shuffle stage. The default number of shuffle partitions is 200, set in spark.sql.shuffle.partitions, and it is adjustable.
  • Created during wide transformations such as groupBy() or join(), shuffle partitions consume both network and disk I/O resources. During these operations the shuffle spills results to the executors' local disks at the location given by spark.local.dir, so using SSDs for that location boosts performance.

- 125. Caching and Data Persistence
  • DataFrame.cache()
    • cache() will store as many of the partitions read in memory across Spark executors as memory allows.
    • While a DataFrame may be fractionally cached, partitions cannot be fractionally cached
      • (e.g., if you have 8 partitions but only 4.5 partitions can fit in memory, only 4 will be cached).
    • However, if not all your partitions are cached, then when you access the data again the partitions that are not cached have to be recomputed, slowing down your Spark job.

      // In Scala (REPL output shown as comments)
      // Create a DataFrame with 10M records
      val df = spark.range(1 * 10000000).toDF("id").withColumn("square", $"id" * $"id")
      df.cache() // Cache the data
      df.count() // Materialize the cache
      // res3: Long = 10000000
      // Command took 5.11 seconds
      df.count() // Now get it from the cache
      // res4: Long = 10000000
      // Command took 0.44 seconds

- 126. • DataFrame.persist()
    • persist(StorageLevel.LEVEL) is nuanced, providing control over how your data is cached via StorageLevel.
    • Data on disk is always serialized using either Java or Kryo serialization.

  | StorageLevel | Description |
  | --- | --- |
  | MEMORY_ONLY | Data is stored directly as objects and stored only in memory. |
  | MEMORY_ONLY_SER | Data is serialized as a compact byte array representation and stored only in memory. To use it, it has to be deserialized at a cost. |
  | MEMORY_AND_DISK | Data is stored directly as objects in memory, but if there's insufficient memory the rest is serialized and stored on disk. |
  | DISK_ONLY | Data is serialized and stored on disk. |
  | OFF_HEAP | Data is stored off-heap. Off-heap memory is used in Spark for storage and query execution. |
  | MEMORY_AND_DISK_SER | Like MEMORY_AND_DISK, but data is serialized when stored in memory. (Data is always serialized when stored on disk.) |

- 127. • Example: persisting a DataFrame with StorageLevel.DISK_ONLY

      // In Scala (REPL output shown as comments)
      import org.apache.spark.storage.StorageLevel
      // Create a DataFrame with 10M records
      val df = spark.range(1 * 10000000).toDF("id").withColumn("square", $"id" * $"id")
      df.persist(StorageLevel.DISK_ONLY) // Serialize the data and cache it on disk
      df.count() // Materialize the cache
      // res2: Long = 10000000
      // Command took 2.08 seconds
      df.count() // Now get it from the cache
      // res3: Long = 10000000
      // Command took 0.38 seconds

  • Not only can you cache DataFrames, you can also cache the tables or views derived from DataFrames. This gives them more readable names in the Spark UI.

      // In Scala
      df.createOrReplaceTempView("dfTable")
      spark.sql("CACHE TABLE dfTable")
      spark.sql("SELECT count(*) FROM dfTable").show()
      // +--------+
      // |count(1)|
      // +--------+
      // |10000000|
      // +--------+
      // Command took 0.56 seconds

- 128. • When to Cache and Persist
    • When you want to access a large data set repeatedly for queries or transformations. Examples include:
      • DataFrames commonly used during iterative ML training
      • DataFrames accessed commonly for doing frequent transformations during ETL or building data pipelines
  • When Not to Cache and Persist
    • DataFrames that are too big to fit in memory
    • An inexpensive transformation on a DataFrame not requiring frequent use, regardless of size
  • As a general rule, use memory caching judiciously, as it can incur resource costs in serializing and deserializing, depending on the StorageLevel used.

- 129. Various Spark Joins
  • Overview
    • As part of operations like groupBy(), join(), agg(), sortBy(), and reduceByKey(), Spark computes what data to produce, what keys and associated data to write to disk, and how to transfer those keys and data to nodes. This movement is the shuffle.
  • Broadcast Hash Join
    • Also known as a map-side-only join. This strategy avoids the large exchange.
    • Used when two data sets need to be joined over certain conditions or columns, one of them small (fitting in the driver's and executors' memory) and the other large enough that it should be spared from movement.
    • The smaller data set is broadcast by the driver to all Spark executors and subsequently joined with the larger data set on each executor.

- 130. • By default Spark will use a broadcast join if the smaller data set is less than 10 MB. This configuration is set in spark.sql.autoBroadcastJoinThreshold.

      // In Scala
      import org.apache.spark.sql.functions.broadcast
      // The join condition must be a Column expression, not a string
      val joinedDF = playersDF.join(broadcast(clubsDF), playersDF("key1") === clubsDF("key2"))

  • The BHJ is the easiest and fastest join, because it does not involve a shuffle; all data is available locally to the executor after the broadcast.
  • At any time after the operation, you can see in the physical plan which join operation was performed by executing joinedDF.explain(mode).
    • In Spark 3.0, mode is a string such as "simple", "extended", "codegen", "cost", or "formatted"; for example, joinedDF.explain("formatted") displays readable and digestible output.
  • When to use a broadcast hash join
    • When each key within the smaller and larger data sets is hashed to the same partition by Spark
    • When one data set is much smaller than the other (and within the default config of 10 MB, or more if you have sufficient memory)
    • When you only want to perform an equi-join, to combine two data sets based on matching unsorted keys
    • When you are not worried by excessive network bandwidth usage or OOM errors, because the smaller data set will be broadcast to all Spark executors
  • Specifying a value of -1 in spark.sql.autoBroadcastJoinThreshold will cause Spark to always resort to a shuffle sort merge join, which is covered next.
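
The mechanics of a broadcast hash join can be sketched in plain Scala, without Spark. This is only an illustration of the idea (hash the small side once, then probe it with every row of the large side), not Spark's implementation; the object name BroadcastHashJoinSketch and the sample players/clubs data are hypothetical.

```scala
// Hypothetical sketch of an inner equi-join done the "broadcast hash" way.
object BroadcastHashJoinSketch {
  def join[K, A, B](large: Seq[(K, A)], small: Seq[(K, B)]): Seq[(K, A, B)] = {
    // "Broadcast" step: build a hash table from the small side.
    // In Spark, every executor would receive its own copy of this table.
    val hashed: Map[K, Seq[B]] =
      small.groupBy(_._1).map { case (k, rows) => k -> rows.map(_._2) }

    // Probe step: each row of the large side looks up its key locally,
    // so the large side never has to be shuffled.
    for {
      (k, a) <- large
      b <- hashed.getOrElse(k, Seq.empty)
    } yield (k, a, b)
  }
}
```

For example, joining players `Seq((1, "Kim"), (2, "Lee"), (3, "Park"))` with clubs `Seq((1, "FC A"), (2, "FC B"))` keeps only the players whose key has a matching club. The cost of the approach is memory: the whole small side must fit on every executor, which is why Spark guards it with a size threshold.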

- 131. • Shuffle Sort Merge Join
    • Merges two large data sets over a common key that is sortable, unique, and can be assigned to or stored in the same partition; that is, two data sets with a common hashable key that end up on the same partition.
    • In other words, all rows within each data set with the same key are hashed to the same partition on the same executor. This means data has to be colocated or exchanged between executors.
    • Two phases (a sort phase followed by a merge phase):
      • The sort phase sorts each data set by its desired join key.
      • The merge phase iterates over each key in the rows from each data set and merges the rows if the two keys match.
    • By default, SortMergeJoin is enabled via spark.sql.join.preferSortMergeJoin.
    • The idea in the example is to take two large DataFrames, with one million records each, and join them on two common keys, uid == users_id.
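
The two phases can be illustrated with a small plain-Scala sketch. It runs on local collections rather than on Spark, it skips the shuffle that colocates matching keys, and the name SortMergeJoinSketch is hypothetical; it is meant only to show the sort phase followed by the merge phase.

```scala
// Hypothetical sketch of an inner sort-merge join on local collections.
object SortMergeJoinSketch {
  def join[K, A, B](left: Seq[(K, A)], right: Seq[(K, B)])
                   (implicit ord: Ordering[K]): Vector[(K, A, B)] = {
    // Sort phase: order both sides by the join key.
    val l = left.sortBy(_._1).toVector
    val r = right.sortBy(_._1).toVector

    // Merge phase: advance two cursors, emitting rows when the keys match.
    val out = Vector.newBuilder[(K, A, B)]
    var i = 0
    var j = 0
    while (i < l.length && j < r.length) {
      val cmp = ord.compare(l(i)._1, r(j)._1)
      if (cmp < 0) i += 1        // left key is smaller: skip it
      else if (cmp > 0) j += 1   // right key is smaller: skip it
      else {
        val key = l(i)._1
        // Find the run of rows sharing this key on each side.
        var iEnd = i
        while (iEnd < l.length && ord.equiv(l(iEnd)._1, key)) iEnd += 1
        var jEnd = j
        while (jEnd < r.length && ord.equiv(r(jEnd)._1, key)) jEnd += 1
        // Emit every pairing within the two runs.
        for (x <- i until iEnd; y <- j until jEnd) out += ((key, l(x)._2, r(y)._2))
        i = iEnd
        j = jEnd
      }
    }
    out.result()
  }
}
```

Because both inputs are sorted, each side is scanned only once during the merge, which is what makes the strategy suitable for two large data sets.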

- 132.
      // In Scala
      import scala.util.Random
      import spark.implicits._ // needed for toDF on local collections

      // Show preference over other joins for large data sets
      // Disable broadcast join
      spark.conf.set("spark.sql.autoBroadcastJoinThreshold", "-1")

      // Generate some sample data for two data sets
      val states = scala.collection.mutable.Map[Int, String]()
      val items = scala.collection.mutable.Map[Int, String]()
      val rnd = new Random(42)

      // Initialize states and items purchased
      states += (0 -> "AZ", 1 -> "CO", 2 -> "CA", 3 -> "TX", 4 -> "NY", 5 -> "MI")
      items += (0 -> "SKU-0", 1 -> "SKU-1", 2 -> "SKU-2", 3 -> "SKU-3", 4 -> "SKU-4", 5 -> "SKU-5")

      // Create DataFrames
      val usersDF = (0 to 1000000)
        .map(id => (id, s"user_${id}", s"user_${id}@databricks.com", states(rnd.nextInt(5))))
        .toDF("uid", "login", "email", "user_state")
      val ordersDF = (0 to 1000000)
        .map(r => (r, r, rnd.nextInt(10000), 10 * r * 0.2d, states(rnd.nextInt(5)), items(rnd.nextInt(5))))
        .toDF("transaction_id", "quantity", "users_id", "amount", "state", "items")

      // Do the join …