Swift: A parallel scripting language for applications at the petascale and beyond

- 1. Prof. N.B. Venkateswarlu, GVPCEW, Visakhapatnam

- 2. First, let me thank the organizers, especially Prof. MHM Krishna Prasad Garu (for whom I am an informal Ph.D. guide/mentor).

- 3. Outline • Introduction • Introduction to the Swift language (Swift/K and Swift/T) • Swift coding examples, illustrated. Note that Apple's Swift is a different language.

- 4. As the theme of the workshop is research guidance, I would like to recall my own experience while doing my Ph.D.

- 5. Community clusters: do you want to join me? This is not the place to say more; please get in touch with me by email.

- 6. Being an old CSE teacher, I used to ask students how computers were used during the 1970s and 1980s. Of course, today they are used mostly for entertainment. Would anyone like to share?

- 7. In the same spirit: how is high-performance computing becoming a real necessity today and in the coming years? One example: Weka.

- 8. Sunway TaihuLight: Sunway MPP, Sunway SW26010 260C 1.45 GHz, Sunway, 93 petaflops

- 10. The scientific computing campaign: THINK about what to run next • RUN a battery of tasks • COLLECT results • IMPROVE methods and codes. Swift addresses most of these components.

- 13. Swift: Swift is a dataflow-oriented, coarse-grained scripting language that supports dataset typing and mapping, dataset iteration, conditional branching, and procedural composition. Swift programs (or workflows) are written in the Swift language. Swift scripts are primarily concerned with processing (possibly large) collections of data files by invoking programs to do that processing. Swift handles the execution of such programs on remote sites: it chooses the sites, stages input and output files to and from the chosen sites, and executes the programs remotely.

- 14. • Swift is a parallel scripting language for multi-cores, clusters, grids, clouds, and supercomputers – for loosely coupled applications, i.e., applications linked by exchanging files – debug on a laptop, then run on a Cray • Swift scripts are easy to write – a simple, high-level functional language with C-like syntax – small Swift scripts can do large-scale work • Swift is easy to run – all services for running a workflow are contained in one Java application – simple command-line interface • Swift is fast – based on an efficient execution engine – scales readily to millions of tasks • Swift usage is growing – applications in neuroscience, proteomics, molecular dynamics, biochemistry, economics, statistics, earth systems science, and more.

- 15. Challenge: the complexity of parallel computing and the complex variety of computing resources (e.g., clouds: Magellan, Amazon, FutureGrid, BioNimbus) • Parallel distributed computing is HARD • Swift harnesses diverse resources with simple scripts • Many applications are well suited to this approach • Swift motivates collaboration through libraries of pipelines and sharing of data and provenance

- 16. When do you need Swift? Typical application: protein-ligand docking for drug screening. (Figure: O(10) proteins implicated in a disease (e.g., T1af7, T1r69, T1b72) × 2M+ ligands; 1M compute jobs; O(100K) drug candidates; tens of fruitful candidates for wet lab & APS.)

- 17. Solution: parallel scripting for high-level parallelism. Swift supports clusters, grids, and supercomputers. (Figure: a Java application on the submit host (laptop, Linux login node…) takes the script, a site list, and an app list. It runs apps a1 and a2 on compute nodes, transports files f1, f2, f3 to and from a data server, and records workflow status, logs, and a provenance log.)

- 18. Goals of the Swift language: Swift was designed to handle many aspects of the computing campaign (THINK, RUN, COLLECT, IMPROVE) • Ability to integrate many application components into a new workflow application • Data structures for complex data organization • Portability: separate site-specific configuration from application logic • Logging, provenance, and plotting features • Today, we will focus on supporting multi-language applications at large scale

- 19. A summary of Swift in a nutshell • Swift scripts are text files ending in `.swift`. The `swift` command runs on any host and executes these scripts. • `swift` is a Java application, which you can install almost anywhere. On Linux, just unpack the distribution tar file and add its `bin/` directory to your `PATH`. • Swift scripts run ordinary applications, just like shell scripts do. • Swift makes it easy to run these applications on parallel and remote computers (from laptops to supercomputers). If you can ssh to the system, Swift can likely run applications there. • The details of where to run applications and how to move files back and forth are described in configuration files separate from your program. Swift speaks ssh, PBS, Condor, SLURM, LSF, SGE, Cobalt, and Globus to run applications, and scp, http, ftp, and GridFTP to move data. • The Swift language has 5 main data types: boolean, int, string, float, and file. Collections of these are dynamic, sparse arrays of arbitrary dimension, and structures of scalars and/or arrays defined by the `type` declaration. • Swift file variables are "mapped" to external files. • Swift sends files to and from remote systems for you automatically. • Swift variables are "single assignment": once you set them, you cannot change them (in a given block of code). This makes Swift a natural "parallel dataflow" language. This programming model keeps your workflow scripts simple and easy to write and understand.

- 20. A summary of Swift in a nutshell (continued) • Swift lets you define functions to "wrap" application programs and to cleanly structure more complex scripts. Swift app functions take files and parameters as inputs and return files as outputs. • A compact set of built-in functions for string and file manipulation, type conversions, high-level I/O, etc. is provided. • Swift's equivalent of `printf()` is `tracef()`, with limited and slightly different format codes. • Swift's parallel `foreach {}` statement is the workhorse of the language and executes all iterations of the loop concurrently. The actual number of parallel tasks executed depends on available resources and settable "throttles". • Swift conceptually executes all the statements, expressions, and function calls in your program in parallel, based on dataflow. These are similarly throttled based on available resources and settings. • Swift also has `if` and `switch` statements for conditional execution. These are seldom needed in simple workflows, but they enable very dynamic workflow patterns to be specified.
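The single-assignment rule from the summary is what lets Swift run statements in dataflow order. A variable behaves like a write-once future: readers wait for it, and only one write is allowed. The Python class below is a minimal sketch of that behavior. It assumes threads stand in for Swift tasks; `SingleAssignment`, `set()`, and `get()` are hypothetical names, not part of Swift or its runtime.

```python
import threading


class SingleAssignment:
    """Hypothetical write-once variable: readers block until the single write."""

    def __init__(self, name):
        self.name = name
        self._value = None
        self._is_set = threading.Event()
        self._lock = threading.Lock()

    def set(self, value):
        with self._lock:
            if self._is_set.is_set():
                raise RuntimeError(f"{self.name} is already assigned")
            self._value = value
            self._is_set.set()  # wake every reader waiting in get()

    def get(self, timeout=None):
        # Dataflow semantics: block until a value has been written.
        if not self._is_set.wait(timeout):
            raise TimeoutError(f"{self.name} was never assigned")
        return self._value


x = SingleAssignment("x")
reader_result = []
reader = threading.Thread(target=lambda: reader_result.append(x.get() * 2))
reader.start()  # the reader waits: x has no value yet
x.set(21)       # the single write releases the reader
reader.join()
print(reader_result)  # [42]

try:
    x.set(99)   # a second write violates single assignment
    second_write_rejected = False
except RuntimeError:
    second_write_rejected = True
print(second_write_rejected)  # True
```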

- 21. Swift programming model: all progress driven by concurrent dataflow • F() and G() are implemented in native code or external programs • F() and G() run concurrently in different processes • r is computed when they are both done • This parallelism is automatic • Works recursively throughout the program's call graph. Code: `(int r) myproc (int i, int j) { int x = F(i); int y = G(j); r = x + y; }`

- 22. Dataflow-driven execution: `(int r) myproc (int i) { j = f(i); k = g(i); r = j + k; }` • f() and g() are computed in parallel • myproc() returns r when they are done • This parallelism is AUTOMATIC • Works recursively down the program's call graph. (Figure: i feeds F() and G(), producing j and k, which feed + to produce r.)
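To make the dataflow rule concrete, here is a rough Python analogue of `myproc` built on `concurrent.futures`. It is a sketch, not how Swift is implemented: `f` and `g` are hypothetical stand-ins for external programs. A `threading.Barrier` is used only to show that the two calls really overlap; if they ran one after the other, the barrier would time out.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Both stand-ins wait here, so they can only finish if they run at the same
# time; a sequential execution would raise BrokenBarrierError after 5 s.
both_running = threading.Barrier(2, timeout=5)


def f(i):
    both_running.wait()
    return i * 10  # hypothetical stand-in for an external program


def g(i):
    both_running.wait()
    return i + 1   # hypothetical stand-in for another program


def myproc(pool, i):
    j = pool.submit(f, i)           # submitted immediately
    k = pool.submit(g, i)           # does not wait for f
    return j.result() + k.result()  # r is ready only when both are done


with ThreadPoolExecutor(max_workers=2) as pool:
    r = myproc(pool, 4)

print(r)  # 45
```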

- 24. Swift programming model • Data types: `int i = 4; string s = "hello world"; file image<"snapshot.jpg">;` • Shell access: `app (file o) myapp(file f, int i) { mysim "-s" i @f @o; }` • Structured data: `typedef image file; image A[]; type protein_run { file pdb_in; file sim_out; } bag<blob>[] B;` • Conventional expressions: `if (x == 3) { y = x+2; s = strcat("y: ", y); }` • Parallel loops: `foreach f,i in A { B[i] = convert(A[i]); }` • Data flow: `merge(analyze(B[0], B[1]), analyze(B[2], B[3]));` Swift: A language for distributed parallel scripting, J. Parallel Computing, 2011.

- 25. Hierarchical programming model. (Figure layers: top-level dataflow script `user-workflow.swift`; distributed dataflow evaluation, data dependency resolution, and work stealing/load balancing; SWIG-generated wrappers; user libraries in C, C++, and Fortran; MPI.)

- 26. Support for calls to embedded interpreters: we have plugins for Tcl, Julia, and QtScript.

- 27. A simple Swift script: functions run programs. `type image; // Declare a "file" type.` `app (image output) rotate (image input) { convert "-rotate" 180 @input @output; }` `image oldimg <"orion.2008.0117.jpg">; image newimg <"output.jpg">;` `newimg = rotate(oldimg); // runs the "convert" app`

- 28. Same script: the `app` declaration is an "application" wrapping function.

- 29. Same script: the annotations mark the input file, the output file, and the actual files to use (`orion.2008.0117.jpg` and `output.jpg`).

- 30. Same script: `newimg = rotate(oldimg);` invokes the "rotate" function to run the "convert" application.

- 31. Parallelism via `foreach { }`: `type image;` `app (image output) flip(image input) { convert "-rotate" "180" @input @output; }` `image observations[] <simple_mapper; prefix="orion">;` (map inputs from the local directory) `image flipped[] <simple_mapper; prefix="flipped">;` (name outputs based on index) `foreach obs,i in observations { flipped[i] = flip(obs); }` (process all dataset members in parallel)
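Below is a Python sketch of the same foreach pattern. It assumes a thread pool in place of Swift's scheduler and tiny in-memory grids in place of image files. `flip` and the `flipped_{i}` output names are hypothetical; Swift's `simple_mapper` has its own naming rules, which this sketch does not reproduce.

```python
from concurrent.futures import ThreadPoolExecutor


def flip(image):
    # Hypothetical stand-in for `convert -rotate 180`: an image is a grid of
    # pixel values, and a 180-degree rotation reverses the rows and each row.
    return [row[::-1] for row in reversed(image)]


observations = [
    [[1, 2], [3, 4]],
    [[5, 6, 7]],
    [[8], [9]],
]

with ThreadPoolExecutor() as pool:
    # Like `foreach obs,i in observations`: iterations are independent,
    # so all of them are submitted before any result is awaited.
    futures = [pool.submit(flip, obs) for obs in observations]
    # Outputs are keyed by index, echoing index-based output naming.
    flipped = {f"flipped_{i}": fut.result() for i, fut in enumerate(futures)}

print(flipped["flipped_0"])  # [[4, 3], [2, 1]]
```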

- 32. Large-scale parallelization with simple loops: `foreach sim in [1:1000] { (structure[sim], log[sim]) = predict(p, 100., 25.); } result = analyze(structure); …` (Figure: 1000 runs of the "predict" application feed Analyze(); example proteins T1af7, T1r69, T1b72.)

- 33. Nested loops generate massive parallelism: `Sweep() { int nSim = 1000; int maxRounds = 3; Protein pSet[] <ext; exec="Protein.map">; float startTemp[] = [100.0, 200.0]; float delT[] = [1.0, 1.5, 2.0, 5.0, 10.0]; foreach p, pn in pSet { foreach t in startTemp { foreach d in delT { ItFix(p, nSim, maxRounds, t, d); } } } }` 10 proteins × 1000 simulations × 3 rounds × 2 temps × 5 deltas = 300K tasks.
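The 300K figure can be checked by enumerating the loop nest. The three foreach loops produce 10 × 2 × 5 = 100 `ItFix` calls. The slide's arithmetic implies that each call then runs 1000 simulations × 3 rounds = 3000 tasks. The Python snippet below only does this counting, and it assumes `pSet` maps to 10 proteins, as the slide states.

```python
from itertools import product

n_proteins = 10  # the slide's assumed size of pSet
start_temps = [100.0, 200.0]
delta_ts = [1.0, 1.5, 2.0, 5.0, 10.0]
n_sim, max_rounds = 1000, 3

# One ItFix call per (protein, start temperature, delta) combination.
itfix_calls = list(product(range(n_proteins), start_temps, delta_ts))

# Assumption taken from the slide: each ItFix call expands into
# n_sim * max_rounds tasks.
total_tasks = len(itfix_calls) * n_sim * max_rounds
print(len(itfix_calls), total_tasks)  # 100 300000
```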

- 34. A more complex workflow: fMRI data processing. (Figure: the dataset-level workflow, with stages reorient, reorient, alignlinear, reslice, softmean, alignlinear, combine_warp, reslice_warp, strictmean, binarize, and gsmooth, shown beside the same workflow expanded for 10 volumes into individual tasks such as reorient/01, alignlinear/03, reslice/04, softmean/13, combinewarp/21, reslice_warp/22, strictmean/39, binarize/40, and gsmooth/41.)

- 35. Complex parallel workflows can be easily expressed. The example fMRI preprocessing script below is automatically parallelized: `(Run snr) functional (Run r, NormAnat a, Air shrink) { Run yroRun = reorientRun(r, "y"); Run roRun = reorientRun(yroRun, "x"); Volume std = roRun[0]; Run rndr = random_select(roRun, 0.1); AirVector rndAirVec = align_linearRun(rndr, std, 12, 1000, 1000, "81 3 3"); Run reslicedRndr = resliceRun(rndr, rndAirVec, "o", "k"); Volume meanRand = softmean(reslicedRndr, "y", "null"); Air mnQAAir = alignlinear(a.nHires, meanRand, 6, 1000, 4, "81 3 3"); Warp boldNormWarp = combinewarp(shrink, a.aWarp, mnQAAir); Run nr = reslice_warp_run(boldNormWarp, roRun); Volume meanAll = strictmean(nr, "y", "null"); Volume boldMask = binarize(meanAll, "y"); snr = gsmoothRun(nr, boldMask, "6 6 6"); }` `(Run or) reorientRun (Run ir, string direction) { foreach Volume iv, i in ir.v { or.v[i] = reorient(iv, direction); } }`

- 36. SLIM

- 37. SLIM: Swift sketch (declarations). Types: `type DataFile; type ModelFile; type FloatFile;` Function declarations: `app (DataFile[] dFiles) split(DataFile inputFile, int noOfPartition) { }` `app (ModelFile newModel, FloatFile newParameter) firstStage(DataFile data, ModelFile model) { }` `app (ModelFile newModel, FloatFile newParameter) reduce(ModelFile[] model, FloatFile[] parameter) { }` `app (ModelFile newModel) combine(ModelFile reducedModel, FloatFile reducedParameter, ModelFile oldModel) { }` `app (int terminate) check(ModelFile finalModel) { }`

- 38. SLIM: Swift sketch (variables). Input data: `DataFile inputData <"MyData.dat">; ModelFile earthModel <"MyModel.mdl">;` Finalized models: `ModelFile model[];` Reduce stage: `ModelFile reducedModel[]; FloatFile reducedFloatParameter[];` Number of partitions from a command-line parameter: `int n = @toint(@arg("n","1"));` Initial model: `model[0] = earthModel;` Partition the input data: `DataFile partitionedDataFiles[] = split(inputData, n);`

- 39. SLIM: Swift sketch (main loop), iterating sequentially until the termination condition is met: `iterate v { ModelFile firstStageModel[] <simple_mapper; location="output", prefix=@strcat(v, "_"), suffix=".mdl">; FloatFile firstStageFloatParameter[] <simple_mapper; padding=3, location="output", prefix=@strcat(v, "_"), suffix=".float">; foreach partitionedFile, count in partitionedDataFiles { (firstStageModel[count], firstStageFloatParameter[count]) = firstStage(model[v], partitionedFile); } (reducedModel[v], reducedFloatParameter[v]) = reduce(firstStageModel, firstStageFloatParameter); model[v+1] = combine(reducedModel[v], reducedFloatParameter[v], model[v]); int shouldTerminate = check(model[v+1]); } until (shouldTerminate != 1);` The first stage runs as a parallel foreach over the partitions, the reduce stage uses the files for synchronization, the combine stage produces the next model, and the termination condition is checked at the end of each iteration.
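The SLIM sketch splits the data once and then iterates: a parallel first stage, then reduce, combine, and check. A small Python analogue can illustrate that control structure. Everything here is an assumption made for illustration. The "model" is a single number fitted by gradient steps toward the data mean, and `split`, `first_stage`, `reduce_stage`, `combine`, and `check` are hypothetical stand-ins for the app functions, not SLIM's actual computations.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n):
    # Partition the input into n interleaved slices.
    return [data[k::n] for k in range(n)]


def first_stage(partition, model):
    # Partial gradient of 0.5 * sum((model - d)^2) over one partition.
    return sum(model - d for d in partition), len(partition)


def reduce_stage(results):
    grads, counts = zip(*results)
    return sum(grads), sum(counts)


def combine(grad, count, old_model, rate=0.5):
    return old_model - rate * grad / count


def check(new_model, old_model, tol=1e-9):
    return abs(new_model - old_model) < tol


data = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
partitions = split(data, 3)
model = 0.0
iterations = 0
with ThreadPoolExecutor() as pool:
    while True:  # like `iterate v { ... } until (...)`: body first, then test
        results = list(pool.map(first_stage, partitions, [model] * len(partitions)))
        grad, count = reduce_stage(results)
        new_model = combine(grad, count, model)
        iterations += 1
        done = check(new_model, model)
        model = new_model
        if done:
            break

print(round(model, 6))  # 7.0
```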

- 40. Software stack for Swift on ALCF BG/Ps: Swift scripts, shell scripts, and app invocations • Swift: scripting language, task coordination, throttling, data management, restart • Coaster execution provider: per-node agents for fast task dispatch across all nodes • Linux OS: complete, high-performance Linux compute-node OS with full fork/exec • File system

- 41. Running Swift scripts • Start Coasters – start the coaster service – start the coaster workers (qsub, ssh, etc.) • Run the Swift script. (Figure: the Java application and the coaster service on the head node, coaster workers, and files f1, f2, f3 on the file system.)

- 42. Swift vs. MapReduce. What should be compared (to maintain the same level of abstraction)? • The Swift framework (language, compiler, runtime, scheduler plugin – Coasters, storage) • The MapReduce framework (Hive, Hadoop, HDFS). Similarities: • Goals: a productive programming environment that hides the complexities of parallel execution • Both are able to scale, hide individual failures, and balance load

- 43. Swift vs. MapReduce: Swift advantages • Intuitive programming model • Platform independent: from small clusters, to large ones, to large "exotic" architectures (BG/P) and distributed resources (grids) – MapReduce frameworks will not support all of these – Swift can generally use the software stack available on the target machine with no changes • More flexible data model (just your old files) – no migration pain – easy to share with other applications • Optimized for coarse-grained workflows – e.g., files staged in an in-memory file system; optimizations for specific patterns

- 44. Swift/T: Enabling high-performance workflows • Write site-independent scripts • Automatic parallelization and data movement • Run native code and script fragments as applications • Rapidly subdivide large partitions for MPI jobs • Move work to data locations. (Figure: Swift control processes, a Swift/T control process, Swift worker processes, and Swift/T workers calling C, C++, and Fortran code over MPI. Result: 64K cores of Blue Waters, 2 billion Python tasks, 14 million Pythons/s.)

- 45. Using Swift/Python/NumPy: `global const string numpy = "from numpy import *\n\n"; typedef matrix string; (matrix A) eye(int n) { command = sprintf("repr(eye(%i))", n); code = numpy+command; matrix t = python(code); A = replace_all(t, "\n", "", 0); } (matrix R) add(matrix A1, matrix A2) { command = sprintf("repr(%s+%s)", A1, A2); code = numpy+command; matrix t = python(code); R = replace_all(t, "\n", "", 0); } a1 = eye(3); a2 = eye(3); sum = add(a1, a2); printf("2*eye(3)=%s", sum);`

- 46. Fully parallel evaluation of complex scripts: `int X = 100, Y = 100; int A[][]; int B[]; foreach x in [0:X-1] { foreach y in [0:Y-1] { if (check(x, y)) { A[x][y] = g(f(x), f(y)); } else { A[x][y] = 0; } } B[x] = sum(A[x]); }`
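As a rough Python analogue of this script, each `A[x][y]` cell below becomes an independent task. `check`, `f`, and `g` are hypothetical functions, and the grid is shrunk to 4 × 4 so the result is easy to verify. One difference is worth noting: here the row sums are collected in loop order, whereas in Swift each `B[x]` would be computed as soon as its own row is complete.

```python
from concurrent.futures import ThreadPoolExecutor


def check(x, y):
    return (x + y) % 2 == 0  # hypothetical predicate


def f(v):
    return v * v             # hypothetical task


def g(a, b):
    return a + b             # hypothetical task


def cell(x, y):
    # Body of the inner loop: one independent task per (x, y).
    return g(f(x), f(y)) if check(x, y) else 0


X, Y = 4, 4
with ThreadPoolExecutor() as pool:
    A = [[pool.submit(cell, x, y) for y in range(Y)] for x in range(X)]
    # B[x] = sum(A[x]): each row sum needs only its own row's results.
    B = [sum(cell_future.result() for cell_future in row) for row in A]

print(B)  # [4, 12, 12, 28]
```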

- 47. Centralized evaluation can be a bottleneck at extreme scales. (Figure: what we had (Swift/K) versus what we need for extreme scale (Swift/T).)

- 48. MPI: The Message Passing Interface • Programming model used on large supercomputers • Can run on many networks, including sockets, or shared memory • Standard API for C and Fortran; other languages have working implementations • Contains communication calls for – point-to-point (send/recv) – collectives (broadcast, reduce, etc.) • Interesting concepts – Communicators: collections of communicating processes and a context – Data types: a language-independent data marshaling scheme

- 49. ADLB: Asynchronous Dynamic Load Balancer • An MPI library for master-worker workloads in C • Uses a variable-size, scalable network of servers • Servers implement work stealing • The work unit is a byte array • Optional work priorities, targets, and types • For Swift/T, we added: – server-stored data – data-dependent execution – Tcl bindings! (Figure: servers and workers.) • Lusk et al. More scalability, less pain: A simple programming model and its implementation for extreme

- 50. Flexible placement of server ranks in a Swift/T job. (Figure panels: one Swift server core per node in a 16-node job; four Swift nodes for the job in a 16-node job.)

- 51. Example distributed execution. Code: `A[2] = f(getenv("N")); A[3] = g(A[2]);` • The control side evaluates dataflow operations: it performs getenv(), submits f (task put), subscribes to A[2], and submits g (task put) once A[2] is available • Workers execute tasks: one gets f (task get), processes it, and stores A[2]; another gets g, processes it, and stores A[3] • Wozniak et al. Turbine: A distributed-memory dataflow engine for high performance many-task applications. Fundamenta Informaticae 128(3), 2013
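The put/get/store/subscribe sequence on this slide can be mimicked in a few lines of single-process Python. This is a toy model, not Turbine's or ADLB's API. `ToyTurbine` and its methods are hypothetical, a deque stands in for the distributed work queue, and `n = 20` stands in for the value of `getenv("N")`.

```python
from collections import deque


class ToyTurbine:
    """Hypothetical single-process toy of put/get/store/subscribe."""

    def __init__(self):
        self.data = {}        # stored values, keyed by variable name
        self.waiting = {}     # variable name -> callbacks to fire on store
        self.ready = deque()  # runnable tasks
        self.log = []

    def put(self, name, fn):  # "task put"
        self.log.append(f"submit {name}")
        self.ready.append((name, fn))

    def subscribe(self, key, callback):
        if key in self.data:
            callback()
        else:
            self.log.append(f"subscribe {key}")
            self.waiting.setdefault(key, []).append(callback)

    def store(self, key, value):
        self.data[key] = value
        self.log.append(f"store {key}")
        for callback in self.waiting.pop(key, []):
            callback()        # data arrival releases dependent work

    def run_workers(self):
        while self.ready:     # "task get" until no work remains
            name, fn = self.ready.popleft()
            self.log.append(f"process {name}")
            fn()


def f(n):
    return n + 1  # hypothetical task body


def g(v):
    return v * 2  # hypothetical task body


engine = ToyTurbine()
n = 20  # stands in for getenv("N"), evaluated on the control side

# A[2] = f(getenv("N")): no unresolved inputs, so f is submitted at once.
engine.put("f", lambda: engine.store("A[2]", f(n)))


# A[3] = g(A[2]): g is submitted only after A[2] has been stored.
def submit_g():
    engine.put("g", lambda: engine.store("A[3]", g(engine.data["A[2]"])))


engine.subscribe("A[2]", submit_g)
engine.run_workers()
print(engine.data["A[3]"])  # 42
```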

- 52. Swift/T-specific features • Task locality: the ability to send a task to a specific process – allows for big-data-type applications – allows stateful objects to remain resident in the workflow – `location L = find_data(D); int y = @location=L f(D, x);` • Data broadcast • Task priorities: the ability to set task priority – useful for tweaking load balancing • Updateable variables – allow data to be modified after its initial write – consumer tasks may receive the original or updated values when they emerge from the work queue
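Task priorities amount to a priority-ordered work queue. The Python sketch below shows that idea with `heapq`. It assumes higher numbers mean higher priority and that tasks with equal priority are served in submission order. `PriorityWorkQueue` is a hypothetical name and does not reflect how ADLB stores work.

```python
import heapq
import itertools


class PriorityWorkQueue:
    """Hypothetical work queue: highest priority first, FIFO among ties."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker preserving submission order

    def put(self, task, priority=0):
        # heapq is a min-heap, so the priority is negated.
        heapq.heappush(self._heap, (-priority, next(self._order), task))

    def get(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


q = PriorityWorkQueue()
q.put("analyze", priority=0)
q.put("long_simulation", priority=10)  # e.g. start long tasks early
q.put("cleanup", priority=0)
order = [q.get() for _ in range(len(q))]
print(order)  # ['long_simulation', 'analyze', 'cleanup']
```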

- 53. Swift/T: scaling of a trivial `foreach { }` loop: 100-microsecond to 10-millisecond tasks on up to 512K integer cores of Blue Waters

- 54. Swift/T application benchmarks on Blue Waters

- 55. Swift/T compiler and runtime • STC translates high-level Swift expressions into low-level Turbine operations: – create/store/retrieve typed data – manage arrays – manage data-dependent tasks

- 56. Swift code in dataflow: `x = g(); if (x > 0) { n = f(x); foreach i in [0:n-1] { output(p(i)); } }` • Dataflow definitions create nodes in the dataflow graph • Dataflow assignments create edges • In typical (DAG) workflow languages, this forms a static graph • In Swift, the graph can grow dynamically – code fragments are evaluated (conditionally) as a result of dataflow • In its early implementation, these fragments were just tasks. (Figure: nodes x and n, the `if (x > 0) { n = f(x); …` fragment, and the `foreach i … { output(p(i));` fragment.)
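The graph's run-time growth shows up clearly in a small Python sketch with futures. The tasks for `p(i)` cannot even be created until `f(x)` has produced `n`, and none are created at all if `x > 0` is false. The functions `f` and `p`, and the callables passed as `g`, are hypothetical stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor


def f(x):
    return x + 2  # hypothetical: decides how many loop iterations exist


def p(i):
    return i * i  # hypothetical per-iteration task


def run(g):
    outputs = []
    with ThreadPoolExecutor() as pool:
        x = pool.submit(g).result()
        if x > 0:
            # This fragment of the graph exists only because x > 0.
            n = pool.submit(f, x).result()
            # The number of p(i) nodes is known only now, after f finished.
            tasks = [pool.submit(p, i) for i in range(n)]  # i in [0:n-1]
            outputs = [t.result() for t in tasks]
    return outputs


print(run(lambda: 3))   # [0, 1, 4, 9, 16]
print(run(lambda: -1))  # []
```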

- 58. Swift/T optimization challenge: distributed vars. http://swift-lang.org

- 59. Swift/T optimizations improve data locality. http://swift-lang.org

- 60. Parallel tasks in Swift/T • Swift expression: `z = @par=32 f(x,y);` • The ADLB server finds 8 available workers – workers receive ranks from the ADLB server – they perform `comm = MPI_Comm_create_group()` • Workers perform `f(x,y)`, communicating on `comm`

- 61. LAMMPS parallel tasks • LAMMPS provides a convenient C++ API • It is easily used by Swift/T parallel tasks: `foreach i in [0:20] { t = 300+i; sed_command = sprintf("s/_TEMPERATURE_/%i/g", t); lammps_file_name = sprintf("input-%i.inp", t); lammps_args = "-i " + lammps_file_name; file lammps_input<lammps_file_name> = sed(filter, sed_command) => @par=8 lammps(lammps_args); }` (Figure: tasks of varying sizes packed into a big MPI run; black: compute, blue: message, white: idle.)

- 62. GeMTC: GPU-enabled Many-Task Computing • Motivation: support for MTC on all accelerators! • Goals: 1) MTC support 2) programmability 3) efficiency 4) MPMD on SIMD 5) increase concurrency to warp level • Approach: design and implement the GeMTC middleware: 1) manages the GPU 2) spreads work across host/device 3) workflow system integration (Swift/T)

- 63. Logging and debugging in Swift • Traditionally, Swift programs are debugged through the log or the TUI (text user interface) • Logs were produced using normal methods, containing: – variable names and values as set with respect to thread – calls to Swift functions – calls to application code • A restart log could be produced to restart a large Swift run after certain fault conditions • These methods require a single Swift site and do not scale to larger runs

- 64. Logging in MPI • The Multi-Processing Environment (MPE) • Common approach to logging MPI programs • Can log MPI calls or application events – can store arbitrary data • Can visualize logs with Jumpshot • Partial logs are stored at the site of each process – written as necessary to the shared file system • in large blocks • in parallel – results are merged into a big log file (CLOG, SLOG) • Work has been done to optimize the file format for various queries

- 65. Swift for really parallel builds. Apps: compile (e.g., gcc -c -O2 -o f.o f.c): `app (object_file o) gcc(c_file c, string cflags[]) { "gcc" "-c" cflags "-o" o c; }` Link (e.g., gcc -o f.x f1.o f2.o ...): `app (x_file x) ld(object_file o[], string ldflags[]) { "gcc" ldflags "-o" x o; }` Run: `app (output_file o) run(x_file x) { "sh" "-c" x @stdout=o; }` Extract timing: `app (timing_file t) extract(output_file o) { "tail" "-1" o "|" "cut" "-f" "2" "-d" " " @stdout=t; }` Swift code, which for each optimization level constructs the compiler flags, compiles, links, runs the program, and extracts the run time from its output: `string program_name = "programs/program1.c"; c_file c = input(program_name); foreach O_level in [0:3] { /* make file names… */ string O_flag = sprintf("-O%i", O_level); string cflags[] = [ "-fPIC", O_flag ]; object_file o<my_object> = gcc(c, cflags); object_file objects[] = [ o ]; string ldflags[] = []; x_file x<my_executable> = ld(objects, ldflags); output_file out<my_output> = run(x); timing_file t<my_time> = extract(out); }`

- 66. Abstract, extensible MapReduce in Swift: `main { file d[]; int N = string2int(argv("N")); /* Map phase */ foreach i in [0:N-1] { file a = find_file(i); d[i] = map_function(a); } /* Reduce phase */ file final <"final.data"> = merge(d, 0, N-1); }` `(file o) merge(file d[], int start, int stop) { if (stop == start) { /* Base case: a single file */ o = d[start]; } else { if (stop-start == 1) { /* Base case: merge pair */ o = merge_pair(d[start], d[stop]); } else { /* Merge the results of two recursive calls on each half */ n = stop-start; s = n %/% 2; o = merge_pair(merge(d, start, start+s), merge(d, start+s+1, stop)); } } }` • User needs to implement map_function() and merg • These may be implemented in native code, Python, etc. • Could add annotations • Could add additional custom application logic
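The recursive `merge` splits the index range in half, so it needs base cases for both a single element and a pair. The Python version below checks that index arithmetic under two hypothetical choices: `map_function` sorts a chunk, and `merge_pair` merges two sorted lists. It runs sequentially. In Swift, the two recursive calls would be evaluated concurrently because neither depends on the other.

```python
import heapq


def map_function(chunk):
    return sorted(chunk)  # hypothetical map step


def merge_pair(a, b):
    return list(heapq.merge(a, b))  # hypothetical pairwise combiner


def merge(d, start, stop):
    if start == stop:            # base case: a single element
        return d[start]
    if stop - start == 1:        # base case: merge a pair
        return merge_pair(d[start], d[stop])
    mid = start + (stop - start) // 2
    # The two halves are independent of each other.
    return merge_pair(merge(d, start, mid), merge(d, mid + 1, stop))


chunks = [[5, 1], [9, 3], [2, 8], [7]]
d = [map_function(c) for c in chunks]  # map phase
final = merge(d, 0, len(d) - 1)        # reduce phase
print(final)  # [1, 2, 3, 5, 7, 8, 9]
```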

- 67. Crystal Coordinate Transformation Workflow (CCTW) • Goal: transform a 3D image stack from detector coordinates to real-space coordinates – operate on up to 50 GB of data – relatively light processing – I/O rates are critical – core numerics in C++, Qt – parallelism via Swift • Challenges – coupling C++ to Swift via QtScript – data access to an HDF file on distributed storage

- 68. CCTW diagrams

- 69. CCTW: Swift/T application (C++): `bag<blob> M[]; foreach i in [1:n] { blob b1 = cctw_input("pznpt.nxs"); blob b2[]; int outputId[]; (outputId, b2) = cctw_transform(i, b1); foreach b, j in b2 { int slot = outputId[j]; M[slot] += b; } } foreach g in M { blob b = cctw_merge(g); cctw_write(b); }`

- 70. Stateful external interpreters • We want to use high-level, third-party algorithms in Python and R to orchestrate Swift workflows, e.g.: – Python DEAP for evolutionary algorithms – the R language GA package • Typical control pattern: – the GA minimizes the cost function – you pass the cost function to the library and wait • We want Swift to obtain the parameters from the library – we launch a stateful interpreter on a thread – the "cost function" is a dummy that returns the parameters to Swift over IPC – Swift passes the real cost function results back to the library over IPC • Achieve high productivity and high scalability – the library is not modified; it is unaware of the framework! – application logic extensions in high-level script. (Figure: load balancing; a Swift worker and a Python/R GA library linked by IPC inside an MPI process; tasks and results exchanged over MPI.)
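This inversion of control can be sketched in Python with a thread and two queues standing in for the interpreter thread and the IPC channel. `library_minimize` is a hypothetical optimizer that only accepts a cost callback. `dummy_cost` forwards each parameter to the workflow side and blocks, while the main loop plays the role of Swift and computes the real cost.

```python
import queue
import threading


def library_minimize(cost, candidates):
    # Hypothetical stand-in for a third-party optimizer (e.g. a GA) that
    # only knows a cost callback and is unaware of the workflow system.
    return min(candidates, key=cost)


to_workflow = queue.Queue()  # library -> workflow: parameters or final answer
to_library = queue.Queue()   # workflow -> library: real cost values


def dummy_cost(params):
    # No real work here: forward params to the workflow and block for the answer.
    to_workflow.put(("params", params))
    return to_library.get()


def run_library():
    best = library_minimize(dummy_cost, [-3, -1, 2, 4])
    to_workflow.put(("done", best))


def real_cost(x):
    # In the slide's design this evaluation would be a Swift task.
    return (x - 1) ** 2


threading.Thread(target=run_library, daemon=True).start()
evaluated = []
while True:
    kind, payload = to_workflow.get()
    if kind == "done":
        best = payload
        break
    evaluated.append(payload)
    to_library.put(real_cost(payload))

print(best, evaluated)  # 2 [-3, -1, 2, 4]
```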

- 71. Swift/T: Makefile example (1): `app (object_file o) gcc(c_file c, string cflags[]) { /* gcc -c -O2 -o f.o f.c */ "gcc" "-c" cflags "-o" o c; }` `app (x_file x) ld(object_file o[], string ldflags[]) { /* gcc -o f.x f1.o f2.o ... */ "gcc" ldflags "-o" x o; }` `app (output_file o) run(x_file x) { "sh" "-c" x @stdout=o; }` www.ci.uchicago.edu/swift www.mcs.anl.gov/exm

- 72. Swift/T: Makefile example (2): `foreach O_level in [0:3] { /* Construct file names */ string my_object = sprintf("programs/program1-O%i.o", O_level); . . . string O_flag = sprintf("-O%i", O_level); string cflags[] = [ "-fPIC", O_flag ]; object_file o<my_object> = gcc(c, cflags); object_file objects[] = [ o ]; string ldflags[] = []; x_file x<my_executable> = ld(objects, ldflags);`
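To show what each app call turns into, the Python sketch below builds the command lines for the four optimization levels. It uses the object-file pattern from the slide and a hypothetical executable name `programs/program1-O<level>.x`, since `my_executable` is not defined on the slide. Execution is disabled by default. With `DRY_RUN = False`, the four independent compile/link/run chains would run concurrently, while the steps inside each chain stay sequential.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor

SOURCE = "programs/program1.c"


def plan(o_level):
    obj = f"programs/program1-O{o_level}.o"  # pattern from the slide
    exe = f"programs/program1-O{o_level}.x"  # hypothetical executable name
    cflags = ["-fPIC", f"-O{o_level}"]
    return [
        ["gcc", "-c", *cflags, "-o", obj, SOURCE],  # app gcc
        ["gcc", "-o", exe, obj],                    # app ld (empty ldflags)
        ["sh", "-c", exe],                          # app run
    ]


def execute(commands):
    # Steps within one chain depend on each other, so they run in order.
    return [subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
            for cmd in commands]


plans = [plan(level) for level in range(4)]  # like foreach O_level in [0:3]

DRY_RUN = True
if not DRY_RUN:
    with ThreadPoolExecutor() as pool:
        outputs = list(pool.map(execute, plans))  # independent chains in parallel

for commands in plans:
    print(" ".join(commands[0]))
```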

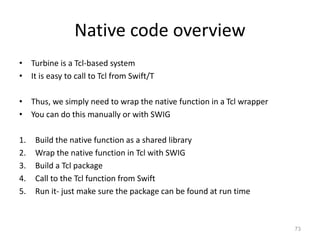

- 73. Native code overview • Turbine is a Tcl-based system • It is easy to call Tcl from Swift/T • Thus, we simply need to wrap the native function in a Tcl wrapper • You can do this manually or with SWIG: 1. Build the native function as a shared library 2. Wrap the native function in Tcl with SWIG 3. Build a Tcl package 4. Call the Tcl function from Swift 5. Run it; just make sure the package can be found at run time

- 74. Simple app: `type file; app (file out) echo_app (string s) { echo s stdout=filename(out); } file out <"out.txt">; out = echo_app("Hello world!");`

- 75. Foreach example: `type file; app (file o) simulate_app () { simulate stdout=filename(o); } foreach i in [1:10] { string fname = strcat("output/sim_", i, ".out"); file f <single_file_mapper; file=fname>; f = simulate_app(); }`

- 76. Multiple apps: `type file; app (file o) simulate_app (int time) { simulate "-t" time stdout=filename(o); } app (file o) stats_app (file s[]) { stats filenames(s) stdout=filename(o); } file sims[]; int time = 5; int nsims = 10; foreach i in [1:nsims] { string fname = strcat("output/sim_", i, ".out"); file simout <single_file_mapper; file=fname>; simout = simulate_app(time); sims[i] = simout; } file average <"output/average.out">; average = stats_app(sims);`

- 77. Multi-stage: `type file; app (file out) genseed_app (int nseeds) { genseed "-r" 2000000 "-n" nseeds stdout=@out; } app (file out) genbias_app (int bias_range, int nvalues) { genbias "-r" bias_range "-n" nvalues stdout=@out; } app (file out, file log) simulation_app (int timesteps, int sim_range, file bias_file, int scale, int sim_count) { simulate "-t" timesteps "-r" sim_range "-B" @bias_file "-x" scale "-n" sim_count stdout=@out stderr=@log; } app (file out, file log) analyze_app (file s[]) { stats filenames(s) stdout=@out stderr=@log; }`

- 78. Multi-stage: values that shape the run: `int nsim = 10;` (number of simulation programs to run) `int steps = 1;` (number of timesteps (seconds) per simulation) `int range = 100;` (range of the generated random numbers) `int values = 10;` (number of values generated per simulation). Main script and data: `tracef("\n*** Script parameters: nsim=%i range=%i num values=%i\n\n", nsim, range, values);` Dynamically generated bias for the simulation ensemble: `file seedfile<"output/seed.dat">; seedfile = genseed_app(1); int seedval = readData(seedfile); tracef("Generated seed=%i\n", seedval); file sims[];`

- 79. Multi-stage: `foreach i in [0:nsim-1] { file biasfile <single_file_mapper; file=strcat("output/bias_",i,".dat")>; file simout <single_file_mapper; file=strcat("output/sim_",i,".out")>; file simlog <single_file_mapper; file=strcat("output/sim_",i,".log")>; biasfile = genbias_app(1000, 20); (simout,simlog) = simulation_app(steps, range, biasfile, 1000000, values); sims[i] = simout; } file stats_out<"output/average.out">; file stats_log<"output/average.log">; (stats_out,stats_log) = analyze_app(sims);`
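This multi-stage script has one seed, then a bias and a simulation per member, then one analysis over all outputs. That dependency structure is sketched below in Python. The four functions are hypothetical stand-ins with invented numeric behavior; only the ordering constraints mirror the Swift code. Each member gets its own `random.Random`, so concurrent tasks share no state.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


# Hypothetical stand-ins for the genseed, genbias, simulate and stats programs.
def genseed(max_value=2_000_000):
    return random.randrange(max_value)


def genbias(rng, bias_range, nvalues):
    return [rng.randrange(bias_range) for _ in range(nvalues)]


def simulate(rng, sim_range, bias, nvalues):
    return [rng.randrange(sim_range) + bias[k % len(bias)] for k in range(nvalues)]


def analyze(sims):
    return mean(v for sim in sims for v in sim)


nsim, sim_range, values = 10, 100, 10

seed = genseed()  # stage 1: a single task; everything below depends on it


def one_member(i):
    rng = random.Random(seed + i)                  # per-task generator
    bias = genbias(rng, 1000, 20)                  # stage 2: bias for member i
    return simulate(rng, sim_range, bias, values)  # stage 3 needs its own bias


with ThreadPoolExecutor() as pool:
    sims = list(pool.map(one_member, range(nsim)))  # members run concurrently

average = analyze(sims)  # stage 4: needs every simulation output
print(len(sims), round(average, 2))
```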


- 82. Thank you

![Swift programming model

• Data types

int i = 4;

string s = "hello world";

file image<"snapshot.jpg">;

• Shell access

app (file o) myapp(file f, int i)

{ mysim "-s" i @f @o; }

• Structured data

typedef image file;

image A[];

type protein_run {

file pdb_in; file sim_out;

}

bag<blob>[] B;

24

Conventional expressions

if (x == 3) {

y = x+2;

s = strcat("y: ", y);

}

Parallel loops

foreach f,i in A {

B[i] =

convert(A[i]);

}

Data flow

merge(analyze(B[0],

B[1]),

analyze(B[2],

B[3]));

Swift: A language for distributed parallel scripting, J. Parallel

Computing, 2011](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-24-320.jpg)

![31

Parallelism via foreach { }

1 type image;

2

3 (image output) flip(image input) {

4 app {

5 convert "-rotate" "180" @input @output;

6 }

7 }

8

9 image observations[ ] <simple_mapper; prefix=“orion”>;

10 image flipped[ ] <simple_mapper; prefix=“flipped”>;

11

12

13

14 foreach obs,i in observations {

15 flipped[i] = flip(obs);

16 }

31

Name outputs based on index

Process all dataset members in parallel

Map inputs from local directory](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-31-320.jpg)

![32

foreach sim in [1:1000] {

(structure[sim], log[sim]) = predict(p, 100., 25.);

}

result = analyze(structure)

…

1000

Runs of the

“predict”

application

Analyze()

T1af

7

T1r6

9

T1b72

Large scale parallelization with simple loops](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-32-320.jpg)

![Nested loops generate massive parallelism

Sweep()
{
  int nSim = 1000;
  int maxRounds = 3;
  Protein pSet[] <ext; exec="Protein.map">;
  float startTemp[] = [ 100.0, 200.0 ];
  float delT[] = [ 1.0, 1.5, 2.0, 5.0, 10.0 ];

  foreach p, pn in pSet {
    foreach t in startTemp {
      foreach d in delT {
        ItFix(p, nSim, maxRounds, t, d);
      }
    }
  }
}

10 proteins x 1000 simulations x 3 rounds x 2 temps x 5 deltas = 300K tasks](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-33-320.jpg)
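The 300K figure is the product of the loop extents. This small Python check enumerates the three `foreach` levels explicitly and then multiplies by the work per call. It assumes, as the slide's arithmetic implies, that each `ItFix()` call runs `nSim * maxRounds` tasks.

```python
from itertools import product

def count_tasks(n_proteins, start_temps, deltas, n_sim, max_rounds):
    # One ItFix() call per (protein, start temperature, delta) combination.
    calls = list(product(range(n_proteins), start_temps, deltas))
    # Assumption from the slide: each call runs n_sim * max_rounds tasks.
    return len(calls), len(calls) * n_sim * max_rounds

print(count_tasks(10, [100.0, 200.0], [1.0, 1.5, 2.0, 5.0, 10.0], 1000, 3))
# (100, 300000)
```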

![Complex parallel workflows can be easily expressed

Example fMRI preprocessing script below is automatically parallelized

(Run snr) functional (Run r, NormAnat a, Air shrink)

{ Run yroRun = reorientRun( r , "y" );

Run roRun = reorientRun( yroRun , "x" );

Volume std = roRun[0];

Run rndr = random_select( roRun, 0.1 );

AirVector rndAirVec = align_linearRun( rndr, std, 12, 1000, 1000, "81 3 3" );

Run reslicedRndr = resliceRun( rndr, rndAirVec, "o", "k" );

Volume meanRand = softmean( reslicedRndr, "y", "null" );

Air mnQAAir = alignlinear( a.nHires, meanRand, 6, 1000, 4, "81 3 3" );

Warp boldNormWarp = combinewarp( shrink, a.aWarp, mnQAAir );

Run nr = reslice_warp_run( boldNormWarp, roRun );

Volume meanAll = strictmean( nr, "y", "null" );

Volume boldMask = binarize( meanAll, "y" );

snr = gsmoothRun( nr, boldMask, "6 6 6" );

}

(Run or) reorientRun (Run ir, string direction) {

foreach Volume iv, i in ir.v {

or.v[i] = reorient(iv, direction);

}

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-35-320.jpg)
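Swift derives execution order purely from data dependencies. To illustrate, the Python sketch below encodes the dependencies of the `functional` procedure above, one entry per assigned variable read directly from the script, and computes the earliest "wave" in which each value could be produced. At the procedure level, only `std` and `rndr` can proceed side by side. Most of the parallelism therefore comes from per-volume loops such as the `foreach` inside `reorientRun`. The wave computation is an illustration, not how the Swift runtime schedules work.

```python
from functools import lru_cache

# Inputs of functional(): r, a (a.nHires, a.aWarp) and shrink.
INPUTS = {"r", "a", "shrink"}

# Each variable assigned in functional(), mapped to the values it reads.
DEPS = {
    "yroRun": ["r"],
    "roRun": ["yroRun"],
    "std": ["roRun"],
    "rndr": ["roRun"],
    "rndAirVec": ["rndr", "std"],
    "reslicedRndr": ["rndr", "rndAirVec"],
    "meanRand": ["reslicedRndr"],
    "mnQAAir": ["a", "meanRand"],
    "boldNormWarp": ["shrink", "a", "mnQAAir"],
    "nr": ["boldNormWarp", "roRun"],
    "meanAll": ["nr"],
    "boldMask": ["meanAll"],
    "snr": ["nr", "boldMask"],
}

@lru_cache(maxsize=None)
def wave(name):
    # Inputs are ready at wave 0; any other value is ready one wave
    # after the latest of the values it reads.
    if name in INPUTS:
        return 0
    return 1 + max(wave(dep) for dep in DEPS[name])

waves = {name: wave(name) for name in DEPS}
print(waves["std"], waves["rndr"], waves["snr"])  # 3 3 12
```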

![SLIM: Swift sketch

type DataFile;

type ModelFile;

type FloatFile;

// Function declaration

app (DataFile[] dFiles) split(DataFile inputFile, int noOfPartition) {

}

app (ModelFile newModel, FloatFile newParameter) firstStage(DataFile data, ModelFile model) {

}

app (ModelFile newModel, FloatFile newParameter) reduce(ModelFile[] model, FloatFile[] parameter){

}

app (ModelFile newModel) combine(ModelFile reducedModel, FloatFile reducedParameter, ModelFile oldModel){

}

app (int terminate) check(ModelFile finalModel){

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-37-320.jpg)

![SLIM: Swift sketch

// Variables to hold the input data

DataFile inputData <"MyData.dat">;

ModelFile earthModel <"MyModel.mdl">;

// Variables to hold the finalized models

ModelFile model[];

// Variables for reduce stage

ModelFile reducedModel[];

FloatFile reducedFloatParameter[];

// Get the number of partition from command line parameter

int n = @toint(@arg("n","1"));

model[0] = earthModel;

//Partition the input data

DataFile partitionedDataFiles[] = split(inputData, n);](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-38-320.jpg)

![SLIM: Swift sketch

// Iterate sequentially until the terminal condition is met

iterate v {

// Variables to hold the output of the first stage

ModelFile firstStageModel[] <simple_mapper; location = "output", prefix = @strcat(v, "_"), suffix = ".mdl">;

FloatFile firstStageFloatParameter[] <simple_mapper; padding = 3, location = "output", prefix = @strcat(v, "_"), suffix = ".float">;

// Parallel for loop

foreach partitionedFile, count in partitionedDataFiles {

// First stage

(firstStageModel[count], firstStageFloatParameter[count]) = firstStage(partitionedFile, model[v]);

}

// Reduce stage (use the files for synchronization)

(reducedModel[v], reducedFloatParameter[v]) = reduce(firstStageModel, firstStageFloatParameter);

// Combine stage

model[v+1] = combine(reducedModel[v], reducedFloatParameter[v], model[v]);

//Check the termination condition here

int shouldTerminate = check(model[v+1]);

} until (shouldTerminate != 1);](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-39-320.jpg)
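The SLIM sketch alternates a parallel stage with sequential ones. In each `iterate` round, `firstStage` runs once per partition in parallel, and then `reduce`, `combine` and `check` run in sequence. Below is a Python model of that control flow. The model is a single number, every stage function is a hypothetical numeric stand-in, and termination uses an explicit tolerance rather than the slide's `check()` return code, whose convention the sketch leaves open.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, n):
    # Partition the input into n interleaved slices.
    return [data[i::n] for i in range(n)]

def first_stage(partition, model):
    # Hypothetical per-partition step: (sum of residuals, count).
    return sum(x - model for x in partition), len(partition)

def reduce_stage(partials):
    # Combine per-partition results into one update.
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    return total / count

def combine(update, old_model, rate=0.5):
    # Move the model part of the way toward the data.
    return old_model + rate * update

def iterate_model(data, n, model=0.0, tol=1e-6, max_rounds=100):
    parts = split(data, n)
    with ThreadPoolExecutor() as pool:
        for v in range(max_rounds):
            # Parallel loop over partitions, as in the firstStage foreach.
            partials = list(pool.map(first_stage, parts, [model] * n))
            new_model = combine(reduce_stage(partials), model)
            # Sequential termination test at the end of each round.
            if abs(new_model - model) < tol:
                return new_model, v + 1
            model = new_model
    return model, max_rounds

final, rounds = iterate_model([1.0, 2.0, 3.0, 4.0], n=2)
print(round(final, 4))  # 2.5
```

The rounds must run one after another because round `v+1` reads `model[v+1]`, but the work inside a round is spread across partitions.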

![Fully parallel evaluation of complex scripts

int X = 100, Y = 100;

int A[][];

int B[];

foreach x in [0:X-1] {

foreach y in [0:Y-1] {

if (check(x, y)) {

A[x][y] = g(f(x), f(y));

} else {

A[x][y] = 0;

}

}

B[x] = sum(A[x]);

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-46-320.jpg)
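In this script, every `(x, y)` cell is independent, and each `B[x]` depends only on row `A[x]`. Swift can therefore evaluate all 10,000 cells concurrently and start each row sum as soon as that row is complete. The Python sketch below mirrors that structure more coarsely, with one task per row. `check()`, `f()` and `g()` are hypothetical stand-ins because the slide does not define them.

```python
from concurrent.futures import ThreadPoolExecutor

def check(x, y):
    # Hypothetical predicate.
    return (x + y) % 2 == 0

def f(v):
    # Hypothetical per-coordinate function.
    return v * v

def g(a, b):
    # Hypothetical combining function.
    return a + b

def row(x, Y):
    # One row of A, using the if/else from the inner foreach.
    return [g(f(x), f(y)) if check(x, y) else 0 for y in range(Y)]

def evaluate(X=100, Y=100):
    with ThreadPoolExecutor() as pool:
        # Rows are independent, so they are computed concurrently.
        A = list(pool.map(row, range(X), [Y] * X))
    # B[x] = sum(A[x]) depends only on row x.
    B = [sum(r) for r in A]
    return A, B

A, B = evaluate(3, 3)
print(A)  # [[0, 0, 4], [0, 2, 0], [4, 0, 8]]
print(B)  # [4, 2, 12]
```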

![Example distributed execution

Code:

A[2] = f(getenv("N"));
A[3] = g(A[2]);

• Evaluate dataflow operations: perform getenv(); submit f (task put); subscribe to A[2]; submit g once A[2] is stored (task put)

• Workers execute tasks: process f (task get) and store A[2]; process g (task get) and store A[3]

• Wozniak et al. Turbine: A distributed-memory dataflow engine for high performance many-task applications. Fundamenta Informaticae 128(3), 2013](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-51-320.jpg)
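The key step above is subscription. Evaluating `A[3] = g(A[2])` does not block; it registers interest in `A[2]` and is released when a worker stores that value. Below is a single-process Python sketch of that mechanism. The `DataStore` class and its `store`/`subscribe` methods are hypothetical and are not Turbine's actual API. Tasks run inline rather than on remote workers, and `f()`/`g()` are stand-ins.

```python
class DataStore:
    def __init__(self):
        self.values = {}
        self.waiting = {}  # key -> callbacks waiting for that key

    def store(self, key, value):
        # Storing a value closes it and releases every subscriber.
        self.values[key] = value
        for callback in self.waiting.pop(key, []):
            callback(value)

    def subscribe(self, key, callback):
        # Fire immediately if the value exists; otherwise wait for store().
        if key in self.values:
            callback(self.values[key])
        else:
            self.waiting.setdefault(key, []).append(callback)

def f(n):
    return n + 1

def g(a):
    return a * 10

ds = DataStore()
env = {"N": "4"}  # stand-in for the process environment
# A[3] = g(A[2]) is evaluated first, but it can only subscribe.
ds.subscribe("A[2]", lambda a2: ds.store("A[3]", g(a2)))
# A[2] = f(getenv("N")); storing A[2] releases the pending g task.
ds.store("A[2]", f(int(env["N"])))
print(ds.values)  # {'A[2]': 5, 'A[3]': 50}
```

The statement order in the script does not matter. The result is the same whichever statement is evaluated first.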

![Swift code in dataflow

x = g();
if (x > 0) {
  n = f(x);
  foreach i in [0:n-1] {
    output(p(i));
  }
}

• Dataflow definitions create nodes in the dataflow graph

• Dataflow assignments create edges

• In typical (DAG) workflow languages, this forms a static graph

• In Swift, the graph can grow dynamically: code fragments are evaluated (conditionally) as a result of dataflow

• In its early implementation, these fragments were just tasks

(Figure: the code above as a dataflow graph; x = g() produces x, which enables the fragment "if (x > 0) { n = f(x); … }", which produces n, which enables "foreach i … { output(p(i)); }")](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-56-320.jpg)
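Because `n` is known only after `f(x)` finishes, and only when `x > 0`, the number of `p(i)` tasks cannot be fixed before the run. The Python sketch below builds the task list as values arrive, in contrast to a static DAG. `g()`, `f()` and `p()` are hypothetical stand-ins.

```python
def g():
    return 3

def f(x):
    return 2 * x

def p(i):
    return i * i

def run(source=g):
    tasks = ["g"]
    x = source()
    outputs = []
    if x > 0:
        # This fragment joins the graph only because x > 0 at run time.
        tasks.append("f")
        n = f(x)
        # n is known only now, so the foreach expands into n tasks.
        for i in range(n):
            tasks.append(f"p({i})")
            outputs.append(p(i))
    return tasks, outputs

tasks, outputs = run()
print(len(tasks), outputs)  # 8 [0, 1, 4, 9, 16, 25]
print(run(lambda: -1))      # (['g'], [])
```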

![LAMMPS parallel tasks

• LAMMPS provides a convenient C++ API

• Easily used by Swift/T parallel tasks

foreach i in [0:20] {

t = 300+i;

sed_command = sprintf("s/_TEMPERATURE_/%i/g", t);

lammps_file_name = sprintf("input-%i.inp", t);

lammps_args = "-i " + lammps_file_name;

file lammps_input<lammps_file_name> = sed(filter, sed_command) =>
  @par=8 lammps(lammps_args);

}

(Figure: tasks of varying sizes packed into one big MPI run; black = compute, blue = message, white = idle)](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-61-320.jpg)
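Each loop iteration creates a temperature-specific input by replacing `_TEMPERATURE_` in a template, which is the same substitution the `sed` command performs. It then launches an 8-process LAMMPS task. The Python sketch covers only the input-generation half. The template text is a hypothetical placeholder because the slide's `filter` file is not shown, and the LAMMPS launch is not modeled.

```python
import re

# Hypothetical template; the real input ("filter" on the slide) is not shown.
TEMPLATE = "variable T equal _TEMPERATURE_\n"

def make_inputs(template, first=0, last=20):
    inputs = {}
    for i in range(first, last + 1):  # Swift ranges like [0:20] are inclusive
        t = 300 + i
        # Same effect as sed "s/_TEMPERATURE_/<t>/g".
        text = re.sub("_TEMPERATURE_", str(t), template)
        name = f"input-{t}.inp"
        inputs[name] = (text, "-i " + name)
    return inputs

inputs = make_inputs(TEMPLATE)
print(len(inputs))  # 21
```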

![Swift for Really Parallel Builds

Apps

app (object_file o) gcc(c_file c, string cflags[]) {

// Example:

// gcc -c -O2 -o f.o f.c

"gcc" "-c" cflags "-o" o c;

}

app (x_file x) ld(object_file o[], string ldflags[]) {

// Example:

// gcc -o f.x f1.o f2.o ...

"gcc" ldflags "-o" x o;

}

app (output_file o) run(x_file x) {

"sh" "-c" x @stdout=o;

}

app (timing_file t) extract(output_file o) {

"tail" "-1" o "|" "cut" "-f" "2" "-d" " " @stdout=t;

}

Swift code

string program_name = "programs/program1.c";

c_file c = input(program_name);

// For each optimization level

foreach O_level in [0:3] {

// Make file names …

// Construct compiler flags

string O_flag = sprintf("-O%i", O_level);

string cflags[] = [ "-fPIC", O_flag ];

object_file o<my_object> = gcc(c, cflags);

object_file objects[] = [ o ];

string ldflags[] = [];

// Link the program

x_file x<my_executable> = ld(objects, ldflags);

// Run the program

output_file out<my_output> = run(x);

// Extract the run time from the program output

timing_file t<my_time> = extract(out);

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-65-320.jpg)
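The loop compiles, links, runs and times the same program at four optimization levels. The four chains are independent, so Swift can run them concurrently. The Python sketch below builds only the command lines each chain would issue. The object-file name follows the `sprintf("programs/program1-O%i.o", O_level)` pattern from the Makefile example. The executable name is a hypothetical choice because the slide elides how it is made.

```python
def build_chain(source, level):
    # Object name follows the slide's pattern; the ".x" name is hypothetical.
    stem = source.rsplit(".", 1)[0] + f"-O{level}"
    obj, exe = stem + ".o", stem + ".x"
    cflags = ["-fPIC", f"-O{level}"]
    ldflags = []
    return [
        ["gcc", "-c", *cflags, "-o", obj, source],  # gcc()
        ["gcc", *ldflags, "-o", exe, obj],          # ld()
        ["sh", "-c", exe],                          # run()
    ]

chains = {level: build_chain("programs/program1.c", level) for level in range(4)}
print(chains[2][0])
# ['gcc', '-c', '-fPIC', '-O2', '-o', 'programs/program1-O2.o', 'programs/program1.c']
```

Within a chain each command depends on the previous one's output. Across chains there are no dependencies, and that is where the parallelism comes from.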

![Abstract, extensible MapReduce in Swift

main {

file d[];

int N = string2int(argv("N"));

// Map phase

foreach i in [0:N-1] {

file a = find_file(i);

d[i] = map_function(a);

}

// Reduce phase

file final <"final.data"> = merge(d, 0, N-1);

}

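(file o) merge(file d[], int start, int stop) {
  if (stop == start) {
    // Base case: a single file, nothing to merge
    o = d[start];
  } else {
    if (stop-start == 1) {
      // Base case: merge pair
      o = merge_pair(d[start], d[stop]);
    } else {
      // Split the range at its midpoint and merge the two recursive results
      int n = stop-start;
      int s = n %/ 2;
      o = merge_pair(merge(d, start, start+s),
                     merge(d, start+s+1, stop));
    }
  }
}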

• User needs to implement map_function() and merge_pair()

• These may be implemented in native code, Python, etc.

• Could add annotations

• Could add additional custom application logic](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-66-320.jpg)
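The reduce phase merges the map outputs as a balanced binary tree, so the independent pairwise merges at each level can run in parallel. The Python sketch below reproduces the recursion with an integer midpoint split and a single-element base case. It also checks that every index is merged exactly once, including odd counts. Here `merge_pair()` simply concatenates lists, standing in for the user-supplied merge.

```python
def merge_pair(a, b):
    # Stand-in for the user's merge_pair(): concatenate two partial results.
    return a + b

def merge(d, start, stop):
    if stop == start:
        return d[start]                        # single element: nothing to merge
    if stop - start == 1:
        return merge_pair(d[start], d[stop])   # base case: merge a pair
    s = (stop - start) // 2                    # integer midpoint offset
    return merge_pair(merge(d, start, start + s), merge(d, start + s + 1, stop))

d = [[i] for i in range(7)]
print(merge(d, 0, len(d) - 1))  # [0, 1, 2, 3, 4, 5, 6]
```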

![CCTW: Swift/T application (C++)

bag<blob> M[];

foreach i in [1:n] {

blob b1 = cctw_input("pznpt.nxs");

blob b2[];

int outputId[];

(outputId, b2) = cctw_transform(i, b1);

foreach b, j in b2 {

int slot = outputId[j];

M[slot] += b;

}}

foreach g in M {

blob b = cctw_merge(g);

cctw_write(b);

}}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-69-320.jpg)
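The CCTW script scatters blobs into `bag<blob> M[]` by slot. Any transform can add to any slot in any order, and each slot is merged once all additions are in. The Python sketch below models that grouping with a `defaultdict` of lists standing in for the bag. `cctw_transform()` and `cctw_merge()` are hypothetical stand-ins. Because a bag is unordered, the merge sorts its inputs before combining them.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def cctw_transform(i):
    # Hypothetical: chunk i yields blobs for slots i % 3 and (i + 1) % 3.
    return [i % 3, (i + 1) % 3], [f"blob{i}a", f"blob{i}b"]

def cctw_merge(blobs):
    # Hypothetical merge; sorting makes it independent of arrival order.
    return "+".join(sorted(blobs))

def run(n):
    M = defaultdict(list)
    with ThreadPoolExecutor() as pool:
        for output_ids, blobs in pool.map(cctw_transform, range(1, n + 1)):
            for slot, b in zip(output_ids, blobs):
                M[slot].append(b)  # M[slot] += b
    # Once every transform has finished, each slot is merged independently.
    return {slot: cctw_merge(group) for slot, group in sorted(M.items())}

print(run(3))
# {0: 'blob2b+blob3a', 1: 'blob1a+blob3b', 2: 'blob1b+blob2a'}
```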

![Swift/T: Makefile example: 1

app (object_file o) gcc(c_file c, string cflags[])

{

// gcc -c -O2 -o f.o f.c

"gcc" "-c" cflags "-o" o c;

}

app (x_file x) ld(object_file o[], string ldflags[])

{

// gcc -o f.x f1.o f2.o ...

"gcc" ldflags "-o" x o;

}

app (output_file o) run(x_file x)

{

"sh" "-c" x @stdout=o;

}

www.ci.uchicago.edu/swift

www.mcs.anl.gov/exm](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-71-320.jpg)

![Swift/T: Makefile example: 2

// For each optimization level

foreach O_level in [0:3]

{

// Construct file names

string my_object = sprintf("programs/program1-O%i.o", O_level);

. . .

string O_flag = sprintf("-O%i", O_level);

string cflags[] = [ "-fPIC", O_flag ];

object_file o<my_object> = gcc(c, cflags);

object_file objects[] = [ o ];

string ldflags[] = [];

x_file x<my_executable> = ld(objects, ldflags);

](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-72-320.jpg)

![Foreach example

type file;

app (file o) simulate_app () {
  simulate stdout=filename(o);
}

foreach i in [1:10] {

string fname=strcat("output/sim_", i, ".out");

file f <single_file_mapper; file=fname>;

f = simulate_app();

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-75-320.jpg)

![Multiple Apps

type file;

app (file o) simulate_app (int time){

simulate "-t" time stdout=filename(o);

}

app (file o) stats_app (file s[]){

stats filenames(s) stdout=filename(o);

}

file sims[];

int time = 5;

int nsims = 10;

foreach i in [1:nsims] {

string fname = strcat("output/sim_",i,".out");

file simout <single_file_mapper; file=fname>;

simout = simulate_app(time); sims[i] = simout;

}

file average <"output/average.out">;

average = stats_app(sims);](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-76-320.jpg)

![Multi stage

type file;

app (file out) genseed_app (int nseeds) {
  genseed "-r" 2000000 "-n" nseeds stdout=@out;
}

app (file out) genbias_app (int bias_range, int nvalues){

genbias "-r" bias_range "-n" nvalues stdout=@out;

}

app (file out, file log) simulation_app (int timesteps, int sim_range, file bias_file, int scale, int sim_count) {
  simulate "-t" timesteps "-r" sim_range "-B" @bias_file "-x" scale "-n" sim_count stdout=@out stderr=@log;
}

app (file out, file log) analyze_app (file s[]){

stats filenames(s) stdout=@out stderr=@log;

}](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-77-320.jpg)

![Multi stage

# Values that shape the run
int nsim = 10;      # number of simulation programs to run
int steps = 1;      # number of timesteps (seconds) per simulation
int range = 100;    # range of the generated random numbers
int values = 10;    # number of values generated per simulation

# Main script and data
tracef("\n*** Script parameters: nsim=%i range=%i num values=%i\n\n", nsim, range, values);

# Dynamically generated bias for simulation ensemble
file seedfile<"output/seed.dat">;
seedfile = genseed_app(1);
int seedval = readData(seedfile);
tracef("Generated seed=%i\n", seedval);

file sims[];](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-78-320.jpg)

![Multi stage

foreach i in [0:nsim-1] {

file biasfile <single_file_mapper; file=strcat("output/bias_",i,".dat")>;
file simout <single_file_mapper; file=strcat("output/sim_",i,".out")>;
file simlog <single_file_mapper; file=strcat("output/sim_",i,".log")>;

biasfile = genbias_app(1000, 20);

(simout,simlog) = simulation_app(steps, range, biasfile, 1000000, values);
sims[i] = simout;

}

file stats_out<"output/average.out">;

file stats_log<"output/average.log">;

(stats_out,stats_log) = analyze_app(sims);](https://image.slidesharecdn.com/nbvtalk-160810054455/85/Swift-A-parallel-scripting-for-applications-at-the-petascale-and-beyond-79-320.jpg)
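The multi-stage script chains two apps per ensemble member (`genbias_app`, then `simulation_app`) and fans all members into a single `analyze_app` call. The members are independent, so each two-step chain can start as soon as a worker is free. The Python model below follows that structure with hypothetical stand-ins. The bias and simulation functions use `random.Random` with a fixed seed per member instead of the real `genbias`/`simulate` programs, and the analysis is a plain average.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def genbias(member, bias_range=1000, nvalues=20):
    # Stand-in for genbias_app: nvalues random biases in [0, bias_range).
    rng = random.Random(member)
    return [rng.randrange(bias_range) for _ in range(nvalues)]

def simulate(member, bias, values=10):
    # Stand-in for simulation_app: values numbers offset by the mean bias.
    rng = random.Random(1000 + member)
    offset = mean(bias)
    return [rng.random() + offset for _ in range(values)]

def member_chain(member):
    # Stage 2 depends only on this member's own stage-1 output.
    return simulate(member, genbias(member))

def ensemble(nsim=10):
    with ThreadPoolExecutor() as pool:
        sims = list(pool.map(member_chain, range(nsim)))
    # Fan-in: the analysis needs every member's output.
    return mean(mean(s) for s in sims), len(sims)

print(ensemble(10)[1])  # 10
```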