Talend Open Studio Introduction - OSSCamp 2014


Talend Open Studio is the most open, innovative and powerful data integration solution on the market today. Talend Open Studio for Data Integration allows you to create ETL (extract, transform, load) jobs.
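As a rough illustration of what an ETL job does, the sketch below walks through the three stages in plain Python. It is not code generated by Talend Open Studio; the sample data, the function names and the in-memory list standing in for a target table are all assumptions made for the example.

```python
import csv
import io

# Hypothetical input: a small delimited file held in memory.
RAW_CSV = "id,name,amount\n1, alice ,10.5\n2,BOB,n/a\n3,carol,7\n"


def extract(text):
    """Extract: read delimited rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim and normalise names, skip rows whose amount is not numeric."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # reject the row instead of loading bad data
        cleaned.append(
            {"id": int(row["id"]), "name": row["name"].strip().title(), "amount": amount}
        )
    return cleaned


def load(rows, target):
    """Load: append the cleaned rows to the target (a list standing in for a table)."""
    target.extend(rows)
    return len(rows)


warehouse = []
loaded = load(transform(extract(RAW_CSV)), warehouse)
print(loaded, warehouse)
```

In a graphical tool each of these functions would roughly correspond to a component on the design canvas, with the rows flowing along the connections between them.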


Recommended

La Business Intelligence

By Khchaf Mouna. A topic at the crossroads of information systems, business functions and executive management. BI is a rapidly evolving field, and approaches to it can differ widely from one company to another. It is a tool for decision support and performance analysis.

Mieux rediger-les-user-stories-bonnes-pratiques-oeildecoach 2019

By Oeil de Coach. A User Story (US) is the right formalism for a functional item seen from the user's point of view, stating the value it delivers to that user.

How do you write good USER STORIES?

Good practices for agile teams (SCRUM / KANBAN / SAFe)

Contents:

> The origins of User Stories

> The 3Cs

> Defining and formalising a User Story

> The INVEST method

> How to split your User Stories

> What level of detail

> What is the life cycle of a User Story

> The Product Owner's role on a User Story

> Mistakes to avoid

PPT presentation available as a free download, created by Martial SEGURA - OEIL DE COACH

www.oeildecoach.com

La BI : Qu’est-ce que c’est ? A quoi ça sert ?

By Jean-Marc Dupont. Introduction to business intelligence

What is it for?

Business intelligence tools and projects

Diabetes Mellitus

By MD Abdul Haleem. This document provides an overview of diabetes mellitus (DM), including the three main types (Type 1, Type 2, and gestational diabetes), signs and symptoms, complications, pathophysiology, oral manifestations, dental management considerations, emergency management, diagnosis, and treatment. DM is caused by either the pancreas not producing enough insulin or cells not responding properly to insulin, resulting in high blood sugar levels. The document compares and contrasts the characteristics of Type 1 and Type 2 DM.

Power Point Presentation on Artificial Intelligence

By Anushka Ghosh. It's a PowerPoint presentation on Artificial Intelligence. I hope you will find this helpful. Thank you.

You can also find my other PPT on Artificial Intelligence. The link is given below:

https://www.slideshare.net/AnushkaGhosh5/ppt-presentation-on-artificial-intelligence

Anushka Ghosh

Republic Act No. 11313 Safe Spaces Act (Bawal Bastos Law).pptx

By maricelabaya1. The document summarizes key aspects of the Safe Spaces Act, which aims to address gender-based sexual harassment. It defines harassment in public spaces, online, and work/educational settings. Acts considered harassment include catcalling, unwanted comments on appearance, stalking, and distributing intimate photos without consent. Those found guilty face penalties like imprisonment or fines. The law also requires employers and educational institutions to disseminate the law, prevent harassment, and address complaints through committees.

Talend Open Studio Data Integration

By Roberto Marchetto. Talend provides data integration and management solutions. It focuses on combining data from different sources into a unified view for users. Talend offers an open source tool called Talend Open Studio that allows users to visually design procedures to extract, transform, and load data between various databases and file types. It also offers features for data quality, storage optimization, master data management, and reporting.

Talend ETL Tutorial | Talend Tutorial For Beginners | Talend Online Training ...

By Edureka! The document discusses Extract, Transform, Load (ETL) and Talend as an ETL tool. It states that ETL provides a one-stop solution for issues such as data scattered across different locations and sources, differing formats, and growing volumes. It describes the three processes of ETL: extract, transform and load. It then discusses Talend as an open-source ETL tool, how Talend Open Studio can manage the ETL process with drag-and-drop functionality, and its strong connectivity and smooth extraction and transformation capabilities.

Intro to Talend Open Studio for Data Integration

By Philip Yurchuk. An overview of Talend Open Studio for Data Integration, along with some tips learned from building production jobs and a list of resources. Feel free to contact me for more information.

Talend Open Studio Fundamentals #1: Workspaces, Jobs, Metadata and Trips & Tr...

By Gabriele Baldassarre. Introduction to Talend Open Studio for Data Integration, focusing on job architecture, metadata, workspaces, connection types and commonly used components. Rich Tips & Tricks sections.

Talend Data Integration Tutorial | Talend Tutorial For Beginners | Talend Onl...

By Edureka! The document provides an overview of Talend Open Studio (TOS), an open source data integration platform. It discusses what Talend is and key TOS features such as being open source and providing a unified platform. It also describes installing and using TOS, which includes dragging and dropping components onto a design window. Context variables and metadata are important concepts for reusing configurations across environments in TOS.

Introduction to Azure Databricks

By James Serra. Databricks is a Software-as-a-Service-like experience (or Spark-as-a-service): a tool for curating and processing massive amounts of data, for developing, training and deploying models on that data, and for managing the whole workflow throughout the project. It is for those who are comfortable with Apache Spark, as it is 100% based on Spark and is extensible with support for Scala, Java, R, and Python alongside Spark SQL, GraphX, Streaming and the Machine Learning Library (MLlib). It has built-in integration with many data sources, has a workflow scheduler, allows for real-time workspace collaboration, and has performance improvements over traditional Apache Spark.

Introduction of ssis

By deepakk073. SSIS is a platform for data integration and workflows that allows users to extract, transform, and load data. It can connect to many different data sources and send data to multiple destinations. SSIS provides functionality for handling errors, monitoring data flows, and restarting packages from failure points. It uses a graphical interface that facilitates transforming data without extensive coding.

Talend Components | tMap, tJoin, tFileList, tInputFileDelimited | Talend Onli...

By Edureka! Talend Training: https://www.edureka.co/talend-for-big-data

This Edureka tutorial on Talend Components demonstrates the usage of a few of the most commonly used components in Talend, such as tMap, tJoin, tFileInputDelimited and tMysqlRow. The video covers the following topics:

1. What is Talend?

2. Talend Component Families

3. Talend File Components

4. Talend Processing Components

5. Talend Database Components

1- Introduction of Azure data factory.pptx

By BRIJESH KUMAR. Azure Data Factory is a cloud-based data integration service that allows users to easily construct extract, transform, load (ETL) and extract, load, transform (ELT) processes without code. It offers job scheduling, security for data in transit, integration with source control for continuous delivery, and scalability for large data volumes. The document demonstrates how to create an Azure Data Factory from the Azure portal.

An Introduction to Talend Integration Cloud

By Talend. Learn more about Talend Integration Cloud - http://www.talend.com/products/integration-cloud

Talend Integration Cloud includes the powerful Talend Studio and new web-based designer tools to maximize your productivity. Speed cloud integration using robust graphical tools and wizards inside Talend Integration Cloud. More than 900 connectors and components simplify development of cloud-to-cloud and hybrid integration flows to deploy as governed integration services. Build simple or complex integration flows inside Talend Studio that connect, cleanse, and transform data. Simply push a button to publish and go live in seconds. Easily de-duplicate and standardize data to increase information accuracy and completeness.

Data Mesh 101

By ChrisFord803185. Data Mesh is a new socio-technical approach to data architecture, first described by Zhamak Dehghani and popularised through a guest blog post on Martin Fowler's site.

Since then, community interest has grown, due to Data Mesh's ability to explain and address the frustrations that many organisations are experiencing as they try to get value from their data. The 2022 publication of Zhamak's book on Data Mesh further provoked conversation, as has the growing number of experience reports from companies that have put Data Mesh into practice.

So what's all the fuss about?

On one hand, Data Mesh is a new approach in the field of big data. On the other hand, Data Mesh is an application of the lessons we have learned from domain-driven design and microservices to a data context.

In this talk, Chris and Pablo will explain how Data Mesh relates to current thinking in software architecture and the historical development of data architecture philosophies. They will outline what benefits Data Mesh brings, what trade-offs it comes with and when organisations should and should not consider adopting it.

Introduction to ETL and Data Integration

By CloverDX (formerly known as CloverETL). This presentation explains the basics of the ETL (Extract-Transform-Load) concept in relation to data solutions such as data warehousing, data migration, or data integration. CloverETL is examined closely as an example of an enterprise ETL tool. It also covers typical phases of data integration projects.

Microsoft Azure Data Factory Hands-On Lab Overview Slides

By Mark Kromer. This document outlines modules for a lab on moving data to Azure using Azure Data Factory. The modules will deploy necessary Azure resources, lift and shift an existing SSIS package to Azure, rebuild ETL processes in ADF, enhance data with cloud services, transform and merge data with ADF and HDInsight, load data into a data warehouse with ADF, schedule ADF pipelines, monitor ADF, and verify loaded data. Technologies used include PowerShell, Azure SQL, Blob Storage, Data Factory, SQL DW, Logic Apps, HDInsight, and Office 365.

Azure datafactory

By Dimko Zhluktenko. Azure Data Factory is a cloud-based data integration service that orchestrates and automates the movement and transformation of data. Key concepts in Azure Data Factory include pipelines, datasets, linked services, and activities. Pipelines contain activities that define actions on data. Datasets represent data structures. Linked services provide connection information. Activities include data movement and transformation. Azure Data Factory supports importing data from various sources and transforming data using technologies like HDInsight Hadoop clusters.
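The relationships between the four concepts named in this summary can be sketched as a small data model. The classes below are purely illustrative and are not the Azure Data Factory SDK or its JSON schema; every class name, field and sample value is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class LinkedService:
    name: str
    connection: str  # connection information (placeholder value, not a real secret)


@dataclass
class Dataset:
    name: str
    linked_service: LinkedService  # where the data lives
    columns: list  # the data structure the dataset represents


@dataclass
class Activity:
    name: str
    kind: str  # "movement" or "transformation"
    source: Dataset
    sink: Dataset


@dataclass
class Pipeline:
    name: str
    activities: list = field(default_factory=list)

    def describe(self):
        """List each activity as 'kind: source -> sink'."""
        return [f"{a.kind}: {a.source.name} -> {a.sink.name}" for a in self.activities]


# Hypothetical example: copy raw orders from blob storage into a SQL staging table.
blob = LinkedService("blob", "placeholder-blob-connection")
sql = LinkedService("sql", "placeholder-sql-connection")
raw = Dataset("raw_orders", blob, ["id", "amount"])
staged = Dataset("staged_orders", sql, ["id", "amount"])
pipeline = Pipeline("daily_orders", [Activity("copy_orders", "movement", raw, staged)])
summary = pipeline.describe()
print(summary)
```

The point of the sketch is only the containment: a pipeline holds activities, each activity reads from and writes to datasets, and each dataset points at a linked service for its connection details.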

Intro to Azure Data Factory v1

By Eric Bragas. An introduction to the concepts and requirements to get started developing data pipelines in Azure Data Factory version 1.

Ebook - The Guide to Master Data Management

By Hazelknight Media & Entertainment Pvt Ltd. Hexaware is a leading global provider of IT and BPO services with leadership positions in banking, financial services, insurance, transportation and logistics. It focuses on delivering business results through technology solutions such as business intelligence and analytics, enterprise applications, independent testing and legacy modernization. Hexaware has over 18 years of experience in providing business technology solutions and offers world class services, technology expertise and skilled human capital.

Manage your ODI Development Cycle – ODTUG Webinar

By Jérôme Françoisse. Oracle Data Integrator 12c is a powerful ELT tool with the ability to target different environments. These may be the traditional Development, Test and Production environments, but there can be many more. Having a good release process is therefore important to ensure ongoing development doesn't impact the code running in production and that we can always go back to a previous version of the code if something goes wrong. We also want to have different developers or teams working concurrently without impacting each other. All of this is now easier than before thanks to the new features introduced in ODI 12.2.1. After looking at different architectures we will review all these new features and see how we can have a robust and efficient development cycle.

Big data architectures and the data lake

By James Serra. The document provides an overview of big data architectures and the data lake concept. It discusses why organizations are adopting data lakes to handle increasing data volumes and varieties. The key aspects covered include:

- Defining top-down and bottom-up approaches to data management

- Explaining what a data lake is and how Hadoop can function as the data lake

- Describing how a modern data warehouse combines features of a traditional data warehouse and data lake

- Discussing how federated querying allows data to be accessed across multiple sources

- Highlighting benefits of implementing big data solutions in the cloud

- Comparing shared-nothing, massively parallel processing (MPP) architectures to symmetric multi-processing (

Building Lakehouses on Delta Lake with SQL Analytics Primer

By Databricks. You’ve heard the marketing buzz, maybe you have been to a workshop and worked with some Spark, Delta, SQL, Python, or R, but you still need some help putting all the pieces together? Join us as we review some common techniques to build a lakehouse using Delta Lake, use SQL Analytics to perform exploratory analysis, and build connectivity for BI applications.

Etl overview training

By Mondy Holten. This document provides an overview of Extract, Transform, Load (ETL) concepts and processes. It discusses how ETL extracts data from various source systems, transforms it to fit operational needs by applying rules and standards, and loads it into a data warehouse or data mart. The document outlines common ETL scenarios, the overall ETL process, testing concepts, and popular ETL tools. It also discusses how ETL processes data from various sources and loads it into data warehouses and data marts to enable business analysis and reporting for business value.

ilide.info-talend-open-studio-for-data-integration-pr_f4a743b84c8b04cbebbf4c7...

By khadijahd2. Talend Open Studio is an open source data integration tool that provides a graphical environment for designing and executing data integration jobs. It generates Java or Perl code behind the scenes. The document discusses Talend Enterprise, which is the commercial version that includes additional features like an administration center, version control, and execution servers. It also describes key concepts in Talend Open Studio like projects, components, connections, and metadata, and shows screenshots of the main interface elements.

Richa_Profile

By Richa Sharma. This document contains a resume summary for Richa Sharma highlighting her 8.5 years of experience in data warehousing, ETL development, and business intelligence. She has expertise in IBM Datastage and Cognos reporting tools. Her experience includes data modeling, ETL development, database administration, performance tuning, and project experience with clients in the retail and telecom industries.


Similar to Talend Open Studio Introduction - OSSCamp 2014 (20)


Resume ratna rao updated

By Ratna Rao yamani. MCA with 9.5 years of IT experience, including 7 years of relevant Informatica experience. I have successfully completed the Informatica certification.

Resume_Ratna Rao updated

By Ratna Rao yamani. Ratna Rao Yamani has over 9 years of experience in IT and 7 years of experience with data warehousing technologies like Informatica Power Center and Informatica MDM. They have extensive experience developing ETL code, working with databases like Oracle and DB2, and performing tasks like requirements gathering, design documentation, testing, and performance tuning for various projects involving data integration and data warehousing.

Talend for big_data_intorduction

By Lakshman Dhullipalla. Talend for big data online training - www.immensedatadimension.com

contact number:+917799122206, email - [email protected]

Ajith_kumar_4.3 Years_Informatica_ETL

By Ajith Kumar Pampatti. The candidate has over 4 years of experience as an ETL developer using Informatica. They have extensive experience developing mappings using transformations like aggregator, lookup, filter and joiner. They have worked on multiple projects in the telecommunications domain for clients like British Telecom, extracting data from various sources and loading it into data warehouses. Their skills include Informatica, Oracle and SQL, and they have experience in requirements analysis, mapping development, testing and support.

Resume

By Nootan Sharma. The document provides a technical summary and experience profile of Nootan Sharma. It summarizes his 8 years of experience in data warehousing and business intelligence projects. It details his expertise in tools like Informatica PowerCenter, Oracle, SQL Server and data quality management. It also lists his past work experience with companies like Capgemini, Birlasoft and Infogain on various BI and data warehousing projects for clients in different sectors.

Informatica_Rajesh-CV 28_03_16

Informatica_Rajesh-CV 28_03_16Rajesh Dheeti This document provides a summary of Rajesh Dheeti's professional experience and qualifications. It summarizes his 4+ years of experience developing ETL processes using Informatica PowerCenter to extract, transform, and load data from sources like Oracle and Teradata. It also lists 5 projects he has worked on involving building ETL mappings and workflows to load data into data warehouses.

Renu_Resume

Renu_Resumerenu lalwani Renu Lalwani has over 3 years of experience as an ETL Developer using Informatica Power Centre 9.0. She currently works for Cognizant in Pune, India developing data warehouses and ETL processes from various sources like Oracle and flat files. Some of her responsibilities include requirements gathering, data extraction, transformation and loading, designing mappings, creating reusable objects, and testing. Previously she worked for Intimetec Vision Soft on another data warehousing project involving Oracle databases. She has a Bachelor's degree in Computer Science and is proficient in technologies like SQL, Java, HTML and version control systems.

Neethu_Abraham

Neethu_AbrahamNeethu Abraham This document provides a summary of Neethu Abraham's skills and experience. She has over 9 years of experience in ETL development, data warehousing, and business intelligence solutions using tools like Informatica and Oracle. She has extensive experience designing and developing ETL processes for healthcare, retail, and hospitality clients. Her roles have included team lead, senior consultant, and she has experience working on projects involving customer data management, data migration, and clinical data warehousing. She is proficient in technologies like Informatica, Oracle, Unix, and has a history of successfully delivering ETL solutions on schedule and within budget.

Oracle Data Integrator

Oracle Data Integrator IT Help Desk Inc Oracle Data Integrator (ODI) is an ETL tool acquired by Oracle in 2006. It provides a graphical interface to build, manage, and maintain data integration processes. ODI can extract, transform, and load data between heterogeneous data sources to support business intelligence, data warehousing, data migrations, and master data management projects. It uses a 4-tier architecture with repositories to store metadata and designs, an ODI Studio for development, runtime agents to execute tasks, and a console for monitoring.

Resume_gmail

Resume_gmailShirisha Pothakanuri (Immediate Joinee) Shirisha Pothakanuri has over 3.9 years of experience as a Talend developer. She has strong expertise in extracting, transforming, and loading data using Talend DI. She has experience developing ETL jobs to load data from various sources like flat files and databases into target systems such as Oracle. Some of her responsibilities include data validation, transformation using Talend components, exception handling, reusable job development, and deployment. She is proficient in Talend, PL/SQL, Oracle SQL, and Unix.

25896027-1-ODI-Architecture.ppt

25896027-1-ODI-Architecture.pptAnamariaFuia Oracle Data Integrator (ODI) is an integration platform that uses a centralized metadata repository to move and transform data across systems. It has a graphical user interface and uses an extract-load-transform approach. The key components are the repository, Designer tool for development, Operator tool for monitoring, and Agents that orchestrate runtime execution across target systems. ODI repositories include a master repository for security and topology and multiple work repositories for development, testing, and production.

Toolboxes for data scientists

Toolboxes for data scientistsSudipto Krishna Dutta Toolboxes are collections of built-in functions that help data scientists perform tasks efficiently. The document discusses several Python toolboxes for data science like NumPy, Pandas, SciPy, and Scikit-Learn. It also covers IDEs like Jupyter Notebook that provide interactive environments for coding and data analysis. Overall, the document presents an overview of the Python toolbox ecosystem and how it enables effective data science work.

MyResume

MyResumeSubramanyam Mallipeddi The document provides a summary of Subramanyam M's professional experience including 4+ years working with ETL tools like IBM Datastage and Ascential Datastage. He has experience designing, developing and testing data warehouse projects involving data extraction, transformation and loading. He also has skills in SQL, Oracle, DB2, Unix and data warehousing techniques. The profile outlines various ETL projects he has worked on involving large datasets for clients like HSBC Bank, Scope International and Bharti Airtel.

Pranabesh Ghosh

Pranabesh Ghosh Pranabesh Ghosh This document contains a professional profile summary for Pranabesh Ghosh. It outlines his work experience as a technology consultant, including strengths like client interfacing and communication skills. It also lists technical skills and certifications. Several work experience summaries are then provided detailing roles on projects for clients like HP, Ahold, Aurora Energy, CVS Caremark, and Franklin Templeton, demonstrating experience leading teams and designing/developing ETL solutions.

Basha_ETL_Developer

Basha_ETL_Developerbasha shaik The document provides a summary of an ETL Developer's skills and experience. The developer has 3 years of experience using IBM InfoSphere Datastage for ETL projects involving data extraction, transformation, and loading. Responsibilities include developing and debugging ETL jobs, testing and tuning performance, implementing changes, and working with databases like Oracle. The developer has worked on risk data warehousing and order tracking projects, developing jobs to move data between systems and load enterprise data warehouses.

M_Amjad_Khan_resume

M_Amjad_Khan_resumeAmjad Khan Mohammad Amjad Khan has over 11 years of experience working as an ETL Developer and BI Analyst. He has extensive experience extracting, transforming, and loading data from various sources like SAP, legacy systems, and file systems into data warehouses using tools like Informatica and Cognos Data Manager. He also has experience writing queries, creating reports and dashboards using Cognos and Business Objects. Some of the key projects he has worked on include data migration projects for Amazon, Vodafone, Friends Life, and KPMG.

Streamline heterogeneous database environment management with Toad Data Studio

Streamline heterogeneous database environment management with Toad Data StudioPrincipled Technologies In five data management use cases across three database platforms, we were able to efficiently complete common management tasks with Toad Data Studio

Conclusion

The ability to manage multiple database platforms from a single management console helps your data engineering teams increase efficiencies by removing the need to navigate between platform-specific tool sets. We found Toad Data Studio did well in our everyday data management use cases and allowed us to efficiently accomplish our test tasks. Using Toad Data Studio could help you streamline development and production efforts, improve data quality, and facilitate better data sharing capabilities in heterogeneous environments.

SPEVO13 - Dev212 - Document Assembly Deep Dive Part 1

SPEVO13 - Dev212 - Document Assembly Deep Dive Part 1John F. Holliday This session explores the key development patterns for building document assembly solutions on the SharePoint platform using Open XML.

Streamline heterogeneous database environment management with Toad Data Studio

Streamline heterogeneous database environment management with Toad Data StudioPrincipled Technologies

Ad

Talend Open Studio Introduction - OSSCamp 2014

- 1. Talend Open Studio. Sat Apr 19, 2014. Brij Bhushan Sharma, Sr. Software Engineer

- 2. Talend Open Studio: What is Talend Open Studio?

- 3. What is Talend Open Studio?
  ● Talend Open Studio is the most open, innovative and powerful data integration solution on the market today.
  ● Talend Open Studio for Data Integration allows you to create ETL (extract, transform, load) jobs.
  ● It is a graphical integrated development environment with an intuitive Eclipse-based interface.
  ● You draw procedures by linking components; each component performs one operation.
  ● It produces fully editable Java (or Perl) code.

- 4. Main features and benefits of the solution:
  - Business modeling
  - Graphical development
  - Drag-and-drop job design
  - Metadata-driven design and execution
  - Real-time debugging
  - Robust execution
  - A unified repository for storing and reusing metadata

- 5. ETL is a common process in data integration:
  - Extract: read data from different data sources (databases, flat files, spreadsheet files, web services, etc.).
  - Transform: convert the data into a form that can be placed in another container (databases, web services, files, etc.). Cleaning, computations, and verifications are also performed at this stage.
  - Load: write the data in the target format.
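The three ETL stages on slide 5 can be sketched in a few lines of plain Python. This is only an illustration of the idea, not code generated by Talend: the CSV content, the column names, and the functions `extract`, `transform`, and `load` are all hypothetical, and an in-memory list stands in for the target table.

```python
import csv
import io

# Hypothetical source data, held in memory so the sketch is self-contained.
SOURCE_CSV = "id,name,amount\n1, alice ,10.5\n2,bob,not-a-number\n3, carol ,7\n"


def extract(text):
    """Extract: read rows from the source (here, CSV text) as dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: clean names, convert amounts, and drop rows that fail verification."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # verification failed: the amount is not numeric
        clean.append(
            {"id": int(row["id"]), "name": row["name"].strip().title(), "amount": amount}
        )
    return clean


def load(rows, target):
    """Load: write the rows to the target (a list standing in for a table)."""
    target.extend(rows)
    return len(rows)


target_table = []
loaded = load(transform(extract(SOURCE_CSV)), target_table)
print(loaded, target_table)
```

Each function plays the role that a single component plays in a Talend Job: it does one operation and hands its output to the next step.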

- 6. Important concepts in Talend Data Integration Studio

- 7. What is a repository? A repository is the storage location Talend Data Integration Studio uses to gather data related to all of the technical items that you use either to describe business models or to design Jobs.

- 8. What is a project? Projects are structured collections of technical items and their associated metadata. All of the Jobs and business models you design are organized in Projects.

- 9. What is a workspace? A workspace is the directory where you store all your project folders. You need one workspace directory per repository connection. Talend lets you connect to a different workspace directory if you do not want to use the default one.

- 10. What is a component? A component is a preconfigured connector used to perform a specific data integration operation, no matter what data sources you are integrating: databases, applications, flat files, web services, etc. Components minimize the amount of hand-coding required to work on data from multiple, heterogeneous sources.

- 11. What is an item? An item is the fundamental technical unit in a project. Items are grouped according to their types, such as Job Design, Business Model, Context, Code, Metadata, etc. One item can include other items. For example, the business models and the Jobs you design are items; the metadata and routines you use inside your Jobs are items as well.
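The relationship between workspace, project, and item described on slides 8 to 11 can be pictured as a small containment model. The sketch below is purely illustrative: the classes `Workspace`, `Project`, and `Item`, the method `items_by_kind`, and all sample names and paths are hypothetical and do not reflect how Talend stores its repository.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Fundamental technical unit of a project; an item may include other items."""
    name: str
    kind: str  # e.g. "Job Design", "Business Model", "Context", "Code", "Metadata"
    children: list = field(default_factory=list)


@dataclass
class Project:
    """Structured collection of items."""
    name: str
    items: list = field(default_factory=list)

    def items_by_kind(self):
        """Group item names by their type, as the repository view does conceptually."""
        grouped = {}
        for item in self.items:
            grouped.setdefault(item.kind, []).append(item.name)
        return grouped


@dataclass
class Workspace:
    """Directory that holds all project folders for one repository connection."""
    directory: str
    projects: dict = field(default_factory=dict)


# Hypothetical example: one Job that uses a metadata item and a routine.
schema = Item("customers_schema", "Metadata")
routine = Item("string_utils", "Code")
job = Item("load_customers", "Job Design", children=[schema, routine])

project = Project("DEMO", items=[job, schema, routine])
workspace = Workspace("/path/to/workspace", projects={"DEMO": project})

grouped = workspace.projects["DEMO"].items_by_kind()
print(grouped)
```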

- 12. Talend Open Studio: User Interface

- 13. What is a repository? A repository is the storage location Talend Data Integration Studio uses to gather data related to all of the technical items that you use either to describe business models or to design Jobs.

- 14. Small demo of a Talend Job

- 15. Source Data

- 16. Lookup Table

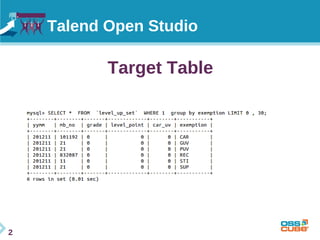

- 17. Target Table

- 18. Have a look at the main job
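The demo slides name a source, a lookup table, and a target, but the transcript does not preserve their contents. As a rough idea of what such a Job does, here is a minimal sketch of a lookup join in plain Python. Every row, column name, and the function `join_with_lookup` is an assumption made for illustration; in Talend this kind of join is typically configured graphically in a `tMap` component rather than written by hand.

```python
# Hypothetical source rows (the real demo data is not in the transcript).
source_rows = [
    {"order_id": 1, "country_code": "IN", "amount": 120.0},
    {"order_id": 2, "country_code": "US", "amount": 80.0},
    {"order_id": 3, "country_code": "XX", "amount": 15.0},
]

# Hypothetical lookup table: country code -> country name.
lookup_table = {"IN": "India", "US": "United States"}


def join_with_lookup(rows, lookup):
    """Enrich each source row from the lookup; rows with no match go to a reject list."""
    matched, rejected = [], []
    for row in rows:
        country = lookup.get(row["country_code"])
        if country is None:
            rejected.append(row)
        else:
            matched.append({**row, "country": country})
    return matched, rejected


target_rows, rejects = join_with_lookup(source_rows, lookup_table)
print(target_rows)
print(rejects)
```

Keeping unmatched rows in a separate reject list, instead of dropping them silently, makes it easier to check why the target holds fewer rows than the source.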

- 21. Thank you! Have a question? Catch me at: [email protected]