Trivadis TechEvent 2016: Apache Kafka - Scalable Message Processing and more! by Guido Schmutz

0 likes399 views

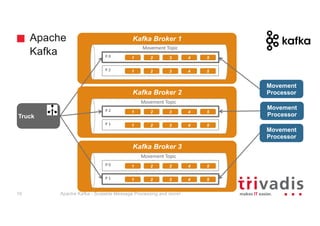

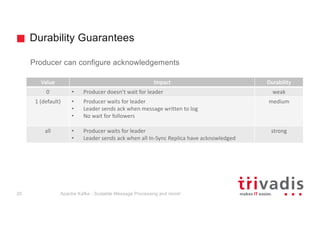

In this presentation Guido Schmutz talks about Apache Kafka, Kafka Core, Kafka Connect, Kafka Streams, Kafka and the "Big Data"/"Fast Data" ecosystems, the Confluent Data Platform and Kafka in architecture.


Recommended

Kafka Security 101 and Real-World Tips

By confluent (Stephane Maarek, DataCumulus), Kafka Summit SF 2018.

Security in Kafka is a cornerstone of true enterprise production-ready deployment: It enables companies to control access to the cluster and limit risks in data corruption and unwanted operations. Understanding how to use security in Kafka and exploiting its capabilities can be complex, especially as the documentation that is available is aimed at people with substantial existing knowledge on the matter.

This talk will be delivered in a “hero journey” fashion, tracing the experience of an engineer with basic understanding of Kafka who is tasked with securing a Kafka cluster. Along the way, I will illustrate the benefits and implications of various mechanisms and provide some real-world tips on how users can simplify security management.

Attendees of this talk will learn about aspects of security in Kafka, including:

- Encryption: what SSL is, what problems it solves and how Kafka leverages it. We’ll discuss encryption in flight vs. encryption at rest.

- Authentication: without authentication, anyone would be able to write to any topic in a Kafka cluster, do anything and remain anonymous. We’ll explore the available authentication mechanisms and their suitability for different types of deployment, including mutual SSL authentication, SASL/GSSAPI, SASL/SCRAM and SASL/PLAIN.

- Authorization: how ACLs work in Kafka, ZooKeeper security (risks and mitigations) and how to manage ACLs at scale.
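The core ACL decision rule is small enough to sketch. As commonly described for Kafka's authorizer, a request is refused if any matching ACL says Deny, allowed if a matching ACL says Allow, and refused otherwise; if a resource has no ACLs at all, the outcome falls back to the broker setting `allow.everyone.if.no.acl.found` (false by default). The plain-Python model below covers only that rule. The `Acl` class, the `"Topic:orders"` resource strings and `is_authorized` are hypothetical, and super users, prefixed resource patterns, host matching and implied operations (such as Read implying Describe) are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:alice", or "User:*" as a wildcard
    operation: str   # e.g. "Read", "Write", or "All" (matches any operation)
    resource: str    # e.g. "Topic:orders" (literal names only in this sketch)
    permission: str  # "Allow" or "Deny"


def is_authorized(acls, principal, operation, resource, allow_if_no_acl=False):
    """Simplified Kafka-style ACL check: a matching Deny always wins."""
    on_resource = [a for a in acls if a.resource == resource]
    if not on_resource:
        # Models allow.everyone.if.no.acl.found (false by default).
        return allow_if_no_acl

    def matches(acl):
        return acl.principal in (principal, "User:*") and acl.operation in (operation, "All")

    matching = [a for a in on_resource if matches(a)]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)
```

Because Deny is checked first, a single wildcard Deny ACL can lock down an operation for everyone, even principals holding a broad Allow.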

Kafka for DBAs

By Gwen (Chen) Shapira. This document discusses Apache Kafka and how it can be used by Oracle DBAs. It begins by explaining how Kafka builds upon the concept of a database redo log by providing a distributed commit log service. It then discusses how Kafka is a publish-subscribe messaging system and can be used to log transactions from any database, application logs, metrics and other system events. Finally, it discusses why schemas are important for Kafka, since it stores messages only as bytes, and how Avro can be used to define and evolve schemas for Kafka messages.
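The redo-log analogy can be made concrete with a minimal in-memory sketch (not Kafka's storage engine): records are appended as raw bytes and addressed by offset, so the schema has to be agreed on outside the log. JSON from the Python standard library is swapped in for Avro purely to keep the example self-contained; `CommitLog`, `serialize` and `deserialize` are hypothetical names.

```python
import json


class CommitLog:
    """Toy append-only log: records are opaque bytes addressed by offset."""

    def __init__(self):
        self._records = []

    def append(self, value):
        # The log never interprets payloads; it only stores bytes.
        if not isinstance(value, (bytes, bytearray)):
            raise TypeError("the log stores bytes only; serialize the record first")
        self._records.append(bytes(value))
        return len(self._records) - 1  # offset assigned to the new record

    def read(self, offset, max_records=10):
        # Readers choose their own starting offset and can re-read at will.
        return self._records[offset:offset + max_records]


# JSON stands in for Avro here: producer and consumer must share the same format.
def serialize(row):
    return json.dumps(row, sort_keys=True).encode("utf-8")


def deserialize(data):
    return json.loads(data.decode("utf-8"))
```

Swapping JSON for a schema-aware format such as Avro changes only `serialize`/`deserialize`; the log itself stays byte-oriented.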

Real time Messages at Scale with Apache Kafka and Couchbase

By Will Gardella. Kafka is a scalable, distributed publish-subscribe messaging system that's used as a data transmission backbone in many data-intensive digital businesses. Couchbase Server is a scalable, flexible document database that's fast, agile, and elastic. Because they both appeal to the same type of customers, Couchbase and Kafka are often used together.

This presentation from a meetup in Mountain View describes Kafka's design and why people use it, Couchbase Server and its uses, and the use cases for both together. Also covered is a description and demo of Couchbase Server writing documents to a Kafka topic and consuming messages from a Kafka topic using the Couchbase Kafka Connector.

Introduction to Apache Kafka and why it matters - Madrid

By Paolo Castagna. This document provides an introduction to Apache Kafka and discusses why it is an important distributed streaming platform. It outlines how Kafka can be used to handle streaming data flows in a reliable and scalable way. It also describes the various Apache Kafka APIs, including Kafka Connect, the Streams API, and KSQL, that allow organizations to integrate Kafka with other systems and build stream processing applications.

Db2 family and v11.1.4.4

By ModusOptimum. The document discusses IBM's Db2 database family and the latest 11.1.4.4 update. It notes that IBM's statements regarding future products are subject to change and should not be relied upon, and that performance will vary by user. The document then summarizes key capabilities and enhancements of the Db2 Common SQL Engine, including investment protection, support for different workloads, consistent technical capabilities, and flexibility of deployment. It also provides an overview of the Db2 11.1 lifecycle and modification levels, and describes how customers can get critical fixes between official updates.

OpenStack + Nano Server + Hyper-V + S2D

By Alessandro Pilotti. Hyper-C is OpenStack on Windows Server 2016, based on Nano Server, Hyper-V, Storage Spaces Direct (S2D) and Open vSwitch for Windows. Bare-metal deployment features Cloudbase Solutions Juju charms and MAAS.

kafka for db as postgres

By PivotalOpenSourceHub. Introduction to Apache Kafka and real-time ETL for DBAs and others who are interested in new ways of working with relational databases.

Galera Cluster for MySQL vs MySQL (NDB) Cluster: A High Level Comparison

By Severalnines. Galera Cluster for MySQL, Percona XtraDB Cluster and MariaDB Cluster (the three “flavours” of Galera Cluster) use the Galera WSREP libraries to handle synchronous replication. MySQL Cluster is the official clustering solution from Oracle, while Galera Cluster for MySQL is slowly but surely establishing itself as the de facto clustering solution in the wider MySQL ecosystem.

In this webinar, we will look at all these alternatives and present an unbiased view on their strengths/weaknesses and the use cases that fit each alternative.

This webinar will cover the following:

MySQL Cluster architecture: strengths and limitations

Galera Architecture: strengths and limitations

Deployment scenarios

Data migration

Read and write workloads (Optimistic/pessimistic locking)

WAN/Geographical replication

Schema changes

Management and monitoring

Visualizing Kafka Security

By DataWorks Summit. The document discusses security models in Apache Kafka. It describes the PLAINTEXT, SSL, SASL_PLAINTEXT and SASL_SSL security models, covering authentication, authorization, and encryption capabilities. It also provides tips on troubleshooting security issues, including enabling debug logs, and lists common errors seen with Kafka security.

Architecting for Scale

By Pooyan Jamshidi. Guest lecture in CMU's Foundations of Software Engineering course: http://www.cs.cmu.edu/~ckaestne/15313/2017/

20150716 introduction to apache spark v3

By Andrey Vykhodtsev. Apache Spark is a fast, general-purpose, and easy-to-use cluster computing system for large-scale data processing. It provides APIs in Scala, Java, Python, and R. Spark is versatile and can run on YARN/HDFS, standalone, or Mesos. It leverages in-memory computing to be faster than Hadoop MapReduce. Resilient Distributed Datasets (RDDs) are Spark's abstraction for distributed data. RDDs support transformations like map and filter, which are lazily evaluated, and actions like count and collect, which trigger computation. Caching RDDs in memory improves the performance of subsequent jobs on the same data.

Hive2.0 sql speed-scale--hadoop-summit-dublin-apr-2016

By alanfgates. This document discusses new features in Apache Hive 2.0, including:

1) Adding procedural SQL capabilities through HPLSQL for writing stored procedures.

2) Improving query performance through LLAP which uses persistent daemons and in-memory caching to enable sub-second queries.

3) Speeding up query planning by using HBase as the metastore instead of a relational database.

4) Enhancements to Hive on Spark such as dynamic partition pruning and vectorized operations.

5) Default use of the cost-based optimizer and continued improvements to statistics collection and estimation.

The Analytic Platform behind IBM’s Watson Data Platform - Big Data Spain 2017

By Luciano Resende. IBM has built a “Data Science Experience” cloud service that exposes notebook services at web scale. Behind this service, various components power the platform, including Jupyter Notebooks, an enterprise gateway that manages the execution of the Jupyter kernels, and an Apache Spark cluster that powers the computation. In this session we will describe our experience and best practices for putting together this analytical platform as a service based on Jupyter Notebooks and Apache Spark, in particular how we built the Enterprise Gateway that enables all the notebooks to share the Spark cluster's computational resources.

The DBA 3.0 Upgrade

By Sean Scott. In 2008, Harald van Breederode and Joel Goodman wrote a white paper titled "Performing an Oracle DBA 1.0 to Oracle DBA 2.0 Upgrade" in which they suggested DBAs needed to add storage and OS skills to remain relevant in a shifting technical landscape. The role of today's DBA has broadened considerably and with that comes a new set of abilities and concepts to be learned and mastered.

DBA 2.0 was written prior to the release of Oracle 11g and 12c, so the Oracle DBA 3.0 upgrade adds cloud and virtualization to the DBA's repertoire. Their inclusion also demands that DBAs better manage the security and compliance challenges that come with hybrid and cloud environments, adapt to continuous deployment cycles, and handle heterogeneous and commingled data stores.

Most significantly, DBA 3.0 signals an emergence of the DBA from a mostly utilitarian and anonymous role to one that is more in the limelight. The growing emphasis on and influence of data and data-driven decision making means that the DBA must be a partner and driving force in the business and not simply a custodian of the data.

Learn what it will take to build or upgrade your skill set to Oracle DBA 3.0, and how to encourage and mentor a new generation of data professionals into the field.

Developing with the Go client for Apache Kafka

By Joe Stein. This document summarizes Joe Stein's go_kafka_client GitHub repository, which provides a Kafka client library written in Go. It describes the motivation for creating a new Go Kafka client, how to use producers and consumers with the library, and distributed processing patterns like mirroring and reactive streams. The client aims to be lightweight with few dependencies while supporting real-world use cases for Kafka producers and high-level consumers.

Matt Franklin - Apache Software (Geekfest)

By W2O Group. The document discusses the potential benefits of container technologies like Docker. It notes that containers offer significantly higher density than virtual machines by avoiding hypervisor overhead. This density improvement can lead to major cost reductions by reducing infrastructure needs. Containers also improve developer efficiency by making development environments portable and disposable. This allows more rapid experimentation and innovation, potentially translating to increased revenue. Technologies like AWS Lambda take the on-demand aspects of containers even further by abstracting compute resources. The document promotes StackEngine as a solution for managing containers at scale in production environments.

Melbourne Chef Meetup: Automating Azure Compliance with InSpec

By Matt Ray. June 26, 2017 presentation. With the move to infrastructure as code and continuous integration/continuous delivery pipelines, it looked like releases would become more frequent and less problematic. Then the auditors showed up and made everyone stop what they were doing. How could this have been prevented? What if the audits were part of the process instead of a roadblock? What sort of visibility do we have into the state of our Azure infrastructure compliance? This talk will provide an overview of Chef's open-source InSpec project (https://inspec.io) and how you can build "Compliance as Code" into your Azure-based infrastructure.

Introduction to Apache Kafka

By Shiao-An Yuan. This document provides an introduction to Apache Kafka. It describes Kafka as a distributed messaging system with features like durability, scalability, publish-subscribe capabilities, and ordering. It discusses key Kafka concepts like producers, consumers, topics, partitions and brokers. It also summarizes use cases for Kafka and how to implement producers and consumers in code. Finally, it briefly outlines related tools like Kafka Connect and Kafka Streams that build upon the Kafka platform.
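Partitions let a topic scale while still keeping order per key. Below is a minimal sketch of key-based partitioning under a simplified model. Kafka's Java producer hashes keyed records with murmur2, but this example swaps in `zlib.crc32` from the standard library, so the partition numbers will not match a real cluster. Only the property that equal keys land in the same partition, and therefore stay in order, carries over. `choose_partition` and `produce` are hypothetical names.

```python
import zlib
from collections import defaultdict


def choose_partition(key, num_partitions):
    # Stand-in hash: Kafka's Java client uses murmur2, not CRC32,
    # so only the "same key -> same partition" property is illustrated.
    return zlib.crc32(key) % num_partitions


def produce(records, num_partitions):
    """Append (key, value) pairs to per-partition lists, forming a toy topic."""
    topic = defaultdict(list)
    for key, value in records:
        topic[choose_partition(key, num_partitions)].append((key, value))
    return topic
```

Ordering is guaranteed only within a partition, which is why events that must stay in sequence (for example, all events for one user) should share a key.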

Scenic City Summit (2021): Real-Time Streaming in any and all clouds, hybrid...

By Timothy Spann. Scenic City Summit, 24 September 2021 (virtual): Real-Time Streaming in Any and All Clouds, Hybrid and Beyond.

Apache Pulsar, Apache NiFi, Apache Flink

StreamNative

Tim Spann

https://sceniccitysummit.com/

Building Event-Driven Systems with Apache Kafka

By Brian Ritchie. Event-driven systems provide simplified integration, easy notifications, inherent scalability and improved fault tolerance. In this session we'll cover the basics of building event-driven systems and then dive into using Apache Kafka for the infrastructure. Kafka is a fast, scalable, fault-tolerant publish/subscribe messaging system developed by LinkedIn. We will cover the architecture of Kafka and demonstrate code that uses this infrastructure, including C#, Spark, ELK and more.

Sample code: https://github.com/dotnetpowered/StreamProcessingSample

FOSDEM 2015 - NoSQL and SQL the best of both worlds

By Andrew Morgan. This document discusses the benefits and limitations of both SQL and NoSQL databases. It argues that while NoSQL databases provide benefits like simple data formats and scalability, relying solely on them can result in data duplication and inconsistent data when relationships are not properly modeled. The document suggests that MySQL Cluster provides a hybrid approach, allowing both SQL queries and NoSQL interfaces while ensuring ACID compliance and referential integrity through its transactional capabilities and handling of foreign keys.

SUSE Webinar - Introduction to SQL Server on Linux

By Travis Wright. Introduction to SQL Server on Linux for SUSE customers, covering the scope of the first release of SQL Server on Linux, the schedule and the Early Adoption Program. The recording is available here:

https://www.brighttalk.com/webcast/11477/243417

Fault Tolerance with Kafka

By Edureka! This document discusses Apache Kafka, an open-source distributed event streaming platform. It provides an overview of Kafka's architecture, how it achieves fault tolerance through replication, and examples of companies that use Kafka, such as LinkedIn for powering its newsfeed and recommendations. The document also outlines a hands-on exercise on fault tolerance with Kafka and includes references for further reading.
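Replication is the heart of this fault tolerance. The sketch below is a deliberately simplified model that assumes a single partition, no network, and no follower lag timeouts. The leader takes writes, followers copy its log, and the high watermark marks records held by every in-sync replica (ISR). When the leader fails, another in-sync replica takes over without losing committed records. `ReplicatedPartition` and its methods are hypothetical. Real Kafka also truncates uncommitted records on failover, shrinks the ISR based on `replica.lag.time.max.ms`, and lets producers wait for the whole ISR with `acks=all`.

```python
class ReplicatedPartition:
    """Toy model of one partition replicated across several brokers."""

    def __init__(self, replicas):
        self.replicas = list(replicas)  # assignment order; first is the preferred leader
        self.logs = {r: [] for r in self.replicas}
        self.leader = self.replicas[0]
        self.isr = set(self.replicas)   # in-sync replicas

    def append(self, value):
        # Producers always write to the current leader.
        self.logs[self.leader].append(value)

    def replicate(self, follower):
        # A follower fetches whatever it is missing from the leader's log.
        leader_log = self.logs[self.leader]
        follower_log = self.logs[follower]
        follower_log.extend(leader_log[len(follower_log):])

    def high_watermark(self):
        # A record counts as committed once every in-sync replica has it.
        return min(len(self.logs[r]) for r in self.isr)

    def fail(self, broker):
        self.isr.discard(broker)
        if not self.isr:
            raise RuntimeError("no in-sync replica left to take over")
        if broker == self.leader:
            # Clean election: the next in-sync replica in assignment order leads.
            self.leader = next(r for r in self.replicas if r in self.isr)
```

Only records below the high watermark are guaranteed to survive a leader change, which is why this model (like Kafka) treats them as the committed part of the log.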

Putting Kafka Into Overdrive

By Todd Palino. Apache Kafka lies at the heart of the largest data pipelines, handling trillions of messages and petabytes of data every day. Learn the right approach for getting the most out of Kafka from the experts at LinkedIn and Confluent. Todd Palino and Gwen Shapira demonstrate how to monitor, optimize, and troubleshoot the performance of your data pipelines (from producer to consumer, development to production) as they explore some of the common problems that Kafka developers and administrators encounter when they take Apache Kafka from a proof of concept to production usage. Too often, systems are overprovisioned and underutilized and still have trouble meeting reasonable performance agreements.

Topics include:

- What latencies and throughputs you should expect from Kafka

- How to select hardware and size components

- What you should be monitoring

- Design patterns and antipatterns for client applications

- How to go about diagnosing performance bottlenecks

- Which configurations to examine and which ones to avoid

Introducing Kafka's Streams API

By confluent. The document introduces Apache Kafka's Streams API for stream processing. Some key points covered include:

- The Streams API allows building stream processing applications without needing a separate cluster, providing an elastic, scalable, and fault-tolerant processing engine.

- It integrates with existing Kafka deployments and supports both stateful and stateless computations on data in Kafka topics.

- Applications built with the Streams API are standard Java applications that run on client machines and leverage Kafka for distributed, parallel processing and fault tolerance via state stores in Kafka.
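The stateful side of the Streams API relies on local state stores whose updates are also written to a changelog topic in Kafka, so a restarted instance can rebuild its state. Here is a plain-Python sketch of that idea, not the Streams API itself. `WordCountProcessor` is hypothetical, and a list stands in for the changelog topic.

```python
from collections import Counter


class WordCountProcessor:
    """Toy stateful processor: a local state store backed by a changelog."""

    def __init__(self, changelog=None):
        self.store = Counter()
        self.changelog = changelog if changelog is not None else []
        # Restore state by replaying the changelog; the latest value per key wins.
        for word, count in self.changelog:
            self.store[word] = count

    def process(self, line):
        for word in line.lower().split():
            self.store[word] += 1
            # Every state update is also appended to the changelog "topic".
            self.changelog.append((word, self.store[word]))
```

Replaying the changelog into a fresh instance reproduces the store exactly, which is the property that lets stream processing instances move between machines without a separate database.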

Developing Real-Time Data Pipelines with Apache Kafka

By Joe Stein. Developing Real-Time Data Pipelines with Apache Kafka (http://kafka.apache.org/) is an introduction for developers about why and how to use Apache Kafka. Apache Kafka is a publish-subscribe messaging system rethought as a distributed commit log. Kafka is designed to allow a single cluster to serve as the central data backbone. A single Kafka broker can handle hundreds of megabytes of reads and writes per second from thousands of clients. It can be elastically and transparently expanded without downtime. Data streams are partitioned and spread over a cluster of machines to allow data streams larger than the capacity of any single machine and to allow clusters of coordinated consumers. Messages are persisted on disk and replicated within the cluster to prevent data loss. Each broker can handle terabytes of messages. For Spring users, Spring Integration Kafka and Spring XD provide integration with Apache Kafka.
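Consumers coordinate by dividing a topic's partitions among the members of a consumer group, so each partition is read by exactly one member of that group. A sketch of one such strategy follows, modelled on the contiguous-range approach of Kafka's `RangeAssignor` for a single topic. `range_assign` is a hypothetical helper, not the client API.

```python
def range_assign(num_partitions, consumers):
    """Split one topic's partitions into contiguous ranges across group members."""
    members = sorted(consumers)
    per_consumer, extra = divmod(num_partitions, len(members))
    assignment, start = {}, 0
    for i, member in enumerate(members):
        # The first `extra` members each take one additional partition.
        count = per_consumer + (1 if i < extra else 0)
        assignment[member] = list(range(start, start + count))
        start += count
    return assignment
```

With more consumers than partitions, the surplus members receive nothing and sit idle, so the partition count caps a group's parallelism.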

How Apache Kafka is transforming Hadoop, Spark and Storm

By Edureka! This document provides an overview of Apache Kafka and how it is transforming Hadoop, Spark, and Storm. It begins by explaining why Kafka is needed, then defines what Kafka is and describes its architecture. Key components of Kafka like topics, producers, consumers and brokers are explained. The document also shows how Kafka can be used with Hadoop, Spark, and Storm for stream processing. It lists some companies that use Kafka and concludes by advertising an Edureka course on Apache Kafka.

Kafka Summit SF 2017 - Kafka and the Polyglot Programmer

By confluent. An overview of the Kafka clients ecosystem:

- APIs: wire-protocol clients, higher-level clients (Streams), REST

- Languages (with simple snippets; full examples on GitHub): the most developed clients, Java and C/C++; the librdkafka wrappers node-rdkafka, Python, Go and C#; why to use wrappers

- Shell-scripted Kafka (e.g. custom health checks) with kafkacat

- Platform gotchas (e.g. SASL on Win32)

Presented at Kafka Summit SF 2017 by Edoardo Comar and Andrew Schofield, IBM

Apache Kafka - Scalable Message-Processing and more !

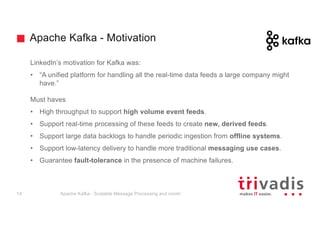

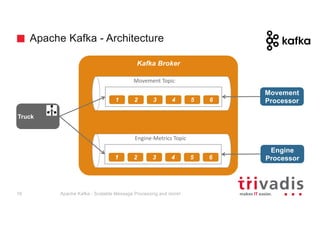

By Guido Schmutz. Independent of the source of data, the integration of event streams into an enterprise architecture is becoming more and more important in the world of sensors, social media streams and the Internet of Things. Events have to be accepted quickly and reliably, and they have to be distributed and analysed, often with many consumers or systems interested in all or part of the events. How can we make sure that all these events are accepted and forwarded in an efficient and reliable way? This is where Apache Kafka comes into play: a distributed, highly scalable messaging broker built for exchanging huge amounts of messages between a source and a target.

This session will start with an introduction to Apache Kafka and present the role of Apache Kafka in a modern data/information architecture and the advantages it brings to the table. Additionally, the Kafka ecosystem will be covered, as well as the integration of Kafka into the Oracle stack, with products such as GoldenGate, Service Bus and Oracle Stream Analytics all able to act as a Kafka consumer or producer.
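In Kafka, "many consumers interested in all or part of the events" is typically handled by consumer groups that each track their own offset in the same log, so adding a consumer does not affect the others. The following is a minimal single-partition sketch under that assumption. `Topic`, `publish` and `poll` are hypothetical names, not the Kafka client API, and a group that wants only part of the events simply filters what it reads.

```python
class Topic:
    """Toy single-partition topic read by independent consumer groups."""

    def __init__(self):
        self.log = []
        self.committed = {}  # consumer group -> next offset to read

    def publish(self, event):
        # Events are appended once, however many groups will read them.
        self.log.append(event)

    def poll(self, group, max_events=100):
        start = self.committed.get(group, 0)
        batch = self.log[start:start + max_events]
        # Commit right after reading (at-most-once style, for simplicity).
        self.committed[group] = start + len(batch)
        return batch
```

For example, an analytics group can read every event while an alerting group reads the same log and keeps only alarms. Neither group's progress changes what the other one sees.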

Apache Kafka - Scalable Message Processing and more!

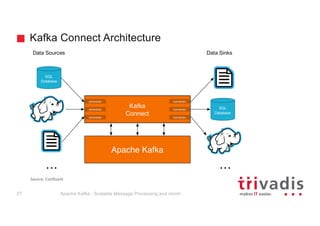

By Guido Schmutz. After a quick overview and introduction of Apache Kafka, this session covers two components that extend the core of Apache Kafka: Kafka Connect and Kafka Streams/KSQL.

Kafka Connect's role is to access data from the outside world and make it available inside Kafka by publishing it to a Kafka topic. Conversely, Kafka Connect is also responsible for transporting information from inside Kafka to the outside world, which could be a database or a file system. Many existing connectors for different source and target systems are available out of the box, provided by the community, by Confluent or by other vendors. You simply configure these connectors and off you go.
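Configuring a connector usually means submitting a small JSON document to the Kafka Connect REST API. This sketch assumes a Connect worker listening on `localhost:8083` (the default REST port) and uses the FileStreamSource demo connector that ships with Apache Kafka. In recent releases that connector may need to be added to the worker's `plugin.path`. The connector name, file path and topic are hypothetical. The function only builds the request; sending it requires a running worker.

```python
import json
import urllib.request

# Assumption: a Connect worker's REST API at its default port on this host.
CONNECT_URL = "http://localhost:8083/connectors"


def file_source_request(name, path, topic):
    """Build (but do not send) the POST that registers a file source connector."""
    payload = {
        "name": name,
        "config": {
            "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
            "tasks.max": "1",
            "file": path,    # each new line in this file becomes one record
            "topic": topic,  # Kafka topic the records are published to
        },
    }
    return urllib.request.Request(
        CONNECT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Against a live worker, `urllib.request.urlopen(file_source_request("demo-file-source", "/tmp/input.txt", "file-lines"))` would submit it. A sink connector is registered the same way, with a sink `connector.class` and `topics` instead of `topic`.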

Kafka Streams is a lightweight component that extends Kafka with stream processing functionality. With it, Kafka can not only transport events and messages reliably and scalably through the Kafka broker but also analyse and process these events in real time. Interestingly, Kafka Streams does not provide its own cluster infrastructure, and it is not meant to run on the Kafka cluster either. The idea is to run Kafka Streams wherever it makes sense: inside a “normal” Java application, inside a web container or on more modern containerized (cloud) infrastructure such as Mesos, Kubernetes or Docker. Kafka Streams has many interesting features, such as reliable state handling, queryable state and much more. KSQL is a streaming engine for Apache Kafka, providing a simple and completely interactive SQL interface for processing data in Kafka.
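Stateful features such as windowed aggregations are where Kafka Streams goes beyond plain transport. The following plain-Python sketch shows only the arithmetic behind a tumbling (fixed, non-overlapping) window count. It is not the Kafka Streams API: `tumbling_window_counts` is a hypothetical helper, and the returned dict merely stands in for a queryable state store.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_ms):
    """Count events per (key, window start) using fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for key, timestamp_ms in events:
        # Align each timestamp down to the start of its window.
        window_start = timestamp_ms - (timestamp_ms % window_ms)
        counts[(key, window_start)] += 1
    return dict(counts)
```

With one-minute windows, events at 1 000 ms and 59 999 ms share the window starting at 0, while an event at 60 000 ms opens the next window. Looking up `(key, window_start)` in the result is the toy equivalent of querying windowed state.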

Ad

More Related Content

What's hot (20)

Visualizing Kafka Security

Visualizing Kafka SecurityDataWorks Summit The document discusses security models in Apache Kafka. It describes the PLAINTEXT, SSL, SASL_PLAINTEXT and SASL_SSL security models, covering authentication, authorization, and encryption capabilities. It also provides tips on troubleshooting security issues, including enabling debug logs, and common errors seen with Kafka security.

Architecting for Scale

Architecting for ScalePooyan Jamshidi Guest lecture talk in the CMU's Foundation of Software Engineering course: http://www.cs.cmu.edu/~ckaestne/15313/2017/

20150716 introduction to apache spark v3

20150716 introduction to apache spark v3 Andrey Vykhodtsev Apache Spark is a fast, general-purpose, and easy-to-use cluster computing system for large-scale data processing. It provides APIs in Scala, Java, Python, and R. Spark is versatile and can run on YARN/HDFS, standalone, or Mesos. It leverages in-memory computing to be faster than Hadoop MapReduce. Resilient Distributed Datasets (RDDs) are Spark's abstraction for distributed data. RDDs support transformations like map and filter, which are lazily evaluated, and actions like count and collect, which trigger computation. Caching RDDs in memory improves performance of subsequent jobs on the same data.

Hive2.0 sql speed-scale--hadoop-summit-dublin-apr-2016

Hive2.0 sql speed-scale--hadoop-summit-dublin-apr-2016alanfgates This document discusses new features in Apache Hive 2.0, including:

1) Adding procedural SQL capabilities through HPLSQL for writing stored procedures.

2) Improving query performance through LLAP which uses persistent daemons and in-memory caching to enable sub-second queries.

3) Speeding up query planning by using HBase as the metastore instead of a relational database.

4) Enhancements to Hive on Spark such as dynamic partition pruning and vectorized operations.

5) Default use of the cost-based optimizer and continued improvements to statistics collection and estimation.

The Analytic Platform behind IBM’s Watson Data Platform - Big Data Spain 2017

The Analytic Platform behind IBM’s Watson Data Platform - Big Data Spain 2017Luciano Resende IBM has built a “Data Science Experience” cloud service that exposes Notebook services at web scale. Behind this service, there are various components that power this platform, including Jupyter Notebooks, an enterprise gateway that manages the execution of the Jupyter Kernels and an Apache Spark cluster that power the computation. In this session we will describe our experience and best practices putting together this analytical platform as a service based on Jupyter Notebooks and Apache Spark, in particular how we built the Enterprise Gateway that enables all the Notebooks to share the Spark cluster computational resources.

The DBA 3.0 Upgrade

The DBA 3.0 UpgradeSean Scott In 2008, Harald van Breederode and Joel Goodman wrote a white paper titled "Performing an Oracle DBA 1.0 to Oracle DBA 2.0 Upgrade" in which they suggested DBAs needed to add storage and OS skills to remain relevant in a shifting technical landscape. The role of today's DBA has broadened considerably and with that comes a new set of abilities and concepts to be learned and mastered.

DBA 2.0 was written prior to the release of Oracle 11g and 12c, so the Oracle DBA 3.0 upgrade adds Cloud and virtualization to the DBAs repertoire. Their inclusion also demands that DBAs be able to better manage security and compliance challenges that come with hybrid and Cloud environments, the ability to adapt to continuous deployment cycles, and heterogenous and comingled data stores.

Most significantly DBA 3.0 signals an emergence of the DBA from a mostly utilitarian and anonymous role to one that is more in the limelight. The growing emphasis and influence of data and data-driven decision making means that the DBA must be a partner and driving force in the business and not simply a custodian of the data.

Learn what it will take to build or upgrade your skill set to Oracle DBA 3.0, and how to encourage and mentor a new generation of data professionals into the field.

Developing with the Go client for Apache Kafka

Developing with the Go client for Apache KafkaJoe Stein This document summarizes Joe Stein's go_kafka_client GitHub repository, which provides a Kafka client library written in Go. It describes the motivation for creating a new Go Kafka client, how to use producers and consumers with the library, and distributed processing patterns like mirroring and reactive streams. The client aims to be lightweight with few dependencies while supporting real-world use cases for Kafka producers and high-level consumers.

Matt Franklin - Apache Software (Geekfest)

Matt Franklin - Apache Software (Geekfest)W2O Group The document discusses the potential benefits of container technologies like Docker. It notes that containers offer significantly higher density than virtual machines by avoiding hypervisor overhead. This density improvement can lead to major cost reductions by reducing infrastructure needs. Containers also improve developer efficiency by making development environments portable and disposable. This allows more rapid experimentation and innovation, potentially translating to increased revenue. Technologies like Amazon Lambda take the on-demand aspects of containers even further by abstracting compute resources. The document promotes StackEngine as a solution for managing containers at scale in production environments.

Melbourne Chef Meetup: Automating Azure Compliance with InSpec

Melbourne Chef Meetup: Automating Azure Compliance with InSpecMatt Ray June 26, 2017 presentation. With the move to infrastructure as code and continuous integration/continuous delivery pipelines, it looked like releases would become more frequent and less problematic. Then the auditors showed up and made everyone stop what they were doing. How could this have been prevented? What if the audits were part of the process instead of a roadblock? What sort of visibility do we have into the state of our Azure infrastructure compliance? This talk will provide an overview of Chef's open-source InSpec project (https://inspec.io) and how you can build "Compliance as Code" into your Azure-based infrastructure.

Introduction to Apache Kafka

Introduction to Apache KafkaShiao-An Yuan This document provides an introduction to Apache Kafka. It describes Kafka as a distributed messaging system with features like durability, scalability, publish-subscribe capabilities, and ordering. It discusses key Kafka concepts like producers, consumers, topics, partitions and brokers. It also summarizes use cases for Kafka and how to implement producers and consumers in code. Finally, it briefly outlines related tools like Kafka Connect and Kafka Streams that build upon the Kafka platform.

Scenic City Summit (2021): Real-Time Streaming in any and all clouds, hybrid...

Scenic City Summit (2021): Real-Time Streaming in any and all clouds, hybrid...Timothy Spann Scenic city summit real-time streaming in any and all clouds, hybrid and beyond

24-September-2021. Scenic City Summit. Virtual. Real-Time Streaming in Any and All Clouds, Hybrid and Beyond

Apache Pulsar, Apache NiFi, Apache Flink

StreamNative

Tim Spann

https://sceniccitysummit.com/

Building Event-Driven Systems with Apache Kafka

Building Event-Driven Systems with Apache KafkaBrian Ritchie Event-driven systems provide simplified integration, easy notifications, inherent scalability and improved fault tolerance. In this session we'll cover the basics of building event driven systems and then dive into utilizing Apache Kafka for the infrastructure. Kafka is a fast, scalable, fault-taulerant publish/subscribe messaging system developed by LinkedIn. We will cover the architecture of Kafka and demonstrate code that utilizes this infrastructure including C#, Spark, ELK and more.

Sample code: https://github.com/dotnetpowered/StreamProcessingSample

FOSDEM 2015 - NoSQL and SQL the best of both worlds

FOSDEM 2015 - NoSQL and SQL the best of both worldsAndrew Morgan This document discusses the benefits and limitations of both SQL and NoSQL databases. It argues that while NoSQL databases provide benefits like simple data formats and scalability, relying solely on them can result in data duplication and inconsistent data when relationships are not properly modeled. The document suggests that MySQL Cluster provides a hybrid approach, allowing both SQL queries and NoSQL interfaces while ensuring ACID compliance and referential integrity through its transactional capabilities and handling of foreign keys.

SUSE Webinar - Introduction to SQL Server on Linux

SUSE Webinar - Introduction to SQL Server on LinuxTravis Wright Introduction to SQL Server on Linux for SUSE customers. Talks about scope of the first release of SQL Server on Linux, schedule, Early Adoption Program. Recording is available here:

https://www.brighttalk.com/webcast/11477/243417

Fault Tolerance with Kafka

Edureka!: This document discusses Apache Kafka, an open-source distributed event streaming platform. It provides an overview of Kafka's architecture, how it achieves fault tolerance through replication, and examples of companies that use Kafka, such as LinkedIn for powering its newsfeed and recommendations. The document also outlines a hands-on exercise on fault tolerance with Kafka and includes references for further reading.

Putting Kafka Into Overdrive

Todd Palino: Apache Kafka lies at the heart of the largest data pipelines, handling trillions of messages and petabytes of data every day. Learn the right approach for getting the most out of Kafka from the experts at LinkedIn and Confluent. Todd Palino and Gwen Shapira demonstrate how to monitor, optimize, and troubleshoot performance of your data pipelines, from producer to consumer and from development to production, as they explore some of the common problems that Kafka developers and administrators encounter when they take Apache Kafka from a proof of concept to production usage. Too often, systems are overprovisioned and underutilized and still have trouble meeting reasonable performance agreements.

Topics include:

- What latencies and throughputs you should expect from Kafka

- How to select hardware and size components

- What you should be monitoring

- Design patterns and antipatterns for client applications

- How to go about diagnosing performance bottlenecks

- Which configurations to examine and which ones to avoid

Introducing Kafka's Streams API

Confluent: The document introduces Apache Kafka's Streams API for stream processing. Key points covered include:

- The Streams API allows building stream processing applications without needing a separate cluster, providing an elastic, scalable, and fault-tolerant processing engine.

- It integrates with existing Kafka deployments and supports both stateful and stateless computations on data in Kafka topics.

- Applications built with the Streams API are standard Java applications that run on client machines and leverage Kafka for distributed, parallel processing, with fault tolerance provided by local state stores that are backed by changelog topics in Kafka.
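To make the stateful, fault-tolerant part concrete, here is a minimal toy model in plain Python. It is not the Kafka Streams API (which is Java): it is a word count whose key-value store records every update in a changelog, plus a fresh store rebuilt by replaying that changelog after a simulated crash. `ChangelogBackedStore`, `count_words`, and the in-memory list standing in for a changelog topic are hypothetical names chosen for illustration.

```python
class ChangelogBackedStore:
    """Toy key-value state store that also appends every write to a changelog."""

    def __init__(self, changelog=None):
        # The list stands in for a Kafka changelog topic (an assumption of this sketch).
        self.changelog = changelog if changelog is not None else []
        self.data = {}
        # Restore local state by replaying the changelog; the last write per key wins.
        for key, value in self.changelog:
            self.data[key] = value

    def get(self, key, default=0):
        return self.data.get(key, default)

    def put(self, key, value):
        self.data[key] = value
        self.changelog.append((key, value))  # every update is also logged


def count_words(lines, store):
    """Stateful word count: read the current count, increment it, write it back."""
    for line in lines:
        for word in line.lower().split():
            store.put(word, store.get(word) + 1)
    return store


changelog = []
store = count_words(["hello kafka", "hello streams"], ChangelogBackedStore(changelog))

# Simulate a crash: the local store is lost, but the changelog survives.
restored = ChangelogBackedStore(changelog)
print(restored.get("hello"))  # 2
```

Because the state can be rebuilt from the log, the processing instance itself can be moved or restarted, which is the property the summary above attributes to the Streams API.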

Developing Real-Time Data Pipelines with Apache Kafka

Joe Stein: Developing Real-Time Data Pipelines with Apache Kafka (http://kafka.apache.org/) is a developer-oriented introduction to why and how to use Apache Kafka. Apache Kafka is a publish-subscribe messaging system rethought as a distributed commit log. Kafka is designed to allow a single cluster to serve as the central data backbone. A single Kafka broker can handle hundreds of megabytes of reads and writes per second from thousands of clients. It can be elastically and transparently expanded without downtime. Data streams are partitioned and spread over a cluster of machines to allow data streams larger than the capability of any single machine and to allow clusters of coordinated consumers. Messages are persisted on disk and replicated within the cluster to prevent data loss. Each broker can handle terabytes of messages. For Spring users, Spring Integration Kafka and Spring XD provide integration with Apache Kafka.
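The partitioned commit-log model described above can be sketched as a single-process toy in plain Python. It assumes in-memory partitions and uses `zlib.crc32` as a stand-in hash (Kafka's default partitioner hashes record keys with murmur2). `PartitionedLog` and `assign` are hypothetical names, and the round-robin assignment is just one simple scheme; Kafka itself offers several assignment strategies.

```python
import zlib


class PartitionedLog:
    """Toy topic: N append-only partitions; a record is addressed by (partition, offset)."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key):
        # Same key -> same partition, which preserves per-key ordering.
        # crc32 is a stand-in; Kafka's default partitioner uses murmur2.
        return zlib.crc32(key.encode("utf-8")) % len(self.partitions)

    def append(self, key, value):
        p = self.partition_for(key)
        self.partitions[p].append((key, value))
        return p, len(self.partitions[p]) - 1  # (partition, offset)

    def read(self, partition, offset, max_records=10):
        # Reading never removes records; each consumer only advances its own offset.
        return self.partitions[partition][offset:offset + max_records]


def assign(num_partitions, consumers):
    """Spread partitions across the consumers of one group (simple round-robin)."""
    assignment = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


log = PartitionedLog(num_partitions=3)
for i in range(6):
    log.append(f"sensor-{i % 2}", f"reading-{i}")

print(assign(3, ["consumer-a", "consumer-b"]))
# {'consumer-a': [0, 2], 'consumer-b': [1]}
```

Because a key always maps to the same partition, the records for `sensor-0` keep their relative order, and adding partitions (and consumers) is what lets a stream grow beyond a single machine.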

How Apache Kafka is transforming Hadoop, Spark and Storm

Edureka!: This document provides an overview of Apache Kafka and how it is transforming Hadoop, Spark, and Storm. It begins by explaining why Kafka is needed, then defines what Kafka is and describes its architecture. Key components of Kafka such as topics, producers, consumers and brokers are explained. The document also shows how Kafka can be used with Hadoop, Spark, and Storm for stream processing. It lists some companies that use Kafka and concludes by advertising an Edureka course on Apache Kafka.

Kafka Summit SF 2017 - Kafka and the Polyglot Programmer

Confluent: An overview of the Kafka clients ecosystem. APIs: wire-protocol clients, higher-level clients (Streams), REST. Languages (with simple snippets; full examples on GitHub): the most developed clients, Java and C/C++; the librdkafka wrappers node-rdkafka, Python, Go and C#; and why to use wrappers. Shell-scripted Kafka (e.g. custom health checks) and kafkacat. Platform gotchas (e.g. SASL on Win32).

Presented at Kafka Summit SF 2017 by Edoardo Comar and Andrew Schofield, IBM

Similar to Trivadis TechEvent 2016 Apache Kafka - Scalable Massage Processing and more! by Guido Schmutz (20)

Apache Kafka - Scalable Message-Processing and more !

Guido Schmutz: Independent of the source of data, the integration of event streams into an enterprise architecture is becoming more and more important in the world of sensors, social media streams and the Internet of Things. Events have to be accepted quickly and reliably, and they have to be distributed and analysed, often with many consumers or systems interested in all or part of the events. How can we make sure that all these events are accepted and forwarded in an efficient and reliable way? This is where Apache Kafka comes into play: a distributed, highly scalable messaging broker built for exchanging huge amounts of messages between a source and a target.

This session will start with an introduction to Apache Kafka and present the role of Apache Kafka in a modern data/information architecture and the advantages it brings to the table. Additionally, the Kafka ecosystem will be covered, as well as the integration of Kafka into the Oracle stack, with products such as GoldenGate, Service Bus and Oracle Stream Analytics all able to act as a Kafka consumer or producer.

Apache Kafka - Scalable Message Processing and more!

Guido Schmutz: After a quick overview and introduction of Apache Kafka, this session covers two components which extend the core of Apache Kafka: Kafka Connect and Kafka Streams/KSQL.

Kafka Connect's role is to access data from the outside world and make it available inside Kafka by publishing it into a Kafka topic. Conversely, Kafka Connect also transports information from inside Kafka to the outside world, which could be a database or a file system. Many connectors for different source and target systems are available out of the box, provided by the community, by Confluent or by other vendors. You simply configure these connectors and off you go.

Kafka Streams is a lightweight component which extends Kafka with stream processing functionality. With it, Kafka can not only reliably and scalably transport events and messages through the Kafka broker but also analyse and process these events in real time. Interestingly, Kafka Streams does not provide its own cluster infrastructure, and it is also not meant to run on a Kafka cluster. The idea is to run Kafka Streams where it makes sense, which can be inside a “normal” Java application, inside a web container or on a more modern containerized (cloud) infrastructure, such as Mesos, Kubernetes or Docker. Kafka Streams has many interesting features, such as reliable state handling, queryable state and much more. KSQL is a streaming engine for Apache Kafka, providing a simple and completely interactive SQL interface for processing data in Kafka.

Apache Kafka - Scalable Message-Processing and more !

Guido Schmutz: Independent of the source of data, the integration of event streams into an enterprise architecture is becoming more and more important in the world of sensors, social media streams and the Internet of Things. Events have to be accepted quickly and reliably, and they have to be distributed and analysed, often with many consumers or systems interested in all or part of the events. How can we make sure that all these events are accepted and forwarded in an efficient and reliable way? This is where Apache Kafka comes into play: a distributed, highly scalable messaging broker built for exchanging huge amounts of messages between a source and a target.

This session will start with an introduction to Apache Kafka and present the role of Apache Kafka in a modern data/information architecture and the advantages it brings to the table. Additionally, the Kafka ecosystem will be covered, as well as the integration of Kafka into the Oracle stack, with products such as GoldenGate, Service Bus and Oracle Stream Analytics all able to act as a Kafka consumer or producer.

Kafka Explainaton

NguyenChiHoangMinh: Kafka is primarily used to build real-time streaming data pipelines and applications that adapt to the data streams. It combines messaging, storage, and stream processing to allow storage and analysis of both historical and real-time data.

[Big Data Spain] Apache Spark Streaming + Kafka 0.10: an Integration Story

Joan Viladrosa Riera: This document provides an overview of Apache Kafka and Apache Spark Streaming and their integration. It discusses what Kafka and Spark Streaming are, how they work, their benefits, and their semantics when used together. It also provides code examples for using the new Kafka integration in Spark 2.0+, including getting metadata, storing offsets in Kafka, and achieving at-most-once, at-least-once, and exactly-once processing semantics. Finally, it shares some insights into how Billy Mobile uses Spark Streaming with Kafka to process large volumes of data.
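The difference between at-most-once and at-least-once comes down to whether the consumer commits its offset before or after processing a record. The toy simulation below, in plain Python with no Spark or Kafka involved, injects a crash between those two steps and then restarts from the last committed offset; `run` and `simulate` are hypothetical helpers. Exactly-once additionally requires storing results and offsets atomically, which this sketch does not model.

```python
def run(records, start, process, commit_first, crash_at=None):
    """Handle records from offset `start`; return the committed offset when stopping.

    commit_first=True  -> commit, then process (at-most-once)
    commit_first=False -> process, then commit (at-least-once)
    crash_at simulates a failure between the two steps for that offset.
    """
    committed = start
    for offset in range(start, len(records)):
        steps = ("commit", "process") if commit_first else ("process", "commit")
        for step in steps:
            if step == "commit":
                committed = offset + 1  # next offset to read after a restart
            else:
                process(records[offset])
            if offset == crash_at and step == steps[0]:
                return committed  # simulated crash between the two steps
    return committed


def simulate(commit_first):
    records = ["a", "b", "c"]
    processed = []
    committed = run(records, 0, processed.append, commit_first, crash_at=1)
    run(records, committed, processed.append, commit_first)  # restart after the crash
    return processed


print(simulate(commit_first=True))   # ['a', 'c']            "b" is lost
print(simulate(commit_first=False))  # ['a', 'b', 'b', 'c']  "b" is processed twice
```

Committing first can lose a record on failure; committing last can repeat one, so downstream writes should then be idempotent.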

Apache Kafka - Scalable Message-Processing and more !

Guido Schmutz: Presentation at Oracle Code Berlin.

Independent of the source of data, the integration of event streams into an enterprise architecture is becoming more and more important in the world of sensors, social media streams and the Internet of Things. Events have to be accepted quickly and reliably, and they have to be distributed and analysed, often with many consumers or systems interested in all or part of the events. How can we make sure that all these events are accepted and forwarded in an efficient and reliable way? This is where Apache Kafka comes into play: a distributed, highly scalable messaging broker built for exchanging huge amounts of messages between a source and a target. This session will start with an introduction to Apache Kafka and present the role of Apache Kafka in a modern data/information architecture and the advantages it brings to the table.

Building streaming data applications using Kafka*[Connect + Core + Streams] b...

Data Con LA. Abstract: Apache Kafka evolved from an enterprise messaging system into a fully distributed streaming data platform for building real-time streaming data pipelines and streaming data applications without the need for other tools/clusters for data ingestion, storage and stream processing. In this talk you will learn about: a quick introduction to Kafka Core, Kafka Connect and Kafka Streams through code examples, key concepts and key features; a reference architecture for building such Kafka-based streaming data applications; and a demo of an end-to-end Kafka-based streaming data application.

Apache Kafka

Joe Stein: Apache Kafka is a distributed publish-subscribe messaging system that was originally created by LinkedIn and contributed to the Apache Software Foundation. It is written in Scala and provides a multi-language API to publish and consume streams of records. Kafka is useful for both log aggregation and real-time messaging due to its high performance, its scalability, and its ability to serve as both a distributed messaging system and a log storage system with a single unified architecture. To use Kafka, one runs ZooKeeper for coordination and Kafka brokers to form a cluster, and then publishes and consumes messages with the producer API and consumer API.

Building Streaming Data Applications Using Apache Kafka

Slim Baltagi: Apache Kafka evolved from an enterprise messaging system into a fully distributed streaming data platform for building real-time streaming data pipelines and streaming data applications without the need for other tools/clusters for data ingestion, storage and stream processing.

In this talk you will learn more about:

1. A quick introduction to Kafka Core, Kafka Connect and Kafka Streams: what they are and why to use them

2. Code and step-by-step instructions to build an end-to-end streaming data application using Apache Kafka

Apache kafka

NexThoughts Technologies: Apache Kafka is a distributed publish-subscribe messaging system that can handle high volumes of data and enable messages to be passed from one endpoint to another. It uses a distributed commit log that allows messages to be persisted on disk for durability. Kafka is fast, scalable, fault-tolerant, and guarantees zero data loss. It is used by companies like LinkedIn, Twitter, and Netflix to handle high volumes of real-time data and streaming workloads.

Kafka Streams for Java enthusiasts

Slim Baltagi: Apache Kafka evolved from an enterprise messaging system into a fully distributed streaming data platform (Kafka Core + Kafka Connect + Kafka Streams) for building streaming data pipelines and streaming data applications.

This talk, which I gave at the Chicago Java Users Group (CJUG) on June 8th 2017, focuses mainly on Kafka Streams, a lightweight open-source Java library for building stream processing applications on top of Kafka using Kafka topics as input/output.

You will learn more about the following:

1. Apache Kafka: a Streaming Data Platform

2. Overview of Kafka Streams: Before Kafka Streams? What is Kafka Streams? Why Kafka Streams? What are Kafka Streams key concepts? Kafka Streams APIs and code examples?

3. Writing, deploying and running your first Kafka Streams application

4. Code and Demo of an end-to-end Kafka-based Streaming Data Application

5. Where to go from here?

Kafka for Scale

Eyal Ben Ivri: An Apache Kafka introduction and design patterns. A big data system that can help even if you're not doing big data.

Apache kafka

Kumar Shivam: This document provides an introduction to Apache Kafka, an open-source distributed event streaming platform. It discusses Kafka's history as a project originally developed by LinkedIn and its use cases such as messaging, activity tracking and stream processing. It describes key Kafka concepts like topics, partitions, offsets, replicas, brokers and producers/consumers. It also gives examples of how companies like Netflix, Uber and LinkedIn use Kafka in their applications and provides a comparison to Apache Spark.

Confluent REST Proxy and Schema Registry (Concepts, Architecture, Features)

Kai Wähner: A high-level introduction to Confluent REST Proxy and Schema Registry (leveraging Apache Avro under the hood), two components of the Apache Kafka open-source ecosystem, covering their concepts, architecture and features.

Cloud lunch and learn real-time streaming in azure

Timothy Spann

Apache Pulsar is an open-source, cloud-native distributed messaging and streaming platform.

Big mountain data and dev conference apache pulsar with mqtt for edge compu...

Timothy Spann: This document provides an overview and summary of Apache Pulsar with MQTT for edge computing. It discusses how Pulsar is an open-source, cloud-native distributed messaging and streaming platform that supports MQTT and other protocols. It also summarizes Pulsar's key capabilities, such as data durability, scalability, geo-replication, and a unified messaging model. The document includes diagrams showcasing Pulsar's publish-subscribe model and different subscription modes. It demonstrates how Pulsar can be used with edge devices via protocols like MQTT and how streams of data from the edge can be processed using connectors, functions and SQL.

Kafka Connect & Kafka Streams/KSQL - the ecosystem around Kafka

Guido Schmutz: After a quick overview and introduction of Apache Kafka, this session covers two components which extend the core of Apache Kafka: Kafka Connect and Kafka Streams/KSQL.

Kafka Connect's role is to access data from the outside world and make it available inside Kafka by publishing it into a Kafka topic. Conversely, Kafka Connect also transports information from inside Kafka to the outside world, which could be a database or a file system. Many connectors for different source and target systems are available out of the box, provided by the community, by Confluent or by other vendors. You simply configure these connectors and off you go.

Kafka Streams is a lightweight component which extends Kafka with stream processing functionality. With it, Kafka can not only reliably and scalably transport events and messages through the Kafka broker but also analyse and process these events in real time. Interestingly, Kafka Streams does not provide its own cluster infrastructure, and it is also not meant to run on a Kafka cluster. The idea is to run Kafka Streams where it makes sense, which can be inside a “normal” Java application, inside a web container or on a more modern containerized (cloud) infrastructure, such as Mesos, Kubernetes or Docker. Kafka Streams has many interesting features, such as reliable state handling, queryable state and much more. KSQL is a streaming engine for Apache Kafka, providing a simple and completely interactive SQL interface for processing data in Kafka.

OSSNA Building Modern Data Streaming Apps

Timothy Spann: OSSNA

Building Modern Data Streaming Apps

https://ossna2023.sched.com/event/1Jt05/virtual-building-modern-data-streaming-apps-with-open-source-timothy-spann-streamnative

Timothy Spann

Cloudera

Principal Developer Advocate

Data in Motion

In my session, I will show you some best practices I have discovered over the last seven years in building data streaming applications, including IoT, CDC, Logs, and more. In my modern approach, we utilize several open-source frameworks to maximize all the best features. We often start with Apache NiFi as the orchestrator of streams flowing into Apache Pulsar. From there, we build streaming ETL with Apache Spark and enhance events with Pulsar Functions for ML and enrichment. We make continuous queries against our topics with Flink SQL. We will stream data into various open-source data stores, including Apache Iceberg, Apache Pinot, and others. We use the best streaming tools for the current applications with the open source stack - FLiPN. https://www.flipn.app/ Updates: This will be in-person with live coding based on feedback from the crowd. This will also include new data stores, new sources, and data relevant to and from the Vancouver area. This will also include updates to the platforms and inclusion of Apache Iceberg, Apache Pinot and some other new tech.

https://github.com/tspannhw/SpeakerProfile Tim Spann is a Principal Developer Advocate for Cloudera. He works with Apache Kafka, Apache Flink, Flink SQL, Apache NiFi, MiniFi, Apache MXNet, TensorFlow, Apache Spark, Big Data, the IoT, machine learning, and deep learning. Tim has over a decade of experience with the IoT, big data, distributed computing, messaging, streaming technologies, and Java programming. Previously, he was a Principal DataFlow Field Engineer at Cloudera, a Senior Solutions Engineer at Hortonworks, a Senior Solutions Architect at AirisData, a Senior Field Engineer at Pivotal and a Team Leader at HPE. He blogs for DZone, where he is the Big Data Zone leader, and runs a popular meetup in Princeton on Big Data, Cloud, IoT, deep learning, streaming, NiFi, the blockchain, and Spark. Tim is a frequent speaker at conferences such as ApacheCon, DeveloperWeek, Pulsar Summit and many more. He holds a BS and MS in computer science.

Timothy J Spann

Cloudera

Principal Developer Advocate

Hightstown, NJ

Website: https://datainmotion.dev/

Apache Kafka - Scalable Message Processing and more!

Guido Schmutz: Apache Kafka is a distributed streaming platform for handling real-time data feeds and deriving value from them. It provides a unified, scalable infrastructure for ingesting, processing, and delivering real-time data feeds. Kafka supports high throughput, fault tolerance, and exactly-once delivery semantics.

Apache kafka

sureshraj43: Data analytics is often described as one of the biggest challenges associated with big data, but even before that step can happen, data must be ingested and made available to enterprise users. That's where Apache Kafka comes in.


More from Trivadis (20)

Azure Days 2019: Azure Chatbot Development for Airline Irregularities (Remco ...

Trivadis: During major irregularities, the service desks of airline companies are heavily overloaded for short periods of time. A chatbot could help out during these peak hours. In this session we show how SWISS International Airlines developed a chatbot for irregularity handling. We shed light on the challenges, such as sensitive customer data and a company starting its journey into the cloud.

Azure Days 2019: Trivadis Azure Foundation – Das Fundament für den ... (Nisan...

Trivadis: Trivadis Azure Foundation: The foundation for the successful use of the Azure Cloud

The Azure Cloud is approaching its 10th anniversary and has arrived in Switzerland. Compared with operating on-premises solutions, the cloud offers a multitude of advantages. In cloud computing, many tasks from the on-premises world are taken over by the provider.

But the freedom that cloud computing offers is very powerful and the best recipe for uncontrolled growth and chaos. Many of our customers are only now realizing which tasks they should already have taken care of five years ago. The Trivadis Azure Foundation is our field-proven approach for making optimal use of all the advantages of the cloud without losing control. In this session you will get an insight into our Azure Foundation methodology, and we will also report on our customers' experiences with Azure.

Azure Days 2019: Business Intelligence auf Azure (Marco Amhof & Yves Mauron)

Trivadis: In this session we present a project in which we built a comprehensive BI system for and in the Azure Cloud using Azure Blob Storage, Azure SQL, Azure Logic Apps and Azure Analysis Services. We report on the challenges, how we solved them, and which learnings and best practices we took away.

Azure Days 2019: Master the Move to Azure (Konrad Brunner)

Trivadis: The Azure Cloud has established itself over the last 10 years and is now available both globally and locally, but the move to the cloud must be well planned. In this talk we share our experiences from various projects with you. We show what you need to pay particular attention to so that your move to the cloud is a success.

Azure Days 2019: Keynote Azure Switzerland – Status Quo und Ausblick (Primo A...

Trivadis: The Azure Cloud has arrived in Switzerland. In this session, Primo Amrein, Cloud Lead at Microsoft Switzerland, sheds light on the introduction of the Azure Cloud in Switzerland and reports on success stories and lessons learned. The session closes with an outlook on the roadmap.

Azure Days 2019: Grösser und Komplexer ist nicht immer besser (Meinrad Weiss)

Trivadis: "Modern" data warehouse/data lake architectures are often bristling with layers and services. Such systems can manage and analyze petabytes of data. But all this comes at a price (complexity, latency, stability), and not every project is happy with this approach.

The talk shows the journey from a technology-infatuated solution to an environment tailored to users' needs. It shows the bright and dark sides of massively parallel systems and aims to sharpen awareness of capturing real customer requirements.

Azure Days 2019: Get Connected with Azure API Management (Gerry Keune & Stefa...

Trivadis: This document summarizes Vinci Energies' use of Azure API Management to securely manage interfaces between their applications. It discusses how Vinci Energies used API Management to abstract, secure, and monitor interfaces for applications involved in their digital transformation, including a mobile time sheet app. It also provides an overview of Azure API Management, including key capabilities around publishing, protecting, and managing APIs, as well as pricing tiers and some missing features.

Azure Days 2019: Infrastructure as Code auf Azure (Jonas Wanninger & Daniel H...

Trivadis: Nowadays it is not only applications that are written in code. Thanks to the cloud, the configuration of infrastructure such as virtual machines or networks is defined in code and delivered automatically. This is called Infrastructure as Code, or IaC for short. There are many tools for Infrastructure as Code on Azure, such as Ansible, Puppet and Chef. Two solutions stand out for their different approaches: the Azure Resource Manager (ARM) templates as the Microsoft-native solution, always up to date but tied to Azure, and, on the other side, Terraform from HashiCorp, based on a descriptive language but with fewer features in the security area. We compared the two technologies for a large customer, and we present the results in this session with live demos.

Azure Days 2019: Wie bringt man eine Data Analytics Plattform in die Cloud? (...

Trivadis: What were the learnings and challenges in providing a modern, Azure-based data analytics platform as a service for a large corporation and integrating it into the enterprise? A project with many interesting aspects, covering Azure BI services such as HDInsight, integration into an enterprise in an "as a service" model, management of costs and internal billing of the services, and much more. This session offers insights into one of our projects that will help you in your next project.

Azure Days 2019: Azure@Helsana: Die Erweiterung von Dynamics CRM mit Azure Po...

Trivadis: Helsana (https://www.helsana.ch), the second-largest health insurer in Switzerland, pursues a modern cloud-first strategy. To run complex marketing campaigns with a high degree of automation, Helsana evaluated various products. Unfortunately, none of them met all the requirements. In close collaboration with Microsoft, the 100% Azure-based application CRM-Analytics (CRMa) was built; it distributes leads and tasks from Dynamics CRM to the regions, branches and customer advisors according to complex distribution rule sets. The results and performance of the campaigns can be analyzed via a data analytics pipeline and visualized in PowerBI. Manual processes for target-group selection were automated, and the time from idea to target-group selection was reduced from 10(!) days to a few minutes. With the introduction of CRMa, Helsana has taken a decisive step toward digitalization and holistic campaign management.

TechEvent 2019: Kundenstory - Kein Angebot, kein Auftrag – Wie Du ein individ...

Trivadis: TechEvent 2019: Customer story - No quote, no order: how to put together an individual quote in 5 seconds; Martin Kortstiege, Ronny Bauer - Trivadis

TechEvent 2019: Oracle Database Appliance M/L - Erfahrungen und Erfolgsmethod...

Trivadis: TechEvent 2019: Oracle Database Appliance M/L - Experiences and proven methods for NON-HA Database Machines; Gabriel Keusen, Martin Berger - Trivadis

TechEvent 2019: Security 101 für Web Entwickler; Roland Krüger - Trivadis

Trivadis: The document discusses the top 10 security risks according to the OWASP organization. It summarizes each risk, provides examples, and recommends how to prevent the risks, such as implementing access controls, validating user input to prevent injection and cross-site scripting attacks, encrypting sensitive data, keeping software updated to prevent vulnerabilities, and properly logging and monitoring systems. The overall message is for web developers to prioritize security, get informed on risks, validate input, and monitor systems.
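For the injection risk mentioned above, one widely used defense, alongside input validation, is the parameterized query. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `users` table and the `find_user` / `find_user_unsafe` helpers are hypothetical, chosen only to contrast string-built SQL with a `?` placeholder.

```python
import sqlite3


def find_user(conn, username):
    # The "?" placeholder passes username as data, never as SQL text,
    # so input such as "' OR '1'='1" cannot change the query's structure.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


def find_user_unsafe(conn, username):
    # Anti-pattern shown for contrast: string formatting splices input into the SQL.
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{username}'").fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

attack = "' OR '1'='1"
print(len(find_user_unsafe(conn, attack)))  # 2: the injected OR matches every row
print(len(find_user(conn, attack)))         # 0: the input is treated as a literal name
```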

TechEvent 2019: Trivadis & Swisscom Partner Angebote (Trivadis and Swisscom partner offerings); Konrad Häfeli, Markus Obrecht, Nicolas Dörig - Trivadis

TechEvent 2019: DBaaS from Swisscom Cloud powered by Trivadis; Konrad Häfeli ...

Trivadis: The document describes a managed Oracle Database as a Service (DBaaS) offered jointly by Swisscom and Trivadis. It summarizes the key components and benefits of the service, including:

1) The service combines the strengths of both partners: Swisscom provides the cloud infrastructure and security, while Trivadis provides database expertise and management.

2) Customers benefit from high availability, security in Swiss data centers, cost savings from outsourced management, and scalability.

3) Automation is a key part of the solution, allowing the service to be scaled through orchestration of virtual infrastructure...

TechEvent 2019: Status of the partnership Trivadis and EDB - Comparing PostgreSQL to Oracle, the best kept secrets; Konrad Häfeli, Jan Karremans - Trivadis

TechEvent 2019: More Agile, More AI, More Cloud! Less Work?!; Oliver Dörr - T...

Trivadis: The document discusses how organizations can become more agile with cloud technologies such as containers and serverless computing. It notes that cloud platforms let development and operations teams collaborate more closely in a DevOps approach, which enables continuous delivery of applications and infrastructure as code. It also stresses the importance of security, compliance, and control when adopting cloud technologies and a cloud-native approach.

TechEvent 2019: Kundenstory - Vom Hauptmann zu Köpenick zum Polizisten 2020 - von klassischen zu agilen Prozessen (Customer story: from the Captain of Köpenick to Police Officer 2020, from classic to agile processes); Martin Moog, Esther Trapp, Norbert Ziebarth - Trivadis

TechEvent 2019: Vom Rechenzentrum in die Oracle Cloud - Übertragungsmethoden (From the data center to the Oracle Cloud: transfer methods); Martin Berger - Trivadis

TechEvent 2019: The sleeping Power of Data; Eberhard Lösch - Trivadis

Trivadis: Eberhard Lösch gave a presentation on the power of data at the Trivadis TechEvent in Regensdorf, Switzerland. He showed how the world's largest companies use data to grow their business. In Switzerland, more than half of companies are focusing on improving data protection, while a third are experimenting with AI. Lösch gave examples of how customer, material, and sensor data can be combined and analyzed to gain insights and optimize business processes. The event also included sessions on using data to develop new business ideas and models, and on using AI and analytics to help children.

Recently uploaded

Semantic Cultivators: The Critical Future Role to Enable AI

artmondano: By 2026, AI agents will consume 10x more enterprise data than humans, but with none of the contextual understanding that prevents catastrophic misinterpretations.

AI and Data Privacy in 2025: Global Trends

InData Labs: In this infographic, we explore how businesses can implement effective governance frameworks for AI data privacy. Understanding this topic is crucial for developing strategies that ensure compliance, safeguard customer trust, and use AI responsibly. Equip yourself with insights that can drive informed decision-making and prepare your organization for the future of data privacy.

This infographic contains:

- AI and data privacy: key findings

- Statistics on AI data privacy in today's world

- Tips on how to overcome data privacy challenges

- Benefits of AI data security investments

Stay up to date on how AI is reshaping privacy standards and what this means for both individuals and organizations.

Role of Data Annotation Services in AI-Powered Manufacturing

Andrew Leo: From predictive maintenance to robotic automation, AI is driving the future of manufacturing. But without high-quality annotated data, even the smartest models fall short.

Discover how data annotation services are powering accuracy, safety, and efficiency in AI-driven manufacturing systems.

Precision in data labeling = Precision on the production floor.

UiPath Community Berlin: Orchestrator API, Swagger, and Test Manager API

UiPathCommunity: Join this UiPath Community Berlin meetup to explore the Orchestrator API, the Swagger interface, and the Test Manager API. Learn how to use these tools to streamline automation, enhance testing, and integrate more efficiently with UiPath. Perfect for developers, testers, and automation enthusiasts!

📕 Agenda

Welcome & Introductions

Orchestrator API Overview

Exploring the Swagger Interface

Test Manager API Highlights

Streamlining Automation & Testing with APIs (Demo)

Q&A and Open Discussion

👉 Join our UiPath Community Berlin chapter: https://community.uipath.com/berlin/

This session streamed live on April 29, 2025, 18:00 CET.

Check out all our upcoming UiPath Community sessions at https://community.uipath.com/events/.

Generative Artificial Intelligence (GenAI) in Business

Dr. Tathagat Varma: My talk for the Indian School of Business (ISB) Emerging Leaders Program, Cohort 9. In this talk, I discussed key issues around the adoption of GenAI in business: benefits, opportunities, and limitations. I also discussed how my research on the Theory of Cognitive Chasms helps address some of these issues.

Andrew Marnell: Transforming Business Strategy Through Data-Driven Insights

Andrew Marnell: With expertise in data architecture, performance tracking, and revenue forecasting, Andrew Marnell plays a vital role in aligning business strategies with data insights. His ability to lead cross-functional teams helps businesses achieve sustainable growth and operational excellence.

Special Meetup Edition - TDX Bengaluru Meetup #52.pptx

shyamraj55: We're bringing the TDX energy to our community with 2 power-packed sessions:

🛠️ Workshop: MuleSoft for Agentforce

Explore the new version of our hands-on workshop featuring the latest Topic Center and API Catalog updates.

📄 Talk: Power Up Document Processing

Dive into smart automation with MuleSoft IDP, NLP, and Einstein AI for intelligent document workflows.

Manifest Pre-Seed Update | A Humanoid OEM Deeptech In France

chb3: The latest updates on Manifest's pre-seed stage progress.

TrustArc Webinar: Consumer Expectations vs Corporate Realities on Data Broker...

TrustArc: Most consumers believe they're making informed decisions about their personal data: adjusting privacy settings, blocking trackers, and opting out where they can. However, our new research reveals that while awareness is high, meaningful action is still lacking. On the corporate side, many organizations report strong policies for managing third-party data and consumer consent, yet fall short when it comes to consistency, accountability, and transparency.

This session will explore the research findings from TrustArc's Privacy Pulse Survey, examining consumer attitudes toward personal data collection and offering practical suggestions for corporate practices around purchasing third-party data.

Attendees will learn:

- Consumer awareness around data brokers and what consumers are doing to limit data collection

- How businesses assess third-party vendors and their consent management operations

- Where business preparedness needs improvement

- What these trends mean for the future of privacy governance and public trust

This discussion is essential for privacy, risk, and compliance professionals who want to ground their strategies in current data and prepare for what’s next in the privacy landscape.

Big Data Analytics Quick Research Guide by Arthur Morgan

Arthur Morgan: This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Quantum Computing Quick Research Guide by Arthur Morgan

Arthur Morgan: This is a Quick Research Guide (QRG).

QRGs include the following:

- A brief, high-level overview of the QRG topic.

- A milestone timeline for the QRG topic.

- Links to various free online resource materials to provide a deeper dive into the QRG topic.

- Conclusion and a recommendation for at least two books available in the SJPL system on the QRG topic.

QRGs planned for the series:

- Artificial Intelligence QRG

- Quantum Computing QRG

- Big Data Analytics QRG

- Spacecraft Guidance, Navigation & Control QRG (coming 2026)

- UK Home Computing & The Birth of ARM QRG (coming 2027)

Any questions or comments?

- Please contact Arthur Morgan at [email protected].

100% human made.

Dev Dives: Automate and orchestrate your processes with UiPath Maestro

UiPathCommunity: This session is designed to equip developers with the skills needed to build mission-critical, end-to-end processes that seamlessly orchestrate agents, people, and robots.

📕 Here's what you can expect:

- Modeling: Build end-to-end processes using BPMN.

- Implementing: Integrate agentic tasks, RPA, APIs, and advanced decisioning into processes.

- Operating: Control process instances with rewind, replay, pause, and stop functions.

- Monitoring: Use dashboards and embedded analytics for real-time insights into process instances.

This webinar is a must-attend for developers looking to enhance their agentic automation skills and orchestrate robust, mission-critical processes.

👨🏫 Speaker:

Andrei Vintila, Principal Product Manager @UiPath

This session streamed live on April 29, 2025, 16:00 CET.

Check out all our upcoming Dev Dives sessions at https://community.uipath.com/dev-dives-automation-developer-2025/.

Massive Power Outage Hits Spain, Portugal, and France: Causes, Impact, and On...

Aqusag Technologies: In late April 2025, a significant portion of Europe, particularly Spain, Portugal, and parts of southern France, experienced widespread, rolling power outages that continue to affect millions of residents, businesses, and infrastructure systems.

AI Changes Everything – Talk at Cardiff Metropolitan University, 29th April 2...

Alan Dix: Talk at the final event of Data Fusion Dynamics: A Collaborative UK-Saudi Initiative in Cybersecurity and Artificial Intelligence, funded by the British Council UK-Saudi Challenge Fund 2024, Cardiff Metropolitan University, 29th April 2025.

https://alandix.com/academic/talks/CMet2025-AI-Changes-Everything/

Is AI just another technology, or does it fundamentally change the way we live and think?

Every technology has a direct impact with micro-ethical consequences, some good, some bad. More profound, however, are the ways in which some technologies reshape the very fabric of society with macro-ethical impacts. The invention of the stirrup revolutionised mounted combat, but as a side effect gave rise to the feudal system, which still shapes politics today. The internal combustion engine offers personal freedom and creates pollution, but has also transformed the nature of urban planning and international trade. When we look at AI, the micro-ethical issues, such as bias, are the most obvious, but the macro-ethical challenges may be greater.