Google TensorFlow Tutorial


TensorFlow Tutorial given by Dr. Chung-Cheng Chiu at Google Brain on Dec. 29, 2015 http://datasci.tw/event/google_deep_learning


![Define Tensors

xa,a

xb,a

xc,a

xa,b

xb,b

xc,b

xa,c

xb,c

xc,c

w

Variable(<initial-value>,

name=<optional-name>)

import tensorflow as tf

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

Variable stores the state of current execution

Others are operations](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-5-320.jpg)
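To make the arithmetic behind `tf.matmul` and `tf.nn.relu` concrete, here is a minimal sketch in plain Python, with no TensorFlow involved. The helpers `matmul` and `relu` are hypothetical stand-ins written for this illustration, and the input `x` is an assumed example value, since the slide does not define `x` yet.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def relu(m):
    """Replace every negative entry with zero."""
    return [[max(0.0, v) for v in row] for row in m]

random.seed(0)
x = [[1.0, 2.0, 3.0]]                      # assumed 1x3 input
w = [[random.gauss(0.0, 1.0) for _ in range(3)]
     for _ in range(3)]                    # 3x3 weights, like random_normal([3, 3])
y = matmul(x, w)                           # shape 1x3
relu_out = relu(y)                         # same shape, no negative entries
print(relu_out)
```

Unlike the TensorFlow version, this computes values immediately; the slides that follow explain why the TensorFlow code does not.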

![TensorFlow

Code so far defines a data flow graph

MatMul

ReLU

Variable

x

import tensorflow as tf

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

Each variable corresponds to a

node in the graph, not the result

Can be confusing at the beginning](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-6-320.jpg)

![TensorFlow

Code so far defines a data flow graph

Needs to specify how we

want to execute the graph MatMul

ReLU

Variable

x

Session

Manage resource for graph execution

import tensorflow as tf

sess = tf.Session()

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

result = sess.run(relu_out)](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-7-320.jpg)

![Graph

Fetch

Retrieve content from a node

import tensorflow as tf

sess = tf.Session()

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

print(sess.run(relu_out))

MatMul

ReLU

Variable

x

Fetch

We have assembled the pipes

Fetch the liquid](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-8-320.jpg)
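The "assemble the pipes, then fetch the liquid" idea can be sketched with a toy deferred-evaluation graph in plain Python. This is not how TensorFlow is implemented; `Node`, `constant`, `add`, `mul`, and `run` are hypothetical names invented for this illustration. The point is only that building an expression yields a node, and nothing is computed until a fetch is requested.

```python
class Node:
    """A graph node: an operation plus the nodes it reads from."""
    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = tuple(inputs)

def constant(value):
    return Node(lambda: value)

def add(a, b):
    return Node(lambda u, v: u + v, (a, b))

def mul(a, b):
    return Node(lambda u, v: u * v, (a, b))

def run(node):
    """Fetch: evaluate the node's inputs first, then the node itself."""
    return node.op(*(run(i) for i in node.inputs))

a = constant(2.0)
b = constant(3.0)
c = add(mul(a, b), b)    # nothing is computed yet; c is a Node, not a number
result = run(c)          # fetching triggers the computation: 2 * 3 + 3
print(type(c).__name__, result)
```

Here `c` plays the role of `relu_out` and `run(c)` the role of `sess.run(relu_out)`.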

![Graph

import tensorflow as tf

sess = tf.Session()

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

sess.run(tf.initialize_all_variables())

print(sess.run(relu_out))

InitializeVariable

Variable is an empty node

MatMul

ReLU

Variable

x

Fetch

Fill in the content of a

Variable node](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-9-320.jpg)

![Graph

import tensorflow as tf

sess = tf.Session()

x = tf.placeholder("float", [1, 3])

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

sess.run(tf.initialize_all_variables())

print(sess.run(relu_out))

Placeholder

How about x?

MatMul

ReLU

Variable

x

Fetch

placeholder(<data type>,

shape=<optional-shape>,

name=<optional-name>)

Its content will be fed](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-10-320.jpg)

![Graph

import numpy as np

import tensorflow as tf

sess = tf.Session()

x = tf.placeholder("float", [1, 3])

w = tf.Variable(tf.random_normal([3, 3]), name='w')

y = tf.matmul(x, w)

relu_out = tf.nn.relu(y)

sess.run(tf.initialize_all_variables())

print(sess.run(relu_out, feed_dict={x: np.array([[1.0, 2.0, 3.0]])}))

Feed

MatMul

ReLU

Variable

x

Fetch

Pump liquid into the pipe

Feed](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-11-320.jpg)
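Feeding can be illustrated with the same kind of toy graph. In this plain-Python sketch (again not TensorFlow's implementation; `Placeholder`, `Op`, and `run` are hypothetical names), a placeholder has no value of its own, and evaluation fails unless a value is supplied through `feed_dict`.

```python
class Placeholder:
    """A named slot with no value until something is fed."""
    def __init__(self, name):
        self.name = name

class Op:
    """An operation node that reads from other nodes."""
    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs

def run(node, feed_dict=None):
    """Evaluate a node, taking placeholder values from feed_dict."""
    feed_dict = feed_dict or {}
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise ValueError("no value fed for placeholder %r" % node.name)
        return feed_dict[node]
    return node.fn(*(run(i, feed_dict) for i in node.inputs))

x = Placeholder("x")
doubled = Op(lambda v: [2.0 * e for e in v], x)

# The graph is fixed; only the fed value changes between runs.
out = run(doubled, feed_dict={x: [1.0, 2.0, 3.0]})
print(out)
```

Calling `run(doubled)` without a feed raises `ValueError` in this sketch, which mirrors the role of the placeholder as a value that must be supplied at run time.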

![Prediction

import numpy as np

import tensorflow as tf

with tf.Session() as sess:

    x = tf.placeholder("float", [1, 3])

    w = tf.Variable(tf.random_normal([3, 3]), name='w')

    relu_out = tf.nn.relu(tf.matmul(x, w))

    softmax = tf.nn.softmax(relu_out)

    sess.run(tf.initialize_all_variables())

    print(sess.run(softmax, feed_dict={x: np.array([[1.0, 2.0, 3.0]])}))

Softmax

Make predictions for n targets that sum to 1](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-13-320.jpg)
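As a worked example of "predictions for n targets that sum to 1", the following plain-Python sketch computes a softmax by hand. The function name mirrors `tf.nn.softmax` but is a stand-in written for this illustration, and subtracting the maximum before exponentiating is a common numerical-stability choice assumed here rather than something stated on the slide.

```python
import math

def softmax(logits):
    """Map a list of scores to positive values that sum to 1."""
    m = max(logits)                            # shift for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([1.0, 2.0, 3.0])
print(probs, sum(probs))
```

Larger scores receive larger probabilities, and the ordering of the inputs is preserved.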

![Prediction Difference

import numpy as np

import tensorflow as tf

with tf.Session() as sess:

    x = tf.placeholder("float", [1, 3])

    w = tf.Variable(tf.random_normal([3, 3]), name='w')

    relu_out = tf.nn.relu(tf.matmul(x, w))

    softmax = tf.nn.softmax(relu_out)

    sess.run(tf.initialize_all_variables())

    answer = np.array([[0.0, 1.0, 0.0]])

    print(answer - sess.run(softmax, feed_dict={x: np.array([[1.0, 2.0, 3.0]])}))](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-14-320.jpg)

![Learn parameters: Loss

Define loss function

Loss function for softmax

softmax_cross_entropy_with_logits(

logits, labels, name=<optional-name>)

labels = tf.placeholder("float", [1, 3])

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(

relu_out, labels, name='xentropy')](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-15-320.jpg)
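The loss named on this slide combines a softmax with a cross-entropy against the labels. A minimal plain-Python sketch of that quantity for a single example follows; the function reuses the TensorFlow name only for readability and is a hand-written stand-in, and the log-sum-exp formulation is an assumption chosen for numerical stability.

```python
import math

def softmax_cross_entropy_with_logits(logits, labels):
    """Cross-entropy between labels and softmax(logits) for one example."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    log_probs = [v - log_sum_exp for v in logits]   # log of the softmax output
    return -sum(t * lp for t, lp in zip(labels, log_probs))

# With all-zero logits the softmax is uniform, so the loss is log(3).
loss = softmax_cross_entropy_with_logits([0.0, 0.0, 0.0], [0.0, 1.0, 0.0])
print(loss)
```

The loss shrinks toward zero as the logit for the labeled class grows relative to the others.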

![Learn parameters: Optimization

Gradient descent

class GradientDescentOptimizer

GradientDescentOptimizer(learning rate)

labels = tf.placeholder("float", [1, 3])

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(

relu_out, labels, name='xentropy')

optimizer = tf.train.GradientDescentOptimizer(0.1)

train_op = optimizer.minimize(cross_entropy)

sess.run(train_op,

feed_dict= {x:np.array([[1.0, 2.0, 3.0]]), labels:answer})

learning rate = 0.1](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-16-320.jpg)

![Iterative update

labels = tf.placeholder("float", [1, 3])

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(

relu_out, labels, name='xentropy')

optimizer = tf.train.GradientDescentOptimizer(0.1)

train_op = optimizer.minimize(cross_entropy)

for step in range(10):

    sess.run(train_op,

             feed_dict={x: np.array([[1.0, 2.0, 3.0]]), labels: answer})

Gradient descent usually needs more than one step

Run multiple times](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-17-320.jpg)
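Why several steps are needed can be seen in a hand-written gradient descent loop. The sketch below is plain Python and makes simplifying assumptions that differ from the slide: the ReLU is dropped so the logits are just `x` times `w`, the weights start at zero instead of random values, and the gradient is written out by hand rather than derived automatically by an optimizer. `loss_and_grad` is a hypothetical helper.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_grad(w, x, labels):
    """Softmax cross-entropy of logits = x . w, and its gradient w.r.t. w."""
    n_in, n_out = len(x), len(labels)
    logits = [sum(x[k] * w[k][j] for k in range(n_in)) for j in range(n_out)]
    probs = softmax(logits)
    loss = -sum(t * math.log(p) for t, p in zip(labels, probs))
    # d(loss)/d(w[k][j]) = x[k] * (probs[j] - labels[j])
    grad = [[x[k] * (probs[j] - labels[j]) for j in range(n_out)]
            for k in range(n_in)]
    return loss, grad

x = [1.0, 2.0, 3.0]
answer = [0.0, 1.0, 0.0]
w = [[0.0] * 3 for _ in range(3)]          # assumed zero initialization
learning_rate = 0.1

losses = []
for step in range(10):
    loss, grad = loss_and_grad(w, x, answer)
    losses.append(loss)
    # One gradient descent update: move against the gradient.
    w = [[w[k][j] - learning_rate * grad[k][j] for j in range(3)]
         for k in range(3)]

print(losses)
```

Each pass lowers the loss a little further, which is the behavior the repeated `sess.run(train_op, ...)` calls rely on.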

![Add parameters for Softmax

…

softmax_w = tf.Variable(tf.random_normal([3, 3]))

logit = tf.matmul(relu_out, softmax_w)

softmax = tf.nn.softmax(logit)

…

cross_entropy = tf.nn.softmax_cross_entropy_with_logits(

logit, labels, name='xentropy')

…

Do not want to use only non-negative input

Softmax layer](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-18-320.jpg)

![Add biases

…

w = tf.Variable(tf.random_normal([3, 3]))

b = tf.Variable(tf.zeros([1, 3]))

relu_out = tf.nn.relu(tf.matmul(x, w) + b)

softmax_w = tf.Variable(tf.random_normal([3, 3]))

softmax_b = tf.Variable(tf.zeros([1, 3]))

logit = tf.matmul(relu_out, softmax_w) + softmax_b

softmax = tf.nn.softmax(logit)

…

Biases initialized to zero](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-19-320.jpg)

![Make it deep

…

x = tf.placeholder("float", [1, 3])

relu_out = x

num_layers = 2

for layer in range(num_layers):

    w = tf.Variable(tf.random_normal([3, 3]))

    b = tf.Variable(tf.zeros([1, 3]))

    relu_out = tf.nn.relu(tf.matmul(relu_out, w) + b)

…

Add layers](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-20-320.jpg)
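The layer-stacking loop can be traced in plain Python to show how each layer's output becomes the next layer's input. This is a sketch only: `dense_relu` is a hypothetical helper, the input vector is an assumed example, and the weights are drawn with Python's `random.gauss` rather than `tf.random_normal`.

```python
import random

def dense_relu(inputs, w, b):
    """One layer: relu(inputs . w + b) for a single input vector."""
    return [max(0.0, sum(inputs[k] * w[k][j] for k in range(len(inputs))) + b[j])
            for j in range(len(b))]

random.seed(0)
num_layers = 2

# Each layer gets its own weights and zero-initialized biases.
layers = []
for _ in range(num_layers):
    w = [[random.gauss(0.0, 1.0) for _ in range(3)] for _ in range(3)]
    b = [0.0, 0.0, 0.0]
    layers.append((w, b))

out = [1.0, 2.0, 3.0]                      # assumed input, plays the role of x
for w, b in layers:
    out = dense_relu(out, w, b)            # output of one layer feeds the next

print(out)
```

Reassigning `out` inside the loop corresponds to reassigning `relu_out` in the slide's code.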

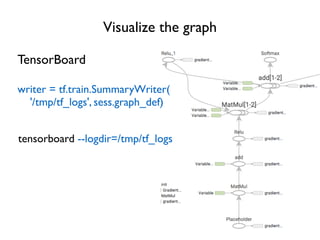

![Improve naming, improve visualization

name_scope(name)

Help specify hierarchical names

…

for layer in range(num_layers):

    with tf.name_scope('relu'):

        w = tf.Variable(tf.random_normal([3, 3]))

        b = tf.Variable(tf.zeros([1, 3]))

        relu_out = tf.nn.relu(tf.matmul(relu_out, w) + b)

…

Will help visualizer to better

understand hierarchical relation

Move to outside the loop?](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-22-320.jpg)

![Visualize states

Add summaries

scalar_summary histogram_summary

merged_summaries = tf.merge_all_summaries()

results = sess.run([train_op, merged_summaries],

feed_dict=…)

writer.add_summary(results[1], step)](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-28-320.jpg)

![LSTM

# Parameters of gates are concatenated into one multiply for efficiency.

c, h = array_ops.split(1, 2, state)

concat = linear([inputs, h], 4 * self._num_units, True)

# i = input_gate, j = new_input, f = forget_gate, o = output_gate

i, j, f, o = array_ops.split(1, 4, concat)

new_c = c * sigmoid(f + self._forget_bias) + sigmoid(i) * tanh(j)

new_h = tanh(new_c) * sigmoid(o)

BasicLSTMCell](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-31-320.jpg)
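The two update equations at the end of the snippet can be checked by hand with a plain-Python sketch. It takes the four gate pre-activations `i`, `j`, `f`, `o` as given lists and does not reproduce the `linear` call that produces them; `lstm_step` and `sigmoid` are hypothetical helpers, and the default `forget_bias` of 1.0 is an assumption for this example.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def lstm_step(c, i, j, f, o, forget_bias=1.0):
    """Apply the cell and hidden state updates elementwise.

    c: previous cell state; i, j, f, o: pre-activations of the input gate,
    new input, forget gate, and output gate.
    """
    new_c = [ck * sigmoid(fk + forget_bias) + sigmoid(ik) * math.tanh(jk)
             for ck, ik, jk, fk in zip(c, i, j, f)]
    new_h = [math.tanh(nc) * sigmoid(ok) for nc, ok in zip(new_c, o)]
    return new_c, new_h

# With zero pre-activations and no forget bias, every gate is 0.5 and the
# candidate input tanh(0) is 0, so the cell state is simply halved.
new_c, new_h = lstm_step([1.0], [0.0], [0.0], [0.0], [0.0], forget_bias=0.0)
print(new_c, new_h)
```

A positive `forget_bias` pushes the forget gate toward 1, so more of the previous cell state is kept.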

![Word2Vec with TensorFlow

# Look up embeddings for inputs.

embeddings = tf.Variable(

tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))

embed = tf.nn.embedding_lookup(embeddings, train_inputs)

# Construct the variables for the NCE loss

nce_weights = tf.Variable(

tf.truncated_normal([vocabulary_size, embedding_size],

stddev=1.0 / math.sqrt(embedding_size)))

nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

# Compute the average NCE loss for the batch.

# tf.nn.nce_loss automatically draws a new sample of the negative labels each

# time we evaluate the loss.

loss = tf.reduce_mean(

tf.nn.nce_loss(nce_weights, nce_biases, embed, train_labels,

num_sampled, vocabulary_size))](https://image.slidesharecdn.com/iispublic-160102031649/85/Google-TensorFlow-Tutorial-32-320.jpg)
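The embedding lookup step amounts to selecting rows of the embedding matrix by word id. A plain-Python sketch of just that step follows; it does not attempt the NCE loss, `embedding_lookup` here is a hand-written stand-in for `tf.nn.embedding_lookup`, and the sizes and ids are assumed example values.

```python
import random

def embedding_lookup(embeddings, ids):
    """Return the embedding row for each id, in order."""
    return [embeddings[i] for i in ids]

random.seed(0)
vocabulary_size, embedding_size = 5, 2     # assumed small sizes for illustration

# One row per word, initialized uniformly in [-1, 1] as on the slide.
embeddings = [[random.uniform(-1.0, 1.0) for _ in range(embedding_size)]
              for _ in range(vocabulary_size)]

train_inputs = [3, 0, 3]                   # assumed batch of word ids
embed = embedding_lookup(embeddings, train_inputs)
print(embed)
```

Repeated ids return the same row, so every occurrence of a word shares one learned vector.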


More Related Content

What's hot (20)

Tensor board

Tensor boardSung Kim The document describes how to use TensorBoard, TensorFlow's visualization tool. It outlines 5 steps: 1) annotate nodes in the TensorFlow graph to visualize, 2) merge summaries, 3) create a writer, 4) run the merged summary and write it, 5) launch TensorBoard pointing to the log directory. TensorBoard can visualize the TensorFlow graph, plot metrics over time, and show additional data like histograms and scalars.

Gentlest Introduction to Tensorflow - Part 2

Gentlest Introduction to Tensorflow - Part 2Khor SoonHin Video: https://youtu.be/Trc52FvMLEg

Article: https://medium.com/@khor/gentlest-introduction-to-tensorflow-part-2-ed2a0a7a624f

Code: https://github.com/nethsix/gentle_tensorflow

Continuing from Part 1 where we used Tensorflow to perform linear regression for a model with single feature, here we:

* Use Tensorboard to visualize linear regression variables and the Tensorflow network graph

* Perform stochastic/mini-batch/batch gradient descent

Machine Learning - Introduction to Tensorflow

Machine Learning - Introduction to TensorflowAndrew Ferlitsch Abstract: This PDSG workshop introduces basic concepts on TensorFlow. The course covers fundamentals. Concepts covered are Vectors/Matrices/Vectors, Design&Run, Constants, Operations, Placeholders, Bindings, Operators, Loss Function and Training.

Level: Fundamental

Requirements: Some basic programming knowledge is preferred. No prior statistics background is required.

30 分鐘學會實作 Python Feature Selection

30 分鐘學會實作 Python Feature SelectionJames Huang This document provides a summary of a 30-minute presentation on feature selection in Python. The presentation covered several common feature selection techniques in Python like LASSO, random forests, and PCA. Code examples were provided to demonstrate how to perform feature selection on the Iris dataset using these techniques in scikit-learn. Dimensionality reduction with PCA and word embeddings with Gensim were also briefly discussed. The presentation aimed to provide practical code examples to do feature selection without explanations of underlying mathematics or theory.

H2 o berkeleydltf

H2 o berkeleydltfOswald Campesato A fast-paced introduction to Deep Learning concepts, such as activation functions, cost functions, back propagation, and then a quick dive into CNNs, followed by a Keras code sample for defining a CNN. Basic knowledge of vectors, matrices, and derivatives is helpful in order to derive the maximum benefit from this session. Then we'll see a short introduction to TensorFlow 1.x and some insights into TF 2 that will be released some time this year.

Introduction to TensorFlow 2

Introduction to TensorFlow 2Oswald Campesato A fast-paced introduction to TensorFlow 2 about some important new features (such as generators and the @tf.function decorator) and TF 1.x functionality that's been removed from TF 2 (yes, tf.Session() has retired).

Concise code samples are presented to illustrate how to use new features of TensorFlow 2. You'll also get a quick introduction to lazy operators (if you know FRP this will be super easy), along with a code comparison between TF 1.x/iterators with tf.data.Dataset and TF 2/generators with tf.data.Dataset.

Finally, we'll look at some tf.keras code samples that are based on TensorFlow 2. Although familiarity with TF 1.x is helpful, newcomers with an avid interest in learning about TensorFlow 2 can benefit from this session.

Working with tf.data (TF 2)

Working with tf.data (TF 2)Oswald Campesato This session for beginners introduces tf.data APIs for creating data pipelines by combining various "lazy operators" in tf.data, such as filter(), map(), batch(), zip(), flatmap(), take(), and so forth.

Familiarity with method chaining and TF2 is helpful (but not required). If you are comfortable with FRP, the code samples in this session will be very familiar to you.

Introduction to Deep Learning, Keras, and Tensorflow

Introduction to Deep Learning, Keras, and TensorflowOswald Campesato A fast-paced introduction to Deep Learning concepts, such as activation functions, cost functions, back propagation, and then a quick dive into CNNs. Basic knowledge of vectors, matrices, and derivatives is helpful in order to derive the maximum benefit from this session. Then we'll see how to create a Convolutional Neural Network in Keras, followed by a quick introduction to TensorFlow and TensorBoard.

Introduction to TensorFlow 2

Oswald Campesato: A fast-paced introduction to TensorFlow 2 about some important new features (such as generators and the @tf.function decorator) and TF 1.x functionality that's been removed from TF 2 (yes, tf.Session() has retired).

Some concise code samples are presented to illustrate how to use new features of TensorFlow 2.

Introduction to TensorFlow 2 and Keras

Oswald Campesato: This document provides an overview and introduction to TensorFlow 2. It discusses major changes from TensorFlow 1.x like eager execution and the tf.function decorator. It covers working with tensors, arrays, datasets, and loops in TensorFlow 2. It also demonstrates common operations like arithmetic, reshaping and normalization. Finally, it briefly introduces working with Keras and neural networks in TensorFlow 2.

TensorFlow in Your Browser

Oswald Campesato: An introductory presentation covered key concepts in deep learning including neural networks, activation functions, cost functions, and optimization methods. Popular deep learning frameworks TensorFlow and tensorflow.js were discussed. Common deep learning architectures like convolutional neural networks and generative adversarial networks were explained. Examples and code snippets in Python demonstrated fundamental deep learning concepts.

NTU ML TENSORFLOW

Mark Chang: This document provides an overview of TensorFlow and how to implement machine learning models using TensorFlow. It discusses:

1) How to install TensorFlow either directly or within a virtual environment.

2) The key concepts of TensorFlow including computational graphs, sessions, placeholders, variables and how they are used to define and run computations.

3) An example one-layer perceptron model for MNIST image classification to demonstrate these concepts in action.

Python book

Victor Rabinovich: This book is intended for education and fun. Python is a text-based programming language well suited to children aged 10 and older. The standard Python library includes a module called turtle, a popular way to introduce programming to kids, which lets children create pictures and shapes on a virtual canvas. With the turtle library, you can create animation projects using images taken from the internet, scaled down, saved as GIF files, and loaded into the projects. The book includes 19 basic lessons with examples that introduce Python code through the turtle library, suitable for school students aged 10 and up. It also has many projects that show how to make different animations with turtle graphics: games and applications to math, physics, and science.

TensorFlow Deep Learning Quick-Start Class: Computer Vision Applications

Mark Chang: This document discusses computer vision applications using TensorFlow for deep learning. It introduces computer vision and convolutional neural networks. It then demonstrates how to build and train a CNN for MNIST handwritten digit recognition using TensorFlow. Finally, it shows how to load and run the pre-trained Google Inception model for image classification.

Intro to Python (High School) Unit #3

Jay Coskey: This document provides an introduction to GUI programming using Tkinter and turtle graphics in Python. It discusses how turtle programs use Tkinter to create windows and display graphics. Tkinter is a Python package for building graphical user interfaces based on the Tk widget toolkit. Several examples are provided on how to use turtle graphics to draw shapes, add color and interactivity using keyboard and mouse inputs. GUI programming with Tkinter allows creating more complex programs and games beyond what can be done with turtle graphics alone.

Deep Learning and TensorFlow

Oswald Campesato: A fast-paced introduction to Deep Learning concepts, such as activation functions, cost functions, back propagation, and then a quick dive into CNNs. Basic knowledge of vectors, matrices, and derivatives is helpful in order to derive the maximum benefit from this session. Then we'll see a short introduction to TensorFlow and TensorBoard.

Dive Into PyTorch

Illarion Khlestov: Distilled PyTorch tutorial. Also in text at my blog - https://towardsdatascience.com/pytorch-tutorial-distilled-95ce8781a89c

About RNN

Young Oh Jeong: RNNs are neural networks that can handle sequence data by incorporating a time component. They learn from past sequence data to predict future states in new sequence data. The document discusses RNN architecture, which includes an input layer, hidden layer, and output layer. The hidden layer receives input from the current time step and previous hidden state. Backpropagation Through Time is used to train RNNs by propagating error terms back in time. The document provides an example implementation of an RNN for time series prediction using TensorFlow and Keras.

About RNN

Young Oh Jeong: RNNs are neural networks that can handle sequence data by incorporating a time component. They learn from past sequence data to predict future states in new sequence data. The document discusses RNN architecture, which uses a hidden layer that receives both the current input and the previous hidden state. It also covers backpropagation through time (BPTT) for training RNNs on sequence data. Examples are provided to implement an RNN from scratch using TensorFlow and Keras to predict a noisy sine wave time series.

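NTC TensorFlow Deep Learning Quick-Start Class, Part 1: Machine Learning

NTC.im (Notch Training Center): Course content

This course aims to build a basic understanding of deep learning and of basic TensorFlow operations. It emphasizes hands-on, experiential teaching: participants piece together simple programs from TensorFlow API calls to run the whole deep learning process, verifying along the way what the course teaches.

Course outline

Machine learning

- Introduction to machine learning
- Introduction to TensorFlow
- Single-layer perceptron exercise

Deep learning

- Introduction to deep learning
- TensorFlow and deep learning
- Multilayer perceptron exercise

Computer vision applications

- Introduction to computer vision
- Convolutional neural networks
- Image recognition exercise

Natural language processing applications

- Introduction to natural language processing
- word2vec neural network
- Semantic computation exercise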

http://www.hcinnovation.tw/tc/index.php/k2-blog/item/66-tensorflow

Viewers also liked (20)

Introduction to TensorFlow

Matthias Feys: TensorFlow is an open source software library for machine learning developed by Google. It provides primitives for defining functions on tensors and automatically computing their derivatives. TensorFlow represents computations as data flow graphs with nodes representing operations and edges representing tensors. It is widely used for neural networks and deep learning tasks like image classification, language processing, and speech recognition. TensorFlow is portable, scalable, and has a large community and support for deployment compared to other frameworks. It works by constructing a computational graph during modeling, and then executing operations by pushing data through the graph.

Large Scale Deep Learning with TensorFlow

Jen Aman: Large-scale deep learning with TensorFlow allows storing and performing computation on large datasets to develop computer systems that can understand data. Deep learning models like neural networks are loosely based on what is known about the brain and become more powerful with more data, larger models, and more computation. At Google, deep learning is being applied across many products and areas, from speech recognition to image understanding to machine translation. TensorFlow provides an open-source software library for machine learning that has been widely adopted both internally at Google and externally.

[Event Series] Understanding Generative Adversarial Networks in One Day

台灣資料科學年會 (Taiwan Data Science Conference): Generative adversarial networks (GANs) are clearly the next hot topic in deep learning. Yann LeCun has called them "the most interesting idea in the last 10 years in ML" and "the coolest thing since sliced bread". What problem do GANs solve? In machine learning, solutions to the two tasks of regression and classification are by now familiar, but getting a machine to go further and create complex structured objects (such as images and sentences) remains a major challenge. With GANs, machines can already draw convincingly realistic human faces, draw an image that matches a text description, and even draw anime character portraits (the anime portrait on the left was generated by a machine). This course aims to introduce GANs, a technique at the leading edge of deep learning.

[Event Series] Python Web Scraping in Practice

台灣資料科學年會 (Taiwan Data Science Conference): In the world of data science, data is the foundation of everything, and the internet holds a wealth of data waiting to be mined and analyzed. In an era when data science is all the rage, web scraping is a very practical skill. If there is data on the web that you have long wanted (for example, heavily upvoted posts on the PTT Beauty board) but you have no way to scrape it, or you want to collect box-office and review data for popular films for analysis and modeling but do not know where to start, this Python web scraping course is a good fit.

The course runs six hours. The morning starts with basic HTML page structure and uses examples and hands-on exercises to teach you to scrape text from web pages and parse its structure and content, then applies simple data visualization and analysis to walk you through a real data analysis workflow. The afternoon goes further: scraping files, moving from scraping pages to scraping whole sites, crawlers that simulate human behavior, and the problems crawlers can run into with modern, complex web page designs.

[Event Series] Natural Language Processing Everywhere: Basic Concepts, Techniques, and Tools

台灣資料科學年會 (Taiwan Data Science Conference): Natural language processing (NLP) deals specifically with unstructured data that has not been organized into field values, one of the thorniest problems in data analysis. A machine that understands meaning and communicates in natural language could pass the ultimate test of artificial intelligence, the Turing Test, which shows how great a challenge this is. Even the basic techniques raise hard questions: how to extract important information from an article, how to analyze sentence structure, how to find the meaning expressed in language, and how to handle different languages. These skills are essential in data analysis, and doing them well takes know-how. The course starts from the basics and aims to introduce NLP as a very useful technique for data analysis and data mining, and to spark interest in and enthusiasm for it.

Machine Intelligence at Google Scale: TensorFlow

DataWorks Summit/Hadoop Summit:

- TensorFlow is Google's open source machine learning library for developing and training neural networks and deep learning models. It operates using data flow graphs to represent computation.

- TensorFlow can be used across many platforms including data centers, CPUs, GPUs, mobile phones, and IoT devices. It is widely used at Google across many products and research areas involving machine learning.

- The TensorFlow library is used along with higher level tools in Google's machine learning platform including TensorFlow Cloud, Machine Learning APIs, and Cloud Machine Learning Platform to make machine learning more accessible and scalable.

TensorFlow Serving, Deep Learning on Mobile, and Deeplearning4j on the JVM - ...

Sam Putnam [Deep Learning]:

1) The document discusses TensorFlow Serving, Deep Learning on Mobile, and Deeplearning4j on the JVM as presented by Sam Putnam on 6/8/2017.

2) It provides information on exporting models for TensorFlow Serving, deploying TensorFlow to Android, tools for mobile deep learning like Inception and MobileNets, and using Deeplearning4j on the JVM with integration with Spark.

3) The document shares links to resources on these topics and thanks sponsors while inviting people to join future discussions.

Neural Networks with Google TensorFlow

Darshan Patel: These slides explain how convolutional neural networks can be coded using Google TensorFlow.

Video available at : https://www.youtube.com/watch?v=EoysuTMmmMc

Introducing TensorFlow: The game changer in building "intelligent" applications

Rokesh Jankie: This is the slide deck used for the presentation at the Amsterdam Pipeline of Data Science, held in December 2016. TensorFlow is the open source library from Google for implementing deep learning and neural networks. This is an introduction to TensorFlow.

Note: Videos are not included (which were shown during the presentation)

On-device machine learning: TensorFlow on Android

Yufeng Guo: This document discusses building machine learning models for mobile apps using TensorFlow. It describes the process of gathering training data, training a model using Cloud ML Engine, optimizing the model for mobile, and integrating it into an Android app. Key steps involve converting video training data to images, retraining an InceptionV3 model, optimizing the model size with graph transformations, and loading the model into an Android app. TensorFlow allows developing machine learning models that can run efficiently on mobile devices.

TensorFlow Deep Learning Lecture

Mark Chang: TensorFlow Deep Learning Lecture

http://www.hcinnovation.tw/tc/index.php/k2-blog/item/65-tensorflow

Deep Learning for Data Scientists - Data Science ATL Meetup Presentation, 201...

Andrew Gardner: Note: these are the slides from a presentation at Lexis Nexis in Alpharetta, GA, on 2014-01-08 as part of the DataScienceATL Meetup. A video of this talk from Dec 2013 is available on vimeo at http://bit.ly/1aJ6xlt

Note: Slideshare mis-converted the images in slides 16-17. Expect a fix in the next couple of days.

---

Deep learning is a hot area of machine learning named one of the "Breakthrough Technologies of 2013" by MIT Technology Review. The basic ideas extend neural network research from past decades and incorporate new discoveries in statistical machine learning and neuroscience. The results are new learning architectures and algorithms that promise disruptive advances in automatic feature engineering, pattern discovery, data modeling and artificial intelligence. Empirical results from real world applications and benchmarking routinely demonstrate state-of-the-art performance across diverse problems including: speech recognition, object detection, image understanding and machine translation. The technology is employed commercially today, notably in many popular Google products such as Street View, Google+ Image Search and Android Voice Recognition.

In this talk, we will present an overview of deep learning for data scientists: what it is, how it works, what it can do, and why it is important. We will review several real world applications and discuss some of the key hurdles to mainstream adoption. We will conclude by discussing our experiences implementing and running deep learning experiments on our own hardware data science appliance.

Machine Learning Preliminaries and Math Refresher

butest: The document is an introduction to machine learning preliminaries and mathematics. It covers general remarks about learning as a process of model building, an overview of key concepts from probability theory and statistics needed for machine learning like random variables, distributions, and expectations. It also introduces linear spaces and vector spaces as mathematical structures that are important foundations for machine learning algorithms. The goal is to cover essential mathematical concepts like probability, statistics, and linear algebra that are prerequisites for machine learning.

Secure Because Math: A Deep-Dive on Machine Learning-Based Monitoring (#Secur...

Alex Pinto: The document discusses machine learning-based security monitoring. It begins with an introduction of the speaker, Alex Pinto, and an agenda that will include a discussion of anomaly detection versus classification techniques. It then covers some history of anomaly detection research dating back to the 1980s. It also discusses challenges with anomaly detection, such as the curse of dimensionality with high-dimensional data and lack of ground truth labels. The document emphasizes communicating these machine learning concepts clearly.

Machine Learning without the Math: An overview of Machine Learning

Arshad Ahmed: A brief, high-level overview of Machine Learning and its associated tasks. This presentation discusses key concepts without the maths. The more mathematically inclined are referred to Bishop's book, Pattern Recognition and Machine Learning.

[Event Series] A Quick Tour of Data Mining

台灣資料科學年會 (Taiwan Data Science Conference): Data mining is a foundational subject in data science. It combines techniques from machine learning, artificial intelligence, databases, signal processing, and statistics, with the aim of extracting meaningful knowledge from large, messy data. In theory, data scientists can use it to build all kinds of applications. In practice, unprocessed data is often chaotic and hard to approach, and without proper handling it rarely yields valuable knowledge. This course guides beginners through cleaning up messy data and finding the most suitable technique for a problem. Besides explaining standard textbook techniques in an accessible way, it gives real application examples so that beginners can practice approaching problems, apply the techniques to analyze results, and learn how to evaluate the analysis.

qconsf 2013: Top 10 Performance Gotchas for scaling in-memory Algorithms - Sr...

Sri Ambati: Top 10 Performance Gotchas in scaling in-memory Algorithms

Abstract:

Math Algorithms have primarily been the domain of desktop data science. With the success of scalable algorithms at Google, Amazon, and Netflix, there is an ever growing demand for sophisticated algorithms over big data. In this talk, we get a ringside view in the making of the world's most scalable and fastest machine learning framework, H2O, and the performance lessons learnt scaling it over EC2 for Netflix and over commodity hardware for other power users.

Top 10 Performance Gotchas is about the white hot stories of i/o wars, S3 resets, and muxers, as well as the power of primitive byte arrays, non-blocking structures, and fork/join queues. Of good data distribution & fine-grain decomposition of Algorithms to fine-grain blocks of parallel computation. It's a 10-point story of the rage of a network of machines against the tyranny of Amdahl while keeping the statistical properties of the data and accuracy of the algorithm.

Track: Scalability, Availability, and Performance: Putting It All Together

Time: Wednesday, 11:45am - 12:35pm

02 math essentials

Poongodi Mano:

1) Machine learning draws on areas of mathematics including probability, statistical inference, linear algebra, and optimization theory.

2) While there are easy-to-use machine learning packages, understanding the underlying mathematics is important for choosing the right algorithms, making good parameter and validation choices, and interpreting results.

3) Key concepts in probability and statistics that are important for machine learning include random variables, probability distributions, expected value, variance, covariance, and conditional probability. These concepts allow quantification of relationships and uncertainties in data.

Kafka Summit SF Apr 26 2016 - Generating Real-time Recommendations with NiFi,...

Chris Fregly: This document summarizes a presentation about generating real-time streaming recommendations using NiFi, Kafka, and Spark ML. The presentation demonstrates using NiFi to ingest data from HTTP requests, enrich it with geo data, and write it to a Kafka topic. It then shows how to create a Spark Streaming application that reads from Kafka to perform incremental matrix factorization recommendations in real-time and handles failures using circuit breakers. The presentation also provides an overview of Netflix's large-scale real-time recommendation pipeline.

Big Data Spain - Nov 17 2016 - Madrid Continuously Deploy Spark ML and Tensor...

Chris Fregly: In this talk, I describe some recent advancements in Streaming ML and AI Pipelines to enable data scientists to rapidly train and test on streaming data - and ultimately deploy models directly into production on their own with low friction and high impact.

With proper tooling and monitoring, data scientists have the freedom and responsibility to experiment rapidly on live, streaming data - and deploy directly into production as often as necessary. I'll describe this tooling - and demonstrate a real production pipeline using Jupyter Notebook, Docker, Kubernetes, Spark ML, Kafka, TensorFlow, Jenkins, and Netflix Open Source.

TensorFlow Serving, Deep Learning on Mobile, and Deeplearning4j on the JVM - ...

Sam Putnam [Deep Learning]

Similar to Google TensorFlow Tutorial (20)

TensorFlow example for AI Ukraine2016

Andrii Babii: Workshop on using TensorFlow, given at AI Ukraine 2016. A brief tutorial with a source code example that describes TensorFlow's main ideas, terms, and parameters. The example covers a linear neuron model trained with the Adam optimization algorithm.

TensorFlow Tutorial.pdf

Antonio Espinosa: This document provides an introduction and overview of TensorFlow, a popular deep learning library developed by Google. It begins with administrative announcements for the class and then discusses key TensorFlow concepts like tensors, variables, placeholders, sessions, and computation graphs. It provides examples comparing TensorFlow and NumPy for common deep learning tasks like linear regression. It also covers best practices for debugging TensorFlow and introduces TensorBoard for visualization. Overall, the document serves as a high-level tutorial for getting started with TensorFlow.

Tensor flow description of ML Lab. document

jeongok1: This document contains slides for a TensorFlow basics lab. It introduces TensorFlow and computational graphs, shows how to install TensorFlow and check the version, and demonstrates a simple "Hello World" TensorFlow program. It also discusses placeholders, variables, feeding data, and building linear regression models in TensorFlow to minimize a cost function. The full Python code for linear regression is provided.

Introduction to Deep Learning and TensorFlow

Oswald Campesato: A fast-paced introduction to Deep Learning (DL) concepts, starting with a simple yet complete neural network (no frameworks), followed by aspects of deep neural networks, such as back propagation, activation functions, CNNs, and the UAT (Universal Approximation Theorem). Next, a quick introduction to TensorFlow and TensorBoard, along with some code samples with TensorFlow. For best results, familiarity with basic vectors and matrices, inner (aka "dot") products of vectors, the notion of a derivative, and rudimentary Python is recommended.

Introduction to Deep Learning, Keras, and TensorFlow

Sri Ambati: This meetup was recorded in San Francisco on Jan 9, 2019.

Video recording of the session can be viewed here: https://youtu.be/yG1UJEzpJ64

Description:

This fast-paced session starts with a simple yet complete neural network (no frameworks), followed by an overview of activation functions, cost functions, backpropagation, and then a quick dive into CNNs. Next, we'll create a neural network using Keras, followed by an introduction to TensorFlow and TensorBoard. For best results, familiarity with basic vectors and matrices, inner (aka "dot") products of vectors, and rudimentary Python is definitely helpful. If time permits, we'll look at the UAT, CLT, and the Fixed Point Theorem. (Bonus points if you know Zorn's Lemma, the Well-Ordering Theorem, and the Axiom of Choice.)

Oswald's Bio:

Oswald Campesato is an education junkie: a former Ph.D. Candidate in Mathematics (ABD), with multiple Master's and 2 Bachelor's degrees. In a previous career, he worked in South America, Italy, and the French Riviera, which enabled him to travel to 70 countries throughout the world.

He has worked in American and Japanese corporations and start-ups, as C/C++ and Java developer to CTO. He works in the web and mobile space, conducts training sessions in Android, Java, Angular 2, and ReactJS, and he writes graphics code for fun. He's comfortable in four languages and aspires to become proficient in Japanese, ideally sometime in the next two decades. He enjoys collaborating with people who share his passion for learning the latest cool stuff, and he's currently working on his 15th book, which is about Angular 2.

Intro to Deep Learning, TensorFlow, and tensorflow.js

Oswald Campesato: This fast-paced session introduces Deep Learning concepts, such as gradient descent, back propagation, activation functions, and CNNs. We'll look at creating Android apps with TensorFlow Lite (pending its availability). Basic knowledge of vectors, matrices, and Android, as well as elementary calculus (derivatives), is strongly recommended in order to derive the maximum benefit from this session.

Deep Learning in Your Browser

Oswald Campesato: An introductory document covered deep learning concepts including neural networks, activation functions, cost functions, gradient descent, TensorFlow, CNNs, RNNs, GANs, and tensorflow.js. Key topics included the use of deep learning for computer vision, speech recognition, and more. Activation functions such as ReLU, sigmoid and tanh were explained. TensorFlow and tensorflow.js were introduced as frameworks for deep learning.

Lucio Floretta - TensorFlow and Deep Learning without a PhD - Codemotion Mila...

Codemotion: With TensorFlow, deep machine learning transitions from an area of research to mainstream software engineering. In this session, we'll work together to construct and train a neural network that recognises handwritten digits. Along the way, we'll discover some of the "tricks of the trade" used in neural network design, and finally, we'll bring the recognition accuracy of our model above 99%.

TensorFlow for IITians

Ashish Bansal: This document provides an overview of TensorFlow presented by Ashish Agarwal and Ashish Bansal. The key points covered include:

- TensorFlow is an open-source machine learning framework for research and production. It allows models to be deployed across different platforms.

- TensorFlow models are represented as dataflow graphs where nodes are operations and edges are tensors flowing between operations. Placeholders, variables, and sessions are introduced.

- Examples demonstrate basic linear regression and logistic regression models built with TensorFlow. Layers API and common neural network components like convolutions and RNNs are also covered.

- Advanced models like AlexNet, Inception, ResNet, and neural machine translation with attention are briefly overviewed.

A Tour of Tensorflow's APIs

Dean Wyatte: This document summarizes TensorFlow's APIs, beginning with an overview of the low-level API using computational graphs and sessions. It then discusses higher-level APIs like Keras, TensorFlow Datasets for input pipelines, and Estimators which hide graph and session details. Datasets improve training speed by up to 300% by enabling parallelism. Estimators resemble scikit-learn and separate model definition from training, making code more modular and reusable. The document provides examples of using Datasets and Estimators with TensorFlow.

Deep Learning, Scala, and Spark

Oswald Campesato: This fast-paced session starts with an introduction to neural networks and linear regression models, along with a quick view of TensorFlow, followed by some Scala APIs for TensorFlow. You'll also see a simple dockerized image of Scala and TensorFlow code and how to execute the code in that image from the command line. No prior knowledge of NNs, Keras, or TensorFlow is required (but you must be comfortable with Scala).

Introduction To TensorFlow | Deep Learning Using TensorFlow | CloudxLab

CloudxLab: This document provides instructions for getting started with TensorFlow using a free CloudxLab. It outlines the following steps:

1. Open CloudxLab and enroll if not already enrolled. Otherwise go to "My Lab".

2. In "My Lab", open Jupyter and run commands to clone an ML repository containing TensorFlow examples.

3. Go to the deep learning folder in Jupyter and open the TensorFlow notebook to get started with examples.

Introduction to TensorFlow 2.0

Databricks: The release of TensorFlow 2.0 comes with a significant number of improvements over its 1.x version, all with a focus on ease of use and a better user experience. We will give an overview of what TensorFlow 2.0 is and discuss how to get started building models from scratch using TensorFlow 2.0's high-level API, Keras. We will walk through a step-by-step example in Python of how to build an image classifier. We will then showcase how to leverage transfer learning to make building a model even easier! With transfer learning, we can leverage models pretrained on datasets such as ImageNet to drastically speed up the training time of our model. TensorFlow 2.0 makes this incredibly simple to do.

Introduction To Using TensorFlow & Deep Learning

ali alemi: This document provides an introduction to using TensorFlow. It begins with an overview of TensorFlow and what it is. It then discusses TensorFlow code basics, including building computational graphs and running sessions. It provides examples of using placeholders, constants, and variables. It also gives an example of linear regression using TensorFlow. Finally, it discusses deep learning techniques like convolutional neural networks (CNNs) and recurrent neural networks (RNNs), providing examples of CNNs for image classification. It concludes with an example of using a multi-layer perceptron for MNIST digit classification in TensorFlow.

The TensorFlow dance craze

Gabriel Hamilton: The document introduces TensorFlow, a machine learning library. It discusses how TensorFlow uses multi-dimensional arrays called tensors to represent data and models. An example regression problem is demonstrated where TensorFlow is used to fit a line to sample data points by iteratively updating the slope and offset values to minimize loss. The document promotes TensorFlow by noting its ability to distribute operations across processors and optimize entire graphs.

Introduction to TensorFlow, by Machine Learning at Berkeley

Introduction to TensorFlow, by Machine Learning at Berkeley (Ted Xiao): A workshop introducing the TensorFlow machine learning framework, presented by Brenton Chu, Vice President of Machine Learning at Berkeley.

This presentation covers how to construct, train, evaluate, and visualize neural networks in TensorFlow 1.0.

http://ml.berkeley.edu

TensorFlow and Keras: An Overview

TensorFlow and Keras: An Overview (Poo Kuan Hoong): TensorFlow and Keras are popular deep learning frameworks. TensorFlow is an open source library for numerical computation using data flow graphs. It was developed by Google and is widely used for machine learning and deep learning. Keras is a higher-level neural network API that can run on top of TensorFlow. It focuses on user-friendliness, modularity, and extensibility. Both frameworks make building and training neural networks easier through modular layers and built-in optimization algorithms.

maXbox starter65 machinelearning3

maXbox starter65 machinelearning3 (Max Kleiner): In this article you will learn how to use the TensorFlow Softmax Classifier estimator to classify the MNIST dataset in one script.

This paper also introduces the basic idea of an artificial neural network.

Language translation with Deep Learning (RNN) with TensorFlow

Language translation with Deep Learning (RNN) with TensorFlow (S N): This document provides an overview of a meetup on language translation with deep learning using TensorFlow on FloydHub. It covers the language translation challenge and introduces key concepts such as deep learning, RNNs, NLP, TensorFlow, and FloydHub. It then describes the solution approach to the translation task, including a demo and code walkthrough. Potential next steps and references for further learning are also mentioned.

Machine Learning in JS: Where to Start and Where to Go | Odessa Frontend Meetup #12

Machine Learning in JS: Where to Start and Where to Go | Odessa Frontend Meetup #12 (OdessaFrontend): TensorFlow.js allows developing machine learning models with JavaScript. It provides APIs for building neural networks with layers, training models on data, and making predictions. Key capabilities include building, training, and deploying deep learning models directly in the browser or on the server using JavaScript. It supports common ML tasks such as image classification, object detection, and natural language processing.

More from 台灣資料科學年會 (20)

[Taiwan AI Academy] Creating New Opportunities for the Intelligent Transformation of Taiwan's Industries

[Taiwan AI Academy] Creating New Opportunities for the Intelligent Transformation of Taiwan's Industries (台灣資料科學年會): This document is a presentation by Ted Chang about creating new opportunities for Taiwan's intelligent transformation. It discusses paradigm shifts in technology such as mobile phones and cloud computing. It introduces concepts like the Internet of Things and artificial intelligence, and how they can be combined. It argues that the key driving forces for the future will be machine learning, big data, cloud computing, and AI. The presentation envisions applications of these technologies in areas like future medicine and smart manufacturing. It ends by emphasizing the importance of wisdom and intelligence in shaping the future.

[Taiwan AI Academy] Creating New Opportunities for the Intelligent Transformation of Taiwan's Industries

[Taiwan AI Academy] Creating New Opportunities for the Intelligent Transformation of Taiwan's Industries (台灣資料科學年會):

- The document discusses how artificial intelligence can enable earlier and safer medicine.

- It provides background on the author and their expertise in biomedical informatics and roles as editor-in-chief of several academic journals.

- Key applications of AI in healthcare discussed include using machine learning on large medical datasets to detect suspicious moles earlier, reduce medication errors, and more accurately predict cancer occurrence up to 12 months in advance.

- The author argues that AI has the potential to transform medicine by enabling more preventive and earlier detection approaches compared to traditional reactive healthcare models.

[Taiwan AI Academy] Taipei Main Campus Third Cohort Graduation Ceremony - CEO's Remarks

[Taiwan AI Academy] Taipei Main Campus Third Cohort Graduation Ceremony - CEO's Remarks (台灣資料科學年會):

Organizer: 財團法人科技生態發展公益基金會

Executing organization: 財團法人人工智慧科技基金會

Co-organizers: 中央研究院資訊科學研究所, 中央研究院資訊科技創新研究中心

Sponsors: 台塑企業, 奇美實業, 英業達集團, 義隆電子, 聯發科技, 友達光電

Taichung branch co-organizers: 中亞聯大, 東海大學, 逢甲大學, 臺中市政府經濟發展局

[Taiwan AI Academy] Taichung Branch Second Cohort Opening Ceremony - CEO's Report

[Taiwan AI Academy] Taichung Branch Second Cohort Opening Ceremony - CEO's Report (台灣資料科學年會):

Date: 2019/01/05 (Sat)

Venue: 臺中市政府集會堂

Organizer: 財團法人科技生態發展公益基金會

Executing organization: 財團法人人工智慧科技基金會

Co-organizers: 中央研究院資訊科學研究所, 中央研究院資訊科技創新研究中心

Sponsors: 台塑企業, 奇美實業, 英業達集團, 義隆電子, 聯發科技, 友達光電

Taichung branch co-organizers: 中亞聯大, 東海大學, 逢甲大學, 臺中市政府經濟發展局

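Taiwan AI Academy Results Presentation

Taiwan AI Academy Results Presentation (台灣資料科學年會):

Organizer: 財團法人科技生態發展公益基金會

Executing organization: 財團法人人工智慧科技基金會

Co-organizers: 中研院資訊所, 中研院資創中心

Sponsors: 台塑企業, 奇美實業, 英業達集團, 義隆電子, 聯發科技, 友達光電

Event co-organizer: 《科學人》雜誌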

[TOxAIA Hsinchu Branch] A Promising New Application for Industry 4.0! Multimodal Conversational Robots

[TOxAIA Hsinchu Branch] A Promising New Application for Industry 4.0! Multimodal Conversational Robots (台灣資料科學年會): Slide excerpt, "User-Agent Interaction (V)": Meera checks with Jane-ML.

PA_Meera: Mina, are you having trouble debugging?

Mina: Yes, is there anyone who has done this?

PA_Meera: Jane may be able to help. Let me check with her personal assistant, Jane-ML.

Jane-ML: Jane has done a similar debugging problem before. She is available now and willing to help.

[TOxAIA Hsinchu Branch] Deep Learning and Kaggle in Practice

[TOxAIA Hsinchu Branch] Deep Learning and Kaggle in Practice (台灣資料科學年會):

1) Kaggle is the largest platform for AI and data science competitions and was acquired by Google in 2017. It has been used by companies like Bosch, Mercedes, and Asus for challenges such as improving production lines, accelerating testing processes, and predicting component failures.

2) The document discusses the author's experiences winning silver medals in Kaggle competitions involving camera model identification, passenger screening algorithms, and pneumonia detection. For camera model identification, the author used transfer learning with InceptionResNetV2 and high-pass filters to identify camera models from images.

3) For passenger screening, the author modified a 2D CNN to 3D and used 3D data augmentation to rank in the top 7% of the $1

[Taiwan AI Academy] Bridging AI to Precision Agriculture through IoT

[Taiwan AI Academy] Bridging AI to Precision Agriculture through IoT (台灣資料科學年會): The document describes a system for precision agriculture using IoT. Sensors collect environmental data from fields and feed it to a control board connected to actuators such as irrigation systems. The data is also sent to an IoTtalk engine and an AgriTalk server in the cloud for analysis and for remote access and control through an AgriGUI interface. Equations were developed to estimate nutrient levels, such as nitrogen, from sensor readings to help optimize crop growth.

[2018 Taiwan AI Academy Alumni Annual Meeting] Industry Experience Sharing: How to Get the Best Deep Learning Image Analysis Results from the Fewest Training Samples, Halving Manpower and Doubling Quality / 李明達