Ad

Text Analytics for Dummies 2010

- 1. Text Analytics for DummiesSeth GrimesAlta Plana Corporation@sethgrimes– 301-270-0795 -- http://altaplana.comText Analytics Summit 2010WorkshopMay 24, 2010

- 2. IntroductionSeth Grimes –Principal Consultant with Alta Plana Corporation.Contributing Editor, IntelligentEnterprise.com.Channel Expert, BeyeNETWORK.com.Contributor, KDnuggets.com.Instructor, The Data Warehousing Institute, tdwi.org.Founding Chair, Sentiment Analysis Symposium.Founding Chair, Text Analytics Summit.

- 3. PerspectivesPerspective #1: You’re a business analyst or other “end user” or a consultant/integrator.You (or your clients) have lots of text. You want an automated way to deal with it.Perspective #2: You work in IT.You support end users who have lots of text.Perspective #3: You work for a solution provider.Welcome to my Reeducation Camp.Perspective #4: Other?You just want to learn about text analytics.

- 4. Value in Data“The bulk of information value is perceived as coming from data in relational tables. The reason is that data that is structured is easy to mine and analyze.”-- Prabhakar Raghavan, Yahoo ResearchYet it’s a truism that 80% of enterprise-relevant information originates in “unstructured” form.

- 5. Unstructured SourcesConsider:Web pages, E-mail, news & blog articles, forum postings, and other social media.Contact-center notes and transcripts.Surveys, feedback forms, warranty claims.And every kind of corporate documents imaginable.These sources may contain “traditional” data.The Dow fell 46.58, or 0.42 percent, to 11,002.14. The Standard & Poor's 500 index fell 1.44, or 0.11 percent, to 1,263.85, and the Nasdaq composite gained 6.84, or 0.32 percent, to 2,162.78.

- 6. Key Message -- #1If you are not analyzing text – if you're analyzing only transactional information – you're missing opportunity or incurring risk...“Industries such as travel and hospitality and retail live and die on customer experience.” -- Clarabridge CEO Sid BanerjeeThis is why you’re here.It’s the “Unstructured Data” challenge.

- 7. Key Message -- #2Text analytics can boost business results...“Organizations embracing text analytics all report having an epiphany moment when they suddenly knew more than before.” -- Philip Russom, the Data Warehousing Institute...via established BI / data-mining programs, or independently.Text Analytics is an answer to the “Unstructured Data” challenge

- 8. Key Message -- #3Some folks may need to expand their views of what BI and business analytics are about.Others can do text analytics without worrying about BI or data mining.Let’s deal with text-BI first...

- 9. Text-BI: Back to the FutureBusiness intelligence (BI) as defined in 1958:In this paper, business is a collection of activities carried on for whatever purpose, be it science, technology, commerce, industry, law, government, defense, et cetera... The notion of intelligence is also defined here... as “the ability to apprehend the interrelationships of presented facts in such a way as to guide action towards a desired goal.”-- Hans Peter Luhn, “A Business Intelligence System,”IBM Journal, October 1958

- 10. Document input and processingKnowledge handling is key

- 11. Business IntelligenceTraditional BI feeds off:Traditional BI feeds off:"SUMLEV","STATE","COUNTY","STNAME","CTYNAME","YEAR","POPESTIMATE",50,19,1,"Iowa","Adair County",1,8243,4036,4207,446,225,221,994,50950,19,1,"Iowa","Adair County",2,8243,4036,4207,446,225,221,994,50950,19,1,"Iowa","Adair County",3,8212,4020,4192,442,222,220,987,50550,19,1,"Iowa","Adair County",4,8095,3967,4128,432,208,224,935,48850,19,1,"Iowa","Adair County",5,8003,3924,4079,405,186,219,928,49550,19,1,"Iowa","Adair County",6,7961,3892,4069,384,183,201,907,47250,19,1,"Iowa","Adair County",7,7875,3855,4020,366,179,187,871,45450,19,1,"Iowa","Adair County",8,7795,3817,3978,343,162,181,841,43950,19,1,"Iowa","Adair County",9,7714,3777,3937,338,159,179,805,417It runs off:

- 12. Business IntelligenceTraditional BI produces:http://www.pentaho.com/products/dashboards/

- 13. Unstructured SourcesSome information doesn’t come from a data file.Axin and Frat1 interact with dvl and GSK, bridging Dvl to GSK in Wnt-mediated regulation of LEF-1.Wnt proteins transduce their signals through dishevelled (Dvl) proteins to inhibit glycogen synthase kinase 3beta (GSK), leading to the accumulation of cytosolic beta-catenin and activation of TCF/LEF-1 transcription factors. To understand the mechanism by which Dvl acts through GSK to regulate LEF-1, we investigated the roles of Axin and Frat1 in Wnt-mediated activation of LEF-1 in mammalian cells. We found that Dvl interacts with Axin and with Frat1, both of which interact with GSK. Similarly, the Frat1 homolog GBP binds Xenopus Dishevelled in an interaction that requires GSK. We also found that Dvl, Axin and GSK can form a ternary complex bridged by Axin, and that Frat1 can be recruited into this complex probably by Dvl. The observation that the Dvl-binding domain of either Frat1 or Axin was able to inhibit Wnt-1-induced LEF-1 activation suggests that the interactions between Dvl and Axin and between Dvl and Frat may be important for this signaling pathway. Furthermore, Wnt-1 appeared to promote the disintegration of the Frat1-Dvl-GSK-Axin complex, resulting in the dissociation of GSK from Axin. Thus, formation of the quaternary complex may be an important step in Wnt signaling, by which Dvl recruits Frat1, leading to Frat1-mediated dissociation of GSK from Axin.www.ncbi.nlm.nih.gov/entrez/query.fcgi?db=PubMed&cmd=Retrieve&list_uids=10428961&dopt=Abstractwww.stanford.edu/%7ernusse/wntwindow.html

- 14. Unstructured SourcesSources may mix fact and sentiment:When you walk in the foyer of the hotel it seems quite inviting but the room was very basis and smelt very badly of stale cigarette smoke, it would have been nice to be asked if we wanted a non smoking room, I know the room was very cheap but I found this very off putting to have to sleep with the smell, and it was to cold to leave the window open. Excellent location for restaurants and barsOverall I would never sell/buy a Motorola V3 unless it is demanded. My life would be way better without this phone being around (I am being 100% serious) Motorola should pay me directly for all the problems I have had with these phones. :-(

- 15. Text and ApplicationsWhat do people do with electronic documents?Publish, Manage, and Archive.Index and Search.Categorize and Classify according to metadata & contents.Information Extraction.For textual documents, text analytics enhances #1 & #2 and enables #3 & #4.You need linguistics to do #1 & #4 well, to deal with Semantics.Search is not enough...

- 16. SearchSearch, a.k.a. Information Retrieval, is just a start.Search doesn’t help you discover things you’re unaware of.Search results often lack relevance.Search finds documents, not knowledge.Articles from a forum siteArticles from 1987

- 17. Search + SemanticsText analytics adds semantic understanding of –Entities: names, e-mail addresses, phone numbers.Concepts: abstractions of entities.Facts and relationships.Abstract attributes, e.g., “expensive,” “comfortable.”Opinions, sentiments: attitudinal information.

- 18. Information AccessText analytics enables results that suit the information and the user, e.g., answers –

- 19. Presentation of search results can be enhanced by knowledge discovery, e.g., clustering.touchgraph.com/ TGGoogleBrowser.php?start=text%20analytics

- 20. Information AccessText analytics transforms Information Retrieval (IR) into Information Access (IA).Search terms become queries.Indexed pages are mined for larger-scale structure, for instance, information categories.Search results are presented intelligently.Capabilities include Information Extraction (IE).Text analytics ≈ text data mining.

- 21. Beyond SearchText Mining = Data Mining of textual sources.Clustering and Classification.Link Analysis.Association Rules.Predictive Modelling.Regression.Forecasting.Text Mining = Knowledge Discovery in Text.

- 22. Text Analytics Uncovers Structure

- 23. Text Analytics DefinitionText analytics automates what researchers, writers, scholars, and all the rest of us have been doing for years. Text analytics --Applies linguistic and/or statistical techniques to extract concepts and patterns that can be applied to categorize and classify documents, audio, video, images.Transforms “unstructured” information into data for application of traditional analysis techniques.Unlocks meaning and relationships in large volumes of information that were previously unprocessable by computer.

- 24. Text Analytics PipelineTypical steps in text analytics include –Retrieve documents for analysis. Apply statistical &/ linguistic &/ structural techniques to identify, tag, and extract entities, concepts, relationships, and events (features) within document sets.Apply statistical pattern-matching & similarity techniques to classify documents and organize extracted features according to a specified or generated categorization / taxonomy.– via a pipeline of statistical & linguistic steps.Let’s look at them...

- 27. “Statistical information derived from word frequency and distribution is used by the machine to compute a relative measure of significance, first for individual words and then for sentences. Sentences scoring highest in significance are extracted and printed out to become the auto-abstract.”H.P. Luhn, The Automatic Creation of Literature Abstracts, IBM Journal, 1958.

- 28. Text ModellingThe text content of a document can be considered an unordered “bag of words.”Particular documents are points in a high-dimensional vector space.Salton, Wong & Yang, “A Vector Space Model for Automatic Indexing,” November 1975.

- 29. Text ModellingWe might construct a document-term matrix...D1 = "I like databases"D2 = "I hate hate databases"and use a weighting such as TF-IDF (term frequency–inverse document frequency)…in computing the cosine of the angle between weighted doc-vectors to determine similarity.http://en.wikipedia.org/wiki/Term-document_matrix

- 30. Text ModellingAnalytical methods make text tractable.Latent semantic indexing utilizing singular value decomposition for term reduction / feature selection.Creates a new, reduced concept space.Takes care of synonymy, polysemy, stemming, etc.Classification technologies / methods:Naive Bayes.Support Vector Machine.K-nearest neighbor.

- 31. Text ModellingIn the form of query-document similarity, this is Information Retrieval 101.See, for instance, Salton & Buckley, “Term-Weighting Approaches in Automatic Text Retrieval,” 1988.If we want to get more out of text, we have to do still more...

- 32. “Tri-grams” here are pretty good at describing the Whatness of the source text. Yet...“This rather unsophisticated argument on ‘significance’ avoids such linguistic implications as grammar and syntax... No attention is paid to the logical and semantic relationships the author has established.”-- Hans Peter Luhn, 1958

- 33. Why Do We Need Linguistics?The Dow fell 46.58, or 0.42 percent, to 11,002.14. The Standard & Poor's 500 index gained1.44, or 0.11 percent, to 1,263.85.The Dow gained 46.58, or 0.42 percent, to 11,002.14. The Standard & Poor's 500 index fell 1.44, or 0.11 percent, to 1,263.85.John pushed Max. He fell.John pushed Max. He laughed.Time flies like an arrow. Fruit flies like a banana.(Luca Scagliarini, Expert System; Laure Vieu and Patrick Saint-Dizier; Groucho Marx.)

- 34. New York Times,September 8, 1957Anaphora / coreference: “They”

- 38. Information ExtractionWhen we understand, for instance, parts of speech (POS) – <subject> <verb> <object> – we’re in a position to discern facts and relationships...

- 40. Information ExtractionLet's see text augmentation (tagging) in action. We'll use GATE, an open-source tool, text from sentiment-analysis article used earlier...

- 44. Information ExtractionFor content analysis, key in on extracting information.Annotated text is typically marked up with XML.If extraction to databases: Entities and concepts (features) are like dimensions in a standard BI model. Both classes of object are hierarchically organized and have attributes.We can have both discovered and predetermined classifications (taxonomies) of text features.

- 45. An IBM representation: “The standard features are stored in the STANDARD_KW table, keywords with their occurrences in the KEYWORD_KW_OCC table, and the text list features in the TEXTLIST_TEXT table. Every feature table contains the DOC_ID as a reference to the DOCUMENT table.”http://www.ibm.com/developerworks/db2/library/techarticle/dm-0804nicola/

- 46. Semi-Structured SourcesAn e-mail message is “semi-structured,” which facilitates extracting metadata --Date: Sun, 13 Mar 2005 19:58:39 -0500From: Adam L. Buchsbaum <[email protected]>To: Seth Grimes <[email protected]>Subject: Re: Papers on analysis on streaming dataseth, you should contact diveshsrivastava, [email protected] at&t labs data streaming technology.AdamSurveys are also typically s-s in a different way...

- 47. Structured &‘Unstructured’ InformationThe respondent is invited to explain his/her attitude:

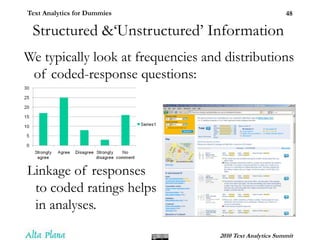

- 48. Structured &‘Unstructured’ InformationWe typically look at frequencies and distributions of coded-response questions:Linkage of responses to coded ratings helps in analyses.

- 49. Sentiment Analysis“Sentiment analysis is the task of identifying positive and negative opinions, emotions, and evaluations.” -- Wilson, Wiebe & Hoffman, 2005, “Recognizing Contextual Polarity in Phrase-Level Sentiment Analysis”From Dell’s IdeaStorm.com --“Dell really... REALLY need to stop overcharging... and when i say overcharing... i mean atleast double what you would pay to pick up the ram yourself.”

- 50. Sentiment AnalysisApplications include:Brand / Reputation Management.Competitive intelligence.Customer Experience Management.Enterprise Feedback Management.Quality improvement.Trend spotting.

- 51. Steps in the Right Direction

- 53. ... And Missteps“Kind” = type, variety, not a sentiment.Complete misclassificationExternal referenceUnfiltered duplicates

- 54. Sentiment ComplicationsThere are many complications.Sentiment may be of interest at multiple levels.Corpus / data space, i.e., across multiple sources.Document.Statement / sentence.Entity / topic / concept.Human language is noisy and chaotic!Jargon, slang, irony, ambiguity, anaphora, polysemy, synonymy, etc.Context is key. Discourse analysis comes into play.Must distinguish the sentiment holder from the object: Greenspan said the recession will…

- 55. ApplicationsText analytics has applications in –Intelligence & law enforcement.Life sciences.Media & publishing including social-media analysis and contextual advertizing.Competitive intelligence.Voice of the Customer: CRM, product management & marketing.Legal, tax & regulatory (LTR) including compliance.Recruiting.

- 56. Getting to Web 3.0Text analytics enables Web 3.0 and the Semantic Web.Automated content categorization and classification.Text augmentation: metadata generation, content tagging.Information extraction to databases.Exploratory analysis and visualization.

- 57. Users’ PerspectiveI estimate a $425 million global market in 2009, up from $350 in 2008. I foresee 25% growth in 2010.Last year, I published a study report, “Text Analytics 2009: User Perspectives on Solutions and Providers.”I relayed findings from a survey that asked…

- 58. Primary ApplicationsWhat are your primary applications where text comes into play?

- 59. AnalyzedTextual InformationWhat textual information are you analyzing or do you plan to analyze?Current users responded:

- 60. ExtractedInformaitonDo you need (or expect to need) to extract or analyze:

- 62. Text Analytics for DummiesSeth GrimesAlta Plana Corporation@sethgrimes– 301-270-0795 -- http://altaplana.comText Analytics Summit 2010WorkshopMay 24, 2010