
Thai Text processing by Transfer Learning using Transformer (Bert)

- 1. Language Processing with Transfer Learning using Transformers (BERT) • Dr. Kobkrit Viriyayudhakorn, iApp Technology Co., Ltd.

- 2. Outline • Why is Transfer Learning with Transformers interesting? • History of text processing in the Deep Learning era • Bert: Model Architecture • Bert: Pre-training • Bert: Fine-Tuning • Bert: State of the Art • Better than BERT?

- 3. Why is Transfer Learning with Transformers interesting? • True-Voice Intent Classification: 88.967% (BERT, 2019) vs. 83.498% (ULMFit, 2018) • Thai QA (*not the same test set): Dr.QA (Bi-LSTM, 2017) 34.0% (Exact Match) vs. iApp QA (BERT, 2019) 45.7% (Exact Match) • https://github.com/PyThaiNLP/classification-benchmarks • https://ai.iapp.co.th/intent-class • https://ai.iapp.co.th/qa • NSC2019 QA • Data Science & Engineering Workshop 2019: The future of Thai NLP

- 4. Why is Transfer Learning with Transformers interesting? It applies to many tasks: • Text Classification • Sentiment Analysis • Intent Classification • Any Classifications • Question Answering • Machine Translation • Text Summarization • Named Entity Recognition • Paraphrasing • Natural Language Inference • Coreference Resolution • Sentence Completion • Word Sense Disambiguation • Language Generation arxiv.org/abs/1910.12840

- 5. History of text processing in the Deep Learning era • One-hot Encoding • Transfer Learning • Word Global Representation • Word Embedding • Word2Vec (Thai2Vec) • GloVe • ULMFit (Thai2Fit) • Word Contextual Representation • LSTM+Conv1D • ELMo • Subword Contextual Representations • Transformers • GPT-1, GPT-2 (OpenAI) • BERT (Google) • Transformer-XL (Google) • XLNet (Google) • XLM (Facebook) • RoBERTa (Facebook) • DistilBERT (Victor Sanh) • CTRL (Salesforce) • ALBERT (Google)

- 6. One-hot Encoding • Text: I really love my dog → Word Sequence: [ 4 <OOV> 2 1 3 ] → One-hot Vectors
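
A minimal Python sketch of the slide's example. The vocabulary is hypothetical (the slide does not show it): it is chosen so the sentence maps to the same ids, with id 0 assumed to stand for `<OOV>`.

```python
# Hypothetical vocabulary reproducing the slide's ids; id 0 is assumed to mean <OOV>.
VOCAB = {"<OOV>": 0, "my": 1, "love": 2, "dog": 3, "I": 4}

def to_ids(text, vocab):
    """Map each whitespace-separated word to its id; unknown words get the <OOV> id."""
    return [vocab.get(word, vocab["<OOV>"]) for word in text.split()]

def one_hot(ids, size):
    """One vector per word: all zeros except a single 1 at the word's id."""
    return [[1 if i == idx else 0 for i in range(size)] for idx in ids]

ids = to_ids("I really love my dog", VOCAB)
vectors = one_hot(ids, len(VOCAB))
print(ids)  # [4, 0, 2, 1, 3]
for vector in vectors:
    print(vector)
```

Each vector is as long as the vocabulary, which is exactly the problem the next slide describes.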

- 7. One-hot Encoding • Number of dimensions = vocabulary size • English: 25,000 words (without named entities) to 300,000 words (including most named entities) • Curse of dimensionality • The space is so high-dimensional and sparse that there are never enough data points to cover it, so the model cannot learn anything useful • Result: low accuracy and very long processing times
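
To put the curse of dimensionality into numbers, the arithmetic below assumes one-hot vectors stored densely as 4-byte floats and a 512-token input (BERT's maximum length, used here only as a convenient size). Both choices are illustrative assumptions.

```python
def one_hot_cost(vocab_size, seq_len, bytes_per_value=4):
    """Return (fraction of non-zero entries, bytes needed for a dense one-hot matrix)."""
    density = 1 / vocab_size                          # exactly one 1 per vector
    dense_bytes = seq_len * vocab_size * bytes_per_value
    return density, dense_bytes

for vocab_size in (25_000, 300_000):
    density, size = one_hot_cost(vocab_size, seq_len=512)
    print(f"{vocab_size:,} words: density={density:.1e}, "
          f"dense 512-token input = {size / 1e6:.1f} MB")
# 25,000 words: density=4.0e-05, dense 512-token input = 51.2 MB
# 300,000 words: density=3.3e-06, dense 512-token input = 614.4 MB
```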

- 8. Transfer Learning in NLP: Pretraining → Fine Tuning → Decision Model • Pretraining (Universal Language Model) • Create a fundamental language model • Huge corpus, e.g. Wikipedia • No labels needed • 5-7 days on TPUv3 • Fine Tuning (Contextual Language Model) • Adjust the weights for a specific task • Small corpus, e.g. True-Voice Intent Classification • Labeled data is needed • About 1 hour on TPUv3 • Decision Model (ML Model) • Word Embedding: LSTM • Transformers: Dense

- 10. Word Embedding • Libraries: Word2Vec (Thai2Vec), GloVe, ULMFit (Thai2Fit), FastText • Context independent • 1 word = 1 global representation • Ignores word order during training • Supports word arithmetic • Pipeline: Text → Pretraining (embedding: Word2Vec (Thai2Vec), GloVe, ULMFit (Thai2Fit), FastText) → Static Word Vectors → Fine Tuning (RNN, GRU, LSTM, Conv1D, Dense, SVM, SVR, Logit, Softmax, …) → Decision Model • https://towardsdatascience.com/introduction-to-word-embedding-and-word2vec-652d0c2060fa
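
Word arithmetic with static vectors can be sketched as follows. The 2-D vectors are invented for illustration (real Word2Vec, GloVe or fastText vectors are learned and have hundreds of dimensions); the point is that every word, including an ambiguous one such as "cell", gets exactly one vector regardless of context.

```python
import math

# Hypothetical 2-D vectors: dimension 0 ~ "royalty", dimension 1 ~ "maleness".
EMB = {
    "king":  [0.9, 0.9],
    "queen": [0.9, 0.1],
    "man":   [0.1, 0.9],
    "woman": [0.1, 0.1],
    "cell":  [0.5, 0.5],  # one vector whether it means prison, phone or blood cell
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def analogy(a, b, c):
    """Solve a - b + c ≈ ? and return the closest remaining word by cosine similarity."""
    target = [x - y + z for x, y, z in zip(EMB[a], EMB[b], EMB[c])]
    candidates = [w for w in EMB if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(EMB[w], target))

print(analogy("king", "man", "woman"))  # queen
```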

- 12. ELMo • Context dependent: needs the whole input sentence • 1 word = many representations • e.g. "He went to the prison cell with his cell phone to extract blood cell samples from inmates." • Bi-LSTM + character-based • Pipeline: Text → Pretraining (ELMo model) → Contextual Word Vectors → Fine Tuning (Logit, Softmax, …) → Decision Model • https://arxiv.org/abs/1802.05365

- 13. Bert • Uses Transformers • 1 subword = many contextual representations (subwords keep the vocabulary small) • Subword tokenization: BERT uses WordPiece, a close relative of Byte-Pair Encoding (BPE) • Language-universal • Does not depend on correct word segmentation (helpful for Thai and Chinese, which are written without spaces between words) • Similar in spirit to TCC (Thai Character Clusters) • https://github.com/bheinzerling/bpemb • Pipeline: Pretraining (Bert language-model weights) → Fine Tuning (Bert weights fine-tuned with labeled data) → Decision Model (weights for the downstream task: Logit, Softmax, …) • https://github.com/google-research/bert
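
The slide names BPE; a minimal sketch of how BPE learns its merge table (repeatedly merging the most frequent adjacent symbol pair) is below. It is simplified: it omits the end-of-word marker used in the original algorithm, breaks ties by first occurrence, and uses a hypothetical toy word list.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Learn BPE merges; every word starts as a tuple of single characters."""
    corpus = Counter(tuple(w) for w in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pairs = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first seen wins ties)
        merges.append(best)
        # Rewrite every word with the chosen pair fused into one symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return merges, corpus

merges, corpus = learn_bpe(["lower", "lowest", "low", "low"], num_merges=3)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
```

Frequent character sequences become single symbols, so no dictionary-based word segmentation is required beforehand.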

- 14. Bert: Model Architecture • BERT-Base (same size as the OpenAI GPT Transformer): 12 layers (Transformer blocks) • 768 hidden units • 12 attention heads • 110M parameters • BERT-Large (state of the art): 24 layers • 1024 hidden units • 16 attention heads • 340M parameters • https://jalammar.github.io/illustrated-bert/ • https://github.com/google-research/bert
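
The 110M and 340M figures can be checked by counting parameters. This sketch assumes the standard BERT layout (token, position and segment embeddings; per-block Q/K/V/output projections, a feed-forward layer 4× the hidden size and two LayerNorms; a pooler on [CLS]) and the 30,522-entry English uncased WordPiece vocabulary. A Thai vocabulary would change only the embedding term. The number of attention heads does not appear, because heads split the hidden size rather than adding weights.

```python
def bert_params(vocab=30_522, hidden=768, layers=12, max_len=512, type_vocab=2):
    """Count parameters of a BERT encoder plus pooler (biases and LayerNorms included)."""
    ffn = 4 * hidden                                                  # feed-forward width
    embeddings = (vocab + max_len + type_vocab) * hidden + 2 * hidden  # + embedding LayerNorm
    attention = 4 * (hidden * hidden + hidden)                        # Q, K, V, output projections
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    layer_norms = 2 * (2 * hidden)                                    # two LayerNorms per block
    per_layer = attention + feed_forward + layer_norms
    pooler = hidden * hidden + hidden                                 # dense layer on [CLS]
    return embeddings + layers * per_layer + pooler

print(f"Base:  {bert_params():,}")                        # Base:  109,482,240
print(f"Large: {bert_params(hidden=1024, layers=24):,}")  # Large: 335,141,888
```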

- 15. Bert: Model Architecture • Hidden size = 768 units • Maximum sequence length = 512 tokens • 12 layers/blocks https://jalammar.github.io/illustrated-bert/

- 23. Bert: Self-Attention in Matrix Form https://jalammar.github.io/illustrated-bert/

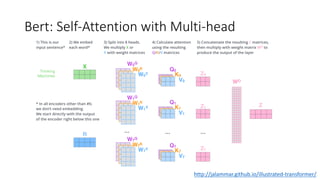
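
Self-attention in matrix form, Z = softmax(QKᵀ / √d_k) V with Q = XW_Q, K = XW_K, V = XW_V, can be sketched in plain Python. The 3-token, 4-feature input and the projection matrix (reused for Q, K and V for brevity) are toy values; in BERT-Base X would be up to 512 × 768 and each head's d_k is 64.

```python
import math

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Return (Z, attention weights) for Z = softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # tokens x tokens
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

# Toy input: 3 tokens x 4 features, and a hypothetical 4 x 2 projection.
x = [[1, 0, 1, 0], [0, 2, 0, 2], [1, 1, 1, 1]]
w = [[1, 0], [0, 1], [1, 0], [0, 1]]
z, attn = self_attention(x, w, w, w)
for row in attn:
    print([round(p, 3) for p in row])  # each row of attention weights sums to 1
```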

- 26. Bert: Self-Attention with Multi-head http://jalammar.github.io/illustrated-transformer/

- 27. Bert: Self-Attention with Multi-head http://jalammar.github.io/illustrated-transformer/

- 28. Bert: Self-Attention with Multi-head http://jalammar.github.io/illustrated-transformer/

- 29. Bert: Self-Attention with Multi-head (single head vs. multi-head) http://jalammar.github.io/illustrated-transformer/

- 30. Bert: Self-Attention with Multi-head
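
Multi-head attention runs several scaled dot-product attentions in parallel, each with its own projections, then concatenates the heads and multiplies by W_O. A sketch with 2 heads over an 8-dimensional model (BERT-Base uses 12 heads × 64 = 768); the random matrices are stand-ins for learned weights.

```python
import math
import random

def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def attention(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the value vectors, one output column at a time.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out

def multi_head(x, heads, w_o):
    """heads: one (W_Q, W_K, W_V) triple per head; concatenate head outputs, then apply W_O."""
    per_head = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv)) for wq, wk, wv in heads]
    concat = [sum((h[t] for h in per_head), []) for t in range(len(x))]
    return matmul(concat, w_o)

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, num_heads = 8, 2
d_head = d_model // num_heads       # BERT-Base: 768 // 12 = 64
x = random_matrix(3, d_model, rng)  # 3 tokens
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(num_heads)]
z = multi_head(x, heads, random_matrix(d_model, d_model, rng))
print(len(z), len(z[0]))            # 3 8
```

Because each head works in d_model / num_heads dimensions, the concatenated output has the same width as the input, so blocks can be stacked.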

- 31. Bert: Model Summary http://jalammar.github.io/illustrated-transformer/

- 32. Bert: Model Summary #2 http://jalammar.github.io/illustrated-transformer/

- 33. Bert: Using Layer Outputs https://jalammar.github.io/illustrated-bert/
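
A sketch of how per-layer outputs might be combined into token features. It assumes `hidden_states` is a list of 13 layers (the embedding output plus 12 blocks), each a list of per-token vectors; this is, for example, the layout of what the Hugging Face `transformers` library returns with `output_hidden_states=True`. The dummy values are placeholders, and the strategies mirror options discussed in the Illustrated BERT post.

```python
def combine_layers(hidden_states, strategy="concat_last_four"):
    """hidden_states: list of layers, each a list of per-token feature vectors."""
    if strategy == "last":
        return hidden_states[-1]
    if strategy == "second_to_last":
        return hidden_states[-2]
    if strategy == "sum_last_four":
        last4 = hidden_states[-4:]
        # Element-wise sum of the token's vectors across the last four layers.
        return [[sum(vals) for vals in zip(*(layer[t] for layer in last4))]
                for t in range(len(last4[0]))]
    if strategy == "concat_last_four":
        last4 = hidden_states[-4:]
        return [sum((layer[t] for layer in last4), []) for t in range(len(last4[0]))]
    raise ValueError(f"unknown strategy: {strategy}")

# Dummy stand-in: 13 layers x 2 tokens x 768 features, each value = its layer index.
hidden_states = [[[float(layer)] * 768 for _ in range(2)] for layer in range(13)]
features = combine_layers(hidden_states)
print(len(features), len(features[0]))                      # 2 3072
print(combine_layers(hidden_states, "sum_last_four")[0][0])  # 42.0
```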

- 35. Bert: Pretraining #1 • Step 1: BERT starts with no trained weights (random initialization), so it must first acquire knowledge of language • (Figure: "No weights"; Pretraining → Fine Tuning → Decision Model; caption: "BERT, with no brain or anything at all?")

- 36. Bert: Pretraining #2 ("Study hard") • Step 2: BERT reads English Wikipedia + BooksCorpus and challenges itself: (1) find the missing words, which are hidden by randomly inserting [MASK] • Input to Bert: [CLS] [MASK] man [MASK] up , put his [MASK] on phil [MASK] ##mon ' s head [SEP] [SEP] • Output from Bert: the man jumped up , put his basket on phil ##am ##mon ' s head • Original text: … the man jumped up , put his basket on philammon ' s head … → Preprocessing + random masking → Masked word prediction → the weights learn and adapt from the correct answer • Output from model: text representation vector • Pretraining → Fine Tuning → Decision Model
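
The masking step can be sketched as follows, using the recipe from the BERT paper: about 15% of tokens become prediction targets, and of those 80% are replaced by [MASK], 10% by a random token and 10% left unchanged. The helper name and the tiny vocabulary are hypothetical.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=random):
    """Return (inputs, labels); labels hold the original token at each target, else None."""
    special = {"[CLS]", "[SEP]"}
    candidates = [i for i, tok in enumerate(tokens) if tok not in special]
    num_to_mask = max(1, round(len(candidates) * mask_prob))
    inputs, labels = list(tokens), [None] * len(tokens)
    for i in rng.sample(candidates, num_to_mask):
        labels[i] = tokens[i]              # the model must recover this token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = "[MASK]"           # 80%: replace with [MASK]
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels

tokens = "[CLS] the man jumped up , put his basket on phil ##am ##mon ' s head [SEP]".split()
vocab = sorted(set(tokens) - {"[CLS]", "[SEP]"})
inputs, labels = mask_tokens(tokens, vocab, rng=random.Random(42))
print(" ".join(inputs))
```

The 10% random and 10% unchanged cases keep the model from learning that only [MASK] positions matter, since [MASK] never appears during fine-tuning.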

- 38. Bert: Pretraining #4 (Real Example) • Input: this is one of the most amazing stories i have ever seen . < br / > < br / > if this film had been directed by larry clark , then this story about a school shooting probably would have been shown through the eyes of the killer and whatever led that person to go insane in the first place . < br / > < br / > instead , the plot focuses mainly on the aftermath of a school shooting , and how it effect ##ed the victims who survived . < br / > < br / > i had seen busy phillips in other films before , but her performance in this movie is by far , her best . the … (512 chopped) • INFO:tensorflow:tokens: [CLS] this is one of the most amazing stories i have ever seen . < br / > < br / > if this film had been directed by larry clark , then this story about a school shooting probably would have been shown through the eyes of the killer and whatever led that person to go insane in the first place . < br / > < br / > instead , the plot focuses mainly on the aftermath of a school shooting , and how it effect ##ed the victims who survived . < br / > < br / > i had seen busy phillips in other films before , but her performance in this movie is by far , her best . 
the [SEP] [SEP] • INFO:tensorflow:input_ids: 101 2023 2003 2028 1997 1996 2087 6429 3441 1045 2031 2412 2464 1012 1026 7987 1013 1028 1026 7987 1013 1028 2065 2023 2143 2018 2042 2856 2011 6554 5215 1010 2059 2023 2466 2055 1037 2082 5008 2763 2052 2031 2042 3491 2083 1996 2159 1997 1996 6359 1998 3649 2419 2008 2711 2000 2175 9577 1999 1996 2034 2173 1012 1026 7987 1013 1028 1026 7987 1013 1028 2612 1010 1996 5436 7679 3701 2006 1996 10530 1997 1037 2082 5008 1010 1998 2129 2009 3466 2098 1996 5694 2040 5175 1012 1026 7987 1013 1028 1026 7987 1013 1028 1045 2018 2464 5697 8109 1999 2060 3152 2077 1010 2021 2014 2836 1999 2023 3185 2003 2011 2521 1010 2014 2190 1012 1996 102 • INFO:tensorflow:input_mask: 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 • INFO:tensorflow:segment_ids: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 c
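
The `input_ids`, `input_mask` and `segment_ids` in the log can be reproduced in miniature. This sketch assumes a single-sentence input, padding id 0, and a hypothetical mini-vocabulary whose ids are copied from the log above; it approximates, rather than reproduces, the preprocessing in the google-research/bert repository.

```python
def encode(tokens, vocab, max_seq_length):
    """Build padded BERT inputs for one sentence.

    input_ids:   vocabulary ids (0 = [PAD])
    input_mask:  1 for real tokens, 0 for padding
    segment_ids: 0 everywhere, since there is only sentence A
    """
    tokens = ["[CLS]"] + tokens[: max_seq_length - 2] + ["[SEP]"]  # truncate, add specials
    input_ids = [vocab[t] for t in tokens]
    input_mask = [1] * len(input_ids)
    padding = max_seq_length - len(input_ids)
    input_ids += [0] * padding
    input_mask += [0] * padding
    segment_ids = [0] * max_seq_length
    return input_ids, input_mask, segment_ids

# Hypothetical mini-vocabulary; ids copied from the log above.
VOCAB = {"[PAD]": 0, "[CLS]": 101, "[SEP]": 102, "this": 2023, "is": 2003,
         "one": 2028, "of": 1997, "the": 1996}
ids, mask, segs = encode("this is one of the".split(), VOCAB, max_seq_length=10)
print(ids)   # [101, 2023, 2003, 2028, 1997, 1996, 102, 0, 0, 0]
print(mask)  # [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
```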

- 39. Bert: Pretraining #5 (Position Encoding) • The original Transformer uses sin() and cos() functions to encode each token's position (BERT itself learns its position embeddings instead) http://jalammar.github.io/illustrated-transformer/

- 40. Bert: Pretraining #6 (Position Encoding) http://jalammar.github.io/illustrated-transformer/
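
The sine/cosine scheme in the illustrated-transformer post follows the original Transformer formula PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A sketch is below; note that BERT itself learns a 512 × 768 table of position embeddings instead of using this fixed formula.

```python
import math

def positional_encoding(max_len, d_model):
    """Sinusoidal position encoding: sin on even dimensions, cos on odd ones."""
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):            # i plays the role of 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

pe = positional_encoding(max_len=512, d_model=768)
print(pe[0][:4])  # [0.0, 1.0, 0.0, 1.0]
```

Low dimensions oscillate quickly and high dimensions slowly, so every position gets a distinct pattern.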

- 41. Bert: Pretraining #6 ("Study hard") • Step 3: (2) Given a sentence and the sentence after it, BERT must tell whether the second really follows the first (Next Sentence Prediction) • Input to Bert: [CLS] the man went to the store [SEP] he bought a gallon of milk [SEP] • Original text: … the man went to the store. he bought a gallon of milk … → Preprocessing → Predict next sentence → output 1 = Yes • The weights learn from the correct answer • Output from model: text representation vector • Pretraining → Fine Tuning → Decision Model
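
A sketch of how Next Sentence Prediction pairs can be built: half the time sentence B is the real next sentence (label 1 = IsNext), otherwise a random sentence from another document (label 0 = NotNext), with segment ids marking A and B. The function name and example sentences are illustrative assumptions.

```python
import random

def make_nsp_example(doc_sentences, i, other_doc_sentences, rng=random):
    """Build one NSP example from sentence i; other_doc_sentences come from different documents."""
    sent_a = doc_sentences[i].split()
    if rng.random() < 0.5 and i + 1 < len(doc_sentences):
        sent_b, label = doc_sentences[i + 1].split(), 1          # IsNext
    else:
        sent_b, label = rng.choice(other_doc_sentences).split(), 0  # NotNext
    tokens = ["[CLS]"] + sent_a + ["[SEP]"] + sent_b + ["[SEP]"]
    # Segment 0 covers [CLS] A [SEP]; segment 1 covers B [SEP].
    segment_ids = [0] * (len(sent_a) + 2) + [1] * (len(sent_b) + 1)
    return tokens, segment_ids, label

doc = ["the man went to the store", "he bought a gallon of milk"]
other_docs = ["penguins are flightless birds"]
tokens, segment_ids, label = make_nsp_example(doc, 0, other_docs, rng=random.Random(1))
print(label, " ".join(tokens))
```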

- 42. Bert: Pretraining #7 • Step 4: BERT now has a language model, with trained weights • "BERT has graduated, hooray!" • Pretraining → Fine Tuning → Decision Model

- 43. Bert: Pretraining #8 (has weights) • Alternatively, we can simply download pretrained BERT weights from https://github.com/google-research/bert (English) or https://github.com/ThAIKeras/bert (Thai) • "Here, take it! BERT understands human language now!" • Pretraining → Fine Tuning → Decision Model

- 44. (Has weights) Bert: "I'm here to apply for a job."

- 45. (Has weights) "What can you do right now?" "Right now I can turn sentences into numeric vectors."

- 46. BERT's abilities after pretraining (has weights) • Given input text, it outputs a representation of each subword in the context of that sentence • Input: I mean, part of the beauty of me is that I’m very rich. • After preprocessing: [CLS] I mean part of the beaut ##y of me is that I ##m very rich [SEP] [SEP] • Bert (Base) output size = 1 final layer × 1 batch × 512 max length × 768 hidden features = 393,216 output values per sentence (the 12 attention heads of 64 features each are already concatenated into the 768 hidden features, so they do not multiply the size again)
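
The `##` pieces in the tokenized example (`beaut ##y`) come from WordPiece, BERT's subword tokenizer, which splits each word greedily by longest match. A minimal sketch with a hypothetical seven-entry vocabulary; real vocabularies have about 30,000 entries, and real tokenizers also handle lower-casing and punctuation, which this omits.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split; non-initial pieces carry the '##' prefix."""
    pieces, start = [], 0
    while start < len(word):
        end, piece = len(word), None
        while start < end:                 # shrink the candidate until it is in the vocab
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:                  # no piece matches: the whole word is unknown
            return [unk]
        pieces.append(piece)
        start = end
    return pieces

# Hypothetical mini-vocabulary.
VOCAB = {"beaut", "##y", "phil", "##am", "##mon", "rich", "very"}
print(wordpiece("beauty", VOCAB))     # ['beaut', '##y']
print(wordpiece("philammon", VOCAB))  # ['phil', '##am', '##mon']
```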

- 47. (Has weights) "So can you do Sentiment Analysis yet, telling whether a text is positive or negative?" "What is Sentiment Analysis?"

- 48. (Has weights) "Whaaat? Then you can't do real work yet. You have no work experience, so start with an unpaid internship."

- 49. BERT Internship #1 (has weights) • Suppose we want BERT to work as a sentiment classifier for us (Pos, Neg, Neu) • We can write our own classification model on top of BERT's output; it can be anything we like: Logit, DNN, or LSTM • Input: I mean part of the beauty of me is that I’m very rich. • After preprocessing: [CLS] I mean part of the beaut ##y of me is that I ##m very rich [SEP] [SEP] • Sentiment classifier (Logit, DNN, LSTM, or anything else) written on top of BERT's output • Bert: "What the heck is sentiment? I'm totally confused, I know nothing at all." • Pretraining → Fine Tuning → Decision Model
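
A minimal sketch of the simplest head on the slide (Logit, i.e. multinomial logistic regression) applied to the final-layer vector at the [CLS] position. The 4-dimensional vector and the weights are hypothetical placeholders; with BERT-Base the vector would have 768 dimensions and the weights would be learned.

```python
import math

LABELS = ["Pos", "Neg", "Neu"]

def softmax(logits):
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(cls_vector, weights, bias):
    """Logistic-regression head: logits = W·h + b (one row of W per label), then softmax."""
    logits = [sum(w * h for w, h in zip(row, cls_vector)) + b
              for row, b in zip(weights, bias)]
    probs = softmax(logits)
    return LABELS[probs.index(max(probs))], probs

# Hypothetical [CLS] vector and head weights.
cls_vector = [0.2, -1.0, 0.5, 0.0]
weights = [[1.0, 0.0, 0.0, 0.0],   # Pos
           [0.0, -1.0, 0.0, 0.0],  # Neg
           [0.0, 0.0, 0.0, 1.0]]   # Neu
label, probs = classify(cls_vector, weights, bias=[0.0, 0.0, 0.0])
print(label)  # Neg
```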

- 50. BERT Internship #2 • Find a dataset with class labels (Pos, Neg, Neu) to teach (fine-tune) Bert • For example: • Sentiment140 (English) (https://www.kaggle.com/kazanova/sentiment140) • Wongnai Corpus (ratings 1-5) (https://github.com/wongnai/wongnai-corpus) • At least 10,000 rows (the more, the better) • Pretraining → Fine Tuning → Decision Model

- 51. BERT Internship #3 (Fine Tuning) • Training example from Sentiment140: "is upset that he can't update his Facebook by texting it..." + label SAD • After preprocessing: [CLS] is upset that he can't update his face book by texting it [SEP] [SEP] • Our sentiment classifier (Logits, Dense, LSTM, or any model) written on top of BERT's output: weights learning • Bert layers: weights frozen • Classifier guesses "Happy?" → "It is actually SAD, go re-learn." → it learns and adapts from the correct answer • Pretraining → Fine Tuning → Decision Model
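
The training loop in the figure can be sketched as logistic regression fitted by gradient descent on features that stay fixed. This matches the figure, where only the classifier learns and BERT's weights are frozen (a feature-based setup; the full fine-tuning described in the BERT paper also updates BERT's own weights). The 2-D synthetic clusters stand in for real [CLS] vectors, and the encoding 1 = SAD / 0 = HAPPY is an assumption.

```python
import math
import random

def train_head(features, labels, epochs=200, lr=0.5):
    """Fit a logistic-regression head on frozen feature vectors with batch gradient descent."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            p = 1 / (1 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))
            err = p - y  # gradient of cross-entropy loss w.r.t. the logit
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        # Only the head's weights change; the input features (BERT's output) never do.
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

# Synthetic stand-ins for frozen [CLS] vectors: two well-separated clusters.
rng = random.Random(0)
sad = [[rng.gauss(1.0, 0.3), rng.gauss(-1.0, 0.3)] for _ in range(50)]
happy = [[rng.gauss(-1.0, 0.3), rng.gauss(1.0, 0.3)] for _ in range(50)]
X, y = sad + happy, [1] * 50 + [0] * 50
w, b = train_head(X, y)
accuracy = sum(predict(w, b, x) == t for x, t in zip(X, y)) / len(X)
print(f"training accuracy: {accuracy:.2f}")
```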

- 52. BERT Internship #4 (Fine Tuning) • And so we end up with a classifier that predicts sentiment from BERT • Input: [CLS] is upset that he can't update his face book by texting it [SEP] [SEP] • Our sentiment classifier (DNN, LSTM, or any model you want): weights learned • Bert layers: weights frozen • Output: SAD • "Passed probation!" • Pretraining → Fine Tuning → Decision Model

- 53. Bert: Fine-tuning for several tasks https://github.com/google-research/bert

