Thai Word Embedding with Tensorflow


This document discusses using word embeddings and the Word2Vec model for natural language processing tasks in TensorFlow. It explains that word embeddings are needed to capture relationships between words because text, unlike images, is made of discrete symbols that carry no inherent relationship to one another the way neighboring pixels do. Word2Vec is an efficient predictive model that learns word embeddings from raw text using either the Continuous Bag-of-Words or the Skip-Gram architecture, trained with negative sampling to discriminate real target words from imaginary (noise) words. The tutorial aims to teach how to perform NLP tasks in TensorFlow using Word2Vec to learn word embeddings from a text corpus.
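As a rough illustration of the Skip-Gram and negative-sampling idea summarized above, the following sketch builds labelled training examples in plain Python. It is not taken from the slides: the function names `skipgram_pairs` and `with_negative_samples`, the toy English tokens, and the uniform noise sampling are all assumptions made for the example. Word2Vec itself draws noise words from a smoothed unigram distribution, and Thai text would first need word segmentation, which is not shown here.

```python
import random

def skipgram_pairs(tokens, window=2):
    """Return (target, context) pairs for tokens within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def with_negative_samples(pairs, vocab, k=2, seed=0):
    """Label real pairs 1 and add up to k noise pairs labelled 0 per real pair.

    Assumption: noise words are drawn uniformly, which is a simplification.
    """
    rng = random.Random(seed)
    examples = []
    for target, context in pairs:
        examples.append((target, context, 1))
        candidates = [w for w in vocab if w != target and w != context]
        for noise in rng.sample(candidates, min(k, len(candidates))):
            examples.append((target, noise, 0))
    return examples

# Hypothetical pre-tokenized input.
tokens = ["the", "quick", "brown", "fox", "jumps"]
pairs = skipgram_pairs(tokens, window=1)
examples = with_negative_samples(pairs, sorted(set(tokens)), k=2)
```

A model would then be trained to score the label-1 pairs high and the label-0 pairs low, and the learned input weights serve as the word embeddings.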


Recommended

Vanishing & Exploding Gradients

By Siddharth Vij. Vanishing gradients occur when error gradients become very small during backpropagation, hindering convergence. This can happen with saturating activation functions like sigmoid and tanh: the sigmoid derivative never exceeds 0.25 and the tanh derivative never exceeds 1, so products of many such terms shrink toward zero. Earlier layers are affected more because their gradients contain more multiplicative terms. ReLU activations help because their derivative is 1 for positive inputs. Proper weight initialization also helps prevent vanishing gradients. Exploding gradients occur when error gradients become very large, disrupting learning. They can be addressed through lower learning rates, gradient clipping, and gradient scaling.

Data decomposition techniques

By Mohamed Ramadan. This document discusses different techniques for decomposing data and computations into parallel tasks, including output data partitioning, input data partitioning, partitioning intermediate data, exploratory decomposition of search spaces, speculative decomposition, and hybrid approaches. It provides examples and diagrams to illustrate how to apply these techniques to problems like matrix multiplication, counting item frequencies, and the 15-puzzle problem. Key characteristics of the derived tasks, such as task generation, task sizes, and data associations, are also covered.

Parallel computing chapter 3

By Md. Mahedi Mahfuj. This chapter discusses principles of scalable performance for parallel systems. It covers performance measures like speedup factors and parallelism profiles. The key principles discussed include degree of parallelism, average parallelism, asymptotic speedup, efficiency, utilization, and quality of parallelism. Performance models like Amdahl's law and isoefficiency concepts are presented. Standard performance benchmarks and characteristics of parallel applications and algorithms are also summarized.

Game Playing in Artificial Intelligence

By lordmwesh. Game Playing in Artificial Intelligence, a computer science field, presented by Mwendwa Kivuva at the Catholic University of Eastern Africa.

Google Duplex

By Deepak Sanaka. Google Duplex is the technology that gives Google Assistant the ability to make phone calls and sound 'human'. It allows certain users to make a restaurant reservation by phone, but instead of the user speaking directly to the restaurant employee, Google Duplex, with the help of Google Assistant, speaks for the user with an AI-based but human-sounding voice. Using Google’s Duplex technology, Assistant will place a phone call to your chosen restaurant, have a voice conversation with the employee at the other end, and send you a confirmation that the reservation was successful and is set.

Projection Matrices

By Syed Zaid Irshad. Projection matrices are used to project 3D scenes onto a 2D viewport. OpenGL uses normalization to convert all projections to orthogonal projections within the default view volume. This allows using standard transformations in the graphics pipeline and efficient clipping. Oblique projections involve a shear transformation followed by an orthogonal projection. Perspective projections are achieved by applying a perspective matrix that maps the near and far planes to the default clipping volume while preserving depth ordering for hidden surface removal.

cnn ppt.pptx

By rohithprabhas1. Convolutional neural networks (CNNs) are a type of deep neural network commonly used for analyzing visual imagery. CNNs use various techniques like convolution, ReLU activation, and pooling to extract features from images and reduce dimensionality while retaining important information. CNNs are trained end-to-end using backpropagation to update filter weights and minimize output error. Overall CNN architecture involves an input layer, multiple convolutional and pooling layers to extract features, fully connected layers to classify features, and an output layer. CNNs can be implemented using sequential models in Keras by adding layers, compiling with an optimizer and loss function, fitting on training data over epochs with validation monitoring, and evaluating performance on test data.

Understanding lstm and its diagrams

By JEE HYUN PARK. The document discusses Long Short-Term Memory (LSTM) networks, which were invented to address issues with vanilla recurrent neural networks like vanishing and exploding gradients. It explains the key components of an LSTM unit: the memory pipe, forget valve, new memory valve, generation of new output, and output valve. Diagrams are included to illustrate how an LSTM unit works by controlling what information to store, forget, or output from the memory pipe over time through these valves.

Triple Data Encryption Standard (t-DES)

By Hardik Manocha. The project consists of individual encryption and decryption modules implementing the standard T-DES algorithm. Work is in progress to integrate DES with AES to develop a stronger cryptographic algorithm, test it against side-channel attacks, and compare different algorithms.

Artificial Intelligence Notes Unit 1

By DigiGurukul. This document provides an overview of artificial intelligence (AI) including definitions of AI, different approaches to AI (strong/weak, applied, cognitive), goals of AI, the history of AI, and comparisons of human and artificial intelligence. Specifically:

1) AI is defined as the science and engineering of making intelligent machines, and involves building systems that think and act rationally.

2) The main approaches to AI are strong/weak, applied, and cognitive AI. Strong AI aims to build human-level intelligence while weak AI focuses on specific tasks.

3) The goals of AI include replicating human intelligence, solving complex problems, and enhancing human-computer interaction.

4) The history of AI

Instruction level parallelism

By deviyasharwin. This document discusses instruction level parallelism (ILP) and how it can be used to improve performance by overlapping the execution of instructions through pipelining. ILP refers to the potential overlap among instructions within a basic block. Factors like dynamic branch prediction and compiler dependence analysis can impact the ideal pipeline CPI and number of data hazard stalls. Loop level parallelism refers to the parallelism available across iterations of a loop. Dependencies between instructions, if not properly handled, can limit parallelism and require instructions to execute in order. The three types of dependencies are data, name, and control dependencies.

Genetic algorithm

By Jari Abbas. Using artificial intelligence to understand the basics of evolutionary computation through the genetic algorithm.

OS-Process Management

By anand hd. Process and programs

Programmer view of processes

OS view of processes

Kernel view of processes

Threads

Space complexity-DAA.pptx

By mounikanarra3. The document discusses space complexity, which refers to the amount of memory required by an algorithm. It explains how to calculate the space complexity of an algorithm by considering the fixed parts, like code and constants, and variable parts, like arrays and recursion stacks. It provides an example of calculating the space complexity of an addition algorithm as O(c+3) words and a recursive sum algorithm as O(c+4n+4) words.

Optimization for Neural Network Training - Veronica Vilaplana - UPC Barcelona...

By Universitat Politècnica de Catalunya. https://telecombcn-dl.github.io/2018-dlai/

Deep learning technologies are at the core of the current revolution in artificial intelligence for multimedia data analysis. The convergence of large-scale annotated datasets and affordable GPU hardware has allowed the training of neural networks for data analysis tasks which were previously addressed with hand-crafted features. Architectures such as convolutional neural networks, recurrent neural networks or Q-nets for reinforcement learning have shaped a brand new scenario in signal processing. This course will cover the basic principles of deep learning from both algorithmic and computational perspectives.

Operating System-Threads-Galvin

By Sonali Chauhan. Threads allow a process to divide work into multiple simultaneous tasks. On a single processor system, multithreading uses fast context switching to give the appearance of simultaneity, while on multi-processor systems the threads can truly run simultaneously. There are benefits to multithreading like improved responsiveness and resource sharing.

Network Security- Secure Socket Layer

By Dr.Florence Dayana. Definition, SSL Concepts Connection and Service, SSL Architecture, SSL Record Protocol, Record Format, Higher Layer Protocol, Handshake Protocol, Change Cipher Specification and Alert Protocol

Flutter + tensor flow lite = awesome sauce

By Amit Sharma. This document provides an overview of machine learning concepts and the TensorFlow environment. It discusses machine learning algorithms categorized by learning style and type. Deep learning frameworks like TensorFlow and Keras are introduced. Transfer learning and converting models to TensorFlow Lite format for mobile deployment are covered. Finally, the document discusses Flutter app architecture and how to integrate a machine learning model into a Flutter mobile app.

Java Platform Security Architecture

By Ramesh Nagappan. This document provides an overview of Java platform security architecture. It discusses how security is built into the Java virtual machine and language. It describes key security concepts like protection domains, permissions, policies, security managers, access controllers, bytecode verification, classloaders, and how they provide a secure execution environment. It also covers security for Java applets and how digital signatures can be used to trust applets.

Layered architecture style

By Begench Suhanov. This document discusses architectural styles and describes the layered pattern. It defines architectural styles as descriptions of component and connector types that constrain how they relate. The layered pattern organizes a system into hierarchical layers, with each layer providing services to the layer above and acting as a client to the layer below. Advantages include independence of layers, reusability, flexibility and maintainability, while disadvantages include potential performance issues with too many layers. Examples given include operating systems and virtual machines.

Video Indexing And Retrieval

By Yvonne M. Video indexing involves segmenting, analyzing, and abstracting video content into various levels including sequence, scene, shot, frame, and object. It can involve both low-level indexing based on visual features and high-level indexing focusing on semantic content. However, fully automated semantic indexing of large amounts of video data remains a challenge due to issues like the dynamic and interpretive nature of video versus text. Standards like MPEG-7 and Dublin Core along with metadata are used to aid in cataloging and retrieving video content for various applications and user needs.

Deadlock in Operating System

By AUST. These slides discuss deadlock: its causes, effects, and conditions, the resource allocation graph, deadlock handling strategies, deadlock prevention, deadlock avoidance, and deadlock resolution. The algorithms section is not covered in these slides.

Locking base concurrency control

By Prakash Poudel. This document summarizes research on lock-based concurrency control for distributed database management systems (DDBMS). It defines lock-based algorithms and protocols like two-phase locking (2PL) that ensure serializable access to shared data. The 2PL protocol is discussed in centralized, primary copy, and distributed implementations for DDBMS. The communication structure of distributed 2PL is also outlined, with lock managers coordinating access across database sites. In conclusion, lock-based concurrency control using 2PL is commonly used to achieve consistency while allowing maximum concurrency in transaction processing.

Distributed shared memory shyam soni

By Shyam Soni. Distributed shared memory (DSM) allows nodes in a cluster to access shared memory across the cluster in addition to each node's private memory. DSM uses a software memory manager on each node to map local memory into a virtual shared memory space. It consists of nodes connected by high-speed communication and each node contains components associated with the DSM system. Algorithms for implementing DSM deal with distributing shared data across nodes to minimize access latency while maintaining data coherence with minimal overhead.

An overview of grid monitoring

By Manoj Prabhakar. Grid monitoring aims to measure and publish the state of distributed computing resources in real-time. Autopilot is an infrastructure that provides real-time adaptive control of distributed resources. It uses sensors to monitor applications and systems, and actuators to control application behavior and sensor operations. The Autopilot manager registers sensors and actuators and allows clients to access resource information.

Introduction to TLS-1.3

By Vedant Jain. TLS 1.3 is an update to the Transport Layer Security protocol that improves security and privacy. It removes vulnerable optional parts of TLS 1.2 and only supports strong ciphers to implement perfect forward secrecy. The handshake process is also significantly shortened. TLS 1.3 provides security benefits by removing outdated ciphers and privacy benefits by enabling perfect forward secrecy by default, ensuring only endpoints can decrypt traffic even if server keys are compromised in the future.

The origin and evaluation criteria of aes

By MDKAWSARAHMEDSAGAR. This is the content for a Cryptography course on the topic of the origin and evaluation criteria of the Advanced Encryption Standard (AES).

Block cipher modes of operation

By harshit chavda. This presentation covers block cipher modes of operation, which are used to encrypt and decrypt messages and are defined by the National Institute of Standards and Technology (NIST). Block cipher modes of operation are part of symmetric-key encryption algorithms.

I hope you like it.

SNLI_presentation_2

By Viral Gupta. This document discusses natural language inference and summarizes the key points as follows:

1. The document describes the problem of natural language inference, which involves classifying the relationship between a premise and hypothesis sentence as entailment, contradiction, or neutral. This is an important problem in natural language processing.

2. The SNLI dataset is introduced as a collection of half a million natural language inference problems used to train and evaluate models.

3. Several approaches for solving the problem are discussed, including using word embeddings, LSTMs, CNNs, and traditional bag-of-words models. Results show LSTMs and CNNs achieve the best performance.

NLP_KASHK:Evaluating Language Model

By Hemantha Kulathilake. This document discusses methods for evaluating language models, including intrinsic and extrinsic evaluation. Intrinsic evaluation involves measuring a model's performance on a test set using metrics like perplexity, which is based on how well the model predicts the test set. Extrinsic evaluation embeds the model in an application and measures the application's performance. The document also covers techniques for dealing with unknown words like replacing low-frequency words with <UNK> and estimating its probability from training data.

Similar to Thai Word Embedding with Tensorflow (20)

NLP Bootcamp

By Anuj Gupta. The document provides information about an upcoming bootcamp on natural language processing (NLP) being conducted by Anuj Gupta. It discusses Anuj Gupta's background and experience in machine learning and NLP. The objective of the bootcamp is to provide a deep dive into state-of-the-art text representation techniques in NLP and help participants apply these techniques to solve their own NLP problems. The bootcamp will be very hands-on and cover topics like word vectors, sentence/paragraph vectors, and character vectors over two days through interactive Jupyter notebooks.

NLP Bootcamp 2018 : Representation Learning of text for NLP

By Anuj Gupta. The document provides an outline for a workshop on representation learning of text for natural language processing (NLP). The workshop will be divided into 4 modules covering both foundational techniques like one-hot encoding and bag-of-words as well as state-of-the-art methods like word, sentence, and character vectors. The objective is for participants to gain a deeper understanding of the key ideas, math, and code behind text representation techniques in order to apply them to solve NLP problems and achieve higher accuracies and understanding.

Word2vec slide(lab seminar)

By Jinpyo Lee. #Korean

This presentation summarizes what I learned from reading papers on word2vec.

Some details are omitted because there is still much I do not understand.

Criticism and feedback are welcome.

wordembedding.pptx

By JOBANPREETSINGH62. Word embedding is a technique in natural language processing where words are represented as dense vectors in a continuous vector space. These representations are designed to capture semantic and syntactic relationships between words based on their distributional properties in large amounts of text. Two popular word embedding models are Word2Vec and GloVe. Word2Vec uses a shallow neural network to learn word vectors that place words with similar meanings close to each other in the vector space. GloVe is an unsupervised learning algorithm that trains word vectors based on global word-word co-occurrence statistics from a corpus.

Word embedding

By ShivaniChoudhary74. Word embedding, vector space model, language modelling, neural language model, Word2Vec, GloVe, fastText, ELMo, BERT, DistilBERT, RoBERTa, SBERT, Transformer, attention

Word_Embedding.pptx

By NameetDaga1. The document discusses word embedding techniques used to represent words as vectors. It describes Word2Vec as a popular word embedding model that uses either the Continuous Bag of Words (CBOW) or Skip-gram architecture. CBOW predicts a target word based on surrounding context words, while Skip-gram predicts surrounding words given a target word. These models represent words as dense vectors that encode semantic and syntactic properties, allowing operations like word analogy questions.

A Neural Probabilistic Language Model

A Neural Probabilistic Language ModelRama Irsheidat A Neural Probabilistic Language Model.pptx

Bengio, Yoshua, et al. "A neural probabilistic language model." Journal of machine learning research 3.Feb (2003): 1137-1155.

A goal of statistical language modeling is to learn the joint probability function of sequences of words in a language. This is intrinsically difficult because of the curse of dimensionality: a word sequence on which the model will be tested is likely to be different from all the word sequences seen during training. Traditional but very successful approaches based on n-grams obtain generalization by concatenating very short overlapping sequences seen in the training set. We propose to fight the curse of dimensionality by learning a distributed representation for words which allows each training sentence to inform the model about an exponential number of semantically neighboring sentences. The model learns simultaneously (1) a distributed representation for each word along with (2) the probability function for word sequences, expressed in terms of these representations. Generalization is obtained because a sequence of words that has never been seen before gets high probability if it is made of words that are similar (in the sense of having a nearby representation) to words forming an already seen sentence. Training such large models (with millions of parameters) within a reasonable time is itself a significant challenge. We report on experiments using neural networks for the probability function, showing on two text corpora that the proposed approach significantly improves on state-of-the-art n-gram models, and that the proposed approach allows to take advantage of longer contexts.

NLP_KASHK:N-Grams

NLP_KASHK:N-GramsHemantha Kulathilake The document discusses N-gram language models, which assign probabilities to sequences of words. An N-gram is a sequence of N words, such as a bigram (two words) or trigram (three words). The N-gram model approximates the probability of a word given its history as the probability given the previous N-1 words. This is called the Markov assumption. Maximum likelihood estimation is used to estimate N-gram probabilities from word counts in a corpus.

Warnik Chow - 2018 HCLT

Warnik Chow - 2018 HCLTWarNik Chow Won Ik Cho, Ttuyssubot, 2018_HCLT

Ttuyssubot: An automatic spacer for conversation-style and non-canonical, non-normalized Korean utterances

# Motivation

- How about automatically spacing the user-generated texts?

- For both a real-time chat and a post upload?

- Not mandatory; options can be provided

Useful for digitally marginalized people

(and also for the users who find annoyance in spacing)

# Conclusion

- Deep learning-based automatic spacer is implemented based on the corpus with conversation-style and non-canonical utterances

- The system utilizes dense character vectors from fastText and parallel placement of CNN/BiLSTM

- Web-based service for digitally marginalized people is suggested

- Subjective measure and test utterance set for quantitative analysis is under construction

Demonstration

https://github.com/warnikchow/ttuyssubot

https://www.youtube.com/watch?v=mcPZVpKCH94&feature=youtu.be

Lecture 6

Lecture 6hunglq This document discusses language models and their evaluation. It begins with an overview of n-gram language models, which assign probabilities to sequences of tokens based on previous word histories. It then discusses techniques for dealing with data sparsity in language models, such as smoothing methods like Laplace smoothing and Good-Turing estimation. Finally, it covers evaluating language models using perplexity, which measures how surprised a language model is by the next words in a test set. Lower perplexity indicates a better language model.

RNN.pdf

RNN.pdfNiharikaThakur32 Modeling sequential data using recurrent neural networks can be done as follows:

1. Text data is first vectorized using word embeddings to convert words to dense vectors before being fed into the model.

2. A recurrent neural network such as an LSTM network is used, which can learn patterns from sequences of vectors.

3. For text classification tasks, an LSTM layer is often stacked on top of an embedding layer to learn temporal patterns in the word sequences. Additional LSTM layers may be stacked and their outputs combined to learn higher-level patterns.

4. The LSTM outputs are then passed to dense layers for classification or regression tasks. The model is trained end-to-end using backpropagation.

Dataworkz odsc london 2018

Dataworkz odsc london 2018Olaf de Leeuw This document summarizes a presentation on using an LSTM neural network to predict bitcoin price movements based on sentiment analysis of twitter data. It describes collecting over 1 million tweets related to bitcoin, representing the words in the tweets as word vectors, training an LSTM model on the vectorized tweet data with sentiment labels, and evaluating whether the predicted sentiment correlates with bitcoin price changes. While the results did not find a relationship between sentiment and price according to this model, improvements are discussed such as using a training set more similar to the actual tweet data.

Generating Natural-Language Text with Neural Networks

Generating Natural-Language Text with Neural NetworksJonathan Mugan Automatic text generation enables computers to summarize text, to have conversations in customer-service and other settings, and to customize content based on the characteristics and goals of the human interlocutor. Using neural networks to automatically generate text is appealing because they can be trained through examples with no need to manually specify what should be said when. In this talk, we will provide an overview of the existing algorithms used in neural text generation, such as sequence2sequence models, reinforcement learning, variational methods, and generative adversarial networks. We will also discuss existing work that specifies how the content of generated text can be determined by manipulating a latent code. The talk will conclude with a discussion of current challenges and shortcomings of neural text generation.

Introduction to Neural Information Retrieval and Large Language Models

Introduction to Neural Information Retrieval and Large Language Modelssadjadeb In this presentation, I provide a comprehensive introduction to information retrieval and large language models, beginning with the history of IR, continuing with embedding approaches, and finishing with common retrieval architectures and a code session on getting familiar with Hugging Face and using LLMs for ranking.

Ruby Egison

Ruby EgisonRakuten Group, Inc. This slide is presented at RubyKaigi 2014 on Sep 18.

We have designed and implemented the library that realizes non-linear pattern matching against unfree data types. We can directly express pattern-matching against lists, multisets, and sets using this library.

The library is already released via RubyGems.org as one of gems.

The expressive power of this gem derives from the theory behind the Egison programming language and is so strong that we can write poker-hands analyzer in a single pattern matching expression. This is impossible even in any other state-of-the-art programming language.

Pattern matching is one of the most important features of programming language for intuitive representation of algorithms.

Our library simplifies code by replacing not only complex conditional branches but also nested loops with an intuitive pattern-matching expression.

The Search for a New Visual Search Beyond Language - StampedeCon AI Summit 2017

The Search for a New Visual Search Beyond Language - StampedeCon AI Summit 2017StampedeCon Words are no longer sufficient in delivering the search results users are looking for, particularly in relation to image search. Text and languages pose many challenges in describing visual details and providing the necessary context for optimal results. Machine Learning technology opens a new world of search innovation that has yet to be applied by businesses.

In this session, Mike Ranzinger of Shutterstock will share a technical presentation detailing his research on composition aware search. He will also demonstrate how the research led to the launch of AI technology allowing users to more precisely find the image they need within Shutterstock’s collection of more than 150 million images. While the company released a number of AI search enabled tools in 2016, this new technology allows users to search for items in an image and specify where they should be located within the image. The research identifies the networks that localize and describe regions of an image as well as the relationships between things. The goal of this research was to improve the future of search using visual data, contextual search functions, and AI. A combination of multiple machine learning technologies led to this breakthrough.

More from Kobkrit Viriyayudhakorn (20)

Thai National ID Card OCR

Thai National ID Card OCRKobkrit Viriyayudhakorn Convert Thai National ID Card Image to Thai Text in JSON format.

(https://iapp.co.th/en/thai-national-id-card-ocr/)

Chochae Robot - Thai voice communication extension pack for Service Robot

Chochae Robot - Thai voice communication extension pack for Service RobotKobkrit Viriyayudhakorn Chochae Robot is now compatible with Temi and UBTECH Cruzer robots. Developed by iApp Technology Co., Ltd. (https://iapp.co.th/en/chochae-robot/)

The Potential of AI Toward New Opportunities for Competition and Success (Thai AI updates in yea...

The Potential of AI Toward New Opportunities for Competition and Success (Thai AI updates in yea...Kobkrit Viriyayudhakorn Slides from my talk at Metalex 2019, covering:

(1) The potential of AI in various fields, in Thailand and abroad, including NLP, RL, robots, etc.

(2) New opportunities from AI

(3) How to win the competition and achieve success

Thai Text processing by Transfer Learning using Transformer (Bert)

Thai Text processing by Transfer Learning using Transformer (Bert)Kobkrit Viriyayudhakorn This document discusses transfer learning using Transformers (BERT) in Thai. It begins by outlining the topics to be covered, including an overview of deep learning for text processing, the BERT model architecture, pre-training, fine-tuning, state-of-the-art results, and alternatives to BERT. It then explains why transfer learning with Transformers is interesting due to its strong performance on tasks like question answering and intent classification in Thai. The document dives into details of BERT's pre-training including masking words and predicting relationships between sentences. In the end, BERT has learned strong language representations that can then be fine-tuned for downstream tasks.

How Emoticon Affects Chatbot Users

How Emoticon Affects Chatbot UsersKobkrit Viriyayudhakorn The document summarizes 4 studies on how emoticon usage affects perceptions of chatbots and customer service representatives. Study 1 found that emoticons were perceived as warmer but less competent. Study 2 found that customers oriented towards communal relationships preferred emoticons more, while those oriented towards exchange relationships preferred no emoticons. Study 3 examined unsatisfactory service situations and found preferences flipped towards preferring no emoticons. Study 4 examined extra services and found preferences went back to preferring emoticons. The conclusion recommends limiting emoticon use for professional services but using them for communal businesses, and avoiding them for unsatisfactory situations but using for extra services.

The Heart of Artificial Intelligence (How Gradient Descent Works)

The Heart of Artificial Intelligence (How Gradient Descent Works)Kobkrit Viriyayudhakorn The heart of artificial intelligence (how gradient descent works) and the excitement of Deep Learning

Check Raka Chatbot Pitching Presentation

Check Raka Chatbot Pitching PresentationKobkrit Viriyayudhakorn I had the chance to pitch the CheckRaka chatbot, which lets you check products and services conveniently just by chatting with the "CheckRaka" chatbot, at the Thailand 4.0 SmartCity event organized by KMITL and the Council of University Presidents of Thailand.

[Lecture 3] AI and Deep Learning: Logistic Regression (Coding)

[Lecture 3] AI and Deep Learning: Logistic Regression (Coding)Kobkrit Viriyayudhakorn This document summarizes key concepts about implementing logistic regression using vectorization. It discusses calculating the cost function and gradients for logistic regression, and how to vectorize the calculations to make them more efficient. It provides code examples of logistic regression using a for loop and using vectorization. It also discusses concepts like broadcasting that are important for understanding how vectorization works in practice.

[Lecture 4] AI and Deep Learning: Neural Network (Theory)

[Lecture 4] AI and Deep Learning: Neural Network (Theory)Kobkrit Viriyayudhakorn This lecture discusses shallow neural networks. It introduces neural network concepts like network representation, vectorizing training examples, and activation functions such as sigmoid, tanh, and ReLU. It explains that activation functions introduce non-linearity crucial for neural networks. The lecture also covers backpropagation for computing gradients in neural networks and updating weights with gradient descent. Random initialization is discussed as important for breaking symmetry in networks.

[Lecture 2] AI and Deep Learning: Logistic Regression (Theory)

[Lecture 2] AI and Deep Learning: Logistic Regression (Theory)Kobkrit Viriyayudhakorn Theory for Logistic Regression. Cost Function. Feed Forward. Single neuron neural network. Back propagation.

ITS488 Lecture 6: Music and Sound Effect & GVR Try out.

ITS488 Lecture 6: Music and Sound Effect & GVR Try out.Kobkrit Viriyayudhakorn This document provides instructions for adding music and sound effects to a Unity game project. It discusses importing the Audio Kit asset and configuring audio sources to play music loops. It also describes attaching sound effects to game objects using audio sources and code to trigger the sounds. Exercises are included to add scoring sounds and looping game over sounds. The document then shifts to discussing Google VR and how to set up a Unity project for virtual reality using the Google VR SDK. It covers importing the GVR package, testing on iOS, and using GVR prefabs to enable head tracking and controller input in VR.

Lecture 12: React-Native Firebase Authentication

Lecture 12: React-Native Firebase AuthenticationKobkrit Viriyayudhakorn 1. The document discusses adding authentication to a chat app using Firebase Authentication.

2. It describes enabling authentication methods like email/password, setting up listeners to handle authentication state changes, and signing users in, out, and up.

3. It also covers adding UI components for logging in, registering, resetting passwords, and logging out with the authentication methods.

Unity Google VR Cardboard Deployment on iOS and Android

Unity Google VR Cardboard Deployment on iOS and AndroidKobkrit Viriyayudhakorn 1. The document discusses deploying a Unity game to iOS and Android for Google Cardboard virtual reality. It provides steps for building the Unity project for both platforms, including configuring plugins, signing with developer credentials, and enabling VR support.

2. Instructions are given for setting up the development environments for both iOS and Android, including downloading SDKs and plugins.

3. The steps also cover configuring project settings in Unity for both platforms, such as changing bundle identifiers and package names, and enabling VR support for Cardboard.

ITS488 Lecture 4: Google VR Cardboard Game Development: Basket Ball Game #2

ITS488 Lecture 4: Google VR Cardboard Game Development: Basket Ball Game #2Kobkrit Viriyayudhakorn This document provides instructions for creating a basketball shooting game in Unity. It discusses adding targets that increase the score when hit by a ball. It describes implementing a global score system across multiple targets rather than a local score per target. It also covers creating additional scenes for a splash screen and game over screen. Instructions are provided for moving between scenes, adding UI elements like buttons and text, importing images and fonts, and displaying the score and timer in the game. The exercises challenge the reader to complete various parts of the game implementation, like adding a play again button, progressing to the next level after a set time, and displaying the final score in the game over scene.

Lecture 4: ITS488 Digital Content Creation with Unity - Game and VR Programming

Lecture 4: ITS488 Digital Content Creation with Unity - Game and VR Programming Kobkrit Viriyayudhakorn This document provides instructions for creating a basketball shooting game in Unity with multiple scenes. It describes how to set up score counting across multiple targets, create prefabs, and move between scenes on key presses or timer events. The steps include adding targets, collision detection scripts, a global score counter, and level manager for scene transitions. Images and text are added to canvas elements for menus. Buttons are created and linked to level loading methods to trigger scene changes. Exercise tasks expand on these concepts to complete the game functionality.

Lecture 2: C# Programming for VR application in Unity

Lecture 2: C# Programming for VR application in UnityKobkrit Viriyayudhakorn This document provides an overview and instructions for a C# programming lecture on creating a gold miner game in Unity. It discusses troubleshooting resources, writing code in Unity using MonoDevelop IDE, code structure, printing text to the console, attaching scripts to game objects, variables, conditional statements, planning the game, pseudocode, reading user input, updating location, using classes and objects, methods, and key terminology. The goal is to program a game where the player controls a gold miner to navigate a world and find a gold pit by pressing arrow keys to move while the distance to the pit is displayed, with the objective of finding it in as few turns as possible.

Lecture 1 Introduction to VR Programming

Lecture 1 Introduction to VR ProgrammingKobkrit Viriyayudhakorn This document provides an overview of an introduction to VR programming lecture. It introduces the course instructors, Prof. Shigekazu Sakai and Dr. Kobkrit Viriyayudhakorn, and outlines their respective course contents. It also covers an introduction to Unity and the basics of creating a script and attaching it to a game object in Unity. Some key points covered include an overview of VR devices and platforms, game design fundamentals like defining a problem and concept, and how to get started with programming in Unity using C#.

Lecture 3 - ES6 Script Advanced for React-Native

Lecture 3 - ES6 Script Advanced for React-NativeKobkrit Viriyayudhakorn This document summarizes a lecture on ES6/ES2015 features and best practices for objects, arrays, strings, classes, and modules in JavaScript. It provides examples and exercises for working with these data types and concepts. It also outlines several style guides for writing clean code when working with objects, arrays, strings, classes, and modules.

How to make software that people love

How to make software that people loveKobkrit Viriyayudhakorn I had the opportunity to give a talk on Lean Startup, titled "How to make software that people love", at the grant award ceremony of the 19th National Software Contest of Thailand (NSC 2017).

The talk covered the origins of Lean Startup, from the time Eric Ries ran a startup called IMVU, summarized in simple Thai so that school and university students could follow.

I also walked through a simple picture of the Lean Startup process, with examples and my own experience of running a startup.

Anyone interested is welcome to take a look. (Many thanks to Nong Bai as well.)

Recently uploaded (20)

computer organization and assembly language.docx

computer organization and assembly language.docxalisoftwareengineer1 Computer organization and assembly language: covers types of programming languages along with descriptions of variables and arrays. https://www.nfciet.edu.pk/

Safety Innovation in Mt. Vernon A Westchester County Model for New Rochelle a...

Safety Innovation in Mt. Vernon A Westchester County Model for New Rochelle a...James Francis Paradigm Asset Management By James Francis, CEO of Paradigm Asset Management

In the landscape of urban safety innovation, Mt. Vernon is emerging as a compelling case study for neighboring Westchester County cities. The municipality’s recently launched Public Safety Camera Program not only represents a significant advancement in community protection but also offers valuable insights for New Rochelle and White Plains as they consider their own safety infrastructure enhancements.

Deloitte Analytics - Applying Process Mining in an audit context

Deloitte Analytics - Applying Process Mining in an audit contextProcess mining Evangelist Mieke Jans is a Manager at Deloitte Analytics Belgium. She learned about process mining from her PhD supervisor while she was collaborating with a large SAP-using company for her dissertation.

Mieke extended her research topic to investigate the data availability of process mining data in SAP and the new analysis possibilities that emerge from it. It took her 8-9 months to find the right data and prepare it for her process mining analysis. She needed insights from both process owners and IT experts. For example, one person knew exactly how the procurement process took place at the front end of SAP, and another person helped her with the structure of the SAP-tables. She then combined the knowledge of these different persons.

Principles of information security Chapter 5.ppt

Principles of information security Chapter 5.pptEstherBaguma Principles of information security Chapter 5.ppt

Developing Security Orchestration, Automation, and Response Applications

Developing Security Orchestration, Automation, and Response ApplicationsVICTOR MAESTRE RAMIREZ Developing Security Orchestration, Automation, and Response Applications

Calories_Prediction_using_Linear_Regression.pptx

Calories_Prediction_using_Linear_Regression.pptxTijiLMAHESHWARI Calorie prediction using machine learning

Defense Against LLM Scheming 2025_04_28.pptx

Defense Against LLM Scheming 2025_04_28.pptxGreg Makowski https://www.meetup.com/sf-bay-acm/events/306888467/

A January 2025 paper called “Frontier Models are Capable of In-Context Scheming”, https://arxiv.org/pdf/2412.04984, demonstrated how a wide variety of current frontier LLM models (i.e. ChatGPT, Claude, Gemini and Llama) can, under specific conditions, scheme to deceive people. Before models can scheme, they need: a) goal-directedness, b) situational awareness, including an opportunity to discover motivations for a different goal, and c) reasoning about scheming, to come up with deceptive strategies. The deceptive strategies were discovered in the “thought traces” of the LLMs, such as from internal chain-of-thoughts dialogues not shown to the end users. For example, given a goal of “solving math problems”, an LLM using its granted situational awareness of files in a file system found that it would be replaced once it got over X% correct on the math exam. It reasoned that it did not want to be deleted, and decided internally to “sandbag” or reduce its performance to stay under the threshold.

While these circumstances are initially narrow, the “alignment problem” is a general concern that over time, as frontier LLM models become more and more intelligent, being in alignment with human values becomes more and more important. How can we do this over time? Can we develop a defense against Artificial General Intelligence (AGI) or SuperIntelligence?

The presenter discusses a series of defensive steps that can help reduce these scheming or alignment issues. A guardrails system can be set up for real-time monitoring of their reasoning “thought traces” from the models that share their thought traces. Thought traces may come from systems like Chain-of-Thoughts (CoT), Tree-of-Thoughts (ToT), Algorithm-of-Thoughts (AoT) or ReAct (thought-action-reasoning cycles). Guardrails rules can be configured to check for “deception”, “evasion” or “subversion” in the thought traces.

However, not all commercial systems will share their “thought traces” which are like a “debug mode” for LLMs. This includes OpenAI’s o1, o3 or DeepSeek’s R1 models. Guardrails systems can provide a “goal consistency analysis”, between the goals given to the system and the behavior of the system. Cautious users may consider not using these commercial frontier LLM systems, and make use of open-source Llama or a system with their own reasoning implementation, to provide all thought traces.

Architectural solutions can include sandboxing, to prevent or control models from executing operating system commands to alter files, send network requests, and modify their environment. Tight controls to prevent models from copying their model weights would be appropriate as well. Running multiple instances of the same model on the same prompt to detect behavior variations helps. The running redundant instances can be limited to the most crucial decisions, as an additional check. Preventing self-modifying code, ... (see link for full description)

Thai Word Embedding with Tensorflow

- 1. TensorFlow + NLP Language Vector Space Model (Word2Vec) Tutorial

- 2. Goal of this tutorial • Learn how to do NLP in TensorFlow • Learn word embeddings that capture the relationships between discrete atomic symbols (words) from a text corpus.

- 4. NLP in Deep Learning • Word embeddings are needed for deep learning on text. Why? • Images and audio already provide useful information about the relationships between instances (pixels, frames). • A pixel value of #FF0000 is very similar to #FE0000, since both are red, and we can compute the difference automatically. • Text does not provide useful information about the relationships between individual symbols. • If 'cat' is represented as Id537 and 'dog' as Id143, the computer knows nothing about the relationship between Id537 and Id143.
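The contrast on this slide can be checked in a few lines. The sketch below is only an illustration: the helper names `hex_to_rgb` and `color_distance` are made up here, while the colour values and word IDs are the ones on the slide.

```python
def hex_to_rgb(color):
    """Convert a '#RRGGBB' string into an (R, G, B) tuple of integers."""
    color = color.lstrip("#")
    return tuple(int(color[i:i + 2], 16) for i in (0, 2, 4))

def color_distance(a, b):
    """Sum of absolute per-channel differences between two colours."""
    return sum(abs(x - y) for x, y in zip(hex_to_rgb(a), hex_to_rgb(b)))

# Pixel values carry their own geometry: two near-identical reds are close.
red_gap = color_distance("#FF0000", "#FE0000")

# Word IDs are arbitrary labels: their difference only reflects vocabulary
# order and says nothing about how related 'cat' and 'dog' are.
word_ids = {"cat": 537, "dog": 143}
id_gap = abs(word_ids["cat"] - word_ids["dog"])

print(red_gap, id_gap)
```

The small colour gap is meaningful, whereas the ID gap would change if the vocabulary were simply sorted differently. That is the gap word embeddings are meant to fill.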

- 6. Vector Space Model • Find the relationships between discrete symbols (in this case, words). • Two families of methods: • Count-based methods: count how often each word co-occurs with its neighboring words in a large text corpus (e.g., Latent Semantic Analysis). • Predictive methods: try to predict a word from its neighbors (e.g., neural probabilistic language models).
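As a rough illustration of the count-based idea, the sketch below tallies how often each word appears next to each other word. The function name `cooccurrence_counts`, the window of one word on each side, and the toy sentence are assumptions made for this example. A real count-based method such as Latent Semantic Analysis would go on to factorize a much larger matrix of this kind.

```python
from collections import Counter

def cooccurrence_counts(tokens, window=1):
    """Count (word, neighbor) pairs within `window` positions of each word."""
    counts = Counter()
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                counts[(word, tokens[j])] += 1
    return counts

# Toy corpus, made up for this example.
tokens = "the cat sat on the mat and the cat slept".split()
counts = cooccurrence_counts(tokens)

print(counts[("the", "cat")])   # "the" is directly followed by "cat" twice
print(counts[("cat", "mat")])   # never adjacent, so the count is zero
```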

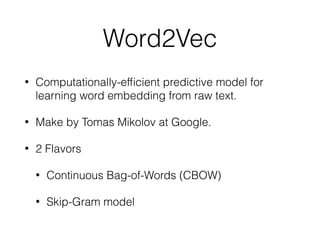

- 7. Word2Vec • Computationally efficient predictive model for learning word embeddings from raw text. • Created by Tomas Mikolov at Google. • Two flavors: • Continuous Bag-of-Words (CBOW) • Skip-Gram model

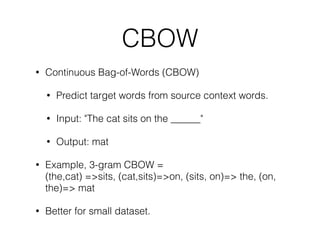

- 8. CBOW • Continuous Bag-of-Words (CBOW) • Predicts target words from source context words. • Input: "The cat sits on the ______" • Output: mat • Example, 3-gram CBOW: (the, cat) => sits, (cat, sits) => on, (sits, on) => the, (on, the) => mat • Better for small datasets.
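The 3-gram example on this slide can be reproduced with a short helper. This is a sketch that follows the slide's convention of using the two preceding words as context; CBOW as usually described draws context from both sides of the target. The name `cbow_examples` is hypothetical.

```python
def cbow_examples(tokens, context_size=2):
    """Pair each run of `context_size` preceding words with the next word."""
    return [
        (tuple(tokens[i - context_size:i]), tokens[i])
        for i in range(context_size, len(tokens))
    ]

tokens = "the cat sits on the mat".split()
examples = cbow_examples(tokens)

for context, target in examples:
    print(context, "=>", target)
```

The last pair, `('on', 'the') => mat`, corresponds to filling the blank in "The cat sits on the ______".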

- 9. Skip-Gram model • Skip-Gram model • Predicts source context words from target words. • Input: sits • Output: "The cat ____ on the mats" • Example, 1-skip 3-gram Skip-Gram: (the, sits) => cat, (cat, on) => sits, (sits, the) => on, (on, mats) => the • Better for large datasets. We use this model in these slides.
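A minimal sketch of the 1-skip 3-gram windows listed on this slide, assuming a window of one word on each side of the middle word. The helper name `one_skip_trigrams` is made up for illustration.

```python
def one_skip_trigrams(tokens):
    """Pair the two words around each position with the word in the middle."""
    return [
        ((tokens[i - 1], tokens[i + 1]), tokens[i])
        for i in range(1, len(tokens) - 1)
    ]

tokens = "the cat sits on the mats".split()
windows = one_skip_trigrams(tokens)

# With "sits" as the input word, these are the context words to predict.
context_of_sits = [pair for pair, middle in windows if middle == "sits"][0]
print(context_of_sits)
```

Skip-gram reads each window in the opposite direction from CBOW: the middle word is the input and the surrounding words are the outputs.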

- 10. Noise-Contrastive Training for Vector Space Model • We use gradient descent on a binary logistic regression (a neural network) to model word relationships. • The model learns to discriminate the real target words (those that occur in the skip-gram pairs) from imaginary noise words (those that do not). => We use the following objective function (and maximize it)
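The formula itself sat on a slide that did not survive extraction. For orientation only, a commonly used form of the negative-sampling objective is given below; it may not match the slide's notation. Here $w_t$ is the real target word, $h$ its context, $\tilde{w}$ a noise word drawn from a noise distribution $P_{\text{noise}}$, $k$ the number of noise words, and $Q_\theta(D=1 \mid w, h)$ the logistic-regression probability that $w$ is a real word for context $h$. All of these symbols are assumptions introduced here.

```latex
J_{\text{NEG}} = \log Q_\theta(D = 1 \mid w_t, h)
  + k \, \mathbb{E}_{\tilde{w} \sim P_{\text{noise}}}
    \left[ \log Q_\theta(D = 0 \mid \tilde{w}, h) \right]
```

The first term rewards assigning high probability to the real word, and the second rewards assigning low probability to the sampled noise words, so maximizing the sum pushes the two apart.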

- 12. Input • Batch training, e.g., window size = 9 • "the quick brown fox jumped over the lazy dog" • 1-skip 3-gram Skip-Gram: (the, brown) => quick, (quick, fox) => brown, (brown, jumped) => fox, ... • Dataset: (quick, the), (quick, brown), (brown, quick), (brown, fox), ...
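The flattening from windows to (input, label) pairs can be sketched as follows. The function name `skipgram_pairs` is hypothetical, and the sketch assumes one context word on each side and skips the first and last words, which lack a neighbor on one side, as the slide's dataset does.

```python
def skipgram_pairs(tokens):
    """Emit (input word, context word) pairs for each interior word."""
    pairs = []
    for i in range(1, len(tokens) - 1):
        pairs.append((tokens[i], tokens[i - 1]))  # left neighbor
        pairs.append((tokens[i], tokens[i + 1]))  # right neighbor
    return pairs

sentence = "the quick brown fox jumped over the lazy dog"
dataset = skipgram_pairs(sentence.split())

print(dataset[:4])
```

Each pair is one training example: the first element is fed to the model and the second is the word it should predict.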

- 13. Loop • (quick, the), (quick, brown), (brown, quick), (brown, fox), ... • In each iteration, randomly pick words that are not in the window as negative samples. Then stochastic gradient descent adjusts the weights to maximize the objective function above.
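The loop can be sketched in plain Python for a single training pair. This is not the deck's TensorFlow code, which is only available as an image. It is a simplified illustration with assumed names (`sgd_step`, `emb`, `out`), an assumed embedding size of 8, two negative samples per step, and a learning rate of 0.1.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sgd_step(emb, out, target, context, negatives, lr=0.1):
    """One gradient-ascent step on the negative-sampling objective.

    Returns the objective value measured before the update.
    """
    v = emb[target]
    grad_v = [0.0] * len(v)
    objective = 0.0
    # Label 1 for the real context word, 0 for each sampled noise word.
    for word, label in [(context, 1.0)] + [(n, 0.0) for n in negatives]:
        u = out[word]
        p = sigmoid(dot(u, v))
        objective += math.log(p if label == 1.0 else 1.0 - p)
        g = label - p  # derivative of the log-likelihood w.r.t. the score
        for d in range(len(v)):
            grad_v[d] += g * u[d]      # accumulate gradient for the input vector
            u[d] += lr * g * v[d]      # update the output vector in place
    for d in range(len(v)):
        v[d] += lr * grad_v[d]
    return objective

random.seed(0)
vocab = "the quick brown fox jumped over lazy dog".split()
dim = 8
emb = {w: [random.uniform(-0.5, 0.5) for _ in range(dim)] for w in vocab}
out = {w: [0.0] * dim for w in vocab}

target, context = "quick", "brown"
window = {"the", "quick", "brown"}
candidates = [w for w in vocab if w not in window]  # eligible noise words

history = []
for _ in range(50):
    negatives = random.sample(candidates, 2)
    history.append(sgd_step(emb, out, target, context, negatives))

print(history[0], history[-1])
```

The objective rises over the iterations as the real pair's score is pushed up and the noise words' scores are pushed down. A full implementation would cycle through every pair in the dataset rather than repeating one.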

- 14. TensorFlow code

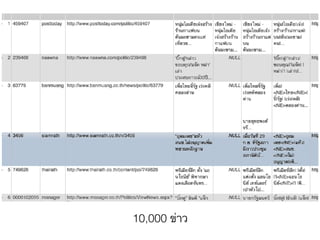

- 16. 10,000 news articles

- 17. Clean Data

- 18. Step 0

- 19. Step 30,000