The Best of Both Worlds: Hybrid Clustering with Delta Lake

- 1. The Best of Both Worlds: Hybrid Clustering with Delta Lake. Scott Haines, Distinguished Engineer, Nike.

- 2. Session Overview: https://www.youtube.com/watch?v=l8CEyXgi7y

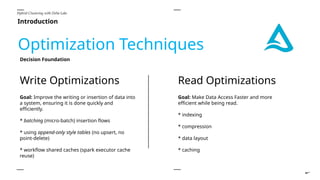

- 3. Introduction: Optimization Techniques (Decision Foundation)
     - Read Optimizations. Goal: make data access faster and more efficient while data is being read.
       - indexing
       - compression
       - data layout
       - caching
     - Write Optimizations. Goal: improve the writing or insertion of data into a system, ensuring it is done quickly and efficiently.
       - batching (micro-batch) insertion flows
       - using append-only style tables (no upsert, no point-delete)
       - workflow shared caches (Spark executor cache reuse)

- 4. Introduction: Table Decision: Indecision. The behavior of your table should act as your guide.
     - Hive-Style Partitioning, where it shines:
       - Provides read and write isolation.
       - Data skipping on partition columns is available via the file-system path.
       - The table layout is simple to understand.
     - Liquid Clustering, where it shines:
       - Clustering flexibility (can change during the table lifecycle).
       - Removes data skew.
       - *Eliminates the small file problem.
       - Incremental optimization.

- 5. Introduction: Tuning our tables can feel like a classic Goldilocks problem.
     - Hive-Style Partitioning, performance tuning:
       - Run OPTIMIZE to reduce small files (limited to partition boundaries).
       - Run OPTIMIZE ZORDER BY to colocate data (non-incremental).
     - Liquid Clustering, performance tuning:
       - Run OPTIMIZE (eliminates data skew).

- 6. Table Optimization: Wait… What About my Third Option?

- 7. Table Optimization: Why Not Use Both?

- 8. Architecture Patterns: Use Case: Fast and Slow Systems. Diagram labels: Current (active) Data, Close of Books Interval, and Historic (closed) Data, on a timeline running from Newest to Oldest.

- 9. Architecture Patterns: Workflow Design
     - Sources. Batch: periodic complete partition overwrites.
     - Table: lifecycle_events. Supports partition overwrite and upsert. Retention: 14 days. Unstable table data period: close of books (7d), up to 14d for “ooops”.
     - Scheduled job: move data after close of books. Cron: daily.
     - Table: lifecycle_events_historic. Retention: 3 years. Stable table data: retention dictated by need (3 years in this example).

- 10. Architecture Patterns: Hybrid Optimization (Mixed Optimization Techniques). What We’re Going to Build!
      - As a workflow across tables:
        - Individual tables are optimized for their specific use cases.
        - This means selecting the best technique based on the context (use case).
        - Leaning on virtual tables (views) to connect the dots.
      - Plays well with the Medallion Architecture. Simplified with Unity Catalog.
      - Virtual tables are your friend.

- 11. Creating the Bronze Capture Table. Foundation: Ingestion Table. Requirements:
      - Table must have write isolation (for multiple writers).
      - Allow merge operations.
      - Enable simple deletes at partition boundaries.
      - Stores mutating changes during close of books.
      - Retention: 14 days.
      - “Every week at close-of-books, data will be stored in a long-term historic table.”

- 12. Creating the Current View. Current: Short-Term Memory. UC Functions: registered functions that can be applied in creative ways, and that are easy to reuse and share. Create a function: fetch the close-of-books date.

- 13. Creating the Current View. Current: Short-Term Memory. UC Row Filters:
      - Applied at the table globally.
      - *Typically used for “sensitive data access”.
      - Can also be used to simplify the “behavior” of time-bounded tables and only show “what we want to make visible”.
      - Create a row filter function: check if the row is “current”.

- 14. Creating the Current View. Current: Short-Term Memory. Leaning on Views: utilizing periodic view refresh to select all data that falls into the “close-of-books” window. Create or replace the clamped view: a virtual view of the “current” window. The view filter pushes down to partition filters.

- 15. Table Optimization. Historic: Long-Term Memory. Historic Table, with Liquid Clustering enabled:
      - Data is loaded into the historic table at the “end” of the “close-of-books”.
      - The table is treated like an “append-only” table.
      - This allows for highly optimized reads of historic data.

- 16. Table Optimization. Historic: Long-Term Memory. Append Flow. Remember the Kappa Architecture? A periodic job runs to take the latest close-of-books data and drop it into the long-term historic table. While this job is batch-esque, it honors throttle and appends a specific series of rows to our historic table, accounting for data at the daily edges (starting and ending timestamp).

- 17. Creating the Composite View. Best of Both Worlds. View Composition: using UNION to combine historic and current tables and views.

- 18. Creating the Composite View. Best of Both Worlds. View Composition: “If using Unity Catalog Row Filters, you don’t need to create the two virtual tables, since we can automatically ignore rows that fall out of the predicate.”

- 19. One More Thing… Achieving Hybrid Dynamic Views. “Whoa. That is neat…”

- 20. Questions and Answers Time… Now. Ask us anything. It might even become the topic of another webinar!
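Slides 12 to 18 describe a “current” view clamped to the close-of-books window and a composite view that combines it with the historic table. The following is a rough, engine-free sketch of that logic in plain Python, not Spark or Unity Catalog code. The table names `lifecycle_events` and `lifecycle_events_historic` and the 7-day close-of-books period come from the slides; the `event_date` field, the sample rows, the inclusive boundary, and the helper names `is_current`, `current_view`, and `composite_view` are assumptions made for illustration.

```python
from datetime import date, timedelta

CLOSE_OF_BOOKS_DAYS = 7  # close-of-books period named on slide 9


def is_current(event_date, today, days=CLOSE_OF_BOOKS_DAYS):
    """Row-filter style predicate: is the row inside the close-of-books window?

    Assumption: the window starts `days` days before `today`, inclusive.
    """
    return event_date >= today - timedelta(days=days)


def current_view(lifecycle_events, today):
    """Clamped view: only rows of the capture table that are still 'current'."""
    return [row for row in lifecycle_events if is_current(row["event_date"], today)]


def composite_view(lifecycle_events, lifecycle_events_historic, today):
    """Historic rows followed by current rows.

    Because the current side is clamped, rows that still sit in the capture
    table for triage but were already copied to the historic table appear once.
    """
    return list(lifecycle_events_historic) + current_view(lifecycle_events, today)


# Hypothetical sample data.
today = date(2025, 1, 15)
lifecycle_events = [
    {"id": 1, "event_date": date(2025, 1, 14)},
    {"id": 2, "event_date": date(2025, 1, 9)},
    {"id": 3, "event_date": date(2025, 1, 5)},  # triage week, already archived
]
lifecycle_events_historic = [
    {"id": 3, "event_date": date(2025, 1, 5)},
    {"id": 4, "event_date": date(2024, 6, 1)},
]

combined = composite_view(lifecycle_events, lifecycle_events_historic, today)
print([row["id"] for row in combined])  # [3, 4, 1, 2]
```

The point of the sketch is the boundary: as long as both sides agree on where “current” ends, the combined result has no overlap even though the capture table retains data longer than the close-of-books window.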

Editor's Notes

- #2: This conversation is rooted in table optimization. To achieve hybrid clustering, we need to start thinking about tables as a series of decisions across time. Tables are not static, and neither are our data needs. We will look at an end-to-end use case before jumping into questions and answers. Beyond Partitioning video: https://www.youtube.com/watch?v=l8CEyXgi7y4

- #3: Framing this conversation is essentially rooted in table optimization. Read and write optimizations are strategies used to improve the performance of data storage and retrieval systems. These optimizations are targeted at different aspects of the data lifecycle: reading data and writing data. Here’s a breakdown of the differences:

  1. Read Optimizations: These are strategies that focus on speeding up the retrieval of data. The goal is to make data access faster and more efficient when it’s being read.
     - Indexing: Creating indexes on frequently queried fields can speed up search operations, as it allows the system to quickly locate data without scanning entire datasets.
     - Caching: Frequently accessed data is stored in a cache (e.g., in-memory) to avoid repeated disk I/O operations. This reduces latency when accessing the same data multiple times.
     - Read Replicas: Multiple copies of the database or dataset are created in different locations. Read requests can be distributed across these replicas, reducing the load on a single server and increasing throughput.
     - Compression: Sometimes, compressed data can be read faster because there is less data to be transferred, although decompression must still occur.
     - Data Layout: Optimizing how data is physically stored (e.g., in databases) so that related data is stored together, reducing the need for unnecessary lookups or joins.

     Key goal: Reduce the latency and resource consumption of read operations, improving user experience or system efficiency when accessing data.

  2. Write Optimizations: These are strategies aimed at improving the writing or insertion of data into a system, ensuring it’s done quickly and efficiently.
     - Batching: Instead of writing data row-by-row or transaction-by-transaction, multiple write operations can be batched together, reducing overhead.
     - Write Ahead Logs (WAL): In some systems, writes are first recorded in a log before being committed to the database. This can improve write performance and ensure data integrity.
     - Sharding: This involves distributing data across multiple servers or databases to prevent any single node from being overwhelmed by writes. Each shard handles a subset of the data.
     - Append-Only Systems: For certain use cases (like time-series data), writes can be optimized by only appending new data, rather than constantly updating existing records.
     - Data Durability Settings: Write performance can be optimized by adjusting the durability settings in a database (e.g., deciding how often data should be synced to disk).
     - Caching Writes: Like reads, writes can also be cached temporarily to reduce the number of actual write operations. The cached data can later be written in batches, which reduces the frequency of costly I/O operations.

     Key goal: Ensure that data is written to the system efficiently, without unnecessary overhead, and that writes don't significantly slow down the system.

  In summary: Read optimizations focus on making the retrieval of data as fast as possible. Write optimizations focus on making the process of inserting or updating data faster and more efficient. In many systems, there is a trade-off between read and write performance. Optimizing one can sometimes lead to slower performance on the other, so the best approach depends on the use case and specific requirements of the application. For example, systems with heavy read traffic may prioritize read optimizations (e.g., caching), while write-heavy systems may focus on write optimizations (e.g., batching).

- #4: Throwback to Beyond Partitioning.
  - Hive-style partitioning works well for specific use cases.
  - Squeezing performance from the table requires optimizing (bin-packing or Z-order).
  - The problem with Z-order is that it isn’t incremental.
  - The problem with bin-packing is that it honors the partition boundaries created when using partitionBy(“colA”, “colB”), which can increase data skew.
  - Liquid clustering eliminates the boundaries that caused data skew with traditional hive-style partitioning.
  - Liquid clustering still requires running OPTIMIZE (incremental optimize).

- #5: Same notes as #4, with one addition. Databricks customers can use predictive optimization (https://docs.databricks.com/aws/en/optimizations/predictive-optimization) to simplify applying OPTIMIZE, VACUUM, and ANALYZE. OPTIMIZE ZORDER BY isn’t included because it lacks “incremental support”.

- #6: Ahh, yes. As with the classic Goldilocks problem, we have too hot, too cold, and just right. Getting to just right takes some time. Let’s dive into the overview, and then we’ll look at an end-to-end use case that can help frame the “just right” outcome.

- #8: Many systems change less frequently the older they get. This is one of the reasons why the Kappa Architecture became popular: streaming handles what is fresh and new, with batch processing incrementally following up.

- #9: Supporting stable and unstable table data requires different ways of working. Unstable table data (any partition within the close-of-books period) can change for various reasons; there are tons of reasons why specific points in table time need to be modified. The trick to supporting hybrid designs is to create some boundaries (boundaries are important). Mutating data gets a lower retention period, while immutable (append-only style) data can have a much longer retention period and benefit from liquid clustering.

- #10: There is currently no such thing as “hybrid clustering” within Delta Lake. There are, however, two main ways to optimize our tables, depending on the needs of the system at a specific point in time.
  1. What is the behavior of the table? Is it append-only style, with no upserts or complex point-deletes? Then liquid clustering makes sense.
  2. Does the table require complex write logic? For example, must complete partitions be overwritten, or are rows upserted? Then hive-style partitioning is the better fit, as with the `lifecycle_events` table in this design.

- #11: The ingestion table is our bronze lineage inception point.
  - Data can stream into the table, or individual partitions can be dynamically overwritten using write isolation, given the “partitionBy” semantics.
  - Given the constraints for partitioning, we automatically generate a date column to partitionBy along with “dc_id”, which in this case is for a distribution center (but we won’t have time to get into that).

- #12: Current data view: the end result of the “volatile” system is a bounded view (replaced every N minutes). We can lean on registered “Functions” in Databricks. Show with PySpark: `CREATE OR REPLACE FUNCTION get_cob_boundary(days INT) RETURNS DATE RETURN SELECT …;` See https://docs.databricks.com/aws/en/udf/#python-user-defined-table-functions-udtfs

- #14: The views allow us to easily “remove” any data from earlier points in table time (for the `lifecycle_events` table). Even though the `lifecycle_events` table has a shorter retention time, we only care about the “current” active close-of-books week. The prior week is mainly for “triage” and “oops”.

- #15: Given the shorter retention time of the `lifecycle_events` table, we want to periodically “save” the first full day outside of the close-of-books window.

- #16: When using “startingTimestamp” and “endingTimestamp”, you must account for the possibility that the closest “version” of the table may include rows from the previous or next day. Filtering those rows out is what allows us to “save” exactly the first entire day outside of our close-of-books window. As the data ages out, we can drop it into a more long-term memory table. We could use Structured Streaming for this, and depending on the amount of data being stored, that is feasible. However, using the Delta transaction log enables us to do interesting things without managing the application StateStore.

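Notes #12, #15, and #16 describe fetching a close-of-books boundary date and then saving the first full day outside that window, while guarding against rows from neighbouring days. A minimal sketch of that date arithmetic in plain Python (not PySpark) could look like the following. `get_cob_boundary` borrows its name from note #12, but its body is elided there, so the `today - days` rule is an assumption; `first_day_outside_window`, `clamp_to_day`, the `event_ts` field, and the sample rows are hypothetical.

```python
from datetime import date, datetime, time, timedelta


def get_cob_boundary(days, today):
    """Assumed rule: the close-of-books window starts `days` days before `today`."""
    return today - timedelta(days=days)


def first_day_outside_window(days, today):
    """The newest full day that has aged out of the close-of-books window."""
    return get_cob_boundary(days, today) - timedelta(days=1)


def clamp_to_day(rows, day):
    """Keep only rows whose timestamp falls in [day 00:00, next day 00:00).

    This drops rows from the previous or next day that a timestamp-bounded
    read may have picked up at the edges.
    """
    start = datetime.combine(day, time.min)
    end = start + timedelta(days=1)
    return [row for row in rows if start <= row["event_ts"] < end]


# Hypothetical rows returned by a read that overshoots on both edges.
rows = [
    {"id": 1, "event_ts": datetime(2025, 1, 6, 23, 59, 59)},  # previous day
    {"id": 2, "event_ts": datetime(2025, 1, 7, 0, 0, 0)},
    {"id": 3, "event_ts": datetime(2025, 1, 7, 23, 59, 59)},
    {"id": 4, "event_ts": datetime(2025, 1, 8, 0, 0, 0)},     # next day
]

day_to_save = first_day_outside_window(7, date(2025, 1, 15))
to_append = clamp_to_day(rows, day_to_save)
print(day_to_save, [row["id"] for row in to_append])  # 2025-01-07 [2, 3]
```

Using a half-open interval keeps consecutive daily runs from appending the same midnight row twice, which matters when the historic table is treated as append-only.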
