The Data Platform Administration Handling the 100 PB.pdf


Rakuten Developer Meetup vol.02: How to Manage Large-scale Infrastructure https://rakuten.connpass.com/event/243219/


Recommended

How We Defined Our Own Cloud.pdf

Rakuten Group, Inc. This document summarizes Andrew Hajinikitas' work developing Rakuten's private cloud infrastructure. It describes the key components of Rakuten's infrastructure, including metal instances, microservers, and GPU servers. It provides details on Rakuten's software stack and their goals to expand managed services. Currently, Rakuten operates 9 data centers in Japan and overseas providing around 30,000 servers to support their ecosystem. Their future plans include extending network self-service, making GPU resources available as a platform service, and improving efficiency through optimized hardware selection.

The Reality of a Data Platform Exceeding 100 PB

Rakuten Group, Inc. Rakuten Developer Meetup vol.01: Infrastructure Engineers Supporting the Rakuten Ecosystem

https://rakuten.connpass.com/event/232707/

The Scale of Rakuten and the Role of the Cloud Platform Division

Rakuten Group, Inc. Rakuten Developer Meetup vol.03

The Mechanisms and Secrets of Large-Scale Cloud

https://rakuten.connpass.com/event/254762/

Rakuten Services and Infrastructure Team.pdf

Rakuten Group, Inc. Rakuten Developer Meetup vol.02: How to Manage Large-scale Infrastructure

https://rakuten.connpass.com/event/243219/

Travel & Leisure Platform Department's tech info

Rakuten Group, Inc. Information on the technology of Rakuten Group's TLPD.

Making Cloud Native CI_CD Services.pdf

Rakuten Group, Inc. Rakuten Developer Meetup vol.02: How to Manage Large-scale Infrastructure

https://rakuten.connpass.com/event/243219/

Rakuten's Infrastructure Landscape 2022

Rakuten Group, Inc. Rakuten Developer Meetup vol.01: Infrastructure Engineers Supporting the Rakuten Ecosystem

https://rakuten.connpass.com/event/232707/

Operating Large-Scale Databases at Rakuten

Rakuten Group, Inc. Rakuten Developer Meetup vol.03

The Mechanisms and Secrets of Large-Scale Cloud

https://rakuten.connpass.com/event/254762/

Introducing and Rolling Out Large-Scale Real-Time Monitoring

Rakuten Group, Inc. Rakuten Developer Meetup vol.03

The Mechanisms and Secrets of Large-Scale Cloud

https://rakuten.connpass.com/event/254762/

Behind the Scenes of Monitoring Platform Development

Rakuten Group, Inc. Rakuten Developer Meetup vol.01: Infrastructure Engineers Supporting the Rakuten Ecosystem

https://rakuten.connpass.com/event/232707/

The Network Infrastructure Supporting Rakuten Services

Rakuten Group, Inc. Rakuten Developer Meetup vol.03

The Mechanisms and Secrets of Large-Scale Cloud

https://rakuten.connpass.com/event/254762/

Rakuten Platform

Rakuten Group, Inc. WebHack#43 Challenges of Global Infrastructure at Rakuten

https://webhack.connpass.com/event/208888/

Kafka & Hadoop in Rakuten

Rakuten Group, Inc. WebHack#43 Challenges of Global Infrastructure at Rakuten

https://webhack.connpass.com/event/208888/

Supporting Internal Customers as Technical Account Managers.pdf

Rakuten Group, Inc. Rakuten Developer Meetup vol.02: How to Manage Large-scale Infrastructure

https://rakuten.connpass.com/event/243219/

Introduction to Apache Spark on Kubernetes (Open Source Conference 2021 Online Hiroshima presentation)

NTT DATA Technology & Innovation. Introduction to Apache Spark on Kubernetes

(Open Source Conference 2021 Online Hiroshima presentation)

September 18, 2021

NTT DATA Corporation

Technology and Innovation General Headquarters, Advanced Computing Technology Center

依田 玲央奈

Rakuten Services and the Infrastructure Team

Rakuten Group, Inc. Rakuten Developer Meetup vol.01: Infrastructure Engineers Supporting the Rakuten Ecosystem

https://rakuten.connpass.com/event/232707/

Introduction of Rakuten Commerce QA Night#2

Rakuten Group, Inc. This document provides an overview of a QA Night event hosted by Rakuten's Service Quality Assurance Group. It includes an introduction to the event as well as an agenda with times and speakers. The agenda focuses on utilizing data from the software testing life cycle (STLC) for various purposes like process improvement, automation frameworks, and reporting to stakeholders. Speakers will provide case studies and examples of how they apply STLC data. The event aims to discuss best practices for collecting, utilizing, and improving the use of testing data throughout the software development life cycle at Rakuten.

Introduction to PostgreSQL on Kubernetes Using PGO (PostgreSQL Conference Japan 2022 presentation)

NTT DATA Technology & Innovation. Introduction to PostgreSQL on Kubernetes Using PGO

(PostgreSQL Conference Japan 2022 presentation)

Friday, November 11, 2022

NTT DATA

Technology and Innovation General Headquarters, Advanced Computing Technology Center

加藤 慎也

GraalVM's polyglot execution looked impressive, so I tried embedding it in Apache Spark, but it may have been a little too early (Open So...

NTT DATA Technology & Innovation. GraalVM's polyglot execution looked impressive, so I tried embedding it in Apache Spark, but it may have been a little too early

(Open Source Conference 2021 Online/Nagoya presentation)

May 29, 2021

NTT DATA Corporation

Technology and Innovation General Headquarters, Advanced Computing Technology Center

刈谷 満

How Do You Decide? Approaches to Secret Management in Kubernetes (Cloud Native Days 2020 presentation)

NTT DATA Technology & Innovation. How Do You Decide? Approaches to Secret Management in Kubernetes

(Cloud Native Days 2020 presentation)

September 8, 2020

NTT DATA Corporation

Digital Technology Department

里木, 山田

Is Apache Kafka Really OK? An Overview of Failure Testing and an Introduction to Some Interesting Behaviors

NTT DATA OSS Professional Services. Slides from a talk given at Hadoop / Spark Conference Japan 2019, held on March 14, 2019.

Rethinking Our Data Platform Organization with Lessons from Team Topologies.pptx

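Rakuten Commerce Tech (Rakuten Group, Inc.) There is a concept called Team Topologies.

Organizational design involves many factors, such as Conway's law and cognitive load. Team Topologies combines them and provides patterns for designing organizations.

Clarify each team's role, design how teams communicate, and find the bottlenecks, so that teams can focus on creating value. It sounds simple, but it is really hard, isn't it?

In this talk, I give a casual introduction to Team Topologies and show how I applied it.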

Introducing Operators for Running PostgreSQL on Kubernetes! (Cloud Native Database Meetup #3 presentation)

NTT DATA Technology & Innovation. Introducing Operators for Running PostgreSQL on Kubernetes!

(Cloud Native Database Meetup #3 presentation)

January 14, 2022

NTT DATA

Technology and Innovation General Headquarters, Advanced Computing Technology Center

藤井 雅雄

How Gurunavi Uses Elastic Cloud

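Elasticsearch. Gurunavi uses the Elastic Stack to analyze vast amounts of log data instantly, enabling faster incident response. To resolve the challenges of its on-premises deployment and make fuller use of Kibana's powerful features, it migrated to Elastic Cloud. This talk describes the problems Elastic Cloud solved, plans to use it as a search platform, and other use cases such as analysis for business strategy.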

Apache Bigtop 3.2 (tentative title) (Open Source Conference 2022 Online/Hiroshima presentation)

NTT DATA Technology & Innovation. Apache Bigtop 3.2 (tentative title)

(Open Source Conference 2022 Online/Hiroshima presentation)

Saturday, October 1, 2022

NTT DATA

Technology and Innovation General Headquarters

関 堅吾, 岩崎 正剛

An Introduction to Spring Cloud Data Flow #streamctjp

Yahoo! Developer Network. https://connpass.com/event/61546/

How to Scale Out a Kubernetes Controller / Kubernetes Meetup Tokyo #55

Preferred Networks. Slides presented at Kubernetes Meetup Tokyo #55.

https://k8sjp.connpass.com/event/267620/

How Can You Consolidate Your Databases Using Cloud Services?

MarketingArrowECS_CZ. Presentation from the webinar held on March 10, 2022

Presented by:

Jaroslav Malina - Senior Channel Sales Manager, Oracle

Josef Krejčí - Technology Sales Consultant, Oracle

Josef Šlahůnek - Cloud Systems sales Consultant, Oracle

The Growth Of Data Centers

Gina Buck. Data centers are growing to accommodate more internet-connected devices, with innovations helping achieve network coverage for billions of devices by 2020. As data centers grow, trends such as software-driven infrastructure, microtechnology, and alternative energy use are making them more efficient by consolidating resources and reducing their size. Hyperconvergence makes more efficient use of rack space by consolidating compute, storage, networking, and virtualization in compact 2U systems from companies such as SimpliVity and Nutanix.


Similar to The Data Platform Administration Handling the 100 PB.pdf


IRJET- A Comparative Study on Big Data Analytics Approaches and Tools

IRJET Journal. This document provides an overview of big data analytics approaches and tools. It begins with an abstract discussing the need to evaluate different methodologies and technologies based on organizational needs to identify the optimal solution. The document then reviews literature on big data analytics tools and techniques, and evaluates challenges faced by small vs large organizations. Several big data application examples across industries are presented. The document also introduces concepts of big data including the 3Vs (volume, velocity, variety), describes tools like Hadoop, Cloudera and Cassandra, and discusses scaling big data technologies based on an organization's requirements.

MapR and Cisco Make IT Better

MapR Technologies. You’re not the only one still loading your data into data warehouses and building marts or cubes out of it. But today’s data requires a much more accessible environment that delivers real-time results. Prepare for this transformation, because your data platform and storage choices are about to undergo a re-platforming that happens once every 30 years.

With the MapR Converged Data Platform (CDP) and Cisco Unified Compute System (UCS), you can optimize today’s infrastructure and grow to take advantage of what’s next. Uncover the range of possibilities from re-platforming by intimately understanding your options for density, performance, functionality and more.

Big data - what, why, where, when and how

bobosenthil. The document discusses big data, including what it is, its characteristics, and architectural frameworks for managing it. Big data is defined as data that exceeds the processing capacity of conventional database systems because of its large size, speed of creation, and unstructured nature. The architecture for managing big data is demonstrated through Hadoop, which uses the MapReduce framework and an open source ecosystem to process data in parallel across multiple nodes.
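The map, shuffle, and reduce phases that this summary attributes to Hadoop's MapReduce framework can be illustrated with the classic word-count example. This is a minimal single-process sketch in plain Python. It does not use Hadoop's API, and the function names are hypothetical. In a real Hadoop job, mappers and reducers run as distributed tasks across nodes, and the framework itself performs the shuffle between them.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split):
    """Mapper: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle_phase(pairs):
    """Shuffle: group intermediate values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reducer: sum the counts collected for each word."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(splits):
    # Each split would be handled by a separate mapper task on a real cluster.
    mapped = chain.from_iterable(map_phase(split) for split in splits)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    print(word_count(["big data big", "Data nodes"]))  # {'big': 2, 'data': 2, 'nodes': 1}
```

The phases are independent because mappers see only their own split and reducers see only one key's values. That independence is what lets the framework spread them across machines.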

592-1627-1-PB

Kamal Jyoti. This document discusses perspectives on big data applications for database engineers and IT students. It summarizes key concepts of big data and MongoDB, a popular NoSQL database for managing big data. It then demonstrates practical learning activities using MongoDB, such as installation, terminology, and basic syntax. The document concludes by emphasizing the importance of skills in big data and cloud computing for IT professionals and recommends further research on MongoDB security.

Machine Learning for z/OS

Cuneyt Goksu. IBM's zAnalytics strategy provides a complete picture of analytics on the mainframe using DB2, the DB2 Analytics Accelerator, and Watson Machine Learning for System z. The presentation discusses updates to DB2 for z/OS including agile partition technology, in-memory processing, and RESTful APIs. It also reviews how the DB2 Analytics Accelerator can integrate with Machine Learning for z/OS to enable scoring of machine learning models directly on the mainframe for both small and large datasets.

Bigdata-Intro.pptx

smitasatpathy2. The document provides an overview of big data analytics. It defines big data as high-volume, high-velocity, and high-variety information assets that require cost-effective and innovative forms of processing for insight and decision making. Big data is characterized by the 3Vs: volume, velocity, and variety. The emergence of big data is driven by the massive amount of data now being generated and stored, the availability of open source tools, and commodity hardware. The course will cover Apache Hadoop, Apache Spark, streaming analytics, visualization, linked data analysis, and big data systems and AI solutions.

Introducing Events and Stream Processing into Nationwide Building Society

confluent. Watch this talk here: https://www.confluent.io/online-talks/introducing-events-and-stream-processing-nationwide-building-society

Open Banking regulations compel the UK’s largest banks and building societies to enable their customers to share personal information securely with other regulated companies. As a result, companies such as Nationwide Building Society are re-architecting their processes and infrastructure around customer needs to reduce the risk of losing relevance and the ability to innovate.

In this online talk, you will learn why, when facing Open Banking regulation and rapidly increasing transaction volumes, Nationwide decided to take load off their back-end systems through real-time streaming of data changes into Apache Kafka®. You will hear how Nationwide started their journey with Apache Kafka®, beginning with the initial use case of creating a real-time data cache using Change Data Capture, Confluent Platform and Microservices. Rob Jackson, Head of Application Architecture, will also cover how Confluent enabled Nationwide to build the stream processing backbone that is being used to re-engineer the entire banking experience including online banking, payment processing and mortgage applications.

View now to:

- Explore the technologies used by Nationwide to meet the challenges of Open Banking

- Understand how Nationwide is using KSQL and the Kafka Streams framework to join topics and process data

- Learn how Confluent Platform can enable enterprises such as Nationwide to embrace the event streaming paradigm

- See a working demo of the Nationwide system and what happens when the underlying infrastructure breaks
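The first use case described, a real-time data cache fed by Change Data Capture, comes down to replaying an ordered stream of row changes into a read-optimized store. Here is a minimal sketch under stated assumptions. The event shape is hypothetical: an `op` code of `c`, `u`, or `d`, a row key, the new row image, and a stream offset. It is only loosely modeled on common CDC formats and is not Confluent Platform's or Nationwide's actual schema. Consuming from Kafka is left out so the example runs offline.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    """Hypothetical CDC record: op is 'c' (insert), 'u' (update) or 'd' (delete)."""
    op: str
    key: str
    after: Optional[dict] = None  # new row image; None for deletes
    offset: int = 0               # position of the event in the change stream


class ReadCache:
    """In-memory cache kept in sync by replaying change events in offset order."""

    def __init__(self):
        self.rows = {}
        self.last_offset = -1

    def apply(self, event):
        # Ignore events at or before the last applied offset (e.g. redelivered after a restart).
        if event.offset <= self.last_offset:
            return
        if event.op in ("c", "u"):
            self.rows[event.key] = event.after
        elif event.op == "d":
            self.rows.pop(event.key, None)
        else:
            raise ValueError(f"unknown op {event.op!r}")
        self.last_offset = event.offset

    def get(self, key):
        return self.rows.get(key)


if __name__ == "__main__":
    cache = ReadCache()
    for event in [
        ChangeEvent("c", "acct-1", {"balance": 100}, offset=0),
        ChangeEvent("u", "acct-1", {"balance": 80}, offset=1),
        ChangeEvent("c", "acct-2", {"balance": 5}, offset=2),
        ChangeEvent("d", "acct-2", offset=3),
        ChangeEvent("u", "acct-1", {"balance": 100}, offset=1),  # stale redelivery, skipped
    ]:
        cache.apply(event)
    print(cache.get("acct-1"), cache.get("acct-2"))  # {'balance': 80} None
```

The cache tracks the last applied offset, so replaying events after a consumer restart does not roll rows back. This matters because Kafka consumers are commonly configured for at-least-once delivery.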

Qo Introduction V2

Joe_F. The document discusses trends in data growth and computing. It notes that the amount of data being stored doubles every 18-24 months and provides examples of large data holdings from companies like AT&T, Google, and Walmart. It then summarizes key points about data growth from enterprises and digital lives. The rest of the document focuses on strategies and technologies for managing large and growing volumes of data, including parallel processing databases, new database architectures, and the QueryObject system.
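The doubling period quoted in this summary (every 18 to 24 months) compounds quickly. If data doubles every T months, after t months it has grown by a factor of 2^(t/T). The sketch below only works through that arithmetic using the summary's figures; it does not model or verify any real data holdings.

```python
import math


def growth_factor(years, doubling_months):
    """Multiplier on stored data after `years`, if volume doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)


def months_to_multiply(factor, doubling_months):
    """Months needed for data volume to grow by `factor` at the same doubling rate."""
    return doubling_months * math.log2(factor)


if __name__ == "__main__":
    for months in (18, 24):
        print(months, round(growth_factor(10, months), 1))
    # 18 101.6
    # 24 32.0
```

Over a decade, the difference between the two ends of the quoted range is roughly 32x versus 100x growth. That gap matters when sizing storage.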

Idc analyst report a new breed of servers for digital transformation

Kaizenlogcom. Digital transformation requires organizations to leverage new technologies like mobile, cloud, and big data analytics to develop new strategies. This transformation demands new approaches to data management and infrastructure. A robust, high-performing 1-2 socket server infrastructure is critical to support evolving applications from basic web and cloud services to advanced analytics. IBM's OpenPOWER LC servers, powered by the POWER8 processor and accelerators, provide such an infrastructure while also helping control operational expenses associated with low server utilization rates.

Denodo DataFest 2016: The Role of Data Virtualization in IoT Integration

Denodo. Watch the full session: Denodo DataFest 2016 sessions: https://goo.gl/DOrhiA

Connected use cases are gaining momentum! Data integration is the foundation for enabling these connections. In this session, you will experience first-hand our customer case studies and implementation architectures of IoT solutions.

In this session, you will learn:

• The role of data virtualization in enabling IoT use cases

• How our customers have successfully implemented IoT solutions using data virtualization

• How our product complements other IoT technologies

This session is part of the Denodo DataFest 2016 event. You can also watch more Denodo DataFest sessions on demand here: https://goo.gl/VXb6M6

Deploying cost effective cloud data center

Wiudo Laos. Steps for building and deploying a powerful, large-scale cloud data center using a low-cost, low-energy, reliable, and scalable strategy for front-end and back-end e-commerce and financial services.

Oracle Database: Consolidate, Protect, and Save Money Too! (Part 1)

MarketingArrowECS_CZ. Presentation from the webinar held on November 25, 2020

Presented by Jaroslav Malina, Senior Channel Sales Manager, Oracle

Virtual Machine Allocation Policy in Cloud Computing Environment using CloudSim

IJECEIAES. This document discusses virtual machine allocation policies in cloud computing environments using the CloudSim simulation tool. It begins with an introduction to cloud computing and discusses challenges related to resource management and energy consumption. It then reviews previous research on modeling approaches, energy optimization techniques, and network topologies. A UML class model is presented for analyzing energy consumption when accessing cloud servers arranged in a step network topology. The methodology section outlines how energy consumption by system components like processors, RAM, hard disks, and motherboards will be calculated. Simulation results will depict response times and cost details for different data center configurations and allocation policies.

Modern Data Management for Federal Modernization

Denodo. Watch the full webinar here: https://bit.ly/2QaVfE7

Faster, more agile data management is at the heart of government modernization. However, traditional data delivery systems are limited in their ability to realize a modernized, future-proof data architecture.

This webinar will address how data virtualization can modernize existing systems and enable new data strategies. Join this session to learn how government agencies can use data virtualization to:

- Enable governed, inter-agency data sharing

- Simplify data acquisition, search and tagging

- Streamline data delivery for transition to cloud, data science initiatives, and more

Big Data with Hadoop – For Data Management, Processing and Storing

IRJET Journal. This document discusses big data and Hadoop. It begins with defining big data and explaining its characteristics of volume, variety, velocity, and veracity. It then provides an overview of Hadoop, describing its core components of HDFS for storage and MapReduce for processing. Key technologies in Hadoop's ecosystem, such as Hive, Pig, and HBase, are also summarized. The document concludes by outlining some challenges of big data, such as the heterogeneity and incompleteness of data.

IRJET- Systematic Review: Progression Study on BIG DATA articles

IRJET Journal. This document provides a systematic review of research articles on big data analysis. It analyzed 64 articles published between 2014-2018 from IEEE Explorer and Google Scholar databases. Key findings include: the number of published articles has increased each year, reflecting the growing importance of big data; experimental and case study articles accounted for 25 of the analyzed papers; 19 articles were ultimately selected for review, with 11 from Google Scholar and 8 from IEEE Explorer. The review aims to provide an overview of current research progress on big data analysis techniques.

Resume (1)

naveenreddytamma. The document contains the resume of Naveen Reddy Tamma which summarizes his work experience and qualifications. He has over 7 years of experience working as an Associate at Cognizant Technology Solutions on various projects involving Informatica ETL development, data quality, and reporting. He holds a B.Tech in Computer Science and has experience with technologies like Informatica, Teradata, Oracle, and Cognos.

Resume (1)

naveenreddytamma. The document contains the resume of Naveen Reddy Tamma which summarizes his work experience and qualifications. He has over 7 years of experience working as an Associate at Cognizant Technology Solutions on various projects involving Informatica ETL development, data quality testing, and report generation. He holds a B.Tech in Computer Science and has experience working with technologies like Informatica, Teradata, Oracle, and Cognos.


More from Rakuten Group, Inc.

Developing an EPSS (Exploit Prediction Scoring System) Monitoring Tool

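Rakuten Group, Inc. EPSS (Exploit Prediction Scoring System) is a scoring system, managed and operated by FIRST, that predicts the likelihood that a vulnerability will actually be exploited.

It has attracted attention as one of the indicators for setting priorities in vulnerability management, but we found it difficult to monitor scores continuously.

To solve this problem, we developed a script together with an AI assistant (Rakuten AI). This presentation covers the development process, real-world applications, and future improvements.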
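The summary does not show the script itself. As a rough sketch of what continuous EPSS monitoring can involve, the Python below builds a query for FIRST's public EPSS API, parses the response, ranks CVEs above a score threshold, and reports scores that rose since the previous run. Several details are assumptions based on FIRST's published API and should be verified against its documentation: the endpoint `https://api.first.org/data/v1/epss`, its comma-separated `cve` query parameter, and the string-valued `cve`, `epss`, and `percentile` fields in the `data` array. The thresholds and CVE IDs are placeholders, not recommendations or real scores.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint of FIRST's public EPSS API; verify against FIRST's documentation.
EPSS_API = "https://api.first.org/data/v1/epss"


def build_url(cves):
    """Build one query URL for a batch of CVE IDs (comma-separated `cve` parameter)."""
    return EPSS_API + "?" + urllib.parse.urlencode({"cve": ",".join(cves)})


def parse_scores(payload):
    """Map each CVE ID to (epss, percentile) floats; the API is assumed to send strings."""
    return {
        row["cve"]: (float(row["epss"]), float(row["percentile"]))
        for row in payload.get("data", [])
    }


def fetch_scores(cves, timeout=10):
    """Fetch current scores over the network (not called in the offline example)."""
    with urllib.request.urlopen(build_url(cves), timeout=timeout) as resp:
        return parse_scores(json.load(resp))


def prioritize(scores, threshold):
    """CVE IDs whose exploitation probability is at least `threshold`, highest first."""
    hits = [cve for cve, (epss, _) in scores.items() if epss >= threshold]
    return sorted(hits, key=lambda cve: scores[cve][0], reverse=True)


def rising(previous, current, min_delta):
    """CVE IDs whose score rose by at least `min_delta` since the previous snapshot."""
    return sorted(
        cve
        for cve, (epss, _) in current.items()
        if cve in previous and epss - previous[cve][0] >= min_delta
    )


if __name__ == "__main__":
    # Hand-written payload in the assumed response shape; IDs and scores are placeholders.
    sample = {"data": [
        {"cve": "CVE-0000-0001", "epss": "0.91", "percentile": "0.99"},
        {"cve": "CVE-0000-0002", "epss": "0.02", "percentile": "0.40"},
        {"cve": "CVE-0000-0003", "epss": "0.35", "percentile": "0.95"},
    ]}
    current = parse_scores(sample)
    print(prioritize(current, threshold=0.1))  # ['CVE-0000-0001', 'CVE-0000-0003']
    previous = {"CVE-0000-0003": (0.05, 0.60)}
    print(rising(previous, current, min_delta=0.2))  # ['CVE-0000-0003']
```

A monitoring job would run `fetch_scores` on a schedule, persist each snapshot, and alert on the output of `prioritize` and `rising`. Comparing against the previous snapshot, rather than only checking absolute scores, is what turns a one-off lookup into monitoring.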

Building Our Own Jenkins and IntelliJ IDEA Plugins to Improve Code Review

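Rakuten Group, Inc. Jenkins, a CI/CD tool, and IntelliJ IDEA, an IDE, have two things in common: both are written in Java, and both can be extended with plugins. This means you can add the features you want by implementing a plugin in Java. In this session, drawing on our experience building our own Jenkins and IntelliJ IDEA plugins to improve the code review process, we touch on how to implement them and discuss the results we obtained and the difficulties we ran into.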

What Makes Software Green?

Rakuten Group, Inc. This document discusses how to make software greener and more environmentally friendly. It defines green software as software that is carbon efficient, energy efficient, hardware efficient, and carbon aware. It provides recommendations for various roles within an organization on driving green initiatives, including focusing on efficiency for CxOs, architects, infrastructure engineers, and developers. Examples include optimizing resource usage, using public clouds effectively, prioritizing equipment standardization, and developing applications that can run more efficiently.
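One practical reading of "carbon aware" is to shift deferrable work, such as batch jobs, to the hours when the electricity grid is expected to be cleanest. The sketch below uses a sliding window to pick the start hour that minimizes total forecast carbon intensity over a job's duration. It is an illustration, not the document's method. The forecast values are made-up numbers in gCO2eq/kWh, and obtaining a real forecast from a grid operator or carbon-intensity service is out of scope.

```python
def best_start(forecast, duration):
    """Return (start_hour, total_intensity) of the lowest-carbon window of `duration` hours.

    `forecast` holds one carbon-intensity value per hour (e.g. gCO2eq/kWh).
    """
    if not 0 < duration <= len(forecast):
        raise ValueError("duration must be between 1 and len(forecast)")
    window = sum(forecast[:duration])
    best = (0, window)
    for start in range(1, len(forecast) - duration + 1):
        # Slide the window forward one hour: add the new last hour, drop the old first hour.
        window += forecast[start + duration - 1] - forecast[start - 1]
        if window < best[1]:
            best = (start, window)
    return best


if __name__ == "__main__":
    # Made-up hourly forecast, for illustration only.
    forecast = [420, 380, 300, 250, 260, 390, 450]
    print(best_start(forecast, duration=3))  # (2, 810)
```

The comparison is a strict `<`, so among equally clean windows the earliest one wins and ties never delay the job. The sliding sum keeps the search linear in the forecast length.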

Simple and Effective Knowledge-Driven Query Expansion for QA-Based Product At...

Rakuten Group, Inc. The document proposes a knowledge-driven query expansion approach for question answering (QA)-based product attribute extraction. It trains QA models using attribute-value pairs from training data as knowledge, while mimicking imperfect knowledge at test time through techniques like knowledge dropout and token mixing. This helps induce better query representations, especially for rare and ambiguous attributes. Experiments on a cleaned product attribute dataset show the proposed approach with all techniques outperforms baseline methods in both macro and micro F1 scores.

Rakuten's Initiatives to Spread DataSkillCulture

Rakuten Group, Inc. Rakuten Developer Meetup vol.03

The Mechanisms and Secrets of Large-Scale Cloud

https://rakuten.connpass.com/event/254762/

Travel & Leisure Platform Department's tech info

Rakuten Group, Inc. The document discusses the Travel & Leisure Platform Dept and its responsibilities related to data and platform management. It provides an overview of the technical stack including private/public clouds, databases, containers, and automation/monitoring tools. It then discusses recent projects involving business continuity, containerization, alert integration, and automation. Finally, it describes open roles for a DBA and DevOps position and their responsibilities related to database provisioning, backup/recovery, infrastructure as code, and providing platforms and tools for developers.

OWASPTop10_Introduction

Rakuten Group, Inc. This presentation introduces the OWASP Top 10:2021.

It explains how to read the data behind the OWASP Top 10:2021 and gives detailed explanations of the items whose data stands out. It also introduces the OWASP projects related to each item.

Introduction of GORA API Group technology

Rakuten Group, Inc. Gora API Group technology provides a microservices architecture and APIs for Rakuten's golf course reservation system, improving the user experience and increasing customer loyalty and annual golf rounds. The architecture migrates the monolithic reservation system to microservices using Kotlin, Spring Boot, and other technologies, exposing APIs for the frontend and new products while sustaining the legacy system through services, queues, continuous delivery, and operations monitoring.

社内エンジニアを支えるテクニカルアカウントマネージャー

社内エンジニアを支えるテクニカルアカウントマネージャーRakuten Group, Inc. Rakuten Developer Meetup vol.01: 楽天エコシステムを支えるインフラエンジニア

https://rakuten.connpass.com/event/232707/

Unclouding Container Challenges

Unclouding Container ChallengesRakuten Group, Inc. WebHack#43 Challenges of Global Infrastructure at Rakuten

https://webhack.connpass.com/event/208888/

Functional Programming in Pattern-Match-Oriented Programming Style <Programmi...

Functional Programming in Pattern-Match-Oriented Programming Style <Programmi...Rakuten Group, Inc. The document discusses pattern-match-oriented programming (PMOP) using the Egison programming language. PMOP aims to clarify programming with patterns by showing examples that confine explicit recursions in patterns. The key features of Egison that enable PMOP include non-linear pattern matching with multiple results, polymorphic patterns via matchers, and built-in patterns like loop patterns. The document presents several PMOP design patterns like join-cons patterns for lists, cons patterns for multisets, and tuple patterns for comparing multiple data. Examples demonstrate using patterns to define functions like map, intersection, and difference.

アジャイル開発とメトリクス

アジャイル開発とメトリクスRakuten Group, Inc. 近年日本のソフトウェア開発チームでも取り入れられるようになったアジャイル/DevOps開発では,今まで主流であったウォーターフォール開発と異なり,短い開発サイクルの中で小刻みなフィードバックループと改善活動を繰り返しながら開発する特徴がある.そのため,品質保証や信頼性でのメトリクス活用においても,メトリクスにもとづいたQAテストを実施することは依然重要であるが,それに加え開発から運用までの一連のプロセスの中でプロダクトとプロセスの品質を見える化し継続的な改善活動を促進するフィードバックを提供することがアジャイル開発では求められる.また、DevOps開発では本番稼働中のシステムについてもレジリエンスの枠組みで障害やバグに関するフィード バックを獲得し継続的に学習する.本講演ではアジャイル /DevOps の品質保証と信頼性におけるメトリクス活用の方法について事例も交えながら紹介する.

AR/SLAM and IoT

AR/SLAM and IoTRakuten Group, Inc. Invited talk on AR/SLAM and IoT in ILAS Seminar :Introduction to IoT and

Security, Kyoto University, 2020.

(https://www.z.k.kyoto-u.ac.jp/freshman-guide/ilas-seminars/ )

◆登壇者: Tomoyuki Mukasa

Ad


The Data Platform Administration Handling the 100 PB.pdf

- 1. The Data Platform Administration Handling the 100 PB. May 19th, 2022. Yongduck Lee, Cloud Platform Department, Rakuten Group, Inc.

- 2. About me: Lee Yongduck Daniel, Vice Section Manager and Senior Architect, Data Storage and Processing Section, Rakuten Group, Inc. He started as a recommendation engine developer and now focuses on researching and verifying new Big Data technologies and on supporting users who want to use Big Data systems. B.Sc. from Korea University (2001). He has spent 21 years in Japan and has worked for many organizations and companies, such as NHK, NTTD, and Rakuten Group, Inc. Lecture history: Colloquium Lecturer at KAIST. Program committees: BigComp 2017/2019, EDB 2016. Certifications: Certified Scrum Master (CSM), Certified Project Management Professional (PMP #1255421), and others.

- 3. Contents: 1. Global Internet & Data Explosion; 2. Data in Rakuten; 3. Data Platform & Big Data Administrators in Rakuten; 4. Advantages of Being an Engineer at Rakuten

- 4. Internet & Globalization: The Internet is the global system of interconnected computer networks that use the Internet protocol suite (TCP/IP) to link devices worldwide. It is a network of networks that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The origins of the Internet date back to research commissioned by the federal government of the United States in the 1960s to build robust, fault-tolerant communication with computer networks. (https://en.wikipedia.org/wiki/Internet#World_Wide_Web) [Figure: Globalization; Chances; Information structure: unstructured 80%, structured 20%; Volume: vast, 35.2 ZB in 2020. Source: IDC white paper & EMC.]

- 5. Internet Users: Internet users are defined as persons who accessed the Internet in the last 12 months from any device, including mobile phones. (https://en.wikipedia.org/wiki/List_of_countries_by_number_of_Internet_users#cite_note-UN_WPP-14)

- 6. Internet Users: In Japan, 92.3% of the population used the Internet as of 2018 (population 127,202,192; Internet users 117,400,000). (https://en.wikipedia.org/wiki/List_of_countries_by_number_of_Internet_users#cite_note-UN_WPP-14)
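The 92.3% figure on this slide is simply Internet users divided by population. The short Python check below reproduces it from the two numbers quoted above. It is only an arithmetic sanity check and makes no claim about how the underlying statistics were compiled.

```python
# Internet penetration = Internet users / population,
# using the 2018 figures for Japan quoted on the slide.
POPULATION = 127_202_192
INTERNET_USERS = 117_400_000


def penetration_rate(users: int, population: int) -> float:
    """Return the share of the population using the Internet, in percent."""
    if population <= 0:
        raise ValueError("population must be positive")
    return 100.0 * users / population


rate = penetration_rate(INTERNET_USERS, POPULATION)
print(f"{rate:.1f}%")  # → 92.3%
```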

- 9. The Big Data in Rakuten. Diversity and Synergy: The diversity of services and users, both in Japan and globally, creates huge potential value and many possibilities. This makes Rakuten an interesting and ideal environment for data scientists and data analysts. Combining data from various services increases the synergy effect on personalization, clustering, segmentation, and similar tasks. Scale: Services and users generate a large volume of data every day, every month, and every year. For system infrastructure engineers and data engineers, storing this data and making it easy for data users to utilize is a big challenge.

- 10. Rakuten Hadoop and Kafka. Kafka (800 cores, 20 TB memory, 4,728 topics) supports near-real-time and streaming processing in each region. It handles about 1.3 million messages/sec in total (10 GB/sec in/out) at peak time on a normal day, and during the 2021 Super Sale it handled more than 2.5 times that volume of messages and traffic. Hadoop (80K cores, 600 TB memory, 160K TB, i.e. about 160 PB, of disk) supports the data lake, data marts, and data analysis for Rakuten services in each region. Data scientists mine a great deal of value from this big data, which contributes to Rakuten services.
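The throughput figures above allow a rough capacity estimate. The sketch below is a back-of-envelope calculation under assumptions that the slide does not state. "10 GB/sec IN/OUT" is read as 10 × 10^9 bytes per second of combined traffic. The average message size is assumed to stay unchanged during the Super Sale. "More than 2.5 times" is used as a lower bound. The per-core figure is only a normalization, not a claim that Kafka throughput scales per core.

```python
# Figures quoted on the slide.
PEAK_MSGS_PER_SEC = 1_300_000    # "around 1.3 Million Message/sec"
PEAK_BYTES_PER_SEC = 10 * 10**9  # "10 GB/sec IN/OUT" (assumed: decimal GB, combined)
KAFKA_CORES = 800
SUPER_SALE_FACTOR = 2.5          # "more than 2.5 times", treated as a lower bound

# Derived estimates (assumption: average message size is unchanged at peak).
avg_msg_bytes = PEAK_BYTES_PER_SEC / PEAK_MSGS_PER_SEC
msgs_per_core = PEAK_MSGS_PER_SEC / KAFKA_CORES
super_sale_msgs = PEAK_MSGS_PER_SEC * SUPER_SALE_FACTOR
super_sale_bytes = PEAK_BYTES_PER_SEC * SUPER_SALE_FACTOR

print(f"average message size  ~{avg_msg_bytes / 1000:.1f} KB")
print(f"messages/sec per core ~{msgs_per_core:,.0f}")
print(f"Super Sale, at least  ~{super_sale_msgs / 1e6:.2f}M msg/sec, "
      f"~{super_sale_bytes / 1e9:.0f} GB/sec")
```

With these inputs, the estimate comes to roughly 7.7 KB per message and about 1,625 messages/sec per core. It also implies at least 3.25M messages/sec (about 25 GB/sec) during the Super Sale. If "IN/OUT" instead means 10 GB/sec in each direction, the per-message size changes accordingly.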

- 11. The Challenges of Administration

- 12. The Big Data in Rakuten: the roles involved (diagram labels) are users, project/product managers, Big Data platform administrators, platform/middleware administrators, infra/server administrators, network administrators, software/system architects, and software developers.

- 13. Administration Use Case (HBase). Problem: a user reported performance issues on HBase, while users of other Hadoop components reported no issues. What to confirm: how the user gets/puts data on HBase; the HBase configuration, architecture, and workflow/dataflow, plus application and GC logs; the dependency component (HDFS), including read/write performance logs and application/GC logs; disk, memory, and CPU load; the kernel log; and network connections. These sources are then matched by date and time. Identified: data hot spotting; data or configuration caching. Countermeasures, HDFS: JVM configuration change, increased handlers, increased scanner interval. Hardware improvement: master node replacement, reduced RegionServers, moving from HDD to NVMe, dedicated RegionServers. OS configuration: increased root nproc and nofile limits on the dedicated RegionServers. HBase: TCP no-delay, parallel seeking, master table locality, and separate write / short-read / long-read queues. Also listed: DEADLINE I/O scheduler, hedged reads, short-circuit reads.
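The slide names data hot spotting as one finding but does not say how it was resolved. One widely used mitigation for HBase hot spotting is salting row keys with a deterministic bucket prefix. This spreads sequential keys (for example, timestamps) across regions instead of sending them all to one RegionServer. The Python sketch below illustrates that general technique only. The bucket count, the key format, and the function names `bucket_of`, `salted_row_key`, and `scan_prefixes` are hypothetical and are not taken from the talk.

```python
import zlib

NUM_BUCKETS = 16  # hypothetical; often sized relative to the number of RegionServers


def bucket_of(natural_key: str, buckets: int = NUM_BUCKETS) -> int:
    """Deterministically map a key to a bucket using CRC32."""
    return zlib.crc32(natural_key.encode("utf-8")) % buckets


def salted_row_key(natural_key: str, buckets: int = NUM_BUCKETS) -> bytes:
    """Prefix the key with its two-digit bucket id, e.g. b'07|20220519T1200'."""
    return f"{bucket_of(natural_key, buckets):02d}|{natural_key}".encode("utf-8")


def scan_prefixes(key_prefix: str, buckets: int = NUM_BUCKETS) -> list[bytes]:
    """A range scan over salted keys must fan out: one start prefix per bucket."""
    return [f"{b:02d}|{key_prefix}".encode("utf-8") for b in range(buckets)]


# Sequential, timestamp-like keys would otherwise all land in the last region.
keys = [f"20220519T12{minute:02d}" for minute in range(60)]
salted = [salted_row_key(k) for k in keys]
used_buckets = {k.split(b"|", 1)[0] for k in salted}
print(f"{len(keys)} sequential keys spread over {len(used_buckets)} buckets")
```

Because the bucket is derived from the key itself, a point get can recompute the salted key directly. A range scan, however, has to issue one scan per bucket prefix, which is the usual trade-off of salting.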

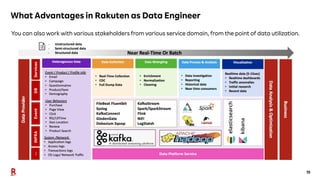
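Several of the HBase- and HDFS-side items on slide 13 map to well-known `hbase-site.xml` properties. The Python sketch below renders a hypothetical `hbase-site.xml` fragment containing those properties. The values and the socket path are illustrative placeholders, not the settings Rakuten used. Property names and defaults should be checked against the documentation for the HBase and Hadoop versions in use. The sketch omits the OS-level items (nproc/nofile limits, the DEADLINE I/O scheduler), the JVM changes, "increased scanner interval", and "master table locality", because none of them is a single `hbase-site.xml` switch.

```python
import xml.etree.ElementTree as ET

# Illustrative values only; verify names and defaults for your versions.
TUNING = {
    # "Increasing Handler": more RPC handler threads per RegionServer.
    "hbase.regionserver.handler.count": "100",
    # "WRITE/Short-READ/Long-READ Queue": a non-zero factor creates several
    # call queues, which the two ratios below then split.
    "hbase.ipc.server.callqueue.handler.factor": "0.1",
    # Fraction of call queues dedicated to reads (the rest serve writes).
    "hbase.ipc.server.callqueue.read.ratio": "0.6",
    # Fraction of the read queues dedicated to long reads (scans) vs. gets.
    "hbase.ipc.server.callqueue.scan.ratio": "0.5",
    # "Parallel Seeking": seek a store's files in parallel.
    "hbase.storescanner.parallel.seek.enable": "true",
    # "TCPNoDelay": disable Nagle's algorithm on RPC sockets.
    "hbase.ipc.server.tcpnodelay": "true",
    "hbase.ipc.client.tcpnodelay": "true",
    # "Short Circuit READ": read local block files directly instead of
    # streaming them through the DataNode (DataNodes need matching config).
    "dfs.client.read.shortcircuit": "true",
    "dfs.domain.socket.path": "/var/lib/hadoop-hdfs/dn_socket",  # hypothetical path
    # "Hedged Reads": start a second read on another replica if the first
    # one has not returned within the threshold.
    "dfs.client.hedged.read.threadpool.size": "20",
    "dfs.client.hedged.read.threshold.millis": "10",
}


def to_site_xml(props: dict[str, str]) -> str:
    """Render properties in the Hadoop-style <configuration> XML format."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-printing; requires Python 3.9+
    return ET.tostring(root, encoding="unicode")


print(to_site_xml(TUNING))
```

Generating the file from a single dictionary keeps each tuning decision next to a comment that explains it. This can make it easier to review such changes alongside the investigation that motivated them.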

- 14. Advantages of Being a Data Engineer at Rakuten: You can work across every domain a Big Data platform requires and gain rich experience as a Big Data platform administrator. Rakuten has experts with deep knowledge and experience in each technical and management domain.

- 15. Advantages of Being a Data Engineer at Rakuten (continued): You can also work with stakeholders from many service domains from the standpoint of data utilization (diagram: DB, Services, Event, INFRA, …).