Tiancheng Zhao - 2017 - Learning Discourse-level Diversity for Neural Dialog Models Using Conditional Variational Autoencoders


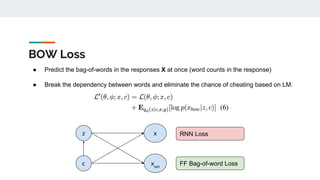

The document describes a method for generating diverse responses in neural dialog models using conditional variational autoencoders (CVAEs). Key points:

1. CVAEs are adapted to model open-domain conversations: responses are conditioned on the dialog context through latent variables, so the model can capture the many valid responses a single context allows.
2. A knowledge-guided CVAE (kgCVAE) is also proposed to integrate expert knowledge via linguistic features extracted from the responses.
3. A new bag-of-words (BOW) loss is introduced, which trains the CVAE to predict the words of the response regardless of their order; this helps alleviate the difficulty of training CVAEs with RNN decoders.


![Introduction](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-3-320.jpg)

However, encoder-decoder dialog models suffer from the dull response problem [Li et al. 2015, Serban et al. 2016]. Current solutions include:

- Adding more information to the dialog context [Xing et al. 2016, Li et al. 2016]
- Improving the decoding algorithm, e.g. beam search [Wiseman and Rush 2016]

Figure: an encoder reads the dialog context ("… (previous utterances)", "User: I am feeling quite happy today.") and the decoder produces dull responses such as "Yes", "I don't know" and "sure".

![Conditional Variational Autoencoder (CVAE)](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-8-320.jpg)

- C is the dialog context
  - B: Do you like cats? A: Yes I do
- Z is the latent variable (Gaussian)
- X is the next response
  - B: So do I.
- Trained by Stochastic Gradient Variational Bayes (SGVB) [Kingma and Welling 2013]
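The SGVB training objective for this setup can be sketched as below. This is a minimal pure-Python illustration, not the authors' code: it assumes that both the recognition network q(z | x, c) and a prior network p(z | c) output diagonal Gaussians as mean and log-variance vectors, and the function names (`gaussian_kl`, `reparameterize`, `negative_elbo`) are hypothetical. The encoder and decoder networks are omitted; `recon_nll` stands in for the decoder's negative log-likelihood of X given Z and C.

```python
import math
import random


def gaussian_kl(mu_q, logvar_q, mu_p, logvar_p):
    """KL(q || p) between two diagonal Gaussians given as mean and log-variance lists."""
    return 0.5 * sum(
        lp - lq + (math.exp(lq) + (mq - mp) ** 2) / math.exp(lp) - 1.0
        for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p)
    )


def reparameterize(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def negative_elbo(recon_nll, mu_q, logvar_q, mu_p, logvar_p, kl_weight=1.0):
    """Loss to minimize: decoder NLL of X given (Z, C) plus the (optionally weighted) KL term."""
    return recon_nll + kl_weight * gaussian_kl(mu_q, logvar_q, mu_p, logvar_p)


if __name__ == "__main__":
    rng = random.Random(0)
    mu_q, logvar_q = [0.5, -0.2], [0.0, -1.0]  # hypothetical recognition-network output
    mu_p, logvar_p = [0.0, 0.0], [0.0, 0.0]    # hypothetical prior-network output
    z = reparameterize(mu_q, logvar_q, rng)     # would be fed to the decoder together with C
    print(z, negative_elbo(3.0, mu_q, logvar_q, mu_p, logvar_p))
```

Sampling through `reparameterize` keeps the randomness in `eps`, so gradients can flow into the mean and log-variance. At generation time there is no response X to encode, so Z would be sampled from the prior network instead.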

![Optimization Challenge](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-12-320.jpg)

Training a CVAE with an RNN decoder is hard due to the vanishing latent variable problem [Bowman et al., 2015]:

- The RNN decoder can cheat by relying on its language-model information alone and ignoring Z.

Bowman et al. [2015] described two methods to alleviate the problem:

1. KL annealing (KLA): gradually increase the weight of the KL term from 0 to 1 (requires early stopping).
2. Word drop decoding: set a proportion of the target words (the decoder's inputs) to 0 (requires careful parameter picking).
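Both remedies are small changes to the training loop. The sketch below assumes a linear annealing schedule (the slide only says "gradually") and uses hypothetical names and parameters (`kl_anneal_weight`, `anneal_steps`, `word_drop`, `drop_rate`); `drop_id=0` follows the slide's "set to 0" and is likewise an assumption.

```python
import random


def kl_anneal_weight(step, anneal_steps):
    """Linear KL annealing: the KL weight rises from 0 at step 0 to 1 at `anneal_steps`."""
    if anneal_steps <= 0:
        return 1.0
    return min(1.0, step / anneal_steps)


def word_drop(target_ids, drop_rate, rng, drop_id=0):
    """Word drop decoding: replace each decoder input token by `drop_id` with probability `drop_rate`."""
    return [drop_id if rng.random() < drop_rate else t for t in target_ids]


if __name__ == "__main__":
    rng = random.Random(42)
    for step in (0, 2500, 5000, 10000):
        print(step, kl_anneal_weight(step, anneal_steps=5000))
    print(word_drop([12, 7, 93, 4], drop_rate=0.25, rng=rng))
```

Only the decoder's inputs are corrupted by `word_drop`; the words it must predict stay intact, so the decoder is forced to lean on Z to fill the gaps. The annealed weight would multiply the KL term of the CVAE loss at each training step.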

![Quantitative Metrics](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-17-320.jpg)

d(r, h) is a distance function with values in [0, 1] that measures the similarity between a reference response r and a hypothesis response h.

Figure: for each context, the Mc human reference responses (Ref resp 1 … Ref resp Mc) are compared with the N model hypotheses (Hyp resp 1 … Hyp resp N) to measure appropriateness and diversity.
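One way to turn d(r, h) into the precision/recall pairs reported in the results is sketched below, assuming precision averages each hypothesis's best match over the references and recall averages each reference's best match over the hypotheses (presumably what the slide's "Appropriateness" and "Diversity" labels refer to). The word-overlap `jaccard` function is a hypothetical stand-in for a real d such as normalized BLEU.

```python
def jaccard(r, h):
    """Toy similarity in [0, 1]: word-set overlap (a hypothetical stand-in for d)."""
    rs, hs = set(r.split()), set(h.split())
    if not rs and not hs:
        return 1.0
    return len(rs & hs) / len(rs | hs)


def precision_recall(refs, hyps, d=jaccard):
    """Precision/recall of N hypotheses against Mc references for a single context."""
    # Precision: each generated response is scored against its closest reference.
    precision = sum(max(d(r, h) for r in refs) for h in hyps) / len(hyps)
    # Recall: each reference is scored against its closest generated response.
    recall = sum(max(d(r, h) for h in hyps) for r in refs) / len(refs)
    return precision, recall


if __name__ == "__main__":
    refs = ["so do i", "i prefer dogs"]
    hyps = ["so do i", "so do i"]  # a model that always gives the same answer
    print(precision_recall(refs, hyps))
```

In this toy case the repetitive model reaches a precision of 1.0, but its recall is only 0.6 because the second reference is never covered, which is the kind of gap the recall side is meant to expose.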

![Quantitative Analysis Results](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-20-320.jpg)

| Model | Perplexity (KL) | BLEU-1 (p/r) | BLEU-2 (p/r) | BLEU-3 (p/r) | BLEU-4 (p/r) | A-bow (p/r) | E-bow (p/r) | DA (p/r) |
|---|---|---|---|---|---|---|---|---|
| Baseline (sample) | 35.4 (n/a) | 0.405/0.336 | 0.3/0.281 | 0.272/0.254 | 0.226/0.215 | 0.387/0.337 | 0.701/0.684 | 0.736/0.514 |
| CVAE (greedy) | 20.2 (11.36) | 0.372/0.381 | 0.295/0.322 | 0.265/0.292 | 0.223/0.248 | 0.389/0.361 | 0.705/0.709 | 0.704/0.604 |
| kgCVAE (greedy) | 16.02 (13.08) | 0.412/0.411 | 0.350/0.356 | 0.310/0.318 | 0.262/0.272 | 0.373/0.336 | 0.711/0.712 | 0.721/0.598 |

Note: BLEU scores are normalized into [0, 1] so that they are valid distance functions for precision and recall.
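The A-bow and E-bow columns are not defined on the slide. Assuming they follow the common bag-of-word embedding metrics (cosine similarity between averaged word vectors, and between "vector extrema" where each dimension keeps its most extreme value across words), such a d(r, h) can be sketched as follows. The embedding table and all function names are hypothetical; a real evaluation would use pretrained word vectors.

```python
import math


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu > 0 and nv > 0 else 0.0


def _word_vectors(sentence, emb):
    # Look up each known word; out-of-vocabulary words are skipped.
    return [emb[w] for w in sentence.split() if w in emb]


def average_embedding(sentence, emb, dim):
    vecs = _word_vectors(sentence, emb)
    if not vecs:
        return [0.0] * dim
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def extrema_embedding(sentence, emb, dim):
    # Per dimension, keep the value with the largest magnitude across words.
    vecs = _word_vectors(sentence, emb)
    if not vecs:
        return [0.0] * dim
    return [max(col, key=abs) for col in zip(*vecs)]


def a_bow(ref, hyp, emb, dim):
    return cosine(average_embedding(ref, emb, dim), average_embedding(hyp, emb, dim))


def e_bow(ref, hyp, emb, dim):
    return cosine(extrema_embedding(ref, emb, dim), extrema_embedding(hyp, emb, dim))


if __name__ == "__main__":
    emb = {"so": [1.0, 0.0], "do": [0.0, 1.0], "i": [1.0, 1.0], "dogs": [-1.0, 0.5]}
    print(a_bow("so do i", "i prefer dogs", emb, 2), e_bow("so do i", "i prefer dogs", emb, 2))
```

Both measures ignore word order. Cosine similarity lies in [-1, 1], so it would need rescaling or clipping to fit the [0, 1] range required of d.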

![The Effect of BOW Loss](https://image.slidesharecdn.com/p17-1061-170918185614/85/Tiancheng-Zhao-2017-Learning-Discourse-level-Diversity-for-Neural-Dialog-Models-Using-Conditional-Variational-Autoencoders-23-320.jpg)

Same language-modeling setup on the Penn Treebank as [Bowman 2015]. Four setups are compared:

1. Standard VAE
2. KL Annealing (KLA)
3. BOW
4. BOW + KLA

Goal: low reconstruction loss plus a small but non-trivial KL cost.

| Model | Perplexity | KL Cost |
|---|---|---|
| Standard | 122.0 | 0.05 |
| KLA | 111.5 | 2.02 |
| BOW | 97.72 | 7.41 |
| BOW+KLA | 73.04 | 15.94 |
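The BOW loss itself can be sketched as follows. This is a minimal illustration, not the authors' implementation: `bow_logits` stands for a hypothetical vector of vocabulary scores that an auxiliary network would compute from the latent sample Z (and the context), and every word of the response is scored against that single softmax, so word order is ignored. Since this prediction has no access to the RNN decoder's history, it can only succeed by reading Z.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of scores."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def bow_loss(bow_logits, target_ids):
    """Negative log-likelihood of the response's words under one order-free softmax.

    Every target word is scored against the same distribution, so permuting
    `target_ids` leaves the loss unchanged; repeated words count each time.
    """
    log_probs = log_softmax(bow_logits)
    return -sum(log_probs[t] for t in target_ids)


if __name__ == "__main__":
    vocab_logits = [2.0, 0.5, -1.0, 0.0]  # hypothetical scores over a 4-word vocabulary
    response = [0, 3, 0]                  # token ids of the response
    print(bow_loss(vocab_logits, response))
```

During training, this term would be added to the CVAE's reconstruction and KL terms rather than replacing them.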

Ad

Recommended

BERT: Bidirectional Encoder Representations from Transformers

BERT: Bidirectional Encoder Representations from TransformersLiangqun Lu BERT was developed by Google AI Language and came out Oct. 2018. It has achieved the best performance in many NLP tasks. So if you are interested in NLP, studying BERT is a good way to go.

PL Lecture 01 - preliminaries

PL Lecture 01 - preliminariesSchwannden Kuo Reason for the Subject

Language Design Trade-Offs Language

Evaluation Criteria

Programming Environments

Language Categories

Future Course Outline

[Paper review] BERT![[Paper review] BERT](https://cdn.slidesharecdn.com/ss_thumbnails/paperreviewbert-190507052754-thumbnail.jpg?width=560&fit=bounds)

![[Paper review] BERT](https://cdn.slidesharecdn.com/ss_thumbnails/paperreviewbert-190507052754-thumbnail.jpg?width=560&fit=bounds)

![[Paper review] BERT](https://cdn.slidesharecdn.com/ss_thumbnails/paperreviewbert-190507052754-thumbnail.jpg?width=560&fit=bounds)

![[Paper review] BERT](https://cdn.slidesharecdn.com/ss_thumbnails/paperreviewbert-190507052754-thumbnail.jpg?width=560&fit=bounds)

[Paper review] BERTJEE HYUN PARK BERT is a language representation model that was pre-trained using two unsupervised prediction tasks: masked language modeling and next sentence prediction. It uses a multi-layer bidirectional Transformer encoder based on the original Transformer architecture. BERT achieved state-of-the-art results on a wide range of natural language processing tasks including question answering and language inference. Extensive experiments showed that both pre-training tasks, as well as a large amount of pre-training data and steps, were important for BERT to achieve its strong performance.

PL Lecture 02 - Binding and Scope

PL Lecture 02 - Binding and ScopeSchwannden Kuo The slide talks about the aspect in binding and scope that programmer of modern language might not be fully aware, but good to know nontheless. Concept of scope and binding makes some programming language special case behavior more explainable and rememberable.

Bert

BertAbdallah Bashir The document discusses the BERT model for natural language processing. It begins with an introduction to BERT and how it achieved state-of-the-art results on 11 NLP tasks in 2018. The document then covers related work on language representation models including ELMo and GPT. It describes the key aspects of the BERT model, including its bidirectional Transformer architecture, pre-training using masked language modeling and next sentence prediction, and fine-tuning for downstream tasks. Experimental results are presented showing BERT outperforming previous models on the GLUE benchmark, SQuAD 1.1, SQuAD 2.0, and SWAG. Ablation studies examine the importance of the pre-training tasks and the effect of model size.

BERT introduction

BERT introductionHanwha System / ICT BERT is a deeply bidirectional, unsupervised language representation model pre-trained using only plain text. It is the first model to use a bidirectional Transformer for pre-training. BERT learns representations from both left and right contexts within text, unlike previous models like ELMo which use independently trained left-to-right and right-to-left LSTMs. BERT was pre-trained on two large text corpora using masked language modeling and next sentence prediction tasks. It establishes new state-of-the-art results on a wide range of natural language understanding benchmarks.

Pre trained language model

Pre trained language modelJiWenKim The document discusses recent developments in pre-trained language models including ELMO, ULMFiT, BERT, and GPT-2. It provides overviews of the core structures and implementations of each model, noting that they have achieved great performance on natural language tasks without requiring labeled data for pre-training, similar to how pre-training helps in computer vision tasks. The document also includes a comparison chart of the types of natural language tasks each model can perform.

1909 BERT: why-and-how (CODE SEMINAR)

1909 BERT: why-and-how (CODE SEMINAR)WarNik Chow This document provides an overview of BERT (Bidirectional Encoder Representations from Transformers) and how it works. It discusses BERT's architecture, which uses a Transformer encoder with no explicit decoder. BERT is pretrained using two tasks: masked language modeling and next sentence prediction. During fine-tuning, the pretrained BERT model is adapted to downstream NLP tasks through an additional output layer. The document outlines BERT's code implementation and provides examples of importing pretrained BERT models and fine-tuning them on various tasks.

NLP State of the Art | BERT

NLP State of the Art | BERTshaurya uppal BERT: Bidirectional Encoder Representation from Transformer.

BERT is a Pretrained Model by Google for State of the art NLP tasks.

BERT has the ability to take into account Syntaxtic and Semantic meaning of Text.

Natural Language Processing - Research and Application Trends

Natural Language Processing - Research and Application TrendsShreyas Suresh Rao This PPT is a Webinar delivered by Dr. Shreyas to explain the research and application trends in NLP area

BERT - Part 1 Learning Notes of Senthil Kumar

BERT - Part 1 Learning Notes of Senthil KumarSenthil Kumar M In this part 1 presentation, I have attempted to provide a '30,000 feet view' of BERT (Bidirectional Encoder Representations from Transformer) - a state of the art Language Model in NLP with high level technical explanations. I have attempted to collate useful information about BERT from various useful sources.

5. manuel arcedillo & juanjo arevalillo (hermes) translation memories

5. manuel arcedillo & juanjo arevalillo (hermes) translation memoriesRIILP This document discusses translation memory (TM) tools and features. It provides an overview of the history and evolution of TM tools, including their move to the cloud. It describes key TM features like leveraging previous translations, fuzzy matching, and analysis capabilities. It also explains that while TM tools all provide similar basic functions, they analyze data and display matches differently, which can result in varying word count metrics. Weighted word counts aim to standardize metrics by assigning different values to matches based on their degree of fuzziness.

From UML/OCL to natural language (using SBVR as pivot)

From UML/OCL to natural language (using SBVR as pivot)Jordi Cabot Validate your UML/OCL models by producing natural language descriptions that can be read by the stakeholder

BERT Finetuning Webinar Presentation

BERT Finetuning Webinar Presentationbhavesh_physics This document discusses fine-tuning the BERT model with PyTorch and the Transformers library. It provides an overview of BERT, how it was trained, its special tokens, the Transformers library, preprocessing text for BERT, using the BertModel class, the approach to fine-tuning BERT for a task, creating a dataset and data loaders, and training and validating the model.

An NLP-based architecture for the autocompletion of partial domain models

An NLP-based architecture for the autocompletion of partial domain modelsLola Burgueño The document presents an NLP-based approach to autocompleting partial domain models. It uses natural language processing techniques like word embeddings and morphological analysis on domain documentation to generate recommendations for concepts and relationships to add to an incomplete model. An evaluation on a past water supply project model achieved 62% recall and 4.46% precision in reconstructing the original model. Most accepted suggestions came from contextual domain knowledge over general knowledge sources.

Information Retrieval with Deep Learning

Information Retrieval with Deep LearningAdam Gibson This document provides an overview of using deep autoencoders to improve question answering systems. It discusses how deep autoencoders can encode text or images into codes that are indexed and stored. This allows for fast lookup of potential answer candidates. The document describes the components of question answering systems and information retrieval systems. It also provides details on how deep autoencoders work, including using a stacked restricted Boltzmann machine architecture for encoding and decoding layers.

BERT

BERTSang Hyun Jeon This document discusses NLP's "Imagenet Moment" with the emergence of transfer learning approaches like BERT, ELMo, and GPT. It explains that these models were pretrained on large datasets and can now be downloaded and fine-tuned for specific tasks, similar to how pretrained ImageNet models revolutionized computer vision. The document also provides an overview of BERT, including its bidirectional Transformer architecture, pretraining tasks, and performance on tasks like GLUE and SQuAD.

Reference Scope Identification in Citing Sentences

Reference Scope Identification in Citing SentencesAkihiro Kameda This document describes an approach to identify the scope of references in sentences containing multiple citations. The approach uses a conditional random field (CRF) sequence model with features including distance, position, part-of-speech tags, and syntactic dependencies. It first segments sentences using punctuation and conjunctions, then applies the CRF and a majority voting scheme to label each word as inside or outside the scope. The method achieved over 90% accuracy on a dataset of citations from ACL papers.

Deep Learning勉強会@小町研 "Learning Character-level Representations for Part-of-Sp...

Deep Learning勉強会@小町研 "Learning Character-level Representations for Part-of-Sp...Yuki Tomo 12/22 Deep Learning勉強会@小町研 にて

"Learning Character-level Representations for Part-of-Speech Tagging" C ́ıcero Nogueira dos Santos, Bianca Zadrozny

を紹介しました。

Hyoung-Gyu Lee - 2015 - NAVER Machine Translation System for WAT 2015

Hyoung-Gyu Lee - 2015 - NAVER Machine Translation System for WAT 2015Association for Computational Linguistics This document describes NAVER's machine translation systems for the WAT 2015 evaluation. For English-to-Japanese translation, the best system combined tree-to-string syntax-based machine translation with neural machine translation re-ranking, achieving a BLEU score of 34.60. For Korean-to-Japanese translation, the top system used phrase-based machine translation and neural machine translation re-ranking, obtaining a BLEU score of 71.38. The document also analyzes the effectiveness of character-level tokenization and other techniques for neural machine translation.

Machine Translation Introduction

Machine Translation Introductionnlab_utokyo Neural machine translation has surpassed statistical machine translation as the leading approach. It uses an encoder-decoder model with attention to learn translation representations from large parallel corpora. Recent developments include incorporating monolingual data through language models, improving attention mechanisms, and minimizing evaluation metrics like BLEU during training rather than just cross-entropy. Open problems remain around handling rare words, semantic meaning, and context. Future work may focus on multilingual models, low-resource translation, and generating text for other modalities like images.

Plug play language_models

Plug play language_modelsMohammad Moslem Uddin This document summarizes a conference paper published at ICLR 2020 that proposes a method called Plug and Play Language Models (PPLM) for controlled text generation using pretrained language models. PPLM allows controlling attributes of generated text like topic or sentiment without retraining the language model by combining it with simple attribute classifiers that guide the text generation process. The paper presents PPLM as a simple alternative to retraining language models that is more efficient and practical for controlled text generation.

[Paper Reading] Supervised Learning of Universal Sentence Representations fro...![[Paper Reading] Supervised Learning of Universal Sentence Representations fro...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Supervised Learning of Universal Sentence Representations fro...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Supervised Learning of Universal Sentence Representations fro...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Supervised Learning of Universal Sentence Representations fro...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412-thumbnail.jpg?width=560&fit=bounds)

[Paper Reading] Supervised Learning of Universal Sentence Representations fro...Hiroki Shimanaka This document summarizes the paper "Supervised Learning of Universal Sentence Representations from Natural Language Inference Data". It discusses how the researchers trained sentence embeddings using supervised data from the Stanford Natural Language Inference dataset. They tested several sentence encoder architectures and found that a BiLSTM network with max pooling produced the best performing universal sentence representations, outperforming prior unsupervised methods on 12 transfer tasks. The sentence representations learned from the natural language inference data consistently achieved state-of-the-art performance across multiple downstream tasks.

Polymorphism

PolymorphismSherabGyatso This presentation discusses pointers, virtual functions, and polymorphism in C++. It defines pointers as variables that hold the addresses of other variables, and explains how pointers can point to objects through examples. Virtual functions allow a single base class pointer to refer to objects of derived classes by determining the function to call at runtime based on the object's type. Polymorphism means one name can have multiple forms, and it is a key feature of object-oriented programming that allows functions to work with objects of different types through virtual functions.

7. Trevor Cohn (usfd) Statistical Machine Translation

7. Trevor Cohn (usfd) Statistical Machine TranslationRIILP This document discusses statistical machine translation decoding. It begins with an overview of decoding objectives and challenges, such as ambiguity in possible translations. It then describes decoding phrase-based models using a linear model and dynamic programming approach, with approximations like beam search. Grammar-based decoding is also covered, including synchronous context-free grammar parsing and translation. Key challenges like search complexity and language model integration are addressed.

Transformers to Learn Hierarchical Contexts in Multiparty Dialogue

Transformers to Learn Hierarchical Contexts in Multiparty DialogueJinho Choi The document presents an approach using transformers to learn hierarchical contexts in multiparty dialogue. It proposes new pre-training tasks to improve token-level and utterance-level embeddings for handling dialogue contexts. A multi-task learning approach is introduced to fine-tune the language model for a Friends question answering (FriendsQA) task using dialogue evidence, outperforming BERT and RoBERTa. However, the approach shows no improvement on other character mining tasks from Friends. Future work is needed to better represent speakers and inferences in dialogue.

LEPOR: an augmented machine translation evaluation metric - Thesis PPT

LEPOR: an augmented machine translation evaluation metric - Thesis PPT Lifeng (Aaron) Han The document provides an overview of machine translation evaluation (MTE). It discusses existing MTE methods like BLEU, METEOR, WER, and their weaknesses. The author's thesis proposes a new metric called LEPOR that incorporates additional factors to address weaknesses. The additional factors include an enhanced length penalty, n-gram position difference penalty, and tunable parameters to handle cross-language performance differences. The thesis will experiment with LEPOR on various language pairs and shared tasks to evaluate its performance.

BERT - Part 2 Learning Notes

BERT - Part 2 Learning NotesSenthil Kumar M This Part 2 presentation is a more in-depth view of BERT - Bidirectional Encoder Representations from Transformer. The source links offer more depth to the brief overview in the slides

[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...![[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013-thumbnail.jpg?width=560&fit=bounds)

![[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingnaacl-unsupervisedlearningofsentenceembeddingsusingcompositionaln-gramfeatures-181121025013-thumbnail.jpg?width=560&fit=bounds)

[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...Hiroki Shimanaka (1) The document presents an unsupervised method called Sent2Vec to learn sentence embeddings using compositional n-gram features. (2) Sent2Vec extends the continuous bag-of-words model to train sentence embeddings by composing word vectors with n-gram embeddings. (3) Experimental results show Sent2Vec outperforms other unsupervised models on most benchmark tasks, highlighting the robustness of the sentence embeddings produced.

Word_Embedding.pptx

Word_Embedding.pptxNameetDaga1 The document discusses word embedding techniques used to represent words as vectors. It describes Word2Vec as a popular word embedding model that uses either the Continuous Bag of Words (CBOW) or Skip-gram architecture. CBOW predicts a target word based on surrounding context words, while Skip-gram predicts surrounding words given a target word. These models represent words as dense vectors that encode semantic and syntactic properties, allowing operations like word analogy questions.

Ad

More Related Content

What's hot (20)

NLP State of the Art | BERT

NLP State of the Art | BERTshaurya uppal BERT: Bidirectional Encoder Representation from Transformer.

BERT is a Pretrained Model by Google for State of the art NLP tasks.

BERT has the ability to take into account Syntaxtic and Semantic meaning of Text.

Natural Language Processing - Research and Application Trends

Natural Language Processing - Research and Application TrendsShreyas Suresh Rao This PPT is a Webinar delivered by Dr. Shreyas to explain the research and application trends in NLP area

BERT - Part 1 Learning Notes of Senthil Kumar

BERT - Part 1 Learning Notes of Senthil KumarSenthil Kumar M In this part 1 presentation, I have attempted to provide a '30,000 feet view' of BERT (Bidirectional Encoder Representations from Transformer) - a state of the art Language Model in NLP with high level technical explanations. I have attempted to collate useful information about BERT from various useful sources.

5. manuel arcedillo & juanjo arevalillo (hermes) translation memories

5. manuel arcedillo & juanjo arevalillo (hermes) translation memoriesRIILP This document discusses translation memory (TM) tools and features. It provides an overview of the history and evolution of TM tools, including their move to the cloud. It describes key TM features like leveraging previous translations, fuzzy matching, and analysis capabilities. It also explains that while TM tools all provide similar basic functions, they analyze data and display matches differently, which can result in varying word count metrics. Weighted word counts aim to standardize metrics by assigning different values to matches based on their degree of fuzziness.

From UML/OCL to natural language (using SBVR as pivot)

From UML/OCL to natural language (using SBVR as pivot)Jordi Cabot Validate your UML/OCL models by producing natural language descriptions that can be read by the stakeholder

BERT Finetuning Webinar Presentation

BERT Finetuning Webinar Presentationbhavesh_physics This document discusses fine-tuning the BERT model with PyTorch and the Transformers library. It provides an overview of BERT, how it was trained, its special tokens, the Transformers library, preprocessing text for BERT, using the BertModel class, the approach to fine-tuning BERT for a task, creating a dataset and data loaders, and training and validating the model.

An NLP-based architecture for the autocompletion of partial domain models

An NLP-based architecture for the autocompletion of partial domain modelsLola Burgueño The document presents an NLP-based approach to autocompleting partial domain models. It uses natural language processing techniques like word embeddings and morphological analysis on domain documentation to generate recommendations for concepts and relationships to add to an incomplete model. An evaluation on a past water supply project model achieved 62% recall and 4.46% precision in reconstructing the original model. Most accepted suggestions came from contextual domain knowledge over general knowledge sources.

Information Retrieval with Deep Learning

Information Retrieval with Deep LearningAdam Gibson This document provides an overview of using deep autoencoders to improve question answering systems. It discusses how deep autoencoders can encode text or images into codes that are indexed and stored. This allows for fast lookup of potential answer candidates. The document describes the components of question answering systems and information retrieval systems. It also provides details on how deep autoencoders work, including using a stacked restricted Boltzmann machine architecture for encoding and decoding layers.

BERT

BERTSang Hyun Jeon This document discusses NLP's "Imagenet Moment" with the emergence of transfer learning approaches like BERT, ELMo, and GPT. It explains that these models were pretrained on large datasets and can now be downloaded and fine-tuned for specific tasks, similar to how pretrained ImageNet models revolutionized computer vision. The document also provides an overview of BERT, including its bidirectional Transformer architecture, pretraining tasks, and performance on tasks like GLUE and SQuAD.

Reference Scope Identification in Citing Sentences

Reference Scope Identification in Citing SentencesAkihiro Kameda This document describes an approach to identify the scope of references in sentences containing multiple citations. The approach uses a conditional random field (CRF) sequence model with features including distance, position, part-of-speech tags, and syntactic dependencies. It first segments sentences using punctuation and conjunctions, then applies the CRF and a majority voting scheme to label each word as inside or outside the scope. The method achieved over 90% accuracy on a dataset of citations from ACL papers.

Deep Learning勉強会@小町研 "Learning Character-level Representations for Part-of-Sp...

Deep Learning勉強会@小町研 "Learning Character-level Representations for Part-of-Sp...Yuki Tomo 12/22 Deep Learning勉強会@小町研 にて

"Learning Character-level Representations for Part-of-Speech Tagging" C ́ıcero Nogueira dos Santos, Bianca Zadrozny

を紹介しました。

Hyoung-Gyu Lee - 2015 - NAVER Machine Translation System for WAT 2015

Hyoung-Gyu Lee - 2015 - NAVER Machine Translation System for WAT 2015Association for Computational Linguistics This document describes NAVER's machine translation systems for the WAT 2015 evaluation. For English-to-Japanese translation, the best system combined tree-to-string syntax-based machine translation with neural machine translation re-ranking, achieving a BLEU score of 34.60. For Korean-to-Japanese translation, the top system used phrase-based machine translation and neural machine translation re-ranking, obtaining a BLEU score of 71.38. The document also analyzes the effectiveness of character-level tokenization and other techniques for neural machine translation.

Machine Translation Introduction

Machine Translation Introductionnlab_utokyo Neural machine translation has surpassed statistical machine translation as the leading approach. It uses an encoder-decoder model with attention to learn translation representations from large parallel corpora. Recent developments include incorporating monolingual data through language models, improving attention mechanisms, and minimizing evaluation metrics like BLEU during training rather than just cross-entropy. Open problems remain around handling rare words, semantic meaning, and context. Future work may focus on multilingual models, low-resource translation, and generating text for other modalities like images.

Plug play language_models

Plug play language_modelsMohammad Moslem Uddin This document summarizes a conference paper published at ICLR 2020 that proposes a method called Plug and Play Language Models (PPLM) for controlled text generation using pretrained language models. PPLM allows controlling attributes of generated text like topic or sentiment without retraining the language model by combining it with simple attribute classifiers that guide the text generation process. The paper presents PPLM as a simple alternative to retraining language models that is more efficient and practical for controlled text generation.

[Paper Reading] Supervised Learning of Universal Sentence Representations fro...![[Paper Reading] Supervised Learning of Universal Sentence Representations fro...](https://cdn.slidesharecdn.com/ss_thumbnails/paperreadingmt-supervisedlearningofuniversalsentencerepresentationsfromnaturallanguageinferencedata-171216153412-thumbnail.jpg?width=560&fit=bounds)

By Hiroki Shimanaka. This document summarizes the paper "Supervised Learning of Universal Sentence Representations from Natural Language Inference Data". The researchers trained sentence embeddings on supervised data from the Stanford Natural Language Inference dataset. They tested several sentence encoder architectures and found that a BiLSTM network with max pooling produced the best universal sentence representations, outperforming prior unsupervised methods on 12 transfer tasks. The representations learned from natural language inference data consistently achieved state-of-the-art performance across multiple downstream tasks.

Polymorphism

By SherabGyatso. This presentation discusses pointers, virtual functions, and polymorphism in C++. It defines pointers as variables that hold the addresses of other variables and shows, with examples, how pointers can point to objects. Virtual functions allow a base-class pointer to refer to objects of derived classes, with the function to call determined at runtime from the object's actual type. Polymorphism means one name can take multiple forms; it is a key feature of object-oriented programming that lets functions work with objects of different types through virtual functions.

7. Trevor Cohn (usfd) Statistical Machine Translation

By RIILP. This document discusses decoding in statistical machine translation. It begins with an overview of decoding objectives and challenges, such as ambiguity among possible translations. It then describes decoding phrase-based models with a linear model and dynamic programming, using approximations such as beam search. Grammar-based decoding is also covered, including synchronous context-free grammar parsing and translation. Key challenges such as search complexity and language model integration are addressed.

Transformers to Learn Hierarchical Contexts in Multiparty Dialogue

By Jinho Choi. The document presents an approach that uses transformers to learn hierarchical contexts in multiparty dialogue. It proposes new pre-training tasks that improve token-level and utterance-level embeddings of dialogue contexts. A multi-task learning approach then fine-tunes the language model for question answering on the Friends transcripts (FriendsQA) using dialogue evidence, outperforming BERT and RoBERTa. However, the approach shows no improvement on other character-mining tasks from Friends. Future work is needed to better represent speakers and inferences in dialogue.

LEPOR: an augmented machine translation evaluation metric - Thesis PPT

By Lifeng (Aaron) Han. The document provides an overview of machine translation evaluation (MTE). It discusses existing MTE methods such as BLEU, METEOR, and WER, and their weaknesses. The author's thesis proposes a new metric, LEPOR, that incorporates additional factors to address these weaknesses: an enhanced length penalty, an n-gram position difference penalty, and tunable parameters that handle performance differences across languages. The thesis will evaluate LEPOR on various language pairs and shared tasks.

BERT - Part 2 Learning Notes

By Senthil Kumar M. This Part 2 presentation gives a more in-depth view of BERT (Bidirectional Encoder Representations from Transformers). The source links offer more depth than the brief overview in the slides.

Hyoung-Gyu Lee - 2015 - NAVER Machine Translation System for WAT 2015

By Association for Computational Linguistics.

Similar to Tiancheng Zhao - 2017 - Learning Discourse-level Diversity for Neural Dialog Models Using Conditional Variational Autoencoders (20)

[Paper Reading] Unsupervised Learning of Sentence Embeddings using Compositi...

By Hiroki Shimanaka.

(1) The document presents Sent2Vec, an unsupervised method for learning sentence embeddings from compositional n-gram features.

(2) Sent2Vec extends the continuous bag-of-words (CBOW) model to train sentence embeddings by composing word vectors with n-gram embeddings.

(3) Experimental results show that Sent2Vec outperforms other unsupervised models on most benchmark tasks, highlighting the robustness of the sentence embeddings it produces.

Word_Embedding.pptx

By NameetDaga1. The document discusses word embedding techniques that represent words as vectors. It describes Word2Vec, a popular word embedding model that uses either the Continuous Bag of Words (CBOW) or the Skip-gram architecture. CBOW predicts a target word from its surrounding context words, while Skip-gram predicts the surrounding words from a target word. These models represent words as dense vectors that encode semantic and syntactic properties, which enables operations such as answering word analogy questions.
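The practical difference between the two architectures starts with how training examples are cut from a sentence. The minimal sketch below assumes whitespace tokenization and a symmetric context window; the function names and the `window` parameter are illustrative choices, not taken from the slides. It only builds the (input, prediction target) examples and stops before the neural network that would actually learn the vectors.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: one (target, context_word) pair per neighbor inside the window."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW: one (context_words, target) example per position in the sentence."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1)[:3])  # [('the', 'cat'), ('cat', 'the'), ('cat', 'sat')]
print(cbow_examples(tokens, window=1)[1])    # (['the', 'sat'], 'cat')
```

Skip-gram yields one example per (target, neighbor) combination, whereas CBOW yields a single example per position whose input is the bag of surrounding words.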

Neural machine translation of rare words with subword units

By Tae Hwan Jung. This paper proposes using subword units generated by byte-pair encoding (BPE) to address the open-vocabulary problem in neural machine translation. It finds that BPE segmentation outperforms a back-off dictionary baseline on two translation tasks, improving BLEU by up to 1.1 and CHRF by up to 1.3. Learning a joint BPE encoding over the source and target languages makes segmentation more consistent than language-specific encodings, further improving the translation of rare and unseen words.
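Learning the BPE merge operations fits in a few lines. This is a minimal sketch in the spirit of the small Python example given in the BPE paper, not the reference `subword-nmt` tool; the toy vocabulary (words split into characters, with `</w>` marking a word ending) is an illustrative assumption. It only learns the ordered merge list; segmenting a new word means replaying those merges in the same order.

```python
import collections
import re


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = collections.defaultdict(int)
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[a, b] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every standalone occurrence of the pair with the merged symbol."""
    bigram = re.escape(" ".join(pair))
    pattern = re.compile(r"(?<!\S)" + bigram + r"(?!\S)")
    return {pattern.sub("".join(pair), word): freq for word, freq in vocab.items()}


def learn_bpe(vocab, num_merges):
    """Greedily merge the most frequent pair num_merges times; return the merge list."""
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair encountered
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges


# Toy corpus: each key is a word split into symbols, each value its frequency.
vocab = {"l o w </w>": 5, "l o w e r </w>": 2,
         "n e w e s t </w>": 6, "w i d e s t </w>": 3}
print(learn_bpe(vocab, 4))
# [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o')]
```

Because merges are learned from frequency alone, frequent words end up as single symbols while rare words stay split into smaller, reusable units.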

What is word2vec?

By Traian Rebedea. A general presentation of the word2vec model, including explanations of how it is trained and a reference to the implicit matrix factorization the model performs.

Nlp research presentation

By Surya Sg. This document provides an overview of natural language processing (NLP) research trends presented at ACL 2020, including a shift away from large labeled datasets toward unsupervised and data augmentation techniques. It discusses the resurgence of retrieval models combined with language models, the focus on explainable NLP models, and reflections on the field's current achievements and limitations. Key papers on BERT and XLNet are summarized, outlining their main ideas and how they advanced the state of the art on various NLP tasks.

Poster Tweet-Norm 2013

By pruiz_. The document summarizes the architecture and results of Vicomtech-ik4's system for lexical normalization of Spanish tweets. The system achieved an accuracy of 65.15% on the test set and 65.42% on the development set, improvements of 5.09% and 4.42%, respectively, over the task baseline. Key components included domain-adapted edit distances, a language model to rank candidate normalizations, and rule-based and dictionary resources to generate candidates; the largest source of errors was gaps in the domain dictionaries.

End-to-end sequence labeling via bi-directional LSTM-CNNs-CRF

By Jayavardhan Reddy Peddamail. This document summarizes a tutorial for building a state-of-the-art named entity recognition (NER) framework with deep learning. The tutorial uses the bi-directional LSTM-CNN architecture with a CRF layer presented in a 2016 paper and replicates the paper's results on the CoNLL 2003 NER dataset, achieving an F1 score of 91.21. It covers data preparation, word embeddings with GloVe vectors, a CNN encoder for character-level representations, a bi-LSTM for word-level encoding, and a CRF layer for output decoding and sequence tagging. Presenting the tutorial to friends highlighted the need for detailed comments explaining each step and the PyTorch functions used.

Open vocabulary problem

By JaeHo Jang. DELAB sequence generation seminar.

Title: Open vocabulary problem

Table of contents

1. Open vocabulary problem
   1-1. Open vocabulary problem
   1-2. Ignore rare words
   1-3. Approximative Softmax
   1-4. Back-off Models
   1-5. Character-level model
2. Solution 1: Byte Pair Encoding (BPE)
3. Solution 2: WordPiece Model (WPM)

Tomáš Mikolov - Distributed Representations for NLP

By Machine Learning Prague. The document discusses word embedding techniques, specifically Word2vec. It introduces the motivation for distributed word representations and describes the Skip-gram and CBOW architectures. Word2vec produces word vectors that encode linguistic regularities; simple examples show that word pairs with similar relationships have similar vector offsets. Evaluation shows that Word2vec outperforms previous methods, and its word vectors are now widely used in NLP applications.

Word_Embeddings.pptx

By GowrySailaja. The document discusses word embeddings, which are vector representations of words learned from large text corpora. It describes two popular methods for learning them: continuous bag-of-words (CBOW) and skip-gram. CBOW predicts a word from its surrounding context words, while skip-gram predicts the surrounding words from the target word. The document also covers techniques that improve training on large datasets, such as subsampling frequent words and negative sampling. Finally, it outlines several applications of word embeddings, such as multi-task learning across languages and embedding images together with text.

Transformer Seq2Sqe Models: Concepts, Trends & Limitations (DLI)

By Deep Learning Italia. This document provides an overview of transformer seq2seq models, including their concepts, trends, and limitations. It discusses how transformers have replaced RNNs for seq2seq tasks because they are more parallelizable and more effective at modeling long-term dependencies. Popular seq2seq models such as T5, BART, and Pegasus are introduced. The document reviews common pretraining objectives for seq2seq models and current trends toward larger models, task-specific pretraining, and long-range modeling techniques. Limitations discussed include the need for grounded representations and for efficient generation.

Engineering Intelligent NLP Applications Using Deep Learning – Part 2

By Saurabh Kaushik. This document discusses how deep learning techniques can be applied to natural language processing tasks. It begins by explaining limitations of traditional rule-based and machine learning approaches to NLP, such as the lack of semantic understanding and the difficulty of feature engineering. Deep learning approaches can learn features automatically from large amounts of unlabeled text and better capture semantic and syntactic relationships between words. Recurrent neural networks suit NLP because they model sequential data such as text, and convolutional neural networks can learn hierarchical patterns in text.

[KDD 2018 tutorial] End to-end goal-oriented question answering systems

By Qi He. End-to-end goal-oriented question answering systems.

Version 2.0: an updated version of the old deck (https://www.slideshare.net/QiHe2/kdd-2018-tutorial-end-toend-goaloriented-question-answering-systems), with references added.

08/22/2018: The old version was deleted to reduce confusion.

[AAAI 2019 tutorial] End-to-end goal-oriented question answering systems

By Qi He. End-to-end goal-oriented question answering systems:

- End-to-End Workflow

- Basic version: Single-Turn Question Answering

- Advanced version: Multi-Turn Question Answering

- LinkedIn real scenarios: LinkedIn Help Center, Analytics Bot

Ara--CANINE: Character-Based Pre-Trained Language Model for Arabic Language U...

By IJCI JOURNAL. Recent advances in natural language processing have markedly improved machines' ability to understand human language. However, as language models progress, they require continuous architectural improvements and new approaches to text processing. One significant challenge stems from the rich diversity of languages, each with its own grammar, which lowers the accuracy of language models for specific languages, especially low-resource ones. This limitation is exacerbated by existing NLP models' reliance on rigid tokenization methods, which makes them susceptible to previously unseen or infrequent words. Additionally, models based on word and subword tokenization are vulnerable to minor typographical errors, whether they occur naturally or result from adversarial misspellings. To address these challenges, this paper uses a recently proposed tokenization-free method, CANINE, to improve natural language understanding. Specifically, we employ this method to develop a tokenization-free Arabic language model. We evaluate our model's performance on eight tasks from the Arabic Language Understanding Evaluation (ALUE) benchmark. Furthermore, we compare our tokenization-free model against existing Arabic language models that rely on subword tokenization. By making our pre-training and fine-tuning models available to the Arabic NLP community, we aim to facilitate the replication of our experiments and to advance Arabic language processing. To further support reproducibility and open-source collaboration, the complete source code and model checkpoints will be made publicly available on Hugging Face.
In conclusion, our results demonstrate that the tokenization-free approach performs comparably to established Arabic language models that use subword tokenization, and in certain tasks our model surpasses some of these existing models. This evidence underscores the efficacy of tokenization-free processing for Arabic, particularly in specific linguistic contexts.

Enriching Word Vectors with Subword Information

By Seonghyun Kim.

1) The document proposes a word vector model that represents each word as the sum of its character n-gram vectors, to better capture morphological information.

2) It tests this model on nine languages and shows it outperforms previous models on word similarity and analogy tasks.

3) Representing words as combinations of character n-grams allows the model to learn representations for out-of-vocabulary words.
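The core idea can be sketched as follows, assuming fastText-style boundary markers `<` and `>` and n-gram lengths from 3 to 6 (the range we understand the paper to use). The `ngram_vector` table is a hypothetical stand-in that returns a fixed random vector per n-gram; in the actual model these vectors are learned, and no training is shown here.

```python
import random

DIM = 4  # illustrative embedding size; real models use much larger vectors
_ngram_table = {}  # hypothetical n-gram -> vector lookup


def char_ngrams(word, n_min=3, n_max=6):
    """Character n-grams of '<word>', plus the bracketed word itself as its own unit."""
    wrapped = f"<{word}>"
    grams = {wrapped}
    for n in range(n_min, n_max + 1):
        for i in range(len(wrapped) - n + 1):
            grams.add(wrapped[i:i + n])
    return grams


def ngram_vector(gram):
    """Stand-in for a learned n-gram embedding: a fixed random vector per n-gram."""
    if gram not in _ngram_table:
        rng = random.Random(gram)  # seeded by the n-gram string, so it is repeatable
        _ngram_table[gram] = [rng.uniform(-1.0, 1.0) for _ in range(DIM)]
    return _ngram_table[gram]


def word_vector(word):
    """Word vector = sum of its n-gram vectors, so unseen words still get a vector."""
    total = [0.0] * DIM
    for gram in sorted(char_ngrams(word)):  # fixed order keeps float sums reproducible
        total = [t + v for t, v in zip(total, ngram_vector(gram))]
    return total


print(sorted(char_ngrams("where", 3, 3)))
# ['<wh', '<where>', 'ere', 'her', 're>', 'whe']
print(len(word_vector("unseenword")))  # 4
```

The boundary markers keep prefixes and suffixes distinct from word-internal n-grams; for example, the trigram `her` from "where" is a different unit from the whole word `<her>`.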

Workshop on Word Embeddings in Deep Learning (Word embeddings workshop)

By iwan_rg. This document introduces word embeddings in deep learning. It defines word embeddings as vectors of real numbers that represent words, where similar words have similar vector representations. Word embeddings are needed because they let words be treated as numeric inputs to machine learning algorithms. The document outlines different types of word embeddings, including frequency-based methods such as count vectors and co-occurrence matrices, and prediction-based methods such as the CBOW and skip-gram models from Word2Vec. It also discusses tools for generating word embeddings, such as Word2Vec, GloVe, and fastText. Finally, it provides a tutorial on implementing Word2Vec in Python with Gensim.

Generating sentences from a continuous space

By Shuhei Iitsuka.

1) The document summarizes a research paper that proposed using a variational autoencoder (VAE) to generate natural language sentences from a continuous latent space.

2) It showed the VAE model could outperform an RNN language model baseline on a missing word imputation task, suggesting the VAE better captures global sentence characteristics.

3) Analysis found the VAE learns topics and lengths of sentences, and can generate grammatical sentences when interpolating in the latent space, showing promise for text generation.
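The VAE objective behind this work combines a reconstruction term with a KL term that pulls the approximate posterior toward a standard normal prior. The sketch below shows only those two ingredients for a diagonal Gaussian, using the reparameterization trick and the closed-form KL; the `kl_weight` parameter is an assumption meant to illustrate the KL cost annealing that, as we understand it, the paper uses so that the RNN decoder does not learn to ignore the latent variable. The reconstruction negative log-likelihood is passed in as a plain number because no decoder is modeled here.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(logvar / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) )."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(reconstruction_nll, mu, logvar, kl_weight=1.0):
    """Negative ELBO; a kl_weight below 1 mimics an annealing schedule early in training."""
    return reconstruction_nll + kl_weight * kl_to_standard_normal(mu, logvar)


rng = random.Random(0)
z = reparameterize([0.5, -1.0], [0.0, -2.0], rng)
print(len(z))                                   # 2
print(kl_to_standard_normal([1.0], [0.0]))      # 0.5
print(vae_loss(2.0, [1.0], [0.0], kl_weight=0.5))  # 2.25
```

When the KL term reaches zero, the posterior matches the prior and carries no information about the sentence; that is the failure mode annealing is meant to delay.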

IRJET- Survey on Deep Learning Approaches for Phrase Structure Identification...

By IRJET Journal. This document discusses deep learning approaches for identifying phrase structures in sentences. It begins with an introduction to natural language processing and phrase structure grammar, then describes traditional n-gram and rule-based approaches to phrase structure identification. It summarizes recent deep learning methods for natural language tasks that have been applied to phrase structure identification, including word embeddings, convolutional neural networks, recurrent neural networks, and recursive neural networks. The document concludes that deep learning requires less manual feature engineering and has performed well on many NLP tasks, but still has room for improvement, especially on tasks involving unlabeled data.

How to expand your nlp solution to new languages using transfer learning

By Lena Shakurova. Expanding NLP models to new languages typically involves annotating new datasets, which is expensive in time and resources. To reduce costs, one can use cross-lingual embeddings, which enable knowledge transfer from languages with sufficient training data to low-resource languages. In this talk, you will hear about the challenges of learning cross-lingual embeddings for multilingual resume parsing.

More from Association for Computational Linguistics (20)

Muis - 2016 - Weak Semi-Markov CRFs for NP Chunking in Informal Text

By Association for Computational Linguistics. This paper contributes a noun phrase-annotated SMS corpus and proposes a weak semi-Markov CRF model for noun phrase chunking in informal text. The weak semi-CRF model improves training speed over linear-CRF and semi-CRF models while maintaining similar accuracy. Experiments on the SMS corpus show that the weak semi-CRF achieves F1 scores comparable to the other models but trains faster, especially with larger training sets.

Castro - 2018 - A High Coverage Method for Automatic False Friends Detection ...

By Association for Computational Linguistics. This document presents a new method for automatically detecting false friends between Spanish and Portuguese using word embeddings. The method builds a word vector space for each language with word2vec, finds a linear transformation between the spaces, and uses vector distances to classify word pairs as cognates or false friends. In experiments on a dataset of 710 word pairs, the method achieved a state-of-the-art accuracy of 77.28% and a high coverage of 97.91%, outperforming previous work. Future work will explore different word embeddings and finer-grained classification of partial false friends.

Castro - 2018 - A Crowd-Annotated Spanish Corpus for Humour Analysis

By Association for Computational Linguistics. This document describes a Spanish-language corpus for humor analysis created through crowdsourced annotation. Over 27,000 tweets were collected from humorous accounts and annotated through a web interface, yielding over 100,000 annotations of the tweets' humor and funniness. Inter-annotator agreement was higher for this corpus than for a previous Spanish humor corpus. The dataset will help analyze subjectivity in humor and was used in a shared task on humor classification and funniness prediction.

Muthu Kumar Chandrasekaran - 2018 - Countering Position Bias in Instructor In...

By Association for Computational Linguistics. This document discusses position bias in instructor interventions in MOOC discussion forums. It finds that instructors are more likely to intervene in threads that appear higher in the forum interface because of their recent activity. To address this, it proposes a debiased classifier that weights examples by their propensity for intervention, and finds that this approach identifies intervention opportunities that were overlooked because of position bias. The debiased classifier outperforms a standard classifier on several metrics, demonstrating that it better predicts unbiased intervention needs.

Daniel Gildea - 2018 - The ACL Anthology: Current State and Future Directions

By Association for Computational Linguistics. The document summarizes the history and current state of the ACL Anthology, a repository of publications from ACL-sponsored conferences. It describes how the Anthology was established in 2001 and is now maintained by volunteers, and notes that it contains over 45,000 papers. The presentation calls for community involvement to future-proof the Anthology through efforts such as migrating its infrastructure and improving documentation. It also proposes hosting the Anthology on the main ACL website and recruiting a new editor.

Elior Sulem - 2018 - Semantic Structural Evaluation for Text Simplification

By Association for Computational Linguistics. The document presents SAMSA, a new automatic evaluation measure for structural text simplification. SAMSA uses semantic parsing to measure how well semantic structures and relations are preserved between an original text and its simplified version. It correlates significantly better with human judgments of meaning preservation and structural simplicity than prior reference-based metrics. SAMSA is the first evaluation method designed specifically for structural simplification operations such as sentence splitting.

Daniel Gildea - 2018 - The ACL Anthology: Current State and Future Directions

By Association for Computational Linguistics. NLP OSS 2018 - W18-2504

Wenqiang Lei - 2018 - Sequicity: Simplifying Task-oriented Dialogue Systems w...

By Association for Computational Linguistics.

(1) Sequicity is a framework that simplifies task-oriented dialogue systems using single sequence-to-sequence architectures.

(2) It formalizes dialogues as sequences of belief spans and responses and decodes them in two stages: generating a belief span followed by a response.

(3) An experiment on two datasets found that a two-stage CopyNet instantiation of Sequicity outperformed several baselines in effectiveness, efficiency and handling out-of-vocabulary requests.

Matthew Marge - 2017 - Exploring Variation of Natural Human Commands to a Rob...

By Association for Computational Linguistics. The document summarizes a study of how people's strategies for giving commands to a robot change over time during a collaborative navigation task. Ten participants each directed a robot for one hour via dialogue. Initially, participants mostly used metric units such as distances in their commands, but over time their commands increasingly referred to environmental landmarks. The study collected audio, text, and robot data to analyze the parameters in commands. Future work aims to automate dialogue response generation based on this data.

Venkatesh Duppada - 2017 - SeerNet at EmoInt-2017: Tweet Emotion Intensity Es...

By Association for Computational Linguistics. The document describes a system for estimating emotion intensity in tweets. It combines lexicon-based and word vector-based approaches to create sentence embeddings for tweets. Various regression models are trained, and an ensemble predicts emotion intensity scores between 0 and 1 for anger, sadness, joy, and fear. The system placed third in predicting emotion intensity and second for intensities above 0.5. Future work involves using contextual sentence embeddings to improve predictions.

Satoshi Sonoh - 2015 - Toshiba MT System Description for the WAT2015 Workshop

By Association for Computational Linguistics. This document describes Toshiba's machine translation system submitted to the WAT2015 workshop. It uses statistical post-editing (SPE) to improve rule-based machine translation (RBMT) output, and combines the SPE and SMT systems by reranking with recurrent neural network language models. Experimental results show that the combined system achieved the best BLEU and RIBES scores compared with the individual SPE and SMT systems on several language pairs, including Japanese-English and Chinese-Japanese. However, the correlation with human evaluation was not entirely clear.

Chenchen Ding - 2015 - NICT at WAT 2015

By Association for Computational Linguistics. WAT - W15-5004 (Poster)

John Richardson - 2015 - KyotoEBMT System Description for the 2nd Workshop on...

By Association for Computational Linguistics. The document describes improvements made to the KyotoEBMT machine translation system. It uses forest parsing of input sentences to handle parsing errors and syntactic divergences, and uses the Nile alignment tool together with constituent parsing to improve word alignments from the training corpus. New features were added, and reranking was improved by incorporating a bilingual language model based on neural machine translation.

John Richardson - 2015 - KyotoEBMT System Description for the 2nd Workshop on...

By Association for Computational Linguistics. The document describes the KyotoEBMT example-based translation system. The system uses dependency analysis of both the source and target languages and can handle ambiguities in translation hypotheses by using lattice rules. The official WAT2015 results show improvements in the BLEU and RIBES metrics with translation reranking, although reranking worsens the human evaluation for the Japanese-to-Chinese translation direction. The Ky

Zhongyuan Zhu - 2015 - Evaluating Neural Machine Translation in English-Japan...

By Association for Computational Linguistics. This document evaluates several neural machine translation models for English-to-Japanese translation. It finds that simple neural models outperform statistical machine translation baselines, and that soft-attention models with LSTM units perform best. However, training these models on pre-reordered data hurt performance. The neural models tended to produce grammatically correct but incomplete translations that omitted information. Replacing unknown words helped some models, but more sophisticated solutions are needed for models trained on data in natural word order.

Zhongyuan Zhu - 2015 - Evaluating Neural Machine Translation in English-Japan...

By Association for Computational Linguistics. This document evaluates various neural machine translation models for English-to-Japanese translation. It compares different network architectures, recurrent units, and training data configurations. Results show that soft-attention models outperformed multi-layer encoder-decoder models, and that training on pre-reordered data hurt performance. Neural machine translation models tended to generate grammatically correct but incomplete translations.

Satoshi Sonoh - 2015 - Toshiba MT System Description for the WAT2015 Workshop

Association for Computational Linguistics

Toshiba presented its machine translation system for the WAT2015 workshop. The system uses statistical post-editing (SPE) to correct the output of rule-based machine translation (RBMT). It also combines the SPE and phrase-based statistical machine translation (SMT) results by reranking the merged n-best lists with a recurrent neural network language model. In the evaluation, the combined system achieved the best results on most language pairs compared with SPE and SMT individually. An analysis of which outputs the combination selected showed that it chose SPE translations most of the time.

Chenchen Ding - 2015 - NICT at WAT 2015

Association for Computational Linguistics

The document summarizes research conducted by NICT for the WAT 2015 workshop. The authors tested simple translation techniques: reverse pre-reordering for Japanese-to-English and character-based translation for Korean-to-Japanese. The techniques proved effective, and the authors encourage their wider use if the workshop's human evaluation confirms these results.

Graham Neubig - 2015 - Neural Reranking Improves Subjective Quality of Machin...

Association for Computational Linguistics

Neural reranking of machine translation output improves both automatic metrics and subjective human evaluations of translation quality. The document analyzes the results of reranking the output of a statistical machine translation system with an attentional neural machine translation model. Reranking corrected errors related to reordering, insertion, deletion, substitution, and conjugation; specifically, it improved phrasal reordering, auxiliary-verb insertion and deletion, and coordinate structures. The gains were mainly grammatical rather than in lexical selection. While reranking proves effective, open questions remain about how it compares with pure neural machine translation and with neural language models.

Graham Neubig - 2015 - Neural Reranking Improves Subjective Quality of Machin...

Association for Computational Linguistics

This document discusses using neural reranking to improve the subjective quality of machine translation. It finds that reranking the N-best lists of a baseline machine translation system with neural models improves both automatic metrics such as BLEU and manual evaluations of translation quality. A qualitative analysis shows that reranking helps most with reordering, insertion, and conjugation errors and less with terminology. The analysis suggests that neural reranking is an effective way to improve machine translation.

Muis - 2016 - Weak Semi-Markov CRFs for NP Chunking in Informal Text

Association for Computational Linguistics

Castro - 2018 - A High Coverage Method for Automatic False Friends Detection ...

Association for Computational Linguistics

Castro - 2018 - A Crowd-Annotated Spanish Corpus for Humour Analysis

Association for Computational Linguistics

Muthu Kumar Chandrasekaran - 2018 - Countering Position Bias in Instructor In...

Association for Computational Linguistics

Daniel Gildea - 2018 - The ACL Anthology: Current State and Future Directions

Association for Computational Linguistics

Elior Sulem - 2018 - Semantic Structural Evaluation for Text Simplification

Association for Computational Linguistics

Wenqiang Lei - 2018 - Sequicity: Simplifying Task-oriented Dialogue Systems w...

Association for Computational Linguistics

Matthew Marge - 2017 - Exploring Variation of Natural Human Commands to a Rob...

Association for Computational Linguistics

Venkatesh Duppada - 2017 - SeerNet at EmoInt-2017: Tweet Emotion Intensity Es...

Association for Computational Linguistics

